GeForce GTX 570 Review: Hitting $349 With Nvidia's GF110

A month ago, Nvidia launched its GeForce GTX 580, and it was everything we wanted back in March. Now the company is introducing the GeForce GTX 570, also based on its GF110. Is it fast enough to make us forget the GF100-based 400-series ever existed?

Tessellation: Unigine Gives Us Synthetic Numbers

For the past nine months, we’ve been running Unigine’s Heaven benchmark as a measure of tessellation performance. I’ve never really been happy with that. After all, Heaven is an engine demo, and it’s a little disingenuous to make judgment calls based on what largely boils down to a synthetic measurement.

Fortunately, HAWX 2 emerged right after the GeForce GTX 580 launch. The game is heavy on tessellation, so Nvidia was naturally all about it. But I made a conscious decision not to roll the HAWX 2 benchmark into testing prior to launch, as AMD suggested that it might be improving its performance picture through a patch or driver that’d dial down the game’s use of tessellation. Neither materialized, though, and now we’re facing a retail title that people are actually playing. Suddenly, its performance becomes much more relevant.

The exciting thing about HAWX 2 is that it’s tessellation for the sake of improving image quality. We’ve already seen tessellation for the sake of improving performance in Civilization V, along with tessellation sans any real gratification in DiRT 2 and Aliens Vs. Predator. So this was a new one for us.

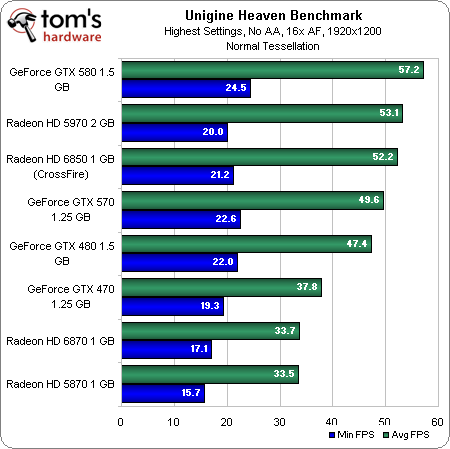

First, let’s create a baseline by benchmarking the latest crop of cards in Unigine’s Heaven test.

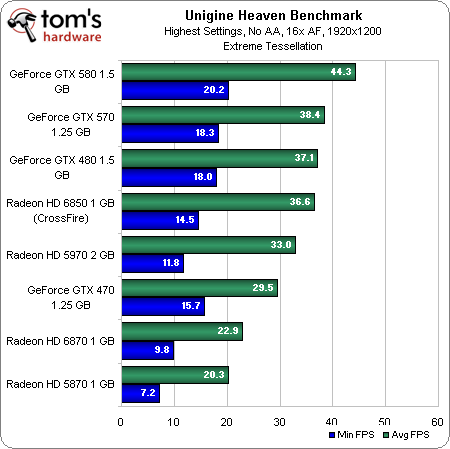

Clearly, Nvidia’s cards handle the Extreme tessellation setting better than AMD’s, but at the Normal tessellation setting, AMD still fares pretty well. The Radeon HD 6850 CrossFire configuration’s showing against the Radeon HD 5970 suggests that AMD’s claims of improved tessellation performance in its Radeon HD 6800 series are fairly well founded.

Also interesting is that the GeForce GTX 570 is faster than the GTX 480 in all three tests. That makes sense: both cards have the same number of PolyMorph engines enabled, but the GTX 570 runs at higher clocks.
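That reasoning can be written as a back-of-the-envelope model. This is a minimal sketch under a loudly stated assumption: that peak geometry throughput scales linearly with the number of enabled PolyMorph engines multiplied by the core clock. The clocks used are the reference core clocks (732 MHz for the GTX 570, 700 MHz for the GTX 480), and the `Card` type and `relative_geometry_rate` helper are hypothetical names for illustration, not anything from Nvidia.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Card:
    name: str
    polymorph_engines: int  # one PolyMorph engine per enabled SM on these Fermi parts
    core_clock_mhz: int     # reference core clock


def relative_geometry_rate(a: Card, b: Card) -> float:
    """Expected geometry throughput of a divided by that of b.

    ASSUMPTION: throughput is proportional to engines * clock. Real results
    also depend on shader throughput, memory bandwidth, and drivers.
    """
    return (a.polymorph_engines * a.core_clock_mhz) / (
        b.polymorph_engines * b.core_clock_mhz
    )


GTX_570 = Card("GeForce GTX 570", polymorph_engines=15, core_clock_mhz=732)
GTX_480 = Card("GeForce GTX 480", polymorph_engines=15, core_clock_mhz=700)

print(f"{relative_geometry_rate(GTX_570, GTX_480):.3f}")  # prints 1.046
```

With equal engine counts, the model reduces to the clock ratio, so it predicts only a modest (roughly 4.6%) geometry advantage for the GTX 570.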

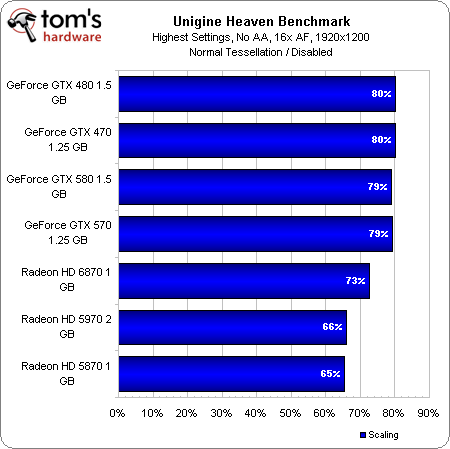

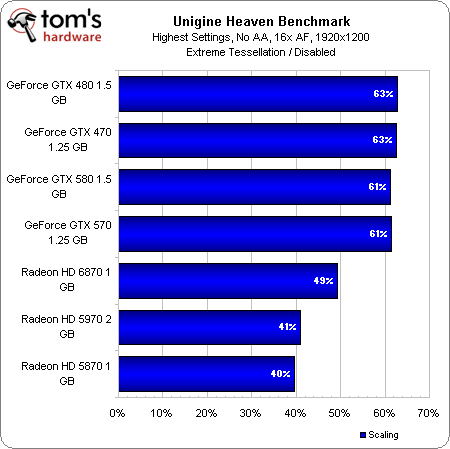

A look at scaling tells the whole tale, though. The AMD cards are brutalized by the use of Extreme tessellation. They’re less affected by Unigine’s Normal setting, but it’s still an overall loss to Nvidia.


How about the fact that, regardless of whether a card has 16, 15, or 14 PolyMorph engines enabled, all of Nvidia’s tested products seem to scale pretty evenly? It certainly appears that something other than geometry processing power is holding our GTX 500- and 400-series cards back here, or at least that the PolyMorph engines aren’t scaling as well as Nvidia might have implied.
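One way to frame this scaling comparison is as the share of a card’s Normal-setting frame rate that survives the switch to Extreme. The sketch below assumes that definition (the charts may compute scaling differently), and `retained_fraction`, `retention_spread`, and `ENGINE_RATIO_16_VS_14` are hypothetical names. Under a purely geometry-bound model at equal clocks, a 16-engine part would have 16/14, or roughly 14% more, geometry throughput than a 14-engine part, so a near-zero spread in retention across cards would point to a bottleneck somewhere other than the PolyMorph engines.

```python
from typing import Mapping


def retained_fraction(normal_fps: float, extreme_fps: float) -> float:
    """Fraction of the Normal-tessellation frame rate kept at the Extreme setting."""
    if normal_fps <= 0:
        raise ValueError("normal_fps must be positive")
    return extreme_fps / normal_fps


def retention_spread(results: Mapping[str, tuple[float, float]]) -> float:
    """Max minus min retained fraction across cards.

    results maps a card name to (normal_fps, extreme_fps).
    A small spread means the cards lose a similar share of performance.
    """
    fractions = [retained_fraction(n, e) for n, e in results.values()]
    return max(fractions) - min(fractions)


# ASSUMPTION: linear, geometry-bound model at equal clocks.
ENGINE_RATIO_16_VS_14 = 16 / 14
```

Feeding each card’s measured (Normal, Extreme) frame rates into `retention_spread` gives a single number to set against that 14% engine-count difference.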



thearm: Grrrr... Every time I see these benchmarks, I'm hoping Nvidia has taken the lead. They'll come back. It's alllll a cycle.

xurwin: At $350, beating the 6850 in xfire? I COULD say this would be a pretty good deal, but why no 6870 in xfire? The margin is narrow, but if you need CUDA, this would be a pretty sweet deal. I'd also wait for the 6900s, but for now, do we have a winner?

sstym (quoting thearm): "Grrrr... Every time I see these benchmarks, I'm hoping Nvidia has taken the lead. They'll come back. It's alllll a cycle."

There is no need to root for either one. What you really want is a healthy and competitive Nvidia to drive prices down. With Intel shutting them out of the chipset market and AMD beating them on their own turf with the 5XXX cards, the future looked grim for Nvidia.

It looks like they've still got it, and that's what counts for consumers. Let's leave fanboyism to 12-year-old console owners.

nevertell: It's disappointing to see the card's freaky power and temperature figures when driving two different displays. I was planning on using a display setup similar to the one in the test; now I'm in doubt.

reggieray: I always wonder why they use the overpriced Ultimate edition of Windows. I understand 64-bit because of memory; that's what I bought, but I purchased the OEM Home Premium edition and saved some cash. For games, Ultimate adds no extra value.

Or am I missing something?

theholylancer: Hmmm, more sexual innuendo today than usual. New GF there, Chris? :D

EDIT:

Love this gem:

Before we shift away from HAWX 2 and onto another bit of laboratory drama, let me just say that Ubisoft’s mechanism for playing this game is perhaps the most invasive I’ve ever seen. If you’re going to require your customers to log in to a service every time they play a game, at least make that service somewhat responsive. Waiting a minute to authenticate over a 24 Mb/s connection is ridiculous, as is waiting another 45 seconds once the game shuts down for a sync. Ubi’s own version of Steam, this is not.

When someone reviewing not your game, but some hardware that happens to use your game, comments on how bad its DRM is, you know it's time to stop doing that. Consider it a warning that people will go get your game elsewhere.