What Does It Take To Turn The PC Into A Hi-Fi Audio Platform?

Most hi-fi audio is stored in digital form. With advancements in lossless compression, bit-perfect ripping/streaming, HD audio formats, multi-terabyte storage, and PC-friendly DACs, has the PC earned a place among high-end audio gear? At what price point?

Test Setup: The Blind Testing Process

Objective or Subjective?

Nowhere in this article do we discuss technical specifications or benchmark individual components. If you want that information, it's available for all three discrete devices. Realtek doesn't provide measurements, only specs, but those are published online too. The point we are making is that the discrete devices should all be completely transparent, and Realtek's codec shouldn't trail far behind, at least on paper.

If that's true, then we shouldn't be able to tell them apart in a sequence of blind listening tests. That's the angle we're setting out to explore, hence our subjective approach.

A Properly-Blind Subjective Methodology

It's easy to be influenced in a listening test by what you expect to hear. If you feel like you can be objective without a blind test, then great. But we know we cannot. So, we went to great lengths to remove expectations, correcting for any factor that could provide unwarranted information.

Typical A/B tests let you hear A, then B, then a random sequence of As and Bs, testing whether you can correctly tell them apart. If you pick correctly often enough to rule out lucky guessing at a 95% confidence level, then it's fairly certain that you can tell them apart. If not, you have no statistical grounds to claim you can. It's really that simple.
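To put numbers on that 95% threshold, here is a minimal sketch, assuming each trial is independent and that a listener who hears no difference is simply guessing (a 50% chance per trial with two choices). It applies a one-sided binomial test at alpha = 0.05; the function names are hypothetical, chosen for illustration.

```python
from math import comb
from typing import Optional


def binomial_tail(n: int, k: int, p: float) -> float:
    """Probability of getting k or more correct answers out of n by pure guessing."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


def min_correct_for_significance(n: int, p: float = 0.5, alpha: float = 0.05) -> Optional[int]:
    """Smallest score whose probability under pure guessing falls below alpha.

    Returns None if even a perfect score would not be significant (too few trials).
    """
    for k in range(n + 1):
        if binomial_tail(n, k, p) < alpha:
            return k
    return None


# Example: a two-way A/B session with 16 trials.
print(min_correct_for_significance(16))  # prints 12
```

With 16 trials, for instance, 12 correct answers are needed before guessing becomes an unlikely explanation; 11 correct still happens by luck about 10.5% of the time.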

We deliberately made things harder: this is essentially a tailored blind A/B/C/D test. We have four devices. We test one track at a time, and we test each track eight times. The only guarantee is that each device is presented twice in the sequence, though in any order (even consecutively). A truly blind test would not guarantee an equal distribution in the sequence, since that creates some form of expectation. But that was a compromise we had to make to generate enough data samples for each device.
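The presentation rule above (four devices, eight runs, each device exactly twice, back-to-back repeats allowed) can be sketched as a shuffle of a fixed multiset. This is a hypothetical helper, not necessarily how the partner drew the order; the article only says the sources were selected randomly.

```python
import random
from typing import List, Optional, Sequence


def balanced_sequence(devices: Sequence[str], repeats: int = 2,
                      rng: Optional[random.Random] = None) -> List[str]:
    """Random presentation order in which every device appears exactly `repeats` times.

    Back-to-back repeats of the same device are allowed, matching the protocol above.
    """
    rng = rng or random.Random()
    order = [device for device in devices for _ in range(repeats)]
    rng.shuffle(order)  # every distinct arrangement of the multiset is equally likely
    return order


# Hypothetical labels standing in for the four devices under test.
print(balanced_sequence(["A", "B", "C", "D"]))
```

Under this rule there are 8!/(2!)^4 = 2,520 distinct orders. The constraint is also where the "expectation" comes from: once a device has appeared twice, it cannot appear again, so the last few runs become partly predictable.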

The tests are conducted with a partner helping us by selecting the sources randomly. During each test, we write down our subjective thoughts. At the end of each run (covering the first few minutes of each track), if we feel comfortable doing so, we guess which device we just heard. After the eight runs, we compare our impressions and guesses to the actual device list, which our partner wrote down separately.
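Because every device appears exactly twice, the eight runs aren't independent, so a plain binomial test is only approximate here. One alternative, sketched below under the assumption that the order was drawn uniformly from all balanced arrangements, is an exact permutation test: count how many possible orders would have matched the listener's guesses at least as well as the real one. Skipped guesses are recorded as None; the function names and session data are hypothetical.

```python
from itertools import permutations
from typing import Optional, Sequence


def score(guesses: Sequence[Optional[str]], actual: Sequence[str]) -> int:
    """Number of runs where a guess was made and it matched the device actually played.

    A guess of None means the listener declined to guess on that run.
    """
    return sum(1 for g, a in zip(guesses, actual) if g is not None and g == a)


def exact_p_value(guesses: Sequence[Optional[str]], actual: Sequence[str]) -> float:
    """Chance of scoring at least as well against a random balanced order.

    Enumerates every distinct arrangement of the devices in `actual`
    (2,520 for four devices shown twice each) and treats them as equally likely.
    """
    observed = score(guesses, actual)
    orders = set(permutations(actual))
    at_least = sum(1 for order in orders if score(guesses, order) >= observed)
    return at_least / len(orders)


# Hypothetical session: the listener guessed on six of the eight runs.
played = ["C", "A", "A", "D", "B", "C", "D", "B"]
guessed = ["C", None, "A", "B", "B", None, "D", "A"]
print(score(guessed, played), round(exact_p_value(guessed, played), 3))
```

Enumerating all 40,320 raw orderings and deduplicating them is cheap at this size; for longer sessions, randomly sampling orders would be the usual substitute.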

As you already know, every device is carefully volume-matched, demonstrating good matching across three representative test tones. Only Realtek's ALC889 codec could not quite get there due to its technical limitations.
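The matching procedure itself is covered on an earlier page, so the following is only a minimal sketch of one way to check it: compare the RMS level of each device's recorded test tones against a reference device. The three frequencies, the synthetic tones standing in for recordings, and the 0.1 dB tolerance are illustrative assumptions, not figures from these tests.

```python
import math
from typing import List, Sequence


def rms_dbfs(samples: Sequence[float]) -> float:
    """RMS level of floating-point samples (full scale = 1.0), in dBFS."""
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return 20 * math.log10(rms)


def level_mismatch_db(reference: Sequence[Sequence[float]],
                      candidate: Sequence[Sequence[float]]) -> List[float]:
    """Per-tone level difference (candidate minus reference), in dB."""
    return [rms_dbfs(c) - rms_dbfs(r) for r, c in zip(reference, candidate)]


def is_matched(reference, candidate, tolerance_db: float = 0.1) -> bool:
    """True if every test tone lands within tolerance_db of the reference device."""
    return all(abs(d) <= tolerance_db for d in level_mismatch_db(reference, candidate))


def tone(freq_hz: float, amplitude: float, rate: int = 48000, n: int = 48000) -> List[float]:
    """Synthetic sine wave standing in for a recorded test tone (hypothetical)."""
    return [amplitude * math.sin(2 * math.pi * freq_hz * i / rate) for i in range(n)]


# Hypothetical tones; the article doesn't say which three were used.
freqs = (100, 1000, 10000)
reference = [tone(f, 0.5) for f in freqs]
candidate = [tone(f, 0.5 * 10 ** (0.05 / 20)) for f in freqs]  # 0.05 dB hotter
print(level_mismatch_db(reference, candidate), is_matched(reference, candidate))
```

Comparing per tone, rather than averaging, means a device that is matched at 1 kHz but rolls off at the frequency extremes still fails the check.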

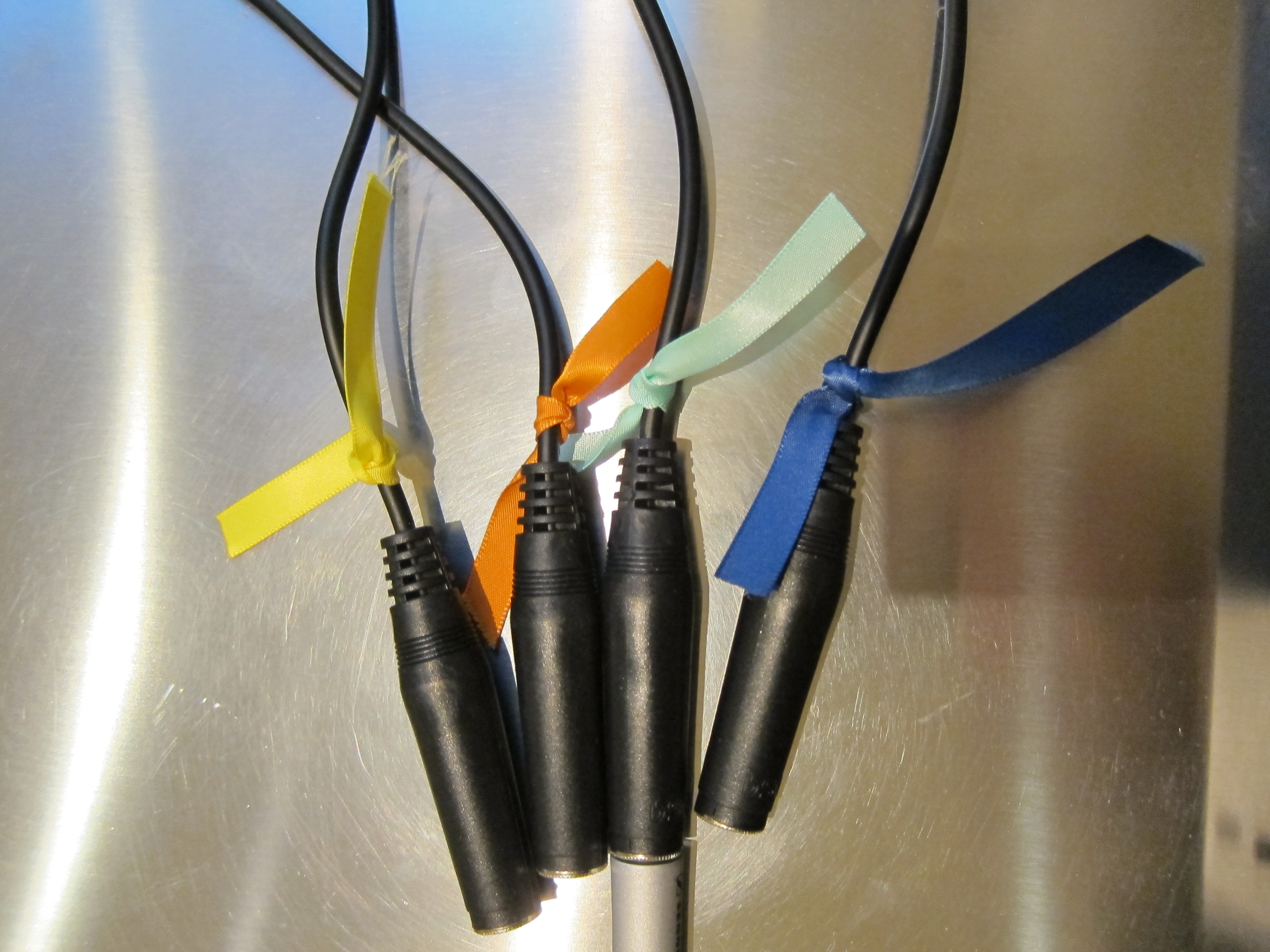

Furthermore, we used identical color-coded extension cables from each device, so the partner didn't need to move from the test bench at all and the connection noise for each device was the same. We went one step further and took off the headphones between runs while the partner switched connections, so we wouldn't hear any distinctive connection-related click or pop.

Due to time constraints, not all listeners tested all content. We also had some issues with volume-matching the Realtek ALC889, and those are called out where they're relevant.

Our precautions worked well; we could not tell the devices apart from each other in any way except their sound.

Challenging the Methodology

A few of the listeners who tried replicating the process above challenged our methodology. That's fair, and it deserves open discussion, so we present those challenges below.

We were questioned on:

- The process of listening to the same track multiple times using the same or a different device (versus switching across devices seamlessly)

- Using four devices (versus doing A/B testing of individual device pairs)

- The applicability/extensibility of these tests from headphones to full-sized speakers

On the first point, we agree that there is some merit to this. Human acoustic memory is short-lived, and individuals are rarely conscious of it. So, trying to "remember" and "compare" how a given track sounds over time (even a few seconds apart) is really, really difficult. With that said, because we were testing on familiar hardware using our favorite tracks, we felt we should have been able to identify differences with at least directional reliability, if we could hear them. But yes, ideally, we would have liked to try seamless switching as well. Unfortunately, we could not find any 1/4" TRS stereo rapid-switching boxes and, even if one existed, foobar2000 won't output to more than a single device at a time (we may be wrong on this one, but we don't believe Windows does either). Running multiple instances of foobar2000 at the same time is possible, though it creates temporal alignment issues, since the separate instances aren't synchronized. The idea is nice; it's just technically problematic.

On the second point, our purpose here wasn't telling pairs of devices apart, but rather gauging whether any one component sounded significantly better or worse than the others. With four devices, pairwise testing would have meant six separate A/B comparisons. Based on what we were trying to achieve, we think our methodology is actually better suited than A/B pairs. This is one challenge we'd like to directly rebut.

Finally, regarding the last point: we agree. These tests, as they were conducted, only apply to headphones. More specifically, they apply to high-impedance headphones. Hopefully, we'll get the opportunity to extend our experimentation to low-impedance headphones in the near future. Full-sized speakers are more challenging for a variety of reasons, and we can't promise that'll happen any time soon.

Wrapping Up

If you've read through the last four dense pages of setup background, then you can appreciate the complexity of arranging proper blind tests. We did our best with the equipment, knowledge, and time we had available to create the best possible experiment, documenting each and every step so that you can judge for yourself how relevant these tests are to you.

The tests aren't perfect, and we don't claim they are. They cannot be generalized beyond the specific cases we tested, and we don't claim they can be. Nevertheless, we hope you'll find them interesting within the scope of their applicability.

We also would have liked to test more devices. If there's enough reader interest, you can bet we'll follow up with a wider range of products.


SuckRaven: Bravo! Awesome, and a very thorough review. Even though, as you mention, audio gear is not usually the forte/emphasis of the reviews here, it's refreshing to have someone at least try to cut through the (more often than not) overpriced arena of bullshit that is the field of "high-end" audio. I applaud the review and the effort. Keep up the good work. More please.

PudgyChicken: Just wondering, why not test a Creative X-Fi Titanium HD or something like that alongside the ASUS Xonar? It would be interesting to see some of the differences between different PCIe sound cards in this matchup. However, I understand that what you were really going for was showing the difference between price point and form factor at the same time, so perhaps not testing two PCIe cards makes sense.

kitsunestarwind: The biggest thing I have found for the PC is that no matter how good your DAC is, if your speakers and amp are crap, it will never sound better. People spend big money on DACs and forget that you need a high-quality amp with very, very low THD (total harmonic distortion) and a very good set of full-range speakers with high sensitivity if you want good sound, instead of crappy (albeit expensive) computer speakers, especially sets with a sub.

maestro0428: Wonderful article! I love listening to music and do so mostly at my PCs. I try to set up systems where audio is important in component selection. Although we all love drooling over expensive equipment, many times it is not all that necessary for an amazing experience. I'd love to see more, including smaller studio speakers, as I believe that speakers/headphones are the most important part of the equation. Keep up the great work!

Someone Somewhere: Agree totally with this. It always annoys me when people say they're spending over $100 on a sound card, especially when it turns out that they're using optical out, and the whole thing is basically moot. I now have a nice source to link to.

1zacster: The thing is, you can't just pick up two sets of good headphones, try them on different DACs/amps, and expect to hear major differences; it takes longer than 5 minutes for your ears to adjust to new headphones and for the differences to actually show. This is like taking food from Left Bank and then bringing in a bunch of hobos and asking them to tell the differences between the foods.

dogman-x: I use an optical cable from my PC to a home theatre receiver. With this setup, stereo CD audio content is sent as raw PCM to the receiver, not compressed into DD or DTS. These days you can buy a very good quality home theatre receiver for less than $200. Audio quality is outstanding.

Memnarchon: I would love to see the ALC1150 in these tests too, since it's widely used on most Z87 mobos.