Video Quality Tested: GeForce Vs. Radeon In HQV 2.0

Test Class 2: Noise And Artifact Reduction

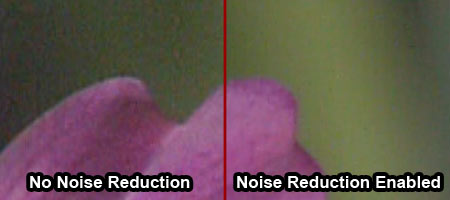

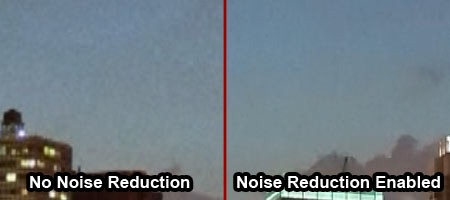

This class is all about improving poor-quality, noisy video. Today’s video processors can remove unwanted noise from video without noticeably blurring or degrading the picture.

Chapter 1: Random Noise Tests

Random noise is the grainy, changing pattern you might notice on poor-quality video. This chapter contains four tests, each built on a different video clip that presents a unique challenge for random noise removal. A perfect score of five is given for each scene if noise is reduced while details remain intact. The score is lowered to two if noise isn’t significantly reduced, and a score of zero is given if noise isn’t reduced at all or if the details have been softened and smeared.
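For readers who want the rubric in a compact form, here is a minimal Python sketch of the five/two/zero scale described above. The `NoiseReduction` labels and the `random_noise_score` function are hypothetical names made up for illustration; the actual judgment is made by eye, not by code.

```python
from enum import Enum


class NoiseReduction(Enum):
    """How much grain the reviewer judges was removed (hypothetical labels)."""
    SIGNIFICANT = "significant"
    SLIGHT = "slight"
    NONE = "none"


def random_noise_score(reduction: NoiseReduction, details_intact: bool) -> int:
    """Map a visual judgment of one scene to the 5 / 2 / 0 scale."""
    # Softened or smeared detail, or no noise reduction at all, scores zero.
    if not details_intact or reduction is NoiseReduction.NONE:
        return 0
    # Noise clearly reduced with detail preserved earns the full five points.
    if reduction is NoiseReduction.SIGNIFICANT:
        return 5
    # Some reduction, but not a significant amount.
    return 2
```

Note that smeared detail overrides everything else: a card that removes all the grain but blurs the picture still scores zero.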

This test is fairly simple to assess, even if there is an element of subjectivity here. All of the graphics cards we’re testing achieve a perfect score, although we note that the Radeon noise reduction appears superior. GeForce noise reduction isn’t quite as strong when it comes to moving objects, no matter what level the de-noise option is set to.
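To illustrate why moving objects are the hard case for noise reduction, here is a rough Python sketch of a generic motion-adaptive temporal filter. This is an assumption for illustration only and not a description of what the AMD or Nvidia drivers actually do. The function `temporal_denoise` and its parameters `motion_threshold` and `previous_weight` are hypothetical, and frames are simplified to flat lists of brightness values.

```python
def temporal_denoise(previous, current, motion_threshold=20, previous_weight=0.5):
    """Blend each pixel with the previous frame unless it changed a lot.

    Small frame-to-frame changes are treated as noise and averaged away.
    Large changes are treated as motion and left alone, so grain on moving
    objects survives. Both thresholds here are illustrative guesses.
    """
    output = []
    for old, new in zip(previous, current):
        if abs(new - old) > motion_threshold:
            # Likely motion: keep the current pixel untouched to avoid ghosting.
            output.append(new)
        else:
            # Likely noise: average with the previous frame.
            blended = previous_weight * old + (1 - previous_weight) * new
            output.append(round(blended))
    return output


if __name__ == "__main__":
    still_and_moving = temporal_denoise([100, 100, 100], [104, 96, 200])
    print(still_and_moving)
```

In this sketch the first two pixels are pulled back toward their previous values, while the third (a large jump, treated as motion) passes through unfiltered. A filter built this way would clean static areas well and leave more grain on anything that moves.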

Random Noise Test Results (out of 5)

| Test Clip | Radeon HD 6850 | Radeon HD 5750 | Radeon HD 5670 | Radeon HD 5550 | Radeon HD 5450 |
|---|---|---|---|---|---|
| Sailboat | 5 | 5 | 5 | 5 | 5 |
| Flower | 5 | 5 | 5 | 5 | 5 |
| Sunrise | 5 | 5 | 5 | 5 | 5 |
| Harbor Night | 5 | 5 | 5 | 5 | 5 |

| Test Clip | GeForce GTX 470 | GeForce GTX 460 | GeForce 9800 GT | GeForce GT 240 | GeForce GT 430 | GeForce 210 |
|---|---|---|---|---|---|---|
| Sailboat | 5 | 5 | 5 | 5 | 5 | 5 |
| Flower | 5 | 5 | 5 | 5 | 5 | 5 |
| Sunrise | 5 | 5 | 5 | 5 | 5 | 5 |
| Harbor Night | 5 | 5 | 5 | 5 | 5 | 5 |

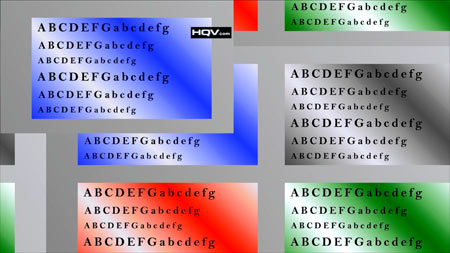

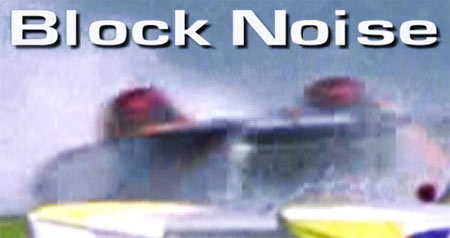

Chapter 2: Compression Artifacts Tests

Compression artifacts are very different from the grainy patterns in random noise. Compression noise usually manifests as blocky edges on objects or even squared-off areas of the video that contain nothing. This chapter has four test videos that suffer from compression artifacts: a moving text pattern, a roller coaster scene, a Ferris wheel scene, and a large bridge scene with a lot of slowly moving traffic.

To achieve a full score of five in each scene, compression artifacts must be significantly reduced, while the details remain crisp. A reduced score of three is given if the artifacts are substantially but “not fully” reduced, and zero is earned if the artifacts are not lessened or the scene is smeared and detail is softened.


We find the compression artifacts test (and the upscaled compression artifacts test coming up) to be the most difficult tests to judge. The first problem is that the guidelines are quite subjective: five points for “significantly reduced” versus three points for “reduced, but not fully.” The other issue is that while certain cards are able to reduce some compression artifacts, the result isn’t dramatic. The changes are subtle at best.

Compression Artifacts Test Results (out of 5)

| Test Clip | Radeon HD 6850 | Radeon HD 5750 | Radeon HD 5670 | Radeon HD 5550 | Radeon HD 5450 |
|---|---|---|---|---|---|
| Scrolling Text | 5 | 5 | 3 | 3 | 3 |
| Roller Coaster | 5 | 5 | 3 | 3 | 3 |
| Ferris Wheel | 5 | 5 | 3 | 3 | 3 |
| Bridge Traffic | 5 | 5 | 3 | 3 | 3 |

| Test Clip | GeForce GTX 470 | GeForce GTX 460 | GeForce 9800 GT | GeForce GT 240 | GeForce GT 430 | GeForce 210 |
|---|---|---|---|---|---|---|
| Scrolling Text | 0 | 0 | 0 | 0 | 0 | 0 |
| Roller Coaster | 0 | 0 | 0 | 0 | 0 | 0 |
| Ferris Wheel | 0 | 0 | 0 | 0 | 0 | 0 |
| Bridge Traffic | 0 | 0 | 0 | 0 | 0 | 0 |

Let’s start with the Radeons. It appears that a relatively high de-noise value helps reduce compression artifacts, something that allows even the low-end Radeons to achieve decent scores here. Surprisingly, the de-blocking option doesn’t seem to make much difference, though. Once we reach the Radeon HD 5750, the GPU is powerful enough to enable mosquito noise reduction, and this does appear to have a positive (but relatively subtle) effect on compression artifacts. We do judge that this effect is enough to earn a score of five, however, and this sets the bar for the consumer graphics cards that we’ve tested.

The GeForce cards don’t have a dedicated option to reduce compression artifacts, such as mosquito noise reduction or de-blocking, and the noise reduction setting in the Nvidia driver doesn’t appear to affect anything but grainy noise. Because we can’t see any difference with noise reduction on or off, we’re forced to give the GeForce cards a zero score in this test.

Chapter 3: Upscaled Compression Artifacts Tests

The tests here are identical to the ones we looked at in Chapter 2, the only difference being that the video is now upscaled from a lower resolution. This results in more dramatic compression artifacts and looks more like something you might see from a low-resolution source—think YouTube video.

The GeForce cards still have a hard time with compression artifacts, and the Radeons struggle as well here. As a result, AMD's cards do not achieve the high scores across the board that they did in the previous test.

Upscaled Compression Artifacts Test Results (out of 5)

| Test Clip | Radeon HD 6850 | Radeon HD 5750 | Radeon HD 5670 | Radeon HD 5550 | Radeon HD 5450 |
|---|---|---|---|---|---|
| Scrolling Text | 3 | 3 | 3 | 3 | 3 |
| Roller Coaster | 5 | 5 | 3 | 3 | 3 |
| Ferris Wheel | 3 | 3 | 3 | 3 | 3 |
| Bridge Traffic | 3 | 3 | 3 | 3 | 3 |

| Test Clip | GeForce GTX 470 | GeForce GTX 460 | GeForce 9800 GT | GeForce GT 240 | GeForce GT 430 | GeForce 210 |
|---|---|---|---|---|---|---|
| Scrolling Text | 0 | 0 | 0 | 0 | 0 | 0 |
| Roller Coaster | 0 | 0 | 0 | 0 | 0 | 0 |
| Ferris Wheel | 0 | 0 | 0 | 0 | 0 | 0 |
| Bridge Traffic | 0 | 0 | 0 | 0 | 0 | 0 |
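To see how the three chapters add up per card, the short Python sketch below sums the scores from the three results tables in this test class. The data is copied from those tables; the structure and the names `SCORES` and `class_totals` are our own illustrative choices, not part of the HQV benchmark.

```python
RADEON = ["Radeon HD 6850", "Radeon HD 5750", "Radeon HD 5670",
          "Radeon HD 5550", "Radeon HD 5450"]
GEFORCE = ["GeForce GTX 470", "GeForce GTX 460", "GeForce 9800 GT",
           "GeForce GT 240", "GeForce GT 430", "GeForce 210"]

# Per chapter: card -> scores for the four clips, in table row order.
SCORES = {
    "Random Noise": {card: [5, 5, 5, 5] for card in RADEON + GEFORCE},
    "Compression Artifacts": {
        "Radeon HD 6850": [5, 5, 5, 5],
        "Radeon HD 5750": [5, 5, 5, 5],
        "Radeon HD 5670": [3, 3, 3, 3],
        "Radeon HD 5550": [3, 3, 3, 3],
        "Radeon HD 5450": [3, 3, 3, 3],
        **{card: [0, 0, 0, 0] for card in GEFORCE},
    },
    "Upscaled Compression Artifacts": {
        "Radeon HD 6850": [3, 5, 3, 3],
        "Radeon HD 5750": [3, 5, 3, 3],
        "Radeon HD 5670": [3, 3, 3, 3],
        "Radeon HD 5550": [3, 3, 3, 3],
        "Radeon HD 5450": [3, 3, 3, 3],
        **{card: [0, 0, 0, 0] for card in GEFORCE},
    },
}


def class_totals(scores):
    """Sum every clip score in every chapter for each card."""
    totals = {}
    for chapter in scores.values():
        for card, clips in chapter.items():
            totals[card] = totals.get(card, 0) + sum(clips)
    return totals


if __name__ == "__main__":
    for card, total in class_totals(SCORES).items():
        print(f"{card}: {total} / 60")
```

Summed this way, the Radeon HD 6850 and HD 5750 reach 54 of a possible 60 points, the three lower-end Radeons reach 44, and every GeForce card lands on 20, all of it from the random noise chapter.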

Don Woligroski was a former senior hardware editor for Tom's Hardware. He has covered a wide range of PC hardware topics, including CPUs, GPUs, system building, and emerging technologies.

-

jimmysmitty I second the test using SB HD graphics. It might be just an IGP, but I would like to see the quality in case I want to make an HTPC, and since SB has amazing encoding/decoding results compared to anything else out there (even $500+ GPUs), it would be nice to see if it can give decent picture quality.

But as for the results, I am not that surprised. Even when their GPUs might not perform the same as nVidia, ATI has always had great image quality enhancements, even before CCC. That's an area of focus that nVidia might not see as important when it is. I want my Blu-Ray and DVDs to look great, not just ok.

-

compton Great article. I had wondered what the testing criteria was about, and Lo! Tom's to the rescue. I have 4 primary devices that I use to watch Netflix's streaming service. Each is radically different in terms of hardware. They all look pretty good. But they all work differently. Using my 47" LG LED TV I did an informal comparison of each.

My desktop, which uses a 460 really suffers from the lack of noise reduction options.

My Samsung BD player looks less spectacular than the others.

My Xbox looks a little better than the BD player.

My PS3 actually looks the best to me, no matter what display I use.

I'm not sure why, but it's the only one I could pick out just based on its image quality. Netflix streaming is basically all I use my PS3 for. Compared to it, my desktop looks good and has several options to tweak but doesn't come close. I don't know how the PS3 stacks up, but I'm thinking about giving the test suite a spin.

Thanks for the awesome article.

-

cleeve Quoting an earlier comment: "Could you give the same evaluation to Sandy Bridge's Intel HD Graphics?"

That's definitely on our to-do list!

Trying to organize that one now.

-

lucuis Too bad this stuff usually makes things look worse. I tried out the full array of settings on my GTX 470 in multiple BD Rips of varying quality, most very good.

Noise reduction did next to nothing. And in many cases causes blockiness.

Dynamic Contrast in many cases does make things look better, but in some it revealed tons of noise in the greyscale which the noise reduction doesn't remove...not even a little.

Color correction seemed to make anything blueish bluer, even purples.

Edge correction seems to sharpen some details, but introduces noise after about 20%.

All in all, bunch of worthless settings.

-

killerclick Quoting jimmysmitty: "Even when their GPUs might not perform the same as nVidia, ATI has always had great image quality enhancements"

ATI/AMD is demolishing nVidia in all price segments on performance and power efficiency... and image quality.

-

alidan Quoting killerclick: "ATI/AMD is demolishing nVidia in all price segments on performance and power efficiency... and image quality."

i thought they were losing, not by enough to call it a loss, but not as good as the latest nvidia refreshes. but i got a 5770 due to its power consumption, i didn't have to swap out my psu to put it in and that was the deciding factor for me.

-

haplo602 this made me lol ...

1. cadence tests ... why do you marginalise the 2:2 cadence ? these cards are not US exclusive. The rest of the world has the same requirements for picture quality.

2. skin tone correction: I see this as an error on the part of the card to even include this. why are you correcting something that the video creator wanted to be as it is ? I mean the movie is checked by video professionals for anything they don't want there. not completely correct skin tones are part of the product by design. this test should not even exist.

3. dynamic contrast: cannot help it, but the example scene with the cats had blown highlights on my laptop LCD in the "correct" part. how can you judge that if the constraint is the display device and not the GPU itself ? after all you can output on a 6-bit LCD or on a 10-bit LCD. the card does not have to know that ...

-

mitch074 "obscure" cadence detection? Oh, of course... Never mind that a few countries do use PAL and its 50Hz cadence on movies, and that it's frustrating to those few people who watch movies outside of the Pacific zone... As in, Europe, Africa, and parts of Asia up to and including mainland China.

It's only worth more than half the world population, after all.

-

cleeve Quoting mitch074: "'obscure' cadence detection? Oh, of course... Nevermind that a few countries do use PAL and its 50Hz cadence on movies..."

You misunderstand the text, I think.

To clear it up: I wasn't talking about 2:2 when I said that, I was talking about the Multi-Cadence Tests: 8 FPS animation, etc.