Intel Core i7-3770K Review: A Small Step Up For Ivy Bridge

Quick Sync: A Secret Weapon, Refined

Back when Intel launched its Sandy Bridge architecture, I identified Quick Sync as the design’s secret weapon. Developed quietly for five years, it caught both AMD and Nvidia completely off guard. I projected that it’d take a year for both competitors to respond. And they have—AMD with its Video Codec Engine and Nvidia with NVEnc.

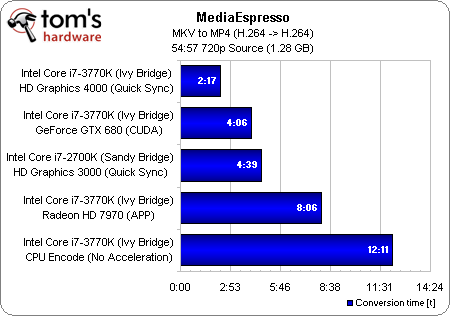

Unfortunately, AMD’s solution is still missing in action four months after it was first promised. Encoding on a Radeon HD 7000-series card still has to run on the programmable shaders rather than on the more energy-efficient fixed-function logic, which sits idle on the die.

NVEnc is up and running, and a GeForce GTX 680 manages to outperform Intel’s first-gen Quick Sync implementation.

Nvidia’s victory is short-lived, though. HD Graphics 4000 blows everything else out of the water, and that’s even after biasing MediaEspresso toward quality rather than performance.

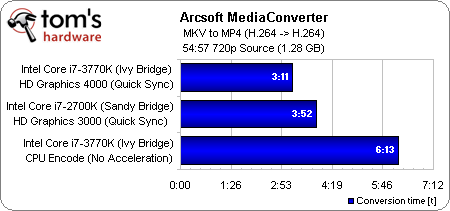

ArcSoft’s MediaConverter supports Quick Sync just fine, but the latest build doesn’t behave as well under AMD’s APP or Nvidia’s CUDA/NVEnc. Although the scaling isn’t as aggressive here, HD Graphics 4000 still cuts into the time it takes to transcode a large video file into something better suited to a portable device.

How’d They Do It?

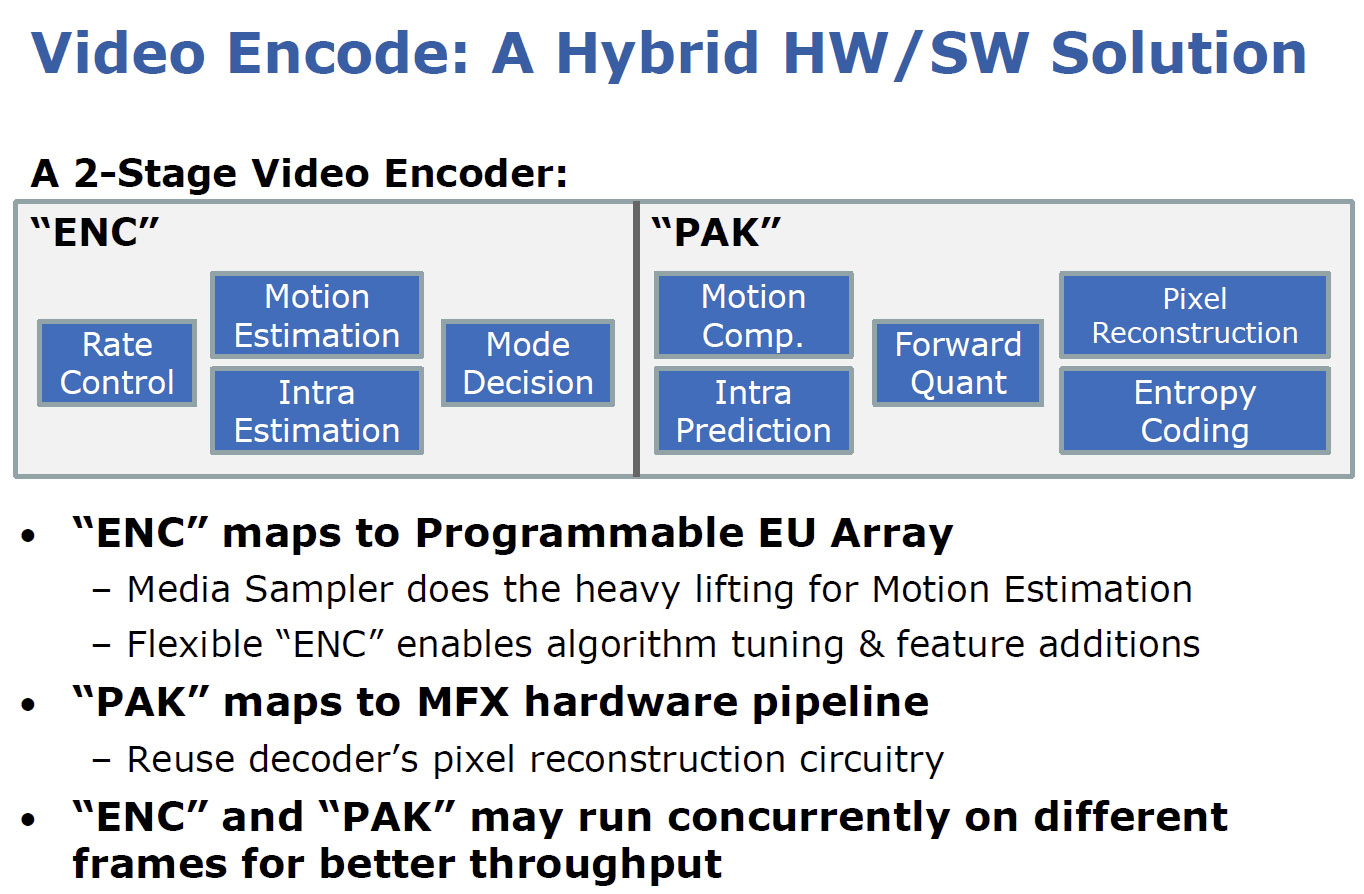

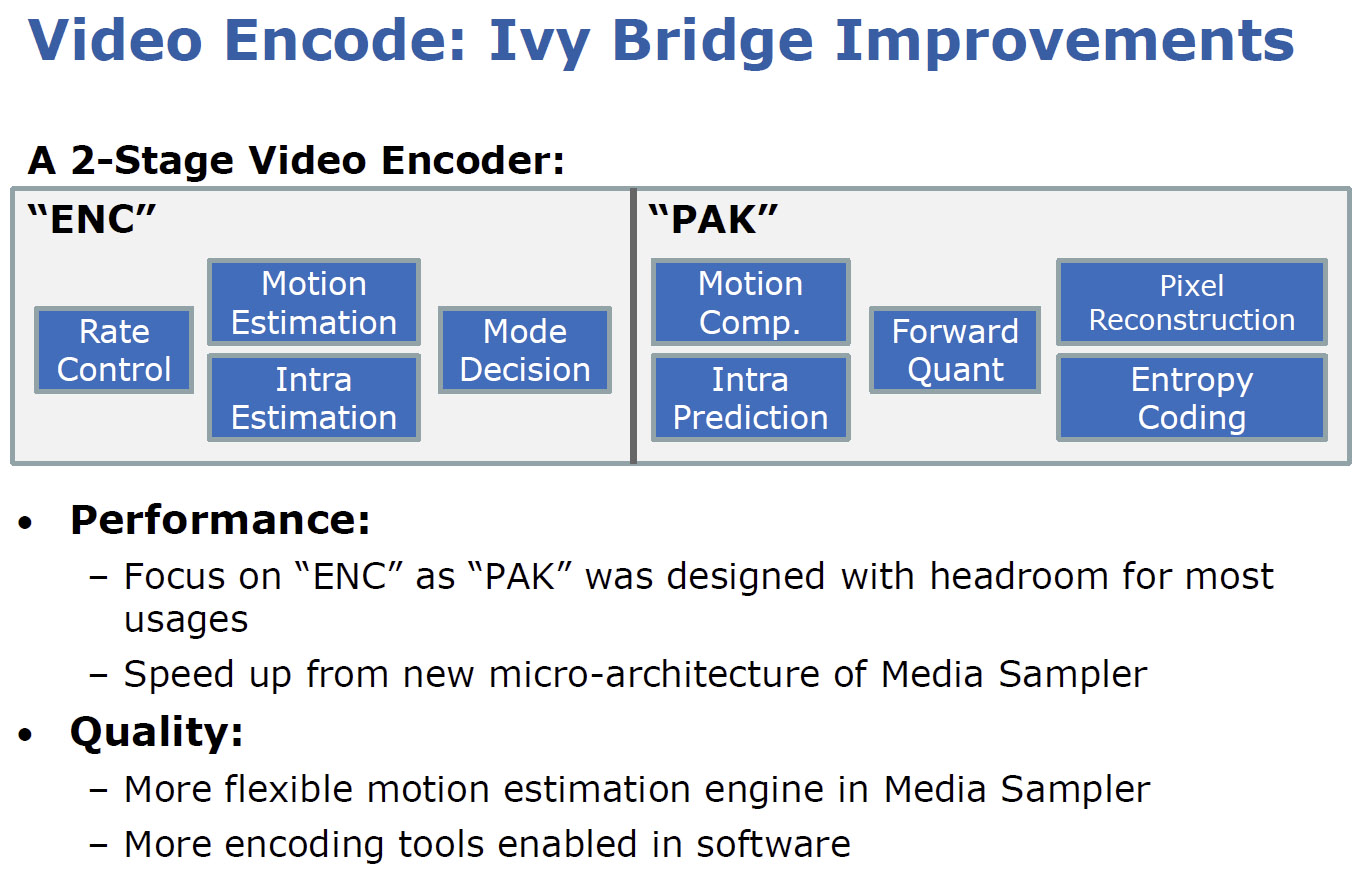

I had the pleasure of sitting down with Dr. Hong Jiang, Intel’s chief media architect, before last year’s Sandy Bridge introduction to get an in-depth look at how the company implemented Quick Sync. This year, he led a session at IDF discussing the improvements included with Ivy Bridge. The focus, he said, was squarely on performance. Faster processing gives developers more flexibility in implementing higher-quality filters. It also punches through workloads more quickly, returning the processor to idle and saving power.


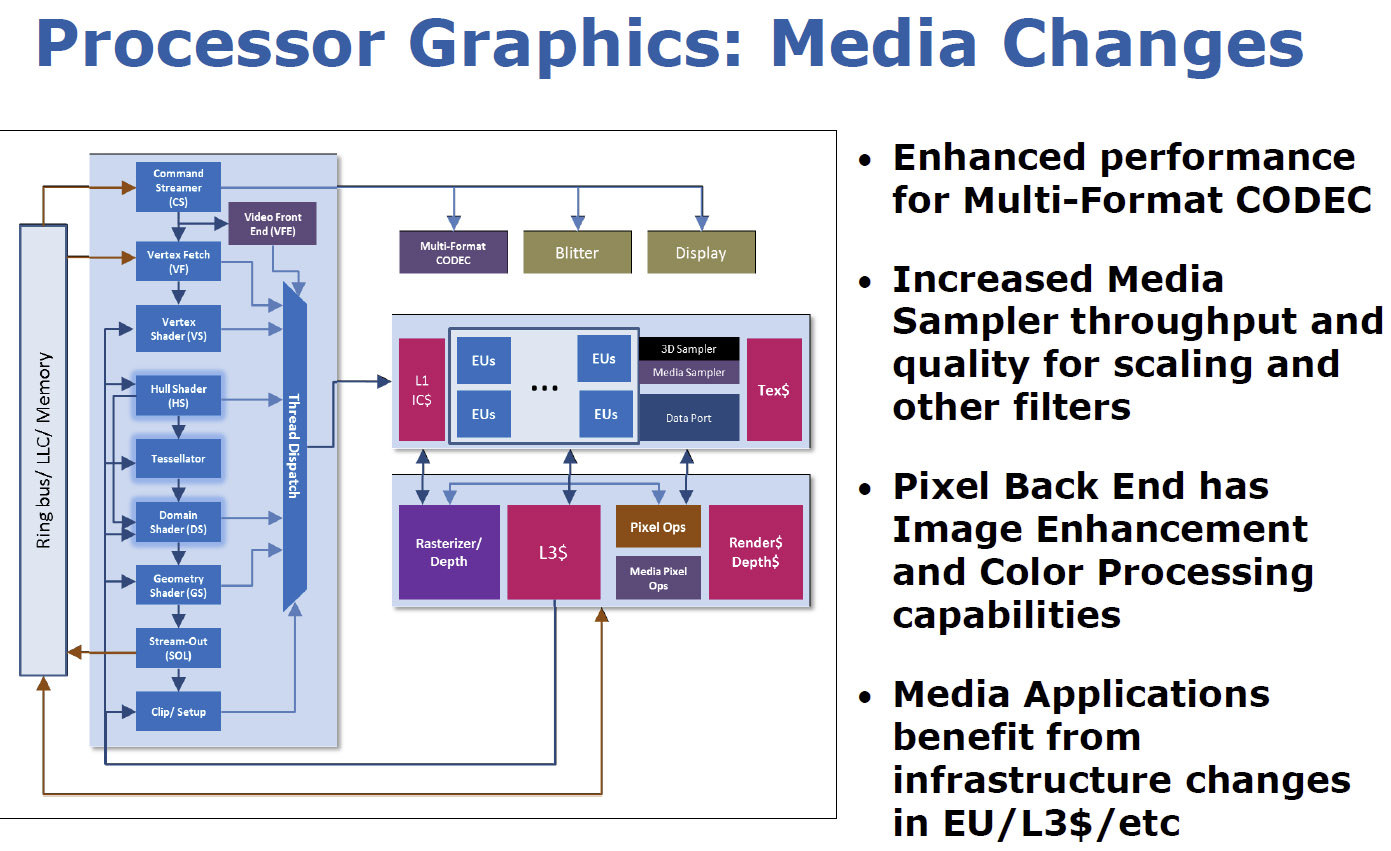

An increase in EU count helps Intel’s performance story, as does the inclusion of dedicated graphics L3 cache and greater Media Sampler throughput. Because the Media Sampler is part of that scalable third domain referred to as Slice, Intel can add resources in future generations to ratchet 3D and media performance up even more.

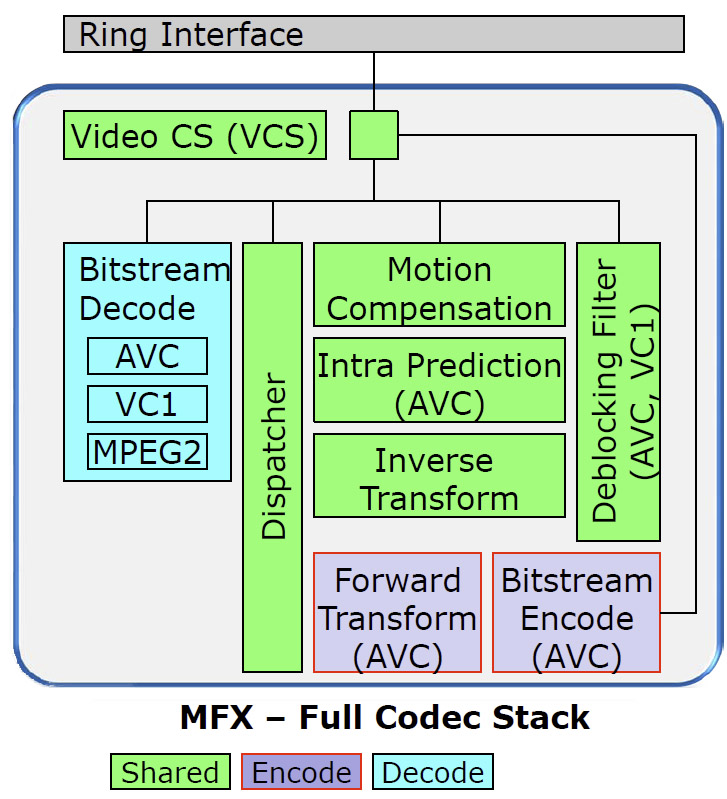

The Multi-Format Codec Engine (MFX) carries over from Sandy Bridge, enabling hardware-based H.264, VC-1, and MPEG-2 decoding, along with H.264 encoding. Intel apparently reworked its context-adaptive variable-length coding (CAVLC) and context-based adaptive binary arithmetic coding (CABAC) engines, though. Both are big mouthfuls referring to the lossless entropy coding techniques used in H.264, which the MFX can now decode faster.
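To give a feel for what lossless variable-length coding means, here is a minimal Python sketch of unsigned Exp-Golomb coding, a simpler scheme that H.264 uses for many of its header fields. It is neither CAVLC nor CABAC, and it says nothing about how Intel's MFX is built; the function names `exp_golomb_encode` and `exp_golomb_decode` are made up for this illustration. The point is only that small, common values get short codes and that decoding recovers every value exactly.

```python
def exp_golomb_encode(value):
    """Encode a non-negative integer as an unsigned Exp-Golomb bit string."""
    if value < 0:
        raise ValueError("unsigned Exp-Golomb only covers non-negative integers")
    bits = bin(value + 1)[2:]              # binary form of value + 1
    return "0" * (len(bits) - 1) + bits    # prefix of zeros announces the length


def exp_golomb_decode(bitstring):
    """Decode one code word from the start of bitstring.

    Returns (value, bits_consumed). Assumes the string holds a complete code word.
    """
    zeros = 0
    while bitstring[zeros] == "0":         # count the leading zeros
        zeros += 1
    length = 2 * zeros + 1                 # total bits in this code word
    return int(bitstring[zeros:length], 2) - 1, length


# Small values get short codes: 0 takes one bit, 3 takes five.
for value in (0, 1, 2, 3):
    print(value, exp_golomb_encode(value))
```

Because the decoder knows from the zero prefix exactly how many bits to read, code words can be packed back to back with no separators, which is the property a hardware bitstream parser relies on.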

Anticipating increasing demand for resolutions beyond 1080p in Ivy Bridge’s life cycle, Intel adds support to the MFX for 4096x4096 video decoding. In fact, Intel’s Jiang even claims the MFX can decode multiple 4K streams simultaneously.

Moving beyond Ivy Bridge’s decode capabilities, the media team also sought to improve the performance and quality of encoding tasks. A couple of paragraphs back I mentioned that the faster Media Sampler plays a part in Quick Sync’s speed-up. Specifically, it does the Motion Estimation stage’s heavy lifting, so greater throughput helps accelerate that step.
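For readers unfamiliar with motion estimation, the sketch below shows the textbook full-search, block-matching version of the idea in Python. It is a generic illustration, not Intel's implementation: the function names, the 4x4 block size, and the two-pixel search range are all assumptions chosen to keep the example small. For one block of the current frame, it tries every nearby position in the reference frame and keeps the offset with the lowest sum of absolute differences (SAD).

```python
def sad(frame_a, frame_b, ax, ay, bx, by, size):
    """Sum of absolute differences between two size x size blocks."""
    total = 0
    for dy in range(size):
        for dx in range(size):
            total += abs(frame_a[ay + dy][ax + dx] - frame_b[by + dy][bx + dx])
    return total


def best_motion_vector(current, reference, x, y, size=4, search=2):
    """Full search: test every offset within +/- search pixels, keep the lowest SAD."""
    height, width = len(reference), len(reference[0])
    best = (0, 0)
    best_cost = sad(current, reference, x, y, x, y, size)
    for my in range(-search, search + 1):
        for mx in range(-search, search + 1):
            rx, ry = x + mx, y + my
            # Skip candidates that would fall outside the reference frame.
            if rx < 0 or ry < 0 or rx + size > width or ry + size > height:
                continue
            cost = sad(current, reference, x, y, rx, ry, size)
            if cost < best_cost:
                best, best_cost = (mx, my), cost
    return best, best_cost


# Toy frames: the current frame is the reference shifted one pixel to the right.
reference = [[r * 13 + c * 7 for c in range(8)] for r in range(8)]
current = [[reference[r][c - 1] if c > 0 else 0 for c in range(8)] for r in range(8)]
vector, cost = best_motion_vector(current, reference, 2, 2)
print(vector, cost)  # (-1, 0) 0
```

Even this toy search evaluates up to 25 candidate positions of 16 pixels each for a single block. Repeated over every block of a 1080p frame, that is a lot of memory fetches and comparisons, which is why more sampler throughput pays off at this stage.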

Now, Intel claims that its hardware-based encode solution achieves quality similar to that of a software solution. Last year, in Video Transcoding Examined: AMD, Intel, And Nvidia In-Depth, we found that every hardware-based encode engine sacrificed some degree of quality compared to a pure software solution. Faced with three new accelerated transcode technologies, we really need to spend some time putting each under a microscope to analyze how that story may have changed.


tecmo34 Nice Review Chris...

Looking forward to the further information coming out this week on Ivy Bridge, as I was initially planning on buying Ivy Bridge, but now I might turn to Sandy Bridge-E

jaquith Great and long waited review - Thanks Chris!

Temps as expected are high on the IB, but better than early ES which is very good.

Those with their SB or SB-E (K/X) should be feeling good about now ;)

xtremexx saw this just pop up on google, posted 1 min ago, anyway im probably going to update i have a core i3 2100 so this is pretty good.

ojas it's heeearrree!!!!! lol i though intel wan't launching it, been scouring the web for an hour for some mention.

Now, time to read the review. :D

zanny It gets higher temps at lower frequencies? What the hell did Intel break?

I really wish they would introduce a gaming platform between their stupidly overpriced x79esque server platform and the integrated graphics chips they are pushing mainstream. 50% more transistors should be 30% or so more performance or a much smaller chip, but gamers get nothing out of Ivy Bridge.

JAYDEEJOHN It makes sense Intel is making this its quickest ramp ever, as they see ARM on the horizon in today's changing market.

They're using their process to get to places they'll need to get to in the future

verbalizer OK after reading most of the review and definitely studying the charts;

I have a few things on my mind.

1.) AMD - C'mon and get it together, you need to do better...

2.) imagine if Intel made an i7-2660K or something like the i5-2550K they have now.

3.) SB-E is not for gaming (too highly priced...) compared to i7 or i5 Sandy Bridge

4.) Ivy Bridge runs hot.......

5.) IB average 3.7% faster than i7 SB and only 16% over i5 SB = not worth it

6.) AMD - C'mon and get it together, you need to do better...

(moderator edit..)

Pezcore27 Good review.

To me it shows 2 main things. 1) that Ivy didn't improve on Sandy Bridge as much as Intel was hoping it would, and 2) just how far behind AMD actually is...

tmk221 It's a shame that this chip is marginally faster than 2700k. I guess it's all AMD fault. there is simply no pressure on Intel. Otherwise they would already moved to 8, 6, and 4 cores processors. Especially now when they have 4 cores under 77W.

Yea yea I know most apps won't use 8 cores, but that's only because there was no 8 cores processors in past, not the other way around