Video Transcoding Examined: AMD, Intel, And Nvidia In-Depth

Image Quality: Examined

We recently covered image quality in our review of the first desktop Brazos mini-ITX board. If you already read it, then you know there were some surprising results when we dug into the platform's video decode/encode capabilities. All else being equal, the output quality of encoded video was fairly similar in comparing Intel and AMD. Nvidia's CUDA-accelerated output was another story entirely, though. Any video we pumped through it had discernible blockiness in high-motion scenes. We'd been warned about this previously, but it took a lot of A-B comparison to realize how bad the situation really was.

That brings us to today's image quality tests. Our Brazos coverage left many questions unanswered; they weren't really relevant there, but they deserve their own in-depth exploration.

The Basics: Codecs and Decoders

We need to cover a few basic points before we dive into the nitty gritty. It is important to make the distinction between a codec and a file container. For example, Blu-ray content is often seen with a .m2ts file extension. That extension identifies the BDAV (Blu-ray Disc Audio/Video) container format, which is basically a storage wrapper; it says nothing about how the video inside was compressed. The video itself can use one of three codecs: MPEG-2, H.264, or VC-1.

So, what exactly is the difference between a codec and a container? Think about your most recent vacation. Your luggage is the file container, and the type of luggage you choose dictates where you put your clothes, bath products, computer, and so on. The codec (short for compressor-decompressor) is the manner in which you squash everything (the data) down to fit into your luggage. The same idea applies to virtually any multimedia content. For example, Microsoft's AVI (Audio Video Interleave) format is a file container, but it can hold video encoded with a variety of codecs, from DivX to MPEG-2.
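To make the luggage analogy concrete, here is a minimal Python sketch of how a player might keep the two decisions separate: the container only says how the streams are packed, and the codec inside decides which decoder does the unpacking. The `CONTAINER_CODECS` table and `pick_decoder()` function are hypothetical names for illustration, and the table lists only the pairings mentioned in this article, not every codec each container can hold.

```python
# Hypothetical lookup table: which video codecs a container may carry.
# Only the pairings named in this article are listed; real containers
# support more codecs than shown here.
CONTAINER_CODECS = {
    "BDAV (.m2ts)": {"MPEG-2", "H.264", "VC-1"},
    "AVI (.avi)": {"DivX", "MPEG-2"},
}


def pick_decoder(container: str, codec: str) -> str:
    """Return the decoder a player would need for this file.

    The container (the luggage) only describes how the data is packed;
    the codec (how the data was squashed) determines the decoder.
    """
    allowed = CONTAINER_CODECS.get(container)
    if allowed is None:
        raise ValueError(f"unknown container: {container}")
    if codec not in allowed:
        raise ValueError(f"{codec} is not listed for {container}")
    return f"{codec} decoder"
```

For example, `pick_decoder("BDAV (.m2ts)", "VC-1")` returns `"VC-1 decoder"`, while the same .m2ts wrapper holding H.264 video routes to a different decoder entirely.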

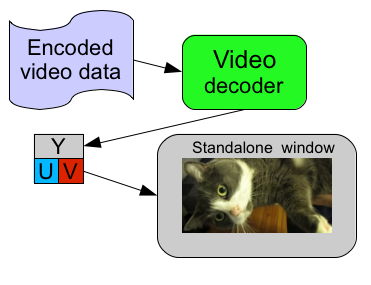

When you play something in a video player, the encoded video data generally passes through the decoder, which recognizes the format and decompresses the data into YUV output (a color space that separates brightness from color information). That YUV data can then undergo further processing before it is displayed on your screen.
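As an illustration of that last step, the sketch below converts one decoded YUV (strictly, Y'CbCr) sample into the RGB values a display expects. It assumes 8-bit, full-range BT.601 coefficients, as used by JPEG/JFIF; HD video such as Blu-ray normally uses BT.709 coefficients and a limited 16-235 luma range, which changes the constants but not the idea. The function names are our own, not any player's API.

```python
def clamp8(x: float) -> int:
    """Round to the nearest integer and clamp into the 8-bit range 0..255."""
    return max(0, min(255, round(x)))


def yuv_to_rgb(y: int, u: int, v: int) -> tuple[int, int, int]:
    """Convert one 8-bit full-range BT.601 Y'CbCr sample to RGB.

    Assumption: JFIF-style full range, with chroma centred on 128.
    """
    cb = u - 128  # blue-difference chroma, centred on zero
    cr = v - 128  # red-difference chroma, centred on zero
    r = y + 1.402 * cr
    g = y - 0.344136 * cb - 0.714136 * cr
    b = y + 1.772 * cb
    return clamp8(r), clamp8(g), clamp8(b)
```

With neutral chroma (u = v = 128), the output is a pure gray equal to the luma value, which is why a decoder can hand off brightness and color separately.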

Now, there are two types of decoders: software and hardware. Prior to UVD, PureVideo, and Intel's GMA 4500MHD, video was decoded using software-based decoders that relied on host processing power. That's why everyone used to make a big deal about playback software. CyberLink and InterVideo (now Corel) were really the only big names in town, which explains why ATI licensed the PowerDVD decoder for its ATI DVD Decoder back in the day. Of course, software decoders eat processor cycles, which doesn't really hurt performance on a modern CPU, but it does do bad things to battery life in a mobile environment.


Gradually, graphics card companies caught on to this and developed fixed-function decoders, which were simply logic circuits on the GPU dedicated to processing video. We refer to this as hardware acceleration today. The benefit was that host CPU utilization went down (approaching 0%) as the workload was offloaded onto the graphics processor.

This has some implications. Because every decoder processes the video, it's hard to define a clear baseline for quality or efficiency. Whether the video goes through a hardware- or software-based pipeline, something is getting its grubby mitts on that video data before it shows up on your monitor. With a software decoder, there's little reason to compare systems, because the same decoder running on different hardware produces the same output. On a single system, however, different decoders can output a different image or change the perception of image quality. For the most part, a Blu-ray disc played on Nvidia or AMD graphics hardware will look the same if you disable acceleration in PowerDVD. On both systems, video is being decoded in software on the CPU, yielding the same output.
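One way to put a number on "different decoders output a different image" is peak signal-to-noise ratio (PSNR), a standard objective metric derived from the mean squared error between two frames. The sketch below is a numeric complement to visual A-B comparison, not a method taken from this article. It assumes both frames are 8-bit luma planes flattened into equal-length lists; `psnr()` is a hypothetical helper name.

```python
import math


def psnr(reference: list[int], test: list[int], peak: int = 255) -> float:
    """PSNR in dB between two equal-size frames of 8-bit samples."""
    if len(reference) != len(test) or not reference:
        raise ValueError("frames must be non-empty and the same size")
    mse = sum((a - b) ** 2 for a, b in zip(reference, test)) / len(reference)
    if mse == 0:
        # Bit-identical output, e.g. the same software decoder on two machines.
        return math.inf
    return 10 * math.log10(peak ** 2 / mse)
```

Higher is closer to the reference; two frames that differ by one level in every pixel score about 48 dB, while identical frames score infinity. PSNR does not always track perceived quality, so it supplements rather than replaces looking at the frames.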

When you add hardware decoding to the mix, everything can look different. Why? Modern GPUs already have a portion of their design specifically dedicated to decoding and processing video data. This is the aforementioned fixed-function logic. The hardware-based decode acceleration on a Sandy Bridge-based processor is going to be designed and programmed differently from an AMD or Nvidia graphics card.

We should make this completely clear: there are no purely general-purpose GPU decoders. There is no decoder that runs completely in DirectCompute, APP, or CUDA. And any requests to add that support are nutty, because it'd be a self-defeating feature. As we explained in our Flash article, GPGPU is for processing raw data in a highly parallelized manner. But there is more to video than just raw data. There is a lot of image processing that occurs, and much of it has to be done in a serial manner. Fixed-function decoders live to decode and process video; they don't serve any other purpose. Shifting that burden to more general-purpose compute resources would be one step away from moving it back onto the CPU itself, since in both cases you'd be working with a software-based decoder.
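To see why some stages resist parallelization, consider entropy decoding. H.264 codes many syntax elements with unsigned Exp-Golomb codes, ue(v): count the leading zero bits, skip the 1 that ends them, then read that many more bits. Because every code has a variable length, the decoder cannot know where code n starts until it has finished code n-1, so there is no obvious way to hand different codes to different GPU threads. The minimal sketch below decodes a string of '0'/'1' characters. It is a simplification (real decoders read packed bytes, and residual data uses CAVLC or CABAC, which carry the same sequential dependency), and `decode_ue_stream()` is a hypothetical name.

```python
def decode_ue_stream(bits: str) -> list[int]:
    """Decode consecutive unsigned Exp-Golomb ue(v) codes from a bit string."""
    values = []
    pos = 0
    while pos < len(bits):
        # The run of leading zeros tells us how long the rest of the code is.
        leading_zeros = 0
        while pos < len(bits) and bits[pos] == "0":
            leading_zeros += 1
            pos += 1
        if pos >= len(bits):
            raise ValueError("stream ended inside a code")
        pos += 1  # skip the '1' that terminates the zero prefix
        if pos + leading_zeros > len(bits):
            raise ValueError("stream ended inside a code")
        suffix = bits[pos:pos + leading_zeros]
        # Only now do we know where the next code begins: a serial dependency.
        pos += leading_zeros
        info = int(suffix, 2) if suffix else 0
        values.append((1 << leading_zeros) - 1 + info)
    return values
```

For example, `decode_ue_stream("1010011")` returns `[0, 1, 2]`: the codes are "1", "010", and "011", and each boundary is only discovered by finishing the code before it.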

With that said, Elemental Technologies (the company you likely know as having developed Badaboom) is unique in that it designed an MPEG-2 CUDA-based decoder. But it is not a pure GPGPU decoder. Parts of the pipeline, such as entropy encoding, syntax encoding, syntax decoding, and entropy decoding, have to be processed in a serial manner. Other parts can be designed to run in parallel, such as motion estimation, motion compensation, quantization, the discrete cosine transform, deblocking filtering, and in-loop deblocking. That is why Elemental's MPEG-2 decoder is really a half-and-half solution. Some stuff runs on the CPU; some runs on the CUDA cores.
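The parallel-friendly half of the pipeline looks very different. MPEG-2 transforms each 8x8 block independently, so once serial parsing has produced the coefficients, every block's inverse discrete cosine transform can run at the same time. The sketch below is a naive textbook 2D inverse DCT (the standard 8x8 formula, without the fast factorizations real decoders use), mapped across blocks with a thread pool. Treat it as a demonstration of data independence rather than speed: CPython's global interpreter lock keeps these threads from doing the math truly in parallel, whereas a GPU would assign blocks to separate cores. The function names are hypothetical.

```python
import math
from concurrent.futures import ThreadPoolExecutor

N = 8  # MPEG-2 uses 8x8 transform blocks


def idct_8x8(coeffs: list[list[float]]) -> list[list[float]]:
    """Naive 2D inverse DCT of one 8x8 block of coefficients."""
    def c(k: int) -> float:
        # Normalization factor: 1/sqrt(2) for the DC term, 1 otherwise.
        return 1 / math.sqrt(2) if k == 0 else 1.0

    out = [[0.0] * N for _ in range(N)]
    for x in range(N):
        for y in range(N):
            total = 0.0
            for u in range(N):
                for v in range(N):
                    total += (c(u) * c(v) * coeffs[u][v]
                              * math.cos((2 * x + 1) * u * math.pi / 16)
                              * math.cos((2 * y + 1) * v * math.pi / 16))
            out[x][y] = total / 4
    return out


def idct_all_blocks(blocks: list[list[list[float]]],
                    workers: int = 4) -> list[list[list[float]]]:
    """Inverse-transform every block; no block depends on any other."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(idct_8x8, blocks))
```

A block whose only nonzero coefficient is a DC value of 80 decodes to a flat 8x8 patch of 10s, and the order in which blocks are processed has no effect on the result.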



spoiled1 Tom,

You have been around for over a decade, and you still haven't figured out the basics of web interfaces.

When I want to open an image in a new tab using Ctrl+Click, that's what I want to do, I do not want to move away from my current page.

Please fix your links.

Thanks

spammit omgf, ^^^this^^^.

I signed up just to agree with this. I've been reading this site for over 5 years and I have hoped and hoped that this site would change to accommodate the user, but, clearly, that's not going to happen. Not to mention all the spelling and grammar mistakes in the recent year. (Don't know about this article, didn't read it all).

I didn't even finish reading the article and looking at the comparisons because of the problem spoiled1 mentioned. I don't want to click on a single image 4 times to see it fullsize, and I certainly don't want to do it 4 times (mind you, you'd have to open the article 4 separate times) in order to compare the images side by side (alt-tab, etc).

Just abysmal.

cpy THW have worst image presentation ever, you can't even load multiple images so you can compare them in different tabs, could you do direct links to images instead of this bad design?

ProDigit10 I would say not long from here we'll see encoders doing video parallel encoding by loading pieces between keyframes. keyframes are tiny jpegs inserted in a movie preferably when a scenery change happens that is greater than what a motion codec would be able to morph the existing screen into.

The data between keyframes can easily be encoded in a parallel pipeline or thread of a cpu or gpu.

Even on mobile platforms integrated graphics have more than 4 shader units, so I suspect even on mobile graphics cards you could run as much as 8 or more threads on encoding (depending on the gpu, between 400 and 800 Mhz), that would be equal to encoding a single thread video at the speed of a cpu encoding with speed of 1,6-6,4GHz, not to mention the laptop or mobile device still has at least one extra thread on the CPU to run the program, and operating system, as well as arrange the threads and be responsible for the reading and writing of data, while the other thread(s) of a CPU could help out the gpu in encoding video.

The only issue here would be B-frames, but for fast encoding video you could give up 5-15MB video on a 700MB file due to no B-frame support, if it could save you time by processing threads in parallel.

intelx first thanks for the article i been looking for this, but your gallery really sucks, i mean it takes me good 5 mins just to get 3 pics next to each other to compare, the gallery should be updated to something else for fast viewing.

_Pez_ Ups ! for tom's hardware's web page :P, Fix your links. :) !. And I agree with them; spoiled1 and spammit.

AppleBlowsDonkeyBalls I agree. Tom's needs to figure out how to properly make images accessible to the readers.

kikireeki Quoting spoiled1:

> Tom, You have been around for over a decade, and you still haven't figured out the basics of web interfaces. When I want to open an image in a new tab using Ctrl+Click, that's what I want to do, I do not want to move away from my current page. Please fix your links. Thanks

and to make things even worse, the new page will show you the picture with the same thumbnail size and you have to click on it again to see the full image size, brilliant!

acku Apologies to all. There are things I can control in the presentation of an article and things that I cannot, but everyone here has given fair criticism. I agree that right click and opening to a new window is an important feature for articles on image quality. I'll make sure Chris continues to push the subject with the right people.

Web dev is a separate department, so we have no ability to influence the speed at which a feature is implemented. In the meantime, I've uploaded all the pictures to ZumoDrive. It's packed as a single download. http://www.zumodrive.com/share/anjfN2YwMW

Remember to view pictures in the native resolution to avoid scalers.

Cheers

Andrew Ku

TomsHardware.com

Reynod An excellent read though Andrew.

Please give us an update in a few months to see if there has been any noticeable improvements ... keep your base files for reference.

I would imagine Quick Sync is now a major plus for those interested in rendering ... and AMD and NVidia have some work to do.

I appreciate the time and effort you put into the research and the depth of the article.

Thanks,

:)