Video Transcoding Examined: AMD, Intel, And Nvidia In-Depth

Software Decoding: All CPU, All the Time

Software-based decoding is a different beast altogether. So long as the instruction sets are the same, we are dealing with identical images, regardless of who manufactures the hardware.

When you use a hardware-based decoder, the video data is processed through a specific path that uses DXVA API calls for hardware-accelerated decoding. To a certain degree, the flow of data can still be handled differently on dissimilar pieces of hardware using the same DXVA-accelerated decoder. As a consumer, you have no way of knowing how much of the DXVA pipeline has been implemented (and to be fair, you probably don't care). That is why hardware decoding in WMP12 and PowerDVD can still produce a different image, even though they both use EVR and enjoy hardware-accelerated decoding.

For software-based decoding, we are using FrameShots to capture specific frames. Since it uses software-based decoders, there was no way we could do the first part of our analysis with it. Furthermore, we aren't able to compare it head-to-head against the hardware-accelerated decoding shots generated from WMP12. Why? This program doesn't use EVR. Instead, it uses Video Mixing Renderer 9 (VMR9). For that reason, we are only able to compare two software codecs against one another.

FrameShots uses a custom DirectShow filter that sits on the DS filter tree between the video decoder and the renderer. This means we are actually eliminating the video renderer as a variable to a certain extent, something we could not do with WMP12. The difference now is that we're using the DS filter to capture a specific subset of video data.

It is hard to pick out the differences here, but if you look at the edges of objects like the Humvees and the nose of the white plane to the right, aliasing seems a bit heavier with ffdshow. Even though we captured the same frame in both cases, aliasing is occurring in different places. This makes it all the harder to call out a clear winner here. After all, a jagged line is a jagged line.

These two images look basically identical when you overlay them. There are only two noticeable differences. With ffdshow, you get a bit more detail on the light reflecting off the top of the car. Yet, strangely, the decoder (or the renderer) seems to drop the top part of the colon in the top left time stamp.
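For readers who want to repeat the overlay comparison on their own captures, here is a minimal sketch of a per-pixel difference check. It is not the method used for this article. It assumes each frame has already been loaded as rows of 8-bit grayscale values, and the function name `diff_frames` and the tiny sample frames are hypothetical, chosen only for illustration.

```python
from typing import List, Optional, Tuple

# Hypothetical representation: a frame is a list of rows of 8-bit gray values.
Frame = List[List[int]]
Box = Tuple[int, int, int, int]  # (left, top, right, bottom), inclusive


def diff_frames(a: Frame, b: Frame, threshold: int = 0) -> Tuple[int, Optional[Box]]:
    """Count pixels whose absolute difference exceeds `threshold`.

    Returns the count and the bounding box enclosing every differing
    pixel, or (0, None) when the frames match within the threshold.
    """
    if len(a) != len(b) or any(len(ra) != len(rb) for ra, rb in zip(a, b)):
        raise ValueError("frames must have identical dimensions")

    count = 0
    xs: List[int] = []
    ys: List[int] = []
    for y, (row_a, row_b) in enumerate(zip(a, b)):
        for x, (pa, pb) in enumerate(zip(row_a, row_b)):
            if abs(pa - pb) > threshold:
                count += 1
                xs.append(x)
                ys.append(y)

    if count == 0:
        return 0, None
    return count, (min(xs), min(ys), max(xs), max(ys))


# Made-up 4x3 frames standing in for two captures of the same frame.
frame_a = [[10, 10, 10, 10], [10, 10, 10, 10], [10, 10, 10, 10]]
frame_b = [[10, 10, 10, 14], [10, 10, 9, 10], [10, 10, 10, 10]]

changed, box = diff_frames(frame_a, frame_b)
```

A bounding box is a quick way to find small localized differences, such as a dropped time stamp colon, without inspecting the whole frame. Raising `threshold` ignores differences too small to see.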

Skipping directly to GP, we see a bit of a difference. MainConcept shows less detail and appears a bit smoother if you are looking at it pixel by pixel. In ffdshow, Gwyneth's hair appears a bit sharper, but overall, the picture looks grainier, too. Strangely, MainConcept is the one that drops half a colon in the time stamp.


What would an image quality comparison be without explosions? This seems to be another one of those cases where anything in high motion shows little variation. Honestly, we see more differences creep up in the slower scenes. I wish I could have shown the explosion screenshots for hardware decoding, but the native screen capture exceeds the limits of our image server. We have posted them on ZumoDrive, if you want to examine them yourself.

On a final note, when you use FrameShots without having installed ffdshow tryouts, it will default to the next available decoder. On our system, this happens to be the H.264 decoder from our installation of MainConcept Reference v2.0.0.1555. The decoder is an unknown version, so we are simply listing the program version it was included with, and we disabled hardware-accelerated decoding in our quality comparisons of the MainConcept H.264 decoder. We are aware that ffdshow tryouts recently added a limited degree of DXVA support, but it isn't part of the latest stable build.

There is a plethora of software decoders available on the market. We are only selecting ffdshow tryouts (build 3154) and MainConcept to make a point: not all software decoders are created equal.

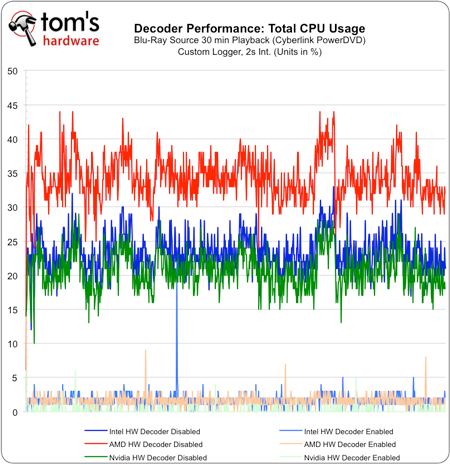

On that note, we want to present an interesting chart. It seems that, even in the same software-based decoder, we can get different performance results. When we play back our unprotected H.264 source in PowerDVD (build 10.0.2325.21), the results for hardware-accelerated decoding fall to 5% CPU utilization and under for all three graphics configurations.

But something strange happens when you disable hardware-accelerated decoding. In theory, everything should be running on the Core i5-2500K. Yet, in software-only mode, the numbers indicate otherwise. Somehow, simply dropping in a GeForce GTX 580 results in the lowest CPU use (remember, this is all running on the host processor). With Intel's integrated graphics enabled, the utilization is only marginally higher. Perhaps that's a result of the HD Graphics engine using resources that'd otherwise be freed up for the processing cores. More alarming, though, is that adding a Radeon HD 6970 spikes CPU utilization more noticeably. Indeed, if you look at the graph, you can see the CPU spikes occur in the same places, because the software-based decoder is dealing with processing-hungry scenes at the same times.
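One rough way to check whether utilization spikes line up between two configurations is to correlate their sampled CPU traces. The sketch below is only an illustration under stated assumptions: it assumes both traces were sampled at the same instants during the same clip, the helper names `summarize` and `pearson` are hypothetical, and the sample numbers are invented placeholders rather than measurements from our tests.

```python
from math import sqrt
from typing import Sequence, Tuple


def summarize(samples: Sequence[float]) -> Tuple[float, float]:
    """Return (mean, peak) CPU utilization for one trace."""
    if not samples:
        raise ValueError("trace is empty")
    return sum(samples) / len(samples), max(samples)


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation of two equally long traces.

    A value near 1.0 suggests the spikes rise and fall together,
    even if one trace sits at a higher level overall.
    """
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("traces must have equal length of at least 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("a constant trace has no defined correlation")
    return cov / sqrt(var_x * var_y)


# Invented placeholder traces (percent CPU), NOT data from this article.
trace_a = [20, 22, 45, 21, 50, 23]
trace_b = [30, 33, 70, 31, 78, 34]

mean_a, peak_a = summarize(trace_a)
alignment = pearson(trace_a, trace_b)
```

Correlation ignores the absolute level of each trace, so it separates two questions: whether the spikes coincide, and how much higher one configuration runs overall. The mean and peak from `summarize` cover the second.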

We raised this issue to AMD and CyberLink, but we still have no satisfactory answer as to why it's occurring. We'll update this space should we get a clear answer.

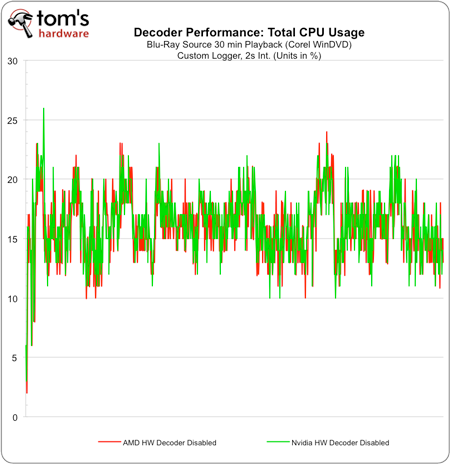

[Update 2/4/2011]: It turns out this was a bug, likely on CyberLink's side. We contacted Corel for a copy of WinDVD. The results speak for themselves. No matter what GPU you drop in, CPU decoding should be the same, provided you use the same software decoder.


spoiled1: Tom,

You have been around for over a decade, and you still haven't figured out the basics of web interfaces.

When I want to open an image in a new tab using Ctrl+Click, that's what I want to do, I do not want to move away from my current page.

Please fix your links.

Thanks

spammit: omgf, ^^^this^^^.

I signed up just to agree with this. I've been reading this site for over 5 years and I have hoped and hoped that this site would change to accommodate the user, but, clearly, that's not going to happen. Not to mention all the spelling and grammar mistakes in the recent year. (Don't know about this article, didn't read it all).

I didn't even finish reading the article and looking at the comparisons because of the problem sploiled1 mentioned. I don't want to click on a single image 4 times to see it fullsize, and I certainly don't want to do it 4 times (mind you, you'd have to open the article 4 separate times) in order to compare the images side by side (alt-tab, etc).

Just abysmal.

cpy: THW have worst image presentation ever, you can't even load multiple images so you can compare them in different tabs, could you do direct links to images instead of this bad design?

ProDigit10: I would say not long from here we'll see encoders doing video parallel encoding by loading pieces between keyframes. keyframes are tiny jpegs inserted in a movie preferably when a scenery change happens that is greater than what a motion codec would be able to morph the existing screen into.

The data between keyframes can easily be encoded in a parallel pipeline or thread of a cpu or gpu.

Even on mobile platforms integrated graphics have more than 4 shader units, so I suspect even on mobile graphics cards you could run as much as 8 or more threads on encoding (depending on the gpu, between 400 and 800 Mhz), that would be equal to encoding a single thread video at the speed of a cpu encoding with speed of 1,6-6,4GHz, not to mention the laptop or mobile device still has at least one extra thread on the CPU to run the program, and operating system, as well as arrange the threads and be responsible for the reading and writing of data, while the other thread(s) of a CPU could help out the gpu in encoding video.

The only issue here would be B-frames, but for fast encoding video you could give up 5-15MB video on a 700MB file due to no B-frame support, if it could save you time by processing threads in parallel.

intelx: first thanks for the article i been looking for this, but your gallery really sucks, i mean it takes me good 5 mins just to get 3 pics next to each other to compare , the gallery should be updated to something else for fast viewing.

_Pez_: Ups ! for tom's hardware's web page :P, Fix your links. :) !. And I agree with them; spoiled1 and spammit.

AppleBlowsDonkeyBalls: I agree. Tom's needs to figure out how to properly make images accessible to the readers.

kikireeki: (quoting spoiled1) "Tom, You have been around for over a decade, and you still haven't figured out the basics of web interfaces. When I want to open an image in a new tab using Ctrl+Click, that's what I want to do, I do not want to move away from my current page. Please fix your links. Thanks"

and to make things even worse, the new page will show you the picture with the same thumbnail size and you have to click on it again to see the full image size, brilliant!

acku: Apologies to all. There are things I can control in the presentation of an article and things that I cannot, but everyone here has given fair criticism. I agree that right click and opening to a new window is an important feature for articles on image quality. I'll make sure Chris continues to push the subject with the right people.

Web dev is a separate department, so we have no ability to influence the speed at which a feature is implemented. In the meantime, I've uploaded all the pictures to ZumoDrive. It's packed as a single download. http://www.zumodrive.com/share/anjfN2YwMW

Remember to view pictures in the native resolution to avoid scalers.

Cheers

Andrew Ku

TomsHardware.com

Reynod: An excellent read though Andrew.

Please give us an update in a few months to see if there has been any noticeable improvements ... keep your base files for reference.

I would imagine Quicksynch is now a major plus for those interested in rendering ... and AMD and NVidia have some work to do.

I appreciate the time and effort you put into the research and the depth of the article.

Thanks,

:)