Video Transcoding Examined: AMD, Intel, And Nvidia In-Depth

Transcoding Quality Revisited: CUDA Problems?

This article was spawned from earlier image quality tests that we ran for our desktop Brazos coverage. As far as we are concerned, sacrificing quality for speed is never a good thing.

When Chris first sent me the image quality screenshots, the differences were too stark not to notice. CUDA-based encoding comes out noticeably worse, and Nvidia representatives stopping by our lab acknowledged the issue.

You can see the difference for yourself by downloading the actual video clips. We threw three examples up on ZumoDrive: CUDA-based encoding, the Ion platform with hardware-accelerated encode and decode turned off, and AMD’s E-350 with hardware-accelerated decoding enabled. Watch them back to back; the issues are most apparent in scenes with lots of motion. The best way to describe the problem is latent blocking or pixelation that distorts the output.

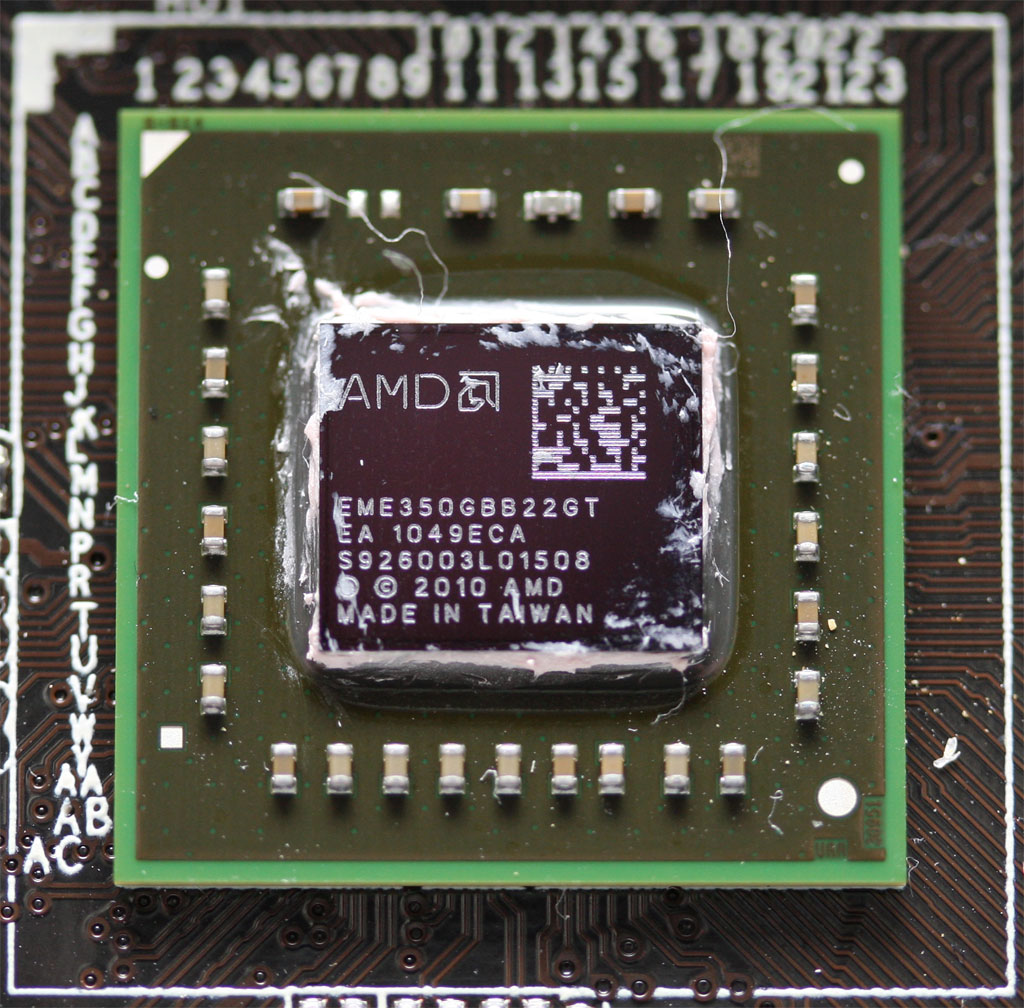

The comparison here is really very, very basic. Chris compared four test beds: the E-350-powered ASRock E350M1, the Athlon II X2 240e-driven 880GITX-A-E, the IONITX-P-E with Intel’s Celeron SU2300, and the Atom-powered IONITX-L-E. The two boards with Nvidia’s Ion chipset are going to deliver the same output as soon as you start involving hardware-accelerated encode and decode. The other two aren’t powerful enough to enable hardware-based encode support. So, our quality comparison becomes CUDA versus pure software versus two AMD platforms that apply hardware-accelerated decode support.

If you download the full-sized (720p) versions of all three of these software-based images and tab through them, you’ll see the sort of quality variation that’d require you to diff each shot, and that’s in a still frame. For all intents and purposes, they’re the same.

The same goes for all three boards with hardware-accelerated decoding applied. We can clearly see that what comes out of the decoder and is then operated on by the CPU during the encode stage is largely identical. Alternatively, you can tab between the software-only shot and the corresponding hardware decode version shown above to see that the decoded content is the same, whether the process happens on the CPU or GPU.
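The "diff each shot" comparison described above can also be expressed as a number. The following is a minimal sketch, not the method used for these tests: it assumes two still frames have already been decoded into rows of 8-bit grayscale values, and the function names `mse` and `psnr` are illustrative. It computes the mean squared error and the peak signal-to-noise ratio (PSNR) between the frames; near-identical frames yield a very high PSNR, while visible blocking drives the value down.

```python
import math


def mse(frame_a, frame_b):
    """Mean squared error between two equally sized 8-bit grayscale frames.

    Each frame is a list of rows, and each row is a list of ints in 0..255.
    """
    if len(frame_a) != len(frame_b) or any(
        len(row_a) != len(row_b) for row_a, row_b in zip(frame_a, frame_b)
    ):
        raise ValueError("frames must have identical dimensions")

    total = 0
    count = 0
    for row_a, row_b in zip(frame_a, frame_b):
        for pixel_a, pixel_b in zip(row_a, row_b):
            total += (pixel_a - pixel_b) ** 2
            count += 1

    if count == 0:
        raise ValueError("frames must not be empty")
    return total / count


def psnr(frame_a, frame_b, peak=255):
    """Peak signal-to-noise ratio in dB; infinite when the frames match exactly."""
    error = mse(frame_a, frame_b)
    if error == 0:
        return math.inf
    return 10 * math.log10(peak ** 2 / error)


if __name__ == "__main__":
    # Tiny hypothetical 2x2 frames standing in for real 720p screenshots.
    software_only = [[100, 100], [100, 100]]
    hardware_decode = [[101, 100], [100, 100]]
    print(f"PSNR: {psnr(software_only, hardware_decode):.2f} dB")
```

A single still frame only tells part of the story, since the blocking discussed here is worst in motion; averaging such a metric over many frames of each clip would give a fairer picture.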

And then we let Ion’s CUDA cores handle the encode stage and things quickly get ugly. The specific frame Chris chose doesn't even show the worst of the worst from our 2:30-long trailer. But if you download the videos, the difference is extremely evident within the first thirty seconds.

With our previous results in mind, let's move on to a more serious set of transcoding tests.

spoiled1: Tom,

You have been around for over a decade, and you still haven't figured out the basics of web interfaces.

When I want to open an image in a new tab using Ctrl+Click, that's what I want to do, I do not want to move away from my current page.

Please fix your links.

Thanks

spammit: omgf, ^^^this^^^.

I signed up just to agree with this. I've been reading this site for over 5 years and I have hoped and hoped that this site would change to accommodate the user, but, clearly, that's not going to happen. Not to mention all the spelling and grammar mistakes in the recent year. (Don't know about this article, didn't read it all).

I didn't even finish reading the article and looking at the comparisons because of the problem spoiled1 mentioned. I don't want to click on a single image 4 times to see it fullsize, and I certainly don't want to do it 4 times (mind you, you'd have to open the article 4 separate times) in order to compare the images side by side (alt-tab, etc).

Just abysmal.

cpy: THW have worst image presentation ever, you can't even load multiple images so you can compare them in different tabs, could you do direct links to images instead of this bad design?

ProDigit10: I would say not long from here we'll see encoders doing video parallel encoding by loading pieces between keyframes. Keyframes are tiny jpegs inserted in a movie preferably when a scenery change happens that is greater than what a motion codec would be able to morph the existing screen into.

The data between keyframes can easily be encoded in a parallel pipeline or thread of a cpu or gpu.

Even on mobile platforms integrated graphics have more than 4 shader units, so I suspect even on mobile graphics cards you could run as much as 8 or more threads on encoding (depending on the gpu, between 400 and 800 MHz), that would be equal to encoding a single thread video at the speed of a cpu encoding with speed of 1.6-6.4 GHz, not to mention the laptop or mobile device still has at least one extra thread on the CPU to run the program, and operating system, as well as arrange the threads and be responsible for the reading and writing of data, while the other thread(s) of a CPU could help out the gpu in encoding video.

The only issue here would be B-frames, but for fast encoding video you could give up 5-15MB video on a 700MB file due to no B-frame support, if it could save you time by processing threads in parallel.

intelx: first thanks for the article i been looking for this, but your gallery really sucks, i mean it takes me good 5 mins just to get 3 pics next to each other to compare , the gallery should be updated to something else for fast viewing.

_Pez_: Ups ! for tom's hardware's web page :P, Fix your links. :) !. And I agree with them; spoiled1 and spammit.

AppleBlowsDonkeyBalls: I agree. Tom's needs to figure out how to properly make images accessible to the readers.

kikireeki, quoting spoiled1: "Tom, You have been around for over a decade, and you still haven't figured out the basics of web interfaces. When I want to open an image in a new tab using Ctrl+Click, that's what I want to do, I do not want to move away from my current page. Please fix your links. Thanks"

And to make things even worse, the new page will show you the picture with the same thumbnail size and you have to click on it again to see the full image size, brilliant!

acku: Apologies to all. There are things I can control in the presentation of an article and things that I cannot, but everyone here has given fair criticism. I agree that right click and opening to a new window is an important feature for articles on image quality. I'll make sure Chris continues to push the subject with the right people.

Web dev is a separate department, so we have no ability to influence the speed at which a feature is implemented. In the meantime, I've uploaded all the pictures to ZumoDrive. It's packed as a single download. http://www.zumodrive.com/share/anjfN2YwMW

Remember to view pictures in the native resolution to avoid scalers.

Cheers

Andrew Ku

TomsHardware.com

Reynod: An excellent read though Andrew.

Please give us an update in a few months to see if there have been any noticeable improvements ... keep your base files for reference.

I would imagine Quick Sync is now a major plus for those interested in rendering ... and AMD and NVidia have some work to do.

I appreciate the time and effort you put into the research and the depth of the article.

Thanks,

:)