MSI Big Bang Fuzion: Pulling The Covers Off Of Lucid’s Hydra Tech

The Many Heads Of Hydra

Again, we’ll go into more depth on the Fuzion’s basic board design and BIOS when it comes time to explore the similar MSI Trinergy next week. The real differentiator here is Lucid’s Hydra 200 ASIC, which takes the place of Nvidia’s nForce 200. The Trinergy and Fuzion are both $350 P55-based boards, so what this really boils down to is a choice: SLI/CrossFireX, with support for up to 3-way SLI, or the flexibility to mix and match cards and vendors.

When you go the former route, you play by the rules set forth by ATI and Nvidia for achieving a compatible multi-GPU configuration. That means identical GPU models in an SLI setup and GPUs from the same family when you go CrossFire. But you also get the validation those companies put into their established technologies.

Should you choose the latter path instead, you have five possible combinations opened up to you, three of which are exclusive to Lucid’s solution:

| Mode | LucidLogix-Exclusive | Example Config |
|---|---|---|
| N-Mode: Identical Nvidia Cards | No | GeForce GTX 285 / GeForce GTX 285 |
| N-Mode: Non-Identical Nvidia Cards | Yes | GeForce GTX 285 / GeForce GTX 260 |
| A-Mode: Identical ATI Cards | No | Radeon HD 5870 / Radeon HD 5870 |
| A-Mode: Non-Identical ATI Cards | Yes | Radeon HD 5870 / Radeon HD 4870 |
| X-Mode: Multi-Vendor | Yes | Radeon HD 5870 / GeForce GTX 285 |

So, we have a trio of capabilities you’ve never seen before: N-mode using non-identical Nvidia cards, A-mode using non-identical ATI cards, and X-mode leveraging multi-vendor interoperability. X-mode was previously a “demo” mode, but was upgraded to production status with Lucid’s most recent driver drop.

Achieving The Unachievable

The specifics of how Lucid is able to get GPUs from the same vendor (but with different performance profiles) and GPUs from different vendors working cooperatively are tightly related to the company’s load-balancing algorithms.

There’s a reason why ATI and Nvidia want you to put cards with common performance attributes together.


ATI supports three different modes for displaying your favorite game across a pair of graphics cards: alternate frame rendering, whereby each GPU handles odd or even frames; supertiling mode, which divides the screen into a checkerboard of 32x32-pixel sections rendered alternately by each GPU; and scissor mode, where the screen is split, with one part rendered by GPU 1 and the other rendered by GPU 2. ATI’s own product page admits that scissor mode is not as efficient as the company’s other techniques, though it works best in OpenGL-based titles. Of course, the supertiling and scissor modes are largely technical additions to CrossFire, since ATI’s own list of best practices suggests programming with alternate frame rendering in mind. Thus, most of the apps you’d run in CrossFire are optimized for this mode anyway.

Nvidia supports two performance modes: split-frame rendering and alternate frame rendering. Split-frame works like ATI’s scissor mode, dividing the frame up to split its workload up between GPUs. And, as with ATI’s implementation, this isn’t as efficient as AFR. Alternate frame rendering functions similarly as well, assigning one card to even frames and the other to odd.
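To make the three ways of dividing work concrete, here is a minimal Python sketch of which GPU "owns" a given frame or pixel under each mode. The function names, the two-GPU default, and the horizontal split line are assumptions for illustration only; neither vendor's driver is implemented this way as far as this article can confirm.

```python
TILE = 32  # tile edge in pixels, matching the 32x32 checkerboard described above


def afr_owner(frame_index: int, gpu_count: int = 2) -> int:
    """Alternate frame rendering: whole frames rotate across the GPUs."""
    return frame_index % gpu_count


def supertile_owner(x: int, y: int, gpu_count: int = 2) -> int:
    """Supertiling: 32x32-pixel tiles alternate between GPUs like a checkerboard."""
    return ((x // TILE) + (y // TILE)) % gpu_count


def scissor_owner(y: int, height: int, split: float = 0.5) -> int:
    """Scissor / split-frame: rows above the split go to GPU 0, the rest to GPU 1.

    A horizontal split at a fixed ratio is an assumption made for this sketch.
    """
    return 0 if y < int(height * split) else 1
```

For example, `afr_owner(7)` assigns the eighth frame to GPU 1, while `supertile_owner(32, 32)` hands the tile diagonally adjacent to the origin tile back to GPU 0.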

The problem with split-frame/scissor mode is that, while it helps alleviate the pixel processing workload, each GPU still has to store the entire frame in its memory, so geometry processing and (arguably more important) memory bandwidth aren’t helped at all. Meanwhile, tiling is negatively affected by inter-frame dependencies, such as render targets being used in the following frame.
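A rough back-of-the-envelope model shows why this limits scaling. The sketch below assumes a frame's cost splits cleanly into a part every GPU must repeat (`unsplit_ms`, standing in for geometry and other per-frame work) and a part that divides across GPUs (`pixel_ms`). Both names and the example numbers are hypothetical, and the model ignores synchronization and memory-bandwidth effects entirely.

```python
def sfr_frame_time(unsplit_ms: float, pixel_ms: float, gpu_count: int = 2) -> float:
    """Split-frame: every GPU repeats the unsplit work; only pixel work is divided."""
    return unsplit_ms + pixel_ms / gpu_count


def afr_frame_time(unsplit_ms: float, pixel_ms: float, gpu_count: int = 2) -> float:
    """AFR, idealized: GPUs render whole frames in parallel, so the average
    interval between delivered frames is the full frame cost divided by GPU count."""
    return (unsplit_ms + pixel_ms) / gpu_count


# Hypothetical frame: 4 ms of work that cannot be split, 12 ms of pixel work.
single = 4.0 + 12.0                               # 16 ms on one GPU
sfr_speedup = single / sfr_frame_time(4.0, 12.0)  # 16 / 10 = 1.6x
afr_speedup = single / afr_frame_time(4.0, 12.0)  # 16 / 8  = 2.0x
```

Under these assumptions, the larger the share of work that cannot be split, the further split-frame rendering falls behind the idealized AFR case.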

As a result, AFR is most often used. It makes sense, then, that you’d want GPUs with identical performance working on one frame after another. And even when you have this, one frame might take milliseconds longer to render than the one before, resulting in an artifact of multi-GPU configurations referred to as micro-stuttering. Though it’s less perceptible at high frame rates, whenever I do a story covering CrossFire or SLI, I inevitably get asked if the hardware in question exhibits signs of micro-stuttering. We’ll go into more depth on this shortly…

…the point is that Lucid did its homework in setting forth Hydra’s minimum requirements and determined it needed to: allow non-identical GPUs to work in the same system, facilitate scalability with more than one card installed, and eliminate the need for over-the-top connectors between cards. And since the company so clearly defines its goals, it becomes particularly easy for us to evaluate where it stands in relation to them today.

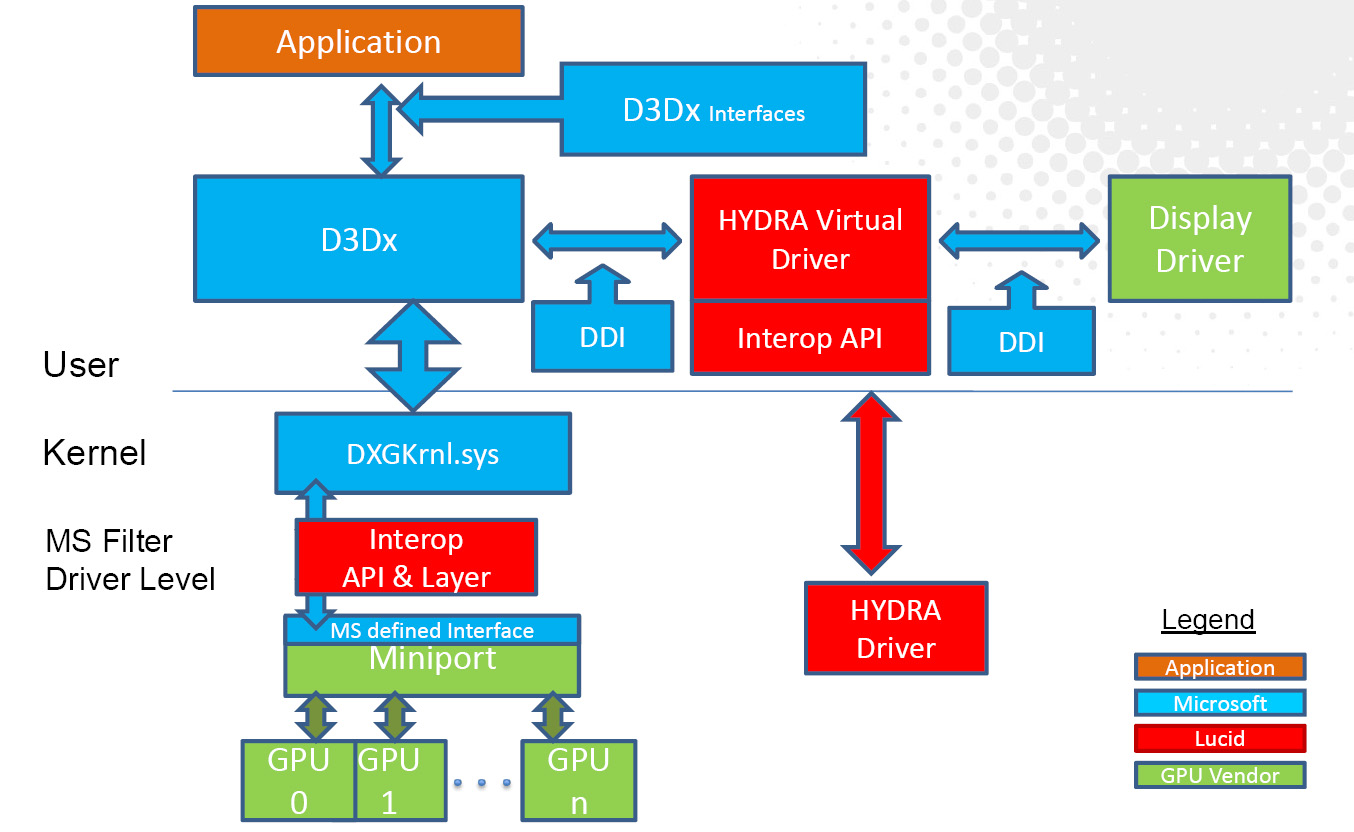

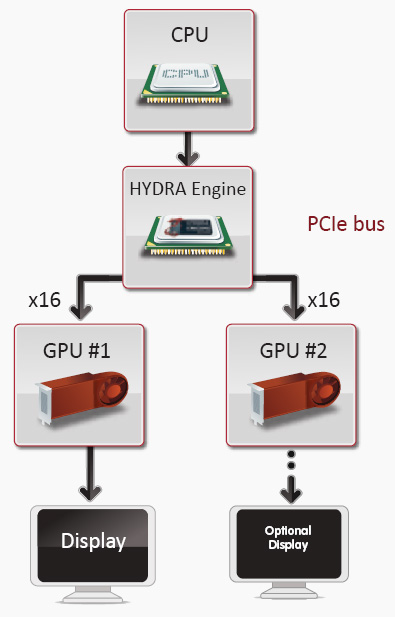

According to Lucid, its Hydra engine is able to intercept DirectX/OpenGL calls and, rather than use something like AFR to divvy up the workload, break each frame into “tasks.” A task isn’t necessarily a screen ratio or series of uniform tiles, but could instead be a 3D object, for example—it’s up to the engine to determine which rendering technique to use. Those tasks are then distributed to the installed GPUs (currently limited to two) through the vendor’s driver (which isn’t even aware of Lucid’s software running in front of it, since Hydra employs the standard Device Driver Interface in Windows 7). The completed tasks are returned to what Lucid calls its interop layer, where the frame is composited and sent to the GPU with an attached display.

In order to make this process dynamic—and by that I mean capable of supporting dissimilar GPUs—the Hydra employs a feedback mechanism that evaluates the performance of installed graphics hardware in real-time and load balances accordingly. Thus, in theory, Lucid’s technology circumvents the micro-stuttering issues of alternate frame rendering and resolves the inter-frame dependencies that penalize split-frame rendering.
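Lucid does not disclose its balancing algorithm, so the following is only a generic sketch of feedback-driven load balancing, not Hydra's actual method. It assumes each GPU's speed can be summarized as a single throughput figure (work units per millisecond), that each frame's tasks add up to a divisible amount of work, and that a simple exponential moving average is an acceptable estimator. The `Gpu` class and all function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Gpu:
    name: str
    throughput: float  # running estimate, in hypothetical work units per ms


def split_work(gpus, total_work):
    """Give each GPU a share of the frame proportional to its estimated throughput."""
    total = sum(g.throughput for g in gpus)
    return [total_work * g.throughput / total for g in gpus]


def update_estimates(gpus, shares, measured_ms, smoothing=0.5):
    """Feedback step: blend the observed rate (work / time) into each estimate."""
    for gpu, work, ms in zip(gpus, shares, measured_ms):
        observed = work / ms
        gpu.throughput = (1 - smoothing) * gpu.throughput + smoothing * observed


def simulate(true_rates, frames, total_work=100.0):
    """Start from equal estimates and let the feedback loop discover the real ratio."""
    gpus = [Gpu(f"gpu{i}", 1.0) for i in range(len(true_rates))]
    for _ in range(frames):
        shares = split_work(gpus, total_work)
        # Stand-in for timing the real hardware: time = work / true rate.
        measured = [work / rate for work, rate in zip(shares, true_rates)]
        update_estimates(gpus, shares, measured)
    return split_work(gpus, total_work)
```

Calling `simulate([3.0, 1.0], 20)` models a fast card paired with one a third as quick; the split starts at 50/50 and drifts toward roughly 75/25 as the estimates settle, which is the behavior a balancer for dissimilar GPUs would need.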


Maziar: Nice article,its very good for users for upgrading,because for current SLI/CF you need 2 exact cards but with Lucid you can use different cards as well,but it still needs to be more optimized and has a long way ahead of it,it looks very promising though

Von_Matrices: I'm highly doubtful of the Steam hardware survey. I think it is underestimating the number of multi-GPU systems. I for one am running 4850 crossfire and steam has never detected a multi-GPU system when I was asked for the hardware survey. The 90% NVIDIA SLI seems also seems a little too high to me.

Bluescreendeath: The CPU scores for the 3D vantage tests are way off. You need to turn off PhysX when benchmarking the CPU or it will skewer the results...

Bluescreendeath: So far, the best scaling has been in Crysis. The 5870/GTX285 combo benchmarks looked very promising.

cangelini:

> Bluescreendeath: The CPU scores for the 3D vantage tests are way off. You need to turn off PhysX when benchmarking the CPU or it will skewer the results...

It's explained in the analysis ;-)

kravmaga: "But when you spend $350 on a motherboard, you’re using graphics cards that cost more than that. If you’re not, you aren’t doing it right"

Quoted from the last page; I disagree with that statement.

There are plenty of people in situations where using this board is a better investment performance per dollars. This is all the more relevant as this technology will undoubtedly find its way into cheaper boards and budget oriented setups where it will make all the sense in the world to bench it using mid-end value parts.

I, for one, would have liked to see what using gtx260s and 5770s would look like in this same setup. As is, this review leaves many questions unanswered.

SpadeM: Well the review does give an answer in the form of: It's better to run a ATI card for rendering and a nvidia card for physics and cuda (if u're into transcoding/accelerating with coreavc etc.) with windows 7 installed.

Or at least that is the conclusion i'm comfortable with at the moment.

HalfHuman: i also agree with the fact that a person who will buy this board will necessarily go for the highest priced vid boards. maybe some will but not all. there will be more who will try to save the older vid cards.

i also understand why you paired the 5870 with nvidia's greatest. there is a catch however... lucid guys did not have the chance to play with 5xxx series too much and you may be evaluating something that is not too ripe. i guess the 4xxx series would have been a better chance to see how well the technology works. couple that with games that are not yet certified for lucid, couple that with how much complexity this technology has to overcome... i think this is a magnificent accomplishment o lucid team part.

i also think that in order for this technology to become viable it will go down in price and will be found in much cheaper boards. for the moment the "experimenting phase" is done on the expensive spectrum. i saw some early comparisons and the scaling was beautiful. i know that the system put together by lucid... but that is fine since that was only a demo to show that it works. judging on how fast this guys are evolving i guess that they will go mainstream this year.