CUDA-Enabled Apps: Measuring Mainstream GPU Performance

Help For the Rest of Us

I like an eye-popping benchmark as much as the next guy. But at the end of the day, I’m a user. I use computers to do useful things. And on days when I have to give back the Tom’s Hardware Learjet and all the ski bunnies go back to their warrens, I’m left with a modest computer, modest components, and not much budget to spare for $500 upgrades. I need technology that helps me do what I want more efficiently, whether that’s playing games, editing video, or helping model genetic sequences.

Some applications are serial by nature: they simply want to crank away on a single processing thread, as fast as it will go, until the cows come home. Others are built to exploit parallelism. Everything from Unreal Engine 3 to Adobe Premiere has shown us the benefits of CPU-based multi-threading, but what if 4, 8, or even 16 threads were just the beginning?

This is the promise behind Nvidia’s CUDA computing architecture, which, according to the company, can run thousands of threads simultaneously.
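To make the serial-versus-parallel contrast concrete, here is a minimal sketch of how CUDA code is typically structured. Rather than writing one loop that walks an array, the programmer writes a small per-element function (a "kernel") and launches it over a grid of thread blocks. Each logical thread then works out its own global index from its block and thread coordinates. This is a CPU-only Python emulation, not real CUDA: the names `scale_kernel` and `launch`, the 256-thread block size, and the 100,000-element array are hypothetical choices for illustration. On an actual GeForce card, the hardware supplies `blockIdx.x`, `blockDim.x`, and `threadIdx.x` and runs many threads concurrently, whereas this loop visits them one at a time.

```python
# CPU-only emulation of CUDA's thread-indexing model (NOT real GPU code).
# On a GPU, block_idx, block_dim and thread_idx would be the hardware-supplied
# blockIdx.x, blockDim.x and threadIdx.x; here they are ordinary arguments.
# scale_kernel and launch are hypothetical names chosen for this sketch.

def scale_kernel(block_idx, block_dim, thread_idx, src, dst, factor):
    """Work done by ONE logical thread: handle exactly one array element."""
    i = block_idx * block_dim + thread_idx   # global thread index
    if i < len(src):                         # last block may overhang the data
        dst[i] = src[i] * factor


def launch(grid_dim, block_dim, kernel, *args):
    """Visit every (block, thread) pair of a launch, one after another.

    A GPU would execute these logical threads concurrently, many at a time;
    this loop only reproduces which element each thread touches.
    """
    for b in range(grid_dim):
        for t in range(block_dim):
            kernel(b, block_dim, t, *args)


n = 100_000                                    # one logical thread per element
block_dim = 256                                # threads per block (a common choice)
grid_dim = (n + block_dim - 1) // block_dim    # round up so every element is covered

src = [1.5] * n
dst = [0.0] * n
launch(grid_dim, block_dim, scale_kernel, src, dst, 2.0)

print(grid_dim * block_dim, "logical threads launched")  # 100096 logical threads launched
print(dst[0], dst[-1])                                   # 3.0 3.0
```

Rounding `grid_dim` up is why the kernel checks `i < len(src)`: the last block contains 96 threads with no element to work on, and real CUDA kernels commonly guard against that overhang with the same kind of bounds check.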

We’ve written about CUDA in the past, so hopefully you’re no stranger to the technology. (If you missed our coverage, check out Nvidia’s CUDA: The End of the CPU?) For better or worse, though, most CUDA coverage in the press has focused on high-end hardware, even though the supporting logic has been present in Nvidia GPUs since the dawn of the GeForce 8 series. Given the huge enterprise dollars wrapped up in the high-performance computing (HPC) and professional graphics workstation markets, targeted by Nvidia’s Tesla and Quadro lines respectively, it’s no wonder this is where so much of Nvidia’s marketing attention has gone.

In 2009, though, we’re finally seeing a change: CUDA has come to the masses. There’s a huge installed base of compatible desktop graphics cards, and mainstream applications able to exploit that built-in CUDA support are arriving one after another.

From Nothing To Now

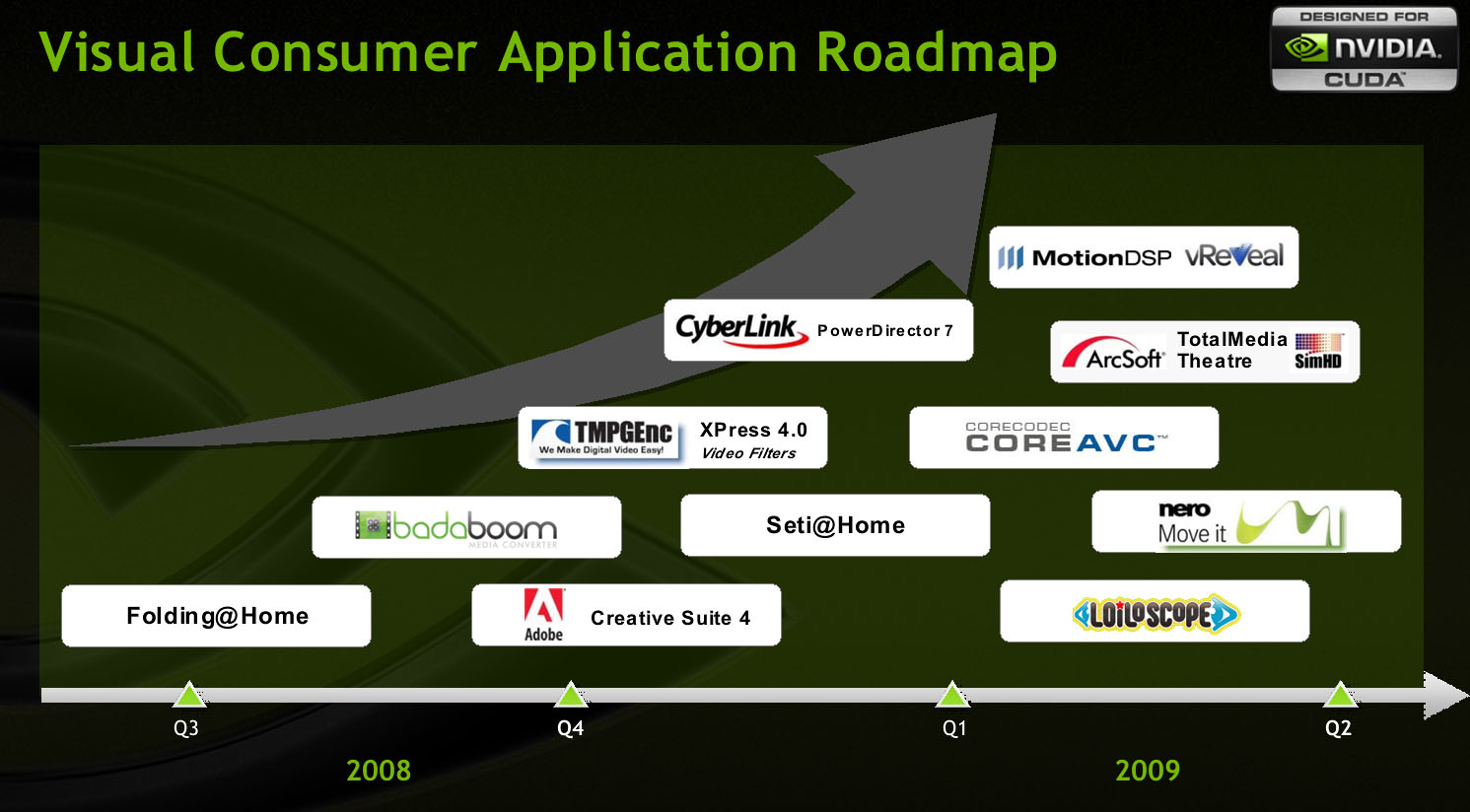

The first consumer-friendly CUDA app was Folding@home, a distributed computing project out of Stanford University in which each user crunches a chunk of raw data about protein behavior, with the goal of better understanding (and hopefully curing) several of humanity’s worst diseases. The application gained CUDA support in the second half of 2008. Shortly afterward came Badaboom, the video transcoder from Elemental Technologies, which Elemental claims can transcode up to 18 times faster than a CPU-only implementation.


Then came a whole slew of media applications for CUDA: Adobe Creative Suite 4, TMPGEnc 4.0 XPress, CyberLink PowerDirector 7, MotionDSP vReveal, Loilo LoiLoScope, Nero Move it, and more. Mirror’s Edge looks to be the first AAA game to fully leverage CUDA-based PhysX technology to increase visual complexity, allegedly by 10x to 20x. Expect more titles to emerge in this vein. A lot more. While AMD and its ATI Stream technology have been mired in setbacks, Nvidia has been touting its finished, proven CUDA platform to everyone who will listen, and developers now seem to be taking the message to heart.

That’s all well and good, but CUDA’s incendiary capabilities have largely been demonstrated on high-end GPUs. I’m on a tight budget. Friends are getting mowed down around me by layoffs and wage cuts like bubonic plague victims. You bet I’d love to drop ten or twelve Benjamins on a 3-way graphics overhaul, but the reality is that, like many of you, I’ve only got one or two C-notes to spare. On a good day. So the question all of us who can’t afford the graphics equivalent of a five-star ménage à trois should be asking is, “Does CUDA mean anything to me when all I can afford is a budget-friendly card for my existing system?”

Let’s find out. Today, we’ll look at some of the most promising titles and measure the speedup delivered by a pair of mid-range GPUs.

Reader Comments

SpadeM: The 8800 GS, or 9600 GSO under its new name, goes for $60 and delivers 96 stream processors. Would it be correct to assume that it would perform between the 9600 GT and 9800 GTX you reviewed?

Other than that, great article. Been waiting for it since we got a sneak preview from Chris last week.

curnel_D: And I'll never take Nvidia marketing seriously until they either stop singing about CUDA being the holy grail of computing or this changes: "Aside from Folding@home and SETI@home, every single application on Nvidia's consumer CUDA list involves video editing and/or transcoding."

As more software uses CUDA, we will see a great boost in performance not only for things like video, but for parallel programming in general. This will skyrocket this business into a new age!


curnel_D: l0bd0n wrote, "As more software will use CUDA, we will not only see a great boost in performance for e.g. video performance, but for parallel programing in general. This sky rocket this business into a new age!" Honestly, I don't think a proprietary language will do this. If anything, it's likely to be GPGPUs in general, run by Open Computing Language (OpenCL).

IzzyCraft: Who knows? It's just a clip he used; he could be naming it anything for the hell of it.

CUDA transcoding is very nice for someone who does H.264 transcoding at High Profile and lacks a $300+ CPU, someone who would otherwise spend hours transcoding a DVD on High Profile settings.

Aside from that, CUDA acceleration has been more of a feature than a main event, although it can easily be the main attraction for someone who does a steady flow of H.264 transcoding/encoding.

Encoding/transcoding in H.264 High Profile can easily make someone who is very content with their CPU and its power sad very quickly when they see the estimated time for their 30-minute clip or something.

I've been using CoreAVC since support was added for CUDA H.264 decoding. I kinda feel stupid for buying a high-end CPU (at the time), since playing any video, no matter the resolution or bit rate, leaves the CPU at near-idle usage.

Vid card: 8600GTS

CPU: E6700

IzzyCraft: Well, you lucked in, considering not all of the GeForce 8 series supports H.264 decoding, etc.

ohim: They should remove the Adobe CS4 suite from there, since CUDA transcoding is only possible with Nvidia CX video cards, not with normal gaming cards that support CUDA.

adbat: CUDA means "miracle" in my language :-) I hope it will do those.

The sad thing is that ATI doesn't truly compete in the CUDA department, and there is no standard for it.