Nvidia GeForce GTX 1070 Graphics Card Roundup

Palit GTX 1070 GameRock Premium Edition


JetStream, Super JetStream, GameRock, and GameRock Premium Edition: Palit sure does provide a lot of GeForce GTX 1070-based options. The card we're testing is just as bulky as its name thanks to the oversized cooler.

Despite all of the cooling headroom you'd seemingly get, this version does have issues with hysteresis, causing the fans to start and stop during warm-up. Unfortunately, the situation isn't any better, even after downloading an available firmware update. Consequently, we're putting further updates to this piece on hold until we're offered a real solution.

Just like the GTX 1080-based version, this card looks massive at first glance. But we'll have to test how much of that bold appearance translates into real-world benefits.

Technical Specifications


Exterior & Interfaces

The fan shroud is made of relatively thick, white plastic. The top and front are decorated with metallic blue and brushed metal highlights.

A weight of 1053g makes this card about 180g lighter than the 1080-based version. However, with its length of 28.7cm, height of five inches (12.8cm), and two inches (5.2cm) of width, it boasts the same dimensions as its higher-end counterpart and also spans three slots. The two massive 100mm fans with rotor diameters of 96mm further emphasize the card's size.

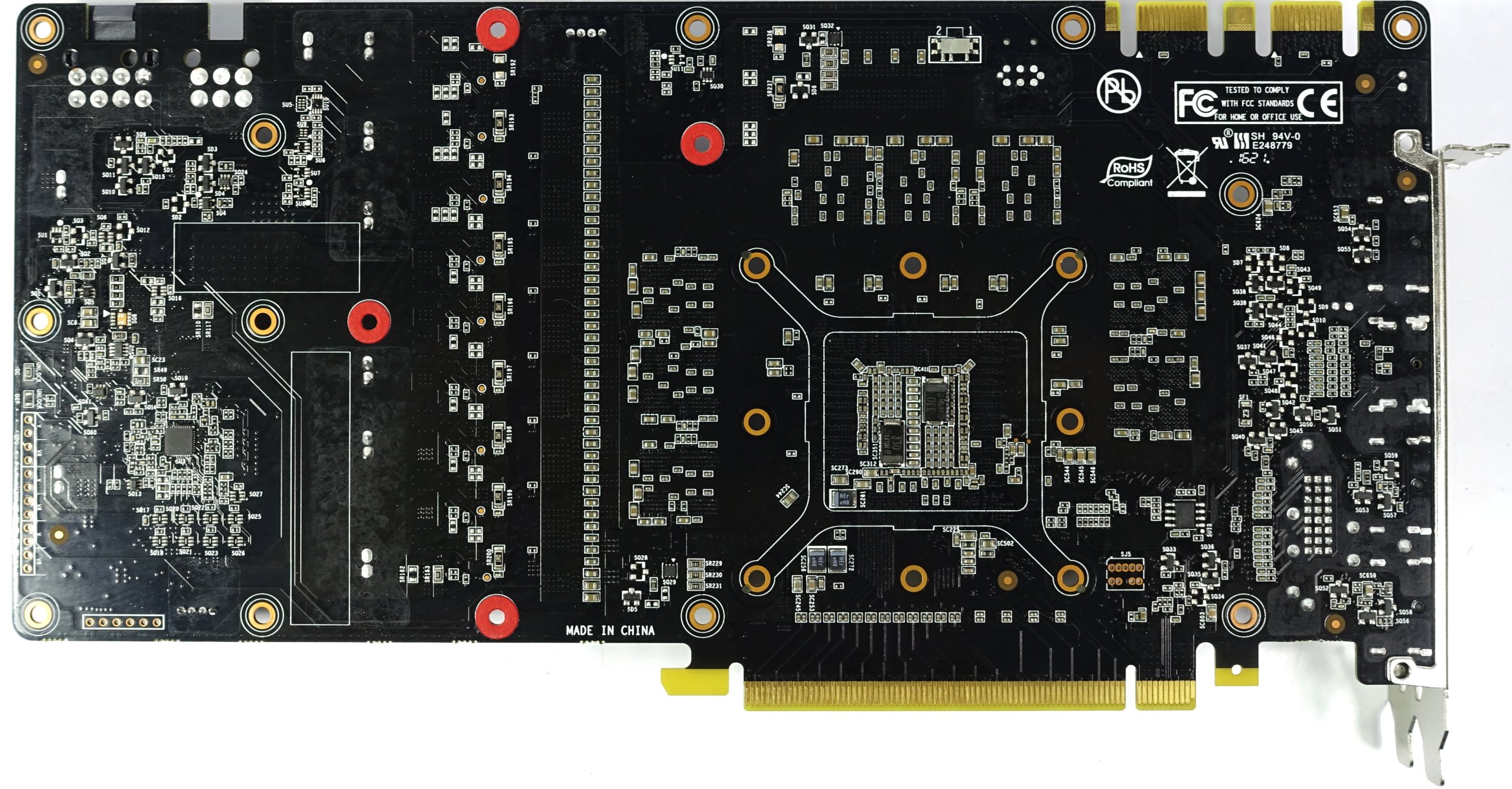

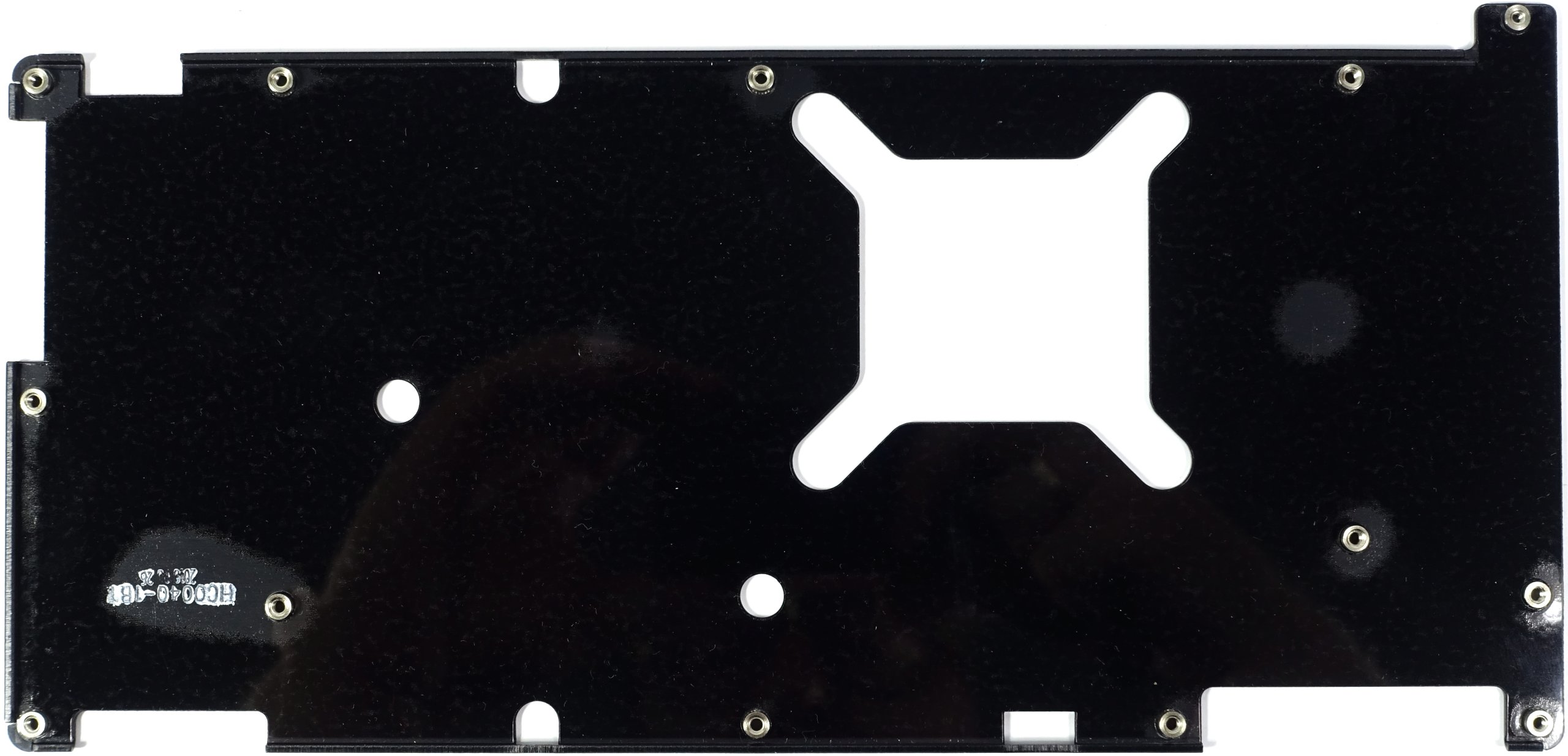

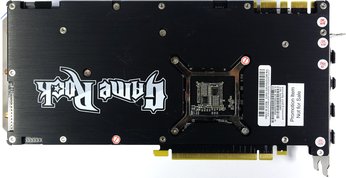

Flip the card over and it's covered by a one-piece backplate without any openings for ventilation. It is decorated with a highly visible GameRock logo and requires an additional 5mm for clearance. Since there are no thermal pads between the plate and PCB, the backplate serves decorative purposes only. While it is perfectly possible to use the card without this plate, removing it requires taking off other components, likely voiding Palit's warranty.

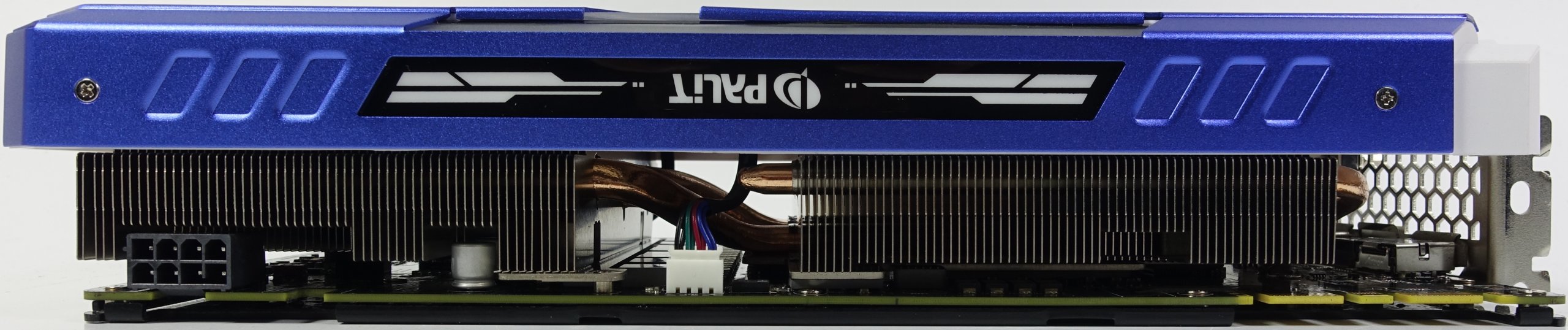

The top of the card is dominated by a centered, brightly lit Palit logo. An eight-pin auxiliary power connector is positioned at the board's end and rotated 180°. This isn't a humble product by any means; it stands out and wears its heft proudly.

At its end, the card is completely closed off, which makes sense since the fins are positioned vertically and won't allow any airflow in that direction anyway.

The slot bracket features five display outputs, of which four can be used simultaneously in multi-monitor setups. In addition to one dual-link DVI-D connector, which doesn't loop through any analog signal, you also get one HDMI 2.0b port and three DisplayPort 1.4-capable interfaces. The rest of the slot plate is peppered with openings that look like they're meant to facilitate airflow. However, they're more decorative than functional.

Board & Components

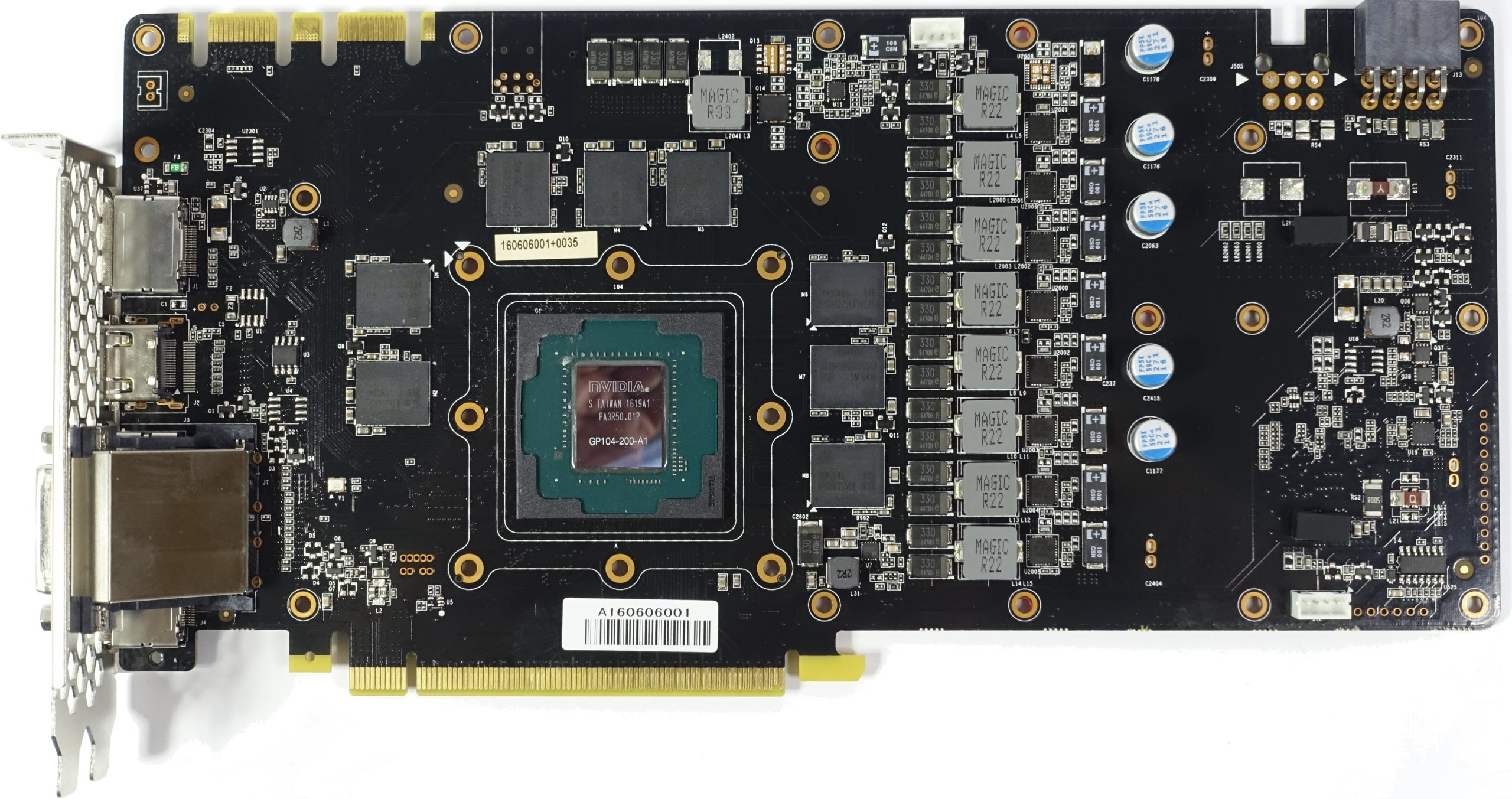

The board looks clean enough, similar to the 1080-based version. It uses eight Samsung K4G80325FB-HC25 modules, each able to store up to 8Gb (32x 256Mb). Each chip operates at voltages between 1.305 and 1.597V, depending on the selected clock frequency. A word of caution, though: some manufacturers have switched to Micron memory modules, which is recognizable in the BIOS naming scheme (86.04.26.xx.xx versus Samsung's 86.04.1E.xx.xx).

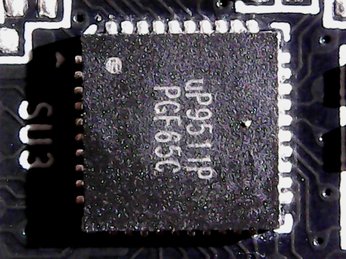

The 8+1-phase system, like Nvidia's reference cards, relies on the sparsely documented uP9511P for PWM control. Also like Nvidia's own implementation, the controller finds a home on the back of the PCB. All eight of the GPU's phases are realized using this component, which is actually designed as a 6+2-phase jack-of-all-trades.

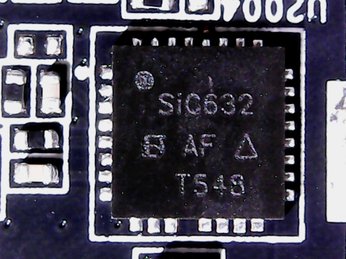

The DC/DC voltage converters' dual-channel MOSFETs are controlled directly, as these eight SiC632s are so-called driver MOSes. They combine the actual power MOSFETs for high-side and low-side, as well as the gate driver and Schottky diode on one chip. This is certainly more cost-efficient and a plus for compact designs, especially when a large number of voltage converter circuits are in play.

In contrast to Palit's GTX 1080 model, this board's memory gets power from one phase (instead of two). It's controlled by the same undocumented chip used on Nvidia's reference board, which should be almost identical to the well-known 1728. A dual N-Channel model is used for the MOSFETs, which combines both high-side and low-side.

The Foxconn coils are mid-range parts. Depending on the layout, they operate more or less quietly. And as is often the case, a well-known INA3221 handles current monitoring.

Two capacitors are installed right below the GPU to absorb and equalize peaks in voltage.

Power Results

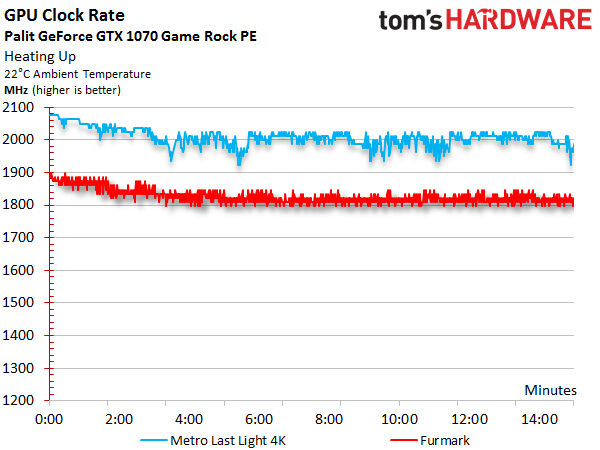

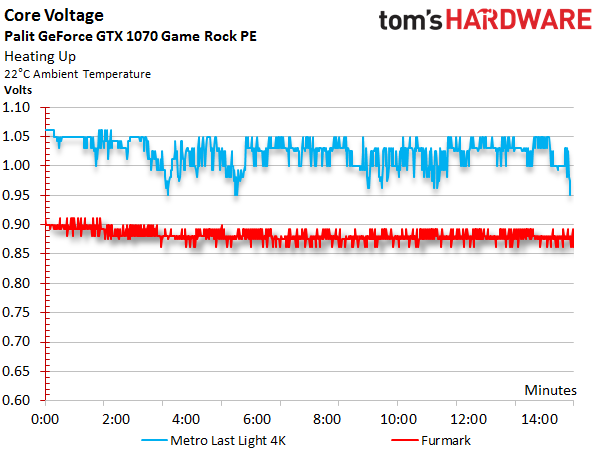

Before we look at power consumption, we should talk about the correlation between GPU Boost frequency and core voltage, which are so similar that we decided to put their graphs one on top of the other. This also shows that both curves drop as the GPU's temperature rises.

The graphs clearly show that the GPU Boost frequency after warm-up and under load falls from an excellent 2076 MHz to a still-good 1975 MHz (and sporadically a little lower). It is also apparent that voltage follows the sinking clock rates. While we measured up to 1.062V in the beginning, this value later drops as low as 0.901V.

Combining the measured voltages and currents allows us to derive a total power consumption we can easily confirm with our instrumentation by taking readings at the card's power connectors.
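As a rough illustration of that derivation, the sketch below sums voltage times current over a card's supply rails. The rail names and sample readings are hypothetical placeholders chosen for the example, not measurements from this review.

```python
from dataclasses import dataclass


@dataclass
class RailSample:
    name: str     # supply rail label (hypothetical)
    volts: float  # measured voltage in volts
    amps: float   # measured current in amperes


def total_power(samples):
    """Return total power in watts as the sum of V * I over all rails."""
    return sum(s.volts * s.amps for s in samples)


# Hypothetical readings for illustration only, not data from this review.
samples = [
    RailSample("PCIe slot 12V", 12.0, 3.0),
    RailSample("PCIe slot 3.3V", 3.3, 1.0),
    RailSample("8-pin 12V", 12.0, 11.0),
]

print(f"{total_power(samples):.1f} W")
```

In practice each rail would contribute a time series rather than a single sample, and the per-sample products would be averaged over the measurement window.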

Since manufacturers sacrifice the lowest possible frequencies to gain an extra GPU Boost bin due to Nvidia's restrictions, the GTX 1070 GameRock Premium Edition's power consumption is slightly higher at idle. Palit sets the first GPU Boost step at 316 MHz.

| Scenario | Power Consumption |
|---|---|
| Idle | 11W |
| Idle Multi-Monitor | 13W |
| Blu-ray | 13W |
| Browser Games | 92-110W |
| Gaming (Metro Last Light 4K) | 173W |
| Torture (FurMark) | 174W |

These charts go into more detail on power consumption at idle, during 4K gaming, and under the effects of our stress test. The graphs show how load is distributed between each voltage and supply rail, providing a bird's eye view of load variations and peaks.

Temperature Results

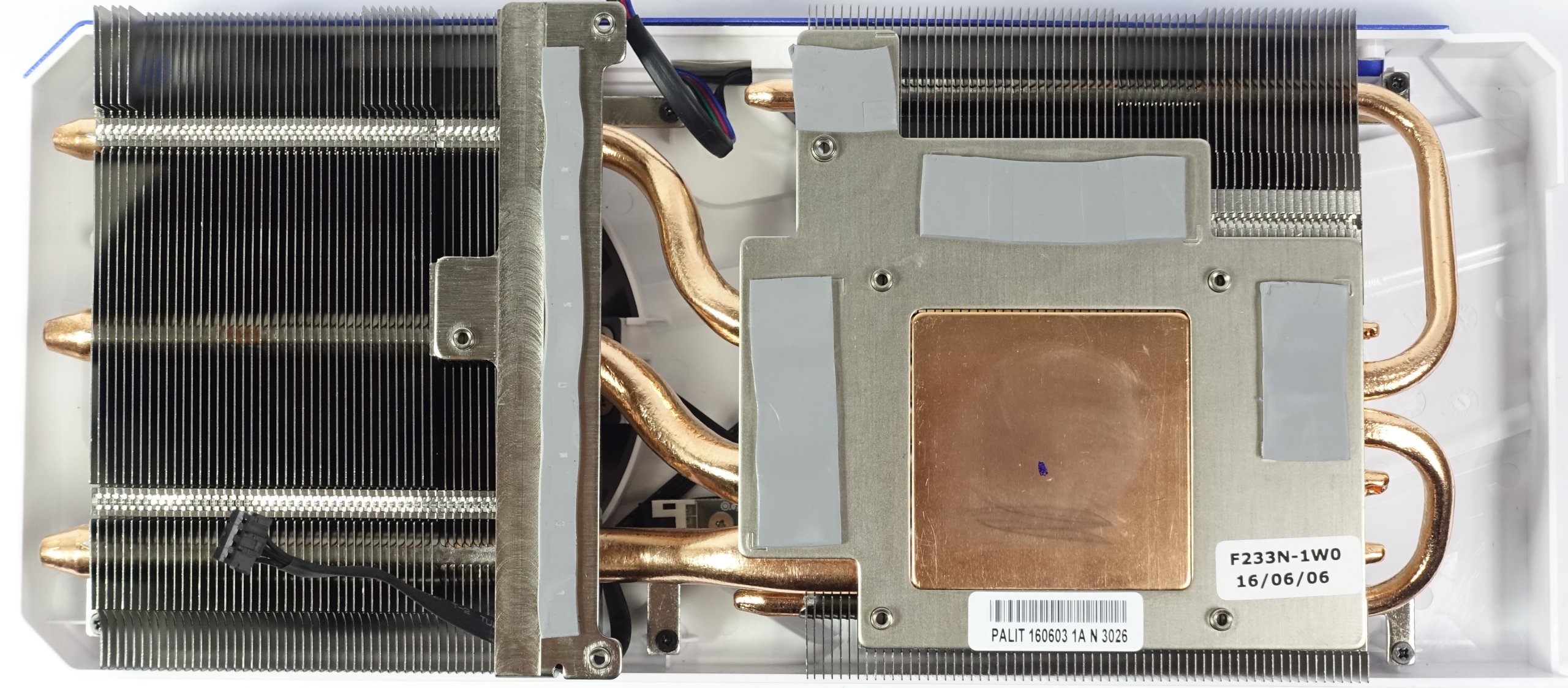

Of course, power dissipated as waste heat needs to be dealt with as efficiently as possible. So, we start by looking at the backplate, which doesn't do any real cooling and instead leaves that job to Palit's 2.5-slot thermal solution. The entire structure matches what you get on the GTX 1080 card. It appears almost decadently oversized given how much less power a 1070 consumes. On the other hand, this isn't a bad problem to have. We'd rather have too much cooling than not enough.

A copper sink moves heat away from the GPU and spreads it through a total of five pipes (three 8mm and two 6mm). Palit chose to orient the sink's fins vertically, which results in short, straight 8mm pipes that work more efficiently. The two smaller pipes don't do much except provide additional area to support the transport of heat away from the sink and towards the cooler's edges.

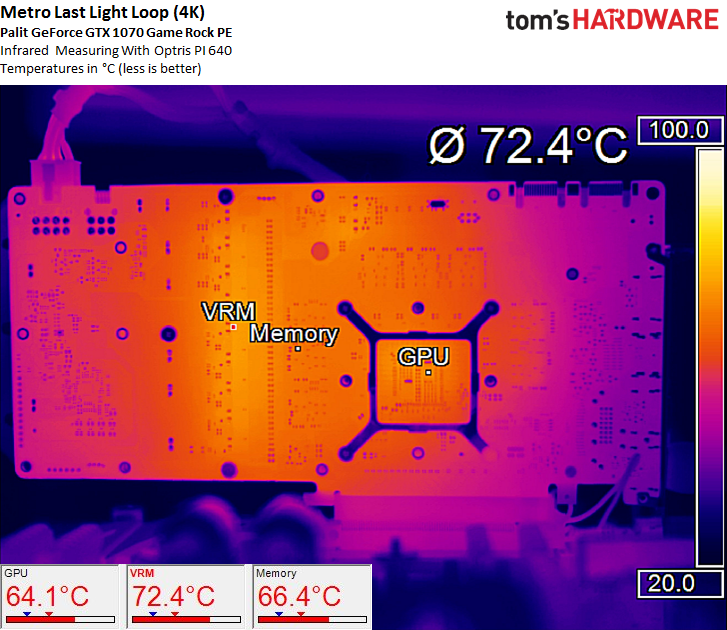

The performance of this truly monstrous cooler leaves little to be desired. Since the temperatures only get to 151°F (66°C) during our gaming loop (154°F/68°C in a closed case) and 151°F (66°C) as well during our stress test (158°F/70°C in a closed case), the fans only need to run at low power, which should have a positive impact on the measured noise level.

The transfer of heat away from the VRMs works perfectly, despite low fan speeds and very little airflow. That massive cooler and its endless fins work wonders.

During our stress test the temperatures do rise a bit at the hottest spot, despite low average power consumption. All other areas remain at perfectly safe values, though.

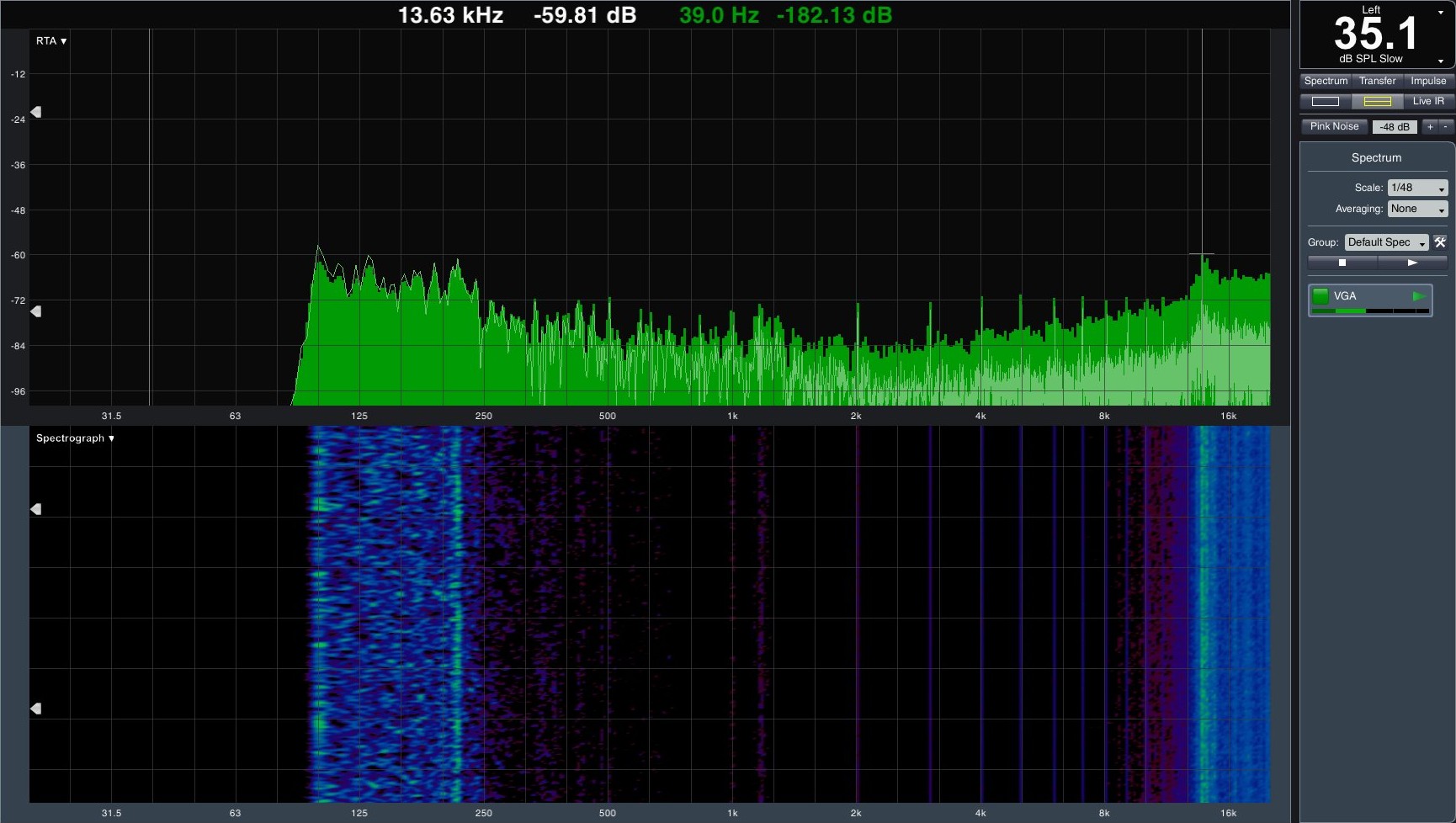

Sound Results

Let's talk a bit about the one single quality that dominated our impression of this graphics card: its noise level. While some folks might enjoy the deep roar of a well-oiled machine, we prefer the sound of silence from our graphics cards. When it's disturbed, the culprit is usually a fan or sometimes the VRM's coils. But a maximum of 1000 RPM for the two fans should be no reason to declare a state of acoustic emergency.

To examine this behavior in more detail, we need to take a closer look at the fan curve, which unfortunately reveals an unpleasant surprise. Since the fans generally start late and keep quiet, it takes diligent measurement of tachometer signals and PWM values to detect poor hysteresis. Palit even confirmed this for us.
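To make the hysteresis problem more concrete, here is a minimal sketch of a semi-passive fan controller with separate start and stop thresholds. The thresholds and the temperature trace are assumptions made up for illustration; they are not Palit's firmware values or our measurements.

```python
def fan_states(temps_c, start_c, stop_c):
    """Return the on/off fan state for each temperature sample.

    The fan switches on at or above start_c and off at or below stop_c.
    Between the two thresholds it keeps its previous state (hysteresis).
    """
    running = False
    states = []
    for t in temps_c:
        if not running and t >= start_c:
            running = True
        elif running and t <= stop_c:
            running = False
        states.append(running)
    return states


def count_starts(states):
    """Count off-to-on transitions in a sequence of fan states."""
    starts = 0
    previous = False
    for s in states:
        if s and not previous:
            starts += 1
        previous = s
    return starts


# Hypothetical warm-up trace in degrees Celsius: the temperature dips
# a little each time the fan spins up, then climbs again.
trace = [48, 52, 55, 53, 51, 55, 53, 51, 55, 53, 56, 58, 60]

narrow = fan_states(trace, start_c=55, stop_c=52)  # small gap (assumed values)
wide = fan_states(trace, start_c=55, stop_c=45)    # larger gap (assumed values)

print(count_starts(narrow), count_starts(wide))
```

With the narrow gap, the same trace makes the fan start several times during warm-up, while the wider gap lets it start once and keep running. The trace is a fixed list rather than a thermal simulation, so it only shows the switching logic, not how a real card would respond.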

When the card is idle, no noise is measurable due to its semi-passive mode. We thus abstained from trying to take any readings.

The values we measured under load are blissful, and the machine is a purring kitten. A result of 35.1 dB(A) is good considering the temperatures involved. Only the mid-range coils tend to stick out a bit. If it wasn't for their audible chirp disturbing the calm of night, you might even question whether the card was running at all.


Igor Wallossek wrote a wide variety of hardware articles for Tom's Hardware, with a strong focus on technical analysis and in-depth reviews. His contributions have spanned a broad spectrum of PC components, including GPUs, CPUs, workstations, and PC builds. His insightful articles provide readers with detailed knowledge to make informed decisions in the ever-evolving tech landscape


adamovera Archived comments are found here: http://www.tomshardware.com/forum/id-3283067/nvidia-geforce-gtx-1070-graphics-card-roundup.html

TheRev MasterOne Asus ROG Strix GeForce GTX 1070 and Gigabyte GeForce GTX 1070 G1 Gaming pics are switched ! Did I win something? a job?

eglass Disagree entirely about the 1070 being good value. It's the worst value in the 10-series lineup. $400 for a 1070 is objectively a bad value when $500 gets you into a 1080.

adamovera

19761809 said: Asus ROG Strix GeForce GTX 1070 and Gigabyte GeForce GTX 1070 G1 Gaming pics are switched ! Did I win something? a job?

The filenames of the images are actually swapped as well, weird - fixed now, thanks!

barryv88 Are you guys serious!?? You recommend a 1070 card that costs $530 which isn't even available in the U.S? That sorta cash gets you a much quicker GTX 1080! The controversy on this site is just non stop. If your BEST CPU's list wasn't enough already...

bloodroses I wonder how the Gigabyte 1070 mini compares to the other mini cards like the Zotac and MSI unit?

adamovera

19761907 said: Are you guys serious!?? You recommend a 1070 card that costs $530 which isn't even available in the U.S? That sorta cash gets you a much quicker GTX 1080! The controversy on this site is just non stop. If your BEST CPU's list wasn't enough already...

This is a roundup of all the 1070's we've tested. The graphics card roundups originate with our German bureau and are re-posted in the UK, so they'll sometimes include EU-only products - I'm guessing they're appropriately priced to the competition in their intended markets.

The Palit received the lowest level award - the Asus, the MSI, and one of the Gigabyte boards are better options.

JackNaylorPE

19761822 said: Disagree entirely about the 1070 being good value. It's the worst value in the 10-series lineup. $400 for a 1070 is objectively a bad value when $500 gets you into a 1080.

The 1080, like its predecessors (780 and 980), has consistently been the red headed stepchild of the nVidia lineup. So much so that nVidia even intentionally nerfed the performance of the x70 series because its performance was so close to the x80.

The 1080 has dropped in price because, sitting as it does between the 1080 Ti and the 1070... it doesn't exactly stand out. When the 780 Ti came out, the price of the 780 dropped $160 overnight, so much so that I immediately bought two of them and the two sets of game coupons knocked $360 off my XMas shopping list. At a net $650, it was a good buy.

Using the 1070 FE as a reference and the relative performance data published by techpowerup for example.....Used MSI Gaming model since it is one model line where TPU reviewed all 3 cards

The $404 MSI 1070 Gaming X is 104.2% as fast as the 1070 FE

The $550 MSI 1080 Gaming X is 128.2% as fast as the 1070 FE

The $740 MSI 1080 Ti Gaming X is 169.5% as fast as the 1070 FE

So the performance per dollar for comparable quality designs is:

MSI 1070 Gaming X = 104.2 / $404 = 0.258

MSI 1080 Gaming X = 128.2 / $550 = 0.233

MSI 1080 Ti Gaming X = 169.5 / $740 = 0.229

Even at $500 .. the 1080 only comes in 2nd place at 0.256, so no, the better value argument doesn't hold, even assuming we were getting an equal quality card.
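The performance-per-dollar figures above can be reproduced with a few lines of Python. This is a minimal sketch that uses only the relative-performance numbers and prices quoted in this comment; the function and variable names are made up for illustration.

```python
# Relative performance (1070 FE = 100) and prices in USD, as quoted above.
cards = {
    "MSI 1070 Gaming X": (104.2, 404),
    "MSI 1080 Gaming X": (128.2, 550),
    "MSI 1080 Ti Gaming X": (169.5, 740),
}


def perf_per_dollar(relative_perf, price_usd):
    """Return relative performance points per dollar spent."""
    return relative_perf / price_usd


for name, (perf, price) in cards.items():
    print(f"{name}: {perf_per_dollar(perf, price):.3f}")

# The same 1080 at a hypothetical $500 street price.
print(f"MSI 1080 Gaming X at $500: {perf_per_dollar(128.2, 500):.3f}")
```

Swapping in other prices, such as the Strix or AMP figures, only requires editing the dictionary.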

Looked at other comparable cards as a means of comparison and they are for the most part equal or higher ....

Strix at $420, $550 and $780

AMP at $435, $534 and $750

Now with any technology, eking those last bits of performance out of anything always comes at an increased cost. You pay more of a cost premium going from Gold to Platinum rating on a PSU than you do from Bronze to Silver or even Gold. It's simply another example of the Law of Diminishing Returns. So we should expect to pay more for each performance gain with each incremental increase, and that holds here. You'd expect that for each increase in performance the % increase in price per dollar would get bigger. But the x80 is quite an aberration.

We get a whopping 10.7% drop (0.025) from the 1070 to the 1080

We get a rather teeny 1.7% drop (0.004) from the 1080 to the 1080 Ti

Therefore, logically.... you are paying a 10.7% cost penalty for the increased performance to move up from the 1070 to the 1080 ... whereas the cost penalty for the increased performance to move up from the 1080 to the 1080 Ti is only 1.7%. This is why each time the Ti has been introduced, 1080 sales have tanked.

Another way to look at it...

1070 => 1080 = 23% performance increase for $146 ROI = 15.8%

1080 => 1080 Ti = 32% performance increase for $190 ROI = 16.8 %

It's not a matter of **if** you can get **a** 1080 at $500, it's whether you can get the one you want. How is it that the $550 models have more sales than the less expensive ones ? Some folks don't care about noise, some folks don't OC, some folks hope they will be able to get the full performance available to us **if** someone ever comes out with a BIOS editor. And yes, there will be cards that are heavily discounted for any number of reasons ... low factory clock, noise or heat concerns, some have taken some hits from bad reviews or are discounted simply because sales are poor .... but if a card is selling well below the average price it is because it's not as well made or just isn't selling for real or imagined issues (example being EVGA SC / FTW ACX designs are now fixed but EVGA still has a black eye from the earlier cooler problems and if buying EVGA, peeps want iCX). Finally, the 1080 bears the burden of being compared with the 780 and 980 which again got lost between the higher / lower cards.

Given the above ROI numbers, I am surprised that all the 1080s have not dropped below $500. But to my eyes, the 1080 only starts to make sense when the cost is below $520 and **the ones I'd buy** just aren't there yet.

Adroid Yea I refuse to buy a 1070 because they are overpriced, period. I almost bought a 1080 but judging from the performance difference it simply wasn't worth it, either. There is not much a 1080 will do that a 1070 won't. What I mean is - a 1060 will run 1080p fine. With that in mind, a 1080 gives very marginal benefit at 2k, and neither one will run 4k smoothly - so what's the point.

If the 1080 was a 350$ card, I might have bit, but as it stands now I'll be waiting for the 1080ti to drop a bit, which can run most games in 2k over 120fps - and justifying an upgrade from a GTX 700 series card. I'm not going to pay over $400 for a card that won't smoke my GTX 770... I can play all games on moderate settings now, so I want ultra settings at 2k that make use of a 144hz monitor - or bust.

tyr8338 I've been using a Gigabyte 2-fan 1070 for over a year now and it's really good. It's a good overclocker and is running at a 1974 MHz overclock 24/7 and 8600 on RAM. It could probably go even higher, but it's fine for me :) It's quiet, but at around 50% fan speed it sometimes produces a strange vibration sound. It doesn't bother me all that much tbh, but it can be a little annoying.