Nvidia GeForce GTX 1070 Graphics Card Roundup

NEW: Gigabyte GTX 1070 G1 Gaming 8G


If there's one thing we've come to expect from Gigabyte's G1 Gaming cards, it's the highest possible performance at a reasonable price. The company's GeForce GTX 1070 G1 Gaming 8G follows that familiar mission with three fans and a fairly bold-looking fan shroud.

Technical Specifications

Exterior & Interfaces

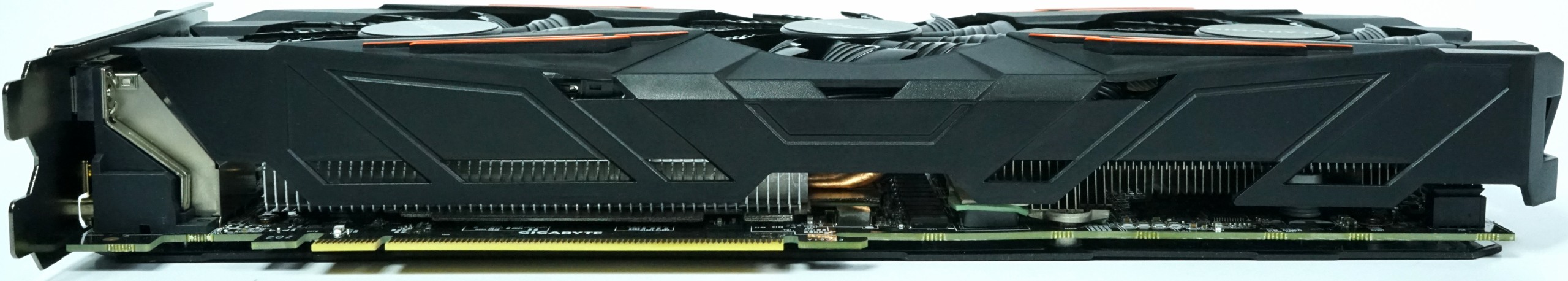

The fan shroud is made of anthracite-colored matte plastic with decorative orange highlights. Weighing in at a modest 797 grams, the G1 Gaming 8G is a true flyweight. A length of 28.2cm should fit easily in most cases, and a height of 11.5cm is about average. The 3.5cm width is typical of dual-slot designs.

A total of three 80mm fans are meant to ensure the right amount of airflow and pressure. You can't easily tell that these fans are fairly small, because the card's proportions make it look bigger than it actually is.

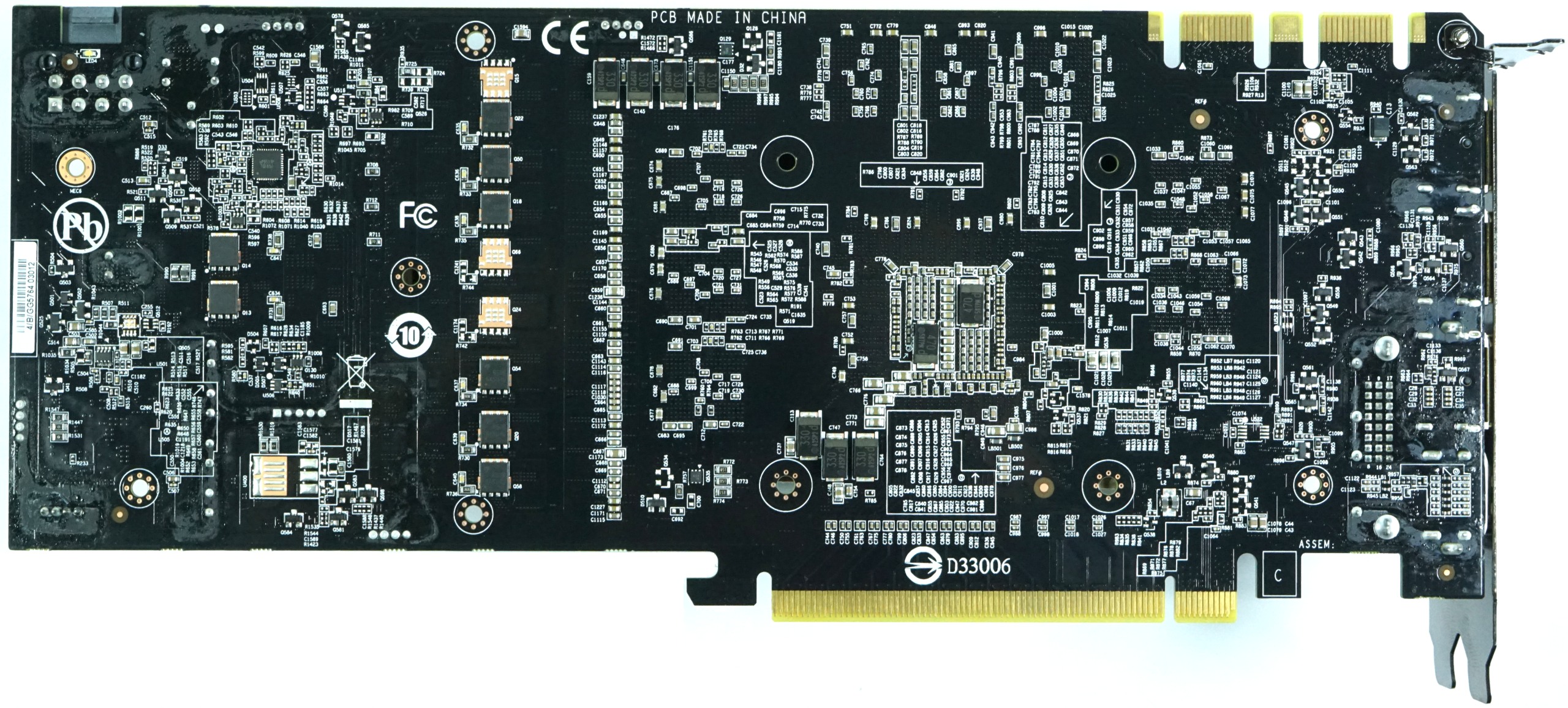

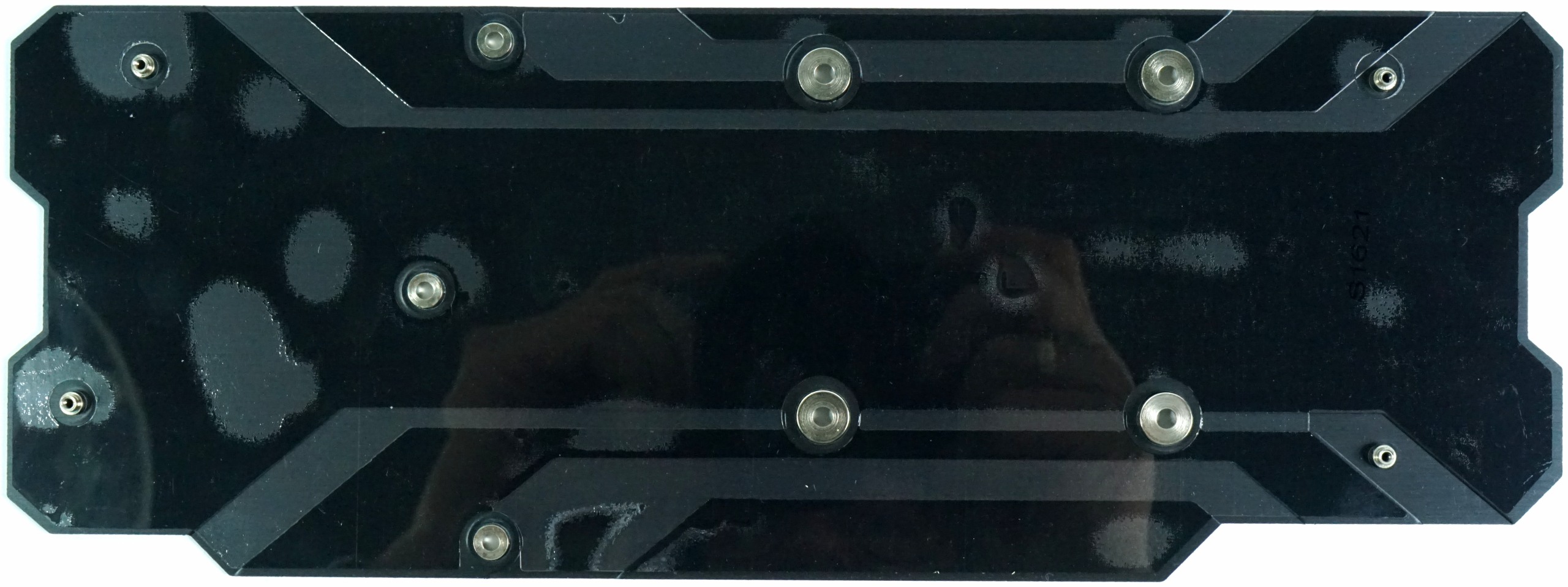

The back of the board is covered by a single-piece plate made of what looks like anodized aluminum and adorned with a white Gigabyte logo (there is no back-lighting). This backplate makes it necessary to plan for an extra 5mm of clearance behind the card, which may be relevant in multi-GPU configurations.

Up top, the card is branded with a Gigabyte logo back-lit by an RGB LED. There's also a fan-stop indicator and an eight-pin auxiliary power connector.

A peek at the end and bottom of the GeForce GTX 1070 G1 Gaming 8G reveals that its fins are oriented vertically. They won't allow any waste heat to exhaust out the back. Instead, hot air is pushed from the top and bottom, warming up other components in your case, along with your motherboard underneath. As such, this design decision is rather counterproductive.

The slot plate features five display outputs, of which a maximum of four can be used simultaneously in a multi-monitor setup. In addition to one dual-link DVI-D connector, the bracket also hosts one HDMI 2.0b port and three DisplayPort 1.4-capable interfaces. The rest of the slot plate has openings cut into it, which look like they're meant to help with airflow. In this configuration, however, they're not functional due to Gigabyte's fin design.

Board & Components

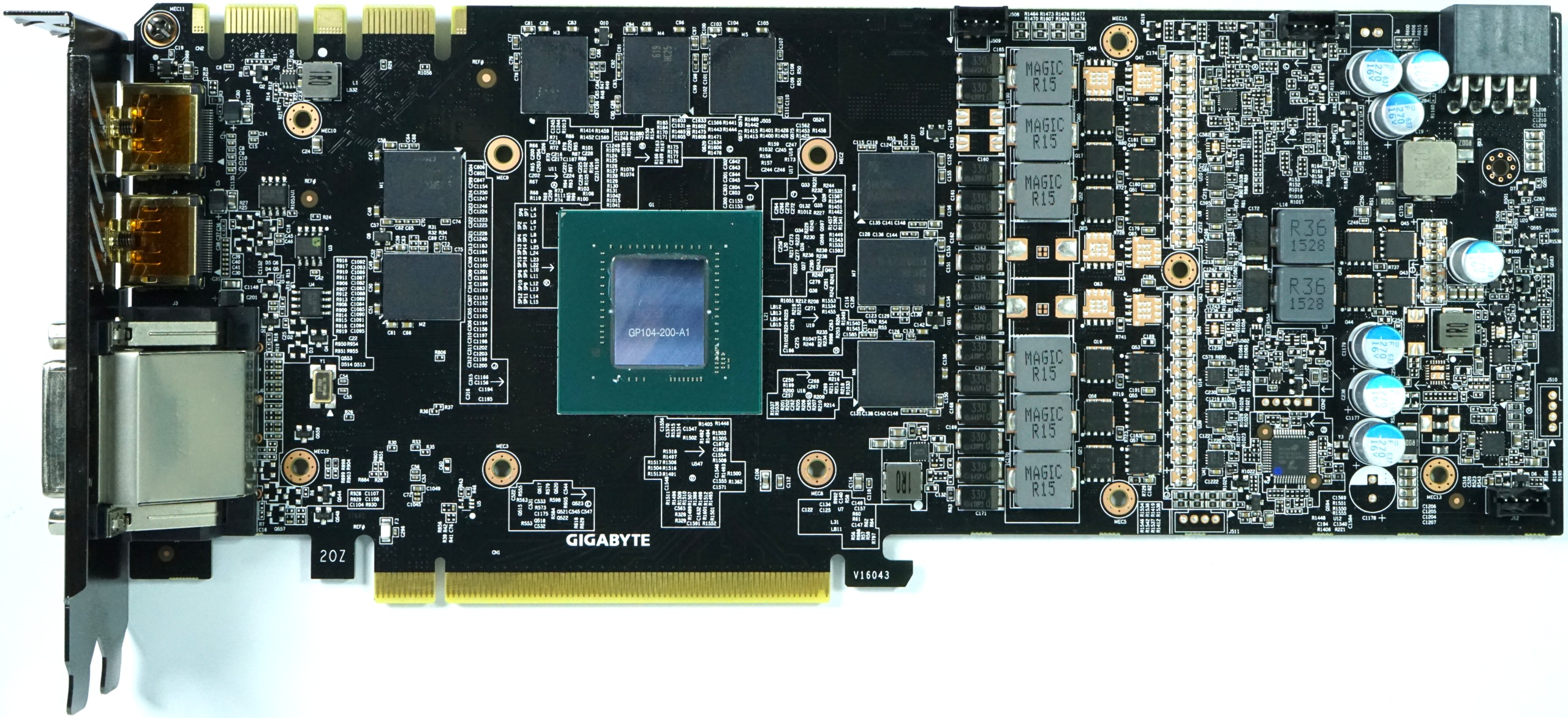

Gigabyte's PCB is proprietary with some conservative (yet interesting) component choices.

This card uses eight Samsung K4G80325FB-HC25 modules with a capacity of 8Gb (32x 256Mb). Each chip operates at voltages between 1.305 and 1.597V, depending on the selected clock frequency.
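Put together, those figures explain the card's memory configuration. Eight 8Gb chips add up to 8GB, and since GDDR5 devices of this density typically expose a 32-bit interface each (an assumption for this exact part, consistent with the "32x" in its organization), eight of them form the GTX 1070's 256-bit bus. The sketch below only does that multiplication; `memory_config` is a hypothetical helper, not anything from Samsung or Gigabyte.

```python
def memory_config(chips: int, density_gbit: int, io_width_bits: int) -> tuple[int, int]:
    """Return (total capacity in GB, aggregate bus width in bits)."""
    total_gbit = chips * density_gbit   # e.g. 8 chips x 8Gb = 64Gb
    total_gbyte = total_gbit // 8       # 8 bits per byte
    bus_width = chips * io_width_bits   # each chip contributes its own 32-bit channel (assumed)
    return total_gbyte, bus_width


capacity_gb, bus_bits = memory_config(chips=8, density_gbit=8, io_width_bits=32)
print(f"{capacity_gb} GB over a {bus_bits}-bit bus")  # 8 GB over a 256-bit bus
```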

One unique addition is the Holtek HT32F52241 32-bit ARM Cortex-M0+ MCU, which Gigabyte uses for controlling the card's RGB effects.

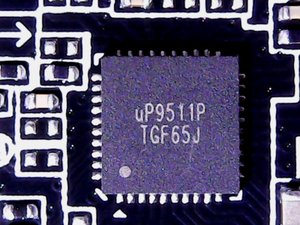

Gigabyte employs an almost oversized 6+2-phase design, wherein the six GPU phases are supplied by uPI Group's uP9511 eight-phase buck controller.

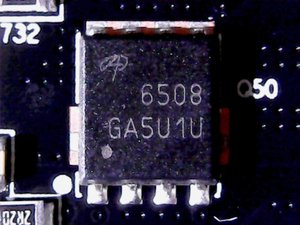

The GPU's voltage regulation is implemented using one Alpha & Omega AON6508 N-channel AlphaMOS on the low side of each phase and one AON6414A on the high side. What's interesting about these two MOSFETs is their low on-resistance across their entire operating range, along with their modest gate-drive requirements. It's reasonable to assume that this choice is an attempt to minimize voltage converter losses.
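Why does low on-resistance matter? In a buck converter, the high-side MOSFET conducts for roughly the duty cycle D = Vout/Vin of each switching period and the low-side MOSFET for the remainder, so first-order conduction loss scales with I² × R_DS(on). The sketch below uses that textbook approximation and ignores ripple current, switching, and dead-time losses. The 20A per-phase current and both on-resistance values are hypothetical placeholders, not figures from Alpha & Omega's datasheets or from this review.

```python
def conduction_loss_w(i_phase_a: float, v_in: float, v_out: float,
                      rds_on_high_ohm: float, rds_on_low_ohm: float) -> float:
    """First-order conduction loss of one buck phase (no ripple, switching, or dead-time losses)."""
    duty = v_out / v_in                                   # share of each cycle the high-side FET conducts
    p_high = i_phase_a ** 2 * rds_on_high_ohm * duty      # high-side I^2 * R * D
    p_low = i_phase_a ** 2 * rds_on_low_ohm * (1 - duty)  # low-side I^2 * R * (1 - D)
    return p_high + p_low


# Hypothetical operating point: 12V in, ~1.05V core, 20A per phase.
baseline = conduction_loss_w(20, 12.0, 1.05, rds_on_high_ohm=0.006, rds_on_low_ohm=0.003)
# Same phase with MOSFETs of half the on-resistance.
lower_r = conduction_loss_w(20, 12.0, 1.05, rds_on_high_ohm=0.003, rds_on_low_ohm=0.0015)
print(f"{baseline:.2f} W vs {lower_r:.2f} W per phase")
```

Because the loss is linear in R_DS(on), halving the on-resistance halves the conduction loss in every phase, and the low-side part matters most at such a low duty cycle.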

Gigabyte uses Foxconn's Magic coils, which are fully encapsulated and easy to install during automated assembly.

The memory's two phases are supplied by an unmarked PWM controller, which should roughly correspond to a uP1666 2/1-phase synchronous buck controller. It already comes with an integrated bootstrap Schottky diode and gate driver. The high- and low-side MOSFETs are similar to what we found on the GPU's power phases. Only the coils are slightly smaller.

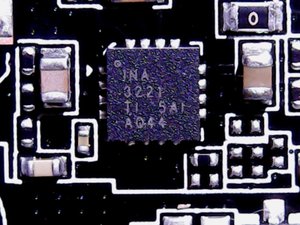

Current monitoring is handled by a triple-channel Texas Instruments INA3221. Two familiar capacitors are installed right below the GPU to absorb and equalize voltage peaks.

Power Results

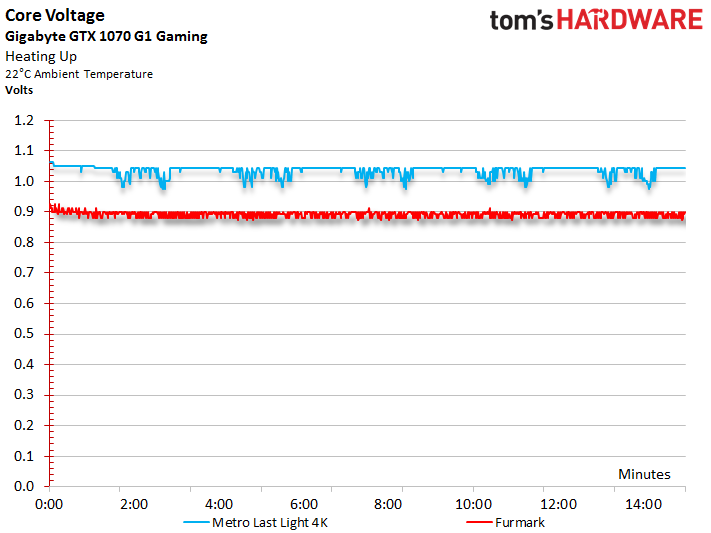

Before we look at power consumption, we should talk about the correlation between GPU Boost frequency and core voltage; their curves track each other so closely that we decided to stack the two graphs one on top of the other. This also shows that both curves drop as the GPU's temperature rises. Gigabyte uses a power target of ~185W, which in turn causes a relatively erratic GPU Boost frequency. Short-term drops in clock rate hint that we, at least temporarily, hit the card's power limit.

After a warm-up run through our variable gaming load, the card's GPU Boost clock rate settles at an average of 1936 MHz, down from a starting point of 1974 MHz. Under a more constant load, it falls to an average of 1759 MHz.

The voltage measurements look similar. Readings around 1.062V drop to 1.043V as the board's frequency slides.
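For a sense of scale, the sketch below expresses those clock and voltage slides as percentages of the starting values quoted above. It's plain arithmetic on the article's figures; `percent_drop` is just an illustrative helper.

```python
def percent_drop(start: float, end: float) -> float:
    """Decrease from start to end, as a percentage of start."""
    return (start - end) / start * 100


print(f"{percent_drop(1974, 1936):.1f}%")    # 1.9%  (variable gaming load)
print(f"{percent_drop(1974, 1759):.1f}%")    # 10.9% (constant load)
print(f"{percent_drop(1.062, 1.043):.1f}%")  # 1.8%  (core voltage)
```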

Combining the measured voltages and currents allows us to derive a total power consumption we can easily confirm with our instrumentation by taking readings at the card's power connectors.
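In principle, that derivation is P = V × I for each supply rail, summed across every rail feeding the card. The following is a minimal sketch assuming one simultaneous voltage/current reading per rail. The rail names and readings are made-up numbers for illustration, not measurements from this review, and a real measurement would average many such instantaneous values over time.

```python
from dataclasses import dataclass


@dataclass
class RailSample:
    name: str
    volts: float
    amps: float

    @property
    def watts(self) -> float:
        # Instantaneous power on this rail: P = V * I
        return self.volts * self.amps


def total_power(samples: list[RailSample]) -> float:
    """Sum the instantaneous power of all rails sampled at the same moment."""
    return sum(s.watts for s in samples)


# Hypothetical readings, for illustration only
samples = [
    RailSample("PCIe slot 12V", 12.0, 3.0),
    RailSample("PCIe slot 3.3V", 3.3, 0.5),
    RailSample("8-pin 12V", 12.0, 12.0),
]
print(f"{total_power(samples):.2f} W")  # 181.65 W
```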

As a result of restrictions imposed by Nvidia, whereby the lowest attainable frequencies are sacrificed to hit higher GPU Boost clock rates, the power consumption of many factory-overclocked cards is disproportionately high when they're idle. This one can only go as low as 240 MHz. The following table shows what impact that has on our measurements:

| Scenario | Power Consumption |
|---|---|
| Idle | 13W |
| Idle Multi-Monitor | 14W |
| Blu-ray | 15W |
| Browser Games | 111-121W |
| Gaming (Metro Last Light 4K) | 180W |
| Torture (FurMark) | 183W |

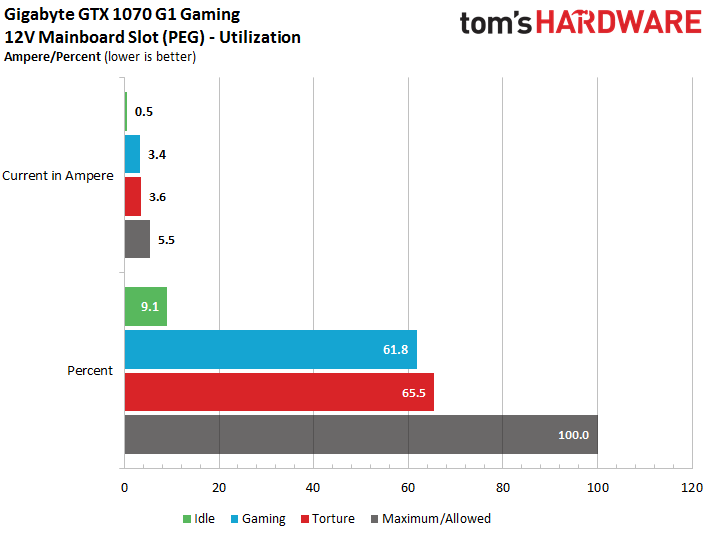

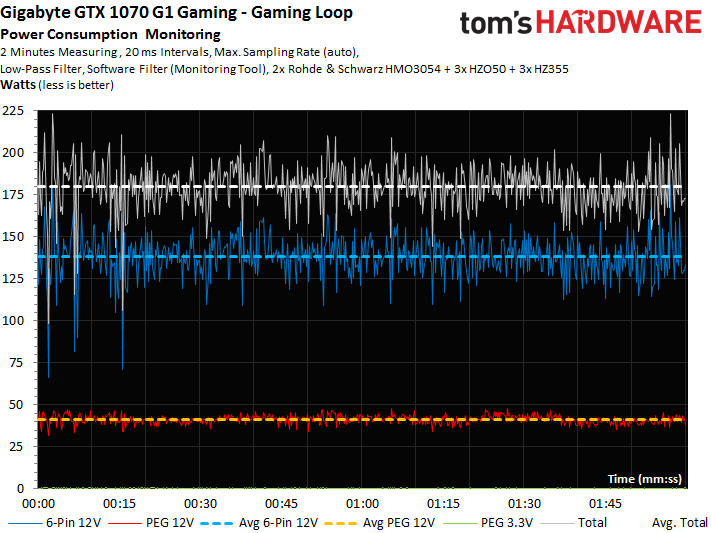

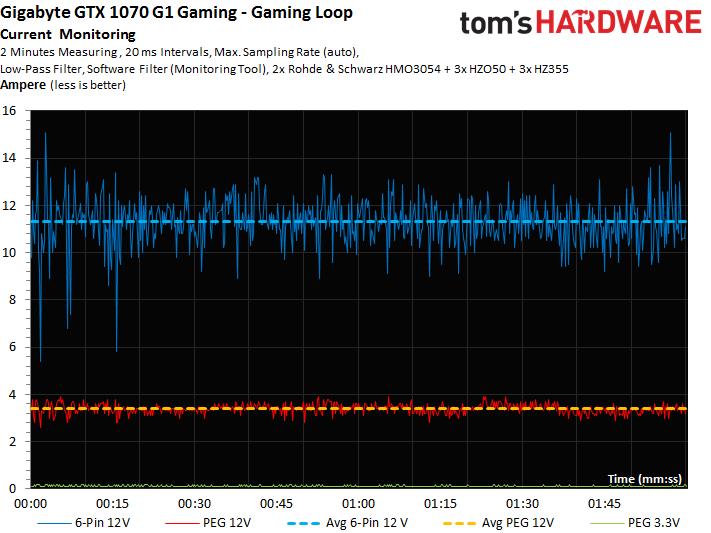

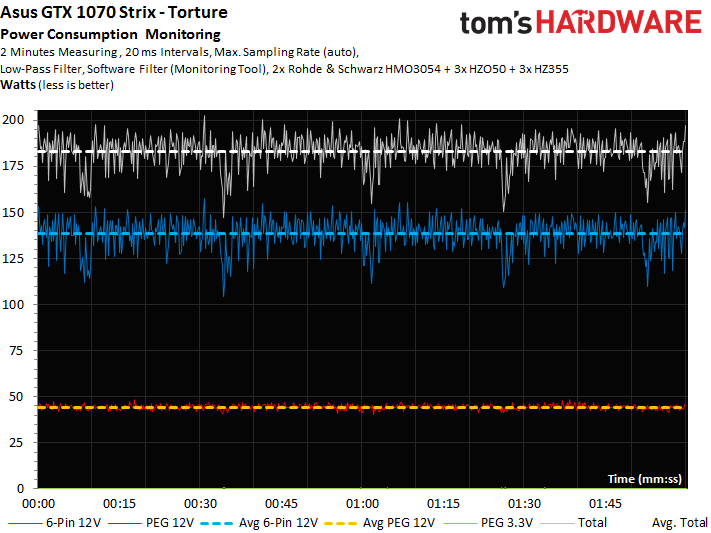

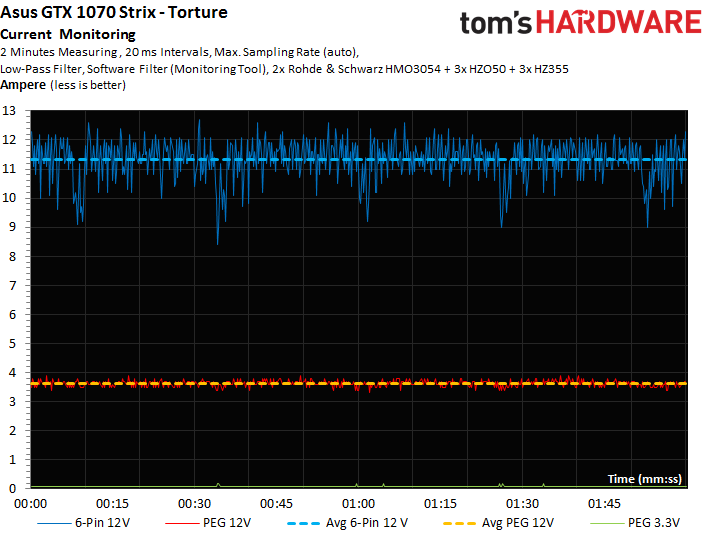

These charts go into more detail on power consumption at idle, during 4K gaming, and under the effects of our stress test. The graphs show how load is distributed between each voltage and supply rail, providing a bird's eye view of load variations and peaks.

The 3.6A we measure leaves plenty of margin below the PCI-SIG's 5.5A maximum for a PCIe slot, which is reassuring if you're using this card on an older motherboard. Gigabyte only feeds the memory through the PCIe slot; the six GPU phases are powered through the auxiliary eight-pin connector.
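The arithmetic behind that margin is simple. The sketch below assumes, as the comparison with the 5.5A figure implies, that the 3.6A reading is on the slot's 12V rail; the constant and function names are illustrative.

```python
# PCI Express CEM limit for the 12V pins of an x16 graphics slot
PCIE_X16_SLOT_12V_LIMIT_A = 5.5


def slot_headroom(measured_a: float, rail_v: float = 12.0) -> tuple[float, float, float]:
    """Return (watts drawn, amps of headroom, headroom as a fraction of the limit)."""
    watts = measured_a * rail_v
    headroom_a = PCIE_X16_SLOT_12V_LIMIT_A - measured_a
    return watts, headroom_a, headroom_a / PCIE_X16_SLOT_12V_LIMIT_A


watts, headroom_a, headroom_frac = slot_headroom(3.6)
print(f"{watts:.1f} W drawn, {headroom_a:.1f} A ({headroom_frac:.0%}) of headroom")
# 43.2 W drawn, 1.9 A (35%) of headroom
```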

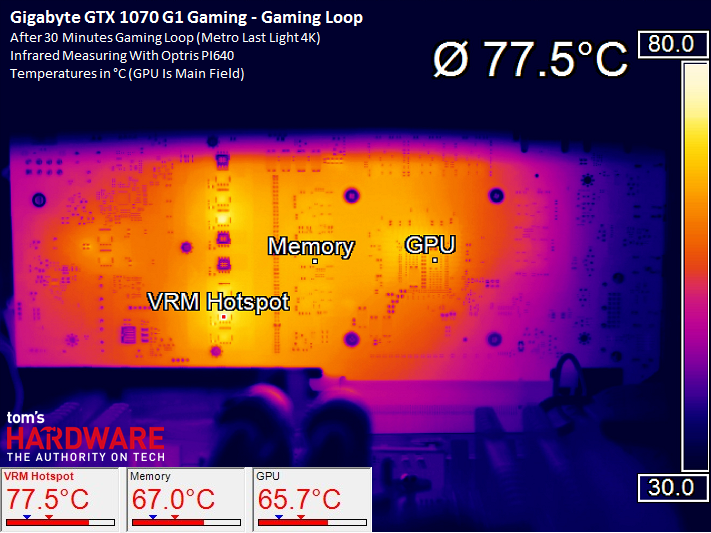

Temperature Results

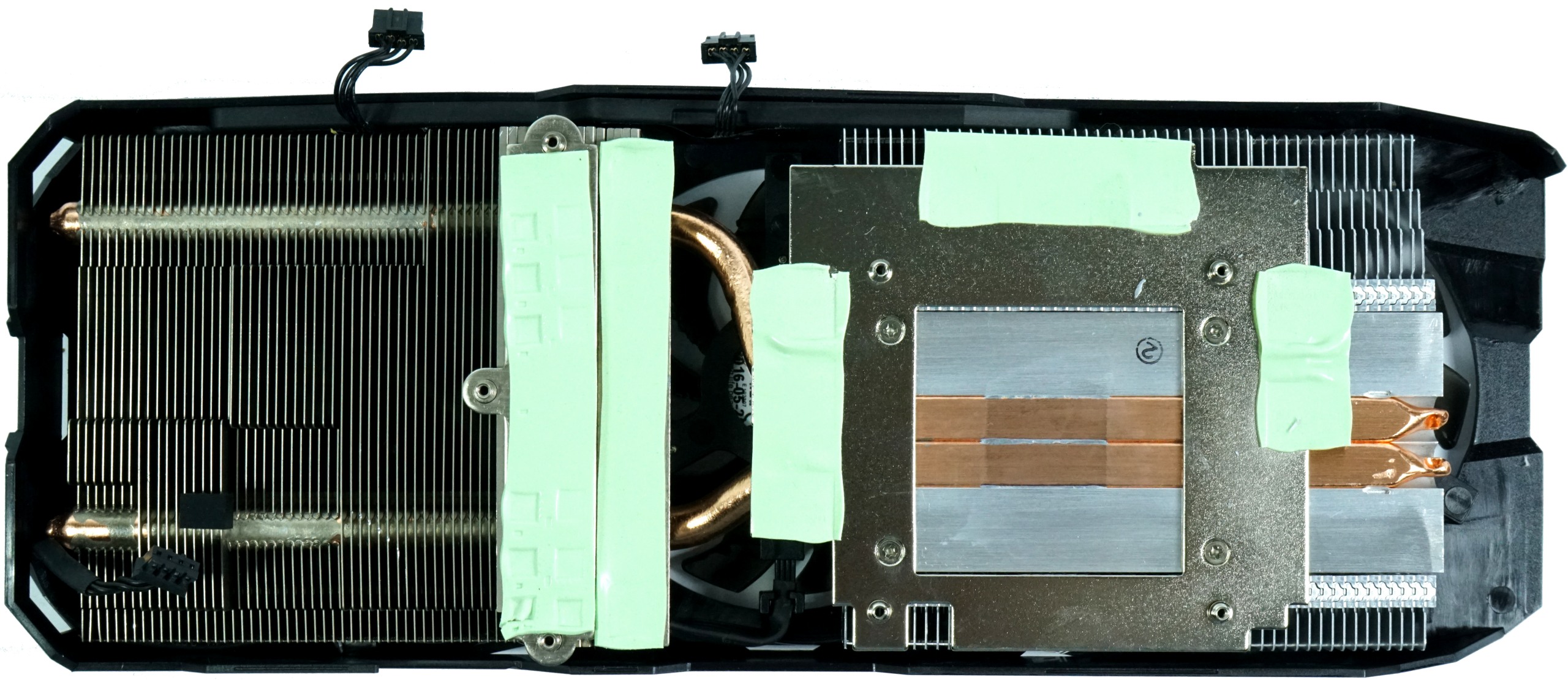

Gigabyte's backplate doesn't play an active role in cooling the GeForce GTX 1070 G1 Gaming 8G. It's mostly aesthetic, though the plate does contribute to the card's structural rigidity.

The built-in, two-stage heat sink for VRM and coils deserves a positive mention. We also like the fact that the memory modules are cooled directly by this simple design's stabilizing frame.

A direct consequence of this simplicity, unfortunately, is an uninspired and cheap-looking thermal solution. It consists of a finned sink with a thick aluminum base plate that also serves as a mount for two 8mm heat pipes in a flattened direct touch configuration. These pipes don't even cover the GPU entirely. They're hardly sufficient for moving excess heat. It would have been more appropriate for Gigabyte to use three heat pipes instead.

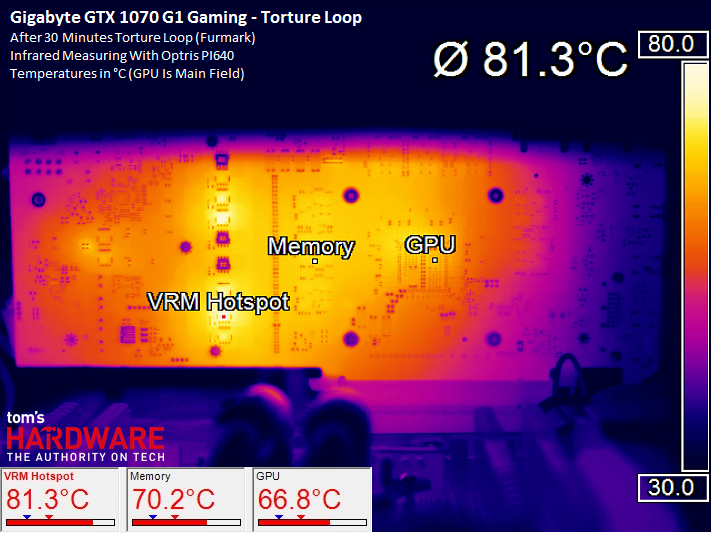

Of course, a maximum GPU temperature of 147°F (64°C) keeps this card in the green. But that safe ceiling is attributable not to the cooler, but to Gigabyte's very low temperature target. Once the card hits that target, the fans are audibly hard at work.

Looking at our infrared images, we see that the GPU package (and thus the PCB) is slightly warmer than the cooled GPU itself. A reading of 172°F (78°C) on the voltage converters is also bearable, which isn't surprising given their dedicated cooling.
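For readers who think in Celsius, the conversion is F = C × 9/5 + 32. This tiny sketch reproduces both temperatures quoted above.

```python
def c_to_f(celsius: float) -> float:
    """Convert degrees Celsius to degrees Fahrenheit."""
    return celsius * 9 / 5 + 32


print(f"GPU: {c_to_f(64):.0f}°F, VRM: {c_to_f(78):.0f}°F")  # GPU: 147°F, VRM: 172°F
```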

The same holds true when we run our stress test and observe the temperature rising only marginally. This cooler is merely acceptable, since it's already operating at its limit.

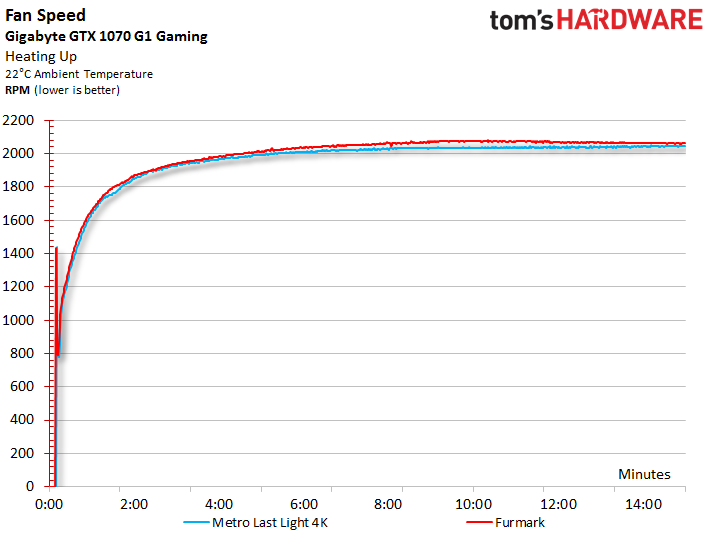

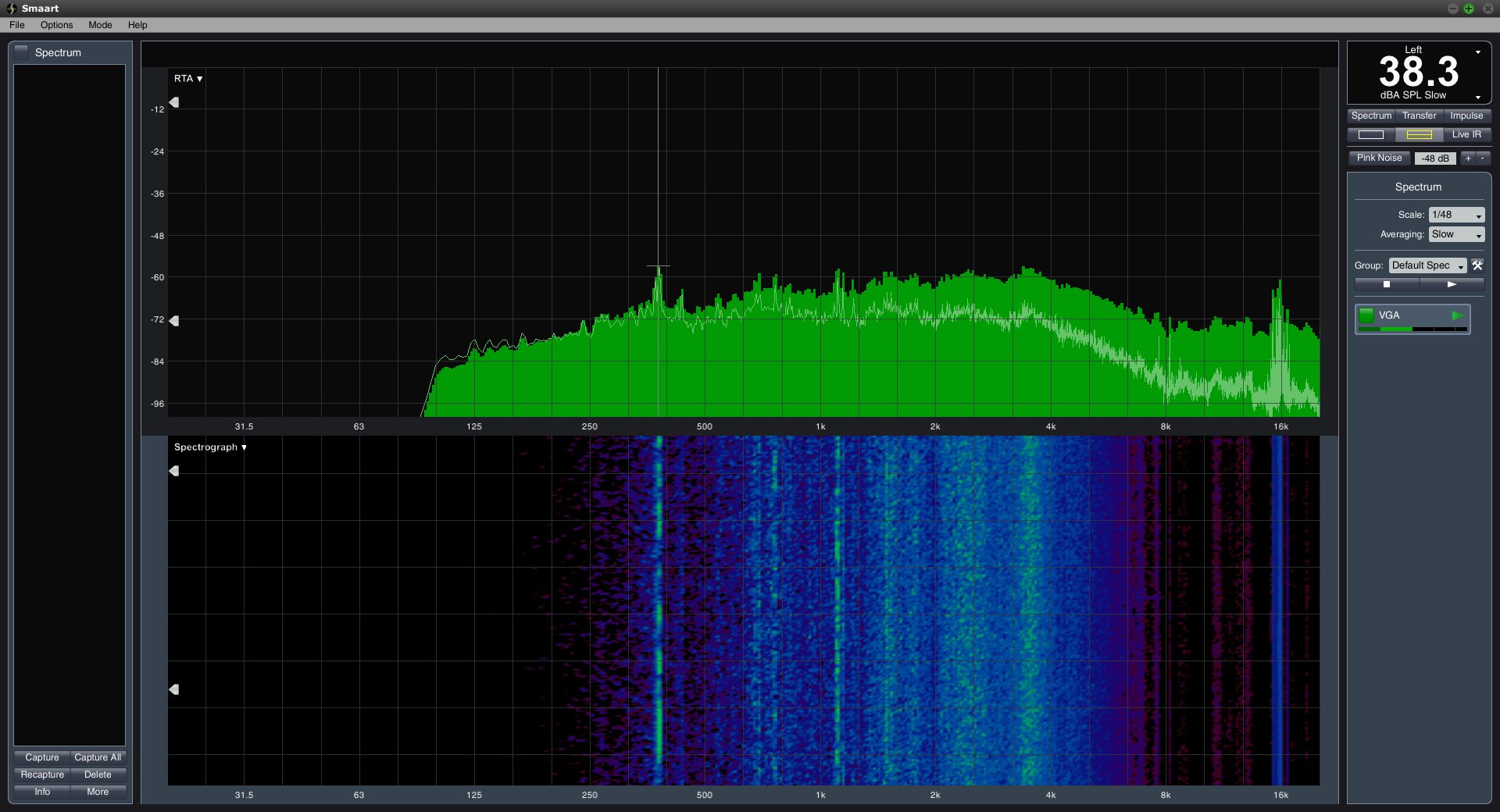

Sound Results

Hysteresis is perfectly implemented; there is no annoying on/off/on loop to worry about. However, sustained fan speeds above 2000 RPM are certainly nothing to celebrate, since they're clearly audible.
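The on/off/on loop is what a single temperature threshold produces: the fans spin up, cool the GPU just below the threshold, stop, and spin up again. Hysteresis avoids that by using two thresholds, a higher one to start the fans and a lower one to stop them. The sketch below illustrates the general idea; the class name and the 60°C/50°C thresholds are hypothetical and are not Gigabyte's actual fan-stop settings.

```python
class FanStopController:
    """Hypothetical semi-passive fan logic with two-threshold hysteresis."""

    def __init__(self, on_at_c: float, off_below_c: float) -> None:
        if off_below_c >= on_at_c:
            raise ValueError("off threshold must be below the on threshold")
        self.on_at_c = on_at_c
        self.off_below_c = off_below_c
        self.spinning = False

    def update(self, temp_c: float) -> bool:
        # Start only once the upper threshold is reached...
        if not self.spinning and temp_c >= self.on_at_c:
            self.spinning = True
        # ...and stop only after cooling below the lower one.
        elif self.spinning and temp_c < self.off_below_c:
            self.spinning = False
        return self.spinning


fan = FanStopController(on_at_c=60, off_below_c=50)
readings = [45, 58, 61, 57, 55, 52, 49, 58]
states = [fan.update(t) for t in readings]
print(states)  # [False, False, True, True, True, True, False, False]
```

Between 50°C and 60°C the fans simply keep whatever state they're already in, which is exactly what prevents the rapid toggling.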

Although we've heard worse than 38.3 dB(A) under full load, Gigabyte's fans are still significantly louder than many other GeForce GTX 1070s, and unnecessarily so.

Gigabyte GeForce GTX 1070 G1 Gaming



Igor Wallossek wrote a wide variety of hardware articles for Tom's Hardware, with a strong focus on technical analysis and in-depth reviews. His contributions have spanned a broad spectrum of PC components, including GPUs, CPUs, workstations, and PC builds. His insightful articles provide readers with detailed knowledge to make informed decisions in the ever-evolving tech landscape.


adamovera Archived comments are found here: http://www.tomshardware.com/forum/id-3283067/nvidia-geforce-gtx-1070-graphics-card-roundup.html

TheRev MasterOne Asus ROG Strix GeForce GTX 1070 and Gigabyte GeForce GTX 1070 G1 Gaming pics are switched ! Did I win something? a job?

eglass Disagree entirely about the 1070 being good value. It's the worst value in the 10-series lineup. $400 for a 1070 is objectively a bad value when $500 gets you into a 1080.

adamovera

> 19761809 said: Asus ROG Strix GeForce GTX 1070 and Gigabyte GeForce GTX 1070 G1 Gaming pics are switched ! Did I win something? a job?

The filenames of the images are actually swapped as well, weird - fixed now, thanks!

barryv88 Are you guys serious!?? You recommend a 1070 card that costs $530 which isn't even available in the U.S? That sorta cash gets you a much quicker GTX 1080! The controversy on this site is just non stop. If your BEST CPU's list wasn't enough already...

bloodroses I wonder how the Gigabyte 1070 mini compares to the other mini cards like the Zotac and MSI unit?

adamovera

> 19761907 said: Are you guys serious!?? You recommend a 1070 card that costs $530 which isn't even available in the U.S? That sorta cash gets you a much quicker GTX 1080! The controversy on this site is just non stop. If your BEST CPU's list wasn't enough already...

This is a roundup of all the 1070s we've tested. The graphics card roundups originate with our German bureau and are re-posted in the UK, so they'll sometimes include EU-only products - I'm guessing they're appropriately priced to the competition in their intended markets.

The Palit received the lowest level award - the Asus, the MSI, and one of the Gigabyte boards are better options.

JackNaylorPE

> 19761822 said: Disagree entirely about the 1070 being good value. It's the worst value in the 10-series lineup. $400 for a 1070 is objectively a bad value when $500 gets you into a 1080.

The 1080, like its predecessors (780 and 980), has consistently been the red-headed stepchild of the nVidia lineup. So much so that nVidia even intentionally nerfed the performance of the x70 series because its performance was so close to the x80.

The 1080 has dropped in price because, sitting as it does between the 1080 Ti and the 1070, it doesn't exactly stand out. When the 780 Ti came out, the price of the 780 dropped $160 overnight, so much so that I immediately bought two of them, and the two sets of game coupons knocked $360 off my Xmas shopping list. At a net $650, it was a good buy.

Using the 1070 FE as a reference and the relative performance data published by TechPowerUp, for example... I used the MSI Gaming models, since that is one model line where TPU reviewed all 3 cards:

The $404 MSI 1070 Gaming X is 104.2% as fast as the 1070 FE

The $550 MSI 1080 Gaming X is 128.2% as fast as the 1070 FE

The $740 MSI 1080 Ti Gaming X is 169.5% as fast as the 1070 FE

So the performance per dollar for comparable quality designs is:

MSI 1070 Gaming X = 104.2 / $404 = 0.258

MSI 1080 Gaming X = 128.2 / $550 = 0.233

MSI 1080 Ti Gaming X = 169.5 / $740 = 0.229

Even at $500, the 1080 only comes in 2nd place at 0.256, so no, the better value argument doesn't hold, even assuming we were getting an equal quality card.
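Those performance-per-dollar figures can be reproduced with a few lines of Python. The relative-performance percentages (1070 FE = 100) and prices are the ones quoted in the comment; the dictionary layout and helper name are just for illustration.

```python
# (relative performance vs. GTX 1070 FE = 100, price in USD), as quoted in the comment
cards = {
    "MSI 1070 Gaming X": (104.2, 404),
    "MSI 1080 Gaming X": (128.2, 550),
    "MSI 1080 Ti Gaming X": (169.5, 740),
    "1080 at $500": (128.2, 500),
}


def perf_per_dollar(relative_perf: float, price_usd: float) -> float:
    """Relative performance points bought per dollar spent."""
    return relative_perf / price_usd


for name, (perf, price) in cards.items():
    print(f"{name}: {perf_per_dollar(perf, price):.3f}")
# MSI 1070 Gaming X: 0.258
# MSI 1080 Gaming X: 0.233
# MSI 1080 Ti Gaming X: 0.229
# 1080 at $500: 0.256
```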

I looked at other comparable models as a means of comparison, and they are for the most part equal or higher:

Strix at $420, $550 and $780

AMP at $435, $534 and $750

Now with any technology, eking those last bits of performance out of anything always comes at an increased cost. You pay more of a cost premium going from Gold to Platinum rating on a PSU than you do going from Bronze to Silver or even Gold. It's simply another example of the Law of Diminishing Returns. So we should expect to pay more for each performance gain with each incremental increase, and that holds here. You'd expect that with each step up in performance, the % drop in performance per dollar would get bigger. But the x80 is quite an aberration.

We get a whopping 10.7% drop (0.025) from the 1070 to the 1080

We get a rather teeny 1.7% drop (0.004) from the 1080 to the 1080 Ti

Therefore, logically, you are paying a 10.7% cost penalty for the increased performance to move up from the 1070 to the 1080, whereas the cost penalty for the increased performance to move up from the 1080 to the 1080 Ti is only 1.7%. This is why each time the Ti has been introduced, 1080 sales have tanked.

Another way to look at it...

1070 => 1080 = 23% performance increase for $146 ROI = 15.8%

1080 => 1080 Ti = 32% performance increase for $190 ROI = 16.8 %
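Those two ROI figures appear to be the extra relative performance (in percent) divided by the extra cost, expressed per $100. The sketch below follows that reading using the comment's own numbers. Computed without rounding the performance gains first, it gives about 15.8 for the 1070-to-1080 step and about 17.0 (rather than 16.8) for the 1080-to-1080 Ti step, because the comment rounds the second gain to 32% before dividing; the conclusion is unchanged. The function name is hypothetical.

```python
def gain_per_100_dollars(perf_from: float, price_from: float,
                         perf_to: float, price_to: float) -> float:
    """Percent performance gained per extra $100 when stepping up a tier."""
    gain_pct = (perf_to / perf_from - 1) * 100  # e.g. 128.2 / 104.2 -> ~23% faster
    extra_cost = price_to - price_from          # e.g. $550 - $404 = $146
    return gain_pct / extra_cost * 100


print(f"{gain_per_100_dollars(104.2, 404, 128.2, 550):.1f}")  # 15.8 (1070 -> 1080)
print(f"{gain_per_100_dollars(128.2, 550, 169.5, 740):.1f}")  # 17.0 (1080 -> 1080 Ti)
```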

It's not a matter of **if** you can get **a** 1080 at $500; it's whether you can get the one you want. How is it that the $550 models have more sales than the less expensive ones? Some folks don't care about noise, some folks don't OC, and some folks hope they will be able to get the full performance available to us **if** someone ever comes out with a BIOS editor. And yes, there will be cards that are heavily discounted for any number of reasons ... low factory clock, noise or heat concerns; some have taken hits from bad reviews or are discounted simply because sales are poor ... but if a card is selling well below the average price, it is because it's not as well made or just isn't selling for real or imagined issues. (An example: the EVGA SC / FTW ACX designs are now fixed, but EVGA still has a black eye from the earlier cooler problems, and if buying EVGA, peeps want iCX.) Finally, the 1080 bears the burden of being compared with the 780 and 980, which again got lost between the higher / lower cards.

Given the above ROI numbers, I am surprised that all the 1080s have not dropped below $500. But to my eyes, the 1080 only starts to make sense when the cost is below $520, and **the ones I'd buy** just aren't there yet.

-

Adroid Yea I refuse to buy a 1070 because they are overpriced, period. I almost bought a 1080 but judging from the performance difference it simply wasn't worth it, either. There is not much a 1080 will do that a 1070 won't. What I mean is - a 1060 will run 1080p fine. With that in mind, a 1080 gives very marginal benefit at 2k, and neither one will run 4k smoothly - so what's the point.

If the 1080 was a $350 card, I might have bitten, but as it stands now I'll be waiting for the 1080 Ti to drop a bit, which can run most games in 2k over 120fps - and justifying an upgrade from a GTX 700 series card. I'm not going to pay over $400 for a card that won't smoke my GTX 770... I can play all games on moderate settings now, so I want ultra settings at 2k that make use of a 144hz monitor - or bust.

tyr8338 I've been using a Gigabyte 2-fan 1070 for over a year now and it's really good. It's a good overclocker and is running at a 1974 MHz overclock 24/7 and 8600 on RAM; it would probably be able to go even higher, but it's fine for me :) It's quiet, but at around 50% fan speed it sometimes produces a strange vibration sound. It doesn't bother me all that much tbh, but it can be a little annoying.