High-End Graphics Card Roundup

Benchmark Results: Tom Clancy’s Endwar

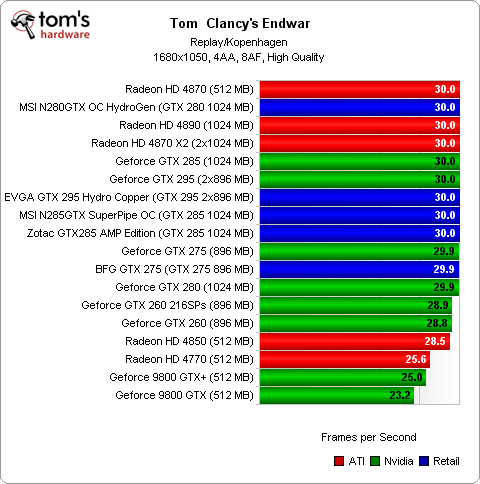

Endwar uses an enhanced version of the Unreal 3 engine that looks very good on screen. However, the game isn't ideal for benchmarking, because a software limiter caps its frame rate at 30 FPS. Such caps are typical of current real-time strategy games, and they restrict the usable benchmark settings to a narrow range.

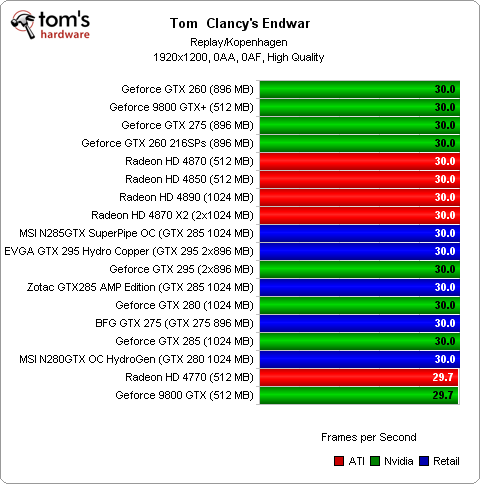

Nevertheless, we found it was possible to push frame rates below 30 FPS in the Replay Kopenhagen scene. Without AA, we could use only the 1920x1200 resolution. At that setting, the 3D engine and the fastest graphics cards all had enough headroom to hit the 30 FPS limit, which produced identical results for every card that reached it. Borderline cards in this category include the GeForce 9800 GTX+ and the Radeon HD 4870, both of which achieved 29.5 FPS, which rounds up to 30 FPS. All faster cards were clipped at 30 FPS, although they could probably have delivered at least a few more frames per second.
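To illustrate why the no-AA results tie, here is a minimal Python sketch, assuming the limiter simply clamps the recorded frame rate at 30 FPS and that the charts round to whole frames with halves rounding up. Only the 29.5 FPS value comes from the measurements above; the faster cards' uncapped frame rates and the function names are hypothetical placeholders. Python's built-in `round()` uses round-half-to-even, so the sketch rounds half-up explicitly.

```python
import math

FPS_CAP = 30.0  # Endwar's software frame limiter


def reported_fps(uncapped_fps: float, cap: float = FPS_CAP) -> float:
    """Frame rate a benchmark would record when a limiter clamps output at `cap`."""
    return min(uncapped_fps, cap)


def round_half_up(x: float) -> int:
    """Round to whole FPS for a chart, with .5 rounding up."""
    return math.floor(x + 0.5)


# Hypothetical uncapped frame rates; only 29.5 FPS is a measured figure.
cards = {
    "borderline card (measured)": 29.5,
    "hypothetical faster card A": 34.0,
    "hypothetical faster card B": 41.0,
}

for name, fps in cards.items():
    shown = round_half_up(reported_fps(fps))
    print(f"{name}: {shown} FPS")  # every entry prints 30 FPS
```

However much headroom a faster card has, the clamp hides it, which is why the chart cannot separate the top performers.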

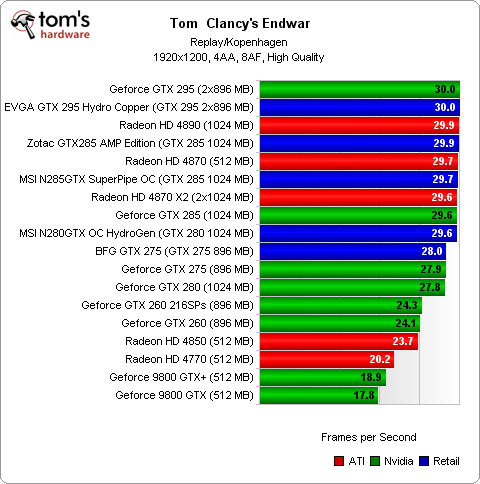

With AA enabled, our measurements were more informative, because the replay taxed even the most powerful graphics cards more heavily. At the bottom of the range, the results are less ambiguous: for a card that lacks sufficient muscle, a 10 FPS shortfall from the 30 FPS cap amounts to a performance drop of roughly 33%. The High preset was the highest graphics quality setting available.
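The percentage follows from a simple ratio. A minimal sketch, assuming the 30 FPS cap is the baseline (the `percent_drop` helper is hypothetical):

```python
def percent_drop(baseline_fps: float, measured_fps: float) -> float:
    """Performance loss in percent, measured against the baseline frame rate."""
    return (baseline_fps - measured_fps) / baseline_fps * 100.0


# A card 10 FPS short of the 30 FPS cap.
drop = percent_drop(30.0, 20.0)
print(f"{drop:.1f}%")  # 33.3%
```

The loss is measured against the baseline, not the lower figure; measured against 20 FPS instead, the same 10 FPS gap would read as 50%.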




And those HAWX benchmarks look ridiculous. ATI should wipe the floor with Nvidia in that game. Of course you didn't turn DX10.1 support on. Bastard...


cangelini, quoting quarz: "Only one ATi card? What happened to all those OC'd 4890s?"

These are the same boards that were included in the recent charts update, and the selection largely depends on what vendors submit for evaluation. We have an upcoming review comparing Sapphire's new 1 GHz Radeon HD 4890 with the stock 4890, though it won't be up for another couple of weeks.

ohim: Am I the only one who finds this article awkward? Looking at the ATI results in The Last Remnant makes me wonder what went wrong. I mean, it's the UT3 engine... why such low performance?

curnel_D: Ugh, please tell me that The Last Remnant hasn't been added to the benchmark suite.

And I'm not sure why the writer decided to benchmark Endwar instead of World in Conflict. Why is that?

And despite Quarz2's apparent fanboyism, I think HAWX would have been better benchmarked under DX10.1 for the ATI cards, using the highest stable settings instead of dropping to DX9.

anamaniac: The EVGA 295 is the stuff gods game with.

I would love that card. I would have to replace my whole system to run it properly, however.

I want $1500 now... i7 920 (why get better? They all seem to be godly overclockers) and EVGA 295.

How about a test suite of two EVGA GTX 295s in SLI for a quad-GPU configuration? I know there are driver issues, but it would be fun to see what it could do regardless, along with seeing how far Tom's can overclock the EVGA GTX 295.

Actually... Tom's just needs to do a new system-building recommendation roundup. I personally find them useful, and would have used one myself if my cash source hadn't lost his job...

Weird test:

1) Where are the overclocking results?

2) Bad choice of benchmarks: too many old DX9-based graphics engines (FEAR 2, Fallout 3, Left 4 Dead at >100 FPS), or Endwar, which is limited to 30 FPS. Where is Crysis?

3) 1920x1200 as the highest resolution for high-end cards?

EQPlayer: It seems the cumulative benchmark graphs are going to be a bit skewed if The Last Remnant results are included... looking at the numbers for that game, it's fairly obvious something odd is going on.

armistitiu: Worst article in a long time. Why compare how old games perform on NVIDIA's high-end graphics cards? Don't get me wrong, I like them, but where's all the Atomic stuff from Sapphire? Asus and XFX had some good ATI stuff too. So what... you just took ATI's reference cards and tested them? :| That is just wrong.

pulasky: WOW, what a piece of s********** this "review" is. Noobidia pays well these days.