AMD Radeon HD 7970: Promising Performance, Paper-Launched

A sample of AMD's next-generation Radeon HD 7970 landed in our lab just before Santa. Don't cross your fingers for one of these in your stocking, though. It's not available yet. Is it fast, though? Our benchmarks suggest yes, but more testing remains!

Graphics Core Next: The Southern Islands Architecture

While the Radeon HD 7970 is the first commercially-available product based on AMD’s Graphics Core Next architecture, the design itself is certainly no secret. To give developers some lead time to better exploit its upcoming hardware, AMD disclosed Graphics Core Next in June 2011 at the Fusion Developer Summit. According to Eric Demers, the CTO of AMD’s graphics division, the existing VLIW architecture that was introduced with the Radeon HD 2000 series could still be leveraged for more graphics potential. However, it’s limited in general-purpose computing tasks. Instead of massaging old technology yet again, the company chose to invest in a completely new architecture.

More compute performance and flexibility are great, but gaming alacrity and visual quality remain the most pertinent responsibilities of high-end desktop graphics hardware. Thus, AMD’s challenge was to create a GPU with a broader focus, simultaneously improving the 3D experience. In order to do that, the company abandoned the Very Long Instruction Word architecture in favor of Graphics Core Next.

The Efficiency Advantage Of Graphics Core Next

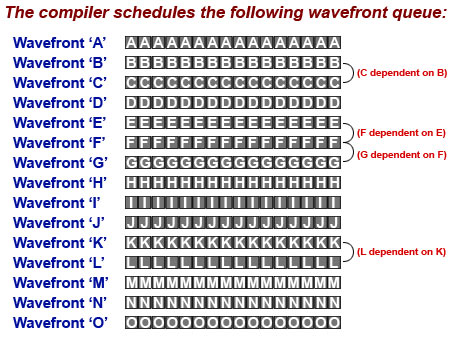

AMD’s VLIW architecture is very efficient at handling graphics instructions. Its compiler is optimized for mapping dot product math, which is at the heart of 3D graphics calculations. The design’s weakness is exposed, though, when it has to schedule the scalar instructions seen in more general-purpose applications. Sometimes a group of instructions, called a wavefront, can’t execute until another wavefront has been resolved. This is called a dependency. The problem is that the compiler can’t change the wavefront queue after it has been scheduled, so precious ALU potential is often wasted as instructions wait until the dependencies are addressed.
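To make the slot-filling problem concrete, here is a minimal Python sketch of static, VLIW4-style bundle packing. It is a toy model under stated assumptions, not AMD's compiler: the `pack_bundles` and `utilization` helpers, the operation names, and the greedy packing rule are all hypothetical. It compares four independent per-component multiplies (the kind of work a four-component dot product starts with) against a chain of four dependent scalar operations.

```python
def pack_bundles(ops, width=4):
    """Greedily pack operations into fixed-width bundles (toy VLIW model).

    ops is a list of (name, dependency_names) in program order. An operation
    may join a bundle only if every dependency finished in an EARLIER bundle.
    """
    finished = set()
    remaining = list(ops)
    bundles = []
    while remaining:
        bundle = []
        for op in list(remaining):
            name, deps = op
            if len(bundle) < width and set(deps) <= finished:
                bundle.append(name)
                remaining.remove(op)
        if not bundle:
            raise ValueError("a dependency can never be satisfied")
        finished.update(bundle)
        bundles.append(bundle)
    return bundles


def utilization(bundles, width=4):
    """Fraction of ALU slots that hold real work."""
    used = sum(len(bundle) for bundle in bundles)
    return used / (len(bundles) * width)


# Hypothetical workloads: independent vector components vs. a dependent chain.
vector_ops = [("mul_x", ()), ("mul_y", ()), ("mul_z", ()), ("mul_w", ())]
scalar_ops = [("s0", ()), ("s1", ("s0",)), ("s2", ("s1",)), ("s3", ("s2",))]

vector_bundles = pack_bundles(vector_ops)
scalar_bundles = pack_bundles(scalar_ops)

print(len(vector_bundles), utilization(vector_bundles))  # 1 bundle, all slots busy
print(len(scalar_bundles), utilization(scalar_bundles))  # 4 bundles, one slot busy each
```

In this simplified model, the vector work fills every slot of a single bundle, while the dependent scalar chain needs four bundles and leaves three of every four slots empty.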

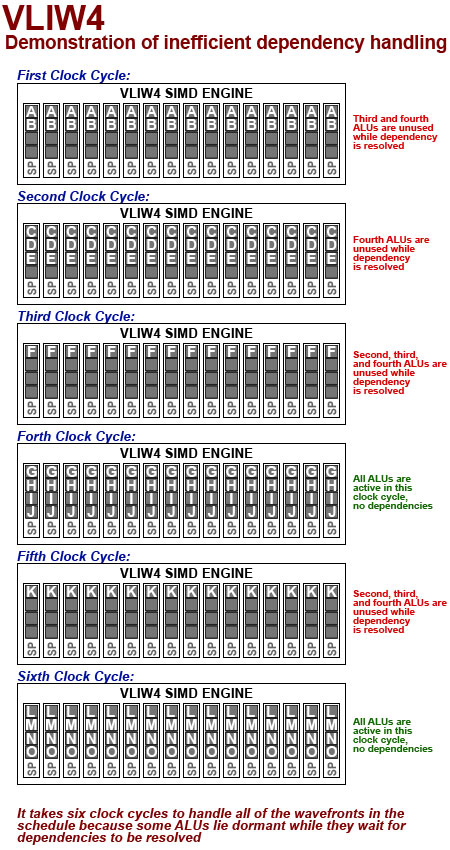

Here’s a theoretical example of how a Radeon HD 6970’s VLIW4 SIMD engine and its 16 banks of shader processors (each SP with four ALUs, totaling 64 ALUs per SIMD engine) would handle a wavefront queue that includes dependencies:

As you can see, the VLIW architecture doesn’t handle dependencies ideally. Wavefronts needlessly wait in the queue while free ALUs sit idle.

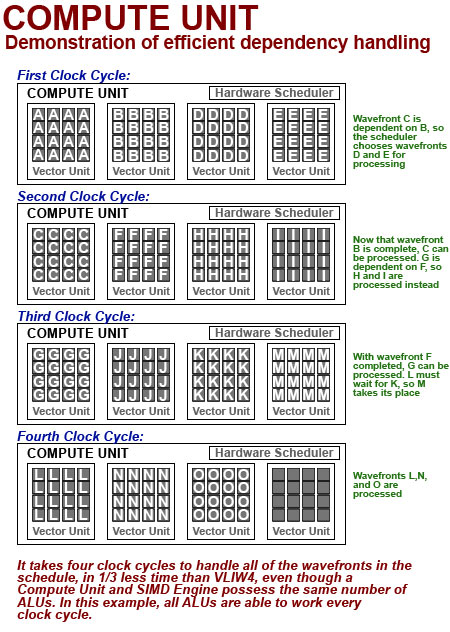

So, how do you optimize the amount of scalar work you can perform per clock cycle? Enter the Compute Unit, or CU, which replaces the SIMD engines to which we’ve grown accustomed.

Each CU has four Vector Units (VUs), each with 16 ALUs, for a total of 64 ALUs per CU. Thus, the number of ALUs per SIMD/CU is the same. The main difference is that, unlike the shader processors in a SIMD engine, each of the four VUs can be scheduled independently. The CU has its own hardware scheduler that's able to assign wavefronts to available VUs with limited out-of-order capability to avoid dependency bottlenecks.


This is the key to better compute performance because it gives each VU the ability to work on different wavefronts if a dependency exists in the queue:

In our example, the same wavefront queue that took six clock cycles to complete on a VLIW4 SIMD engine can be executed in four clock cycles on Graphics Core Next. AMD suggests that the Radeon HD 7970 can achieve up to a 7.5x peak theoretical compute performance improvement over the Radeon HD 6970 due to higher utilization. The real-world difference depends on compiler efficiency, and in some compute tasks, the Radeon HD 7970 is barely better than the 6970 on a per-ALU and per-clock basis. We certainly saw lots of variance in our own benchmarks, as you’ll see. But it’s safe to say, based on our synthetic testing, that the compute potential of Graphics Core Next exceeds that of VLIW4.
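The scheduling difference can be sketched in a few lines of Python. This is a toy model under the same simplification the example uses (one wavefront per unit per clock), and everything in it is an assumption for illustration: the `simulate` function, the `in_order` flag, and the eight-entry queue are hypothetical, and the queue is not the one from the figures, so the cycle counts differ from the six and four quoted above.

```python
def simulate(queue, units=4, in_order=True):
    """Count clock cycles needed to drain a wavefront queue (toy model).

    queue is a list of (name, dependency) pairs, where dependency is the name
    of an earlier wavefront or None. A wavefront is ready once its dependency
    completed in a previous cycle.

    in_order=True  : issue stops at the first blocked wavefront, so free units
                     sit idle (the fixed, pre-scheduled queue).
    in_order=False : blocked wavefronts are skipped and later ready ones are
                     issued instead (independently scheduled vector units).
    """
    done = set()
    pending = list(queue)
    cycles = 0
    while pending:
        issued = []
        for wavefront in list(pending):
            if len(issued) == units:
                break
            name, dependency = wavefront
            if dependency is None or dependency in done:
                issued.append(wavefront)
                pending.remove(wavefront)
            elif in_order:
                break  # everything behind the blocked wavefront must wait
        if not issued:
            raise ValueError("a dependency can never be satisfied")
        done.update(name for name, _ in issued)
        cycles += 1
    return cycles


# Hypothetical queue: B waits on A, E waits on D, the rest are independent.
queue = [("A", None), ("B", "A"), ("C", None), ("D", None),
         ("E", "D"), ("F", None), ("G", None), ("H", None)]

print(simulate(queue, in_order=True))   # 3 cycles with a fixed queue
print(simulate(queue, in_order=False))  # 2 cycles when ready work can jump ahead
```

With eight wavefronts and four units, two cycles is the best possible result, and only the variant that can skip past a blocked wavefront reaches it.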

Deeper Into The Compute Unit

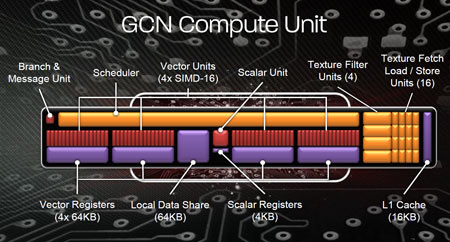

As mentioned previously, the CU replaces the SIMD engines (as named by AMD) we’ve seen since the Radeon HD 2000 days. We also noted that the CU is composed of four vector units, each of which contains 16 ALUs and a register file. And we know that the VUs operate independently of each other.

Let’s go into more depth on those VUs, though. Unlike the simplified clock cycle we use in the example above, each VU can process one-quarter of one wavefront per cycle. Equipped with four VUs, each CU can consequently process four wavefronts every four cycles, the equivalent of one wavefront per cycle per CU.
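The throughput arithmetic in that paragraph can be checked with a short Python sketch. The constant names are hypothetical, and the 64-wide wavefront is derived from the figures above (16 ALUs handling one-quarter of a wavefront per cycle) rather than stated directly.

```python
from fractions import Fraction

ALUS_PER_VU = 16                           # ALUs in each vector unit
VUS_PER_CU = 4                             # vector units in each compute unit
WAVEFRONT_SHARE_PER_CYCLE = Fraction(1, 4) # share of a wavefront one VU handles per cycle

# If 16 ALUs cover a quarter of a wavefront, a full wavefront is 64 wide.
wavefront_width = ALUS_PER_VU / WAVEFRONT_SHARE_PER_CYCLE

# One VU therefore needs four cycles to finish one wavefront.
cycles_per_wavefront = 1 / WAVEFRONT_SHARE_PER_CYCLE

# Four VUs working in parallel finish four wavefronts in those four cycles.
wavefronts_per_cycle_per_cu = VUS_PER_CU / cycles_per_wavefront

alus_per_cu = ALUS_PER_VU * VUS_PER_CU

print(wavefront_width, cycles_per_wavefront, wavefronts_per_cycle_per_cu, alus_per_cu)
```

The result is one wavefront per cycle per CU across 64 ALUs, matching the per-CU ALU count given earlier.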

We haven’t yet talked about the CU’s scalar unit, which is primarily responsible for branching code and pointer arithmetic. The vector units could handle those tasks too, but this co-processor’s strength is in offloading scalar work in order to allow the vector units to flex their muscles in more appropriate ways.

Each CU has four texture units tied to a 16 KB read/write L1 cache, which is twice the size of VLIW4’s read-only cache. Historically, L1 was only used to read textures. Now, though, data can move in both directions through the same cache.


Don Woligroski was a former senior hardware editor for Tom's Hardware. He has covered a wide range of PC hardware topics, including CPUs, GPUs, system building, and emerging technologies.


thepieguy If Santa is real, there will be one of these under my Christmas tree in a few more days.

a4mula From a gaming standpoint I fail to see where this card finds a home. For 1920x1080 pretty much any card will work, meanwhile at Eyefinity resolutions it's obvious that a single GPU still isn't viable. Perhaps this will be something that people would consider over 2x 6950, but that isn't exactly an ideal setup either. While much of the article was over my head from a technical standpoint, I hope the 7 series addresses microstuttering in CrossFire. If so, then perhaps 2x 7950 (assuming $449) becomes a viable alternative to 3x 6950 2GB. I was really hoping we'd see the 7970 in at $449, with the 7950 in at $349. Right now I'm failing to see the value in this card.

cangelini (in reply to a4mula)

I'll be trolling Newegg for the next couple weeks on the off-chance they pop up before the 9th. A couple in CrossFire could be pretty phenomenal, but it remains to be seen if they maintain the 6900-series scalability.

cangelini (in reply to thepieguy)

Hate to break it to you, but there won't be, unless you celebrate Christmas in mid-January.

Start treating your SO super-nice and ask for one for Valentine's Day!

danraies (in reply to cangelini)

If I ever find someone that will buy me a $500 graphics card for Valentine's Day I'll be proposing on the spot.

a4mula (in reply to cangelini)

While I have little doubt that 2x of these cards would be very impressive, so would the $1100+ price tag. I guess coming from the 580 SLI standpoint it might not seem like much, but if you've been considering the $750 ($900 for mobo+psu difference) 3x 6950 route like myself it seems like a major jump.

Of course this is all just initial reaction towards the earliest of benchmarks. Some time to really dig around the new 7xxx, while allowing it to mature from a driver standpoint, might make the 3x 6950 seem foolhardy.

Zombeeslayer143 WOW!!! I love the conclusion; all of it, which basically is interpreted as "I'm biased towards Nvidia," and tries to say don't buy this card! Has the nerve to mention Kepler as an alternative; right, Kepler, as in 1 year away. The GTX580 just got "Radeon-ed" in its rear. I'm not biased towards either manufacturer, just love to see and give credit to a team of people with passion, vision, and hard work come together and put their company back on the map, as is shown here today with AMD's launch of the 7970. It's AMD's version of "Tebow Time!!"