AMD Radeon R9 380X Nitro Launch Review

Tonga’s been around for more than a year now, and it’s taken all this time for its full configuration to finally become available for regular desktop PCs. Until now, this configuration was offered exclusively on a different platform. But does it still make sense today?

Power Usage Results

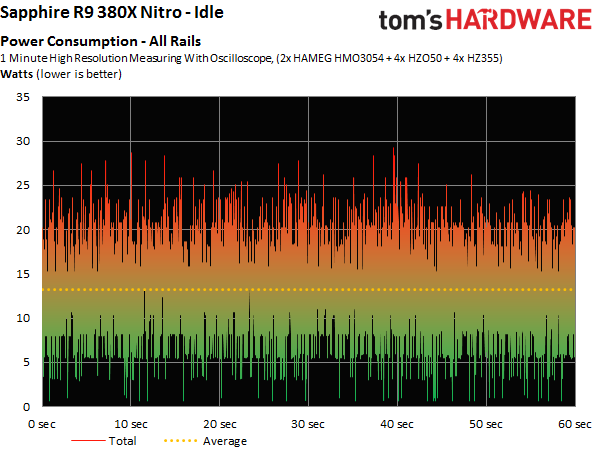

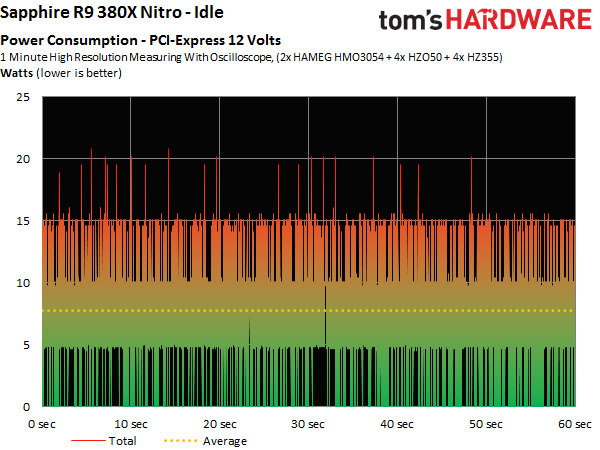

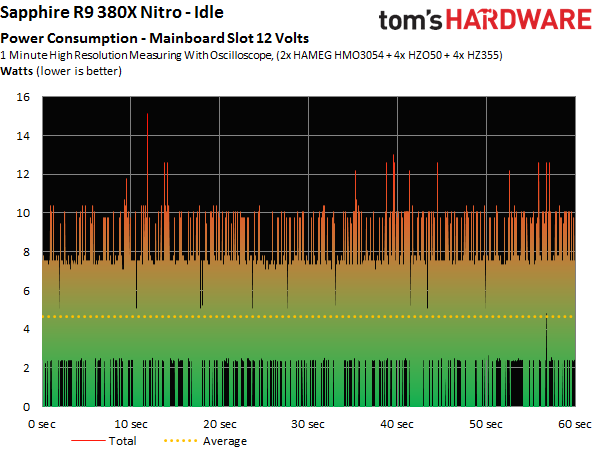

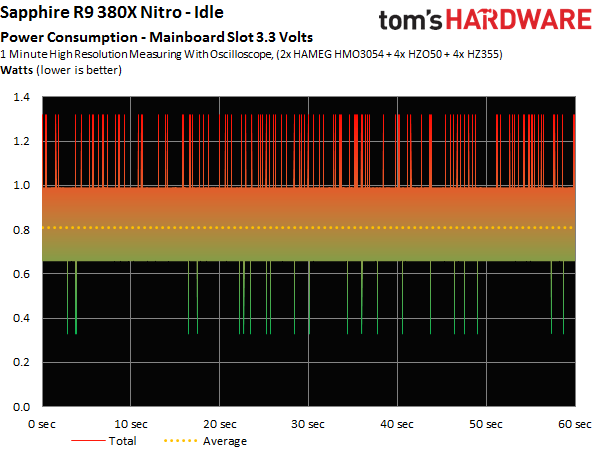

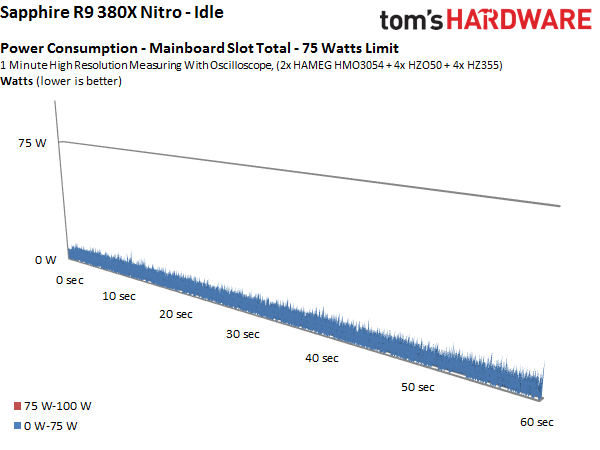

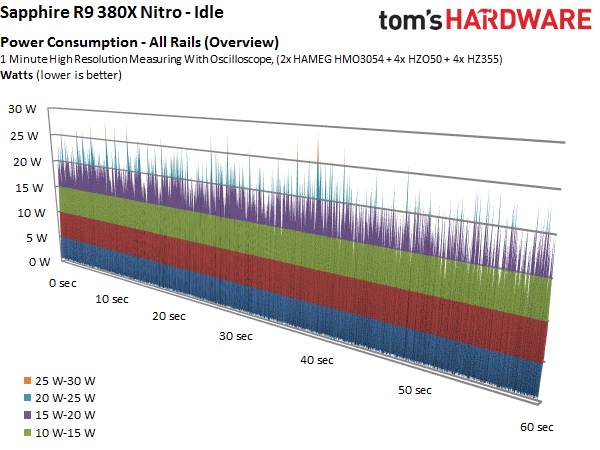

Measuring power consumption at idle can be difficult because the system’s load changes spontaneously. For this reason, we record over a longer period, pick the most representative two-minute interval, and average the readings from that interval.
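The interval selection described above can be sketched as follows. This is a minimal illustration, not the lab's actual tooling: the function name `representative_idle_average` is hypothetical, and "most representative" is assumed here to mean the two-minute window with the smallest spread (lowest standard deviation), which the review does not specify.

```python
from statistics import mean, pstdev


def representative_idle_average(samples_w, sample_rate_hz, window_s=120):
    """Mean power (W) of the steadiest window_s-long stretch of a recording.

    Assumption: "most representative" is interpreted here as the window with
    the lowest population standard deviation. The review does not state the
    criterion actually used.
    """
    n = int(window_s * sample_rate_hz)  # number of samples per window
    if n <= 0 or n > len(samples_w):
        raise ValueError("recording is shorter than one averaging window")
    best_spread, best_mean = None, None
    for start in range(len(samples_w) - n + 1):
        window = samples_w[start:start + n]
        spread = pstdev(window)
        if best_spread is None or spread < best_spread:
            best_spread, best_mean = spread, mean(window)
    return best_mean


# Hypothetical 1 Hz idle trace: two quiet minutes, then three minutes of
# background activity that makes the reading jump around.
trace = [13.0] * 120 + [25.0, 5.0] * 90
print(representative_idle_average(trace, sample_rate_hz=1))  # 13.0
```

A brute-force scan over every window keeps the sketch easy to follow; for long, high-rate recordings, a running-sum approach would be faster.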

Please note that the minimum and maximum results for the individual rails in the tables below don’t necessarily add up to the overall total for that category, because the extremes on the individual rails don’t always occur at the same time.
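A small example shows why extremes don't add up while averages do. The per-sample numbers below are made up purely for illustration: the card total is summed sample by sample, so its peak is lower than the sum of the individual rail peaks, whereas the mean is linear and always adds up.

```python
from statistics import mean

# Hypothetical synchronized samples in watts (illustrative only, not measured).
pcie = [150, 210, 120]
mb_3v3 = [2, 1, 3]
mb_12v = [70, 40, 75]

# The card total is summed sample by sample, so it sees the rails together.
totals = [a + b + c for a, b, c in zip(pcie, mb_3v3, mb_12v)]

sum_of_rail_maxima = max(pcie) + max(mb_3v3) + max(mb_12v)
sum_of_rail_minima = min(pcie) + min(mb_3v3) + min(mb_12v)
sum_of_rail_means = mean(pcie) + mean(mb_3v3) + mean(mb_12v)

print(max(totals), sum_of_rail_maxima)  # 251 288
print(min(totals), sum_of_rail_minima)  # 198 161
print(round(mean(totals), 3), round(sum_of_rail_means, 3))  # 223.667 223.667
```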

Idle Power Consumption

At idle, AMD's Radeon R9 380X consumes approximately 13W, and this number stays essentially the same when two monitors are connected.

| Rail | Minimum | Maximum | Average |
|---|---|---|---|
| PCIe | 0W | 21W | 8W |
| Motherboard 3.3V | 0W | 1W | 1W |
| Motherboard 12V | 0W | 15W | 5W |
| Graphics Card Total | 1W | 29W | 13W |

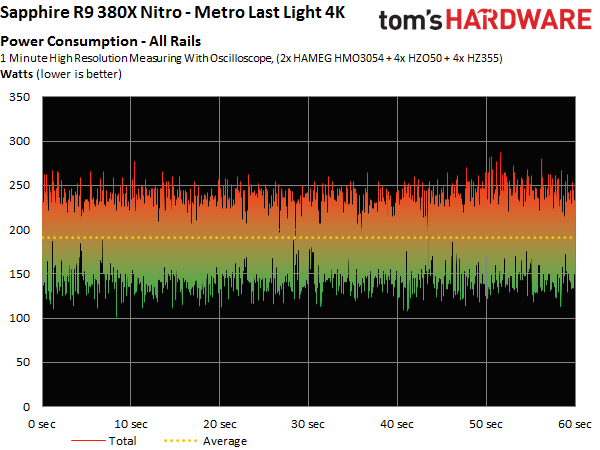

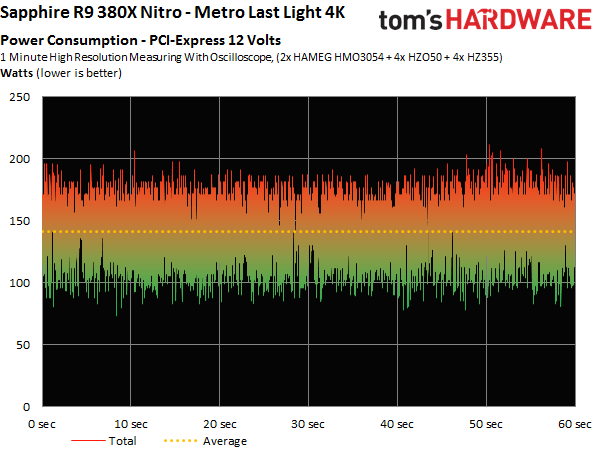

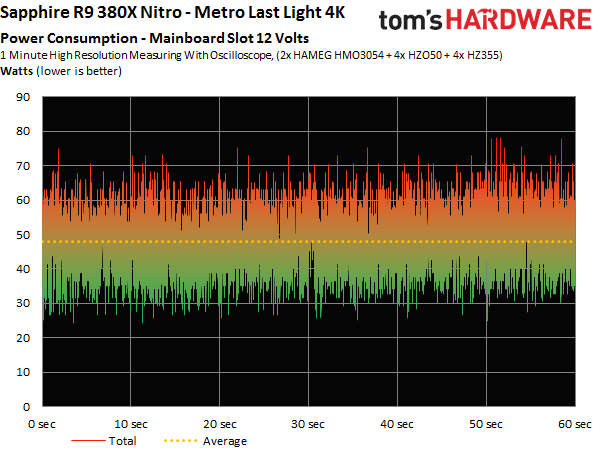

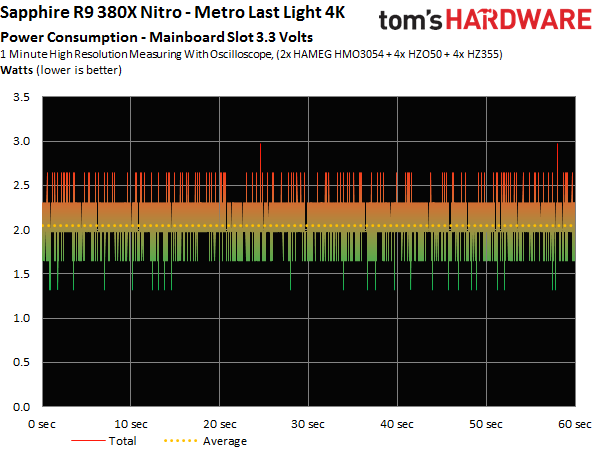

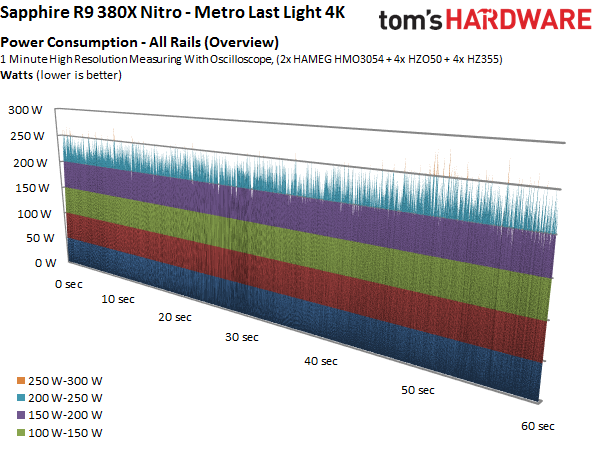

Gaming Power Consumption (Metro: Last Light Loop)

The gaming loop runs at Ultra HD, since that resolution produces the highest power consumption. Sapphire's R9 380X draws only six watts more than MSI’s R9 380, a smaller delta than its roughly nine percent performance advantage might suggest. Tonga XT turns out to be a bit more efficient across several different benchmark runs.
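The efficiency claim can be put into numbers with a quick performance-per-watt calculation. This sketch assumes that the "six watts" delta refers to the 191W gaming average in the table below (placing MSI's R9 380 at roughly 185W) and treats the approximate nine percent performance gain as applying to this loop; the helper name is hypothetical.

```python
def relative_perf_per_watt(perf_gain, power_new_w, power_old_w):
    """Performance-per-watt of the new card relative to the old one."""
    return (1.0 + perf_gain) * power_old_w / power_new_w


# Assumption: the "six watts" delta refers to the 191 W gaming average in the
# table below, which puts MSI's R9 380 at roughly 185 W in this loop.
r9_380x_w = 191.0
r9_380_w = r9_380x_w - 6.0
perf_gain = 0.09  # "approximately nine percent" from the text

power_increase = r9_380x_w / r9_380_w - 1.0
ratio = relative_perf_per_watt(perf_gain, r9_380x_w, r9_380_w)
print(f"power +{power_increase:.1%}, perf/W {ratio:.3f}x")  # power +3.2%, perf/W 1.056x
```

Under these assumptions, about 3% more power buys about 9% more performance, which works out to roughly 5 to 6 percent better performance per watt.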

The table paints a fairly decent picture:

| Rail | Minimum | Maximum | Average |
|---|---|---|---|
| PCIe | 73W | 212W | 141W |
| Motherboard 3.3V | 1W | 3W | 2W |
| Motherboard 12V | 24W | 78W | 48W |
| Graphics Card Total | 102W | 287W | 191W |

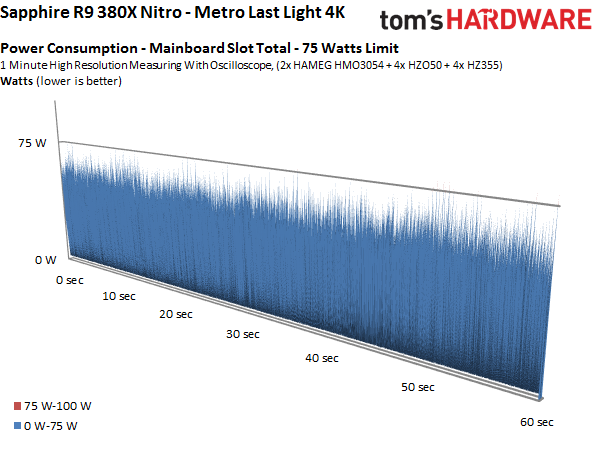

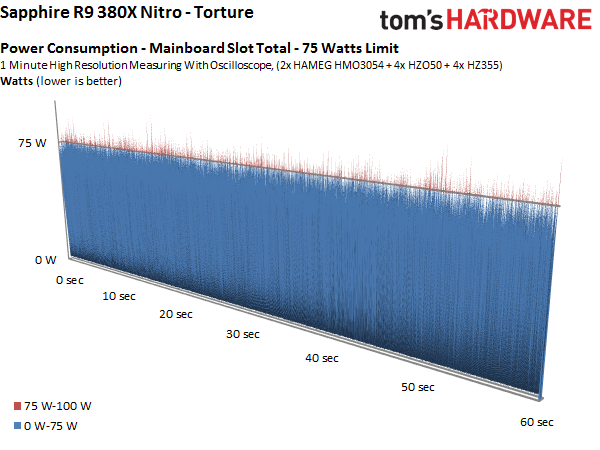

On average, the motherboard slot stays well below its output ceiling, and even the spikes almost never exceed the slot’s maximum. This is very well done.
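Checking a slot trace against its ceiling is straightforward. The sketch below uses the PCI Express CEM limit of 5.5A on the slot's 12V rail (66W); the trace values are hypothetical, and only the 48W average is taken from the table above. The helper `fraction_above` is an illustrative name, not part of any measurement software.

```python
# PCI Express CEM limit for the slot's 12V rail: 5.5 A, i.e. 66 W.
SLOT_12V_LIMIT_W = 12.0 * 5.5


def fraction_above(samples_w, limit_w=SLOT_12V_LIMIT_W):
    """Share of samples that exceed the given power limit."""
    if not samples_w:
        raise ValueError("no samples")
    return sum(1 for p in samples_w if p > limit_w) / len(samples_w)


# Hypothetical 12V slot trace in watts; only one spike crosses 66 W.
trace = [45, 52, 48, 78, 41, 50, 47, 44, 55, 40]
print(f"{fraction_above(trace):.0%} of samples above {SLOT_12V_LIMIT_W:.0f} W")
# 10% of samples above 66 W

# Headroom of the measured gaming average (48 W, from the table above).
print(f"average headroom: {SLOT_12V_LIMIT_W - 48:.0f} W")  # 18 W
```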

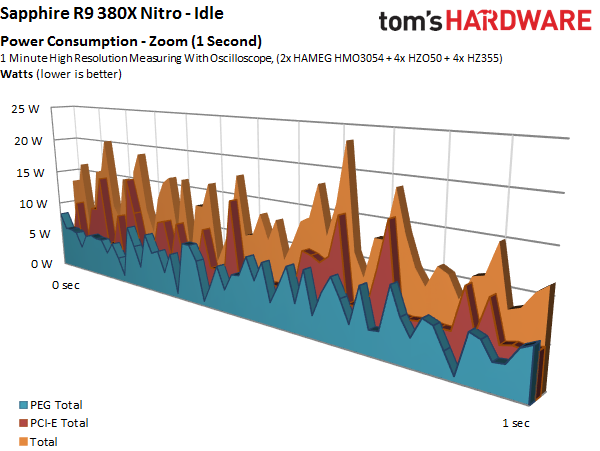

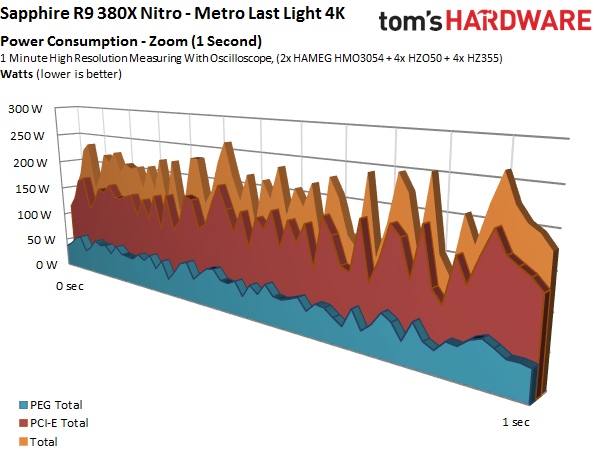

Pulling out a snapshot of just one moment in a game shows how power is distributed across the rails:


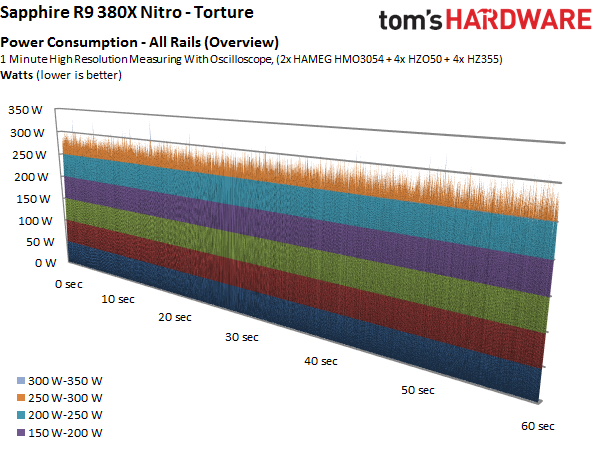

The overall distribution can be seen here in its usual gallery format:

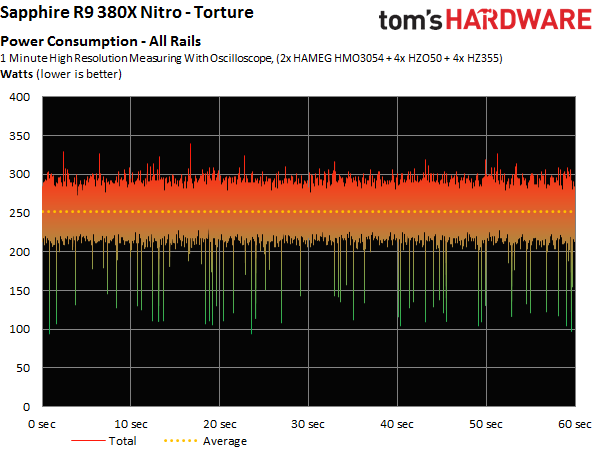

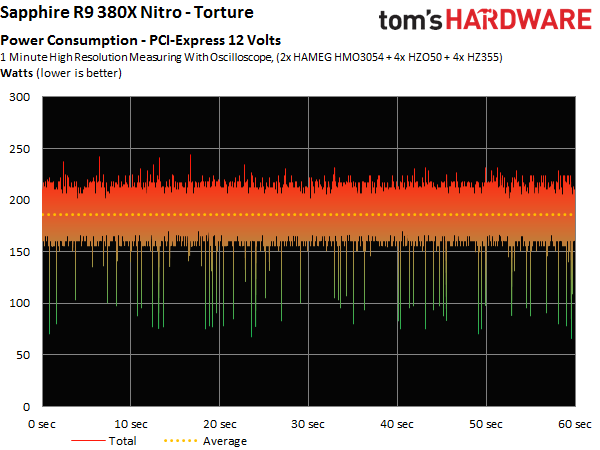

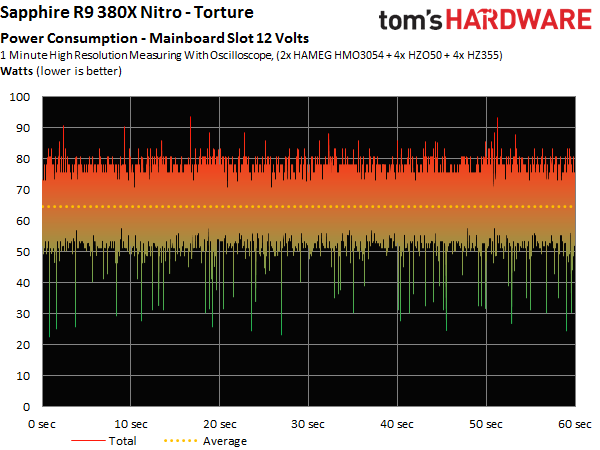

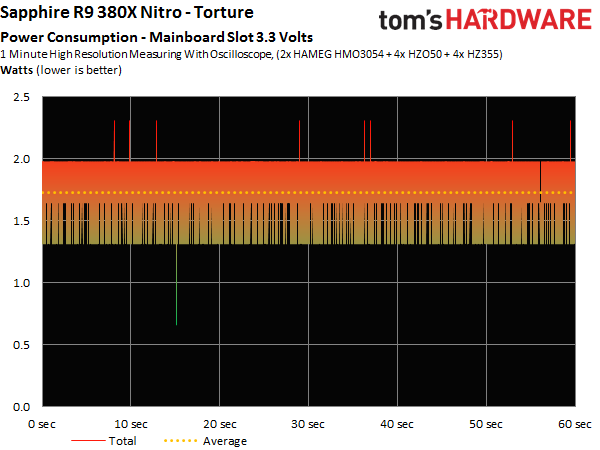

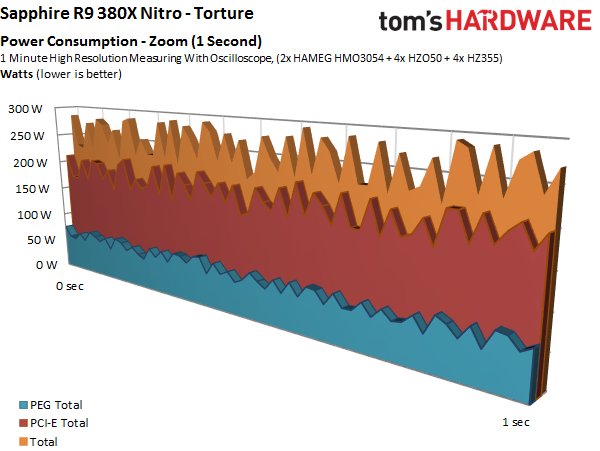

Full Load Power Consumption

Even when AMD's Radeon R9 380X is pushed as hard as possible with FurMark, its power delivery stays stable. The new card does exhibit the same behavior we observed from the R9 Nano, though: once it really gets going, it doesn’t stop for anything. The 252W it consumed during the torture test nearly matches the R9 390 and 390X.

| Rail | Minimum | Maximum | Average |
|---|---|---|---|
| PCIe | n/a | 244W | 186W |
| Motherboard 3.3V | 1W | 2W | 2W |
| Motherboard 12V | 23W | 94W | 64W |
| Graphics Card Total | 94W | 340W | 252W |
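Unlike the extremes, the rail averages in the three tables should add up to the card total, give or take rounding. The quick consistency check below uses the average columns transcribed from the tables; the one-watt tolerance for rounding to whole watts is an assumption, and the helper name is hypothetical.

```python
# Average columns transcribed from the three tables above, in watts:
# (PCIe, motherboard 3.3V, motherboard 12V), card total.
AVERAGES = {
    "Idle": ((8, 1, 5), 13),
    "Gaming (Metro: Last Light)": ((141, 2, 48), 191),
    "Full load (FurMark)": ((186, 2, 64), 252),
}


def rounding_gap(rails, total):
    """Difference between the summed rail averages and the reported total."""
    return sum(rails) - total


for name, (rails, total) in AVERAGES.items():
    gap = rounding_gap(rails, total)
    # Assumption: values are rounded to whole watts, so a 1 W gap is plausible.
    status = "consistent" if abs(gap) <= 1 else "check"
    print(f"{name}: rails {sum(rails)} W vs total {total} W ({gap:+d} W, {status})")
```

Only the idle figures differ, by a single watt, which is consistent with rounding.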

We put together all of the individual full-load diagrams as well:


Igor Wallossek wrote a wide variety of hardware articles for Tom's Hardware, with a strong focus on technical analysis and in-depth reviews. His contributions have spanned a broad spectrum of PC components, including GPUs, CPUs, workstations, and PC builds. His insightful articles provide readers with detailed knowledge to make informed decisions in the ever-evolving tech landscape.


ingtar33: So full Tonga, release date 2015, matches full Tahiti, release date 2011.

So why did they retire Tahiti (7970/280X) for this? Three generations of GPUs with the same rough numbering scheme and the same performance is sorta sad.

Eggz: Seems underwhelming until you read the price. Pretty good for only $230! It's not that much slower than the 970, but it's still about $60 cheaper. Well placed.

chaosmassive: Been waiting for this card review. I saw the photographer's fingers in the reflection on the silicon, btw!

Onus: Once again, it appears that the relevance of a card is determined by its price (i.e. price/performance, not just performance). There are no bad cards, only bad prices. That it needs two 6-pin PCIe power connectors rather than the 8-pin plus 6-pin needed by the HD 7970 is, however, a step in the right direction.

FormatC (replying to "I saw photographer fingers on silicon"):

I know, these are my fingers and my wedding ring. :P

Call it a unique watermark. ;)

psycher1: Honestly, I'm getting a bit tired of people getting so over-enthusiastic about which resolutions their cards can handle. I barely think the 970 is good enough for 1080p.

With my 2560x1080 panel (to be fair, 33% more pixels) and a 970, I can almost never pull off maximum graphics settings in modern games, with The Witcher 3 only averaging about 35fps at medium-high according to GeForce Experience.

If this is already the case, give it a year or two. Future-proofing shouldn't mean you need to consider SLI after only six months and a minor display upgrade.

Eggz (replying to psycher1):

Yeah, I definitely think that the 980 Ti, Titan X, Fury X, and Fury Nano are the first cards that adequately exceed 1080p. No cards before those really let the user forget about graphics bottlenecks at a higher standard resolution. But even with those, 1440p is about the most you can do when your standards are that high. I consider my 780 Ti almost perfect for 1080p, though it does bottleneck here and there in 1080p games. Using 4K without graphics bottlenecks is a lot further out than people realize.


ByteManiak: Everyone is playing GTA V and The Witcher 3 in 4K at 30 fps, and I'm just sitting here struggling to get a TNT2 to run Descent 3 at 60 fps in 800x600 on a Pentium 3 machine.