AMD Radeon R9 380X Nitro Launch Review

Tonga’s been around for more than a year now, and it’s taken all this time for it to finally be available for regular desktop PCs. Before now, this configuration was exclusively offered for a different platform. But does it still make sense today?

Temperature Results

GPU Temperatures

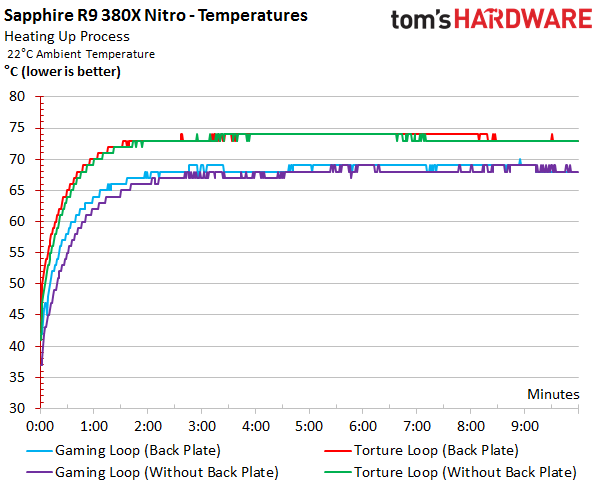

Since there’s a never-ending debate about whether backplates help or hurt thermal performance, we decided to test with and without one in place. As mentioned, Sapphire puts pads between the back of its PCB and the backplate, right behind the voltage regulation circuitry. It also indents the plate above that area.

We’ll soon see that this can help cool the voltage converters and the components around them. The GPU doesn’t see a direct benefit, but temperatures rise more slowly overall, since the VRM transfers less heat into the board itself.

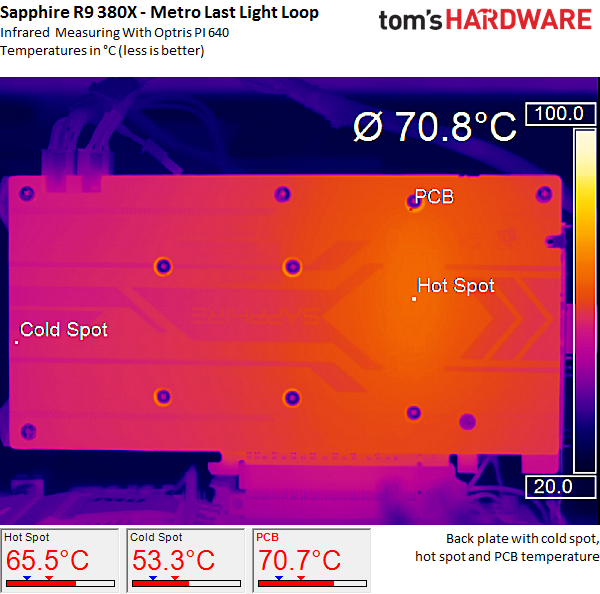

Let’s take another look at the back of AMD's Radeon R9 380X and its backplate to give the infrared images some context. The pictures illustrate what we just mentioned: the VRM is connected to the backplate via thermal pads, but the GPU does not enjoy any real advantage. This could have been done more effectively by cutting a hole for the thermal pads into the insulation foil, which spans the entire surface of the backplate.

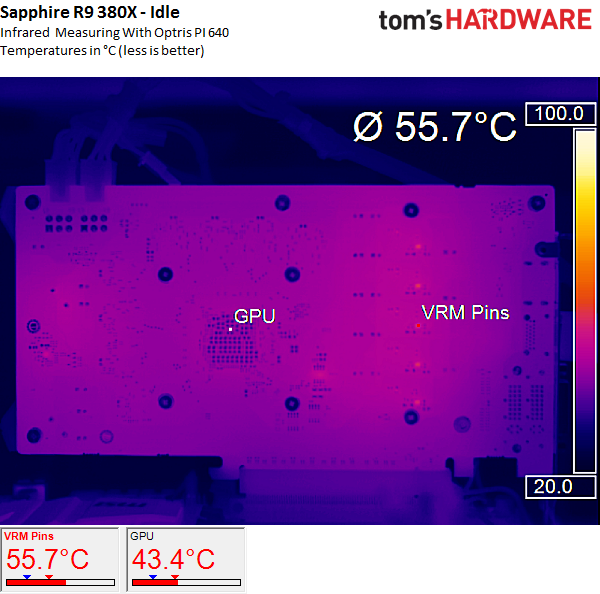

Idle Temperatures

First, we'll look at the Radeon R9 380X’s idle temperatures without its backplate. The GPU sits at approximately 43 degrees Celsius, since the card employs a semi-passive mode with its fans disabled. The VRM's temperature is fine as well.

Gaming Temperatures

During our gaming loop, the GPU's diode reports a lower temperature than we measure where the package interfaces with the PCB, because the diode sits closer to the heat sink; the PCB is actually warming the processor from behind. Adding thermal pads here would have been the logical choice. Since the card raises its fan speed until its temperature target is reached, any improvement would have resulted in a markedly cooler and quieter graphics card.

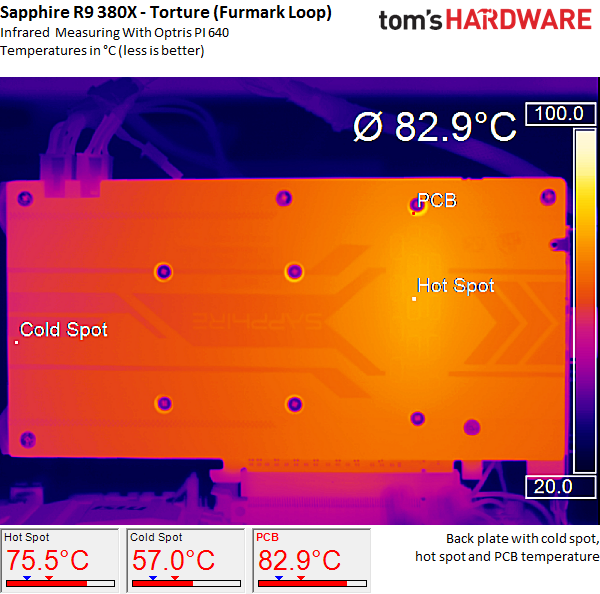

With the backplate in place, the temperature difference between the hottest and coldest part of AMD's PCA is 12 degrees Celsius. Without it, we measured the VRM's temperature at 93 degrees Celsius, whereas it’s now at 90 degrees Celsius in the same place, measured via a temperature probe inserted under the backplate. This slightly lower reading matters for the overall thermal picture: it shows that the cover provided by the backplate doesn’t drive up the GPU's temperature. A backplate usually does, because it makes it harder to dissipate the GPU’s waste heat.

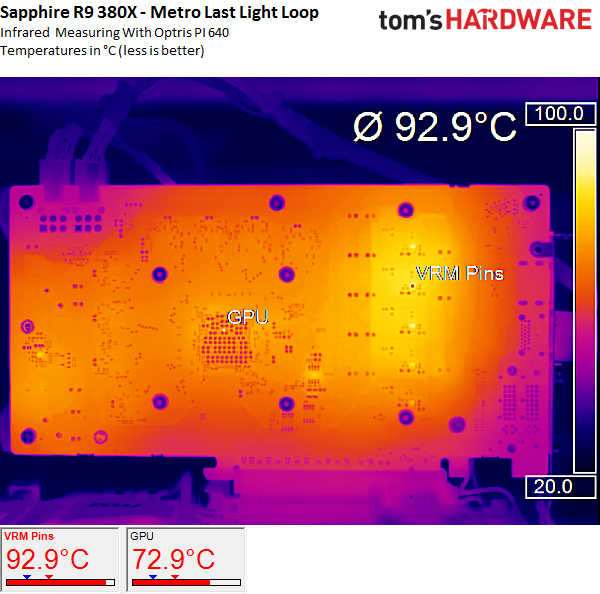

Full Load Temperatures

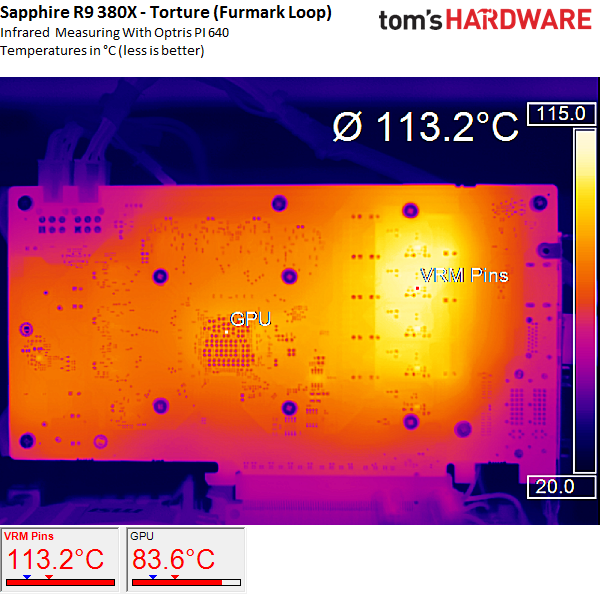

Now we see what happens when the VRM delivers more than 250W to the GPU, much of which is dissipated as waste heat. Without the backplate, we’re looking at temperatures in excess of 113 degrees Celsius. That's well beyond acceptable. Consequently, thermal energy spreads across the PCB and heats the GPU from the back. Almost 84 degrees Celsius where Tonga interfaces with the PCB is massive. At that point, the card is both hot and loud.


With the backplate attached, the VRM's temperature is significantly lower at 107 degrees Celsius. The difference between the hottest and coolest parts of the board is now 18 degrees. This proves that a backplate can do more than look pretty and keep the graphics card mechanically stable. It can also make a positive impact on thermals if only a few square inches of padding are thrown in.

The backplate-assisted VRM cooling has ramifications for the fans as well, since the graphics card adjusts fan speed to reach its GPU temperature target. Do higher temperatures from the back mean higher rotational speeds?

Temperatures Overview

A comparison between the results with and without the backplate shows that Sapphire does a great job with its cooling solution.

| Ambient Temperature: 22 °C | Open Bench Table, Gaming Loop | Open Bench Table, Torture | Closed Case, Gaming Loop | Closed Case, Torture | VRM Maximum (Torture) |
|---|---|---|---|---|---|
| Sapphire R9 380X Nitro (With Backplate) | 69 °C | 74 °C | 72-73 °C | 79 °C | 105 °C |
| Sapphire R9 380X Nitro (Without Backplate) | 69 °C | 73 °C | 73-74 °C | 80-81 °C | 113 °C |
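To put the overview table into plain numbers, here is a small Python sketch that computes how much the backplate changes each reading. The values are copied from the table; the scenario labels and the (low, high) encoding for ranges such as "72-73 °C" are our own illustrative choices, not anything from Sapphire or our test tooling.

```python
# Readings from the "Temperatures Overview" table (°C, 22 °C ambient).
# Ranges such as "72-73" are stored as (low, high); single values as (x, x).
WITH_BACKPLATE = {
    "Open bench, gaming loop": (69, 69),
    "Open bench, torture": (74, 74),
    "Closed case, gaming loop": (72, 73),
    "Closed case, torture": (79, 79),
    "VRM maximum (torture)": (105, 105),
}
WITHOUT_BACKPLATE = {
    "Open bench, gaming loop": (69, 69),
    "Open bench, torture": (73, 73),
    "Closed case, gaming loop": (73, 74),
    "Closed case, torture": (80, 81),
    "VRM maximum (torture)": (113, 113),
}


def backplate_delta(scenario):
    """Return the (min, max) change in °C caused by adding the backplate.

    Negative values mean the reading was cooler with the backplate attached.
    """
    w_lo, w_hi = WITH_BACKPLATE[scenario]
    n_lo, n_hi = WITHOUT_BACKPLATE[scenario]
    # Smallest and largest possible difference given the reported ranges.
    return (w_lo - n_hi, w_hi - n_lo)


if __name__ == "__main__":
    for scenario in WITH_BACKPLATE:
        lo, hi = backplate_delta(scenario)
        print(f"{scenario}: {lo:+d} to {hi:+d} °C")
```

Run as a script, it shows the backplate's clearest effect at the VRM (8 °C cooler under the torture test), while the GPU readings move by only a degree or two in either direction.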



Igor Wallossek wrote a wide variety of hardware articles for Tom's Hardware, with a strong focus on technical analysis and in-depth reviews. His contributions have spanned a broad spectrum of PC components, including GPUs, CPUs, workstations, and PC builds. His insightful articles provide readers with detailed knowledge to make informed decisions in the ever-evolving tech landscape.


ingtar33 so full tonga, release date 2015; matches full tahiti, release date 2011.

so why did they retire tahiti 7970/280x for this? 3 generations of gpus with the same rough number scheme and same performance is sorta sad.

Eggz Seems underwhelming until you read the price. Pretty good for only $230! It's not that much slower than the 970, but it's still about $60 cheaper. Well placed.

chaosmassive been waiting for this card review, I saw photographer fingers on silicon reflection btw!

Onus Once again, it appears that the relevance of a card is determined by its price (i.e. price/performance, not just performance). There are no bad cards, only bad prices. That it needs two 6-pin PCIe power connections rather than the 8-pin plus 6-pin needed by the HD7970 is, however, a step in the right direction.

FormatC (quoting chaosmassive): "I saw photographer fingers on silicon"

I know, these are my fingers and my wedding ring. :P

Call it a unique watermark. ;)

psycher1 Honestly I'm getting a bit tired of people getting so over-enthusiastic about which resolutions their cards can handle. I barely think the 970 is good enough for 1080p.

With my 2560*1080 (to be fair, 33% more pixels) panel and a 970, I can almost never pull off ultimate graphic settings out of modern games, with the Witcher 3 only averaging about 35fps while at medium-high according to GeForce Experience.

If this is already the case, give it a year or two. Future proofing does not mean you should need to consider sli after only 6 months and a minor display upgrade.

Eggz (replying to psycher1):

Yeah, I definitely think that the 980 ti, Titan X, FuryX, and Fury Nano are the first cards that adequately exceed 1080p. No cards before those really allow the user to forget about graphics bottlenecks at a higher standard resolution. But even with those, 1440p is about the most you can do when your standards are that high. I consider my 780 ti almost perfect for 1080p, though it does bottleneck here and there in 1080p games. Using 4K without graphics bottlenecks is a lot further out than people realize.


ByteManiak everyone is playing GTA V and Witcher 3 in 4K at 30 fps and i'm just sitting here struggling to get a TNT2 to run Descent 3 at 60 fps in 800x600 on a Pentium 3 machine