AMD Radeon R9 380X Nitro Launch Review

Tonga’s been around for more than a year now, and it’s taken all this time for it to finally be available for regular desktop PCs. Before now, this configuration was exclusively offered for a different platform. But does it still make sense today?

QHD (2560x1440) Gaming Results

AMD is making a lot of noise about the Radeon R9 380X being designed for 2560x1440. Consequently, the company can’t really blame us for running our benchmarks at QHD using the settings that PC gamers want to see. Since AMD also says its Radeon R9 380 is a true FHD-oriented graphics card, we need to examine how these claims hold up in light of the six to ten percent delta between the two cards.
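To put those percentages in context, here is a minimal Python sketch of how a lead like this can be derived from two average frame rates, and of how many more pixels QHD asks a card to render than FHD. The function names and the sample frame rates are our own illustrative assumptions, not measurements from this review.

```python
def relative_lead_percent(fps_fast: float, fps_slow: float) -> float:
    """Return how much faster fps_fast is than fps_slow, in percent."""
    if fps_slow <= 0:
        raise ValueError("fps_slow must be positive")
    return (fps_fast / fps_slow - 1.0) * 100.0


def pixel_ratio(res_a: tuple[int, int], res_b: tuple[int, int]) -> float:
    """Return the pixel count of res_a divided by the pixel count of res_b."""
    return (res_a[0] * res_a[1]) / (res_b[0] * res_b[1])


FHD = (1920, 1080)
QHD = (2560, 1440)

# Hypothetical average frame rates, chosen only to illustrate the arithmetic.
lead = relative_lead_percent(43.6, 40.0)
print(f"Lead: {lead:.1f} percent")
print(f"QHD renders {pixel_ratio(QHD, FHD):.2f}x the pixels of FHD")
```

QHD works out to roughly 78 percent more pixels per frame than FHD, which is why a card that is comfortable at 1920x1080 can struggle at 2560x1440.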

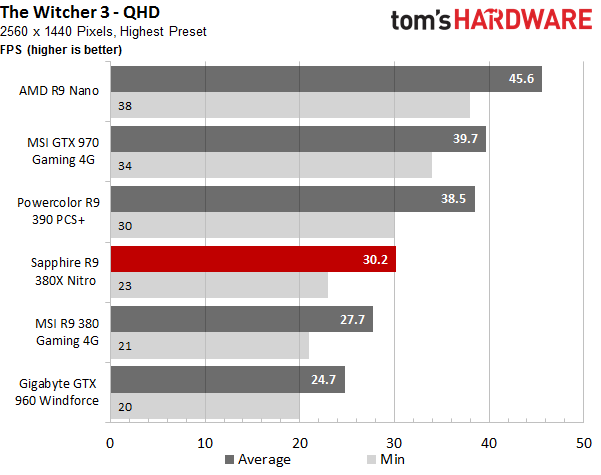

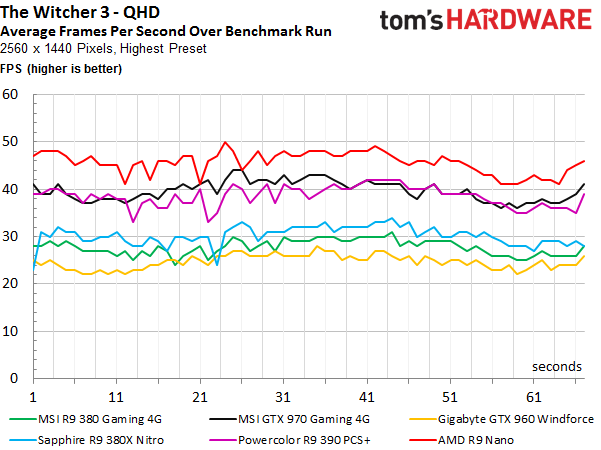

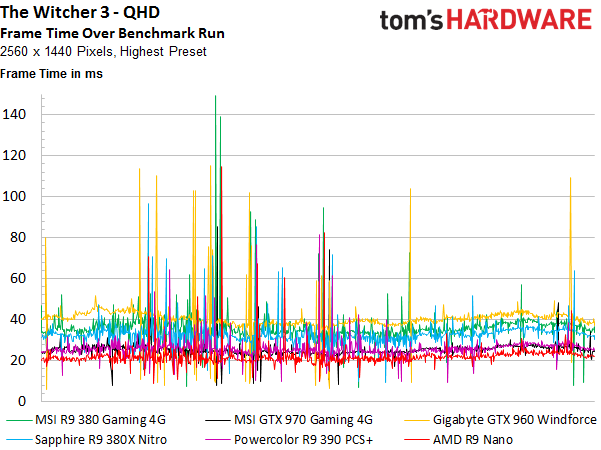

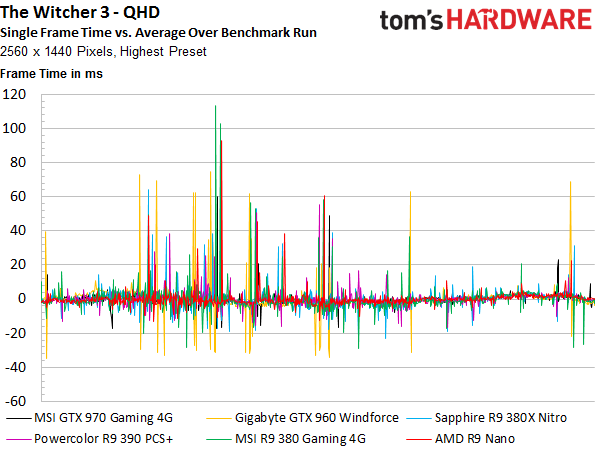

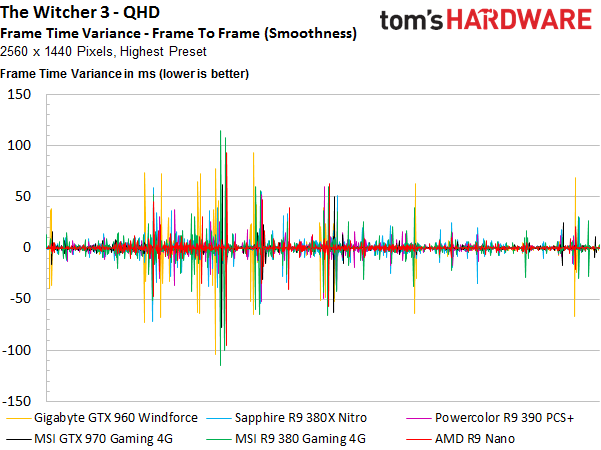

The Witcher 3: Wild Hunt

The difference between AMD’s Radeon R9 380X and 380 is nine percent in this benchmark at QHD. That's up from six percent at FHD. The game certainly isn’t playable using either graphics card without significantly lowering some of the settings, though.

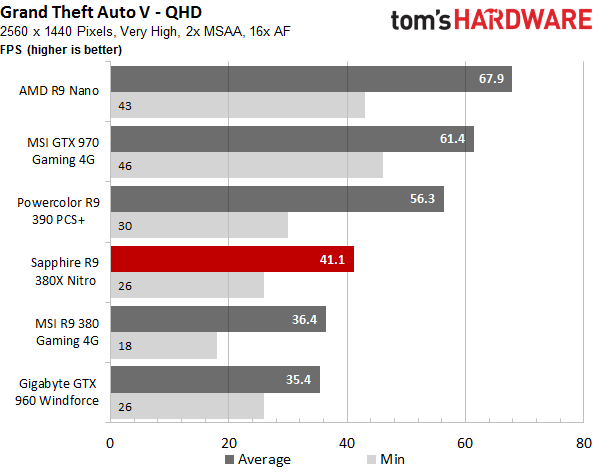

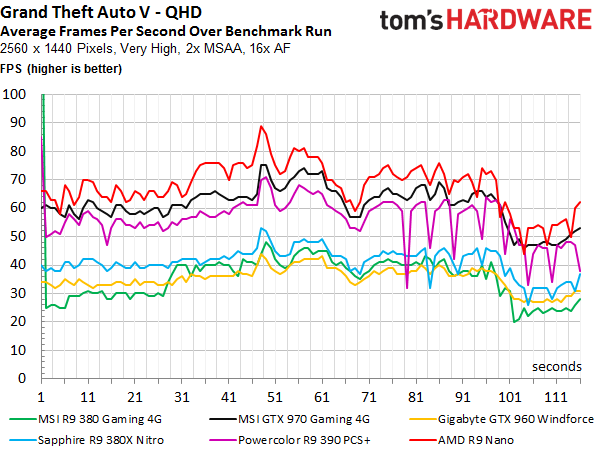

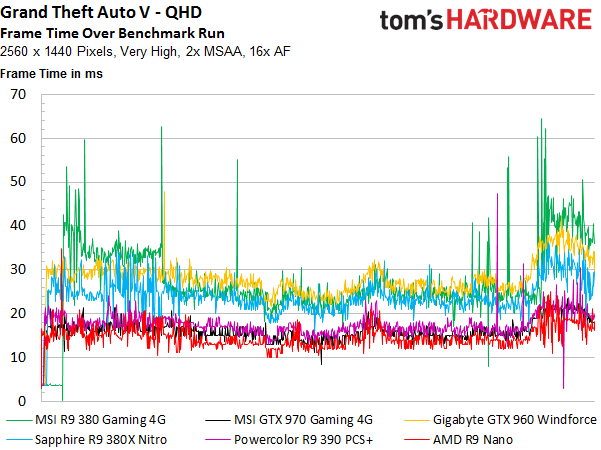

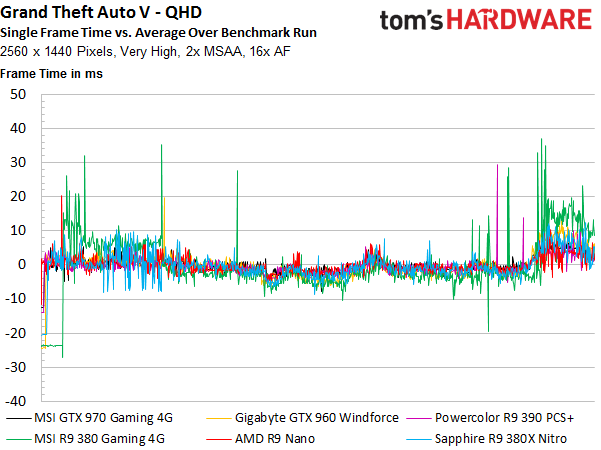

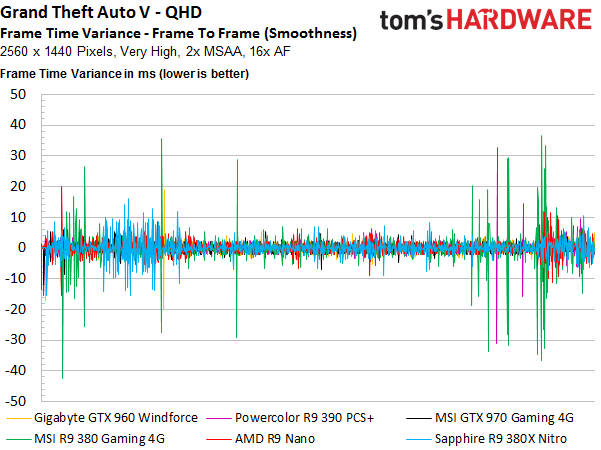

Grand Theft Auto V

Again, the Radeon R9 380X’s advantage over the 380 increases at the higher resolution. This time it rises from five to 11 percent. Forty FPS isn’t a great result, but it’s still considered playable. Sixty FPS could be achieved with a few lowered graphics settings.
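The gap between 40 and 60 FPS is easier to appreciate as time per frame. The following is a minimal sketch that assumes a perfectly steady frame rate, which real games never deliver; the helper name is our own.

```python
def frame_time_ms(fps: float) -> float:
    """Convert an average frame rate into the average time per frame in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


# Compare the frame budget at the two frame rates discussed above.
for fps in (40, 60):
    print(f"{fps} FPS -> {frame_time_ms(fps):.1f} ms per frame")
```

Going from 40 to 60 FPS means trimming the average frame from 25 ms to under 17 ms, so the card has to shed about a third of its per-frame work.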

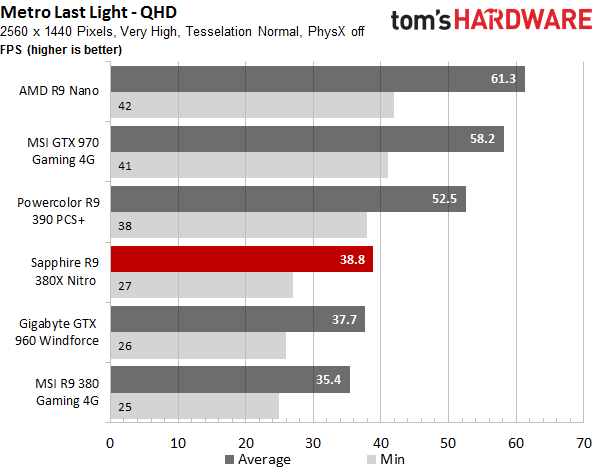

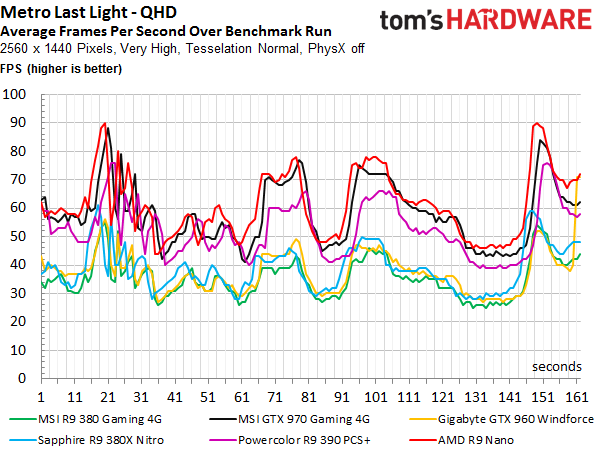

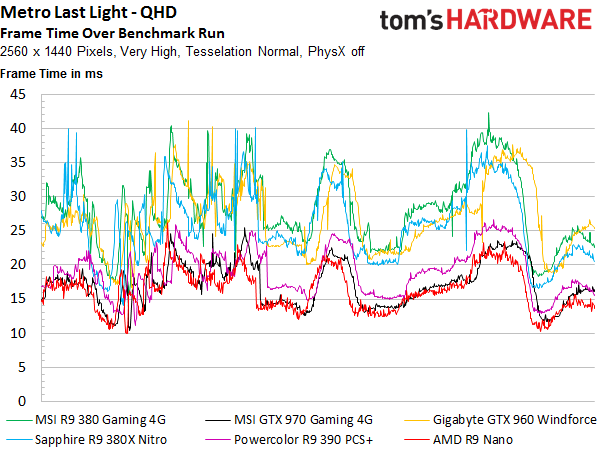

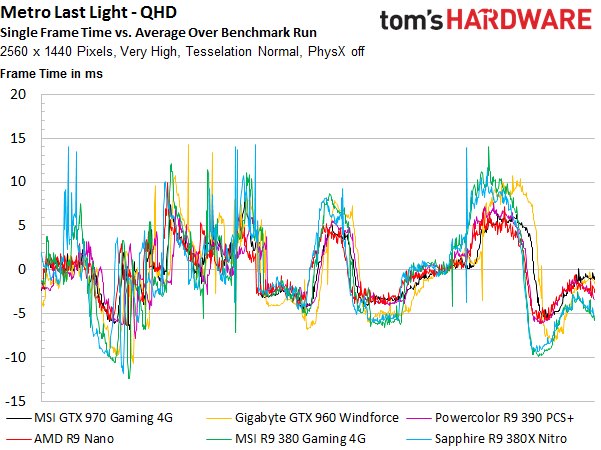

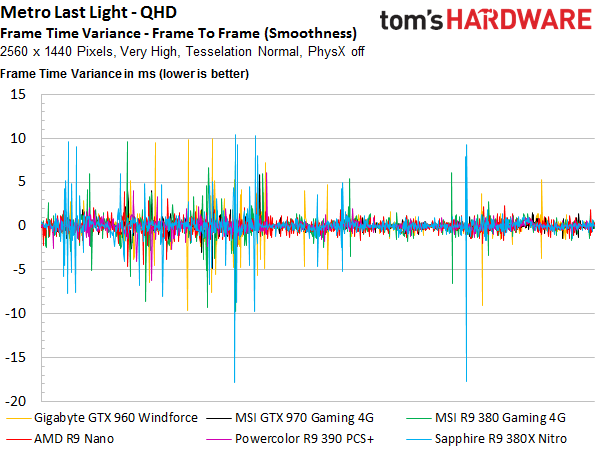

Metro: Last Light

AMD’s new graphics card manages to hold onto the nine percent lead over the 380 that we saw at 1920x1080. We’re puzzled again by the fact that the theoretically slower Nvidia GeForce GTX 960 is able to keep up and finish right between AMD’s two similar boards. Without tessellation, AMD’s offerings would likely come out ahead, though.

Then again, the exact order doesn’t really matter since none of these cards provide a smooth gaming experience. At this resolution, you'd want to use the Medium graphics preset and a dialed-back tessellation setting to make AMD's Radeon R9 380X more playable.

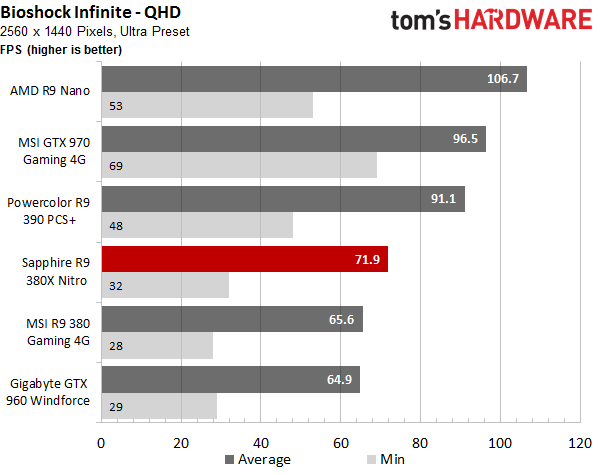

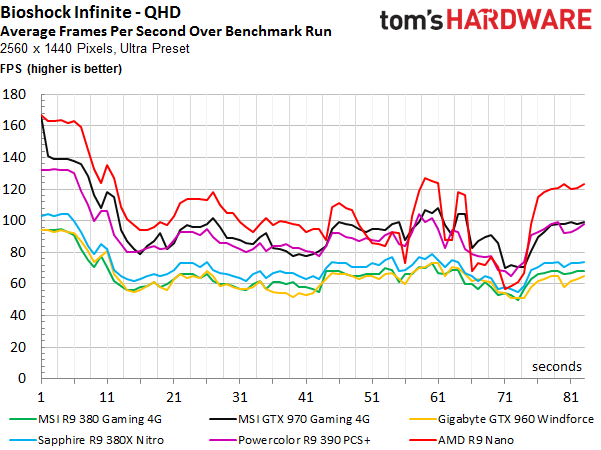

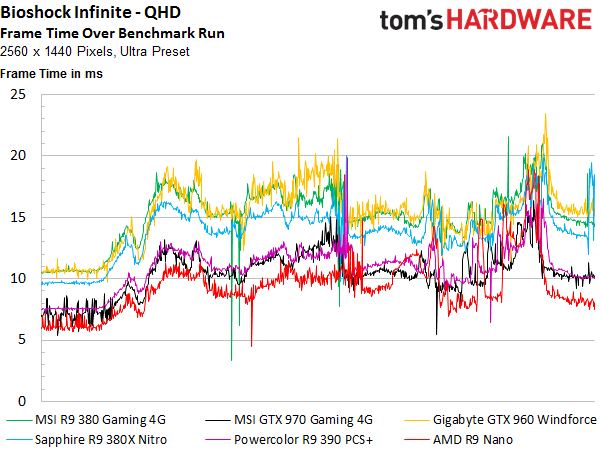

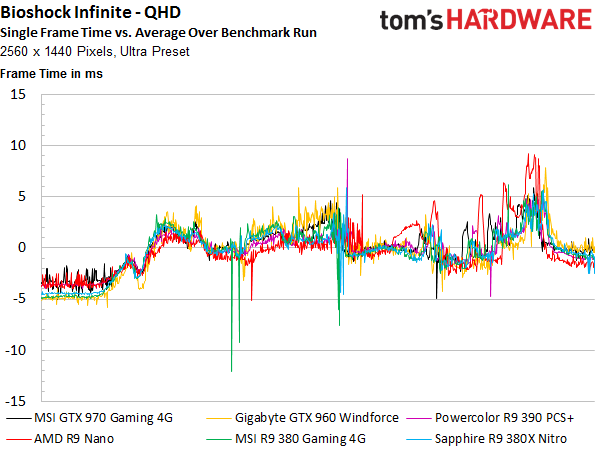

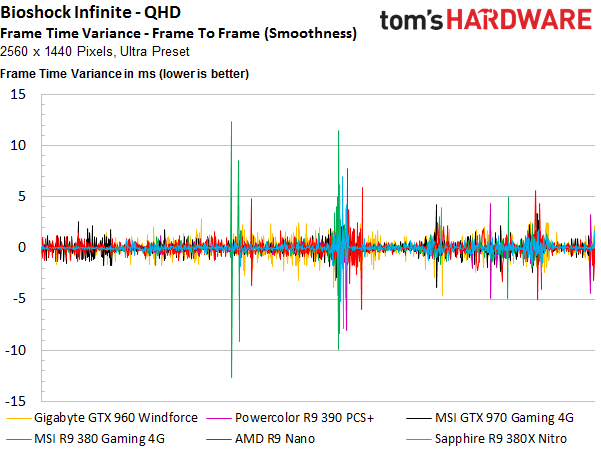

BioShock Infinite

The Radeon R9 380X increases its lead over the 380 again, this time from six to nine percent. That still isn't noticeable when you actually play the game, though. Both graphics cards produce playable results, and the 380X’s frame times aren't appreciably better.

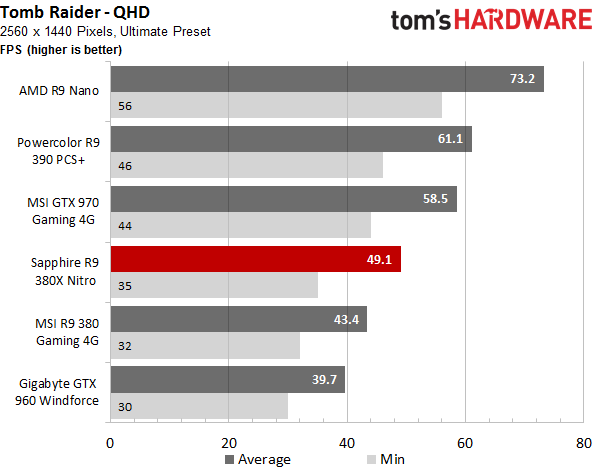

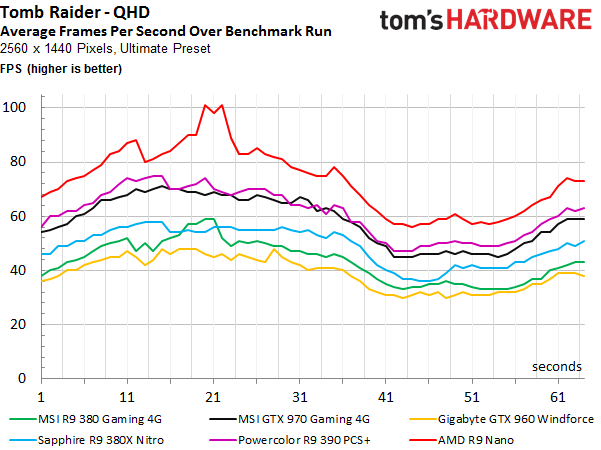

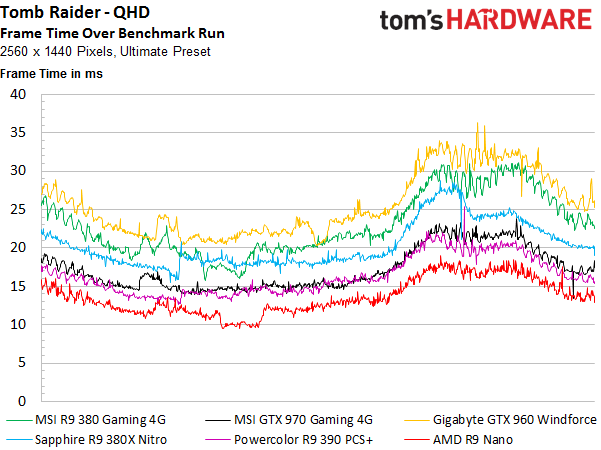

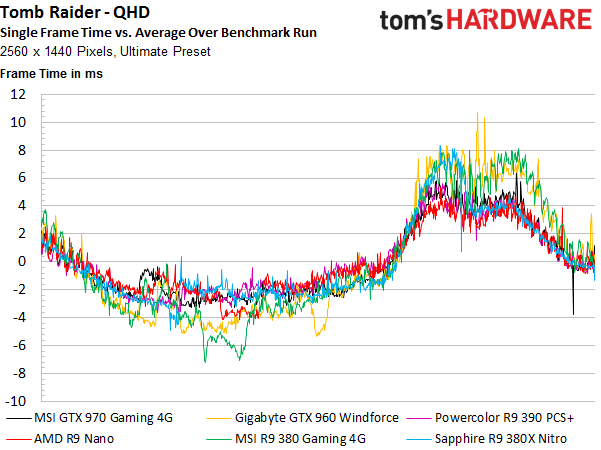

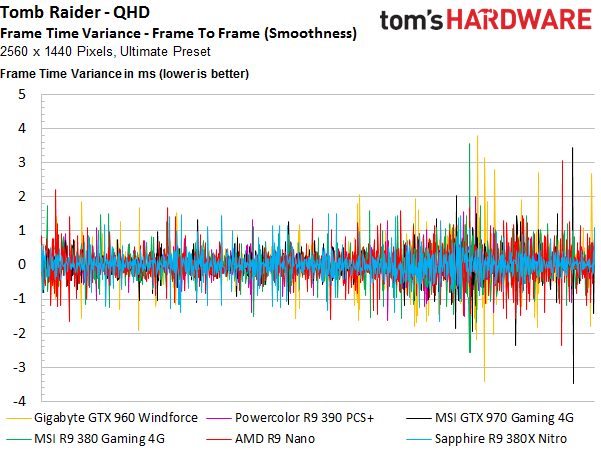

Tomb Raider

AMD's Radeon R9 380X is 13 percent faster than the X-less 380 in this benchmark at QHD, up from almost 10 percent at FHD. Tomb Raider also marks the first test where the two cards yield a noticeably different subjective experience.

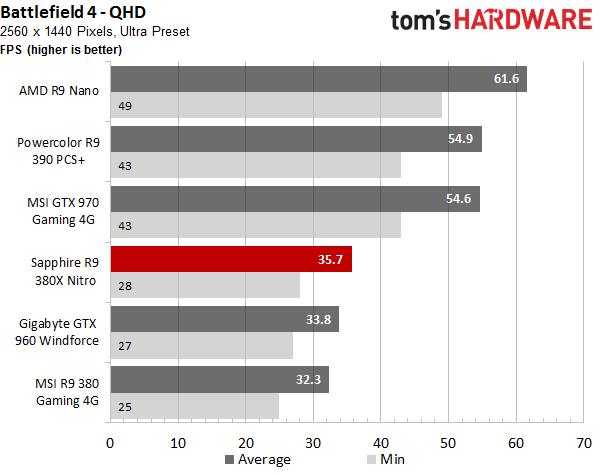

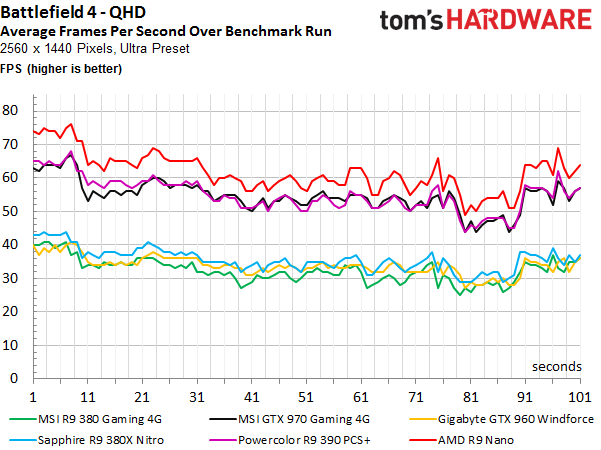

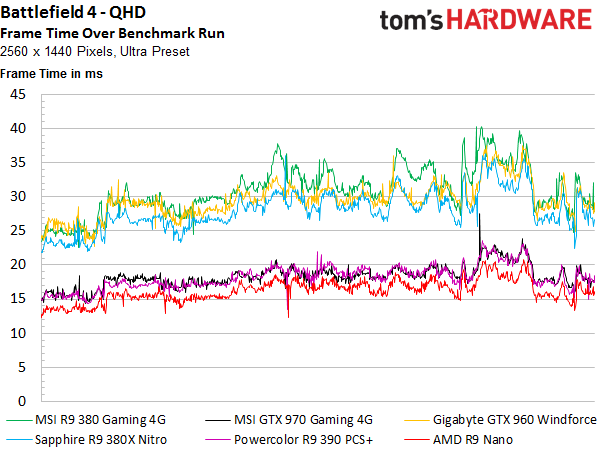

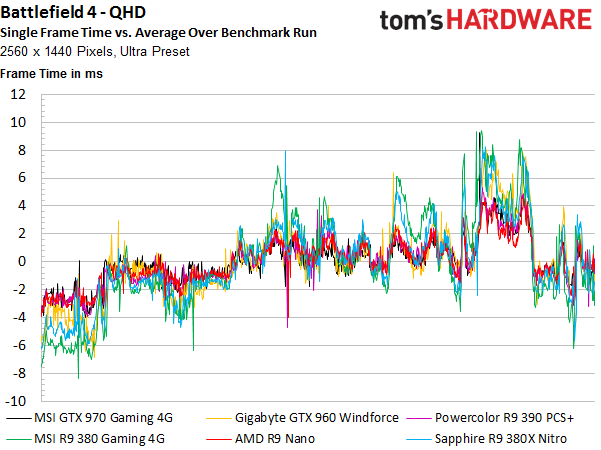

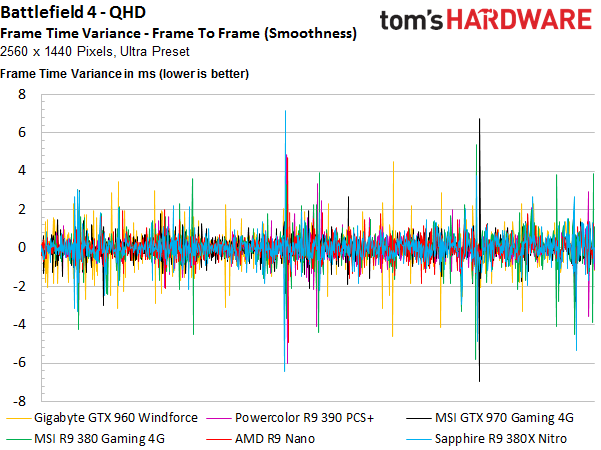

Battlefield 4 (Campaign)

Even though Nvidia’s GeForce GTX 960 beats the AMD Radeon R9 380 once again in Battlefield 4, the 380X beats it in turn to the tune of 10 percent. Unfortunately, none of the cards delivers playable frame rates. For that, you'd need to drop the quality preset a couple of notches.

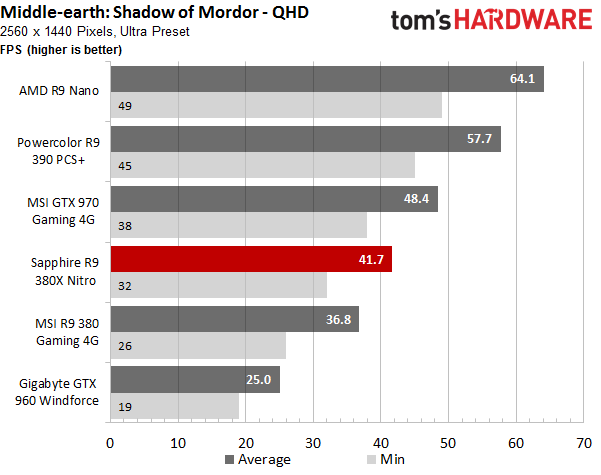

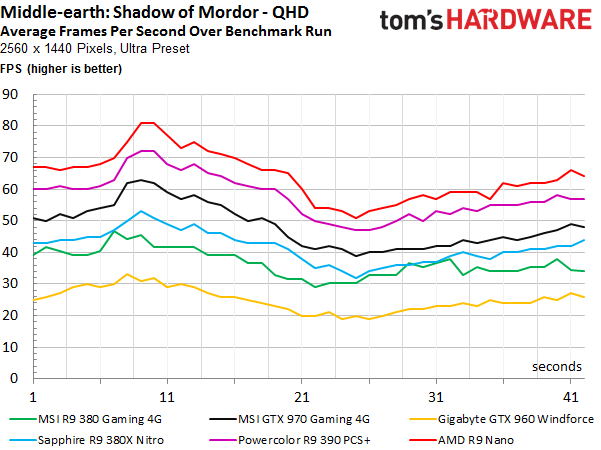

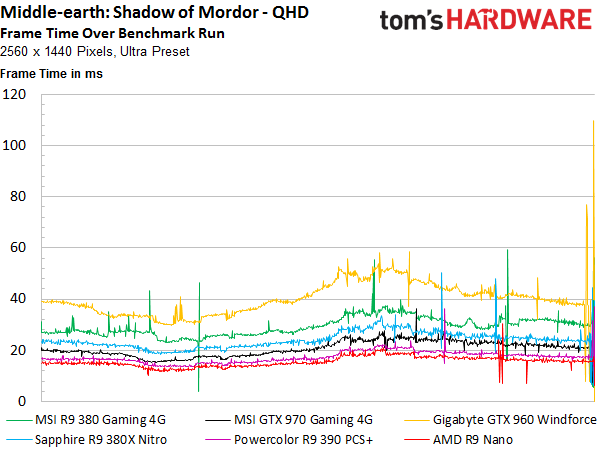

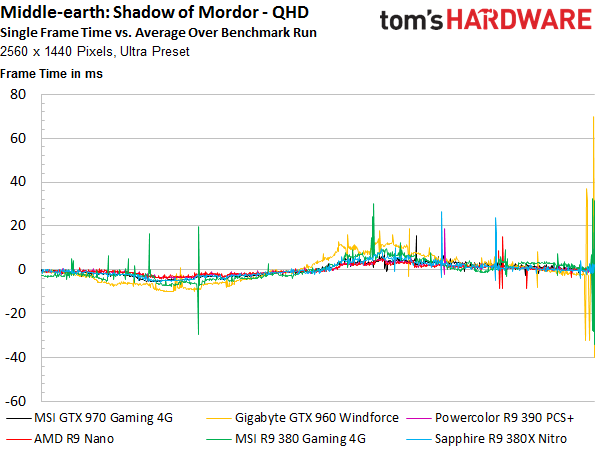

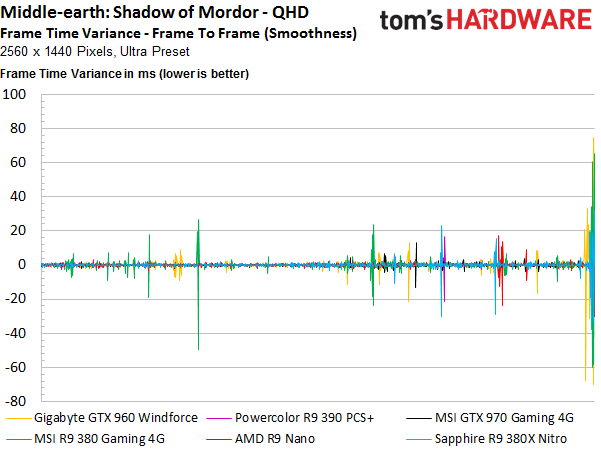

Middle-earth: Shadow of Mordor

This is the first time the Radeon R9 380X’s advantage over the 380 actually shrinks. It was 16 percent at Full HD, and now it's 13 percent at QHD. Again, you'd need to compromise graphics quality to make Middle-earth playable at 2560x1440.

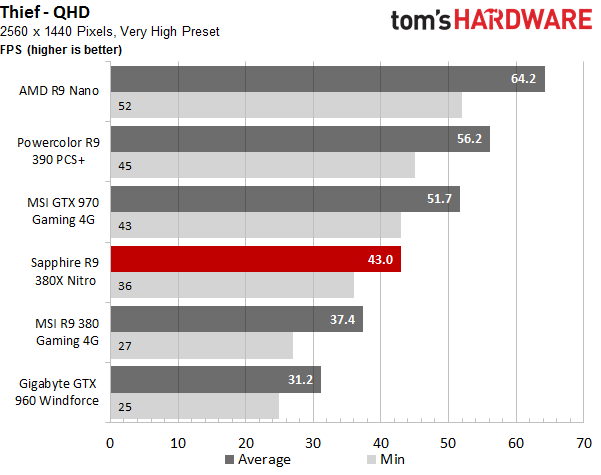

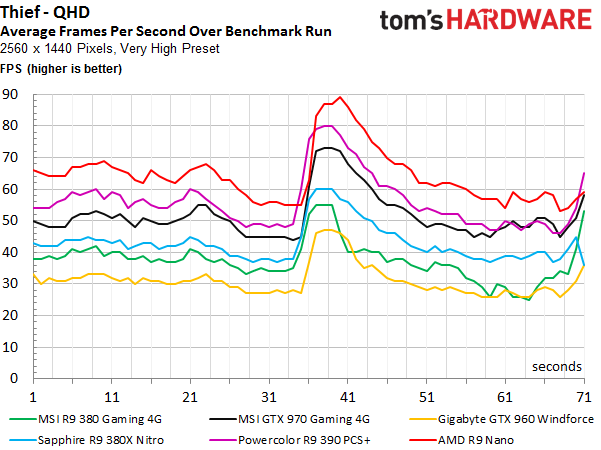

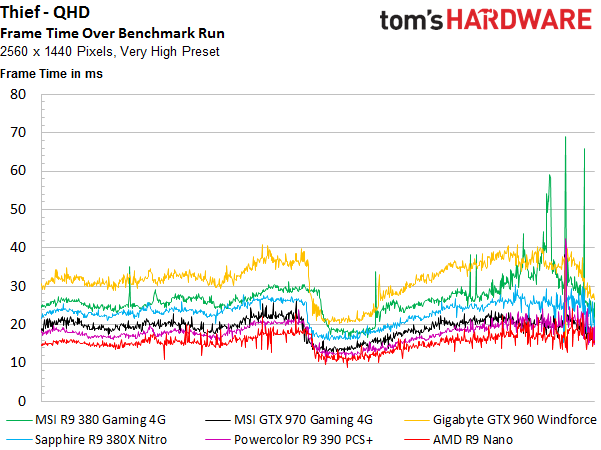

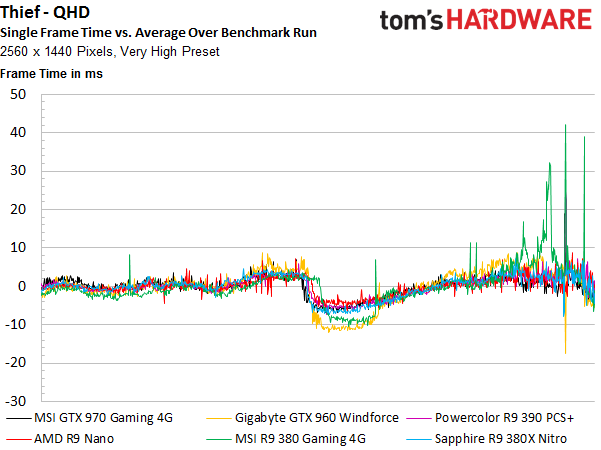

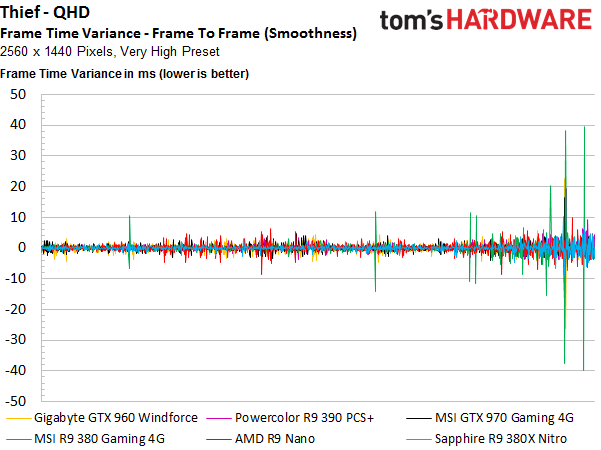

Thief

The Radeon R9 380X almost doubles its lead over the 380, from eight to 15 percent. The difference still isn’t all that noticeable during actual gameplay, though, which is generally very choppy. Lowered graphics settings would give every contender a much-needed boost, of course.

Nvidia’s GeForce GTX 960 doesn’t stand a chance, likely due to its 2GB of GDDR5. Unfortunately, we didn’t have a model on hand with more graphics memory.

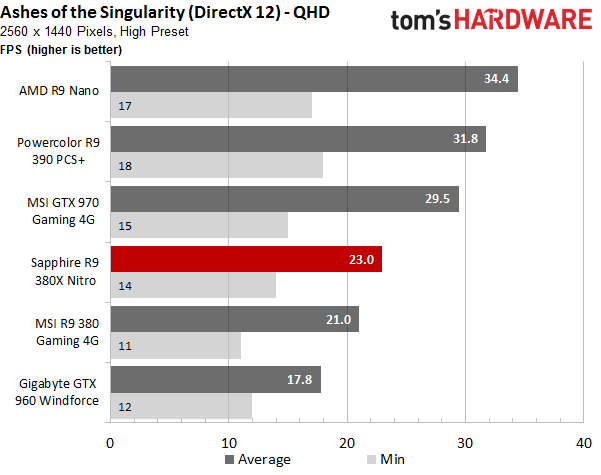

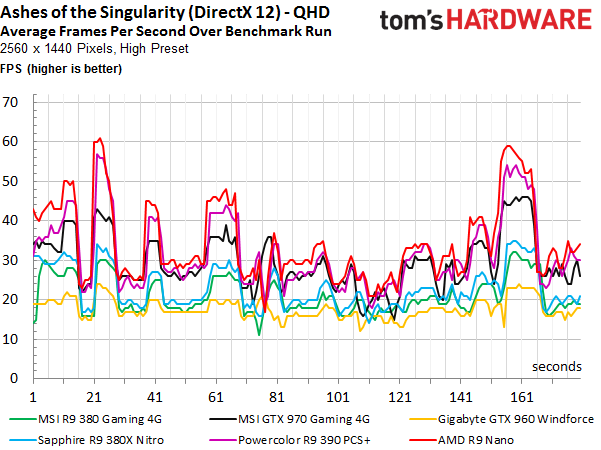

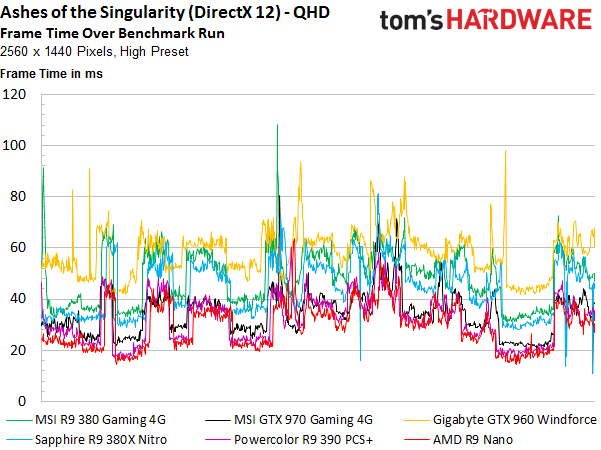

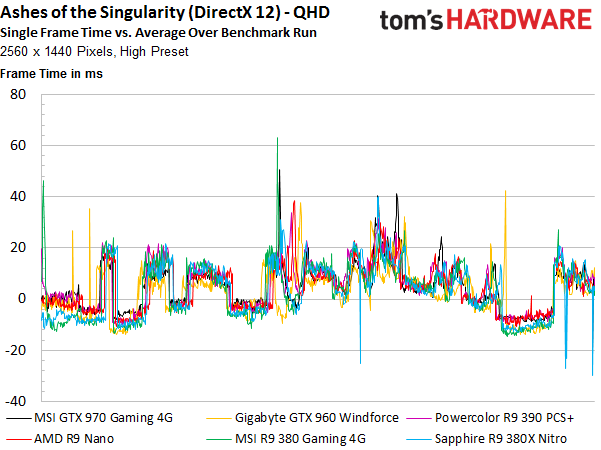

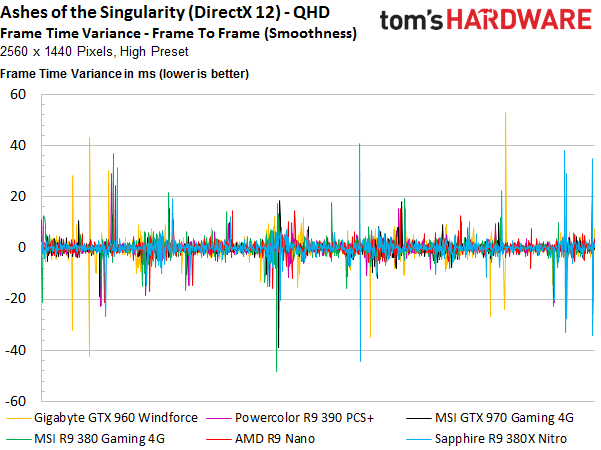

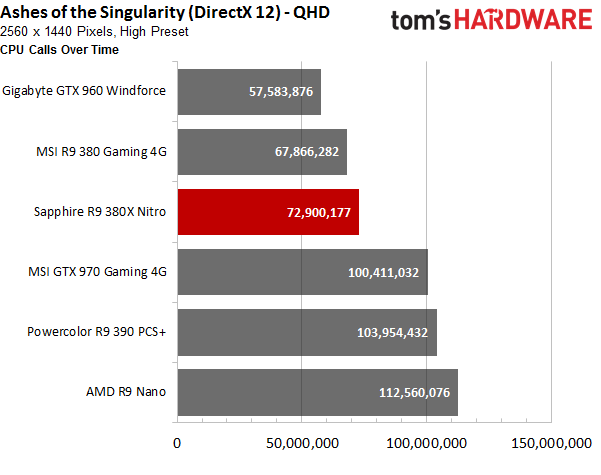

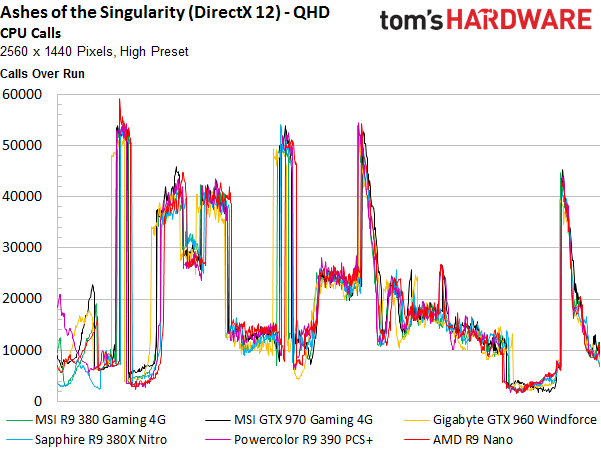

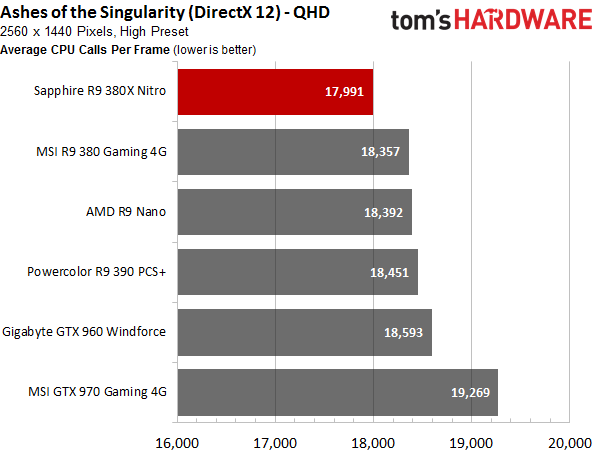

Ashes of the Singularity

We’re again looking at the render times of individual frames from the different views. The total rendering time tracks how demanding each benchmark scene is.

And again, we report the number of CPU calls, along with the ratio of rendered frames.

Bottom Line

We wouldn’t go so far as to call AMD's Radeon R9 380X unsuitable for 2560x1440, but there are certainly settings you won't get to enable if you try to make QHD happen. Maxed-out presets are simply too demanding. Drop the slider a few levels, though, and the new card should generally provide a playable experience.

The one problem we have with this situation is that you could say the same thing about AMD's cheaper Radeon R9 380. The gap between them is never large enough to matter in practice. Depending on the title and the graphics settings, the 380X might be a bit faster or a bit less slow, but that’s about it.

Igor Wallossek wrote a wide variety of hardware articles for Tom's Hardware, with a strong focus on technical analysis and in-depth reviews. His contributions have spanned a broad spectrum of PC components, including GPUs, CPUs, workstations, and PC builds. His insightful articles provide readers with detailed knowledge to make informed decisions in the ever-evolving tech landscape.

Comments

ingtar33 so full tonga, release date 2015; matches full tahiti, release date 2011.

so why did they retire tahiti 7970/280x for this? 3 generations of gpus with the same rough number scheme and same performance is sorta sad.

Eggz Seems underwhelming until you read the price. Pretty good for only $230! It's not that much slower than the 970, but it's still about $60 cheaper. Well placed.

chaosmassive been waiting for this card review, I saw photographer fingers on silicon reflection btw!

Onus Once again, it appears that the relevance of a card is determined by its price (i.e. price/performance, not just performance). There are no bad cards, only bad prices. That it needs two 6-pin PCIe power connections rather than the 8-pin plus 6-pin needed by the HD7970 is, however, a step in the right direction.

FormatC (quoting chaosmassive): I saw photographer fingers on silicon

I know, these are my fingers and my wedding ring. :P

Call it a unique watermark. ;)

psycher1 Honestly I'm getting a bit tired of people getting so over-enthusiastic about which resolutions their cards can handle. I barely think the 970 is good enough for 1080p.

With my 2560*1080 (to be fair, 33% more pixels) panel and a 970, I can almost never pull off ultimate graphic settings out of modern games, with the Witcher 3 only averaging about 35fps while at medium-high according to GeForce Experience.

If this is already the case, give it a year or two. Future proofing does not mean you should need to consider sli after only 6 months and a minor display upgrade.

Eggz, in reply to psycher1:

Yeah, I definitely think that the 980 ti, Titan X, FuryX, and Fury Nano are the first cards that adequately exceed 1080p. No cards before those really allow the user to forget about graphics bottlenecks at a higher standard resolution. But even with those, 1440p is about the most you can do when your standards are that high. I consider my 780 ti almost perfect for 1080p, though it does bottleneck here and there in 1080p games. Using 4K without graphics bottlenecks is a lot further out than people realize.

ByteManiak everyone is playing GTA V and Witcher 3 in 4K at 30 fps and i'm just sitting here struggling to get a TNT2 to run Descent 3 at 60 fps in 800x600 on a Pentium 3 machine