AMD Radeon R9 380X Nitro Launch Review

Tonga’s been around for more than a year now, and it’s taken all this time for the fully enabled GPU to finally reach regular desktop PCs. Before now, this configuration was exclusively offered for a different platform. But does it still make sense today?

Features & Overview

The “Nitro” moniker indicates that Sapphire is doing something different with this card's cooling solution. At first glance, everything looks consistent with the new generation of Nitro-branded cards.

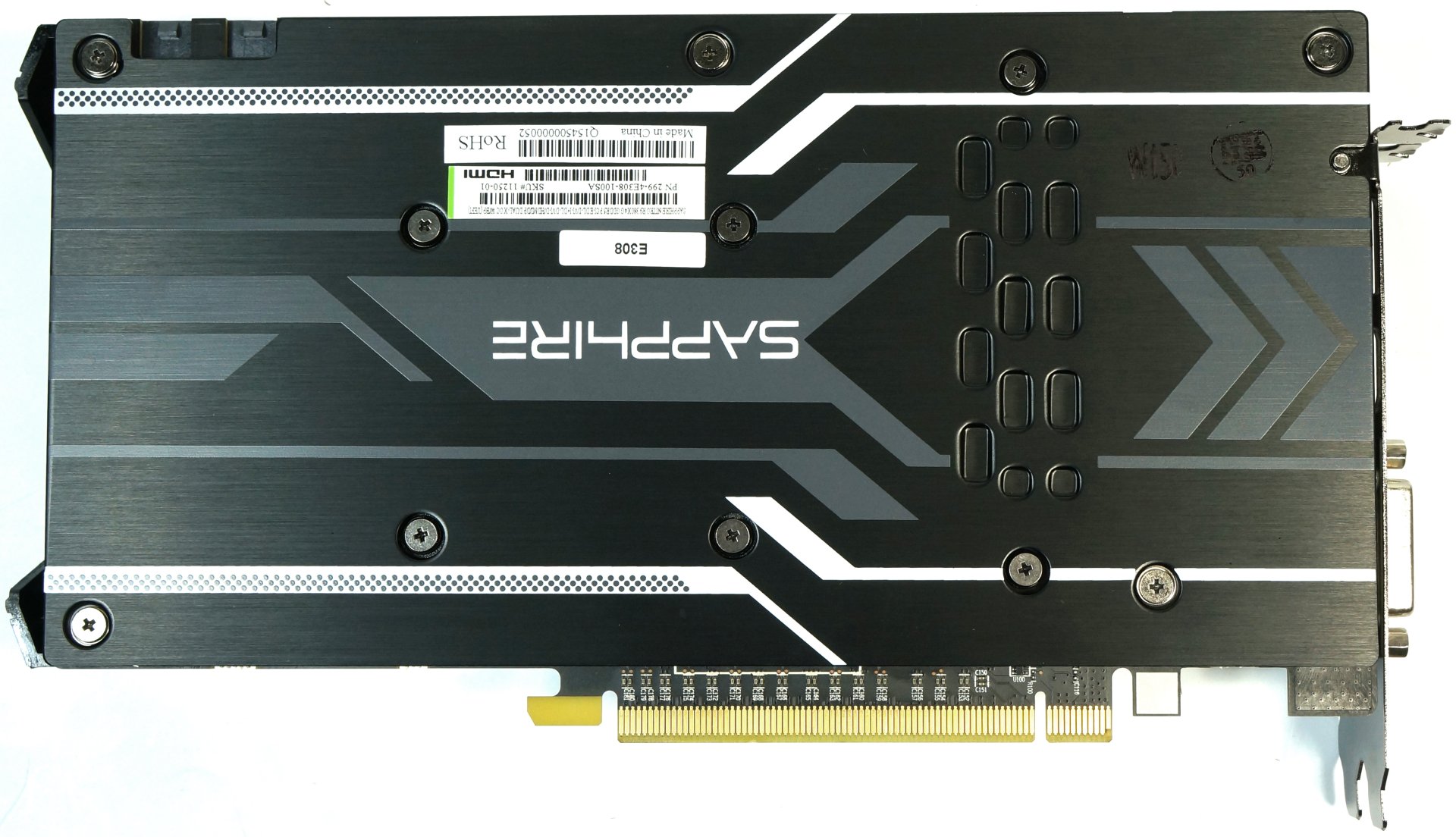

Let’s first turn the card all the way around and take a look at its backplate. It’s a sight to see: Sapphire forgoes any and all openings and vents, but noticeably indents certain areas of the plate above the voltage converters. You'll need to remove it if you want to know what's behind those sections.

With the plate set aside, we’re greeted by a sight for sore eyes: there are finally thermal pads between the backplate and the sections of PCB where the voltage regulation circuitry's pins exit. Whether this makes a difference in cooling performance, and how much, remains to be seen.

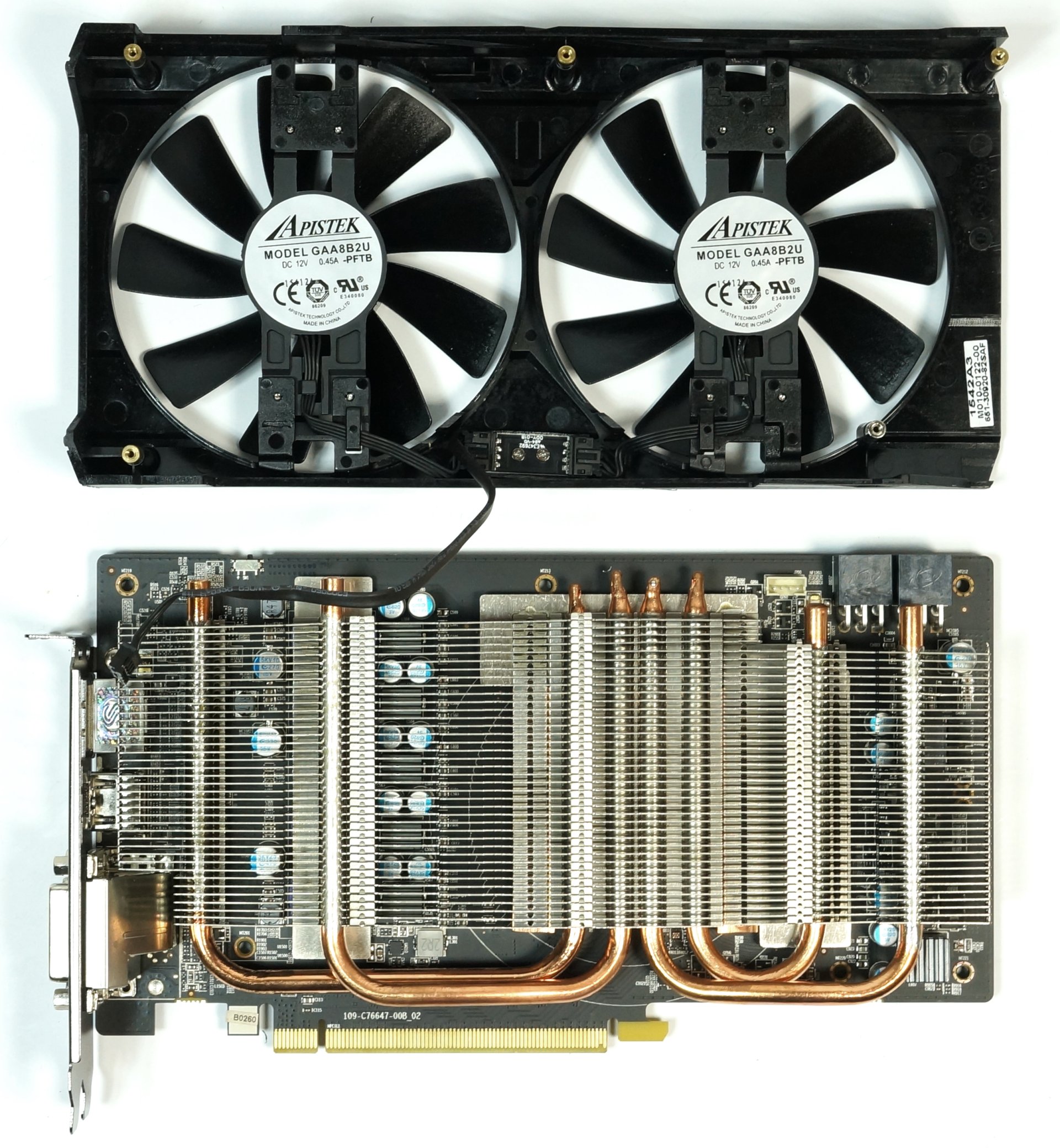

The two 10cm fans are made by Apistek. They max out at 2500 RPM, and under a full load, we measure six watts of overall power consumption between them.

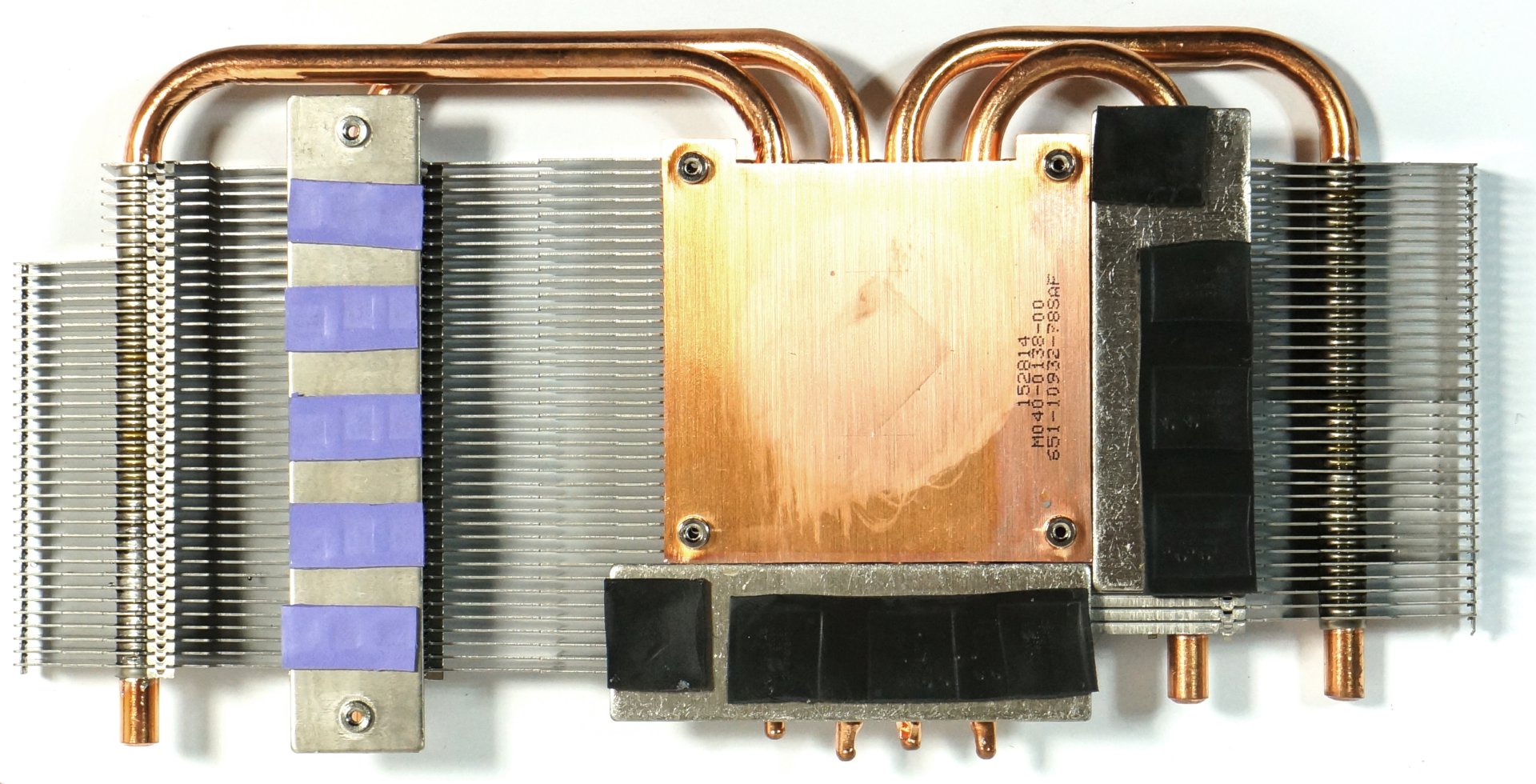

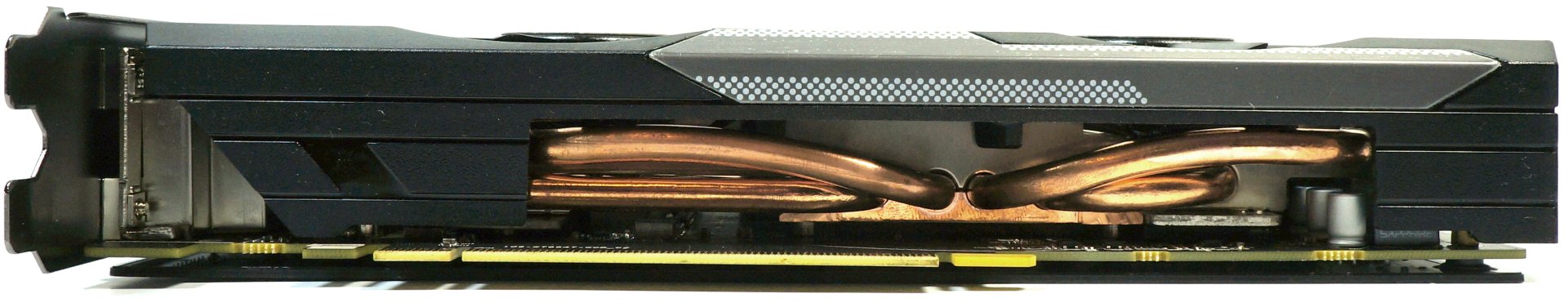

Fortunately, Sapphire uses a massive copper heat sink rather than cut-down heat pipes (DHT) set in an aluminum body. The sink spreads thermal energy evenly across the four 6mm heat pipes, which carry it to the horizontally-oriented cooling fins.

The voltage converters and memory modules don't make contact with the copper heat sink itself. Instead, they're linked directly to the cooler’s body through thermal pads and contact surfaces designed specifically for this purpose.

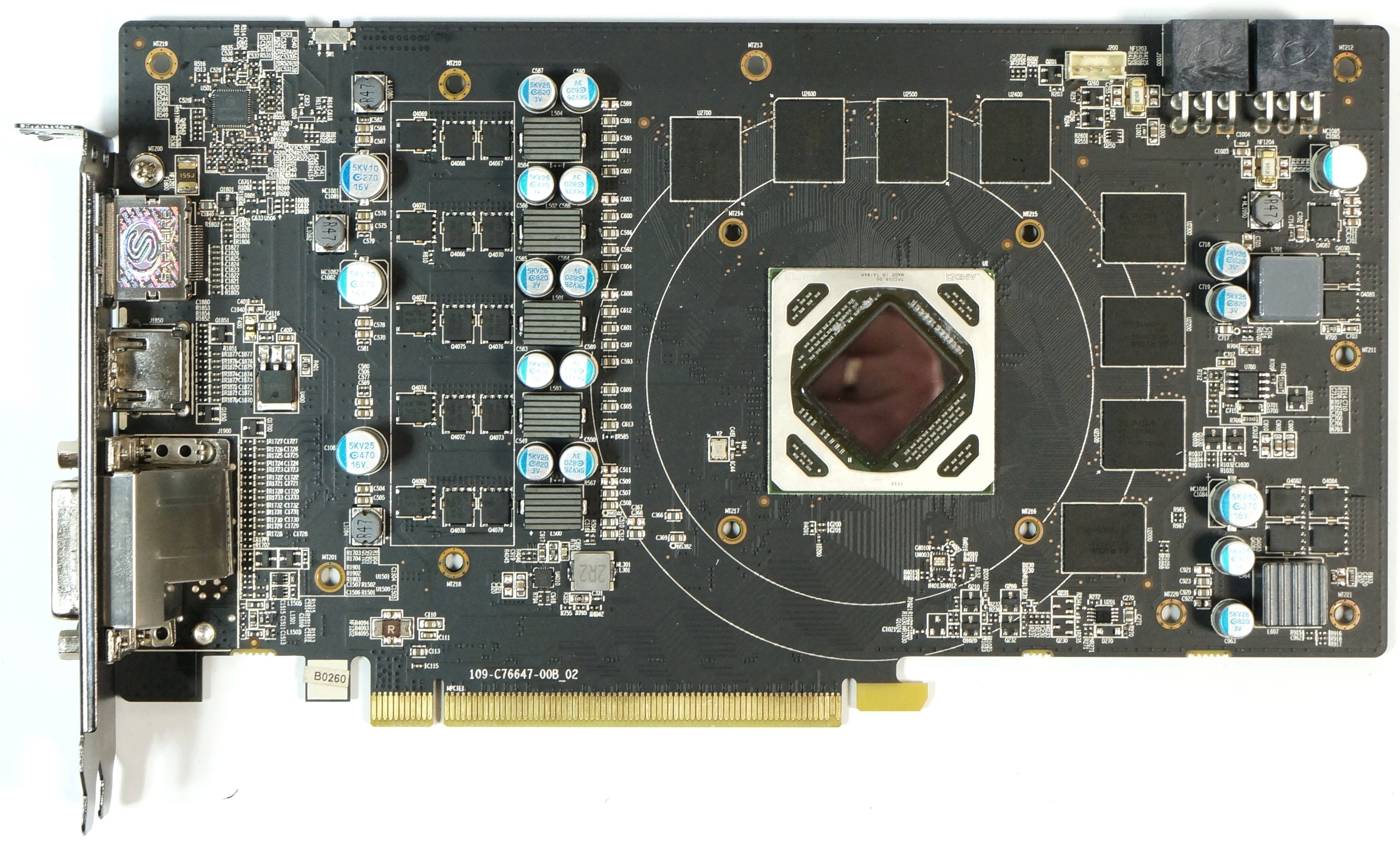

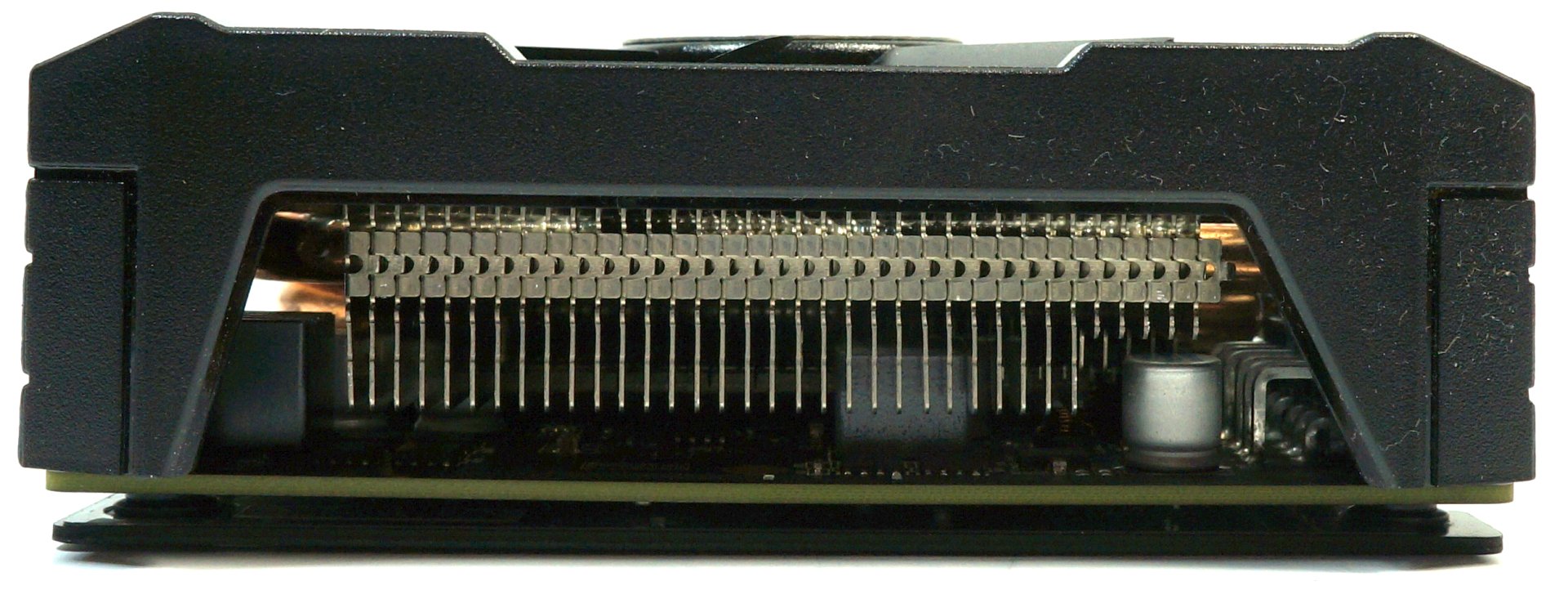

On the other side of the PCA, we find the five-phase VRM on the left. It needs to provide up to 250W during our stress test. In stark contrast, the memory's phase (at the bottom right) is barely taxed, peaking at 5W.


By the way, Sapphire utilizes much better coils than its corporate parent PC Partner uses on the Radeon Fury X and Nano. Overall, the voltage conversion design is excellent.

The heat pipes can be seen on the bottom. Their positioning allows the graphics card to stay relatively flat and its height isn’t increased unnecessarily.

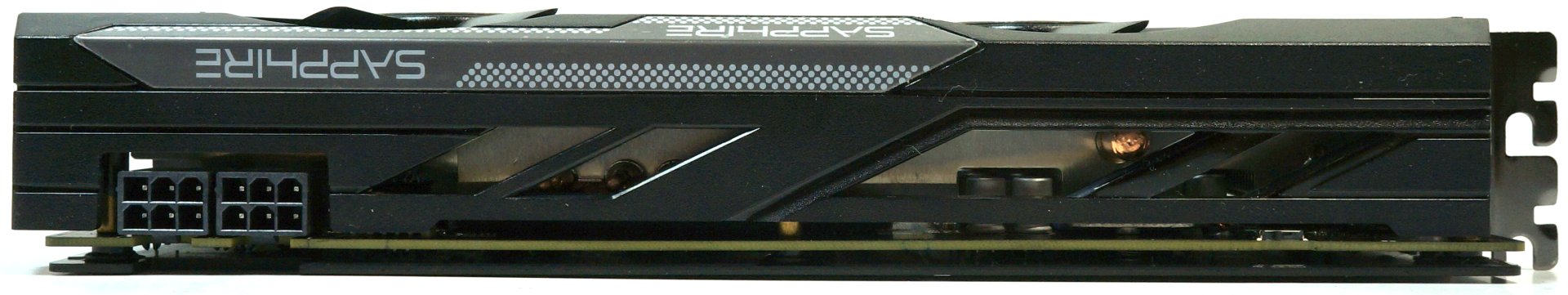

On the top, the only interesting features are the dual six-pin PCIe power connectors. The board is recessed right next to them, which gives away that these connectors are rotated 180 degrees. This should come in handy when installing the card, and it'll give the cooler some additional space to boot.

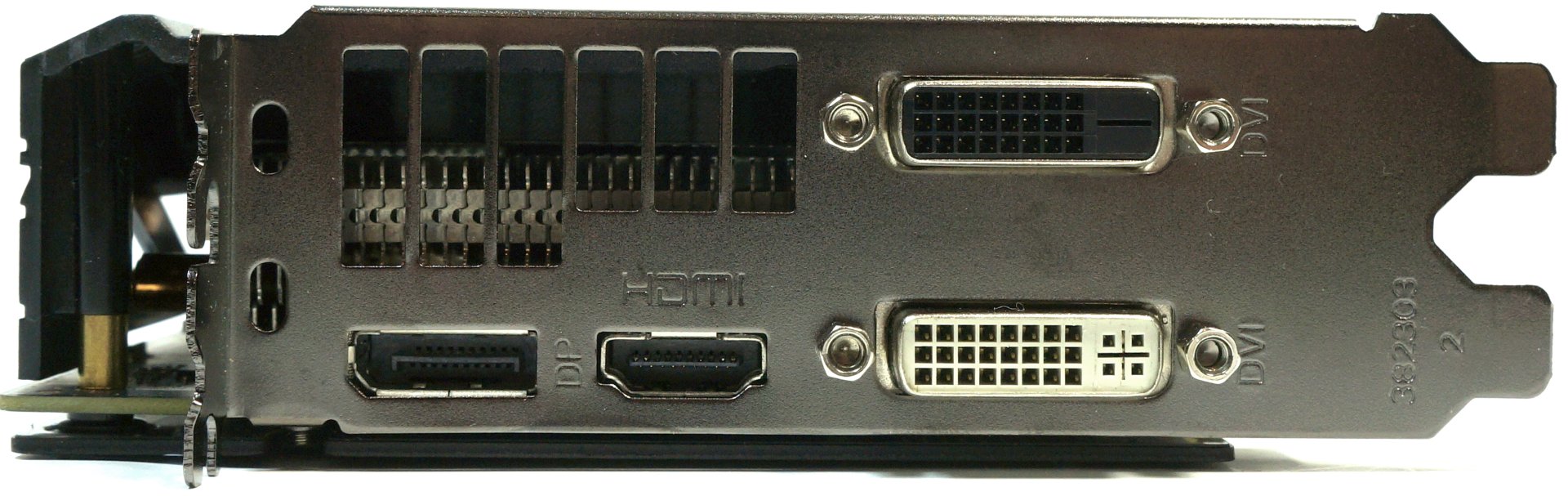
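As a rough sanity check on the dual six-pin layout described above, the nominal power budget can be tallied from the PCI Express limits of 75W for the slot, 75W per six-pin connector, and 150W per eight-pin connector. The sketch below is illustrative only: the function and constant names are hypothetical, and real cards can draw more or less than these nominal figures.

```python
# Nominal per-source power limits in watts (PCI Express slot and auxiliary connectors).
SLOT_W = 75
AUX_W = {"6-pin": 75, "8-pin": 150}


def board_power_budget(connectors):
    """Return the nominal power budget in watts: slot plus all auxiliary connectors.

    `connectors` is a list of connector names such as ["6-pin", "6-pin"].
    This helper is hypothetical and only adds up the nominal limits.
    """
    return SLOT_W + sum(AUX_W[name] for name in connectors)


# Dual six-pin layout, as on this card.
dual_six = board_power_budget(["6-pin", "6-pin"])

# Eight-pin plus six-pin layout, shown for comparison.
eight_plus_six = board_power_budget(["8-pin", "6-pin"])

print(dual_six, eight_plus_six)
```

With two six-pin connectors the nominal budget works out to 225W, while an eight-pin plus six-pin layout would allow 300W.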

Last but not least is the back of the card. Sapphire does manage to avoid blowing waste heat directly onto the motherboard by implementing horizontally-oriented cooling fins. That also means some hot air can exit the case through openings in the back, next to the connectors (though much of it recirculates into your chassis, which we don't care for).

The second DVI port could (and should) have been a DisplayPort connector instead. You do get one full-sized DP interface though, along with HDMI 1.4a output.

Igor Wallossek wrote a wide variety of hardware articles for Tom's Hardware, with a strong focus on technical analysis and in-depth reviews. His contributions have spanned a broad spectrum of PC components, including GPUs, CPUs, workstations, and PC builds. His insightful articles provide readers with detailed knowledge to make informed decisions in the ever-evolving tech landscape.


ingtar33: so full tonga, release date 2015; matches full tahiti, release date 2011.

so why did they retire tahiti 7970/280x for this? 3 generations of gpus with the same rough number scheme and same performance is sorta sad.

Eggz: Seems underwhelming until you read the price. Pretty good for only $230! It's not that much slower than the 970, but it's still about $60 cheaper. Well placed.

chaosmassive: been waiting for this card review, I saw photographer fingers on silicon reflection btw!

Onus: Once again, it appears that the relevance of a card is determined by its price (i.e. price/performance, not just performance). There are no bad cards, only bad prices. That it needs two 6-pin PCIe power connections rather than the 8-pin plus 6-pin needed by the HD7970 is, however, a step in the right direction.

FormatC (replying to "I saw photographer fingers on silicon"):

I know, these are my fingers and my wedding ring. :P

Call it a unique watermark. ;)

psycher1: Honestly I'm getting a bit tired of people getting so over-enthusiastic about which resolutions their cards can handle. I barely think the 970 is good enough for 1080p.

With my 2560*1080 (to be fair, 33% more pixels) panel and a 970, I can almost never pull off ultimate graphic settings out of modern games, with the Witcher 3 only averaging about 35fps while at medium-high according to GeForce Experience.

If this is already the case, give it a year or two. Future proofing does not mean you should need to consider sli after only 6 months and a minor display upgrade.

Eggz (replying to psycher1):

Yeah, I definitely think that the 980 ti, Titan X, FuryX, and Fury Nano are the first cards that adequately exceed 1080p. No cards before those really allow the user to forget about graphics bottlenecks at a higher standard resolution. But even with those, 1440p is about the most you can do when your standards are that high. I consider my 780 ti almost perfect for 1080p, though it does bottleneck here and there in 1080p games. Using 4K without graphics bottlenecks is a lot further out than people realize.

ByteManiak: everyone is playing GTA V and Witcher 3 in 4K at 30 fps and i'm just sitting here struggling to get a TNT2 to run Descent 3 at 60 fps in 800x600 on a Pentium 3 machine