In Theory: How Does Lynnfield's On-Die PCI Express Affect Gaming?

Benchmark Results: H.A.W.X.

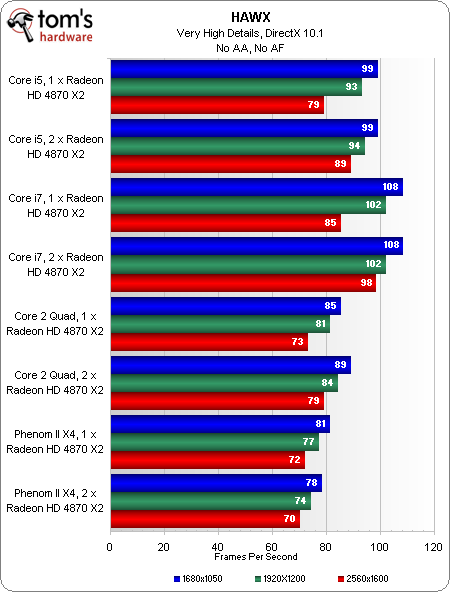

H.A.W.X. might be a sharp-looking sim with DirectX 10.1 optimizations, but it’s painfully apparent that without anti-aliasing turned on, 1680x1050 and 1920x1200 perform the same, regardless of whether you’re using one or two of ATI’s fastest video cards.

Stepping up to 2560x1600 does separate the field a bit, showing Core i7 in the lead (with one and two cards), and Core i5 besting Core 2 Quad in single- and dual-card environments, too.

Core i5 picks up a 12% performance increase with the addition of CrossFire. Core i7 picks up 15% higher frame rates. Core 2 Quad only gains 8%, while Phenom II actually loses 2% of its performance with the addition of a second graphics card.
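The scaling figures above are just the dual-card frame rate expressed relative to the single-card frame rate. Here's a minimal Python sketch of that arithmetic. The frame rates in it are made-up placeholders chosen to produce round numbers (the chart values aren't reproduced in the text), and the function name is ours for illustration.

```python
def crossfire_gain(single_fps: float, dual_fps: float) -> float:
    """Return the percent change in frame rate from adding a second card.

    Positive values mean the second card helped; negative values mean
    performance dropped with two cards installed.
    """
    if single_fps <= 0:
        raise ValueError("single_fps must be positive")
    return (dual_fps - single_fps) / single_fps * 100.0


# Hypothetical frame rates, NOT measurements from the charts.
examples = {
    "platform A": (100.0, 112.0),  # scales up with a second card
    "platform B": (100.0, 98.0),   # loses performance with a second card
}

for name, (single, dual) in examples.items():
    print(f"{name}: {crossfire_gain(single, dual):+.1f}%")
```

A negative result, like the one Phenom II posts here, means the overhead of driving a second card outweighs whatever extra rendering power it brings at that setting.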

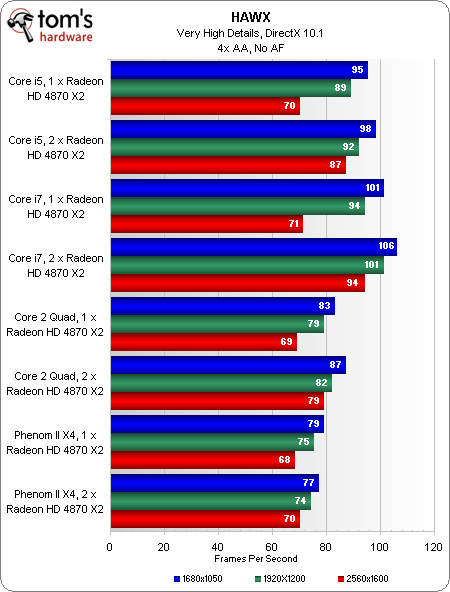

Once again, 1680x1050 and, to a somewhat lesser extent, 1920x1200 show the limitations of each system, regardless of how many graphics cards are used to try improving game performance. To that end, Core i7 asserts itself as the least-bottlenecked, followed by Core i5, Core 2 Quad, and Phenom II.

It takes stepping up to our highest tested resolution to show some benefit to using a pair of Radeon HD 4870 X2s. Core i5 picks up an impressive 24% additional performance. Core i7 trumps that with a 32% gain (likely thanks to the twin x16 PCIe links). Having its 16-lane link split in two holds our Core 2 Quad setup back to a 14% gain, while the same design limits Phenom II to a 3% boost.
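For a rough sense of what "twin x16" versus "x16 split in two" means in raw link bandwidth, here's a back-of-the-envelope sketch. It assumes every slot runs at PCI Express 2.0 signaling (5 GT/s per lane with 8b/10b encoding), and the function name is our own for illustration. These are theoretical per-direction ceilings, not measurements from our testing.

```python
PCIE2_GT_PER_SEC = 5.0          # PCIe 2.0 raw signaling rate per lane (assumed generation)
ENCODING_EFFICIENCY = 8 / 10    # 8b/10b encoding: 8 data bits per 10 bits on the wire
BITS_PER_BYTE = 8


def link_bandwidth_gb_per_sec(lanes: int) -> float:
    """Theoretical one-direction bandwidth of a PCIe 2.0 link, in GB/s."""
    if lanes <= 0:
        raise ValueError("lanes must be positive")
    return lanes * PCIE2_GT_PER_SEC * ENCODING_EFFICIENCY / BITS_PER_BYTE


# Per-card link width in the two dual-card layouts discussed above.
for label, lanes in (("x16 per card", 16), ("x8 per card", 8)):
    print(f"{label}: {link_bandwidth_gb_per_sec(lanes):.1f} GB/s each way")
```

Under those assumptions, each card on an x8 link has half the theoretical bandwidth of a card on an x16 link. Whether that ceiling is actually reached depends on the game and resolution, which is why the measured gains vary so much.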


bucifer I do not agree with the choices made in this article. You don't buy 2*4870x2 and then slam in an X4 920. The choices do not make sense.

You should have used the best CPU (e.g. i7 920 oc@4GHz) to try to eliminate all bottlenecks and truly emphasize the limitations of x8/x16 PCIe lanes.

The rest of the testing was done to include the new i5, which is not bad but not relevant for the bottleneck. I know many people would like to see how i5+P55 handles the GPU power, but it's a highly unlikely scenario that someone would actually buy such powerful and expensive cards and pair them with a cheaper CPU and a limited platform.

I just think you should have tested things separately in different articles.

radnor I know you used a 2.8GHz Deneb for clock-per-clock comparisons. Makes sense. But a 2.8GHz Deneb is something really not unlocked. Usually unlocked versions go 3.5GHz on stock VID; non-BE parts can reach 3.3GHz safely.

A 2.8 Deneb/Lynnfield/Bloomfield have completely different prices. You are comparing a R6 vs a R1. I7 is the Busa trouting everybody else. Of course the prices are very different.

cangelini Gents, if you want to see the non-academic comparisons, I have the 965 BE compared in two other pieces for more real-world comparisons!

http://www.tomshardware.com/reviews/intel-core-i5,2410.html

and

http://www.tomshardware.com/reviews/core-i5-gaming,2403.html

Thanks for the feedback notes!

bounty "Will Core i5 handicap you right out of the gate with multi-card configurations? The aforementioned gains evaporated in real-world games, where Core i7’s trended slightly higher, perhaps as a result of Hyper-Threading or its additional memory channel"

Well, you answered whether i5 will handicap you without hyperthreading, x8 by x8 and dual channel. It will, by 5-10%. If you wanted to narrow it down to memory channels, hyperthreading or the x8 by x8, you could have picked the game with the biggest spread and enabled each of those options selectively. Would have been kinda interesting to see which had the biggest impact.

Shnur Great article! But then again... I don't see why a 955 wasn't used in this scenario... since the 920 is a thing that nobody uses. Given that we already know i7 is superior to the AMD flagship in multi-GPU configurations, you're taking a crappy AMD CPU; buying a 790GX doesn't mean you're going to cut on the chip... and you're talking about who's performing better in 8x lanes... From my point of view it's a bad comparison, and there should have been a chip that would actually be able to show a difference between one card and two, and then between 16x and 8x.

And thanks for the other linked reviews, but I'm not talking about comparing the chips themselves. I'm trying to figure out: is 8x still good enough, or do I need to pay more for 16x?

cangelini Shnur,

Thanks much for the feedback--again, this wasn't meant to be about the CPUs, but the PCI Express links. If you want to know about the processors themselves at retail clocks, check out the gaming story, which does reflect x16/x16 and x8/x8 in the LGA 1366 and LGA 1156 configs.

Hope that helps!

Chris

Shadow703793 megabuster: "AMD better have something up its sleeves or it's instakill." lol! Do you mean instagib?

Joking aside, AMD needs something to counter this.