GeForce GTX 570 Review: Hitting $349 With Nvidia's GF110

A month ago, Nvidia launched its GeForce GTX 580, and it was everything we wanted back in March. Now the company is introducing the GeForce GTX 570, also based on its GF110. Is it fast enough to make us forget the GF100-based 400-series ever existed?

The BS Of Benchmarking

There is a lot of jockeying for position that goes on behind the scenes in the graphics business. This isn’t new; it’s been going on for years. Things really escalated ahead of the Radeon HD 6800-series launch, though. Nvidia was playing around with pricing and individual overclocked cards, AMD tried to discredit upcoming discounts on GeForce GTX 400-series boards, Nvidia pushed the HAWX 2 demo super hard before the game was available to play, and, apparently, AMD started making some changes to its drivers.

As a general rule, I try to remove myself from anything that doesn’t directly impact the way you and I both play games. After all, do the results of a game that hasn’t launched yet mean anything when they come from a demo? Does it matter if price cuts are temporary if you can realize the value of a discount today? So much of the stupid crap that goes on doesn’t matter, at the end of the day.

An Issue Of Image Quality

Well, a while back, Nvidia approached me about an optimization that had found its way into AMD’s driver. It demoted FP16 render targets to a lower-precision format, measurably improving performance. But after talking to both parties, I had a hard time getting too worked up, for three reasons: 1) the optimization affected a handful of games that nobody plays anymore; 2) identifying the quality difference required diffing the images, meaning the changes were so slight that you needed an application to compare per-pixel differences; and 3) most important, AMD offered a check box in its driver to disable Catalyst AI, the mechanism that enabled the surface format optimization.
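Diffing screenshots is simple in principle. The Python sketch below is only an illustration, not the tool we or Nvidia actually used. `diff_images` is a hypothetical helper, and it assumes two same-sized 8-bit RGB captures already loaded as nested lists of (R, G, B) tuples. It counts how many pixels changed and reports the largest per-channel difference, which is how a change invisible to the eye can still show up unambiguously.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # 8-bit R, G, B values
Image = List[List[Pixel]]     # rows of pixels


def diff_images(a: Image, b: Image, threshold: int = 0) -> Tuple[int, int, Image]:
    """Hypothetical helper: compare two same-sized screenshots pixel by pixel.

    Returns (number of changed pixels, largest per-channel delta, delta image).
    A pixel counts as changed when any channel differs by more than `threshold`.
    """
    if len(a) != len(b) or any(len(ra) != len(rb) for ra, rb in zip(a, b)):
        raise ValueError("screenshots must have identical dimensions")
    changed = 0
    max_delta = 0
    delta_image: Image = []
    for row_a, row_b in zip(a, b):
        delta_row = []
        for pa, pb in zip(row_a, row_b):
            # Absolute difference per channel for this pixel
            delta = tuple(abs(ca - cb) for ca, cb in zip(pa, pb))
            worst = max(delta)
            max_delta = max(max_delta, worst)
            if worst > threshold:
                changed += 1
            delta_row.append(delta)
        delta_image.append(delta_row)
    return changed, max_delta, delta_image


# Two tiny 2x2 "screenshots" that differ by one step in a single blue channel
ref = [[(120, 80, 40), (200, 200, 200)],
       [(10, 10, 10), (0, 0, 0)]]
opt = [[(120, 80, 41), (200, 200, 200)],
       [(10, 10, 10), (0, 0, 0)]]

changed, worst, _ = diff_images(ref, opt)
print(changed, worst)  # 1 1
```

A one-step change in one channel of one pixel is exactly the kind of difference nobody spots by eye but a diff reports immediately. Raising `threshold` filters out such tiny deltas, which is one way to separate noise from visible changes.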

Now, you could say that the principle of changing the image from what the developer intended, in and of itself, is reason to take up arms against AMD. Indeed, altering image quality is a slippery slope, and what I might find inoffensive might immediately be noticeable to you. That just didn’t seem like a battle worth fighting, though.

And We’re Sliding…

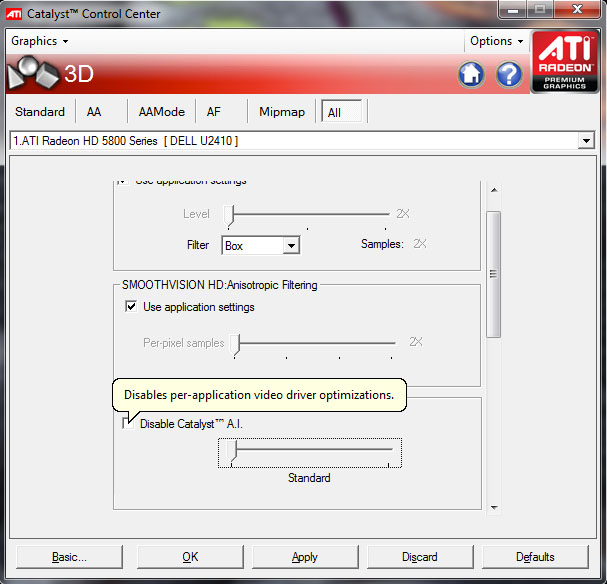

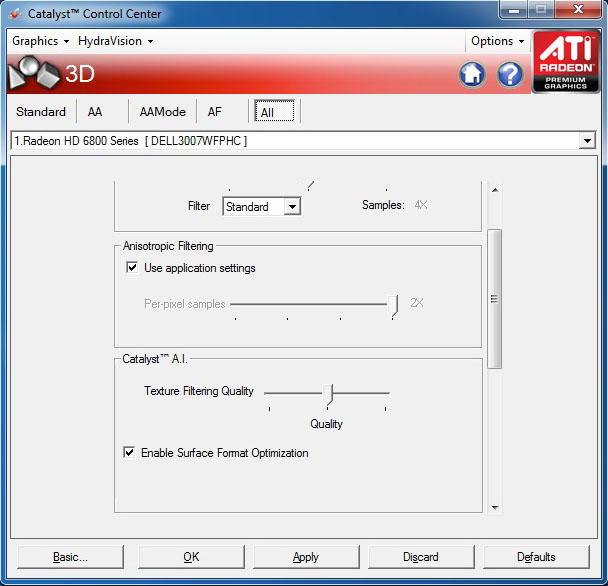

But maybe it was worth fighting, after all. In its most recent Catalyst 10.10e hotfix release, AMD removed the option to disable Catalyst AI. Instead, there’s now an option to turn that specific Surface Format Optimization on or off. The Catalyst AI texture filtering slider, however, only offers Performance, Quality, and High Quality positions, with no explanation of what each one tunes. Without the option to turn off Catalyst AI altogether, I was less comfortable with whatever optimizations AMD might make in the background to boost performance (this works itself out in the end; keep reading).


What’s more, the change’s timing was suspect. I didn’t have time to dig too far into Nvidia’s recent accusation that AMD is tinkering with texture filtering quality in its newest drivers, mostly impacting the Radeon HD 6800-series cards. However, a number of German Web sites announced that they’d disable Catalyst AI entirely for testing Radeon HD 5800-series cards in order to achieve comparable image quality. And then the option to disable Catalyst AI disappears entirely? Things that make you go hmmm…

Perhaps even that wouldn’t have been so bad. But then AMD held a conference call with the German Web sites that had been riding it hard over quality concerns first brought to light after the Radeon HD 6850 and 6870 launched. Fortunately, translating German is something we do pretty damn well (special thanks to Benjamin Kraft at Tom’s Hardware DE).

According to ht4u.net’s account of the meeting, the new High Quality setting in Catalyst AI purportedly disables all optimizations, much as the Disable Catalyst AI option did in the past, while the Quality option strikes a balance between performance and image quality as AMD sees it. In other words, the company says it is not purposely doing anything in software that would diminish image quality. We asked AMD for comment on the new slider, and it confirmed that setting High Quality does turn off optimizations, addressing the first concern we raised.

At the same time, though, AMD acknowledged to the German sites an image quality issue responsible for the shimmering they originally reported on 6800-series cards, even with High Quality selected. ht4u.net concluded that it would take a hardware revision to correct the issue. AMD tells us it can fix the problem via drivers, and that the fix will involve blurring the textures so they don’t shimmer.

Much Ado?

With all of that said, I went through seven different modern DirectX 11 titles looking for problem areas that would make for easy demonstrations and, despite knowing about the issues and squinting at a 30” screen, came away with very little that was conclusive in real-world play. These are subtle differences we’re talking about here.

Representatives at Nvidia did send over a handful of videos comparing the GeForce GTX 570 to the Radeon HD 5870 and Radeon HD 6870 in Crysis Warhead. In them, the shimmering is painfully clear. We were also directed to a multiplayer map in Battlefield: Bad Company 2 that would show it. But running around the single-player campaign didn’t turn up anything blatant.

Moving forward, I'm inclined to use AMD's High Quality Catalyst AI setting for graphics card reviews, if only to get the best possible filtering quality. Today, though, Nvidia will have to compete against the Quality slider position, since it sent its GeForce GTX 570 too late for us to retest everything we ran for the GeForce GTX 580 review less than a month ago. For the time being, we're leaving everything at default and pointing out the observed and confirmed image quality issue currently affecting Radeon HD 6800-series cards. This may or may not factor into your buying decision, but right now, the bottom line is that Nvidia offers better texture filtering, whether or not you’re one of the folks who can appreciate it.

Why do we care if the difference is so nuanced? Because we have to take any deviation in image quality seriously: we don’t want to see Nvidia lower its own texture filtering default to help boost performance (yes, Nvidia has its own set of filtering-based quality settings it could toy with as well). Hopefully, that sort of tit-for-tat isn’t on the table in Santa Clara.


thearm Grrrr... Every time I see these benchmarks, I'm hoping Nvidia has taken the lead. They'll come back. It's alllll a cycle.

xurwin At $350 and beating the 6850 in xfire? I could say this would be a pretty good deal, but why no 6870 in xfire? Still, with a narrow margin, and if you need CUDA, this would be a pretty sweet deal. I'd also wait for the 6900s, but for now, we have a winner?

sstym (quoting thearm): "Grrrr... Every time I see these benchmarks, I'm hoping Nvidia has taken the lead. They'll come back. It's alllll a cycle."

There is no need to root for either one. What you really want is a healthy and competitive Nvidia to drive prices down. With Intel shutting them out of the chipset market and AMD beating them on their own turf with the 5XXX cards, the future looked grim for Nvidia.

It looks like they've still got it, and that's what counts for consumers. Let's leave fanboyism to 12-year-old console owners.

nevertell It's disappointing to see the freaky power/temperature behavior of the card when using two different displays. I was planning on using a display setup similar to the one in the test; now I'm in doubt.

reggieray I always wonder why they use the overpriced Ultimate edition of Windows. I understand 64-bit because of memory; that is what I bought, but I purchased OEM Home Premium and saved some cash. For games, Ultimate adds no extra value.

Or am I missing something?

theholylancer Hmmm, more sexual innuendo today than usual. New GF there, Chris? :D

EDIT:

Love this gem:

Before we shift away from HAWX 2 and onto another bit of laboratory drama, let me just say that Ubisoft’s mechanism for playing this game is perhaps the most invasive I’ve ever seen. If you’re going to require your customers to log in to a service every time they play a game, at least make that service somewhat responsive. Waiting a minute to authenticate over a 24 Mb/s connection is ridiculous, as is waiting another 45 seconds once the game shuts down for a sync. Ubi’s own version of Steam, this is not.

When someone reviewing not your game, but hardware using your game, comments on how bad its DRM is, you know it's time to stop doing that, or else warn people to get the game elsewhere.