LLMs

Latest about LLMs

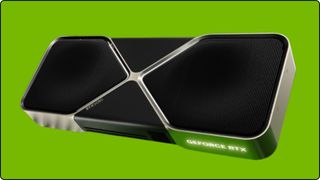

Nvidia's Priority Access program reportedly kicks off, invitations sent out for RTX 5090 customers

By Aaron Klotz published

If you're a Priority Access member, Nvidia might have already sent you an email.

Chinese CPU maker Zhaoxin rolls out DeepSeek support to all processors

By Sunny Grimm published

Zhaoxin, a Chinese CPU maker, has announced that its entire processor family supports DeepSeek models with parameter counts ranging from 1.5B to 671B.

Google Cloud launches first Blackwell AI GPU-powered instances

By Anton Shilov published

Google Cloud's A4X VMs, based on Nvidia's NVL72 machines with 72 B200 GPUs and 36 Grace CPUs, are now available for rent.

Nvidia brings back scalper-beating Verified Priority Access program for RTX 50 Founders Edition GPUs

By Anton Shilov published

Select Nvidia customers in the U.S. can get GeForce RTX 5080 FE and GeForce RTX 5090 FE graphics cards directly from Nvidia through the Verified Priority Access program.

Micron introduces 4600 PCIe Gen 5 NVMe client SSD, promises lower AI load times

By Roshan Ashraf Shaikh published

The 4600 SSD uses G9 TLC NAND and an eight-channel SMI2508 controller.

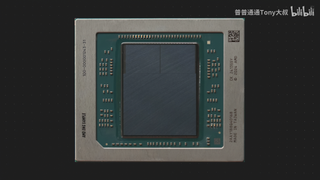

AMD's game-changing Strix Halo APU, formally known as the Ryzen AI Max+, poses for new die shots and gets annotated

By Hassam Nasir last updated

New die shots offer a glimpse of the intricacies that went into designing AMD's flagship Strix Halo, or Ryzen AI Max, APUs.

Elon Musk's Grok 3 is now available, beats ChatGPT in some benchmarks

By Jowi Morales published

Elon Musk just launched Grok 3, which he claims is the most powerful model available right now.

Modder crams LLM onto Raspberry Pi Zero USB stick

By Sayem Ahmed published

A Raspberry Pi Zero can run a local LLM using llama.cpp. While the setup is functional, slow token speeds make it impractical for real-world use.
