Nvidia GeForce GTX 1080 Graphics Card Roundup

Benchmark Results

Gaming

We test every card after a suitable warm-up period to avoid unfair differences in GPU Boost frequencies. All benchmarks are run six times; the first one is used to get the GPU hot again.
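The run scheme described above can be sketched in a few lines of Python. This is only an illustration, not the tooling used for this roundup: the function name `summarize_runs`, the sample frame rates, and the choice to average the five remaining runs are all assumptions made for the example.

```python
from statistics import mean


def summarize_runs(run_fps, warmup_runs=1):
    """Drop the warm-up run(s) and average the rest.

    Averaging the remaining runs is an assumption for this sketch;
    the article only states that the first run heats the GPU up again.
    """
    if len(run_fps) <= warmup_runs:
        raise ValueError("need at least one run after the warm-up")
    measured = run_fps[warmup_runs:]
    return mean(measured)


# Hypothetical average frame rates from six passes of one benchmark.
runs = [58.0, 60.0, 61.0, 60.0, 59.0, 60.0]
print(summarize_runs(runs))  # prints 60.0
```

Discarding the first pass keeps a card that is still cool, and therefore boosting higher, from skewing the result.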

These cards are all press samples. We run them at the same settings as the retail models to ensure, as best we can, that no vendor gets a leg up on another through non-representative clock rates.

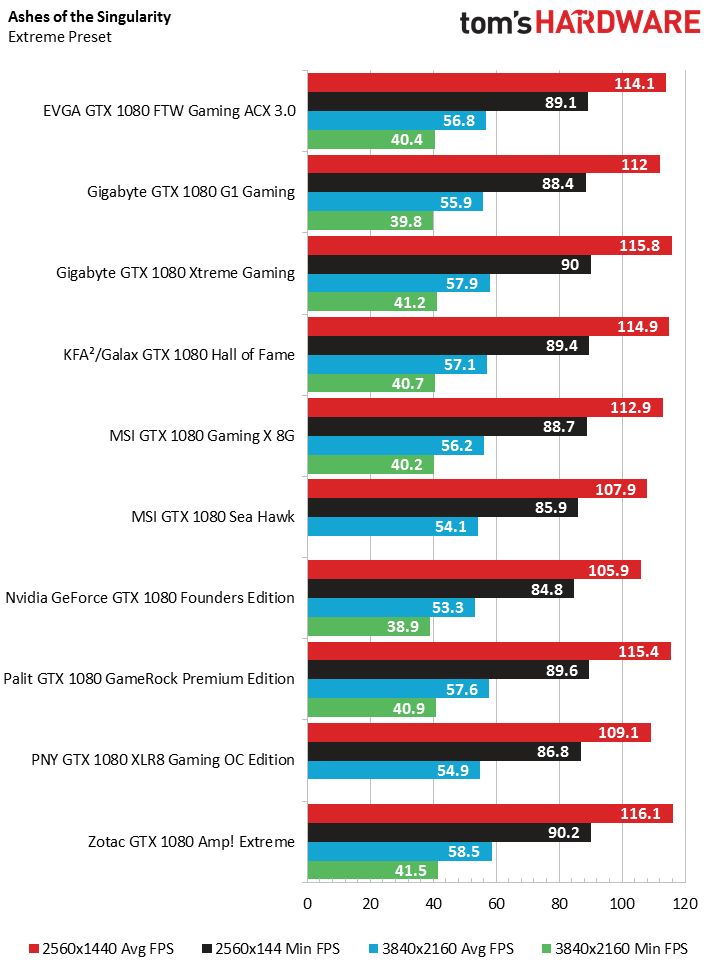

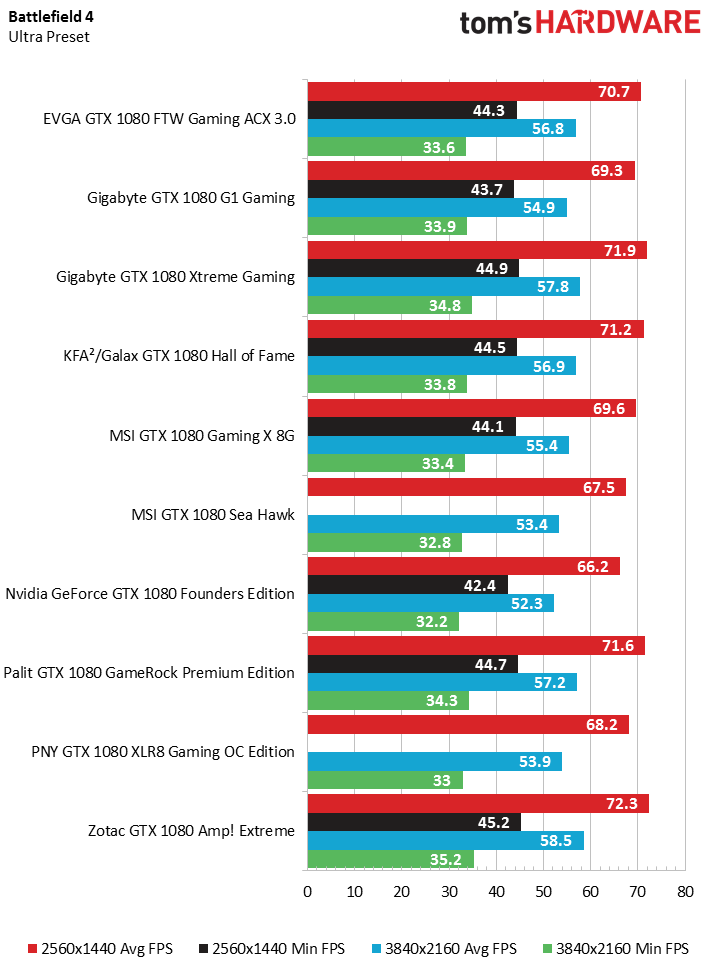

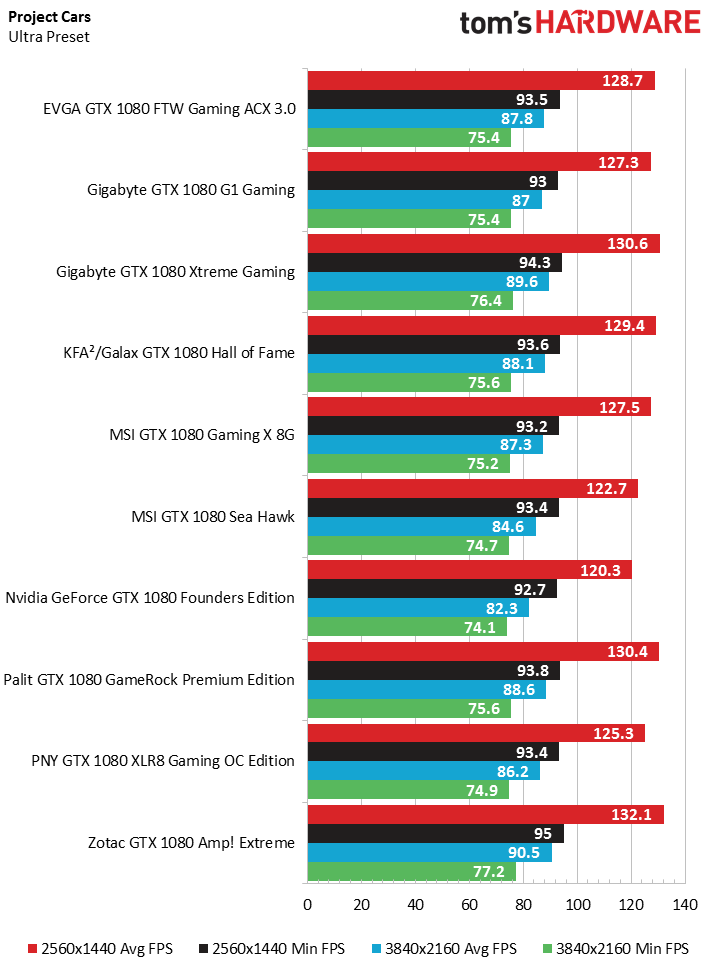

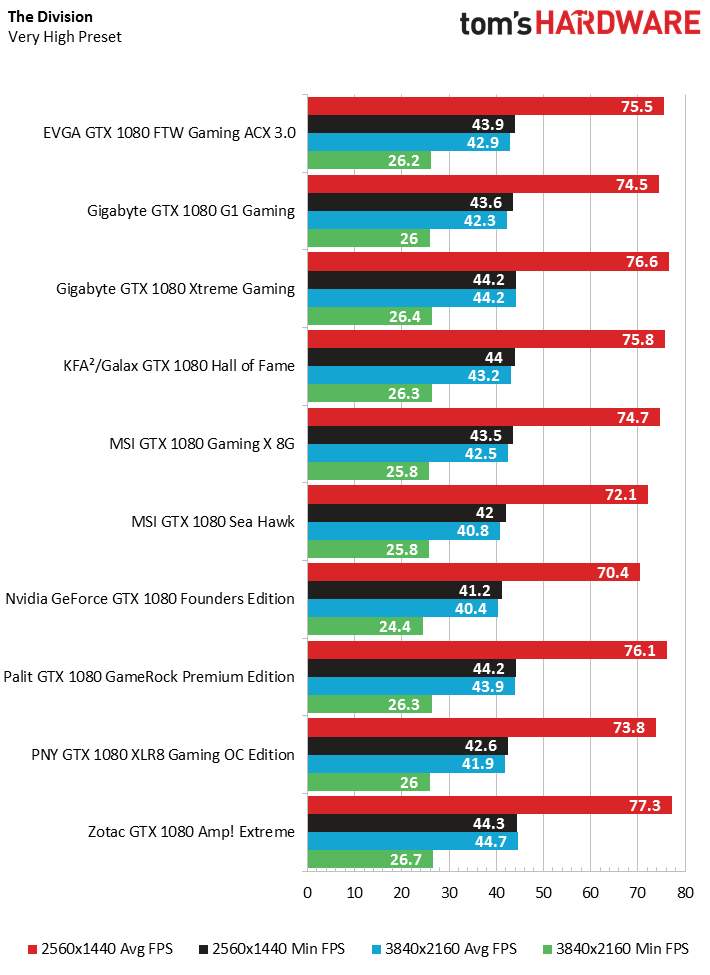

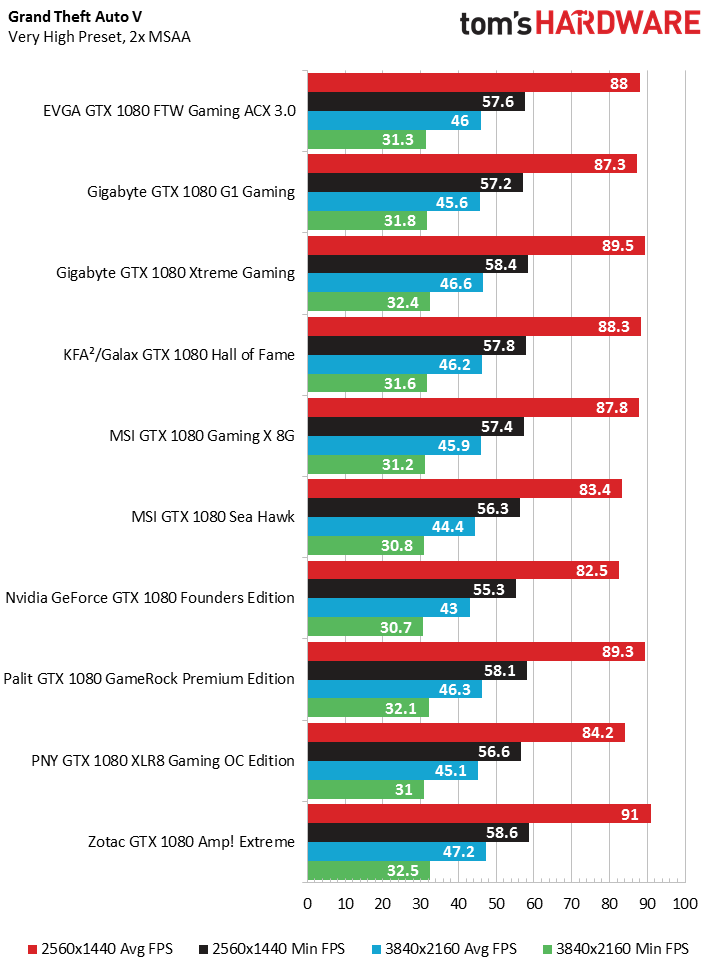

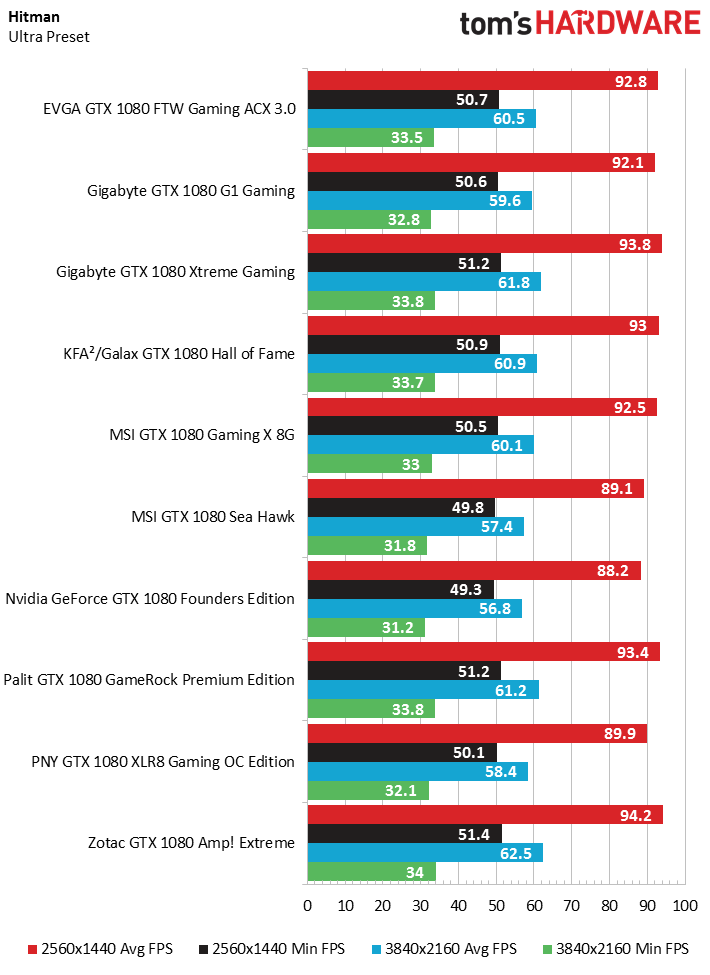

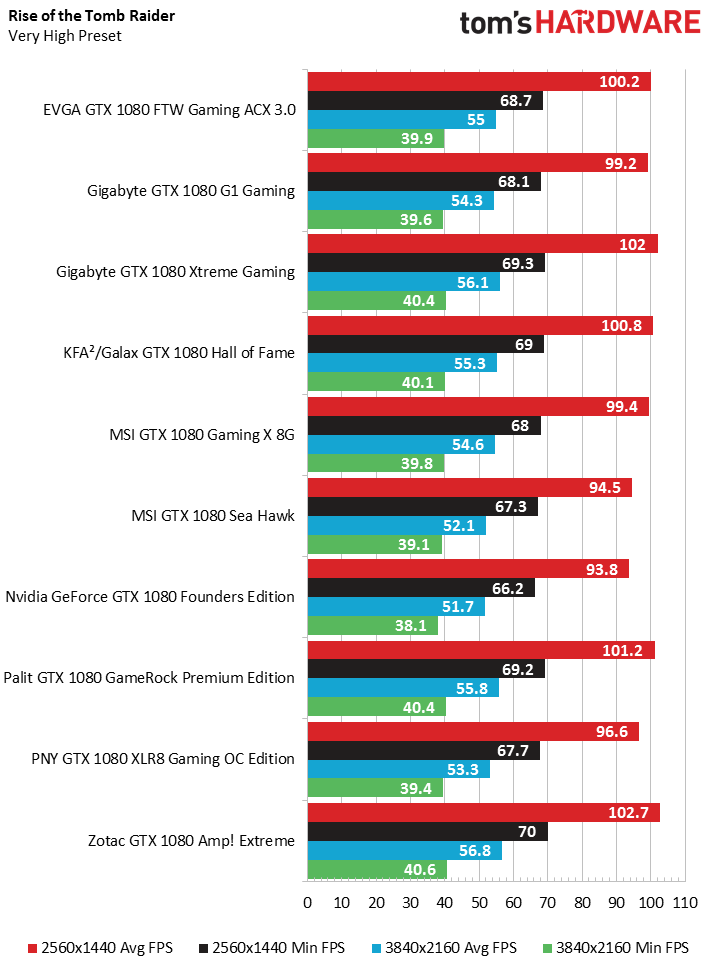

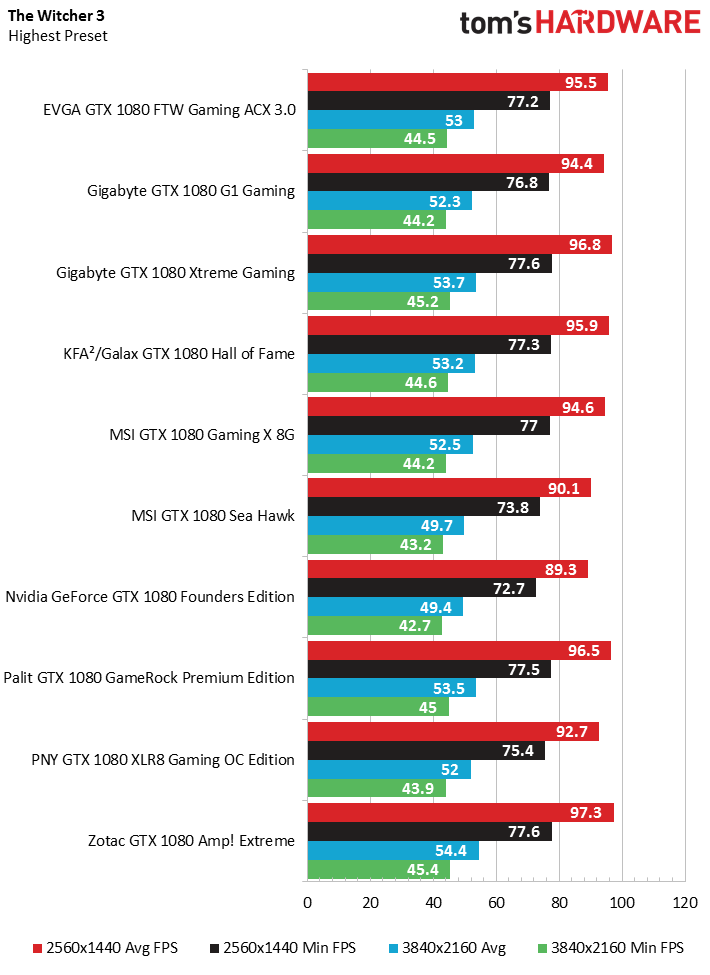

The following galleries each contain four images, covering two tested resolutions. We put our focus on QHD (2560x1440) and UHD (3840x2160), plotting out average and minimum frame rates for each resolution in separate graphs.
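As a rough sketch of how average and minimum frame rates can be derived from logged frame times, consider the Python below. The function name `frame_rate_stats`, the sample data, and the definition of the minimum as the rate implied by the single slowest frame are assumptions for illustration; the article does not state how its minimum values are computed.

```python
def frame_rate_stats(frame_times_ms):
    """Return (average_fps, minimum_fps) for a list of frame times in ms.

    Average: frames rendered divided by total elapsed time.
    Minimum: rate implied by the slowest single frame (an assumption;
    other tools use per-second buckets or percentiles instead).
    """
    if not frame_times_ms:
        raise ValueError("no frame times given")
    if any(t <= 0 for t in frame_times_ms):
        raise ValueError("frame times must be positive")
    total_ms = sum(frame_times_ms)
    average_fps = 1000.0 * len(frame_times_ms) / total_ms
    minimum_fps = 1000.0 / max(frame_times_ms)
    return average_fps, minimum_fps


# Hypothetical frame times: three 10 ms frames and one 20 ms frame.
sample = [10.0, 20.0, 10.0, 10.0]
average_fps, minimum_fps = frame_rate_stats(sample)
print(average_fps, minimum_fps)  # prints 80.0 50.0
```

The example shows why both numbers are plotted: one slow frame barely moves the average but sets the minimum.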

All of the factory-overclocked cards offer similar performance, more or less. That's why our primary focus centers on evaluating the more technical aspects of each board design, along with their coolers. This is where differences in noise, power, and temperatures are most likely to come from.

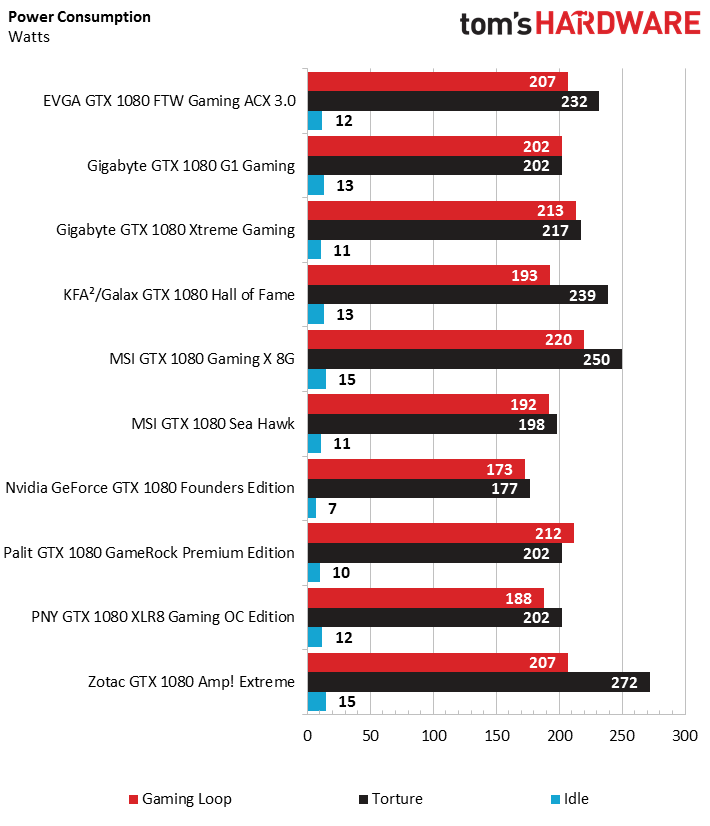

Power Consumption

We begin by comparing the power consumption of each card in our gaming loop, stress test, and at idle. Depending on the power targets specified by each manufacturer, we sometimes measured substantial differences. We're ignoring the decimal places in our bar graphs, since those values are too small and would be within measurement tolerances.

We also found that some cards with lower power targets started to throttle during our stress test, resulting in lower power consumption numbers. This did not, however, have a negative impact on general gaming performance for any of the tested cards, as the stress test merely represents a worst-case scenario.

We confirmed that MSI's retail cards will ship with a slightly lower power target (max. 240 to 250 watts) after an internal discussion and evaluation of our measurements.

This also applies to the BIOS versions with OC mode enabled by default, which employ a roughly 20 MHz-higher base and GPU Boost frequency. In the interest of fairness, we tested both MSI cards using normal mode, without the overclocked base and GPU Boost rates. This doesn't affect our power consumption measurements, though.

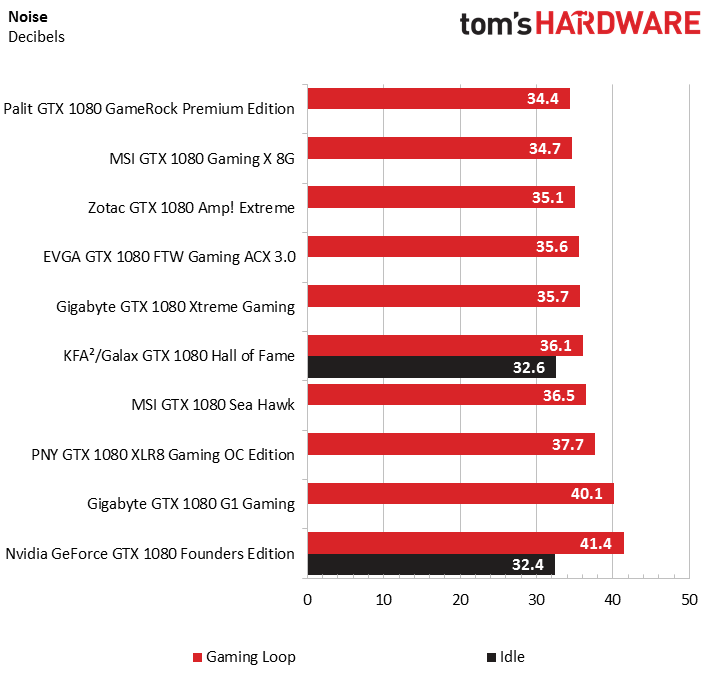

Noise

For the following comparison, we divide the gallery's bar graphs into gaming loop and idle. In practice, though, "noise" spans a wide spectrum, and the character of the sound varies a lot from card to card. It is therefore important to compare not just the absolute numbers, but also the frequency spectra we present.

Many of the cards implement a semi-passive mode, where their fans remain off when the card is idle. Thus, we refrained from taking measurements in that state. Even in our anechoic chamber, levels of 22 dB(A) and below merely represent ambient noise.

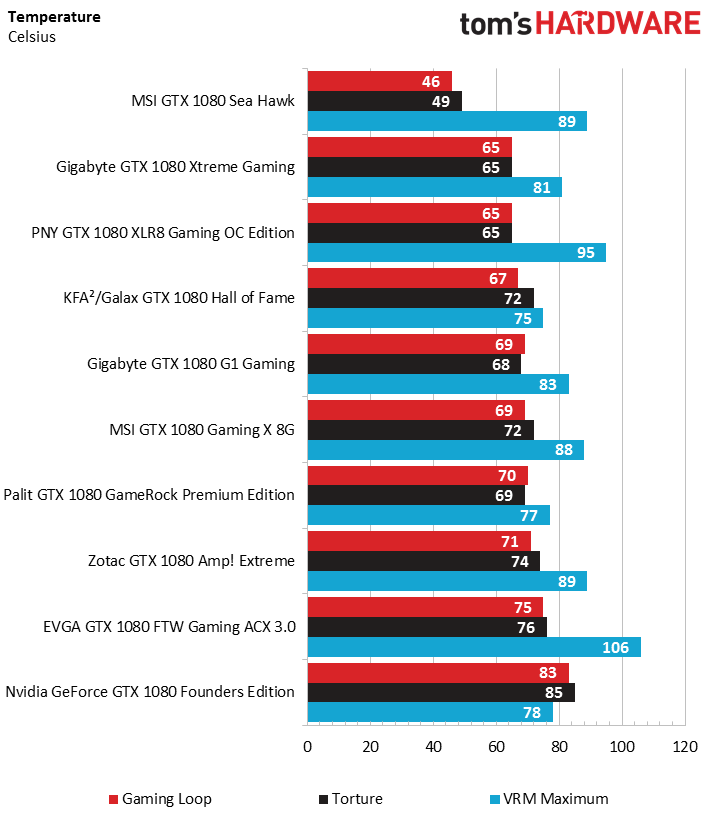

Temperature

For this comparison, we divide the gallery's bar graphs into gaming loop, stress test, and peak temperatures measured on the MOSFETs.

We occasionally compared the temperatures on our benchmark table with those measured inside a closed case and found the closed-case readings to be no more than two or three Kelvin higher.

Since temperatures in a closed case also depend heavily on the enclosure's cooling performance, the only representative and reproducible values are those measured on our benchmark table. Those are the ones we compare.


ledhead11 Love the article!

I'm really happy with my 2 xtreme's. Last month I cranked our A/C to 64f, closed all vents in the house except the one over my case and set the fans to 100%. I was able to game with the 2-2.1ghz speed all day at 4k. It was interesting to see the GPU usage drop a couple % while fps gained a few @ 4k and able to keep the temps below 60c.

After it was all said and done though, the noise wasn't really worth it. Stock settings are just barely louder than my case fans and I only lose 1-3fps @ 4k over that experience. Temps almost never go above 60c in a room around 70-74f. My mobo has the 3 spacing setup which I believe gives the cards a little more breathing room.

The zotac's were actually my first choice but gigabyte made it so easy on amazon and all the extra stuff was pretty cool.

I ended up recycling one of the sli bridges for my old 970's since my board needed the longer one from nvida. All in all a great value in my opinion.

One bad thing I forgot to mention and its in many customer reviews and videos and a fair amount of images-bent fins on a corner of the card. The foam packaging slightly bends one of the corners on the cards. You see it right when you open the box. Very easily fixed and happened on both of mine. To me, not a big deal, but again worth mentioning.

redgarl The EVGA FTW is a piece of garbage! The video signal is dropping randomly and make my PC crash on Windows 10. Not only that, but my first card blow up after 40 days. I am on my second one and I am getting rid of it as soon as Vega is released. EVGA drop the ball hard time on this card. Their engineering design and quality assurance is as worst as Gigabyte. This card VRAM literally burn overtime. My only hope is waiting a year and RMA the damn thing so I can get another model. The only good thing is the customer support... they take care of you.

Nuckles_56 What I would have liked to have seen was a list of the maximum overclocks each card got for core and memory and the temperatures achieved by each cooler

Hupiscratch It would be good if they get rid of the DVI connector. It blocks a lot of airflow on a card that's already critical on cooling. Almost nobody that's buying this card will use the DVI anyway.

Nuckles_56 18984968 said: "It would be good if they get rid of the DVI connector. It blocks a lot of airflow on a card that's already critical on cooling. Almost nobody that's buying this card will use the DVI anyway."

Two things here, most of the cards don't vent air out through the rear bracket anyway due to the direction of the cooling fins on the cards. Plus, there are going to be plenty of people out there who bought the cheap Korean 1440p monitors which only have DVI inputs on them who'll be using these cards

ern88 I have the Gigabyte GTX 1080 G1 and I think it's a really good card. Can't go wrong with buying it.

The best card out of box is eVGA FTW. I am running two of them in SLI under Windows 7, and they run freaking cool. No heat issue whatsoever.

Mike_297 I agree with 'THESILVERSKY'; Why no Asus cards? According to various reviews their Strixx line are some of the quietest cards going!

trinori LOL you didnt include the ASUS STRIX OC ?!?

well you just voided the legitimacy of your own comparison/breakdown post didnt you...

"hey guys, here's a cool comparison of all the best 1080's by price and performance so that you can see which is the best card, except for some reason we didnt include arguably the best performing card available, have fun!"

lol please..