Nvidia GeForce GTX 1080 Graphics Card Roundup

Gigabyte GTX 1080 G1 Gaming


Although Gigabyte sells six different versions of the 1080, we're starting with the company's GeForce GTX 1080 G1 Gaming. On the next page we add its 1080 Xtreme Gaming model. Once we make the rounds and cover more of the competition's hardware, we'll circle back around to see what else Gigabyte has to offer.

Let's start with a summary of the 1080 G1 Gaming's technical specifications:

Technical Specifications


Exterior & Interfaces

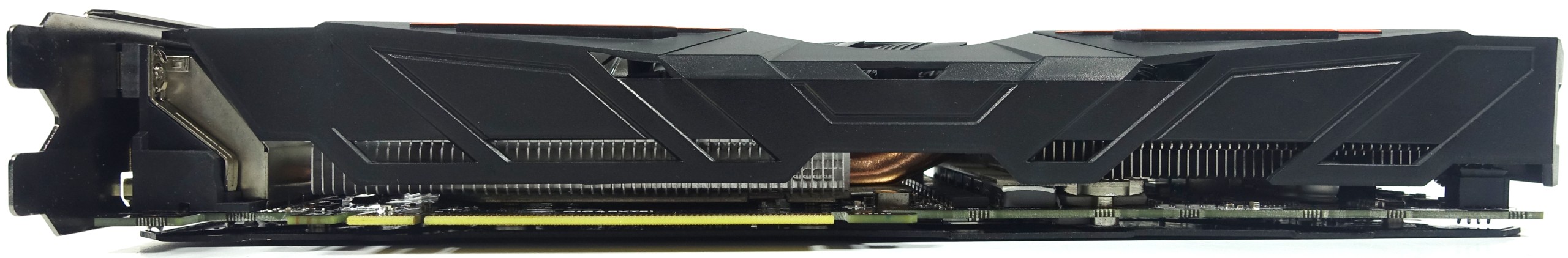

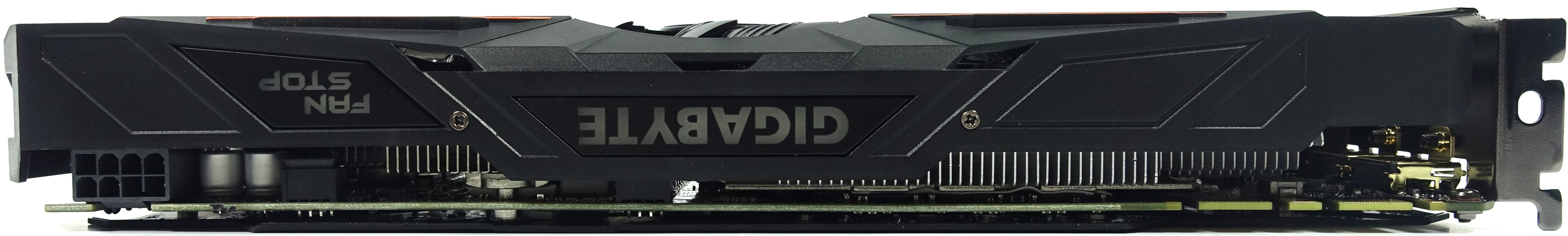

Gigabyte's shroud is made of thin plastic and doesn't exude the same quality as the metal covers on some of its earlier Windforce-equipped models. The upside is that this card weighs just 31oz (871g), despite measuring 11 inches (28.4cm) long, 4 1/3 inches (11cm) high, and 1 3/8 inches (3.5cm) wide.

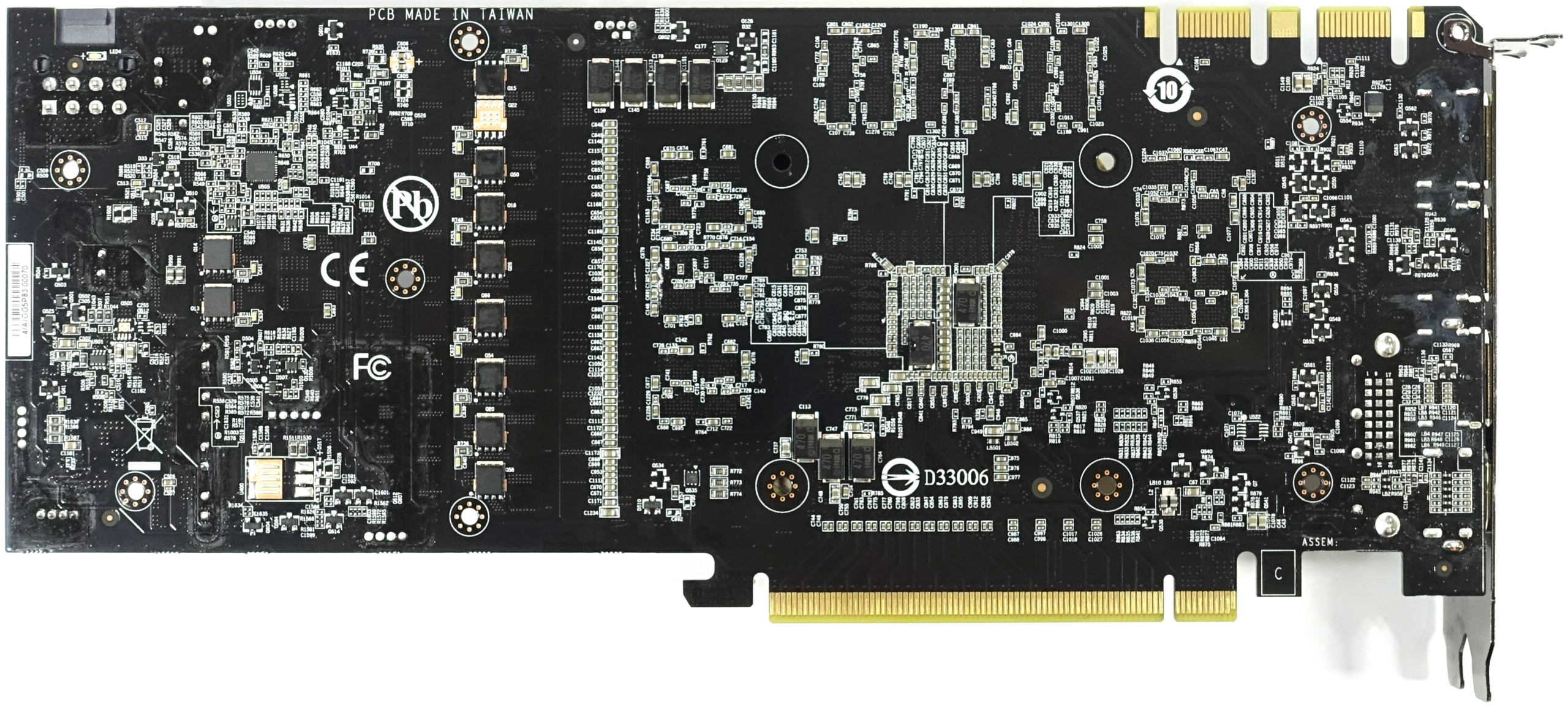

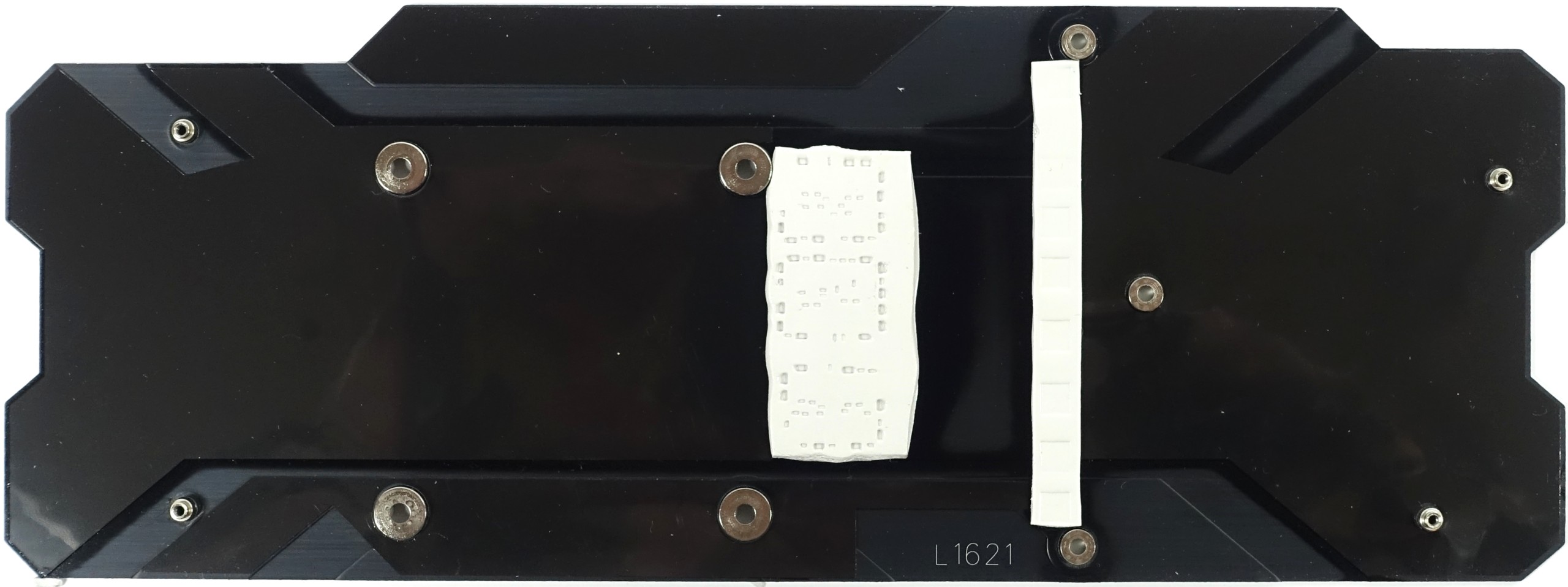

The card is covered by a single-piece backplate. It has no ventilation openings, but thermal tape connects it to the board's hot spots. Plan for the backplate to add another one-fifth of an inch (5mm) of depth, which may matter in multi-GPU configurations.

While it is perfectly possible to use this card without its backplate, removing it requires disassembling the whole cooler and likely voiding Gigabyte's warranty.

The top of the card is dominated by a centered Gigabyte label, glowing in bright colors, and an LED indicator for the card's silent mode. The eight-pin power connector is rotated 180° and positioned at the end of the card. The design may be a matter of taste, but we don't think it's anything to write home about.

At its end, the card is completely closed off. This makes sense, as the fins are positioned vertically and won't allow any airflow down there anyway.

The rear bracket features five outputs, of which a maximum of four can be used simultaneously in a multi-monitor setup. In addition to one dual-link DVI-D connector (be aware that there is no analog signal), the bracket also exposes one HDMI 2.0b and three DisplayPort 1.4-ready outputs. The rest of the plate is mostly solid, with several openings cut into it that look like they're supposed to improve airflow, but don't actually do anything.

Board & Components

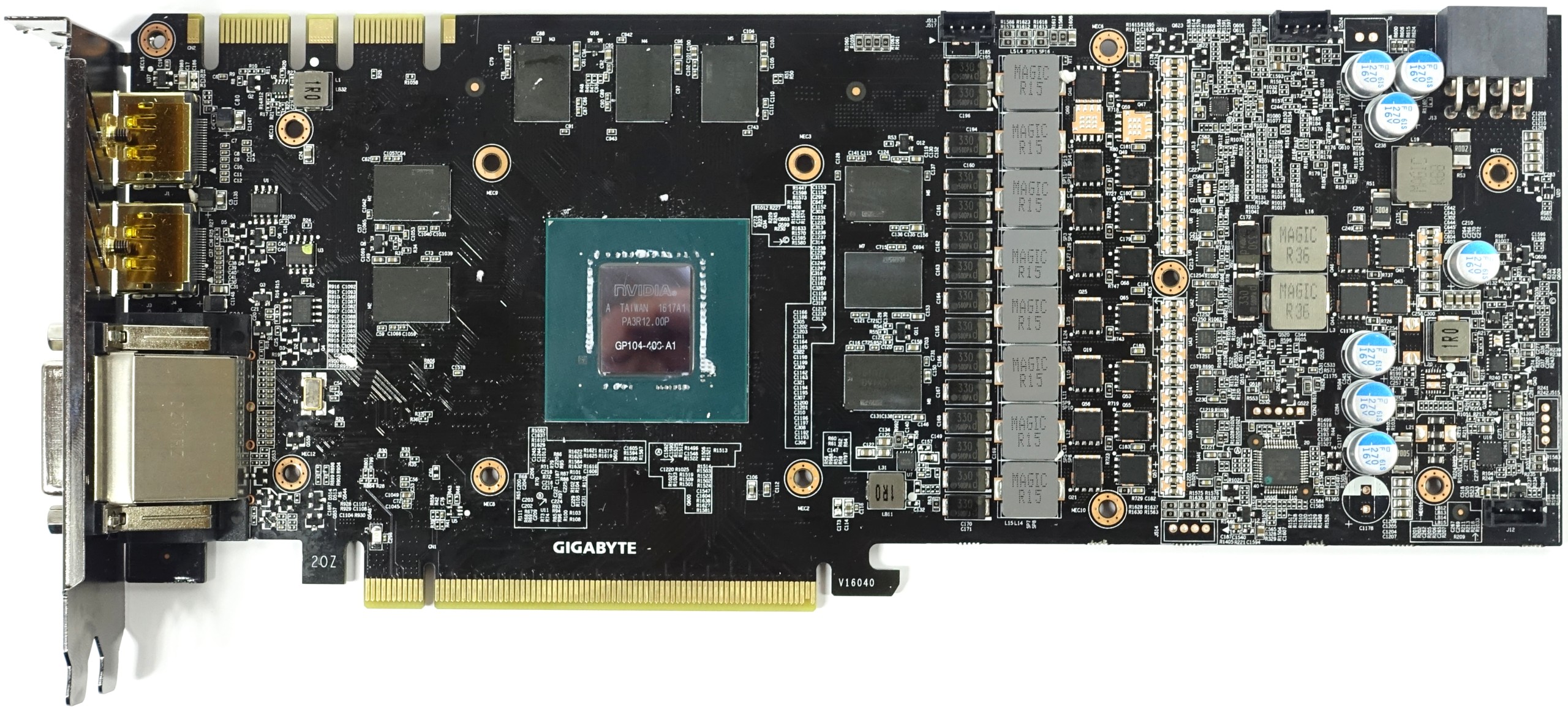

Gigabyte's Xtreme Gaming card, dissected on the next page, employs a different layout than the G1 Gaming, which has just enough space for one eight-pin power connector.

The card uses GDDR5X memory modules from Micron, which Nvidia sells to board partners along with its GPU. Eight memory chips (MT58K256M32JA-100) transferring at 10 Gb/s per pin are attached to a 256-bit interface, allowing for a theoretical bandwidth of 320 GB/s.
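The 320 GB/s figure follows directly from the bus width and the per-pin data rate. Here is a minimal Python sketch of that arithmetic. It assumes each of the eight chips contributes a 32-bit slice of the bus, which is how eight chips add up to a 256-bit interface.

```python
def peak_bandwidth_gbs(bus_width_bits: int, gbps_per_pin: float) -> float:
    """Theoretical peak memory bandwidth in GB/s.

    Every data pin moves gbps_per_pin gigabits per second, so the whole bus
    moves bus_width_bits * gbps_per_pin gigabits per second; dividing by 8
    converts bits to bytes.
    """
    return bus_width_bits * gbps_per_pin / 8


chips = 8
bits_per_chip = 32                 # assumed x32 interface per GDDR5X chip
bus_width = chips * bits_per_chip  # 256-bit
print(peak_bandwidth_gbs(bus_width, 10.0))  # 320.0
```

This is a theoretical ceiling; real-world throughput is lower once refresh and command overhead are taken into account.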

The 8+2-phase power supply relies on the sparsely documented µP9511P as a PWM controller, just like Nvidia's reference cards. Since this component can't drive the MOSFETs on the VRM phases directly, Gigabyte uses separate PWM drivers (gate drivers) to control a total of three single-channel MOSFETs per phase. We were pleasantly surprised by this rather elaborate solution.

Passing on more cost-effective dual-channel MOSFETs (and giving up board space as a result) may have been a deliberate choice to improve efficiency and dissipate heat more effectively, especially since the third row of MOSFETs sits on the back of the board, where it may benefit from cooling through the backplate. No other 1080 in this roundup shares this feature.

As seen previously, two capacitors are installed right below the GPU to absorb and equalize voltage peaks. The board layout seems pretty dense, but it's organized well.

Power Results

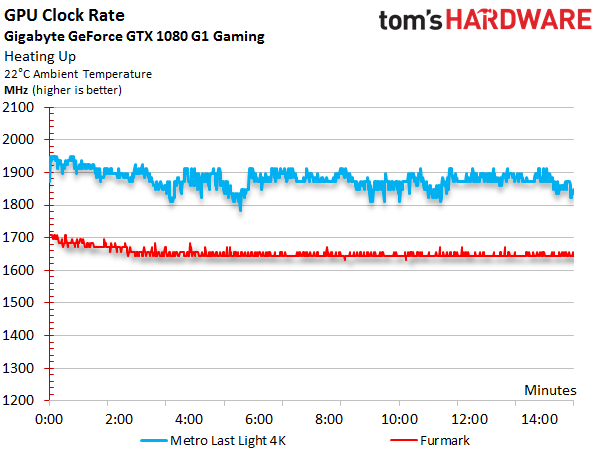

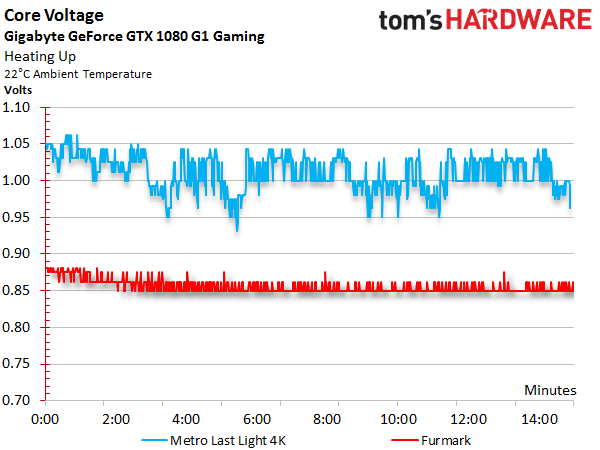

Before we look at power consumption, we should talk about the correlation between GPU Boost frequency and core voltage, whose curves are so strikingly similar that we deliberately placed their graphs one after the other. Notice how neither curve drops as much as Nvidia's reference design does as temperatures rise.

As the GPU Boost frequency falls to 1873 MHz under load (and even further during our stress test), our voltage measurements mirror this movement. We recorded up to 1.062V at the beginning (similar to the Founders Edition model), and the value drops below 0.962V later on (still above the readings collected for our Founders Edition sample).

Multiplying the measured voltage and current on each rail and summing the results, we arrive at a total consumption figure that we can easily confirm by monitoring the card's power connectors with our test equipment.
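The bookkeeping behind that total can be sketched in a few lines of Python. This is a simplified model, not the tooling used for these measurements. Each reading pairs a voltage and a current taken at the same instant. Power is computed as V × I per rail, and the rails are summed before averaging over time. Multiplying first matters: when both values fluctuate, the mean of V × I is not the same as mean(V) × mean(I). The rail names and values below are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RailSample:
    """One simultaneous voltage/current reading on a single supply rail."""
    rail: str
    volts: float
    amps: float


def instantaneous_power(step):
    """Sum V * I over all rails measured at the same instant."""
    return sum(s.volts * s.amps for s in step)


def average_power(timeline):
    """Average of per-instant totals; V and I are multiplied before averaging."""
    totals = [instantaneous_power(step) for step in timeline]
    return sum(totals) / len(totals)


# Hypothetical readings for two instants, NOT measurements from this review.
timeline = [
    [RailSample("PCIe slot 12V", 12.0, 4.0), RailSample("8-pin 12V", 12.0, 6.0)],
    [RailSample("PCIe slot 12V", 12.0, 6.0), RailSample("8-pin 12V", 12.0, 14.0)],
]
print(average_power(timeline))  # 180.0
```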

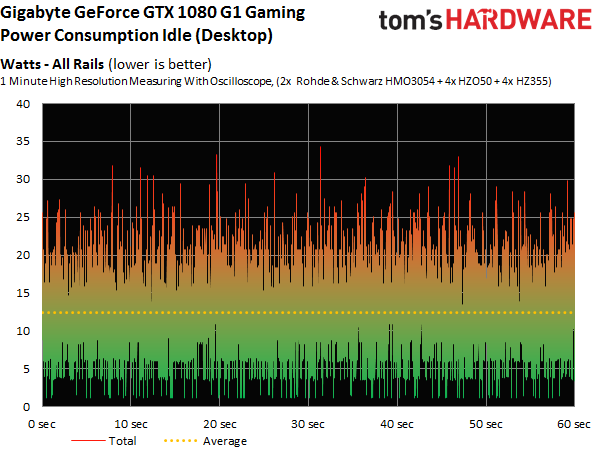

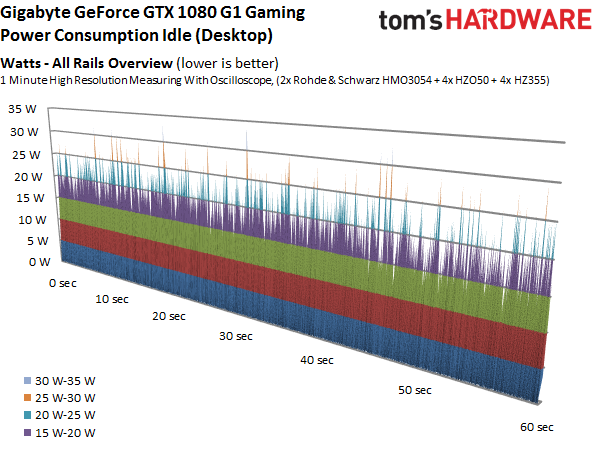

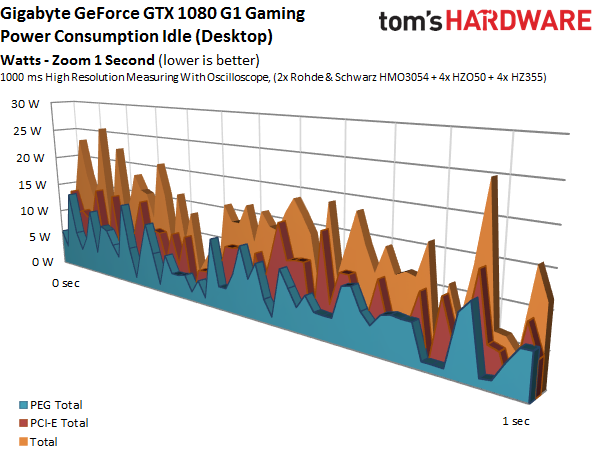

As a result of Nvidia's restrictions, manufacturers sacrifice the lowest possible frequency bin in order to gain an extra GPU Boost step. Consequently, Gigabyte's card draws disproportionately more power at idle. In all fairness, the company manages this behavior relatively well compared to some of its competitors.
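To make the trade-off concrete, here is a toy model in Python. For illustration only, it assumes that Nvidia's restriction caps the clock table at a fixed number of entries. The frequencies are hypothetical round numbers, not values read from this card's BIOS.

```python
def add_top_bin(table_mhz, new_top_mhz):
    """Return a copy of a fixed-size clock table with one bin added on top.

    Toy model (assumption): the table may hold only len(table_mhz) entries,
    so adding a higher GPU Boost step forces the lowest bin out.
    """
    ordered = sorted(table_mhz)
    if new_top_mhz <= ordered[-1]:
        raise ValueError("new bin must exceed the current maximum")
    return ordered[1:] + [new_top_mhz]


# Hypothetical, heavily shortened table; real tables contain many more bins.
reference = [100, 200, 1600, 1700, 1800]
custom = add_top_bin(reference, 1900)
print(min(reference), "->", min(custom))  # 100 -> 200
```

In this model, the lowest clock the card can fall back to at idle rises by one bin. That is why idle power goes up even though nothing else about the idle state has changed.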

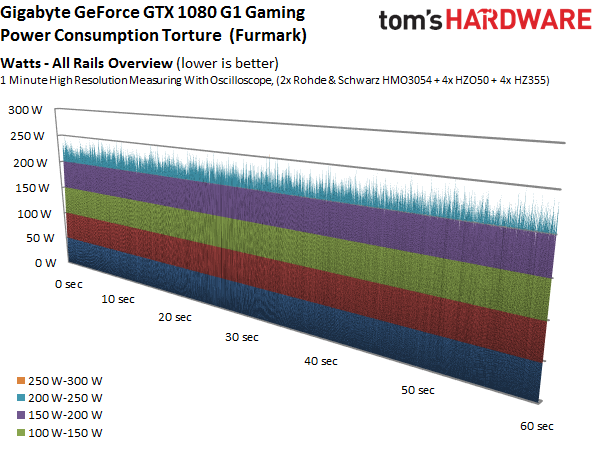

Our complete power measurements are as follows:

| Power Consumption | |

|---|---|

| Idle | 13W |

| Idle Multi-Monitor | 15W |

| Blu-ray | 14W |

| Browser Games | 115-132W |

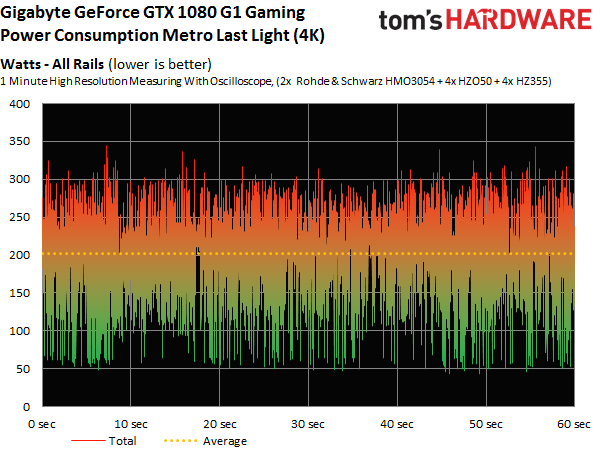

| Gaming (Metro Last Light at 4K) | 202W |

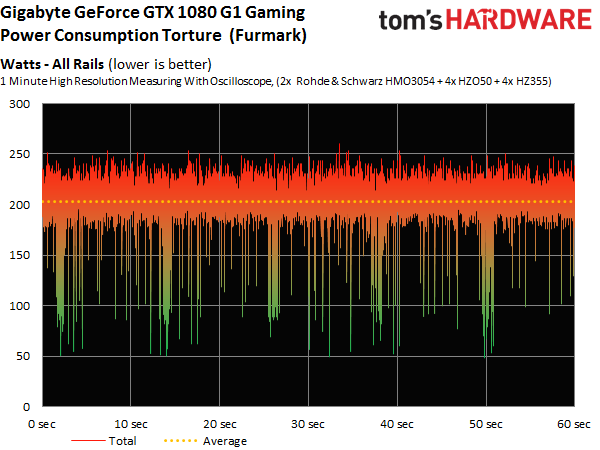

| Torture (FurMark) | 203W |

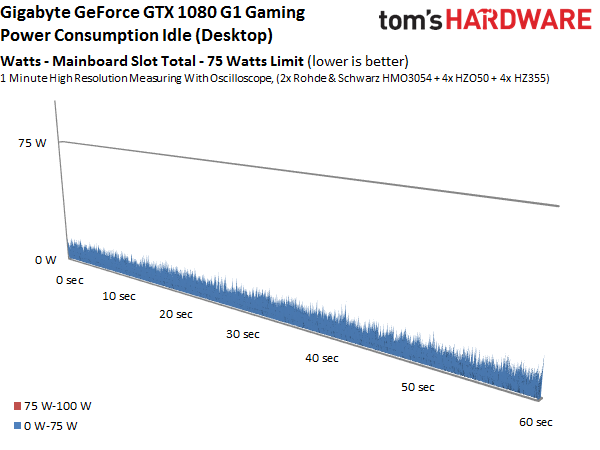

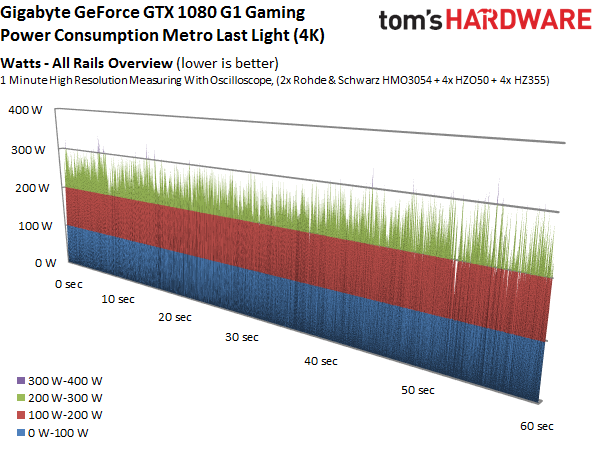

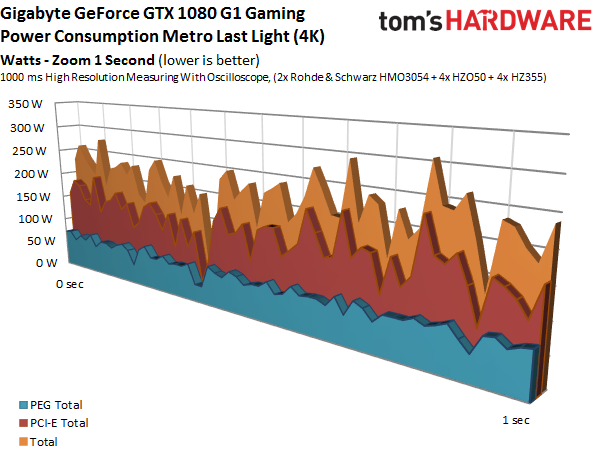

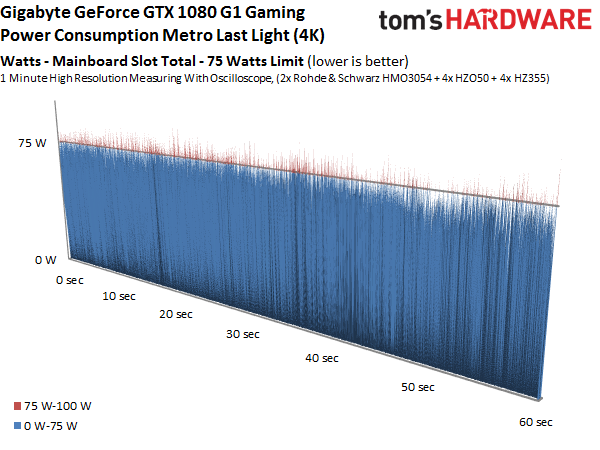

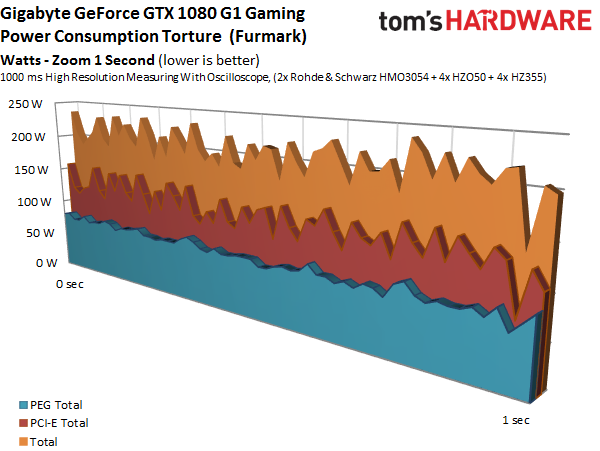

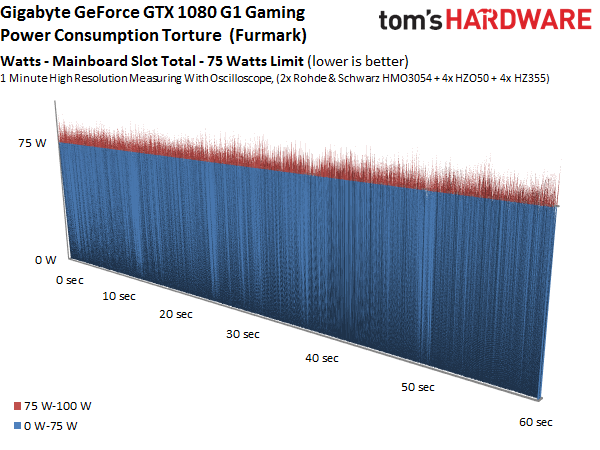

Now let's take a more detailed look at power consumption when the card is idle, when it's gaming at 4K, and during our stress test. The graphs show the distribution of load between each voltage and supply rail, providing a bird's eye view of variations and peaks:

Temperature Results

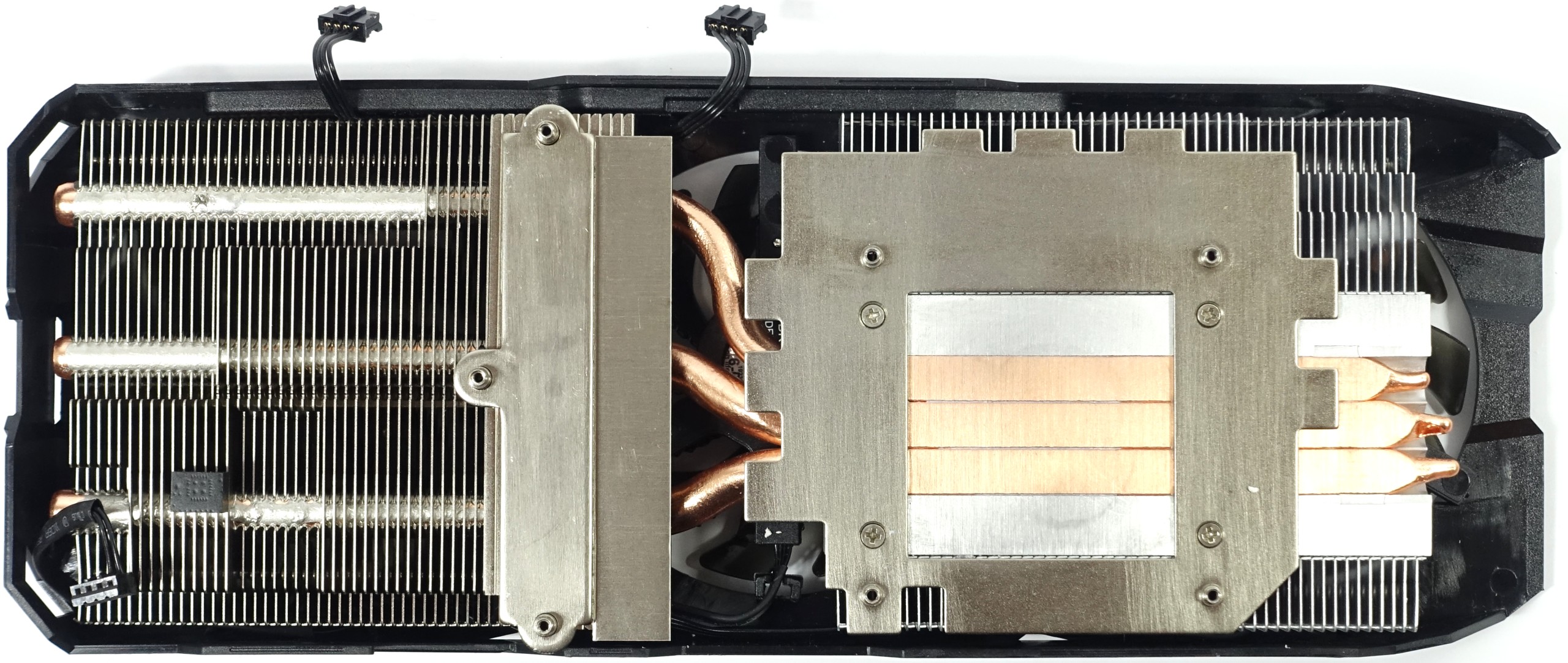

Naturally, heat output is directly related to power consumption, and the 1080 G1 Gaming's ability to dissipate that thermal energy can only be understood by looking at its cooling solution.

Gigabyte relies on its aging Windforce design. In this case, instead of a copper heat sink, the card uses a simpler (and more affordable) direct-touch solution that places the cooler's three partially flattened heat pipes, made of sintered composite material, in direct contact with the GPU.

The rest of the plate is meant to cool the memory and (commendably) the voltage regulators. Added thermal pads ensure good contact.

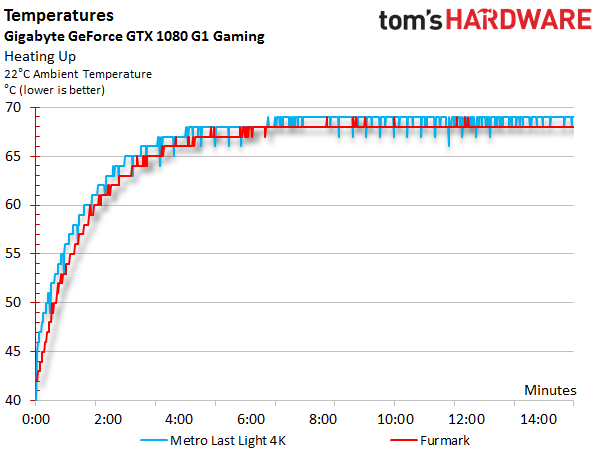

The temperature graph also reflects this cooler's slightly better performance compared to Nvidia's Founders Edition card: the GPU stays at or around 158°F (70°C), reaching at most 162°F (72°C) in a closed case.
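Every temperature in this review is given in both scales. A quick way to double-check the pairs is a Celsius-to-Fahrenheit helper that rounds to the nearest degree, as the text does. The list below simply reuses the figures quoted in this review.

```python
def c_to_f(celsius: float) -> int:
    """Convert degrees Celsius to Fahrenheit, rounded to the nearest degree."""
    return round(celsius * 9 / 5 + 32)


# (Celsius, Fahrenheit) pairs as printed in the review.
quoted = [(70, 158), (72, 162), (75, 167), (82, 180), (87, 189), (100, 212)]
for c, f in quoted:
    print(f"{c}°C -> {c_to_f(c)}°F (text says {f}°F)")
```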

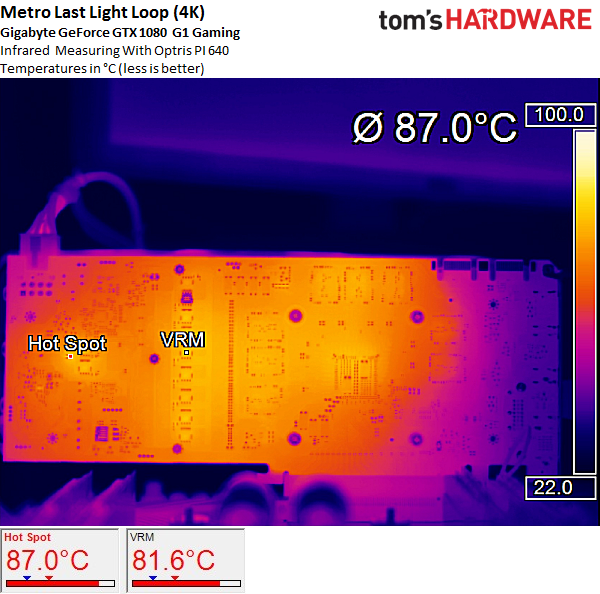

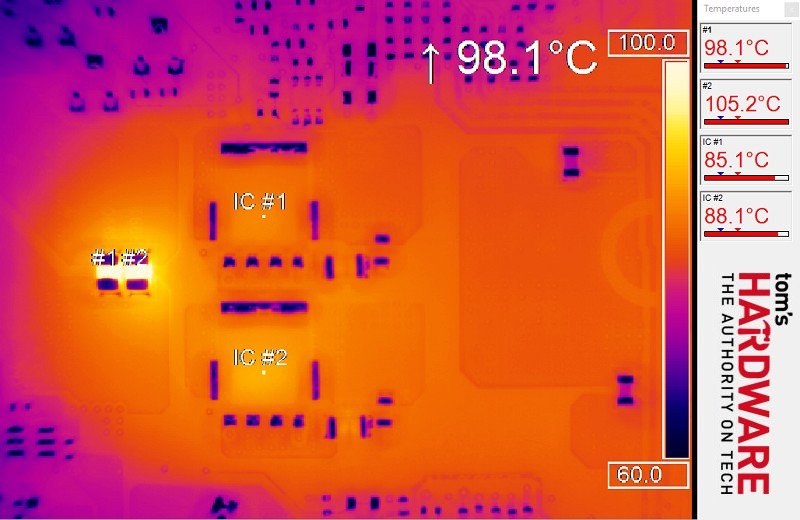

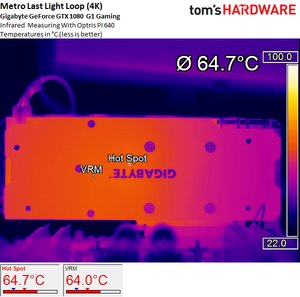

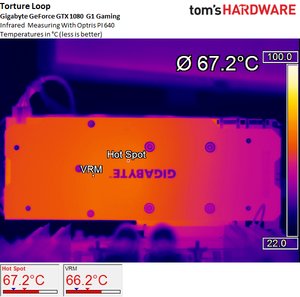

Unfortunately, upon a closer look at the infrared images, it becomes clear that this card does have troublesome areas. While the voltage regulation circuitry stays relatively cool at 180°F (82°C), two smaller SMD capacitors glow at an unpleasant 189°F (87°C).

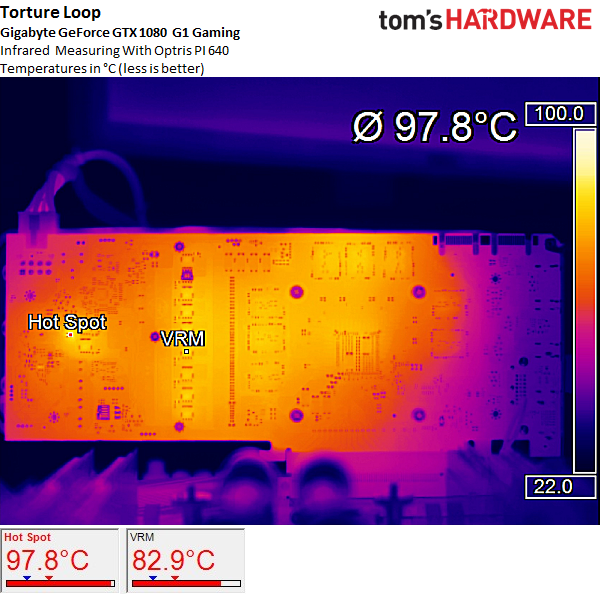

During our torture loop, those capacitors (R990 and R991) even reach temperatures of 212°F (100°C), despite a marginal increase in heat measured at the voltage regulators.

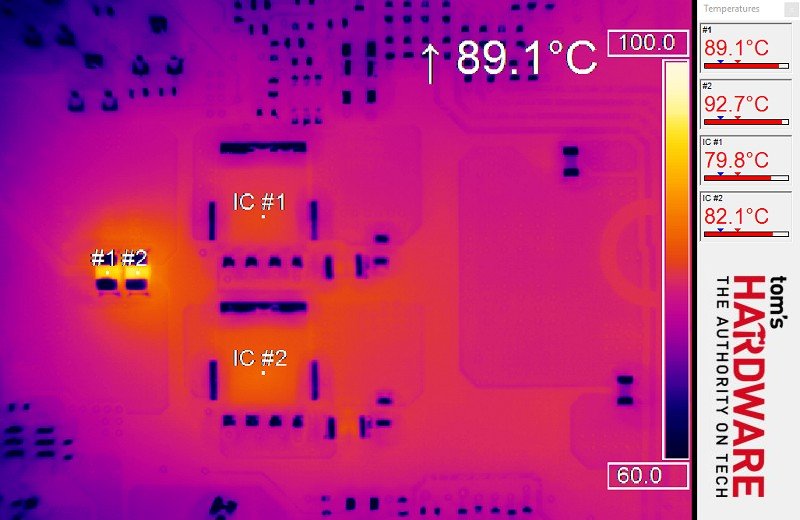

To test the two shunts more accurately, we change the distance to just 2.75in (7cm) and swap the IR camera's lens, since the areas we need to measure are just 1mm². A look at the specs and an email to Gigabyte quickly confirmed that our findings shouldn't cause any problems.

Even after a FurMark run, the measured temperature proves acceptable if the specifications are to be trusted. Of course, that's not taking into account the PWM controller residing right above the two capacitors on the board's top side. It generates a fair amount of heat as well, resulting in two hot spots right on top of one another.

Our initial testing showed that the severity of this problem could easily be mitigated with an additional thermal pad, so Gigabyte implemented our suggestion on its retail cards.

With the backplate mounted (with thermal pads between it and the memory modules and VRM), the "outside" temperatures appear as follows:

Sound Results

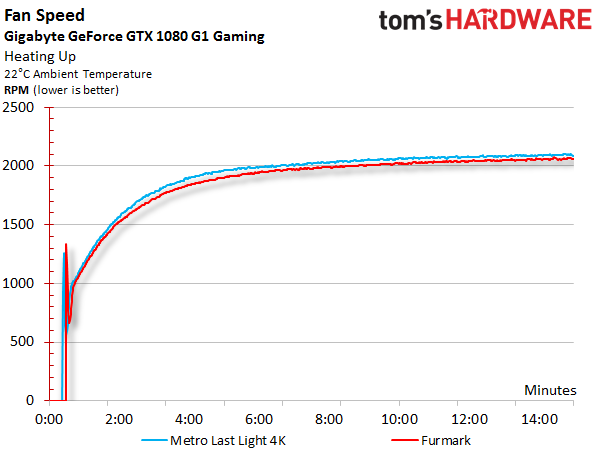

Since power consumption during our gaming and stress tests is similar, it comes as little surprise that the fan curves are also a close match. The start-up behavior and an overall clean fan profile reliably prevent repeated on/off cycling once the fans spin up. In addition, the start-up speed is chosen so that the fans should continue to start reliably even as they age. The same goes for turning the fans off once the card cools down.
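Fans that start at one temperature and stop only at a noticeably lower one follow a classic hysteresis scheme. The sketch below models it in Python. The 60°C/50°C thresholds and the fixed start-up speed are hypothetical values chosen for illustration, not Gigabyte's firmware settings.

```python
class SemiPassiveFan:
    """Semi-passive fan control with hysteresis (hypothetical parameters).

    The fan starts only when the GPU reaches start_c and stops only once it
    has cooled to stop_c, so small fluctuations around one threshold do not
    toggle it on and off.
    """

    def __init__(self, start_c=60.0, stop_c=50.0, start_rpm=1000):
        if stop_c >= start_c:
            raise ValueError("stop_c must be below start_c")
        self.start_c = start_c
        self.stop_c = stop_c
        self.start_rpm = start_rpm  # assumed high enough to spin up reliably
        self.running = False

    def update(self, temp_c: float) -> int:
        """Return the commanded fan speed in RPM for the current temperature."""
        if not self.running and temp_c >= self.start_c:
            self.running = True
        elif self.running and temp_c <= self.stop_c:
            self.running = False
        return self.start_rpm if self.running else 0


fan = SemiPassiveFan()
trace = [45, 61, 58, 62, 55, 49, 52]  # hypothetical GPU temperatures in °C
print([fan.update(t) for t in trace])
# [0, 1000, 1000, 1000, 1000, 0, 0]
```

On the same trace, a controller with a single 60°C threshold would start and stop the fan twice. The hysteresis version starts it once and stops it once. For simplicity, the model holds one speed while running, whereas a real card follows a fan curve.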

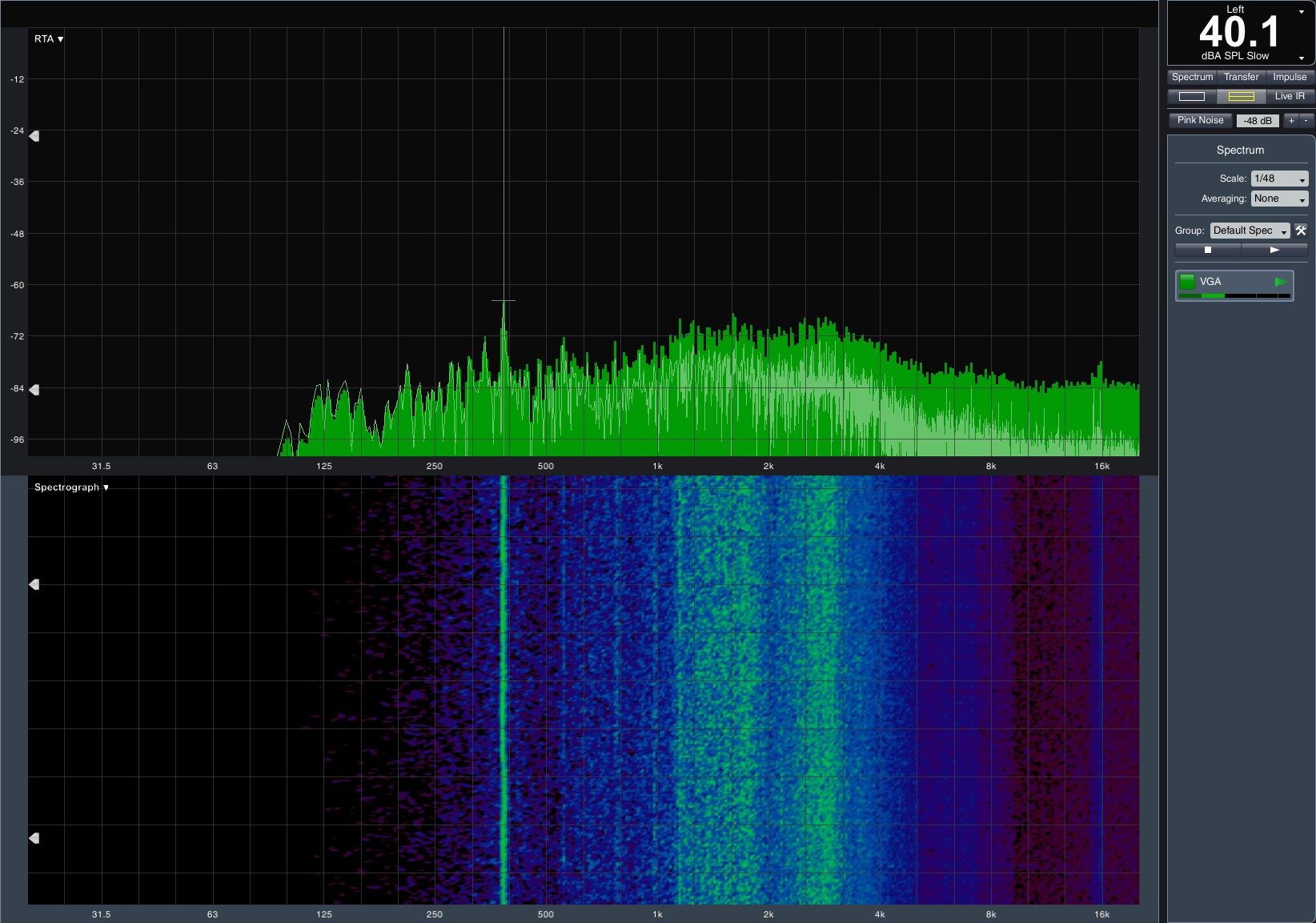

When the card is idle, its noise is not measurable thanks to a semi-passive mode that keeps the fans from spinning. However, running at full load for a while easily raises the noise level to 40 dB(A). It gets even louder during our torture test.

To be frank, we're not impressed with the card's acoustics. They can be improved by manually adjusting the fan curve and raising the temperature target to 167°F (75°C). Doing so reduces the maximum GPU Boost frequency, though, and you may start to hear the coils chirp.
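A custom fan curve like the one described above is commonly defined as a set of temperature/duty points with linear interpolation in between. The Python sketch below assumes that model. The curve points are hypothetical and merely keep the fans slow until the GPU approaches a 75°C target.

```python
from bisect import bisect_right


def fan_duty(temp_c, curve):
    """Piecewise-linear fan curve lookup.

    curve: (temperature_c, duty_percent) points sorted by temperature.
    Outside the defined range, the nearest end point's duty is held.
    """
    temps = [t for t, _ in curve]
    if temp_c <= temps[0]:
        return curve[0][1]
    if temp_c >= temps[-1]:
        return curve[-1][1]
    i = bisect_right(temps, temp_c)  # index of first point strictly above temp_c
    (t0, d0), (t1, d1) = curve[i - 1], curve[i]
    return d0 + (d1 - d0) * (temp_c - t0) / (t1 - t0)


# Hypothetical "quiet" curve aimed at a 75°C temperature target.
quiet_curve = [(50, 30), (65, 40), (75, 60), (85, 100)]
print(fan_duty(70, quiet_curve))  # 50.0
```

Flattening the curve below the target trades higher GPU temperatures for lower fan speeds. That is also why GPU Boost settles at lower clock rates with such a profile.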

Considering what other manufacturers achieve with more elaborate coolers, the G1 Gaming's solution feels almost unambitious. It lacks the tender loving care competing vendors put into their own 1080-based cards. The G1 Gaming isn't bad in this regard, but the end result isn't particularly good either.



Igor Wallossek wrote a wide variety of hardware articles for Tom's Hardware, with a strong focus on technical analysis and in-depth reviews. His contributions have spanned a broad spectrum of PC components, including GPUs, CPUs, workstations, and PC builds. His insightful articles provide readers with detailed knowledge to make informed decisions in the ever-evolving tech landscape.


ledhead11: Love the article!

I'm really happy with my two Xtremes. Last month I cranked our A/C to 64°F, closed all the vents in the house except the one over my case, and set the fans to 100%. I was able to game at 2-2.1GHz all day at 4K. It was interesting to see GPU usage drop a couple of percent while fps gained a few at 4K, and I was able to keep temps below 60°C.

After it was all said and done, though, the noise wasn't really worth it. Stock settings are just barely louder than my case fans, and I only lose 1-3 fps at 4K compared to that experience. Temps almost never go above 60°C in a room around 70-74°F. My mobo has three-slot spacing, which I believe gives the cards a little more breathing room.

The Zotacs were actually my first choice, but Gigabyte made it so easy on Amazon, and all the extra stuff was pretty cool.

I ended up recycling one of the SLI bridges for my old 970s, since my board needed the longer one from Nvidia. All in all, a great value in my opinion.

One bad thing I forgot to mention, and it's in many customer reviews, videos, and a fair number of images: bent fins on a corner of the card. The foam packaging slightly bends one of the corners on the cards. You see it right when you open the box. It's very easily fixed and happened on both of mine. To me, not a big deal, but again worth mentioning.

redgarl: The EVGA FTW is a piece of garbage! The video signal drops randomly and makes my PC crash on Windows 10. Not only that, but my first card blew up after 40 days. I'm on my second one, and I'm getting rid of it as soon as Vega is released. EVGA dropped the ball hard on this card. Their engineering design and quality assurance are as bad as Gigabyte's. This card's VRAM literally burns over time. My only hope is waiting a year and RMAing the damn thing so I can get another model. The only good thing is the customer support... they take care of you.

Nuckles_56: What I would have liked to see was a list of the maximum core and memory overclocks each card achieved, and the temperatures each cooler reached.

Hupiscratch: It would be good if they got rid of the DVI connector. It blocks a lot of airflow on a card that's already critical on cooling. Almost nobody buying this card will use DVI anyway.

Nuckles_56, quoting Hupiscratch: "It would be good if they get rid of the DVI connector. It blocks a lot of airflow on a card that's already critical on cooling. Almost nobody that's buying this card will use the DVI anyway."

Two things here: most of the cards don't vent air out through the rear bracket anyway, due to the direction of their cooling fins. Plus, plenty of people who bought the cheap Korean 1440p monitors that only have DVI inputs will be using these cards.

ern88: I have the Gigabyte GTX 1080 G1 and I think it's a really good card. Can't go wrong with buying it.

The best card out of the box is the eVGA FTW. I am running two of them in SLI under Windows 7, and they run freaking cool. No heat issues whatsoever.


Mike_297: I agree with 'THESILVERSKY'. Why no Asus cards? According to various reviews, their Strix line has some of the quietest cards going!

trinori: LOL, you didn't include the ASUS STRIX OC?!?

well you just voided the legitimacy of your own comparison/breakdown post, didn't you...

"hey guys, here's a cool comparison of all the best 1080s by price and performance so that you can see which is the best card, except for some reason we didn't include arguably the best-performing card available, have fun!"

lol please..