Nvidia GeForce GTX 1080 Graphics Card Roundup

Palit GTX 1080 GameRock Premium Edition


JetStream, Super JetStream, GameRock, and GameRock Premium Edition. Palit sure gives us a lot of options for picking the GeForce GTX 1080 we really want. Unfortunately for our U.S. readers, none of them are readily available. Palit's "Where to Buy" page lists countries in Asia, Australia, Europe, and Africa, but North and South America are notably missing. Nevertheless, Tom's Hardware serves an international audience, so we're reviewing Palit's GeForce GTX 1080 GameRock Premium Edition (or GPR), its newest flagship.

Palit already reacted to an initial issue we found with its fans and introduced a few changes, namely replacing the fan module. We're now able to test the latest version of its retail card, and this story reflects those updates. Gaming performance hasn't changed much, but other features have. Our tests include a new BIOS with a slightly higher power target, per our suggestion.

Technical Specifications


Exterior & Interfaces

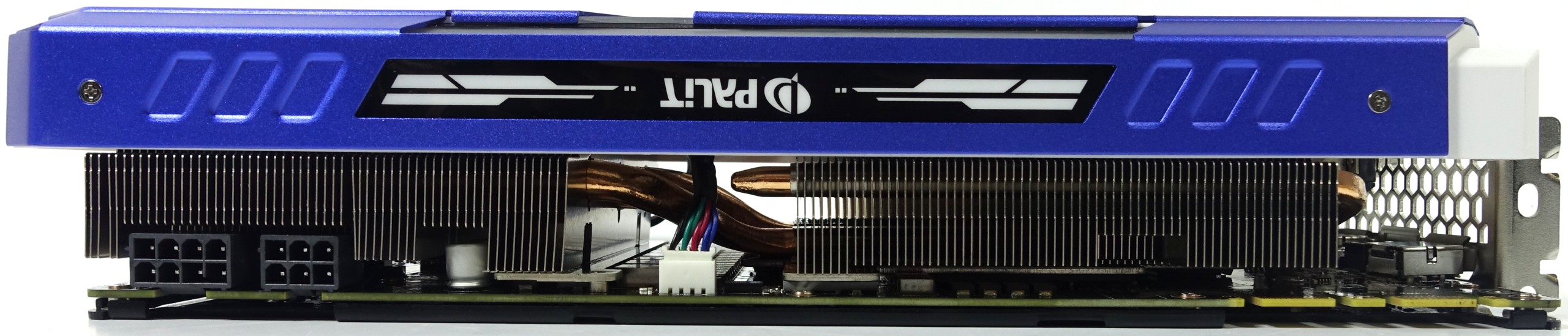

Palit's shroud is made of relatively thick white plastic, and the card's top and front are adorned in blue, white, and silver. At a hefty 42oz (1181g), this is one of the heaviest GeForce GTX 1080s in our round-up. It measures 11⅓ inches (28.7cm) long, five inches (12.8cm) tall, and two inches (5.2cm) wide, occupying three full expansion slots. Two massive four-inch (10cm) fans with a rotor diameter of 3.8in (9.6cm) accentuate the card's bulk even more.

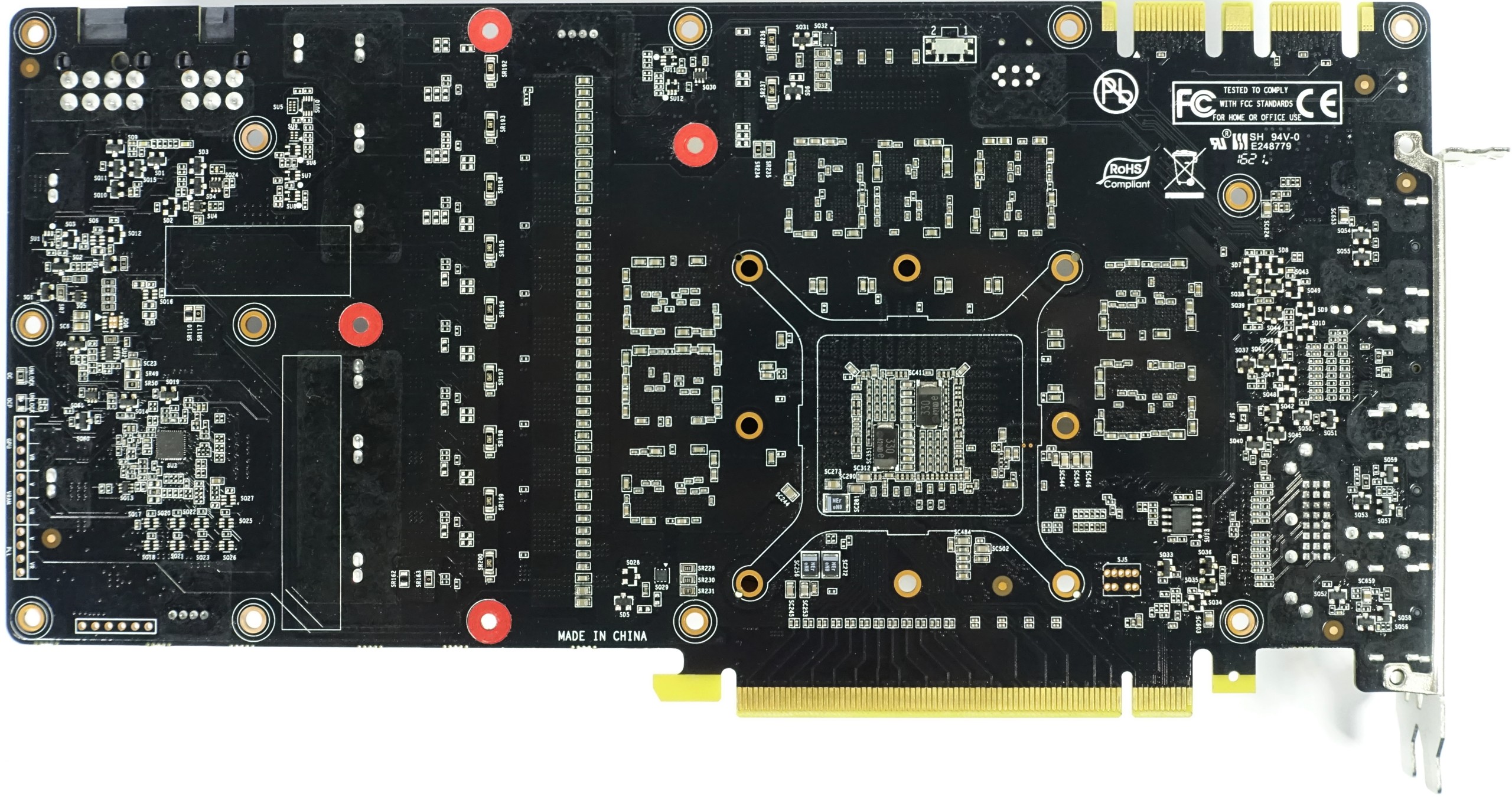

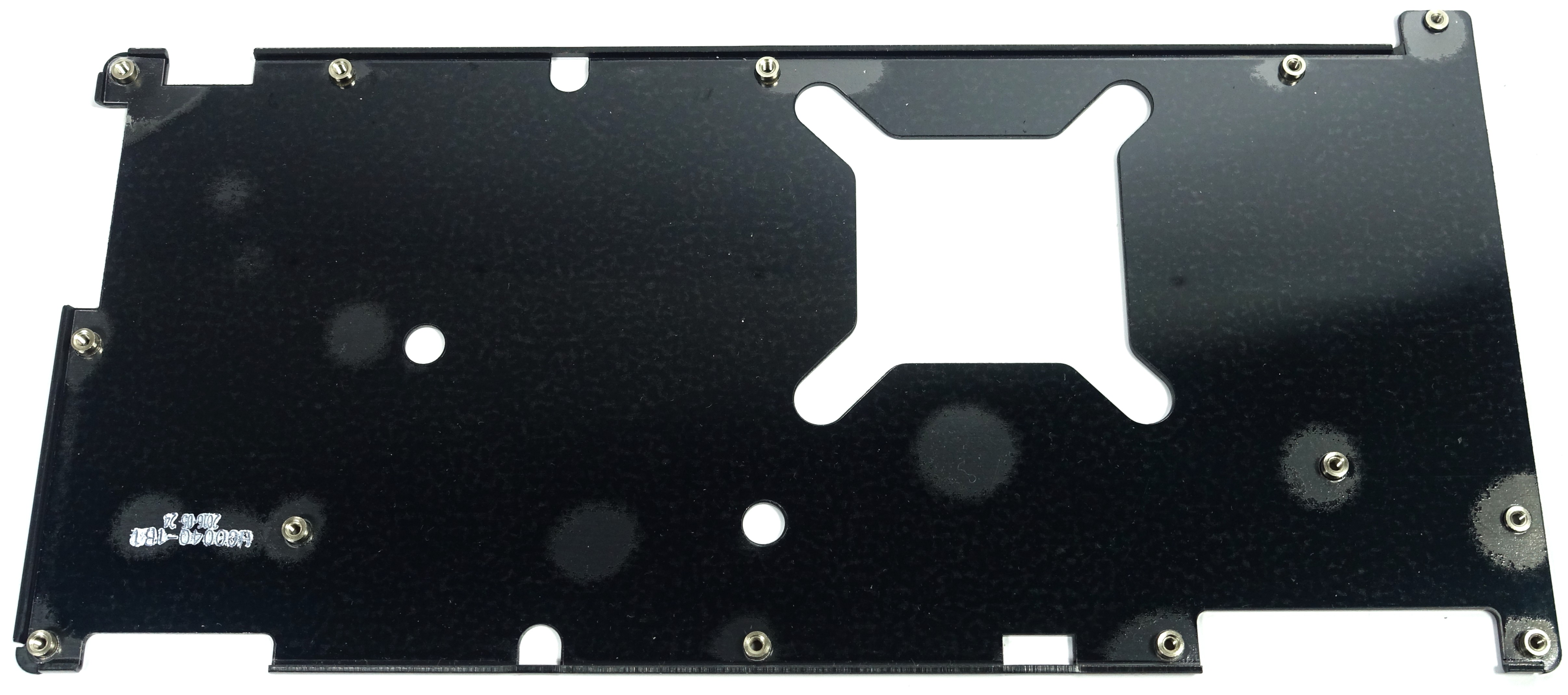

Around back there's a single plate without any openings for ventilation. Instead, there's a GameRock emblem printed on the metal. Plan for an additional one-fifth of an inch (5mm) in depth beyond the plate, which may become relevant in multi-GPU configurations. Since there are no thermal pads between the plate and PCB, the backplate is merely decorative. Although it's possible to use the card without this plate, removing it requires some disassembly, likely voiding Palit's warranty.

You'll find a brightly lit Palit logo on top of the card. Farther down the board, the six- and eight-pin power connectors are rotated 180°. All told, the card's bulky look is anything but modest.

At its end the card is completely closed off, which makes sense since the fins are positioned vertically and won't allow any airflow through the front or back anyway.

The rear bracket features five outputs, of which a maximum of four can be used simultaneously in a multi-monitor setup. In addition to one dual-link DVI-D connector (be aware that there is no analog signal), the bracket also exposes one HDMI 2.0b and three DisplayPort 1.4-ready outputs. The rest of the plate is mostly solid, with several openings cut into it that look like they're supposed to improve airflow, but don't actually do anything.

Board & Components

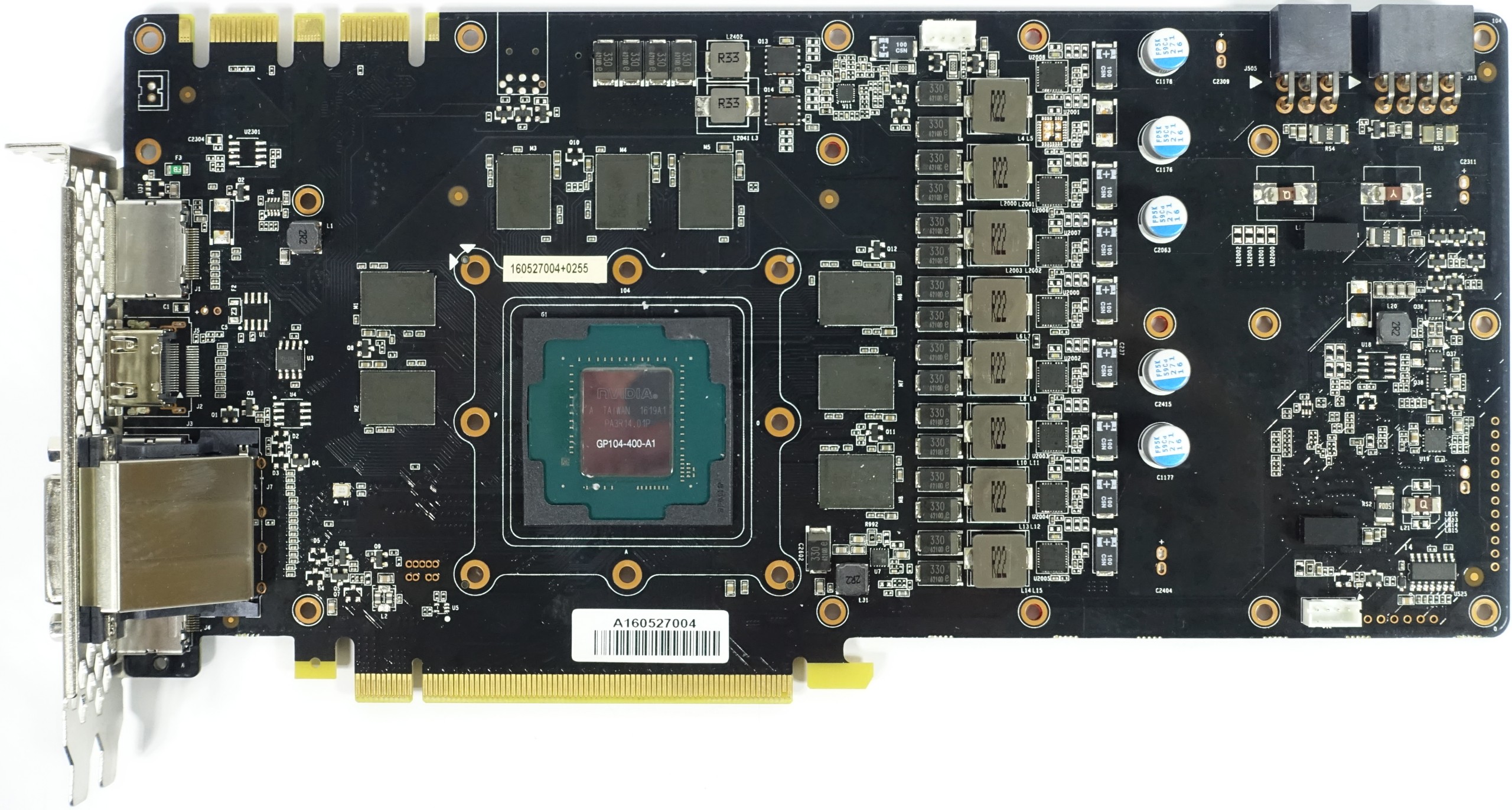

Components on Palit's board seem to be arranged well, aside from the same cheap coils that Nvidia uses on its reference design. We'll revisit this topic when it comes time to talk about acoustics.

Like all of the other GeForce GTX 1080s, Palit's GameRock Premium uses GDDR5X memory modules from Micron, which Nvidia sells to board partners along with its GPU. Eight memory chips (MT58K256M32JA-100) transferring at 10 Gb/s per pin (10,000 MT/s) are attached to a 256-bit interface, allowing for a theoretical bandwidth of 320 GB/s.
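The 320 GB/s figure follows directly from bus width and per-pin data rate. The minimal Python sketch below reproduces the arithmetic; it assumes each of the eight GDDR5X packages has a 32-bit interface (8 × 32 = 256 bits), and the function name is purely illustrative.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, rate_gbit_s_per_pin: float) -> float:
    """Theoretical peak bandwidth in GB/s: bus width x per-pin rate / 8 bits per byte."""
    return bus_width_bits * rate_gbit_s_per_pin / 8

chips = 8            # GDDR5X packages on the board
bits_per_chip = 32   # assumed x32 interface per MT58K256M32JA-100 package
bus_width_bits = chips * bits_per_chip  # 256-bit memory interface

bandwidth = peak_bandwidth_gb_s(bus_width_bits, 10.0)
print(f"{bus_width_bits}-bit bus at 10 Gb/s per pin: {bandwidth:.0f} GB/s")
# prints "256-bit bus at 10 Gb/s per pin: 320 GB/s"
```

This is a theoretical peak; effective bandwidth in games is lower.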

While reviewing the changes Palit made to the 1080 GameRock Premium Edition after our initial review, we also took a second look at the board. One of this card's peculiarities is that Palit uses a separate, smaller uPI Semiconductor uP1666 controller to handle the memory's two power phases. As a result, all eight phases of the 6+2-phase uP9511P controller can be dedicated to the GPU. This removes the need for phase doubling (running multiple converter loops per phase) and primarily benefits load balancing.

Two capacitors are installed right below the GPU to absorb and equalize peaks in voltage, similar to Nvidia's reference design.

Power Results

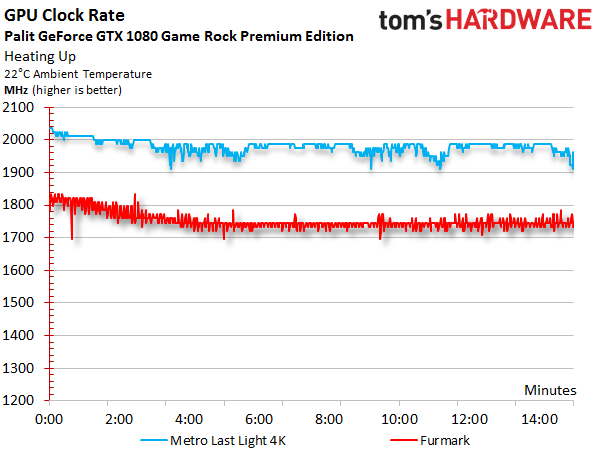

The graphs show that after warm-up and under load, GPU Boost falls to 1949 MHz and at times even lower. Those fluctuations are more visible than the ones we measured from cards with higher power targets, such as MSI's GeForce GTX 1080 Gaming X 8G. Still, the average GPU Boost clock rate remains pleasantly high, likely due to an elevated initial step.

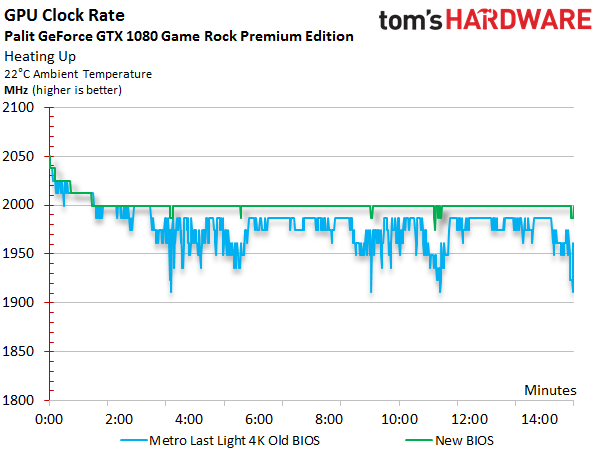

Let's now look at what the updated BIOS with its higher default power target can do. GPU Boost now stays constant at 2 GHz throughout our gaming loop, which may increase frame rates by up to 2%, slightly exceeding the margin of error in our benchmarks. Frankly, though, the most noticeable improvement should be smoother animation, owing to more consistent frame times.
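To put the up-to-2% frame rate gain in context, here is a minimal Python sketch comparing the sustained clock rates quoted in this section (roughly 1949 MHz with the original BIOS versus 2000 MHz with the new one). Since the original BIOS at times dropped below 1949 MHz, this is only an approximate figure. Frame rates generally don't scale one-to-one with core clock, because other factors such as memory bandwidth also limit performance.

```python
def percent_gain(old: float, new: float) -> float:
    """Relative increase from old to new, in percent."""
    return (new - old) / old * 100

old_boost_mhz = 1949  # sustained GPU Boost with the original BIOS (from the text)
new_boost_mhz = 2000  # sustained GPU Boost with the updated BIOS (from the text)

clock_gain = percent_gain(old_boost_mhz, new_boost_mhz)
print(f"Clock gain: {clock_gain:.1f}%")  # prints "Clock gain: 2.6%"
```

A roughly 2.6% clock increase yielding up to 2% more frames per second is therefore plausible.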

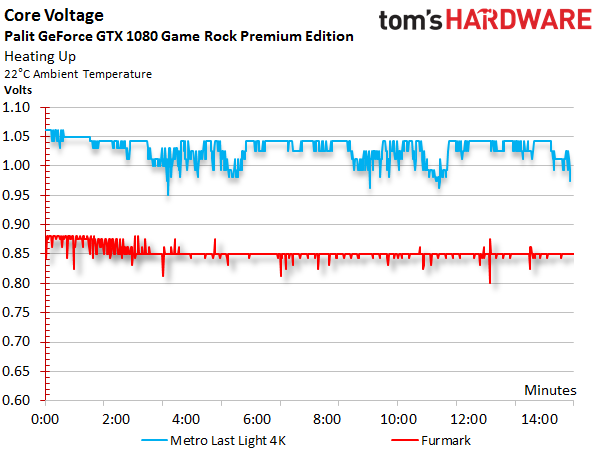

The graph for Palit's original BIOS shows voltage following frequency down over time. While we measured up to 1.062V at the beginning (just as we did with the Founders Edition), that value later drops to just below 0.962V.

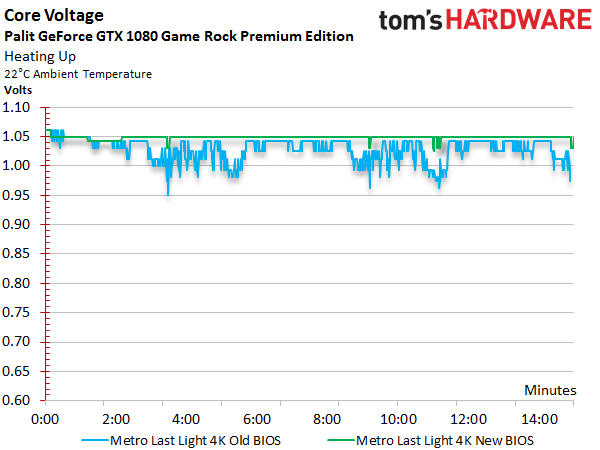

This is no longer the case with the new BIOS. Not only does the frequency remain constant, but so does voltage. The measured value stabilizes at 1.05V.

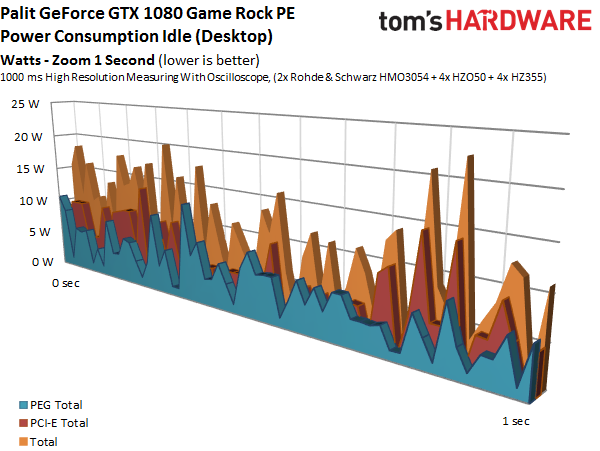

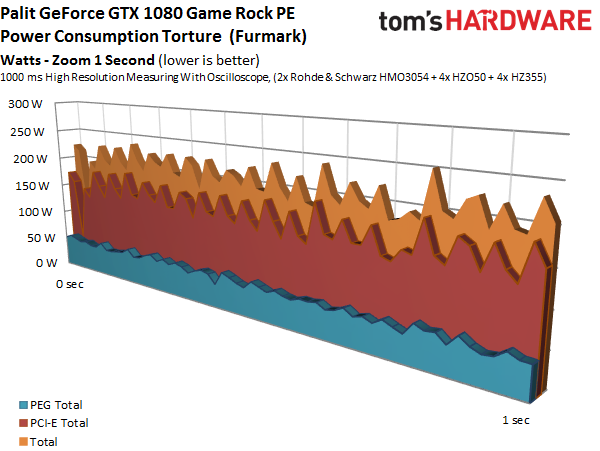

Multiplying the measured voltage and current on each rail and summing the results, we arrive at a total power consumption figure that we can easily confirm with our test equipment by monitoring the card's power connectors.
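Board power is the sum of per-rail power, with each rail contributing its voltage times its current (P = V × I). The minimal Python sketch below illustrates that calculation. The rail names and all readings are hypothetical values chosen for illustration, not measurements from this review, and a real measurement would repeat the calculation for every sample in time before averaging.

```python
from dataclasses import dataclass

@dataclass
class RailSample:
    name: str
    volts: float
    amps: float

def total_power_watts(rails: list[RailSample]) -> float:
    """Board power as the sum of per-rail power, P = V * I."""
    return sum(r.volts * r.amps for r in rails)

# Hypothetical readings for illustration only; not measured values from this review.
samples = [
    RailSample("PCIe slot 12V", 12.0, 3.0),
    RailSample("PCIe slot 3.3V", 3.3, 0.5),
    RailSample("6-pin 12V", 12.0, 6.0),
    RailSample("8-pin 12V", 12.0, 8.5),
]
total = total_power_watts(samples)
print(f"Total: {total:.1f} W")
```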

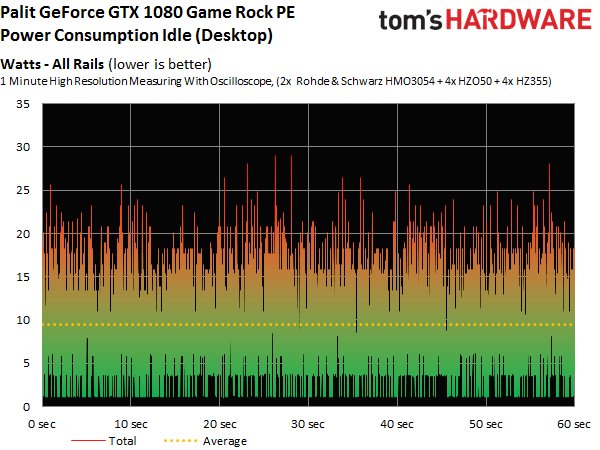

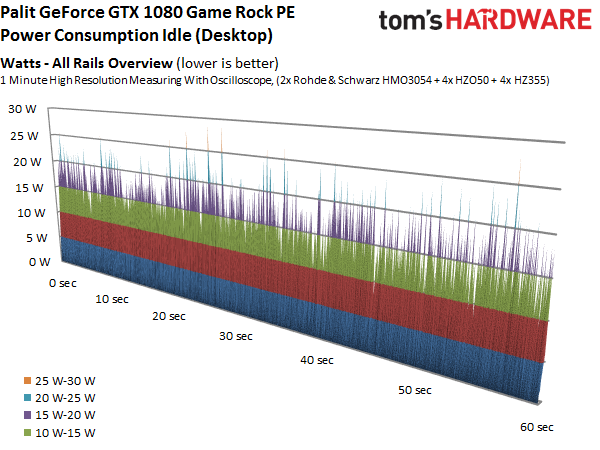

Because of Nvidia's restrictions, manufacturers sacrifice the lowest possible frequency bin in order to gain an extra GPU Boost step. As a result, the GTX 1080 GameRock Premium Edition's power consumption is slightly higher at idle. Palit sets the first step at 291 MHz, resulting in a moderate 10W reading, which is higher than competing boards like MSI's 1080 Gaming X 8G.

Also interesting is that the GameRock Premium's original BIOS only used 202W during our stress test due to its lower power target. Consumption does rise with the new BIOS, but less than expected. An extra 6W for more stable frequency and voltage curves seems like a small price to pay.

| Workload | Power Consumption |
|---|---|
| Idle | 10W |
| Idle Multi-Monitor | 11W |
| Blu-ray | 12W |
| Browser Games | 99-116W |
| Gaming (Metro Last Light at 4K) | 212W |
| Torture (FurMark) | 218W |
| Gaming (Metro Last Light at 4K) @ 2114 MHz | 202W |

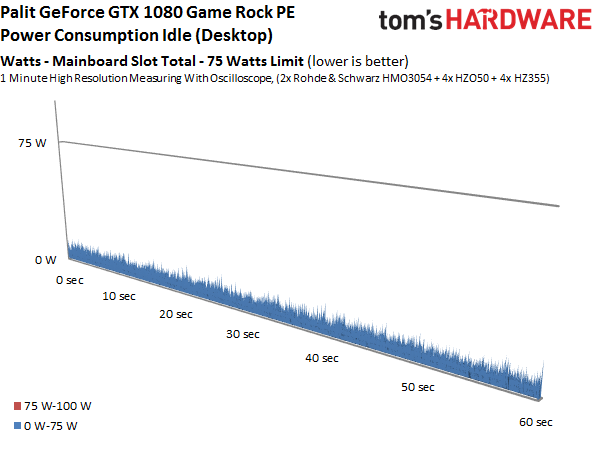

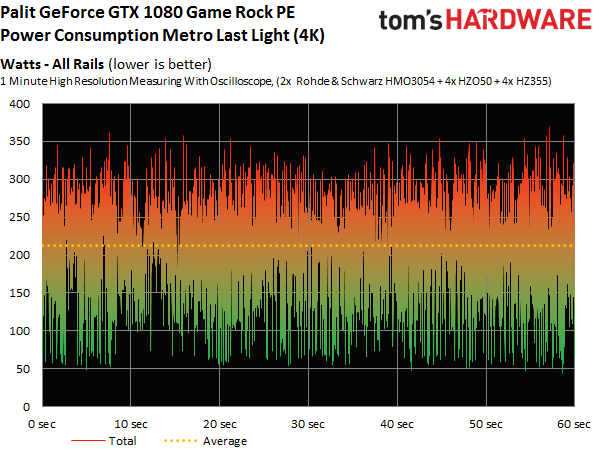

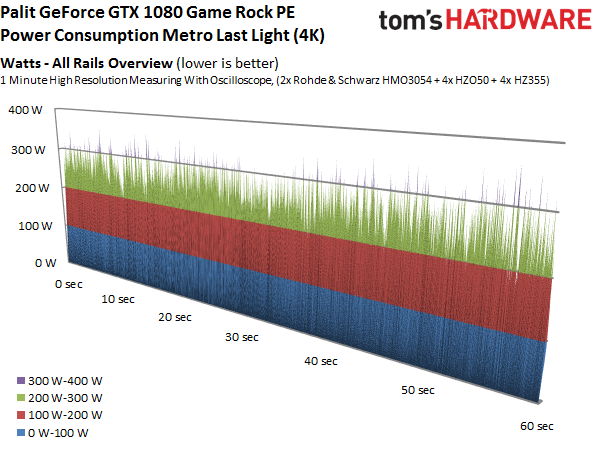

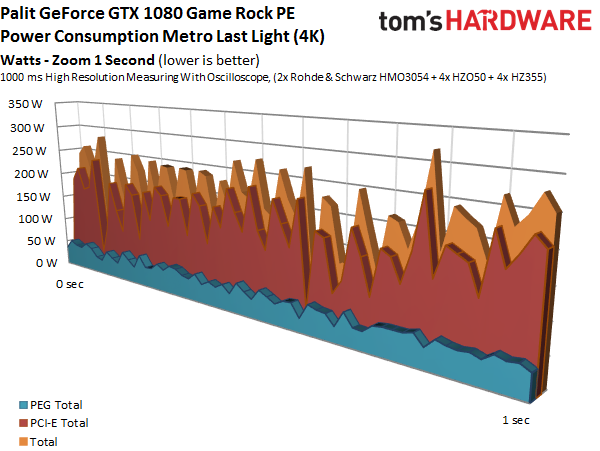

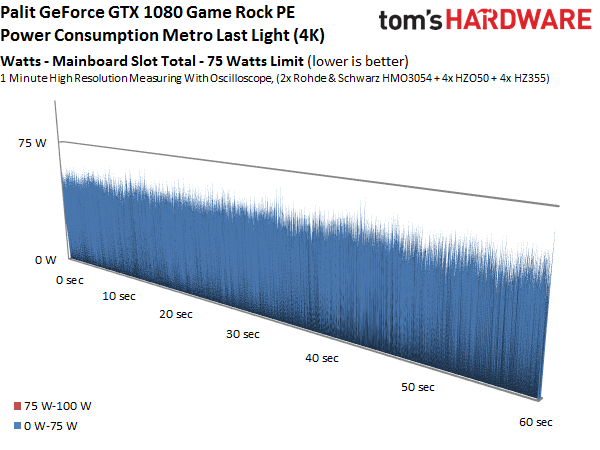

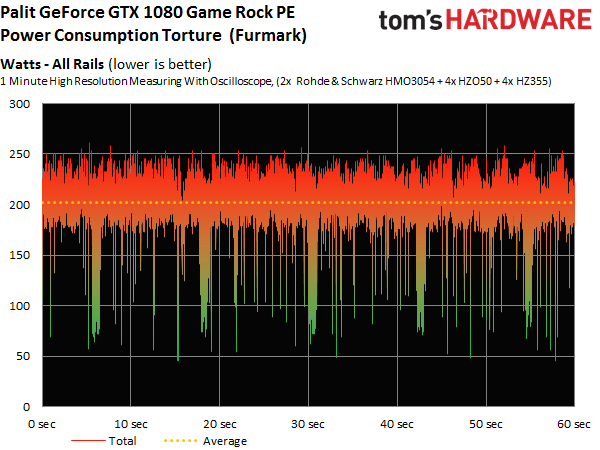

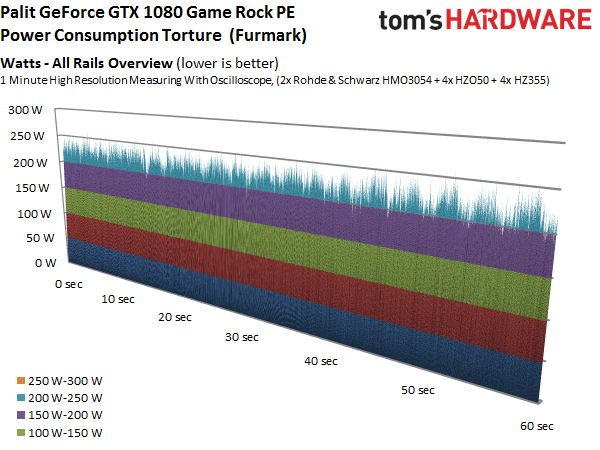

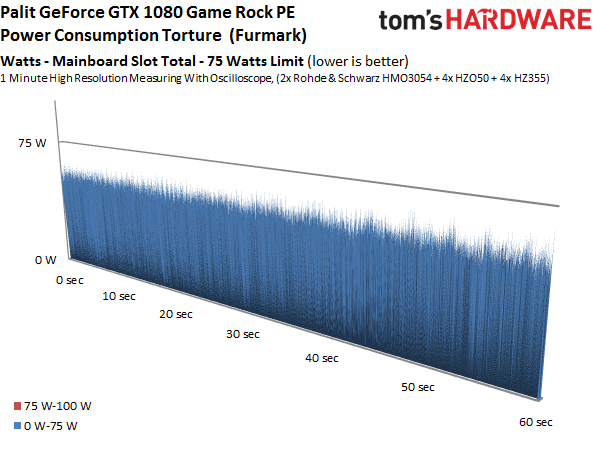

Now let's take a more detailed look at power consumption when the card is idle, when it's gaming at 4K, and during our stress test. The graphs show the distribution of load between each voltage and supply rail, providing a bird's eye view of variations and peaks:

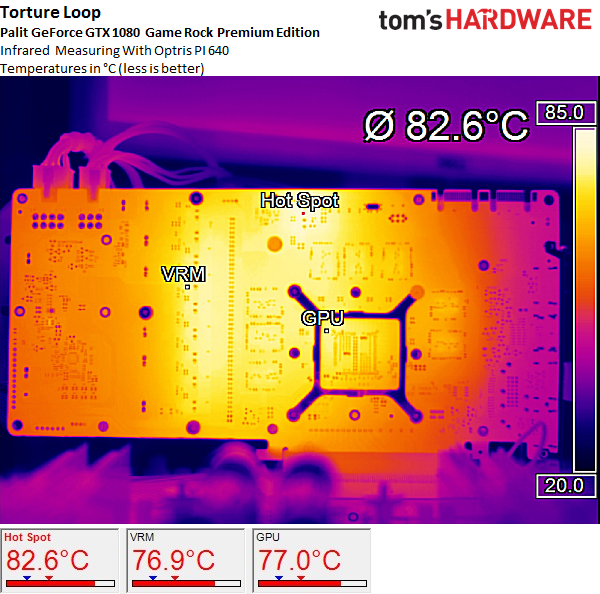

Temperature Results

Of course, waste heat needs to be dissipated as efficiently as possible. The backplate doesn't help with this at all, instead leaving the work to Palit's bulky two-and-a-half-slot cooler.

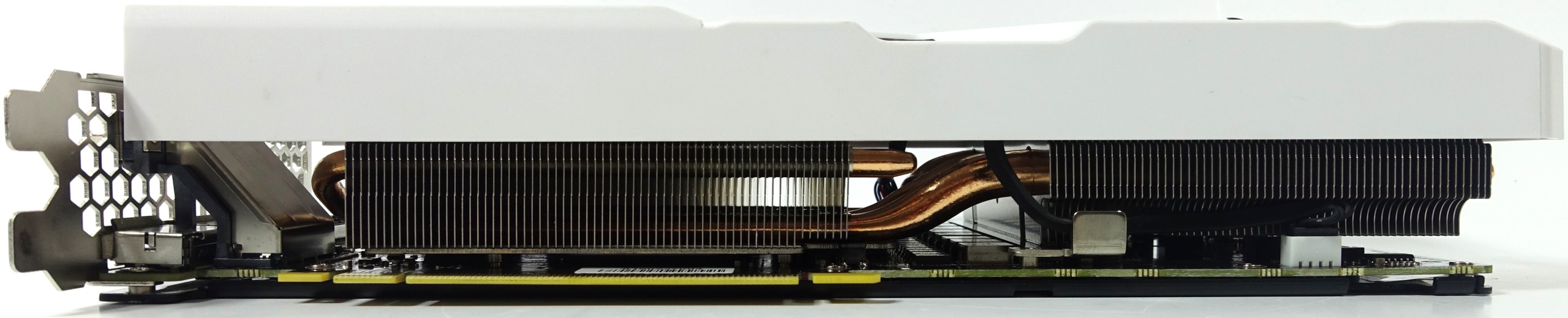

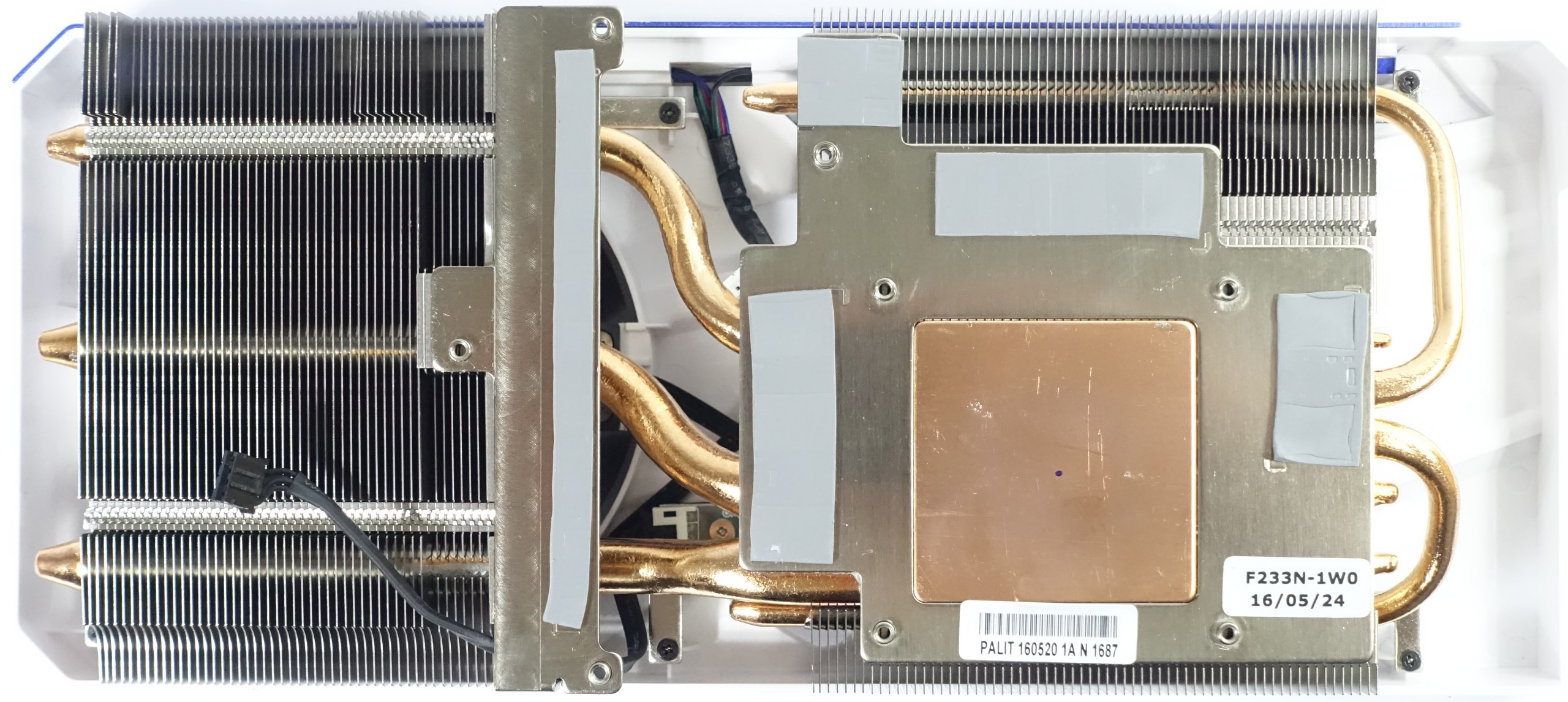

A copper sink draws heat away from the GPU and distributes it along five heat pipes (three ⅓-inch/8mm and two ¼-inch/6mm). The sink's fins are oriented vertically, which results in short heat pipes that work more efficiently. The two smaller pipes provide additional area to support the transport of thermal energy away from the copper block and towards the cooler's edges.

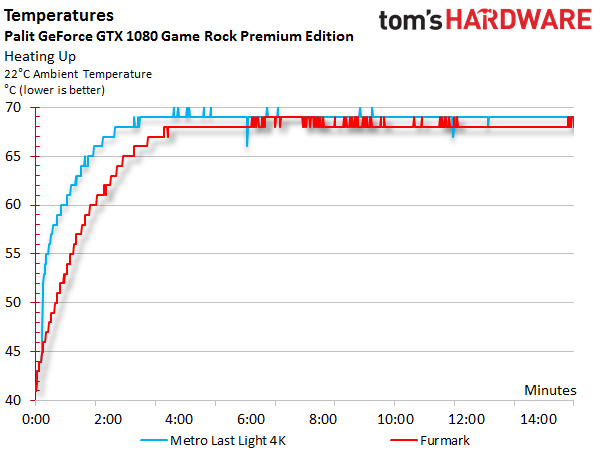

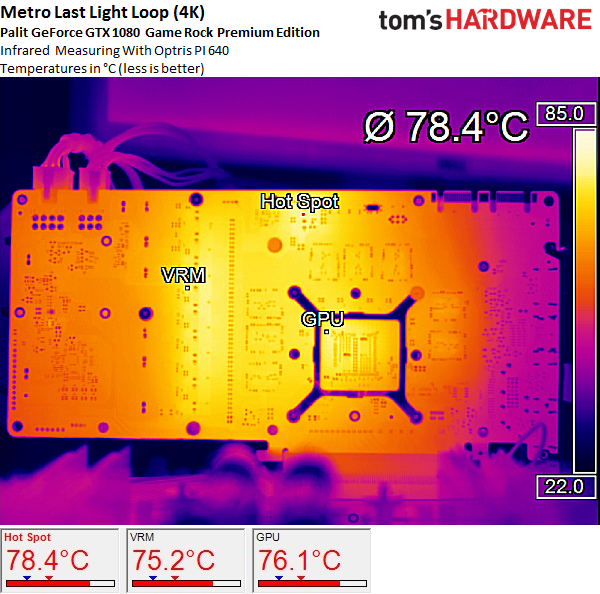

The performance of this truly monstrous cooler leaves little to be desired. Since the temperature target is set around 158°F (70°C), the fans only need to spin slowly, which should have a positive effect on our noise measurements.

Heat transfer away from the VRMs works well despite low fan speeds and minimal airflow. The massive cooler and its many fins make sure of that.

Temperatures do rise at the hot spot during our stress test, but all other areas remain cool enough.

Sound Results

In the interest of transparency, we're going to present the sound measurements generated from Palit's first GameRock Premium sample, and then show how the company addressed our concerns.

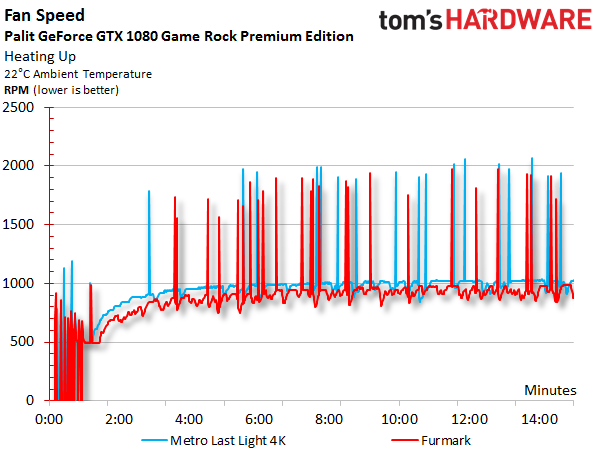

Whereas automotive enthusiasts might enjoy the roar of a well-oiled machine, we prefer the sound of silence from our graphics cards. When that silence is disturbed, the culprit is usually the fans or the coils. This card's fans top out at 1000 RPM, which doesn't seem worrisome at all.

The fan curve does uncover an unpleasant surprise, though. Because the fans start late and remain very quiet, it takes a trained ear to hear that the hysteresis doesn't really work. Palit confirmed our findings and promised a BIOS update to address the issue.
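In a semi-passive design, hysteresis means the fans switch on at a higher temperature than the one at which they switch off, so they don't cycle on and off while the GPU hovers around a single threshold. The minimal Python sketch below illustrates the general idea only; the class name and the 60°C/50°C thresholds are hypothetical and not taken from Palit's firmware.

```python
class SemiPassiveFan:
    """Hypothetical semi-passive fan controller with hysteresis.

    The fan starts only at or above start_c and stops only at or below stop_c,
    where stop_c < start_c. The gap between the two thresholds keeps the fan
    from toggling repeatedly when the temperature hovers near one value.
    """

    def __init__(self, start_c: float, stop_c: float) -> None:
        if stop_c >= start_c:
            raise ValueError("stop threshold must be below start threshold")
        self.start_c = start_c
        self.stop_c = stop_c
        self.spinning = False

    def update(self, temp_c: float) -> bool:
        """Feed one temperature reading; return whether the fan is spinning."""
        if not self.spinning and temp_c >= self.start_c:
            self.spinning = True
        elif self.spinning and temp_c <= self.stop_c:
            self.spinning = False
        return self.spinning

# Illustrative thresholds only; Palit's actual values are not given in this review.
fan = SemiPassiveFan(start_c=60.0, stop_c=50.0)
states = [fan.update(t) for t in [45, 58, 61, 59, 55, 51, 49, 55]]
print(states)  # [False, False, True, True, True, True, False, False]
```

Note that the fan keeps spinning at 59°C and 55°C after starting at 61°C; with a single 60°C threshold and no hysteresis, it would have stopped immediately at 59°C.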

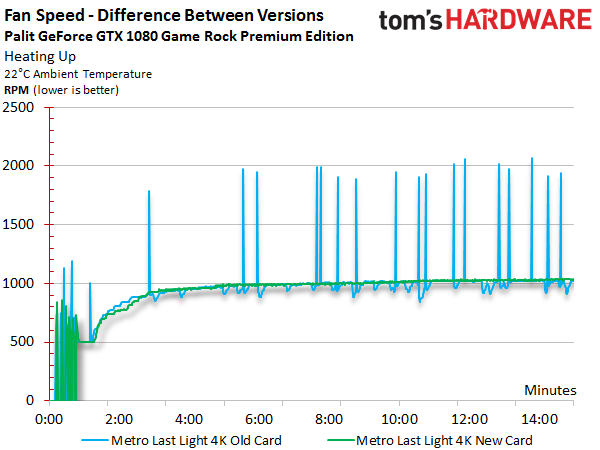

We also talked to Palit's R&D department about the spikes in this chart. They may not be audible due to the rotor blades' inertia, but we certainly measured them. In response, Palit decided to replace its fan modules, which caused only a brief interruption in production. The result speaks for itself: the new card (green curve) no longer exhibits any abnormal behavior.

When the card is idle, its noise is not measurable since Palit implements a semi-passive mode. Naturally, there's no reason to try taking measurements in that state.

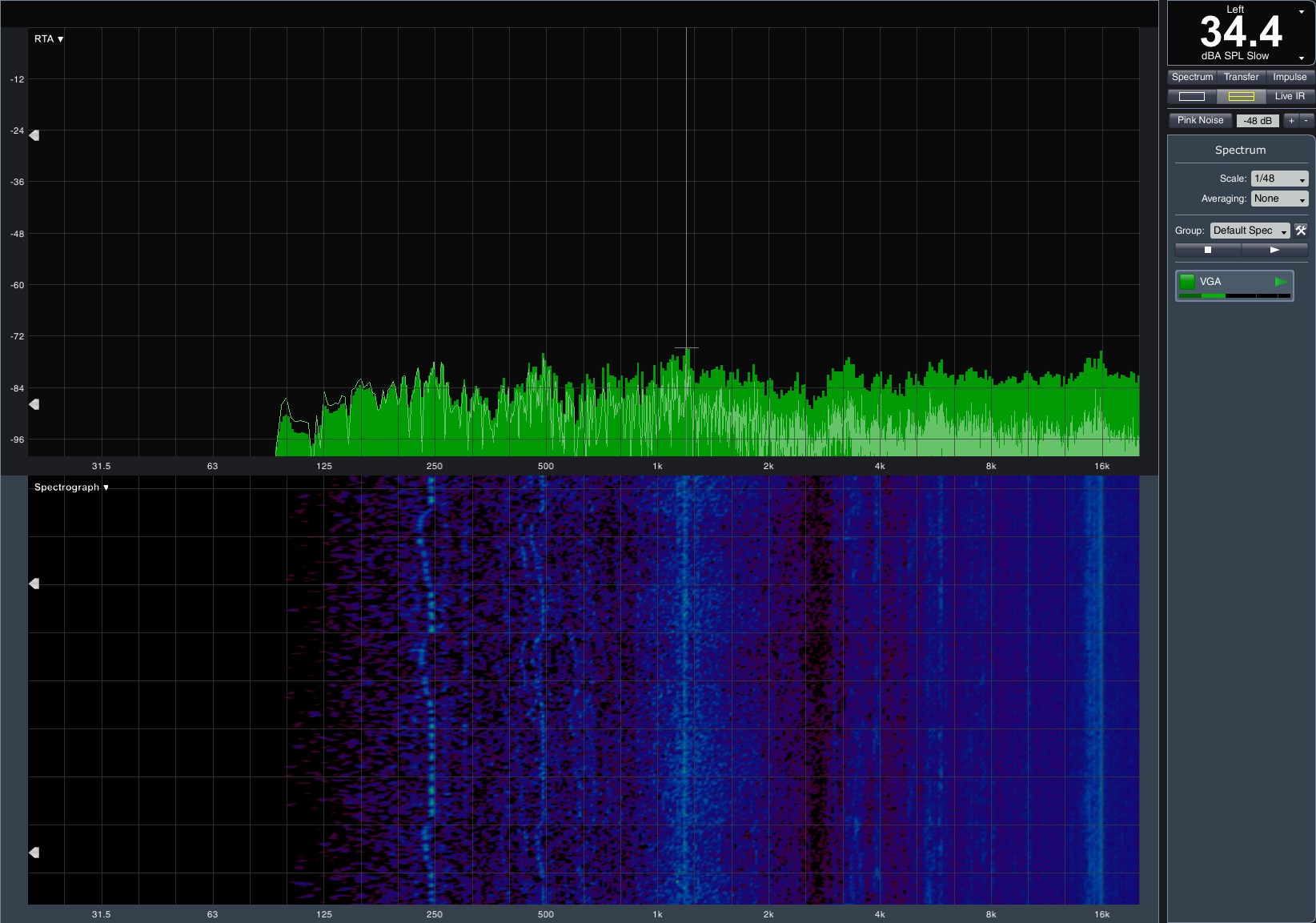

The values we measured under load are excellent, and Palit's fixes yield a purring kitten instead of a growling tiger. Readings around 34.4 dB(A) are great given the temperatures involved. Only the low-budget coils stand out a bit; if it weren't for their audible chirp, you might not even know the GameRock Premium was running.

The new BIOS met our expectations, stabilizing clock rates and voltages. A power consumption increase of roughly 6W doesn't cause higher temperatures or more noise, so we're fine with it.

Our Impression, Post-Update

Palit made the right move by replacing its fan modules and pushing out a new BIOS. The unpleasant RPM spikes disappeared, and so did the noise from the fans' ball bearings. We weren't the only ones to notice these issues; they were mentioned in the forums, too. But because they stemmed from variations in production quality, early adopters whose cards don't show the idiosyncrasies we spotted may simply have gotten lucky.

The new BIOS doesn't just provide a little extra performance; it also delivers slightly smoother gameplay, since GPU Boost holds clock rates steady instead of jumping around. As of 10/28/16, the updated BIOS is available on Palit's website for anyone who already owns this card.


Igor Wallossek wrote a wide variety of hardware articles for Tom's Hardware, with a strong focus on technical analysis and in-depth reviews. His contributions have spanned a broad spectrum of PC components, including GPUs, CPUs, workstations, and PC builds. His insightful articles provide readers with detailed knowledge to make informed decisions in the ever-evolving tech landscape.


ledhead11: Love the article!

I'm really happy with my 2 xtreme's. Last month I cranked our A/C to 64f, closed all vents in the house except the one over my case and set the fans to 100%. I was able to game with the 2-2.1ghz speed all day at 4k. It was interesting to see the GPU usage drop a couple % while fps gained a few @ 4k and able to keep the temps below 60c.

After it was all said and done though, the noise wasn't really worth it. Stock settings are just barely louder than my case fans and I only lose 1-3fps @ 4k over that experience. Temps almost never go above 60c in a room around 70-74f. My mobo has the 3 spacing setup which I believe gives the cards a little more breathing room.

The zotac's were actually my first choice but gigabyte made it so easy on amazon and all the extra stuff was pretty cool.

I ended up recycling one of the sli bridges for my old 970's since my board needed the longer one from Nvidia. All in all a great value in my opinion.

One bad thing I forgot to mention, and it's in many customer reviews, videos, and a fair number of images: bent fins on a corner of the card. The foam packaging slightly bends one of the corners on the cards. You see it right when you open the box. Very easily fixed and happened on both of mine. To me, not a big deal, but again worth mentioning.

redgarl: The EVGA FTW is a piece of garbage! The video signal drops randomly and makes my PC crash on Windows 10. Not only that, but my first card blew up after 40 days. I am on my second one and I am getting rid of it as soon as Vega is released. EVGA dropped the ball hard on this card. Their engineering design and quality assurance are as bad as Gigabyte's. This card's VRAM literally burns over time. My only hope is waiting a year and RMAing the damn thing so I can get another model. The only good thing is the customer support... they take care of you.

Nuckles_56: What I would have liked to have seen was a list of the maximum overclocks each card got for core and memory and the temperatures achieved by each cooler.

Hupiscratch: It would be good if they get rid of the DVI connector. It blocks a lot of airflow on a card that's already critical on cooling. Almost nobody that's buying this card will use the DVI anyway.

Nuckles_56, quoting the comment above: "It would be good if they get rid of the DVI connector. It blocks a lot of airflow on a card that's already critical on cooling. Almost nobody that's buying this card will use the DVI anyway."

Two things here: most of the cards don't vent air out through the rear bracket anyway due to the direction of the cooling fins on the cards. Plus, there are going to be plenty of people out there using these cards with the cheap Korean 1440p monitors that only have DVI inputs.

ern88: I have the Gigabyte GTX 1080 G1 and I think it's a really good card. Can't go wrong with buying it.

The best card out of the box is eVGA FTW. I am running two of them in SLI under Windows 7, and they run freaking cool. No heat issue whatsoever.


Mike_297: I agree with 'THESILVERSKY'. Why no Asus cards? According to various reviews, their Strix line includes some of the quietest cards going!

trinori: LOL you didnt include the ASUS STRIX OC ?!?

well you just voided the legitimacy of your own comparison/breakdown post didnt you...

"hey guys, here's a cool comparison of all the best 1080's by price and performance so that you can see which is the best card, except for some reason we didnt include arguably the best performing card available, have fun!"

lol please..