Nvidia GeForce GTX 1080 Graphics Card Roundup

Nvidia GeForce GTX 1080 Founders Edition


The GeForce GTX 1080 Founders Edition is Nvidia's in-house take on its current desktop flagship. Despite an emphasis on craftsmanship in the company's marketing materials and generally improved efficiency, the reference design has a hard time justifying its high price against compelling solutions from add-in board partners. This is doubly true because the restrictive power target of just 180W and the 176°F (80°C) temperature target are real limitations.

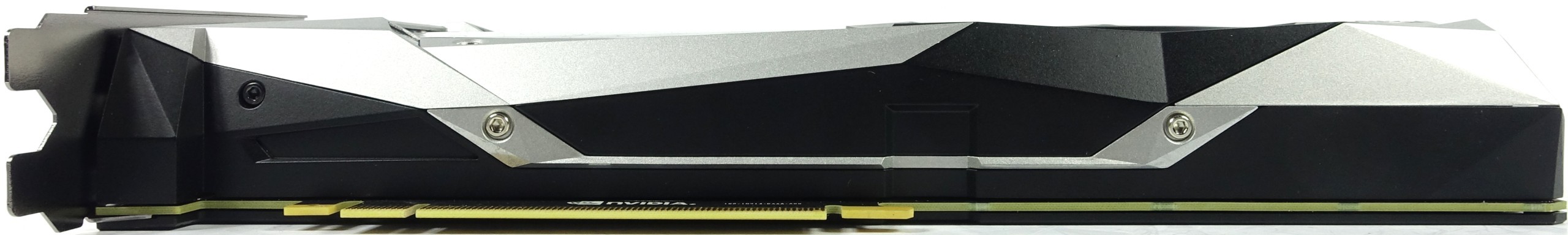

As with the GeForce GTX 1070 Founders Edition, Nvidia chose a mechanical-looking design and a radial fan. The card's true two-slot form factor also makes it a good option in multi-GPU machines.

Technical Specifications


Exterior & Interfaces

The GeForce GTX 1080 Founders Edition's shroud is made of injection-molded aluminum, colored both silver and black. The metal cover conveys plenty of quality, but also results in a weight of more than 35 ounces (one kilogram). This 1080 and Nvidia's GeForce GTX 1070 Founders Edition weigh almost the same (the 1080 is just one-third of an ounce, or 10 grams, heavier).

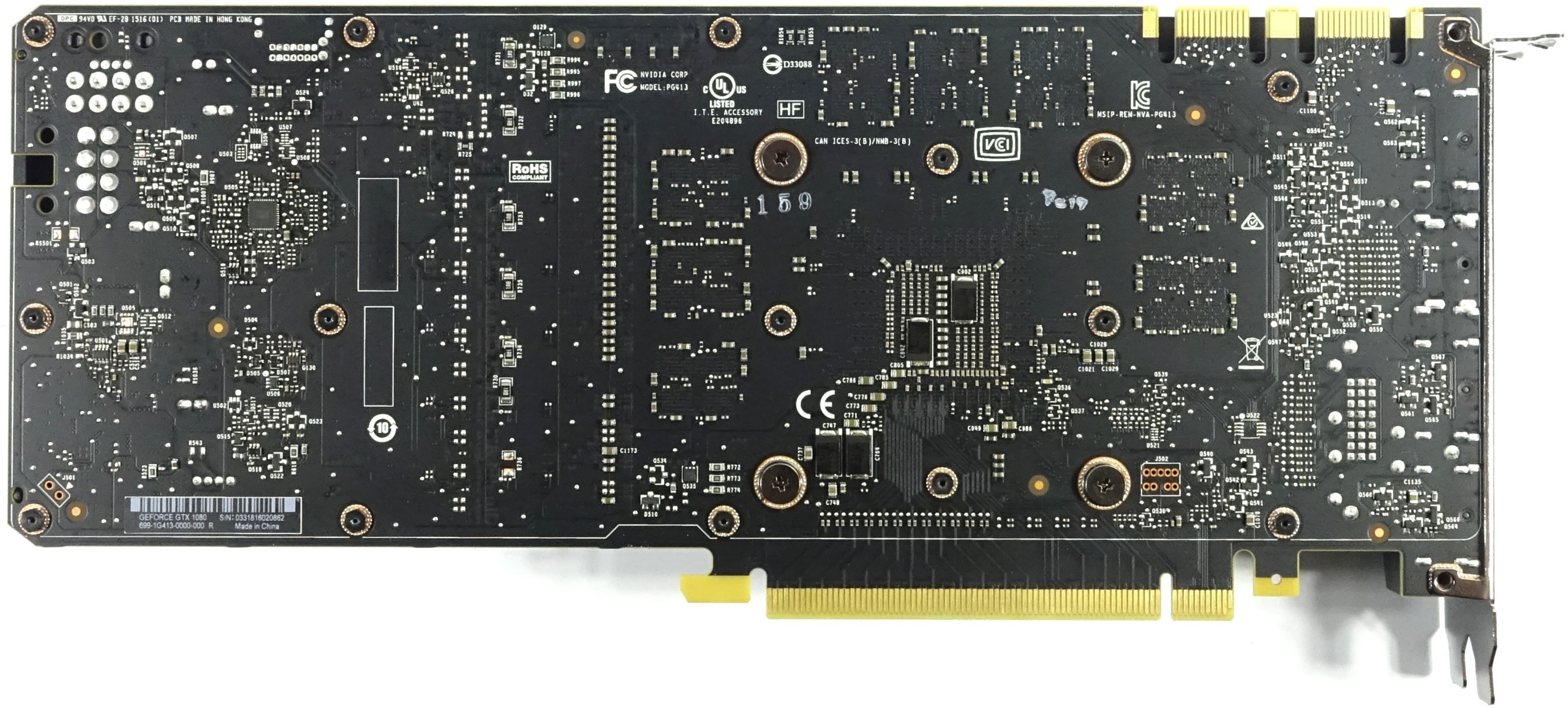

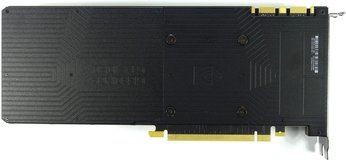

Around back, the board is covered by a two-piece plate that serves no practical purpose other than to facilitate a more finished appearance. If you want to increase airflow in a multi-card configuration with 1080s back to back, the plate can be unscrewed without causing a problem.

The top of the card is dominated by a glowing, green GeForce GTX logo. The eight-pin auxiliary power connector is positioned at the end of the card. The sharp-edged and mechanical design may be a matter of taste, but it certainly is distinctive.

A peek at the card's back reveals fins and a mounting frame. Three screw holes are provided for attaching brackets to stabilize the card in a case.

Five outputs populate the rear bracket, four of which can be used simultaneously in a multi-monitor setup. You get one dual-link DVI-D connector, one HDMI 2.0b port, and three DisplayPort 1.4 outputs. The rest of the plate is ventilated to maximize exhaust flow.

Board & Components

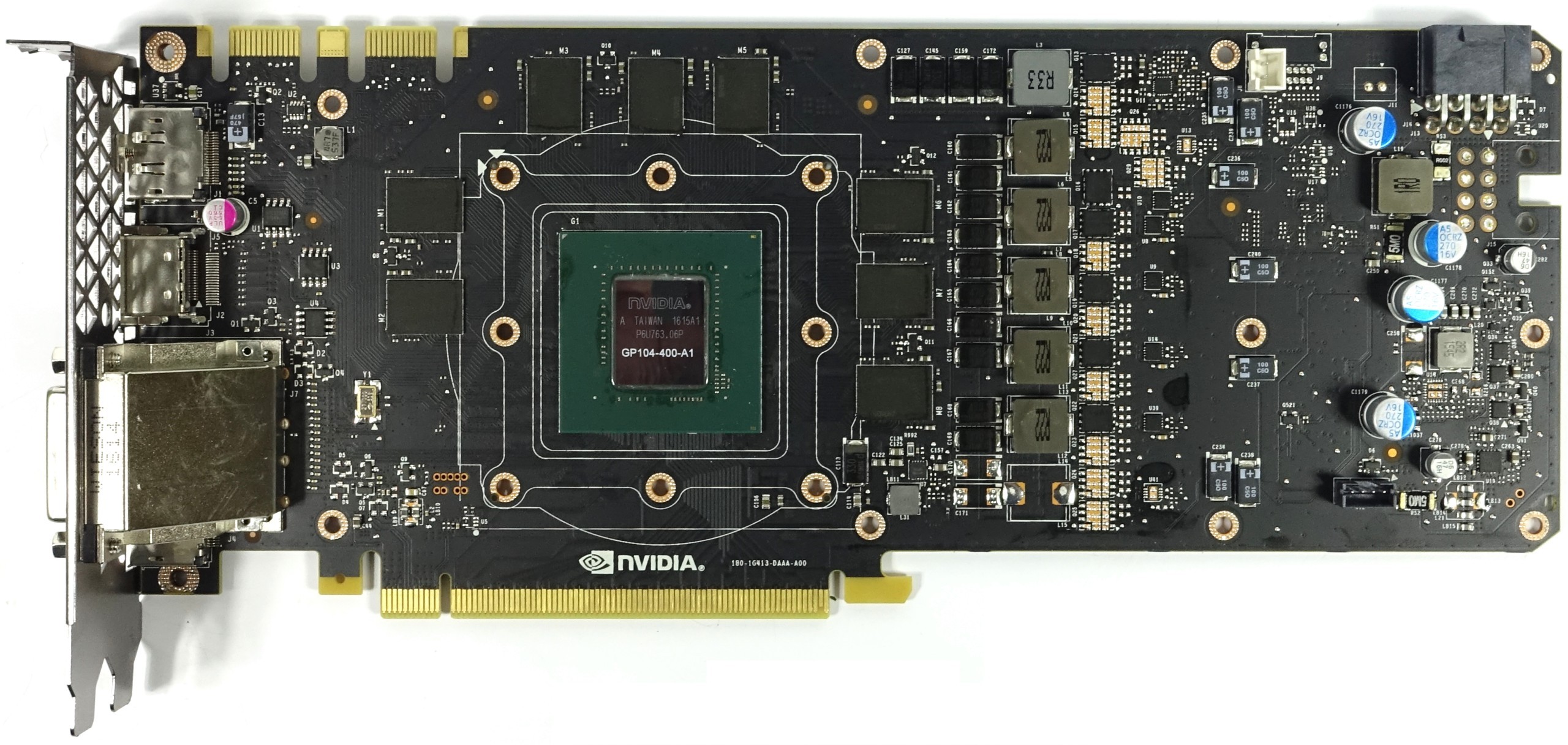

A glance at the PCB reveals that it offers significantly more space than is actually used. In addition to one power phase for the memory, five of the six possible phases for the GPU are implemented. There's even space for an extra power connector, if it's needed.

As you no doubt know, Nvidia taps GDDR5X memory from Micron for this board. Eight of these memory chips operating at 1251 MHz are connected to the GPU through an aggregate 256-bit interface, enabling a theoretical bandwidth of 320 GB/s.
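The 320 GB/s figure follows directly from the memory clock and the bus width. Here is a minimal sketch of that arithmetic, assuming the reported 1251 MHz clock corresponds to eight transfers per clock per pin (roughly 10 Gb/s per pin); the multiplier and the function name are assumptions for illustration, not something the review states.

```python
def memory_bandwidth_gb_s(memory_clock_mhz: float,
                          bus_width_bits: int,
                          transfers_per_clock: int = 8) -> float:
    """Theoretical peak memory bandwidth in GB/s.

    transfers_per_clock=8 is an assumption: it treats the reported
    1251 MHz clock as yielding about 10 Gb/s per pin.
    """
    data_rate_mt_s = memory_clock_mhz * transfers_per_clock    # mega-transfers/s per pin
    bits_per_second = data_rate_mt_s * 1e6 * bus_width_bits    # across the whole bus
    return bits_per_second / 8 / 1e9                           # bits -> bytes -> GB


if __name__ == "__main__":
    # Values from the review: 1251 MHz, 256-bit aggregate interface
    print(f"{memory_bandwidth_gb_s(1251, 256):.1f} GB/s")
```

The result lands just above 320 GB/s, which matches the rounded figure quoted above.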

The 5+1-phase system relies on the sparsely documented µP9511P PWM controller. Since this controller can't communicate directly with the VRM's phases, Nvidia uses 53603A chips as PWM drivers (gate drivers) to control the power MOSFETs (primarily of type 4C85N).

Two capacitors are installed right below the GPU to absorb and equalize peaks in voltage. The board design looks tidy and well thought-out.

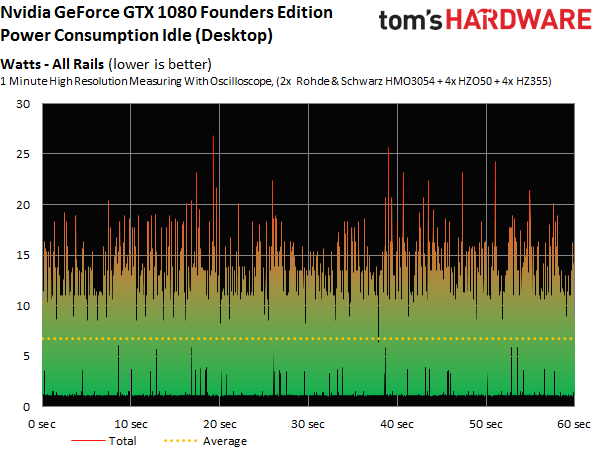

Power Results

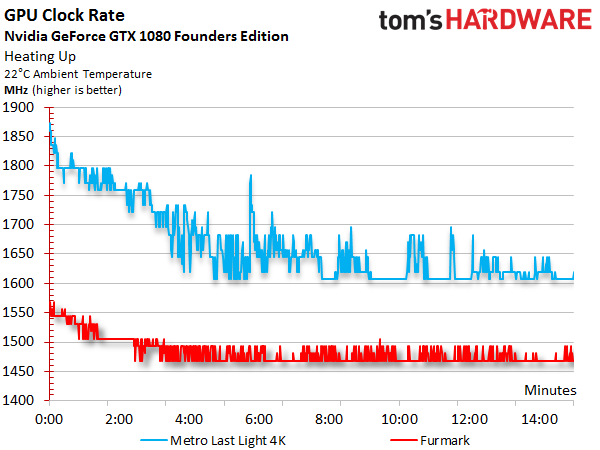

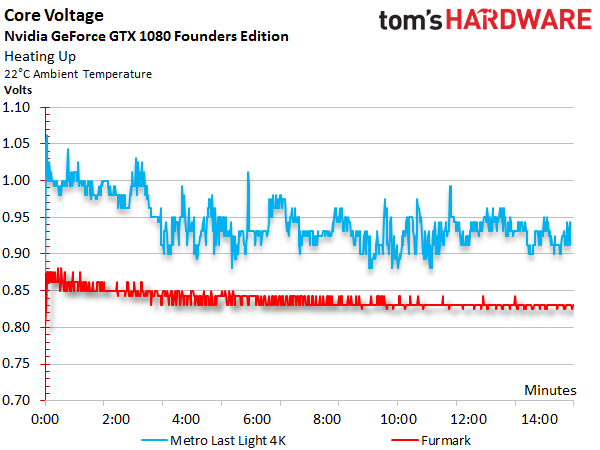

Before we look at power consumption, we should talk about the correlation between GPU Boost frequency and core voltage, which are so similar that we decided to put their graphs one on top of the other. This also shows that both curves drop as the GPU's temperature rises.

After warm-up and under load, the GPU Boost clock rate at times drops to GP104's base frequency of 1.607 GHz. This is mirrored in our voltage measurements. While we measured up to 1.062V at first, that number temporarily drops as low as 0.881V.
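To put the voltage drop in perspective, the sketch below computes the relative change between the two measured voltages. It also estimates how much dynamic power would shrink under the common first-order CMOS approximation P ∝ V²·f. That model is an assumption brought in for illustration (it ignores leakage and is not part of the review's methodology), and the function names are hypothetical.

```python
def voltage_drop_percent(v_start: float, v_low: float) -> float:
    """Relative voltage drop in percent."""
    return (v_start - v_low) / v_start * 100


def relative_dynamic_power(v_start: float, v_low: float,
                           frequency_ratio: float = 1.0) -> float:
    """Dynamic power at v_low relative to v_start.

    Assumes the first-order model P ~ V^2 * f. frequency_ratio defaults
    to 1.0, which isolates the effect of the voltage change alone.
    """
    return (v_low / v_start) ** 2 * frequency_ratio


if __name__ == "__main__":
    # Voltages measured in the review
    v_start, v_low = 1.062, 0.881
    print(f"Voltage drop: {voltage_drop_percent(v_start, v_low):.1f}%")
    print(f"Voltage-only power factor: {relative_dynamic_power(v_start, v_low):.2f}")
```

Under that assumption, the voltage change alone would account for a dynamic power reduction of roughly 30 percent, before any clock rate change is considered.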

Combining the measured voltages and currents allows us to derive a total power consumption we can easily confirm with our instrumentation by taking readings at the card's power connectors. In fact, let's start with the measured power consumption values in the following table:
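Deriving total power from voltages and currents amounts to summing V × I over every supply rail. The sketch below shows the idea; the rail names and sample readings are hypothetical placeholders, not measurements from this review.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class RailSample:
    """One simultaneous voltage/current reading on a supply rail."""
    name: str
    volts: float
    amps: float

    @property
    def watts(self) -> float:
        return self.volts * self.amps


def total_power_watts(samples: Iterable[RailSample]) -> float:
    """Total board power as the sum of V * I across all rails."""
    return sum(sample.watts for sample in samples)


# Hypothetical readings for illustration only
EXAMPLE_SAMPLES = [
    RailSample("PCIe slot 3.3V", 3.3, 0.5),
    RailSample("PCIe slot 12V", 12.0, 3.0),
    RailSample("8-pin auxiliary 12V", 12.0, 10.0),
]

if __name__ == "__main__":
    for sample in EXAMPLE_SAMPLES:
        print(f"{sample.name}: {sample.watts:.2f} W")
    print(f"Total: {total_power_watts(EXAMPLE_SAMPLES):.2f} W")
```

In practice the readings would be sampled at high frequency and averaged over a time window, since instantaneous values fluctuate considerably.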

| Power Consumption | |

|---|---|

| Idle | 7W |

| Idle Multi-Monitor | 10W |

| Blu-ray | 11W |

| Browser Games | 94-113W |

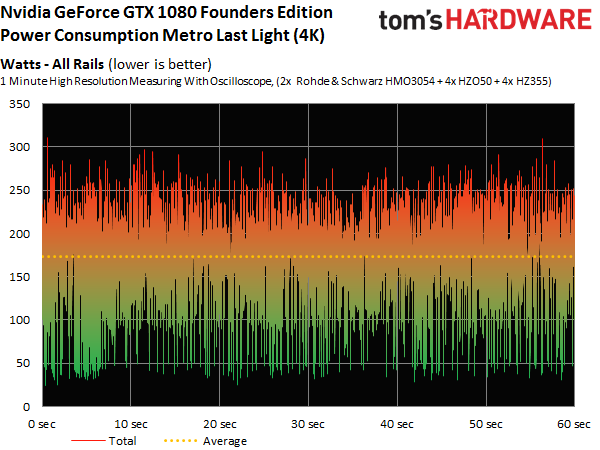

| Gaming (Metro Last Light at 4K) | 173W |

| Torture (FurMark) | 177W |
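The two load figures are easier to judge against the card's 180W power target. A small sketch of that comparison, using only the numbers quoted in this review (the constant and function names are illustrative):

```python
POWER_TARGET_W = 180  # power target quoted in the review

# Measured load figures from the table above
MEASURED_W = {
    "Gaming (Metro Last Light at 4K)": 173,
    "Torture (FurMark)": 177,
}


def utilization_percent(measured_w: float, target_w: float = POWER_TARGET_W) -> float:
    """Share of the power target that a workload consumes, in percent."""
    return measured_w / target_w * 100


def headroom_w(measured_w: float, target_w: float = POWER_TARGET_W) -> float:
    """Remaining watts before the power target is reached."""
    return target_w - measured_w


if __name__ == "__main__":
    for workload, watts in MEASURED_W.items():
        print(f"{workload}: {utilization_percent(watts):.1f}% of target, "
              f"{headroom_w(watts)} W headroom")
```

Both workloads sit within a few watts of the target, which is consistent with the power limit acting as a real constraint.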

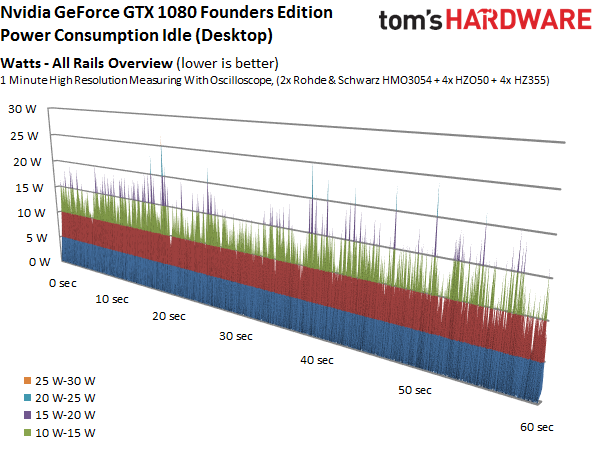

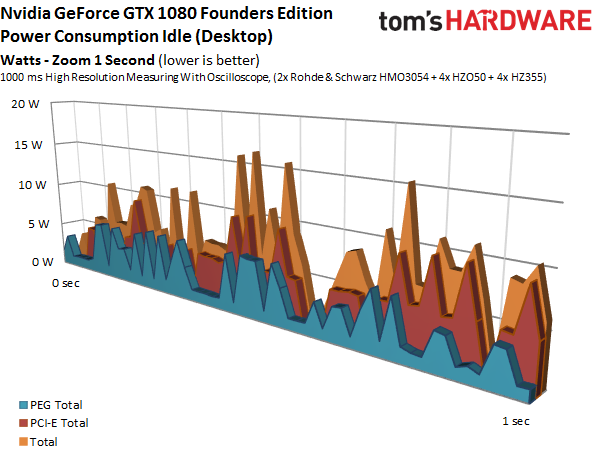

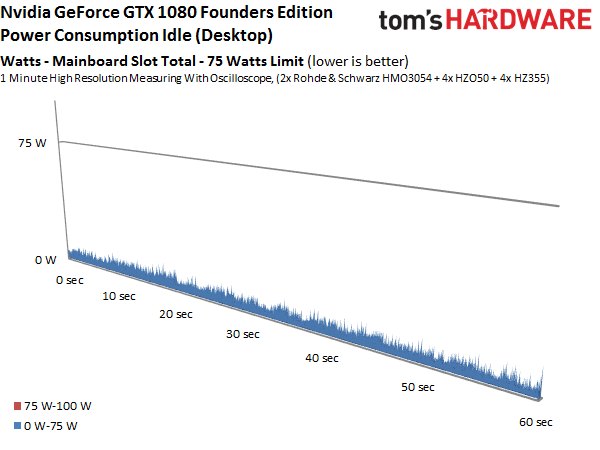

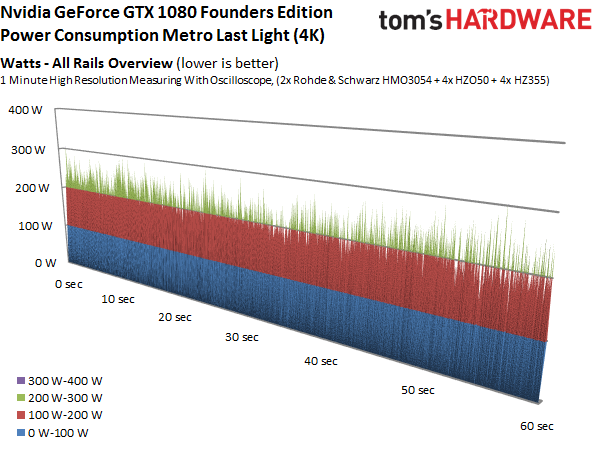

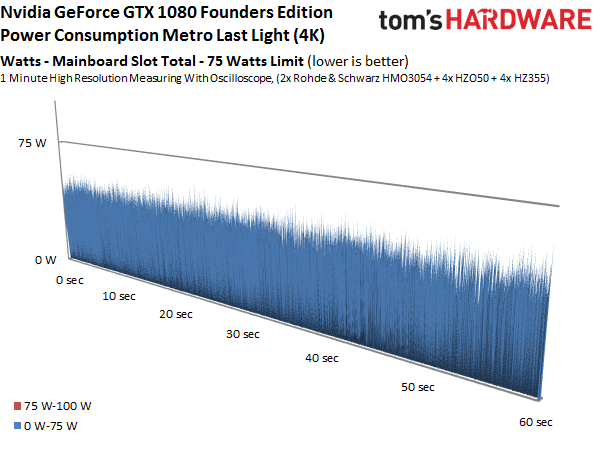

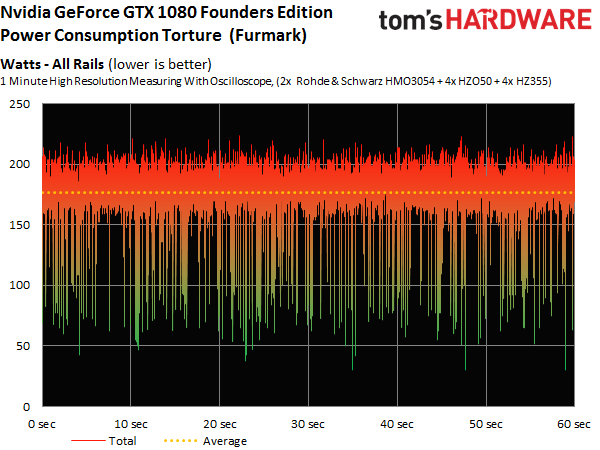

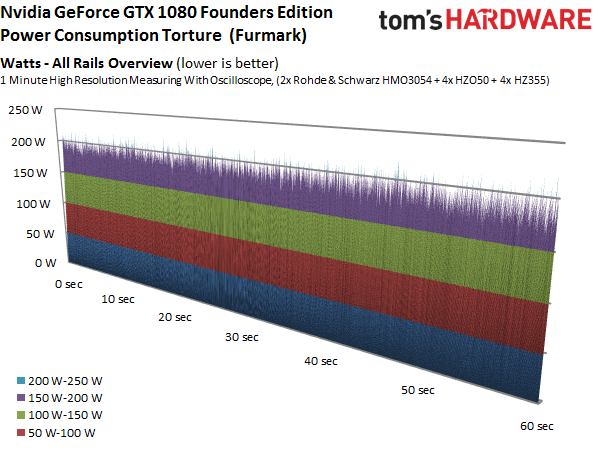

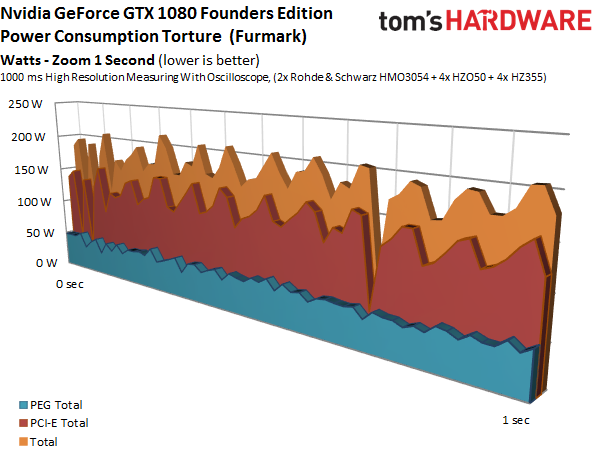

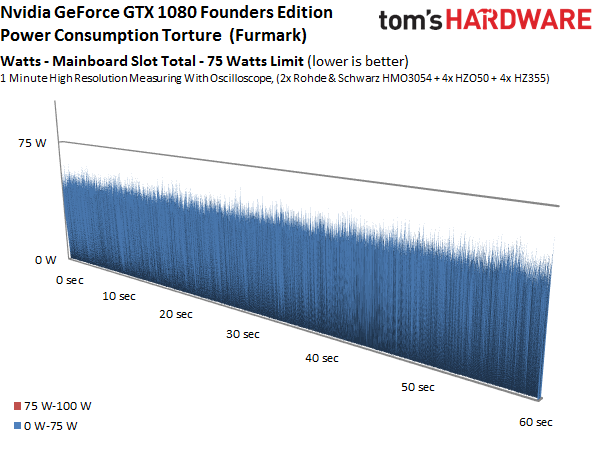

These charts go into more detail on power consumption at idle, during 4K gaming, and under the effects of our stress test. The graphs show how load is distributed between each voltage and supply rail, providing a bird's eye view of load variations and peaks.

Temperature Results

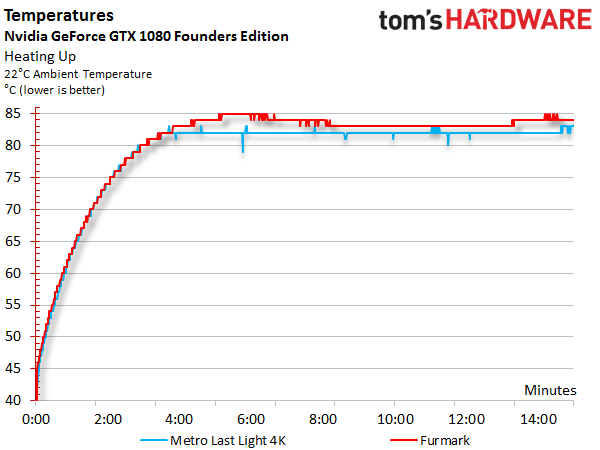

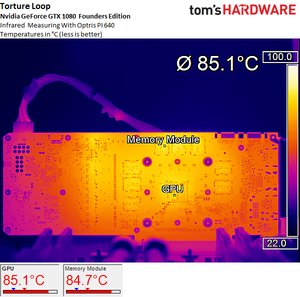

To dissipate the GP104 GPU's waste heat, Nvidia's GeForce GTX 1080 Founders Edition employs a real vapor chamber solution. The 1070 Founders Edition does not benefit from this same technology. The vapor chamber is a compact module that's attached to the PCB via four screws and positioned over the GPU package.

A radial fan pulls air from inside the chassis and blows it across heat sink fins on the vapor chamber, exhausting that air through the output bracket. The mounting frame is not only used to stabilize the card, but it also helps cool the voltage regulators and memory ICs.

The vapor chamber's performance is slightly better than the 1070 Founders Edition's copper heat sink, as you might expect. Still, temperatures rise to almost 185°F (85°C) during our stress test and 180°F (82°C) during the gaming loop. Then again, that's hardly shocking since the 1080 is rated 30W higher.
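Since temperatures are given in both units throughout, a quick conversion helper makes the figures easy to cross-check. This is a plain unit conversion with an illustrative function name; the Celsius inputs are the readings quoted above.

```python
def celsius_to_fahrenheit(celsius: float) -> float:
    """Convert a temperature from degrees Celsius to degrees Fahrenheit."""
    return celsius * 9 / 5 + 32


if __name__ == "__main__":
    # Celsius readings quoted in the review
    for label, celsius in [("Stress test", 85), ("Gaming loop", 82)]:
        print(f"{label}: {celsius}°C = {celsius_to_fahrenheit(celsius):.1f}°F")
```

The gaming loop value works out to just under 180°F, so the rounded figure in the text holds.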

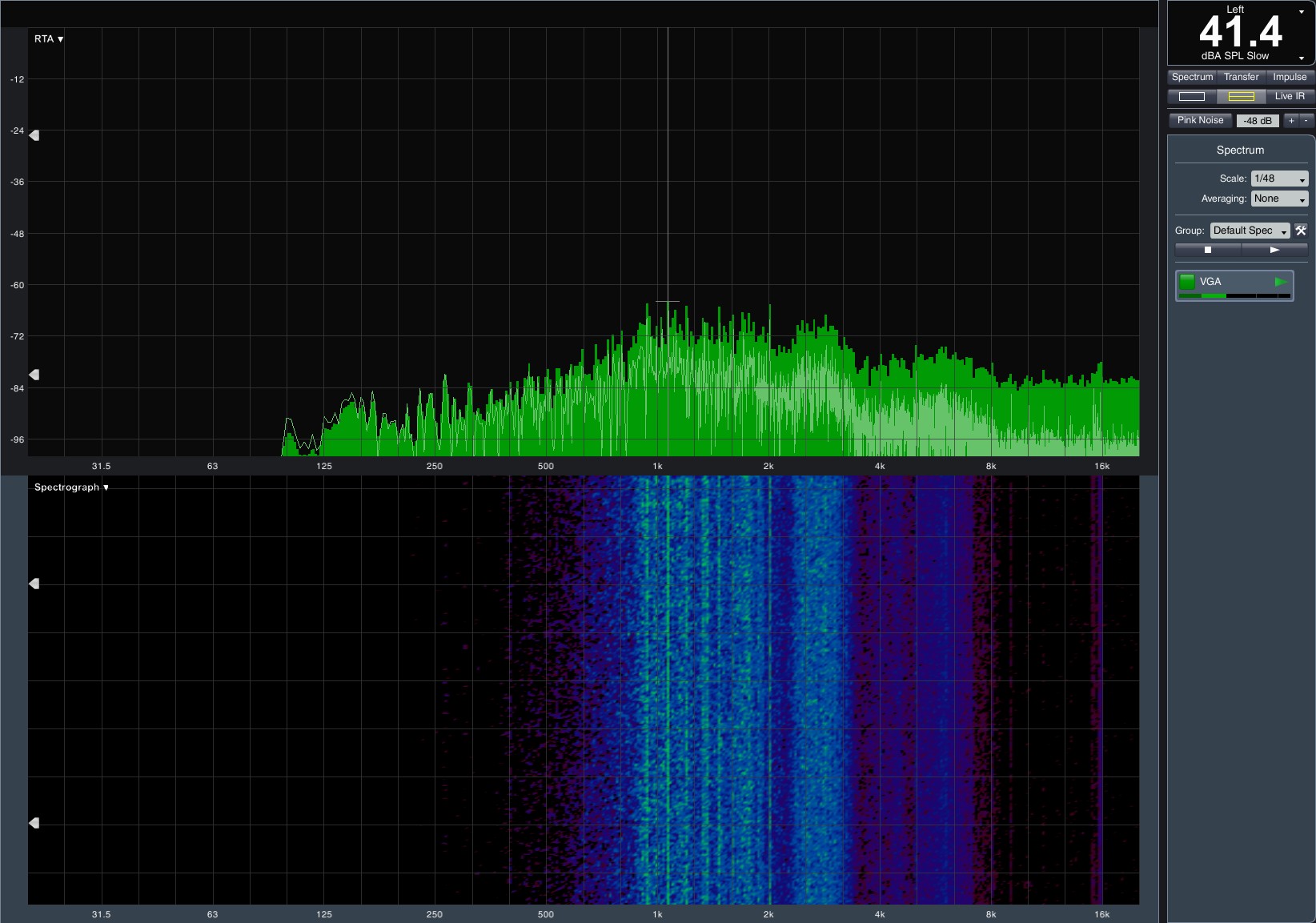

Sound Results

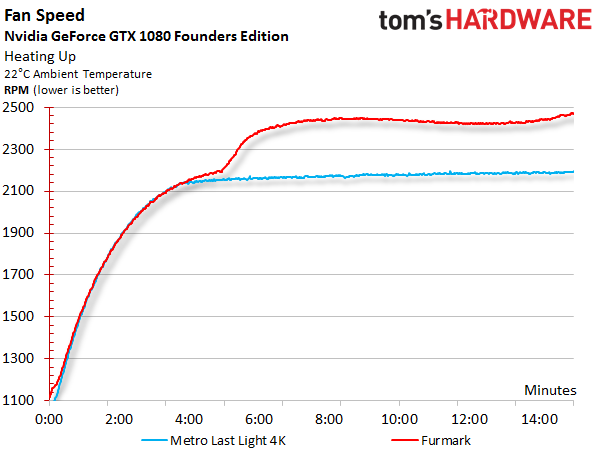

Initially, both fan speed curves stay relatively close to one another. But at a certain point in our benchmark, FurMark forces the RPM measurement to burst much higher. During the more real-world gaming loop, however, the fan cruises along just above 2100 RPM.

Noise levels are relatively low when the card is idle, even if the fan's sound has a slightly snarly character. Nvidia doesn't gift the 1080 Founders Edition with a semi-passive mode. But then again, it wouldn't be particularly useful on a card with a radial fan anyway.

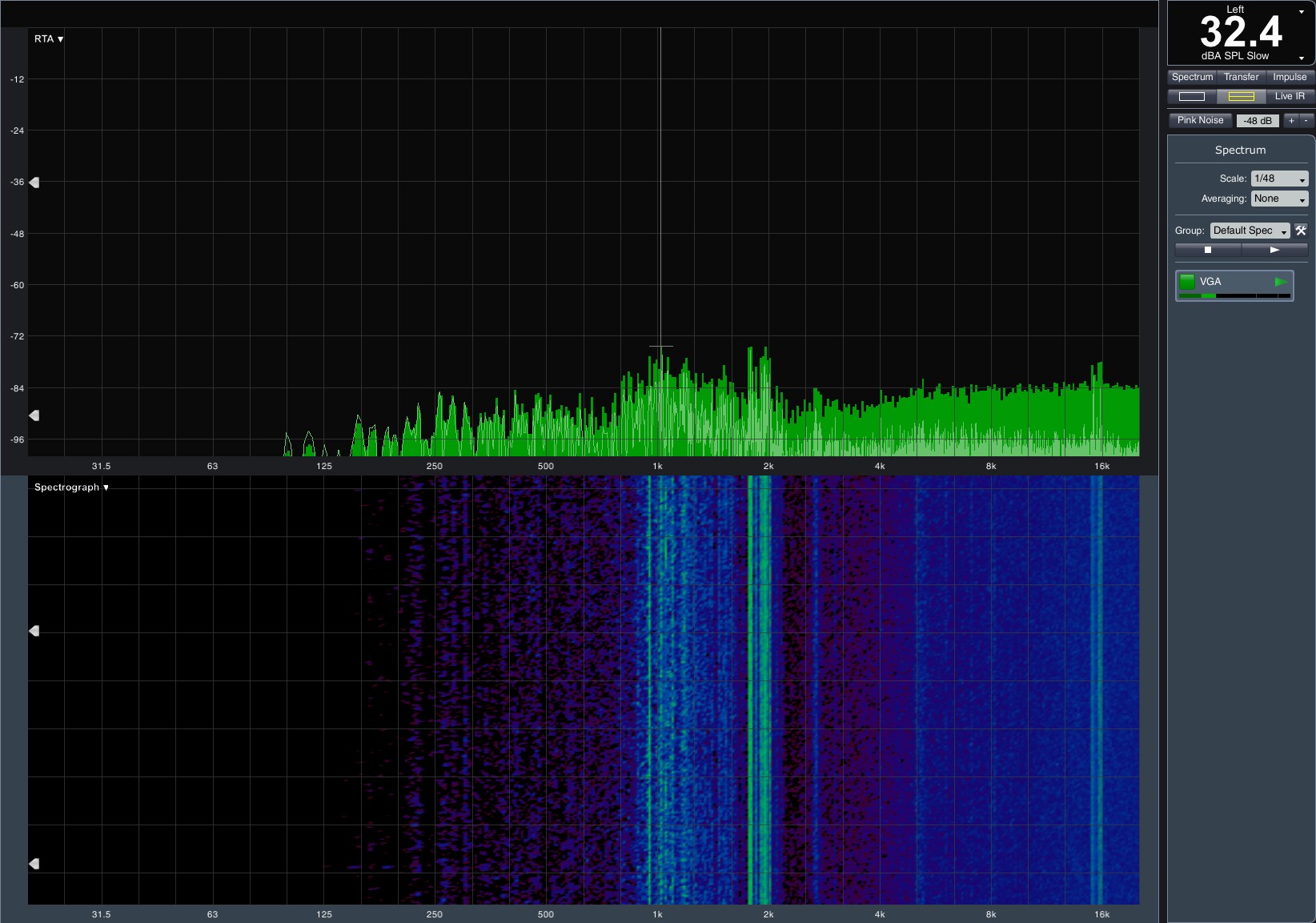

Even under longer gaming loads, noise levels stay below the 42 dB(A) mark. Not bad for a radial fan. However, during our purposely taxing stress test, the fan ramps up beyond 46 dB(A). The frequency spectrum is rather wide though, so the white noise doesn't feel too intrusive.
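Decibels are logarithmic, so the gap between the two readings is larger than it looks. The sketch below converts a level difference into sound power and sound pressure ratios using the standard definitions. Because the review gives the readings as bounds ("below 42" and "beyond 46"), the 4 dB difference used here is only an illustrative minimum.

```python
def sound_power_ratio(delta_db: float) -> float:
    """Ratio of sound power for a given level difference in dB."""
    return 10 ** (delta_db / 10)


def sound_pressure_ratio(delta_db: float) -> float:
    """Ratio of sound pressure for a given level difference in dB."""
    return 10 ** (delta_db / 20)


# Thresholds quoted in the review; actual readings are bounds, not exact values
GAMING_DBA = 42
STRESS_DBA = 46

if __name__ == "__main__":
    delta = STRESS_DBA - GAMING_DBA
    print(f"Level difference: {delta} dB")
    print(f"Sound power ratio: {sound_power_ratio(delta):.2f}x")
    print(f"Sound pressure ratio: {sound_pressure_ratio(delta):.2f}x")
```

A difference of at least 4 dB corresponds to roughly two and a half times the sound power, although perceived loudness does not scale linearly with either ratio.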

Overall, Nvidia's thermal solution is workable. The radial fan is great for exhausting hot air from the card's back, but it's miles away from making the 1080 Founders Edition quiet.


Igor Wallossek wrote a wide variety of hardware articles for Tom's Hardware, with a strong focus on technical analysis and in-depth reviews. His contributions have spanned a broad spectrum of PC components, including GPUs, CPUs, workstations, and PC builds. His insightful articles provide readers with detailed knowledge to make informed decisions in the ever-evolving tech landscape.

ledhead11 Love the article!

I'm really happy with my 2 xtreme's. Last month I cranked our A/C to 64f, closed all vents in the house except the one over my case and set the fans to 100%. I was able to game with the 2-2.1ghz speed all day at 4k. It was interesting to see the GPU usage drop a couple % while fps gained a few @ 4k and able to keep the temps below 60c.

After it was all said and done though, the noise wasn't really worth it. Stock settings are just barely louder than my case fans and I only lose 1-3fps @ 4k over that experience. Temps almost never go above 60c in a room around 70-74f. My mobo has the 3 spacing setup which I believe gives the cards a little more breathing room.

The zotac's were actually my first choice but gigabyte made it so easy on amazon and all the extra stuff was pretty cool.

I ended up recycling one of the sli bridges for my old 970's since my board needed the longer one from nvida. All in all a great value in my opinion.

One bad thing I forgot to mention and its in many customer reviews and videos and a fair amount of images-bent fins on a corner of the card. The foam packaging slightly bends one of the corners on the cards. You see it right when you open the box. Very easily fixed and happened on both of mine. To me, not a big deal, but again worth mentioning.

redgarl The EVGA FTW is a piece of garbage! The video signal is dropping randomly and make my PC crash on Windows 10. Not only that, but my first card blow up after 40 days. I am on my second one and I am getting rid of it as soon as Vega is released. EVGA drop the ball hard time on this card. Their engineering design and quality assurance is as worst as Gigabyte. This card VRAM literally burn overtime. My only hope is waiting a year and RMA the damn thing so I can get another model. The only good thing is the customer support... they take care of you.

Nuckles_56 What I would have liked to have seen was a list of the maximum overclocks each card got for core and memory and the temperatures achieved by each cooler

Hupiscratch It would be good if they get rid of the DVI connector. It blocks a lot of airflow on a card that's already critical on cooling. Almost nobody that's buying this card will use the DVI anyway.

Nuckles_56, quoting Hupiscratch: "It would be good if they get rid of the DVI connector. It blocks a lot of airflow on a card that's already critical on cooling. Almost nobody that's buying this card will use the DVI anyway."

Two things here, most of the cards don't vent air out through the rear bracket anyway due to the direction of the cooling fins on the cards. Plus, there are going to be plenty of people out there who bought the cheap Korean 1440p monitors which only have DVI inputs on them who'll be using these cards

ern88 I have the Gigabyte GTX 1080 G1 and I think it's a really good card. Can't go wrong with buying it.

The best card out of box is eVGA FTW. I am running two of them in SLI under Windows 7, and they run freaking cool. No heat issue whatsoever.

Mike_297 I agree with 'THESILVERSKY'; Why no Asus cards? According to various reviews their Strixx line are some of the quietest cards going!

trinori LOL you didnt include the ASUS STRIX OC ?!?

well you just voided the legitimacy of your own comparison/breakdown post didnt you...

"hey guys, here's a cool comparison of all the best 1080's by price and performance so that you can see which is the best card, except for some reason we didnt include arguably the best performing card available, have fun!"

lol please..