Nvidia GeForce GTX 1080 Graphics Card Roundup

EVGA GeForce GTX 1080 FTW Gaming ACX 3.0

FTW is the shortened version of For The Win, and with that abbreviation EVGA sets the bar pretty high for itself. We do like that the card can be disassembled without voiding its warranty. The terms of EVGA's coverage are thus very favorable to water-cooling and modding enthusiasts. This is downright uncommon, which is why we want to point it out upfront. But we still don't know anything about the 1080 FTW Gaming ACX 3.0's technical attributes...yet, at least.

Technical Specifications

Exterior & Interfaces

The cooler cover is made of light metal. Together with the underlying acrylic plate, the extras tacked on are primarily designed to provide some eye candy. As a result, the whole top of the card looks like it's bathed in color; built-in RGB LEDs light up the shroud through numerous recesses. Right out of the gate, EVGA's FTW is the brightest card in our test field.

Weighing 38oz (1077g), this card isn't particularly heavy. But it's not a lightweight either. Measuring 11 inches (or 27.7cm), it isn't excessively long. It's five inches (or 12.5cm) tall, though, and 1 3/8 inch (3.5cm) wide, matching many dual-slot graphics cards.

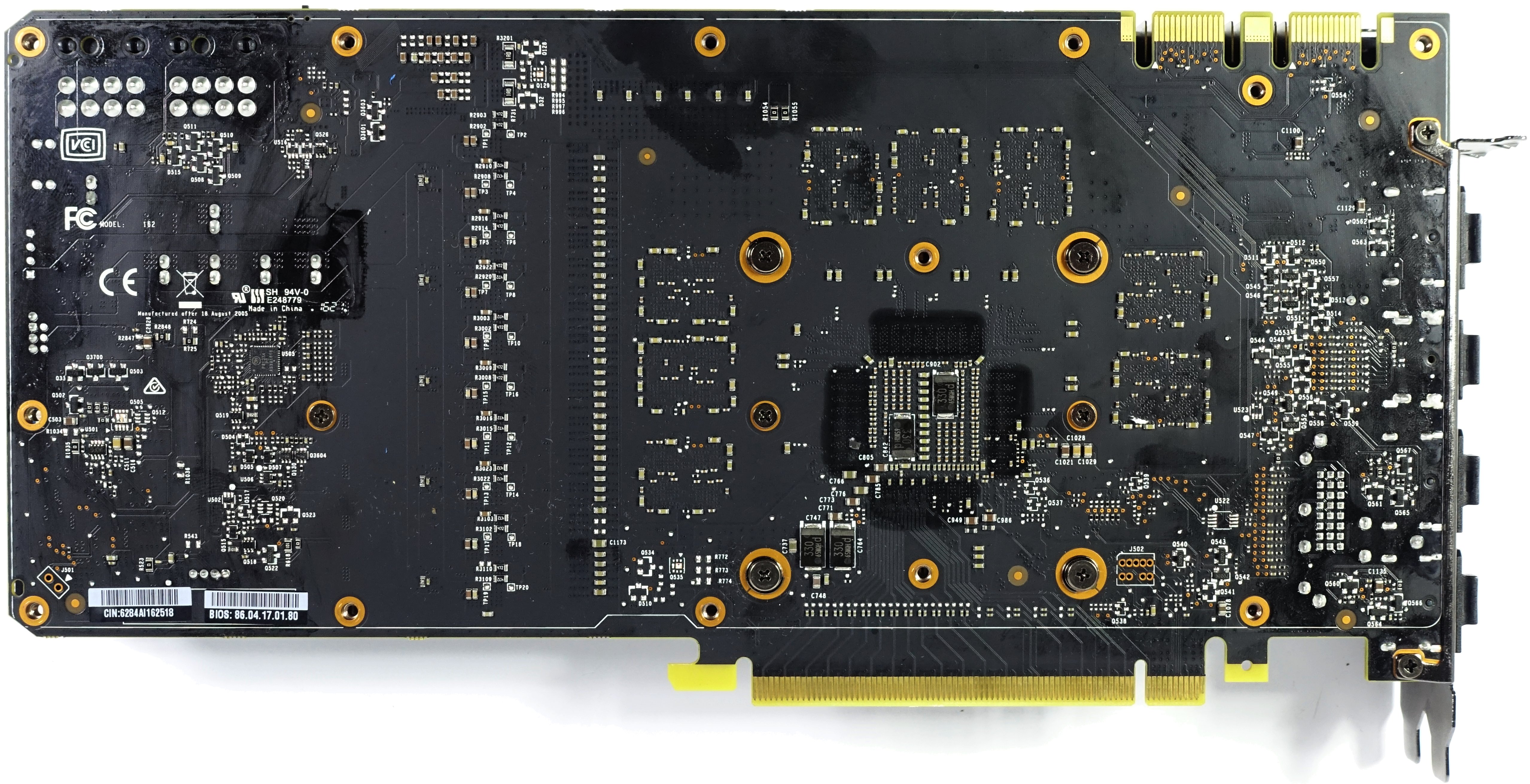

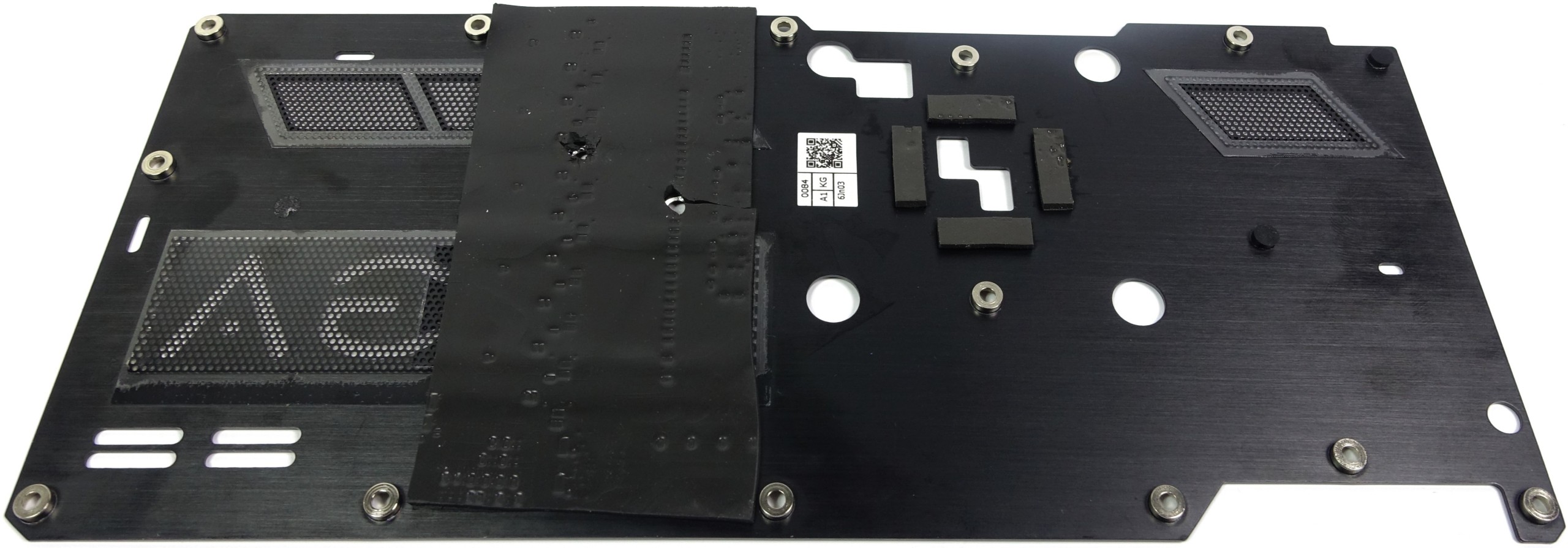

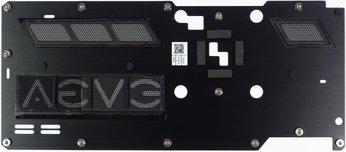

The back of the board is covered by a single-piece plate with several openings for ventilation. You'll have to plan for an additional one-fifth of an inch (5mm) beyond the backplate, which could negatively affect configurations with multiple cards right next to each other. It is perfectly possible to use the card without that cover, of course. However, removing it also necessitates pulling off the cooler.

The top of the card features EVGA's logo. Two eight-pin power connectors are positioned at the end of the card, right where we'd expect to find them. As with most designs that strive to be unique, this one is a matter of personal preference. We're sure it'll find its fans, though. While there are undoubtedly fancier cards available, being fancy isn't always a compliment either.

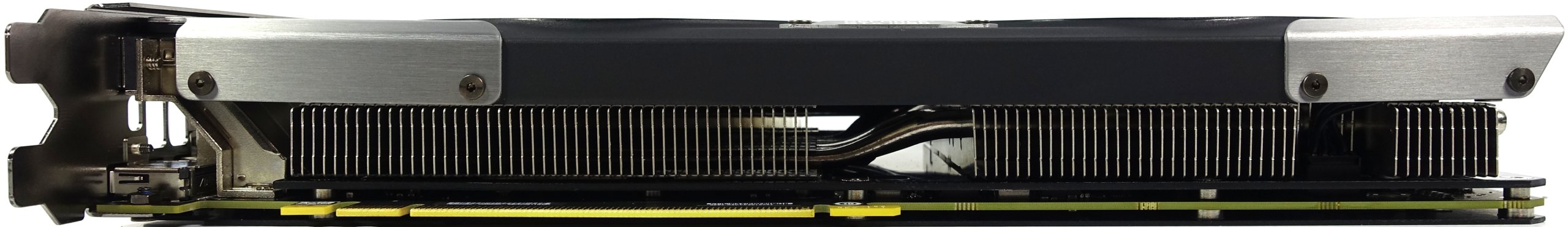

Fins visible from the end and bottom of the 1080 FTW Gaming ACX 3.0 show that they're positioned vertically, and won't allow air to flow out of the back. Instead, exhaust is pushed from the top and down, toward the motherboard.

The rear bracket exposes five connectors, four of which can be used simultaneously in a multi-monitor setup. In addition to one dual link DVI-D connector, you also get one HDMI 2.0b port and three full-sized DisplayPort 1.4 outputs. Ventilation holes dot the rest of the bracket. They don't serve any purpose though, given EVGA's fin orientation.

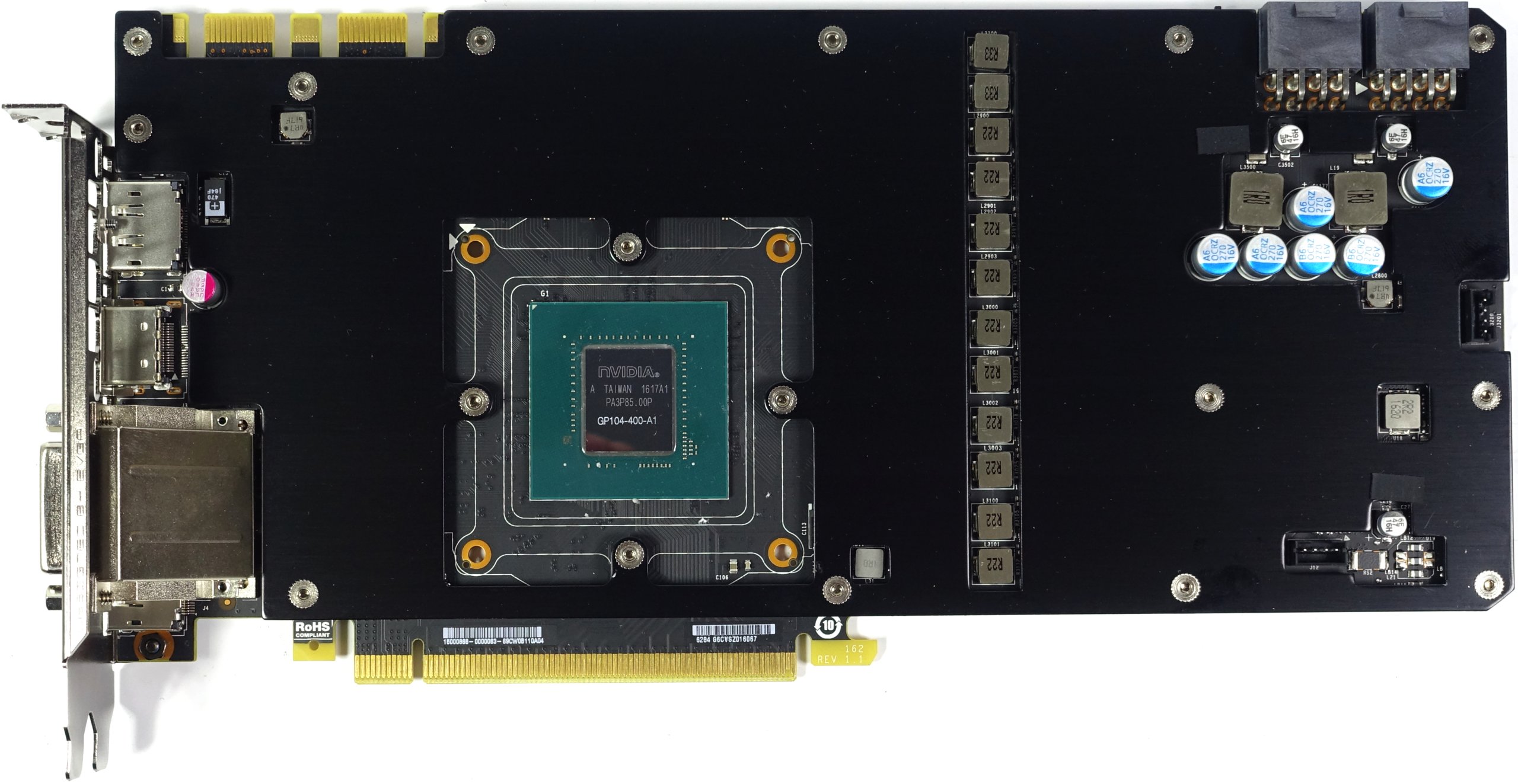

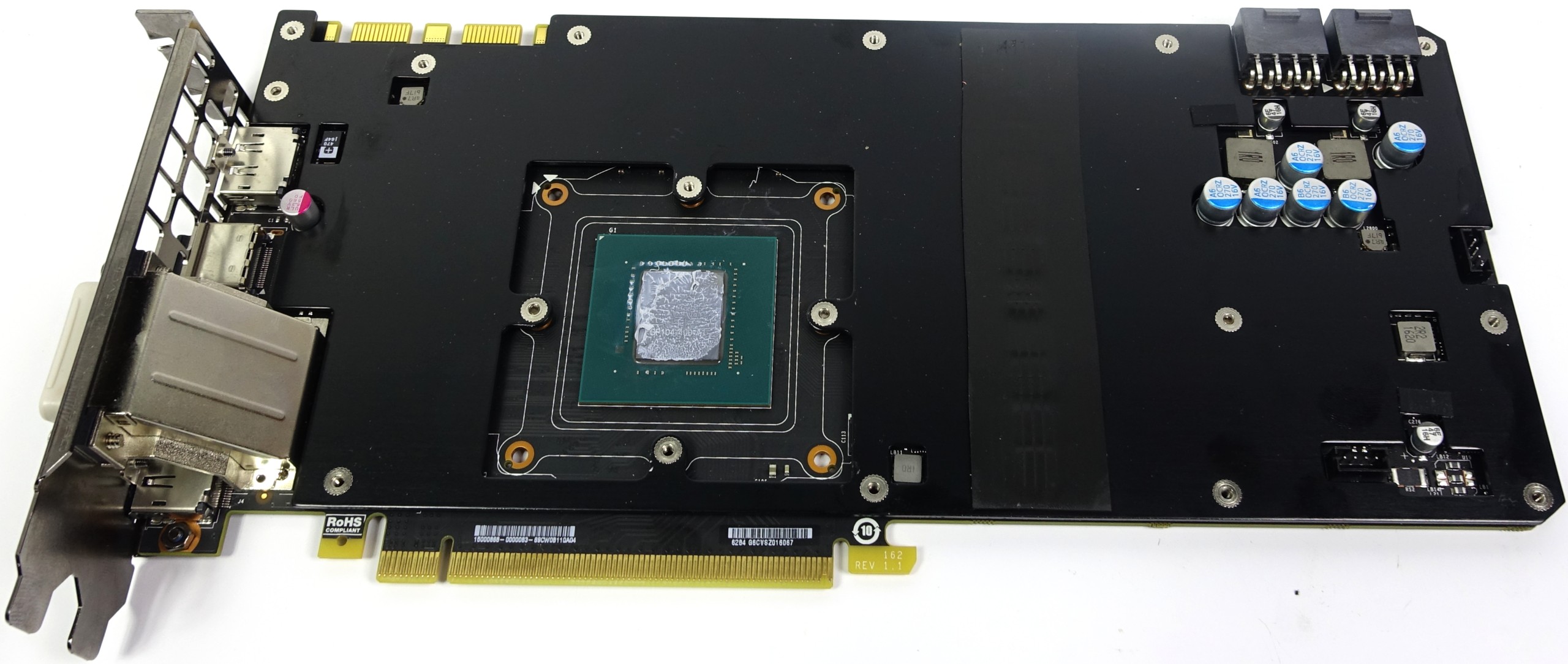

Board & Components

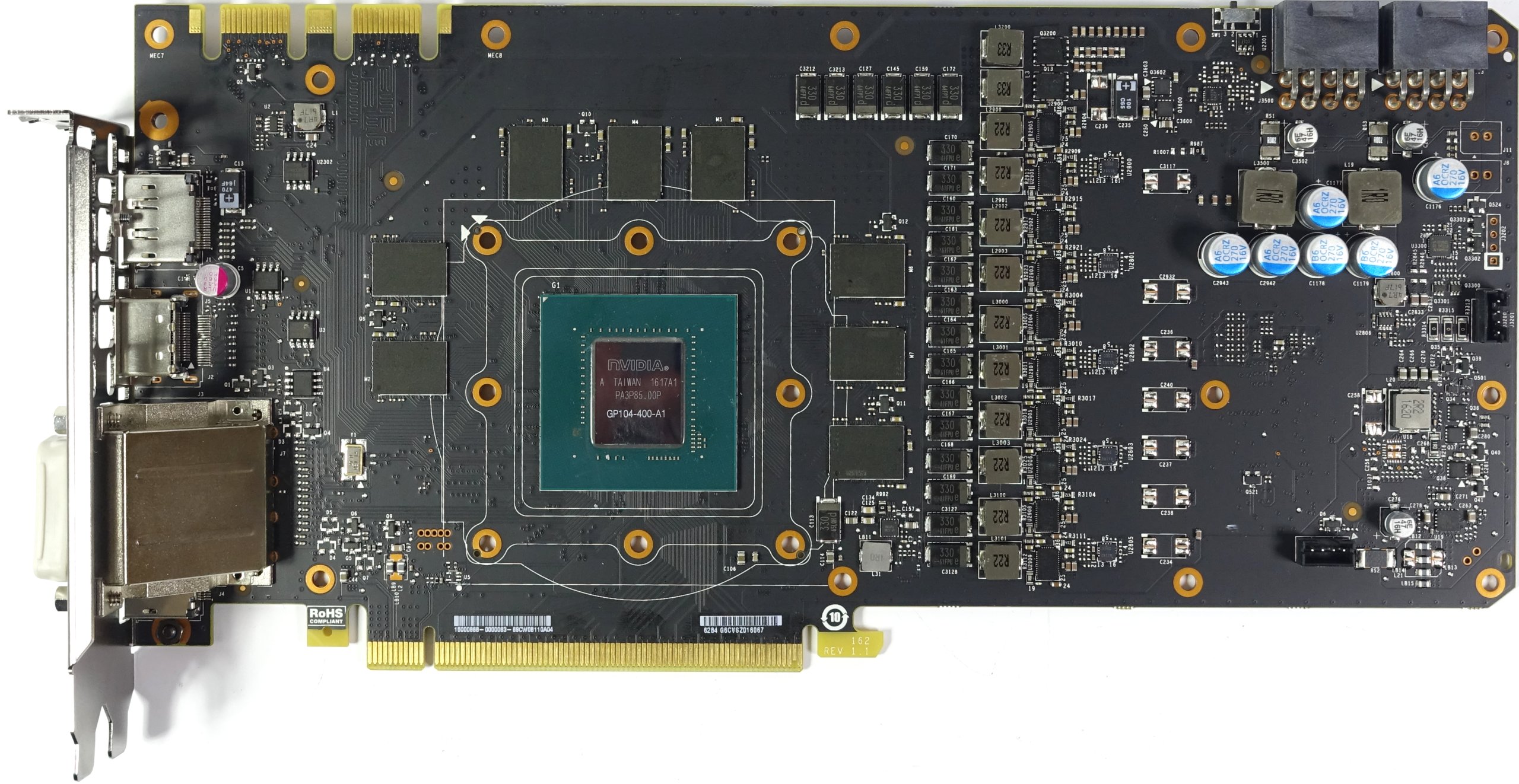

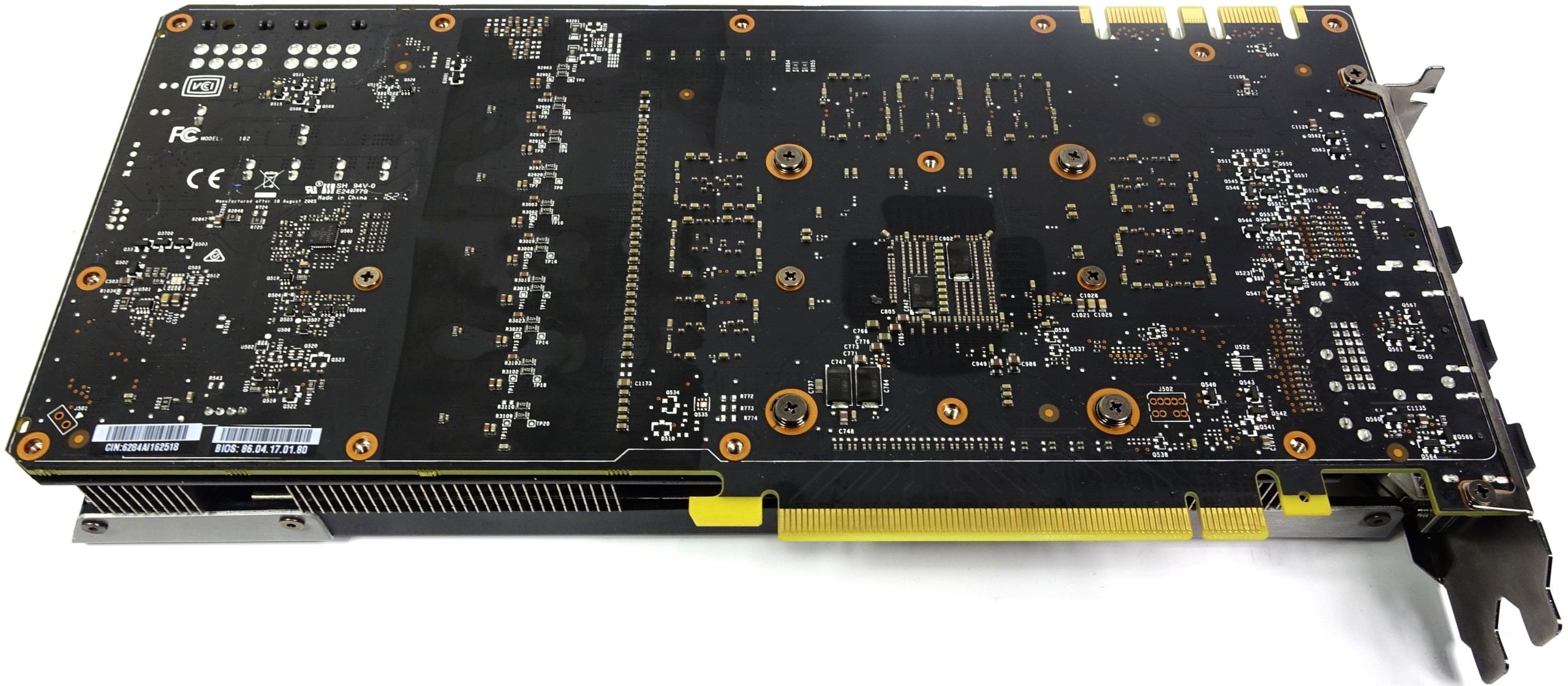

EVGA leverages its own circuit board design. At first glance, the card seems to employ a very clean layout that sparks our curiosity due to its many on-board components.

EVGA naturally uses the Micron GDDR5X memory modules that Nvidia sells along with its GPU. Eight of them operating at 1251 MHz are connected to GP104 through an aggregate 256-bit interface, enabling up to 320 GB/s of bandwidth.
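The 320 GB/s figure follows from the bus width and the per-pin data rate. Here is a minimal sketch of that arithmetic in Python. It assumes the quoted 1251 MHz clock translates into an effective rate eight times higher (about 10 Gb/s per pin); that multiplier is our assumption about how the clock is reported, and the function name is purely illustrative.

```python
def memory_bandwidth_gbs(clock_mhz, bus_width_bits, transfers_per_clock=8):
    """Peak memory bandwidth in GB/s (decimal units).

    transfers_per_clock=8 is an assumption about how the quoted
    GDDR5X clock maps to the effective per-pin data rate.
    """
    data_rate_mbps_per_pin = clock_mhz * transfers_per_clock  # Mb/s per pin
    total_mbps = data_rate_mbps_per_pin * bus_width_bits      # Mb/s across the bus
    return total_mbps / 8 / 1000                              # bits -> bytes, MB -> GB


bandwidth = memory_bandwidth_gbs(1251, 256)
print(f"{bandwidth:.1f} GB/s")
```

Under that assumption, the result lands just above 320 GB/s, which matches the rounded figure quoted above.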

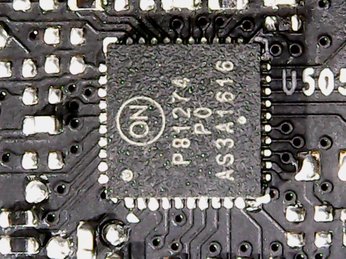

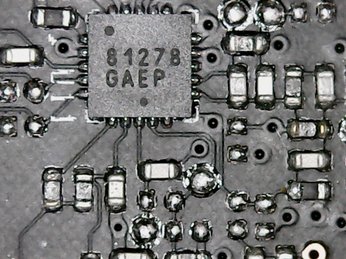

Unlike Nvidia's reference design, EVGA's 5+2-phase system relies on an NCP81274 from ON Semiconductor as its PWM controller. Even if the card is advertised as having 10 power phases, that's technically a little deceiving. In reality, there are five phases, each of which is split into two separate converter circuits. This isn't a new trick by any means. It does help distribute current more evenly and creates a larger cooling area. Furthermore, the shunt connection reduces the circuit's internal resistance. This is achieved with an NCP81162 current balancing phase doubler, which also contains the gate and power drivers.

For voltage regulation, one highly-integrated NCP81382 is used per converter circuit, which combines the high-side and low-side MOSFETs, as well as the Schottky diode, in a single chip. Thanks to the doubling of converter circuits, the coils are significantly smaller. This can be quite an advantage since the current per circuit is smaller as well. As a result, conductors can be reduced in diameter while retaining the same inductance.
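To illustrate why splitting each phase into two converter circuits helps, here is a rough Python sketch. Only the counts (five phases, ten converter circuits) come from the text. The total load current and the per-circuit resistance are hypothetical placeholders, not measured values, and the model ignores switching losses entirely.

```python
def per_circuit(total_current_a, circuits, resistance_ohm):
    """Return (current per circuit, loss per circuit, total conduction loss).

    Simplified model: current is shared equally and each circuit has the
    same series resistance, so loss per circuit is I^2 * R.
    """
    current = total_current_a / circuits
    loss = current ** 2 * resistance_ohm
    return current, loss, loss * circuits


TOTAL_CURRENT_A = 180.0  # hypothetical GPU load current
RESISTANCE_OHM = 0.002   # hypothetical resistance per converter circuit

i5, p5, total5 = per_circuit(TOTAL_CURRENT_A, 5, RESISTANCE_OHM)
i10, p10, total10 = per_circuit(TOTAL_CURRENT_A, 10, RESISTANCE_OHM)

print(f"5 circuits:  {i5:.1f} A each, {p5:.2f} W each, {total5:.2f} W total")
print(f"10 circuits: {i10:.1f} A each, {p10:.2f} W each, {total10:.2f} W total")
```

In this idealized model, doubling the number of circuits halves the current through each one, cuts the conduction loss per circuit to a quarter, and halves the total. It also spreads the remaining heat over twice as many components.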

Yet, compared to the KFA²/Galax GeForce GTX 1080 HoF, which uses this effect for a total of 15 converter circuits, EVGA achieves rather modest results as far as cooling is concerned. As we get into the 1080 FTW's benchmark results, we'll present some data backing that claim.

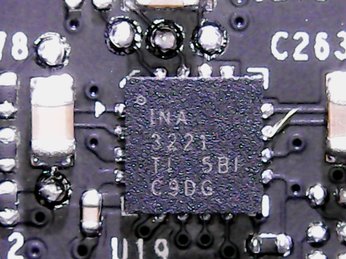

Current monitoring is enabled through a three-channel INA3221.

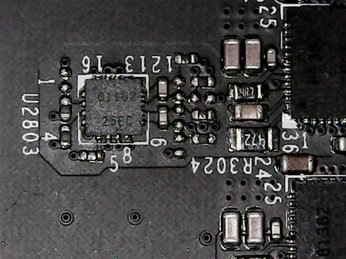

The memory modules are powered by two separate phases controlled with an NCP81278, which integrates the gate driver and PWM VID interface. An NTMFD4C85N by ON Semiconductor combines both high-side and low-side MOSFETs in one chip.

Two familiar capacitors are installed right below the GPU to absorb and equalize voltage peaks.

Power Results

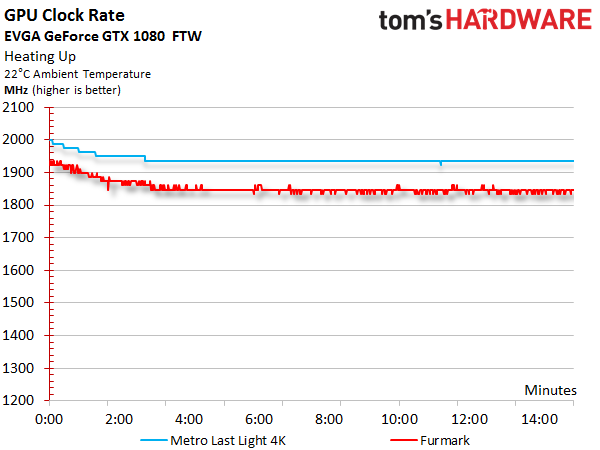

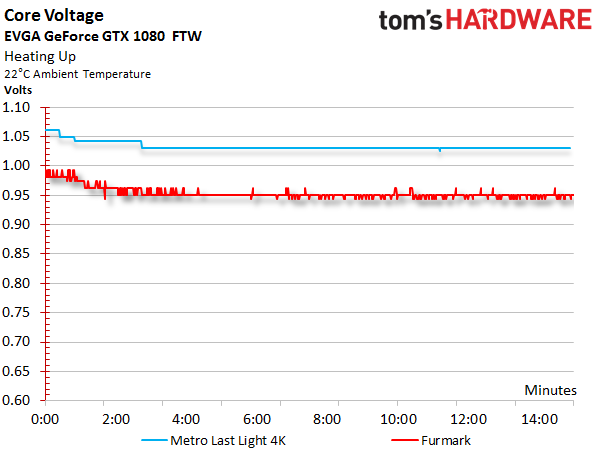

Before we look at power consumption, we should talk about the correlation between GPU Boost frequency and core voltage, which are so similar that we decided to put their graphs one on top of the other. EVGA uses a very high power target that, in turn, allows for a relatively constant GPU Boost clock rate. It only drops slightly as temperature increases, and the observed voltage behaves similarly.

After warming up in our gaming workload, the GPU Boost frequency, which initially started at 2 GHz, settles at a stable value of 1936 MHz. This falls to 1848 MHz under constant load.

Our voltage measurements look similar: while we observe up to 1.062V in the beginning, that number dips to an average 1.031V.

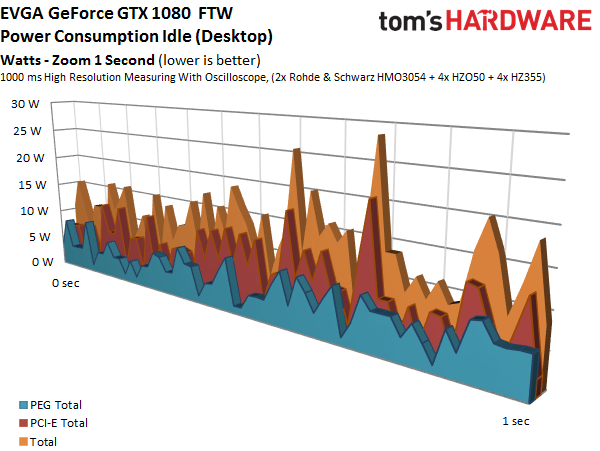

Combining the measured voltages and currents allows us to derive a total power consumption we can easily confirm with our instrumentation by taking readings at the card's power connectors.
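The derivation itself is simple: multiply voltage by current for each supply rail and add up the results. The Python sketch below shows the idea. The rail names and sample readings are hypothetical and chosen only for illustration; they are not our measured data.

```python
from dataclasses import dataclass


@dataclass
class RailSample:
    """One simultaneous voltage/current reading on a supply rail."""
    name: str
    voltage_v: float
    current_a: float

    @property
    def power_w(self) -> float:
        return self.voltage_v * self.current_a


def total_power_w(samples):
    """Sum of per-rail power (P = V * I) in watts."""
    return sum(s.power_w for s in samples)


# Hypothetical readings, for illustration only.
samples = [
    RailSample("PCIe slot 12V", 12.0, 3.0),
    RailSample("PCIe slot 3.3V", 3.3, 0.5),
    RailSample("8-pin connector 1", 12.0, 7.0),
    RailSample("8-pin connector 2", 12.0, 7.0),
]

total = total_power_w(samples)
for s in samples:
    print(f"{s.name}: {s.power_w:.2f} W")
print(f"Total: {total:.2f} W")
```

An average over a test run would repeat this for every sample in the log and average the totals.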

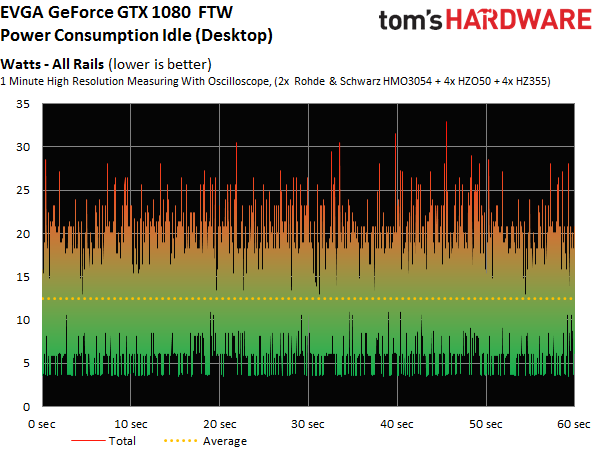

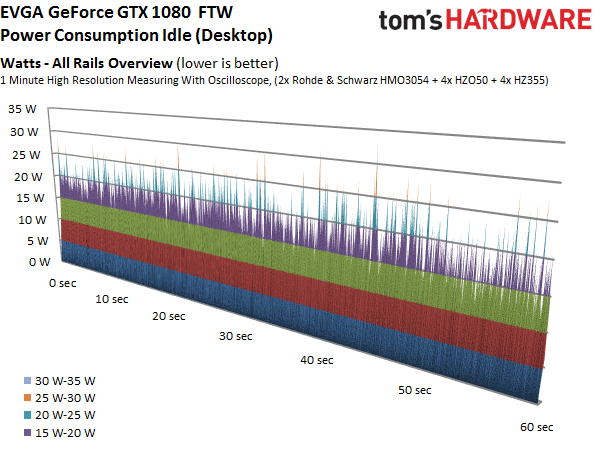

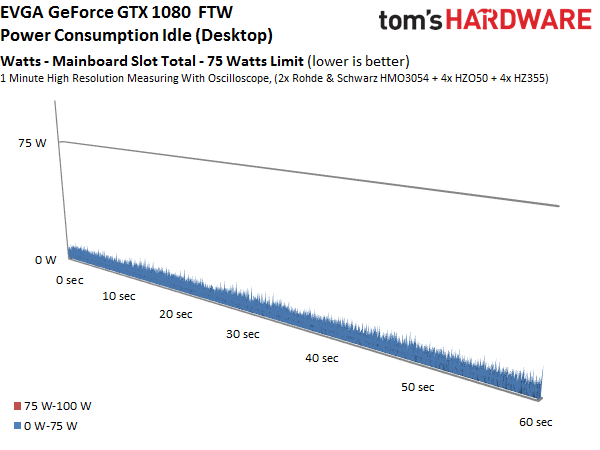

Since Nvidia forces its partners to sacrifice the lowest possible clock rate in order to gain an extra GPU Boost bin, this card's power consumption is disproportionately high as it idles at 253 MHz. EVGA handles this setback fairly well, though. The consequences are listed below:

| Power Consumption | |

|---|---|

| Idle | 12W |

| Idle Multi-Monitor | 15W |

| Blu-ray | 14W |

| Browser Games | 115-135W |

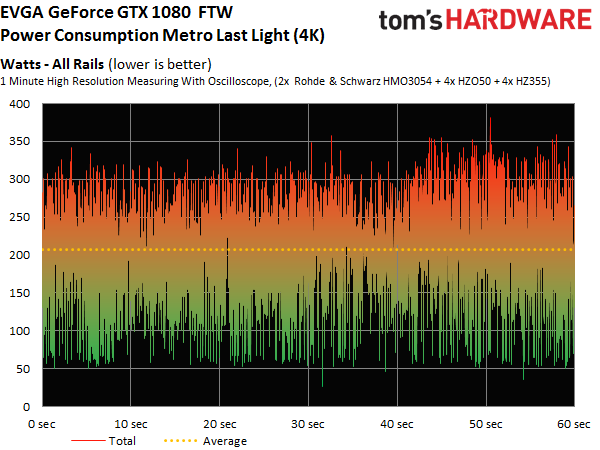

| Gaming (Metro Last Light at 4K) | 207W |

| Torture (FurMark) | 232W |

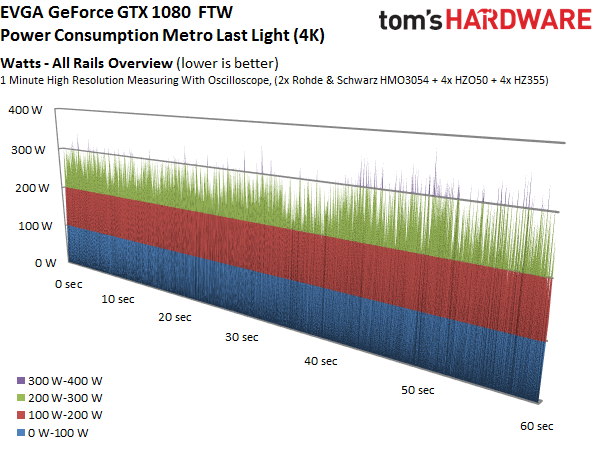

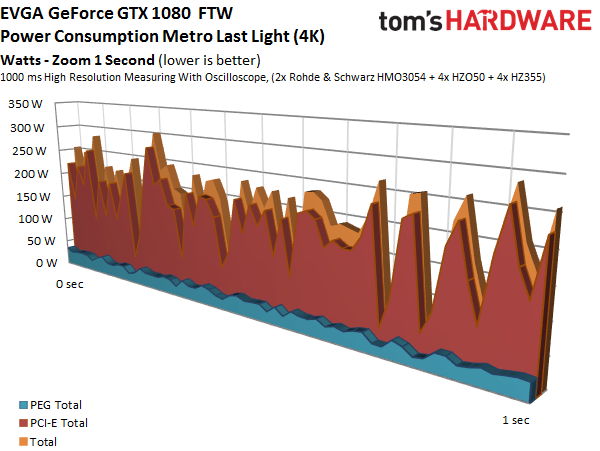

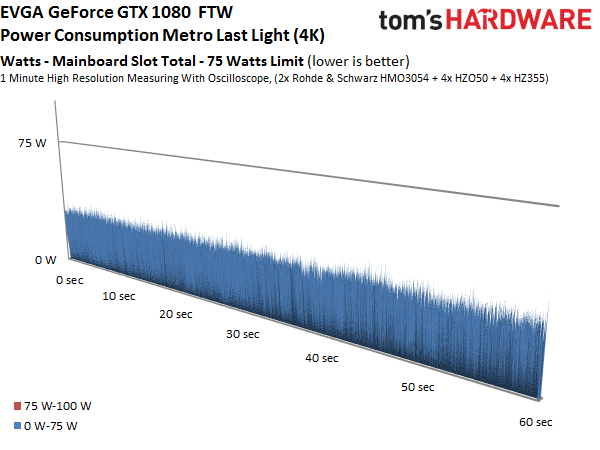

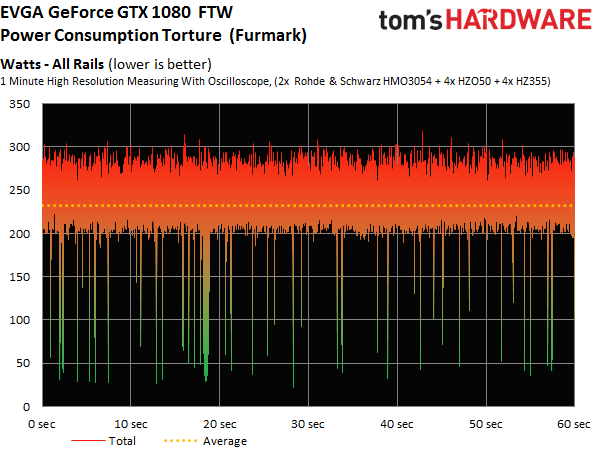

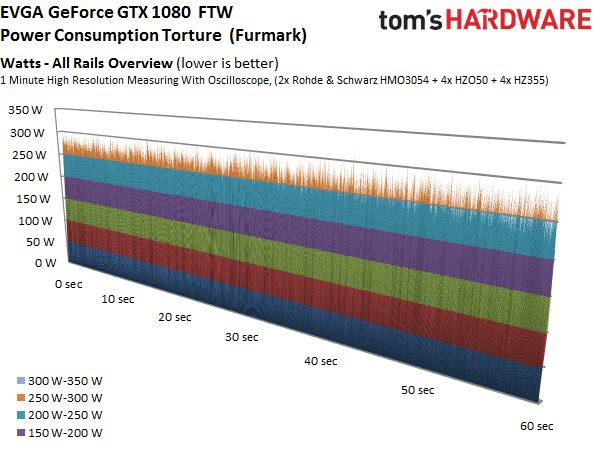

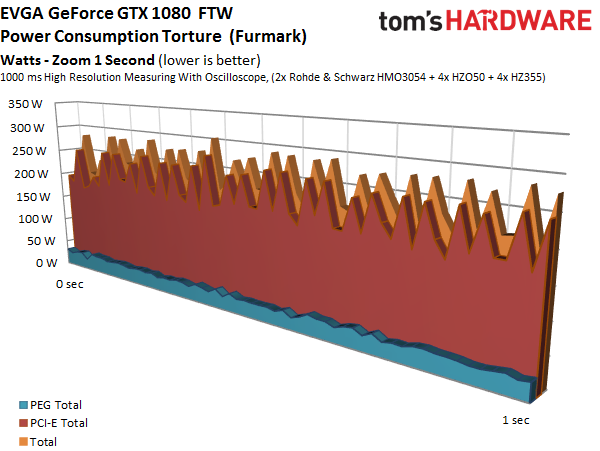

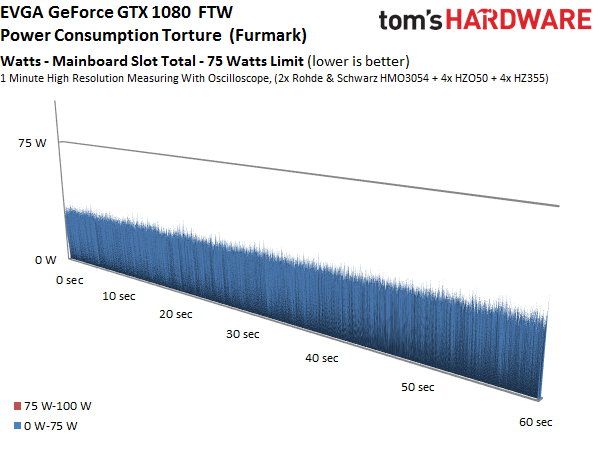

These charts go into more detail on power consumption at idle, during 4K gaming, and under the effects of our stress test. The graphs show how load is distributed between each voltage and supply rail, providing a bird's eye view of load variations and peaks.

Temperature Results

Update, 11/26/16: In the original version of this round-up, we exposed an issue with EVGA's ACX 3.0 thermal solution. The company used our feedback to improve its design, in the process giving customers a couple of options for modifying their existing cards. What follows is a review of those options and their impact on cooling/noise. We're replacing the previous data with results from our updated testing, since that most accurately reflects production hardware.

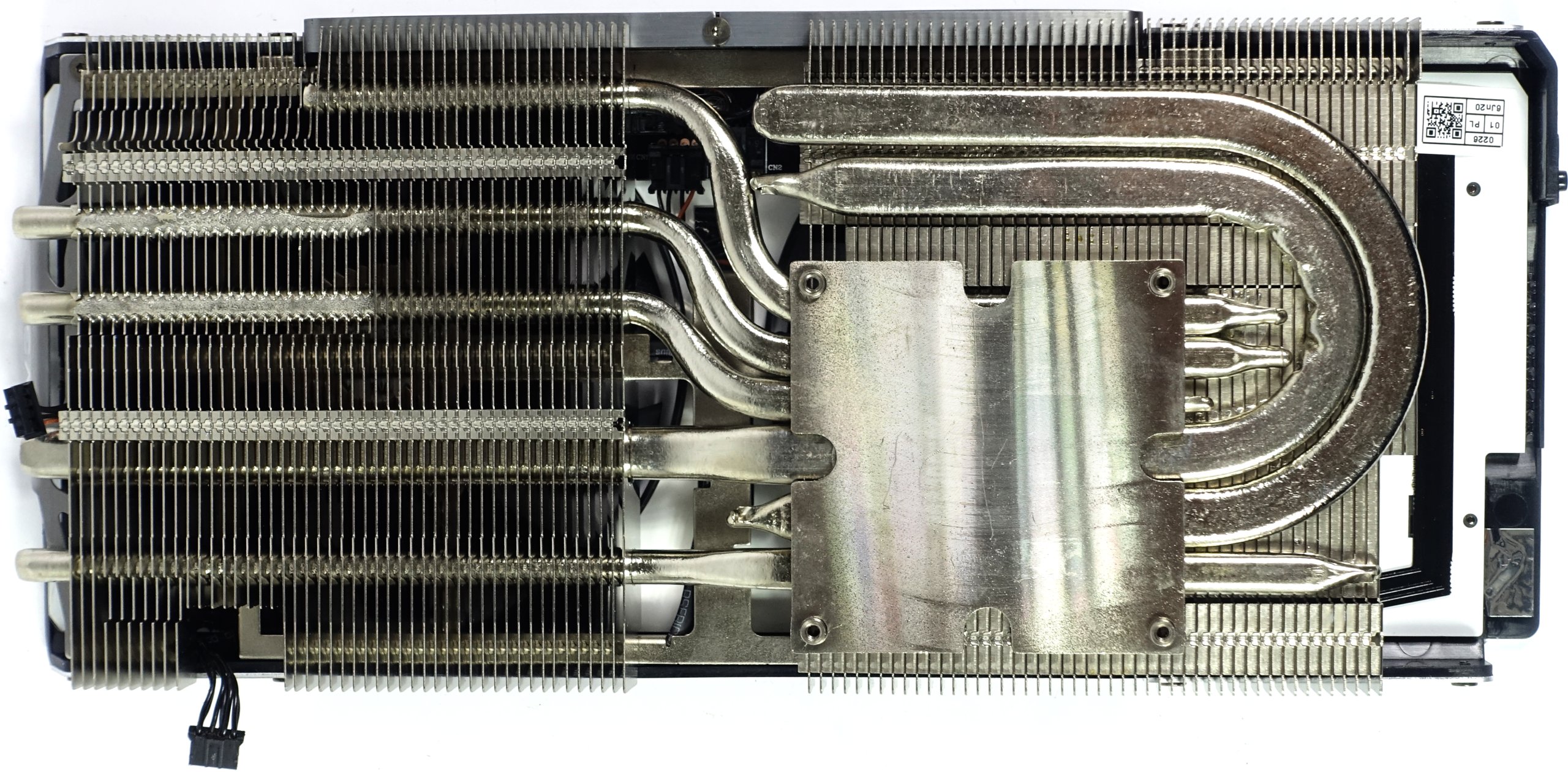

Naturally, heat output is directly related to power consumption, and the 1080 FTW's ability to dissipate that thermal energy can only be understood by looking at its cooling solution.

As with the 1080 Founders Edition, the backplate is mostly aesthetic; it doesn't serve much practical purpose. At best, it helps with the card's structural stability.

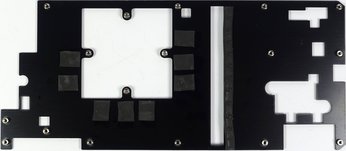

Unfortunately, the way the voltage regulation circuitry was originally cooled exposed a design flaw. This problem was evident in the mounting plate, which does draw heat away from the VRMs and memory. The plate has a cut-out for the coils, meant to keep the VRMs and memory from heating each other up. Unfortunately, it also reduced the cooling surface for an area right below the center of the fan rotor, where airflow is weak to begin with.

Under normal circumstances, the company's decision might have still turned out alright. But the GeForce GTX 1080 FTW Gaming ACX 3.0 has an exorbitantly high power target, which chases the card up to and beyond 230W under load. This puts a lot of stress on the VRMs. Given those conditions, the limited surface area just couldn't keep up.

The cooler uses a nickel-plated heat sink, along with four quarter-inch (6mm) and two one-third-inch (8mm) flattened heat pipes. Its actual capacity is adequate. But a look at the GPU temperatures shows that the upper limit of this short, dual-slot solution is in sight. We originally measured up to 167°F (75°C) during our gaming loop and up to 172°F (78°C) in a closed case. Under maximum load, the reading rose to 171°F (77°C), and 176 to 178°F (80 to 81°C) in a closed case. That was just too high, especially when you consider the data was collected in an air-conditioned room set to 72°F (22°C).

Re-Testing After EVGA's Thermal Modification

Clearly, something needed to be done. Back in November, we published EVGA Addresses GeForce GTX 1080 FTW PWM Temperature Problems, linking to new BIOS versions for five different cards. EVGA also offers optional thermal pads to any affected customer who requests them. Customers who do not feel comfortable updating the BIOS or who damage their card installing the thermal pad will receive EVGA’s full support, the company says.

For our part, we committed to a re-test once EVGA implemented its planned changes, and that’s what you see going live alongside our GeForce GTX 1080 round-up.

In order to address some of the challenges originally posed by EVGA’s card, we drilled two holes into the backplate, right above the two hottest points identified in our previous tests, and cut a circular part off the thermal pads to give our infrared camera a clearer view of the board. Since we are using the same card as before, the results are directly comparable.

Meanwhile, several images have surfaced in forums allegedly showing that the thermal pads used in manufacturing don't completely fill the gap between the front plate and memory modules. Working with enthusiasts online, we analyzed several EVGA cards and couldn’t find evidence of this gap issue, as the picture below shows:

In every case, the pads were placed correctly, even if they weren’t a tight fit. Furthermore, the pictures on the Internet all show the pads sticking to the front cover, and the gap is between the memory module and thermal pad. To better understand what this means, it’s necessary to know a bit about how graphics cards are manufactured.

Thermal pads are almost exclusively glued onto the memory modules first. The front plate is attached later, together with the backplate. Thus, if a thermal pad is stuck to a plate now, at the very least it had to have firmer contact at some earlier point in time.

Our observation is that if the backplate is removed, and then the additional screws holding the front panel are removed as well, that plate is bent in such a way that it leaves a slightly angled gap, which looks very similar to these images. This is also the case if the backplate has been reattached, but the additional screws holding the front panel have not been screwed back in during reassembly.

There could, however, be gaps in the case of a GeForce GTX 1070 with this same type of cooler if the GDDR5 memory modules are a different height than the GDDR5X modules on 1080 cards. And since the thermal pads on the memory modules are kept as thin as possible, that could lead to the reported behavior.

Soon it won’t matter, though. As of mid-November, EVGA will make its thermal pads 0.2 mm thicker, just to be 100% sure there aren’t any issues. This change applies to the pads included in the thermal mod we requested, and those in mass production as well. Furthermore, the newest retail cards will come with the new BIOS versions already installed.

Because we are curious to see how much the new pads affect EVGA’s cards, we’ll test them in three steps. First, we’ll test the pad between the back of the board and the backplate. Second, we’ll test the previously installed pad and another pad between the front plate and heat sink’s cooling fins. Third, we’ll combine all of the aforementioned modifications together with the new BIOS.

Doing so should tell us if it’s really necessary to flash EVGA’s firmware and accept the disadvantage of higher noise levels. If the thermal pads themselves do the trick, perhaps it’s possible to ignore the software side altogether.

EVGA supplies a small plastic bag containing one large thermal pad (for the back) and a smaller one (for the front). They’re complemented by a supply of original EVGA thermal paste, which we’ll use later when it comes time to prepare the heat sink for modifications to the front plate. The backplate, however, is very easy to remove.

According to EVGA, you should install the larger of the two thermal pads as shown below. However, this positioning means that, in some places, the thermal pad will have direct contact with a protective foil (rather than the backplate itself). EVGA uses this foil to seal most of the holes in the backplate. We decided to leave the backplate in its original condition, but recommend removing these thermally unfavorable coverings to create more contact surface and improve airflow.

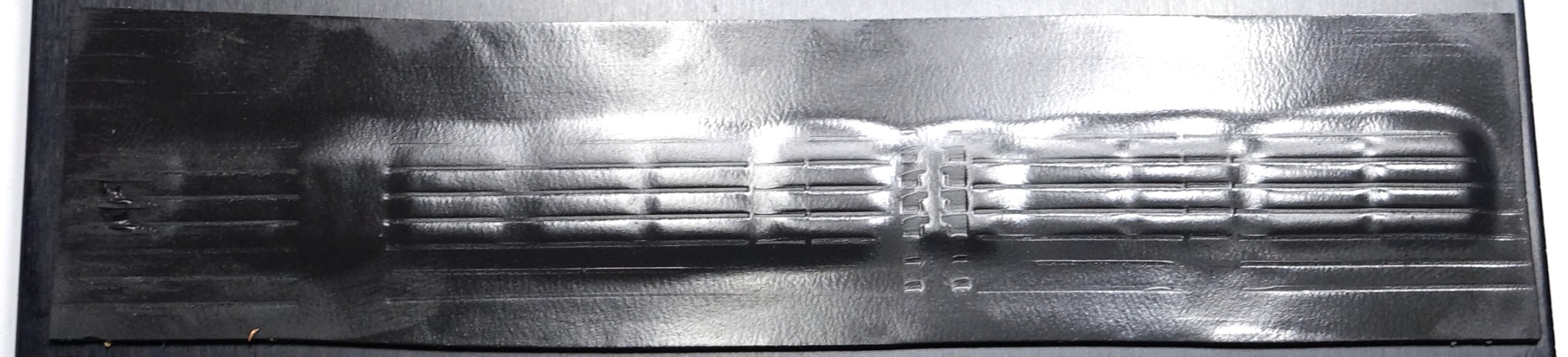

The darker area on the PCB shows where the thermal pad was positioned during installation. Since the visible area is quite large, it can be assumed that the adhesive thermal pads create good contact. This also speaks to their quality.

The voltage regulators are completely covered, and the RAM is covered at least in part. This result could be optimized further if the thermal pad was positioned about four-fifths of an inch (two centimeters) further to the right, as seen from the back of the PCB. In that case, two rectangular cut-outs need to be made in the top and bottom corners on the right-hand side so that the thermal pad doesn't cover two holes needed for screws.

Applying the thermal pads to the front panel is a bit trickier, since EVGA explicitly recommends covering the long-hole cut-out for the coils, creating a closed surface. Furthermore, this closed surface must also be tall enough to provide sufficient contact with the cooling fins of the main heat sink.

The image taken after re-disassembly shows visible stripe-shaped impressions where this contact happens. We made sure to include the area around the coils, which was one of the hottest parts of the board during our previous measurements. This should significantly reduce the heat propagation inside the board, especially towards memory and GPU.

Measurements During The Gaming Loop

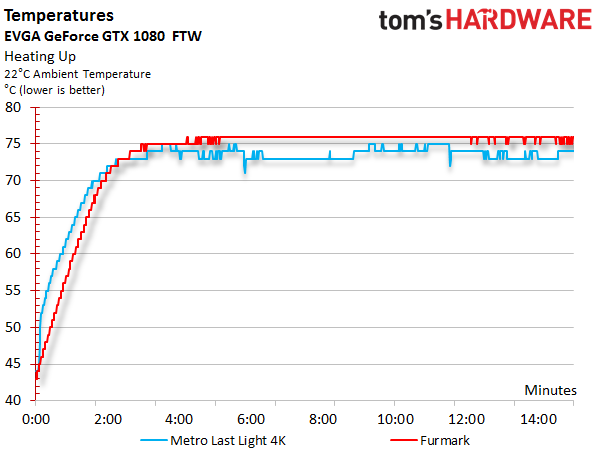

Our metrics are demanding, so the card’s power consumption increases to values that very few games actually reach. It’s reasonably safe to assume that the temperatures we measure are indeed representative of a worst-case scenario.

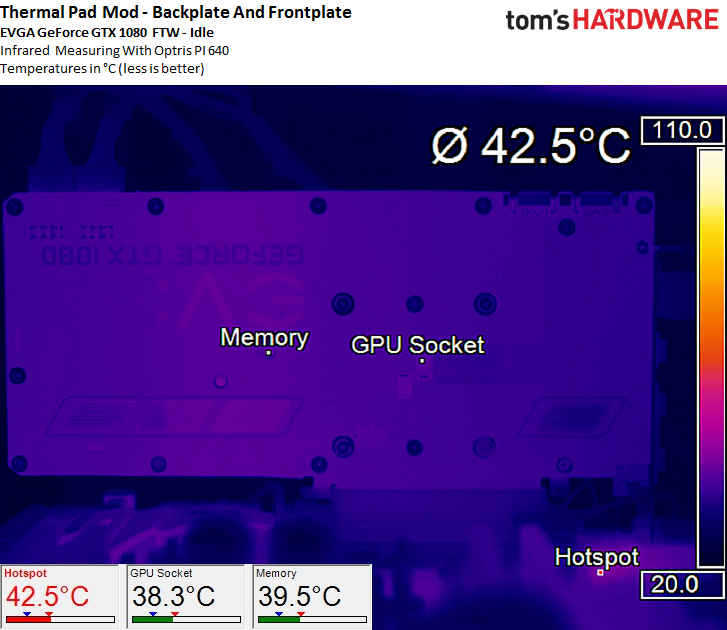

When the card is idle, the memory and GPU both remain below 104°F (40°C).

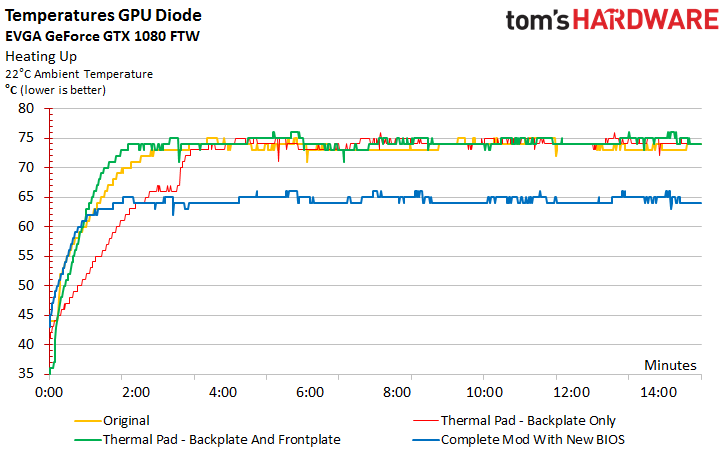

The first measurements we’re presenting come from the GPU diode after all three stages of the thermal modification. We’ll compare those readings to our results prior to EVGA’s fix.

The temperature measurements without flashing the BIOS look very similar, which of course is due to the old fan curve. This curve changes significantly in the modified BIOS.

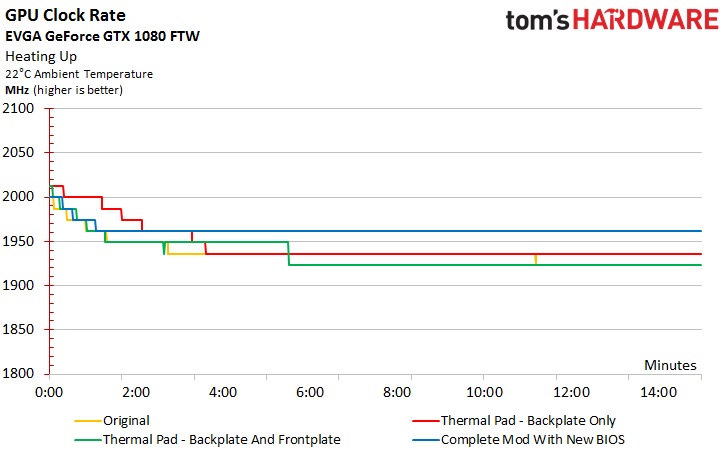

Since those temperatures also have a direct influence on GPU Boost clock rates, we add frequency results to the chart below:

With the original fan curve, GPU Boost frequencies follow the temperatures. Physically modifying the card with thermal pads doesn’t significantly change the GPU temperature or GPU Boost values. Only the new BIOS, with its correspondingly higher fan speed and acoustic output, cools the GPU noticeably, enabling more aggressive frequencies.

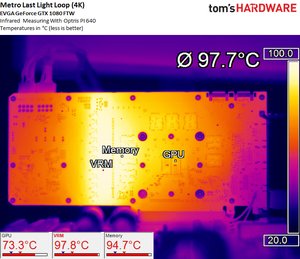

This wasn’t the focus of our original results, though. The focus there was excessive temperatures measured on completely different parts of the board. To follow up, we need our IR camera.

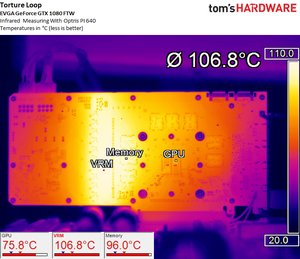

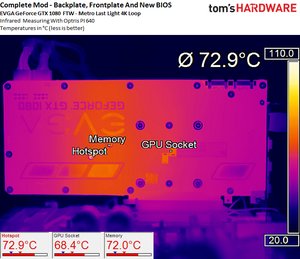

Original Measurements Without the Modification

To recap, our original round-up results showed the memory modules at their officially specified thermal limits, even during our Metro: Last Light gaming loop.

We measured the card in its original state a second time, with the backplate attached, just to be really sure. The noise levels remained more or less the same.

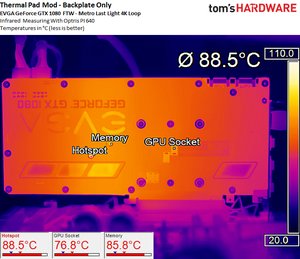

Measurements After Installing the Thermal Pad on the Back

First, we want to see how much the thermal pad between the circuit board and backplate improves cooling on its own. This may serve as an example in the future, predicting what another poorly-cooled card might gain from similar modifications. The upside here is that we don’t need to remove the cooler; we can perform the mod without handling thermal paste or worrying about parts we took off.

As we can see, the memory modules and MOSFETs are about nine degrees Kelvin cooler! However, the memory temperatures are still too high during our stress test. We also note that the GPU package (not the GPU itself) is hotter due to a significantly warmer backplate. Now we know why the temperature of the GPU diode was a little higher with the thermal pad in place compared to the original readings without the pad.

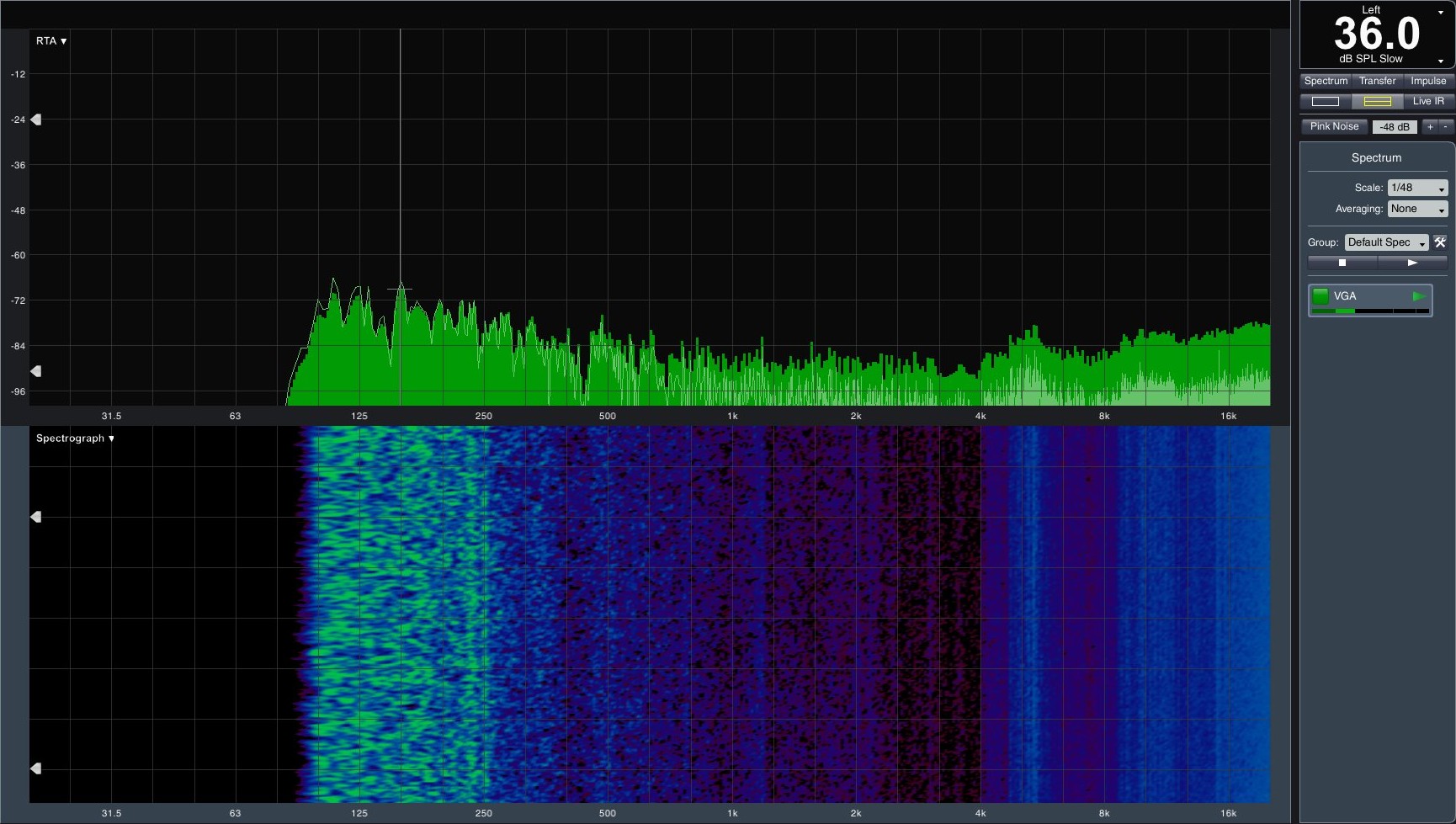

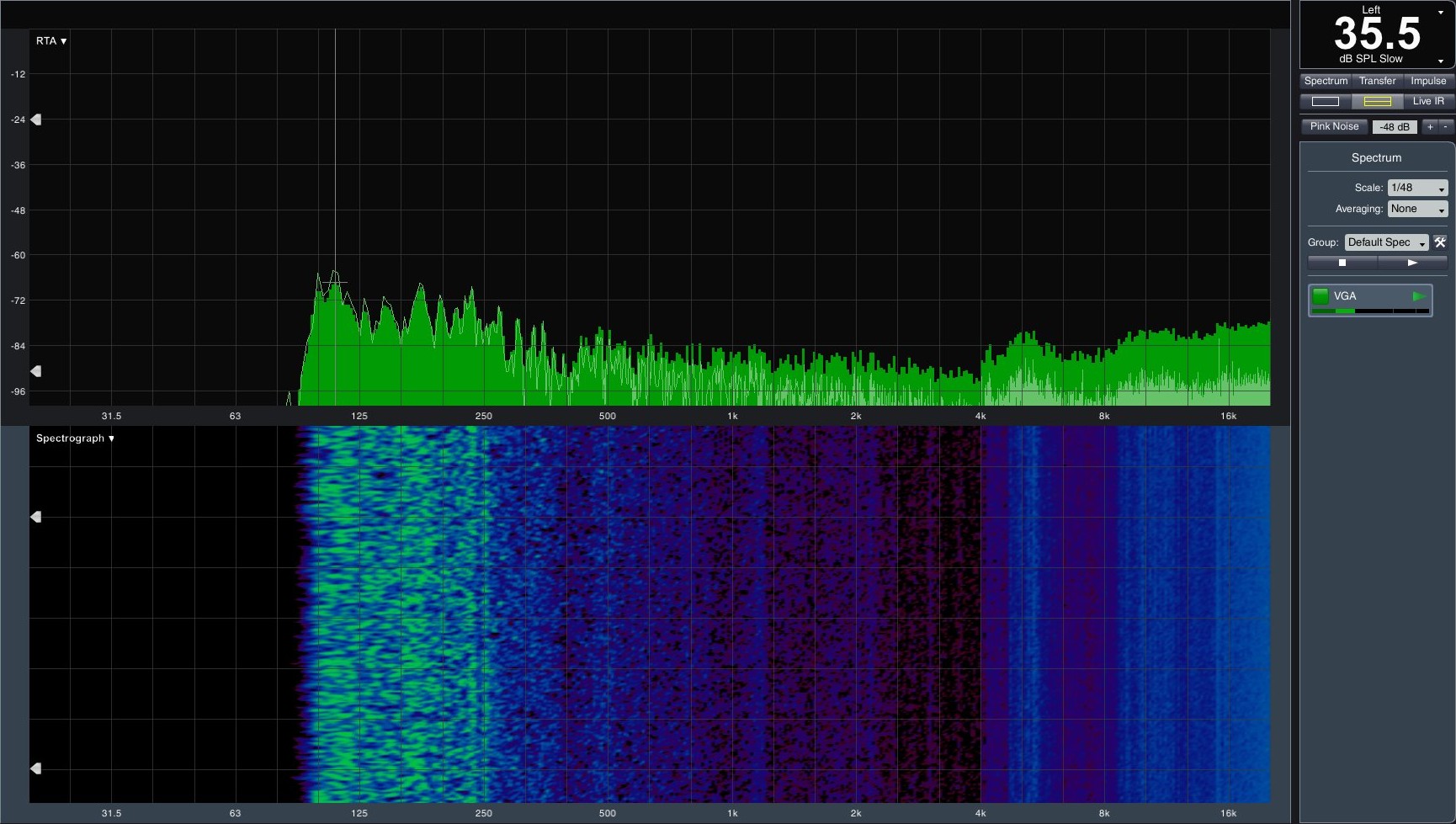

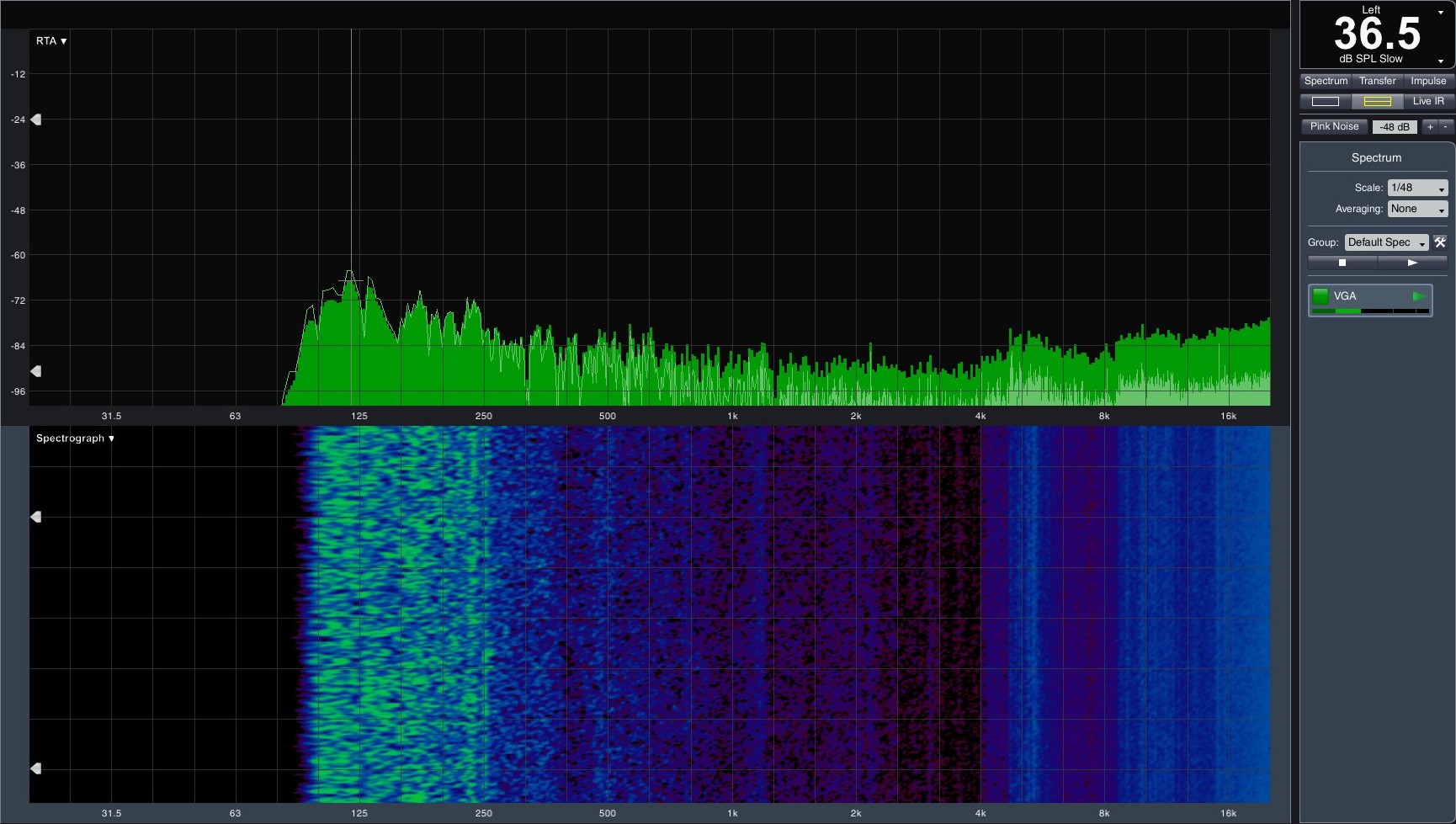

It's also noteworthy that the card is a little bit quieter, which could be due to the lower overall GPU diode temperatures, even if the peaks are a little higher at times. On the other hand, 0.5 dB(A) doesn’t make an audible difference:

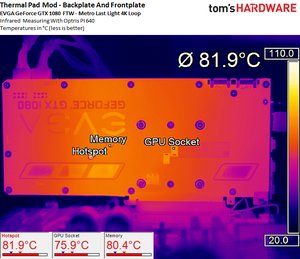

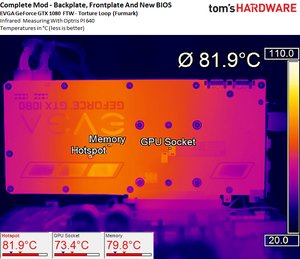

Measurements with Thermal Pads on Front and Back

We are going to throw in another thermal pad and connect the upper side of the front plate to the large heat sink using thermal paste. This solution clearly improves heat dissipation, especially for the coils and area around the VRMs. A closer look at the measurements shows that the VRMs are already 16 degrees Kelvin cooler than the first test without thermal pads. That’s incredibly significant.

The memory enjoys plenty of relief as well: almost 15 degrees Kelvin in the gaming loop and at least seven degrees during the stress test. These modifications improve the card's cooling enough to allow some reserves for warmer days. With them, EVGA has no reason to shy away from the competition.
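Since absolute temperatures elsewhere in this review are given in both Fahrenheit and Celsius, it may help to translate the improvements as well. A temperature difference in Kelvin equals the same difference in degrees Celsius, and converting a difference to Fahrenheit only needs the 9/5 scale factor, without the 32-degree offset. A small Python sketch, with a helper name of our own choosing:

```python
def delta_k_to_delta_f(delta_k):
    """Convert a temperature difference from Kelvin (or °C) to °F.

    Differences scale by 9/5; the 32-degree offset applies only to
    absolute temperatures, not to differences.
    """
    return delta_k * 9 / 5


improvements_k = {
    "Memory/MOSFETs, back pad only": 9,
    "VRMs, both pads": 16,
    "Memory, both pads (gaming loop)": 15,
    "Memory, both pads (stress test)": 7,
}

for label, dk in improvements_k.items():
    print(f"{label}: {dk} K = {delta_k_to_delta_f(dk):.1f} °F")
```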

With both pads in place, the thermal mod also leaves us with noise levels similar to those of the original configuration.

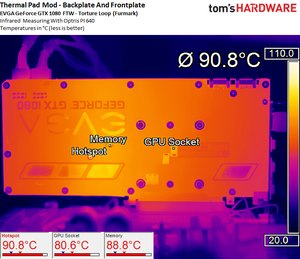

Measurements of all Modifications, Including the BIOS

Even if it doesn't seem necessary at this point, we still have one more option for improving thermal performance. As such, we flashed the BIOS on our card to EVGA’s latest, available on the company’s website.

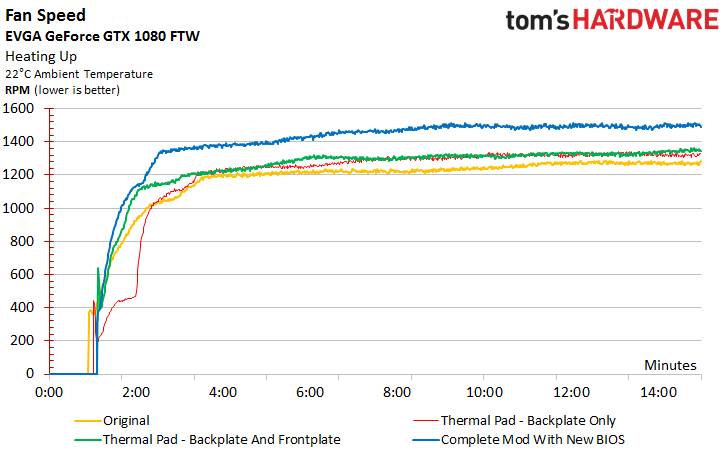

The fans spring into action and jump to high RPM, yielding impressive results. The GPU Boost frequency increases, but so does the noise level. Originally, the fan spun at 1300 RPM; now it’s over 1500 RPM. That ~200 RPM jump exacts a notable price that we’ll quantify shortly.

A look at the temperatures makes us wonder why the original BIOS didn’t have the fan spinning 100 RPM faster already. We think the new firmware’s fan curve is a little too aggressive. Even half of the increase would have sufficed.

An incredible 25-degree Kelvin drop on the VRMs in our gaming loop and stress test is a testament to EVGA’s effort. But was it really necessary to go to such extremes?

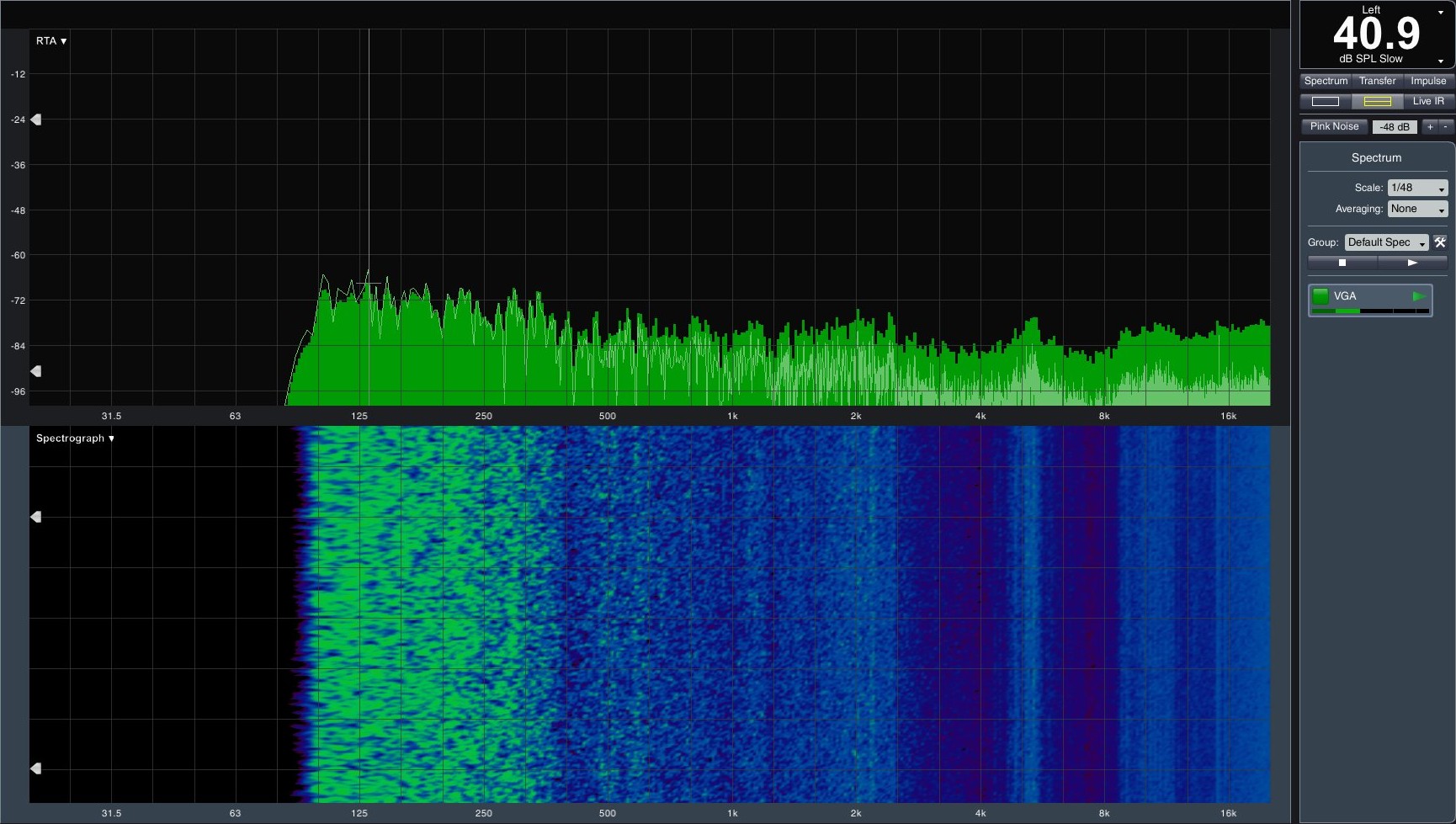

Now we want to see what these measures do to acoustics. At a reading of almost 41 dB(A), the card is distinctly audible and what most would consider loud. The only upside is a more uniform fan noise that can be filtered relatively easily with an insulated case.

A more moderate curve would have left the card between 37 and 38 dB(A), and sufficiently cooled. In the end, the BIOS update gets a small question mark, though we can at least understand it’s easier to implement than a hardware modification.
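Because decibels are logarithmic, a gap of a few dB(A) is larger than it looks. The Python sketch below converts a level difference into a ratio of sound power using the standard definition of the decibel. Treat it as a rough guide: perceived loudness does not scale linearly with sound power, and the 37.5 dB(A) value is simply the midpoint of the range mentioned above.

```python
def sound_power_ratio(delta_db):
    """Ratio of sound power corresponding to a level difference in dB."""
    return 10 ** (delta_db / 10)


new_bios_db = 41.0        # reading with the new BIOS, from the text
moderate_curve_db = 37.5  # midpoint of the 37 to 38 dB(A) range

ratio = sound_power_ratio(new_bios_db - moderate_curve_db)
print(f"{new_bios_db - moderate_curve_db:.1f} dB higher = {ratio:.2f}x the sound power")

# For comparison, the 0.5 dB(A) change seen with the backplate pad alone:
print(f"0.5 dB = {sound_power_ratio(0.5):.2f}x the sound power")
```

By this measure, the new fan curve emits a bit more than twice the sound power of the moderate curve we would have preferred, whereas a 0.5 dB(A) change amounts to roughly 12 percent.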

Conclusion

Two months passed between our first contact with EVGA at the beginning of September and the publication of this update on Tom’s Hardware DE. The company probably should have reacted faster to counter the forum posts from unhappy customers discussing poor thermal performance.

In the end, though, it’s the final result that matters, and EVGA did successfully solve its issue. If the ACX 3.0-equipped cards are used in cases with at least moderate airflow, the BIOS update is superfluous. EVGA would be well-advised to simply install the two thermal pads in mass production and leave the new firmware on its site as an optional download. Otherwise, you’re going to be subjected to 41 dB(A) of noise for a slightly higher GPU Boost frequency. Adding the thermal pads is enough to achieve what gamers want: a cool, but still pleasantly quiet card. Those who absolutely want to go with the noisier option to gain a smidgen of extra performance could either just flash the BIOS, or (even easier) set up a custom fan curve with EVGA's own Precision XOC software.
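For readers unfamiliar with the concept, a fan curve is just a mapping from GPU temperature to fan speed, usually interpolated linearly between a handful of points. The Python sketch below illustrates that idea only. It is not Precision XOC's interface (the curve is set up in the software itself), and the curve points are hypothetical values, not a recommendation.

```python
from bisect import bisect_right

# Hypothetical curve points: (GPU temperature in °C, fan speed in % of maximum).
CURVE = [(40, 30), (60, 45), (75, 65), (85, 100)]


def fan_speed_percent(temp_c, curve=CURVE):
    """Linearly interpolate the fan speed for a given temperature.

    Temperatures below the first point or above the last one are
    clamped to the first and last fan speeds, respectively.
    """
    temps = [t for t, _ in curve]
    if temp_c <= temps[0]:
        return float(curve[0][1])
    if temp_c >= temps[-1]:
        return float(curve[-1][1])
    i = bisect_right(temps, temp_c)
    (t0, s0), (t1, s1) = curve[i - 1], curve[i]
    return s0 + (s1 - s0) * (temp_c - t0) / (t1 - t0)


for temp in (35, 50, 60, 80, 90):
    print(f"{temp} °C -> {fan_speed_percent(temp):.1f}% fan speed")
```

A gentler curve simply uses lower fan speeds at the same temperatures, trading a few degrees for less noise.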

Igor Wallossek wrote a wide variety of hardware articles for Tom's Hardware, with a strong focus on technical analysis and in-depth reviews. His contributions have spanned a broad spectrum of PC components, including GPUs, CPUs, workstations, and PC builds. His insightful articles provide readers with detailed knowledge to make informed decisions in the ever-evolving tech landscape.

ledhead11 Love the article!

I'm really happy with my 2 xtreme's. Last month I cranked our A/C to 64f, closed all vents in the house except the one over my case and set the fans to 100%. I was able to game with the 2-2.1ghz speed all day at 4k. It was interesting to see the GPU usage drop a couple % while fps gained a few @ 4k and able to keep the temps below 60c.

After it was all said and done though, the noise wasn't really worth it. Stock settings are just barely louder than my case fans and I only lose 1-3fps @ 4k over that experience. Temps almost never go above 60c in a room around 70-74f. My mobo has the 3 spacing setup which I believe gives the cards a little more breathing room.

The zotac's were actually my first choice but gigabyte made it so easy on amazon and all the extra stuff was pretty cool.

I ended up recycling one of the sli bridges for my old 970's since my board needed the longer one from nvida. All in all a great value in my opinion.

One bad thing I forgot to mention and its in many customer reviews and videos and a fair amount of images-bent fins on a corner of the card. The foam packaging slightly bends one of the corners on the cards. You see it right when you open the box. Very easily fixed and happened on both of mine. To me, not a big deal, but again worth mentioning.

redgarl The EVGA FTW is a piece of garbage! The video signal is dropping randomly and make my PC crash on Windows 10. Not only that, but my first card blow up after 40 days. I am on my second one and I am getting rid of it as soon as Vega is released. EVGA drop the ball hard time on this card. Their engineering design and quality assurance is as worst as Gigabyte. This card VRAM literally burn overtime. My only hope is waiting a year and RMA the damn thing so I can get another model. The only good thing is the customer support... they take care of you.

Nuckles_56 What I would have liked to have seen was a list of the maximum overclocks each card got for core and memory and the temperatures achieved by each cooler

Hupiscratch It would be good if they get rid of the DVI connector. It blocks a lot of airflow on a card that's already critical on cooling. Almost nobody that's buying this card will use the DVI anyway.

Nuckles_56 18984968 said: It would be good if they get rid of the DVI connector. It blocks a lot of airflow on a card that's already critical on cooling. Almost nobody that's buying this card will use the DVI anyway.

Two things here, most of the cards don't vent air out through the rear bracket anyway due to the direction of the cooling fins on the cards. Plus, there are going to be plenty of people out there who bought the cheap Korean 1440p monitors which only have DVI inputs on them who'll be using these cards

ern88 I have the Gigabyte GTX 1080 G1 and I think it's a really good card. Can't go wrong with buying it.

The best card out of box is eVGA FTW. I am running two of them in SLI under Windows 7, and they run freaking cool. No heat issue whatsoever.

Mike_297 I agree with 'THESILVERSKY'; Why no Asus cards? According to various reviews their Strixx line are some of the quietest cards going!

trinori LOL you didnt include the ASUS STRIX OC ?!?

well you just voided the legitimacy of your own comparison/breakdown post didnt you...

"hey guys, here's a cool comparison of all the best 1080's by price and performance so that you can see which is the best card, except for some reason we didnt include arguably the best performing card available, have fun!"

lol please..