Intel Arc Graphics Performance Revisited: DX9 Steps Forward, DX12 Steps Back (Updated)

Over a dozen driver updates in the past four months

Intel’s Arc Alchemist GPUs launched toward the latter part of 2022, vying for a spot among the best graphics cards. You can see where they land on our GPU benchmarks hierarchy as well, but that's only part of the story. Intel has been busily updating drivers on a regular basis since launch, and rarely does a fortnight pass without at least one new driver to test.

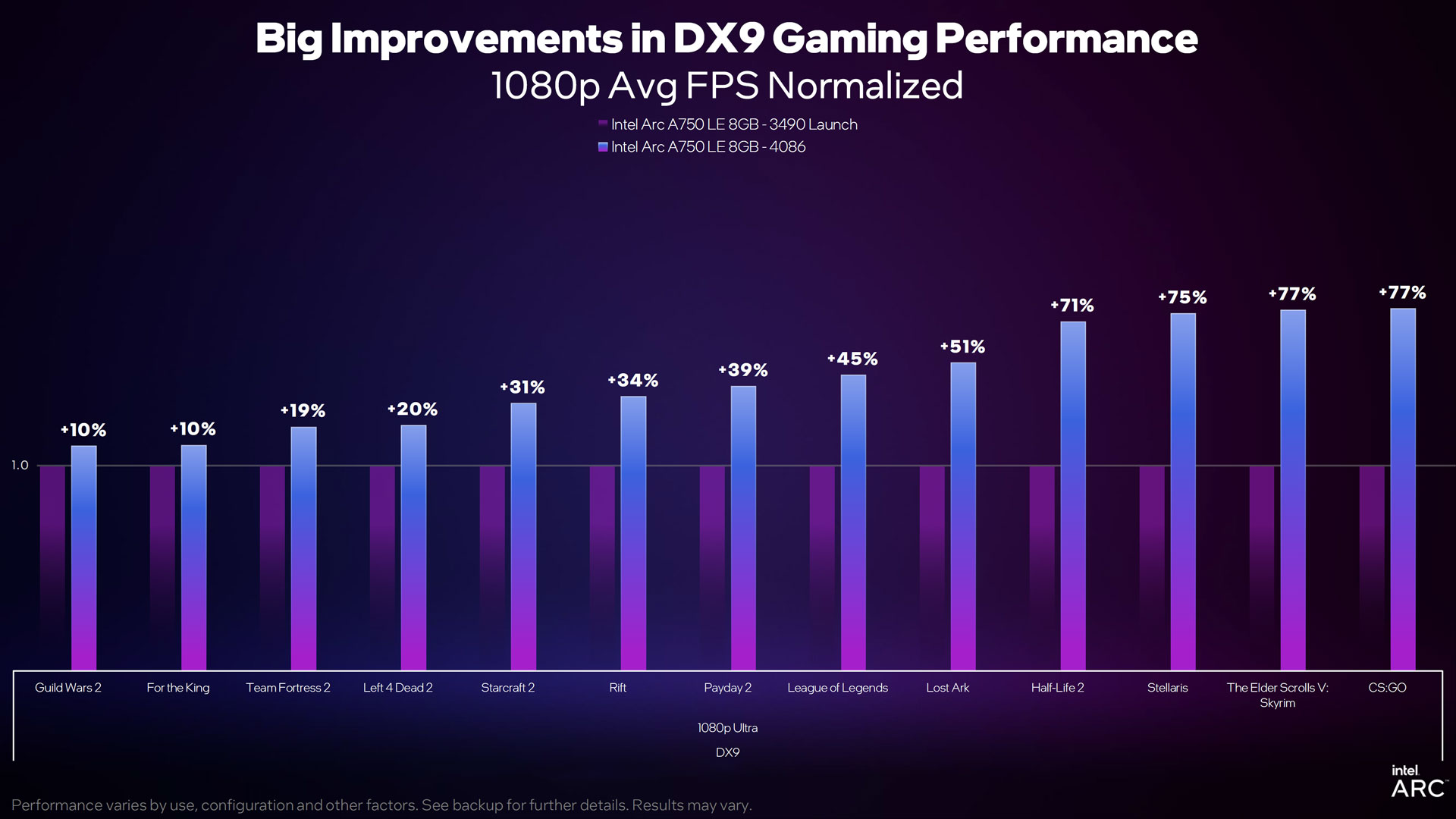

And that's the crux of the story: How much better are Intel’s latest drivers compared to the original launch drivers? The company made a lot of noise about its improved DirectX 9 performance, claiming 43% higher performance on the A750 with recent drivers compared to the original 3490 launch drivers — and at the same time dropping the price of the A750 to $250 down from $290. What about newer games, though?

We set about testing (and retesting) every Arc graphics card, including the Arc A770 8GB using an ASRock model that we haven't quite got around to reviewing just yet, due to all the retesting that's been going on.

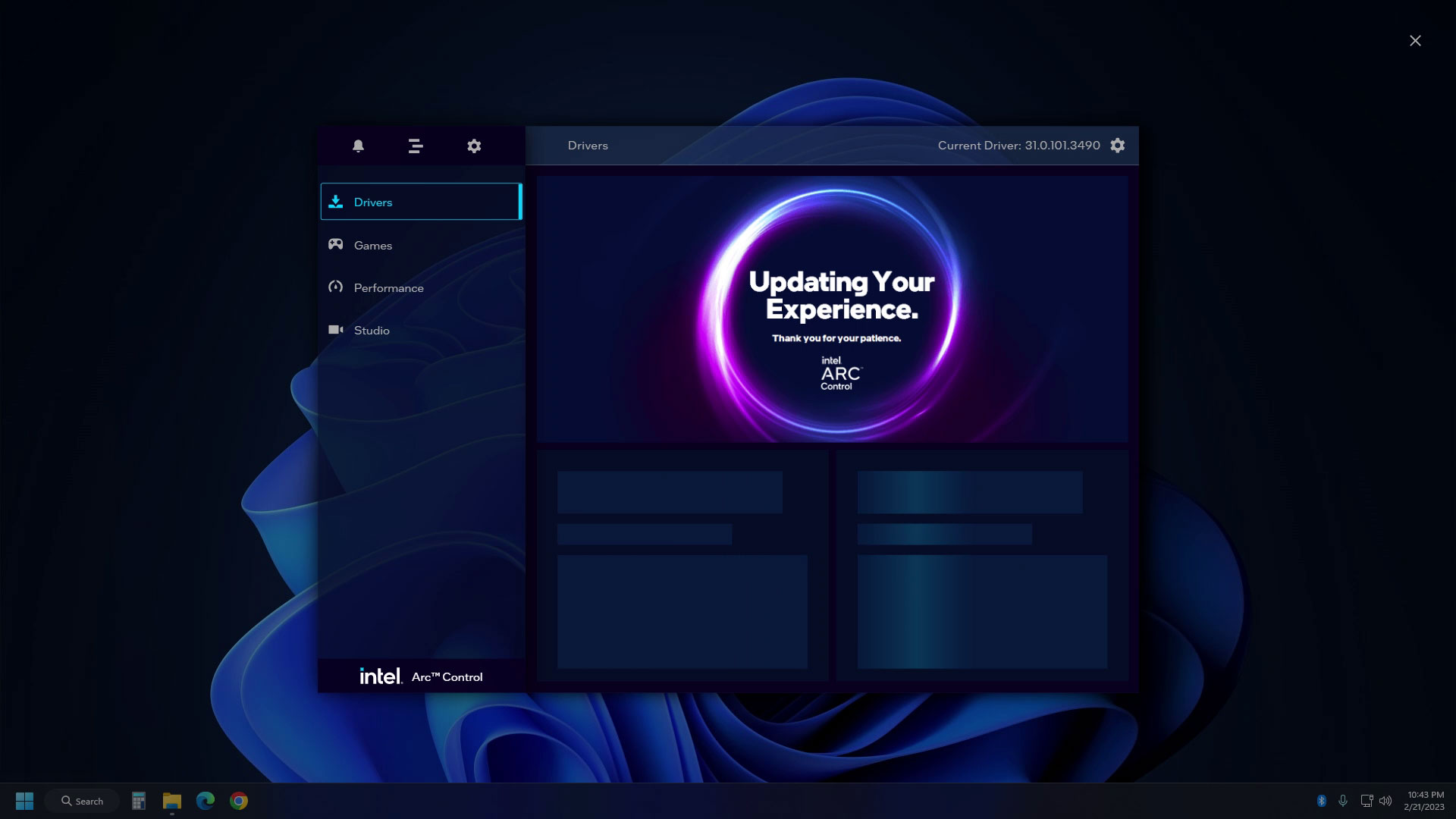

We began our testing a couple of weeks back, right after the 4123 Intel drivers became available. Last week, version 4125 drivers came out, but a quick check on one of the Arc cards indicates the only changes are related to some recent game launches and that the performance otherwise remains the same as the 4123 drivers for our test suite.

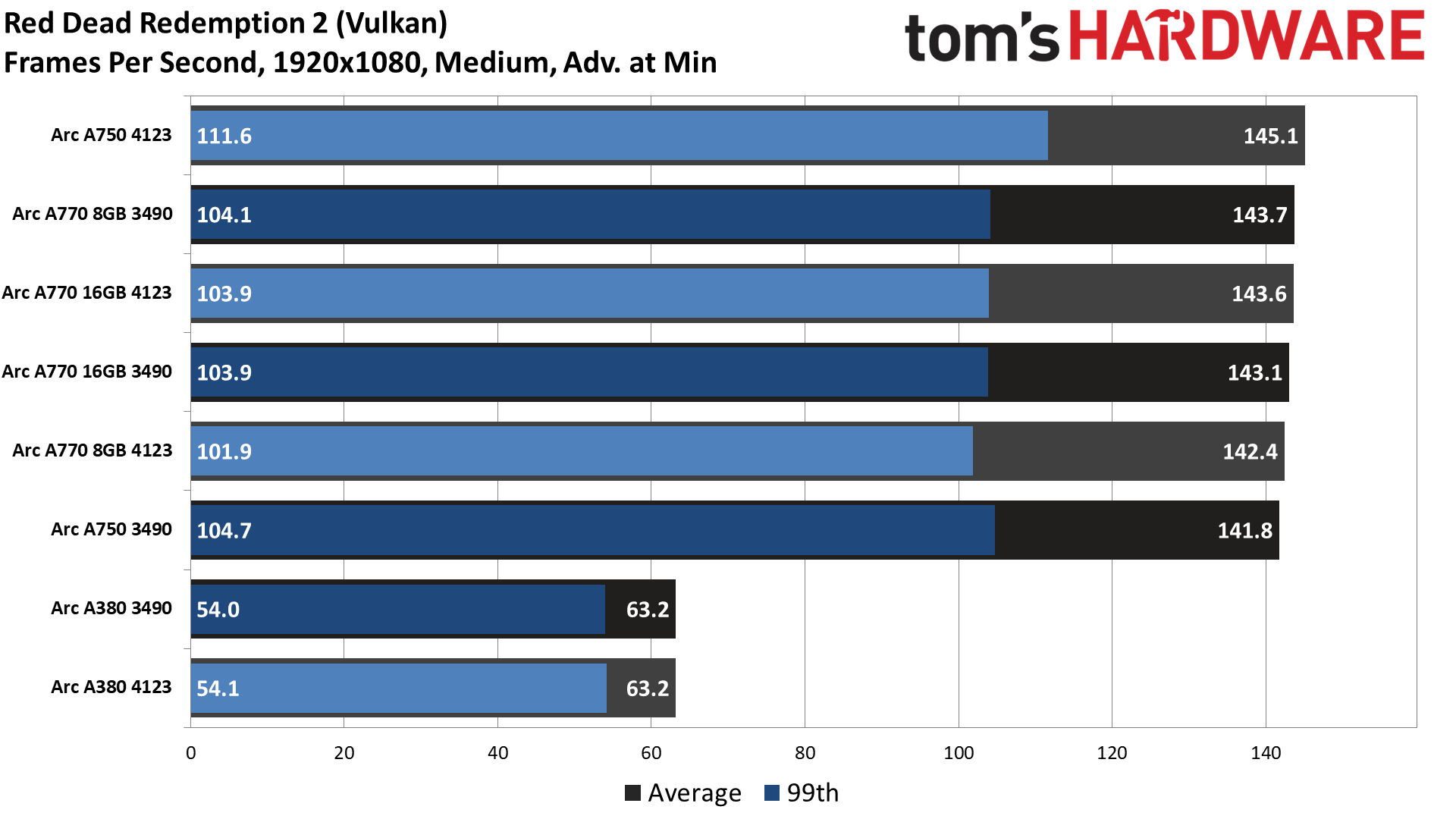

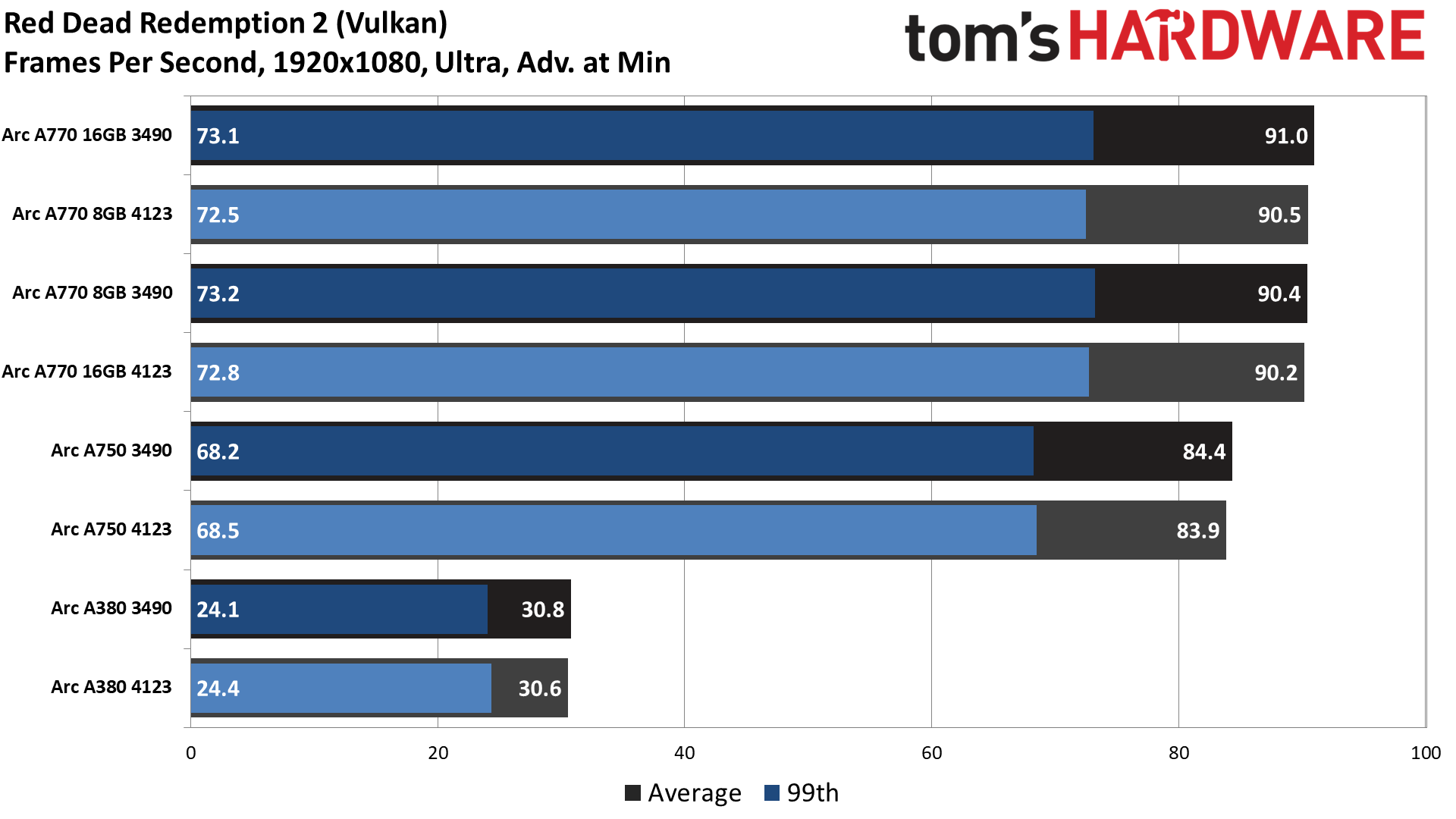

Update: Intel just released the 4146 drivers on February 28, 2023. These fix a problem that we noticed with Red Dead Redemption 2 crashing on the Arc A750, among other things. We have included the results for that one game from the newer drivers (as before we couldn't get a result).

Plugging the Holes in the Arc

Arc arrived with what were arguably the best GPU drivers Intel has ever created – but that’s not saying much. A few years ago, a lot of games simply refused to work at all on Intel’s integrated graphics solutions — and even those games that worked would often perform poorly. Intel’s DG1 helped pave the way for more frequent driver updates, but things were still iffy back in 2021.

Since the first dedicated Arc A380 cards started showing up in China — which meant they also started getting shipped to individuals around the world — Intel has been cranking out updated drivers on a regular basis. There are presently eleven different versions of Arc drivers available from Intel: five WHQL (Windows Hardware Quality Labs) certified and six beta drivers, but there were other “hotfix” or beta drivers that are no longer listed.

Many of the updates have been targeted at one or two specific games — there were two different drivers that addressed problems with Spider-Man Remastered performance on the Arc A380, for example. Other updates have had much further reaching ramifications, with the biggest change being DirectX 9 optimizations.

DirectX 9, 20 Years Later

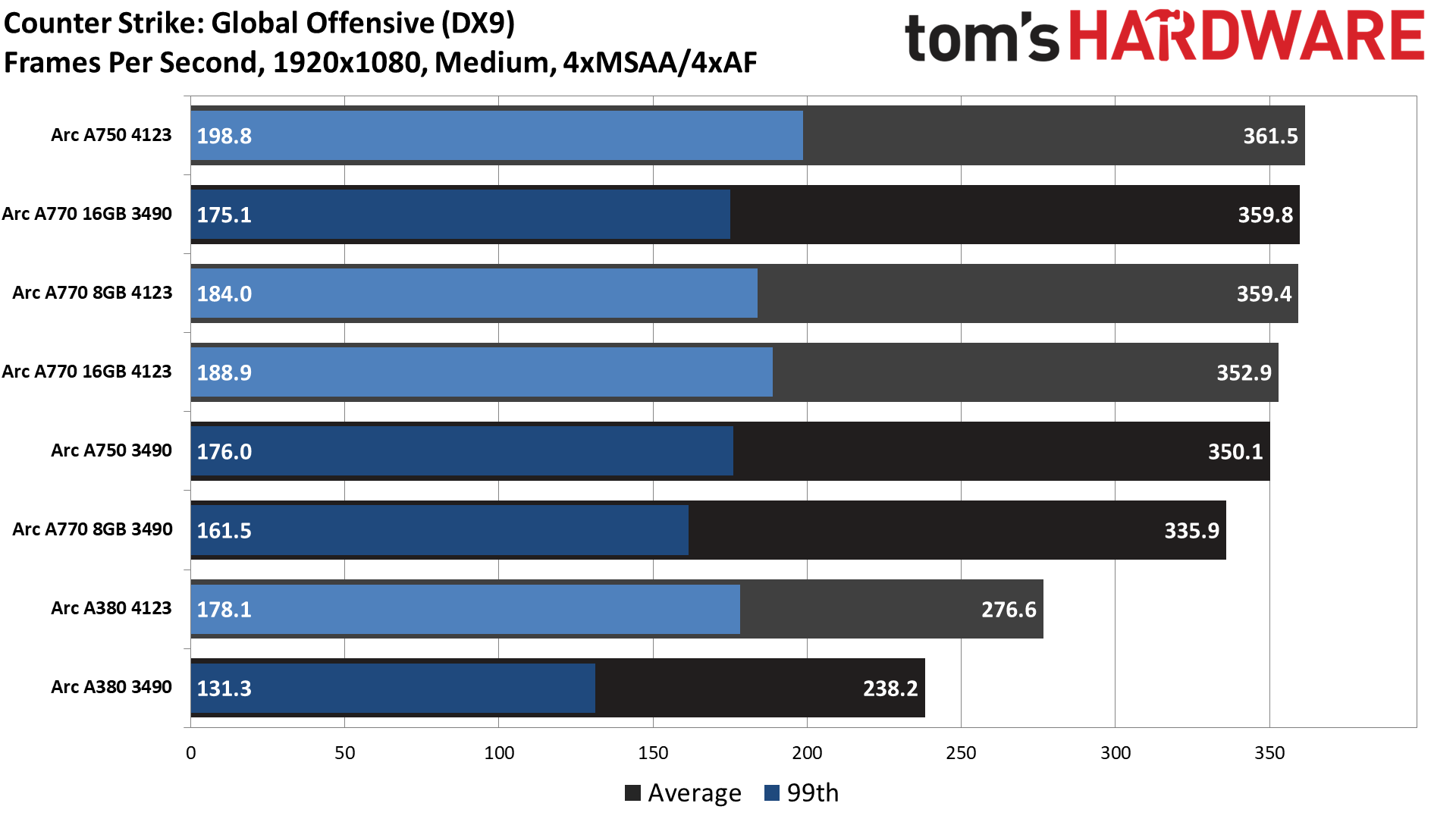

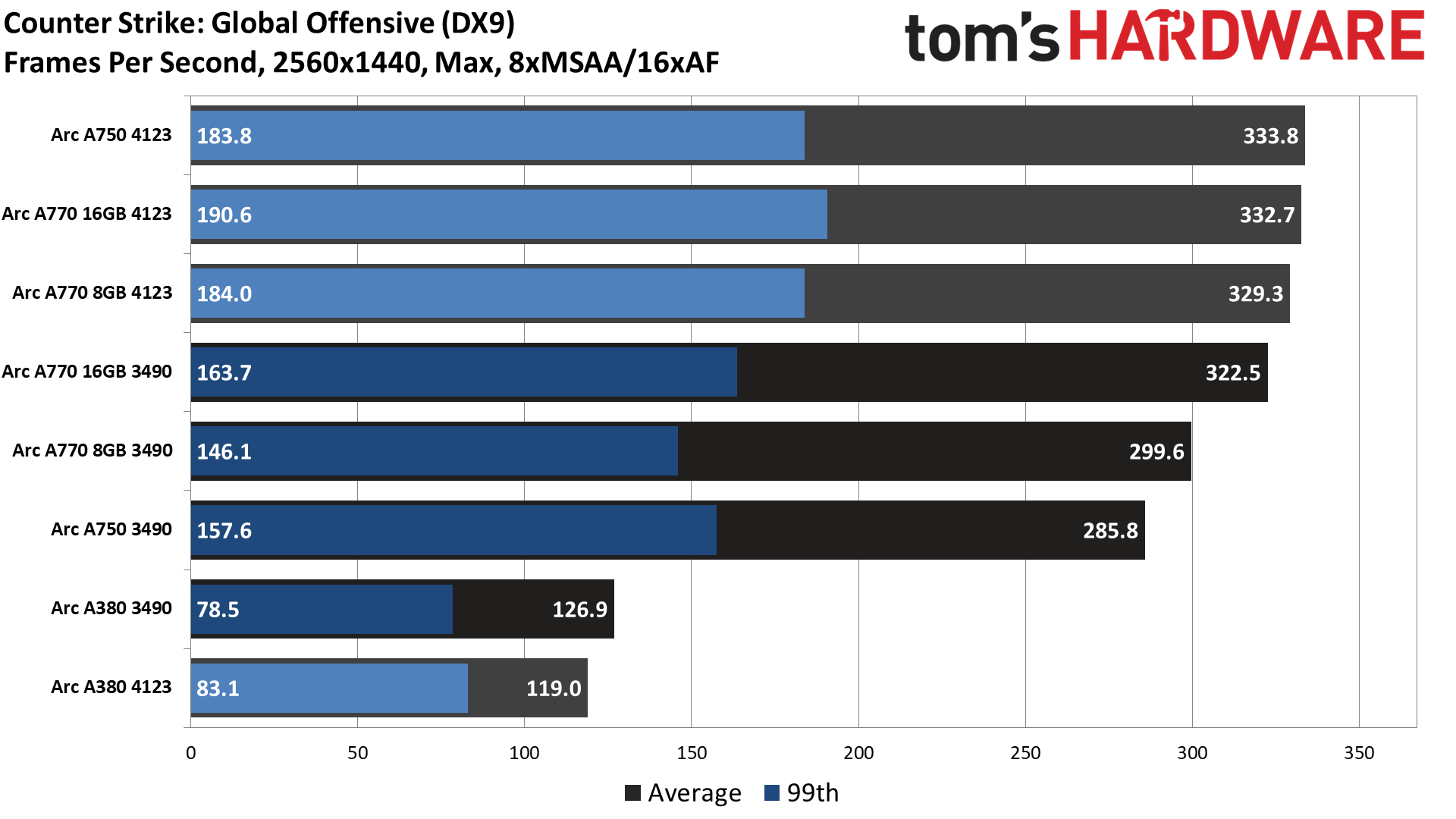

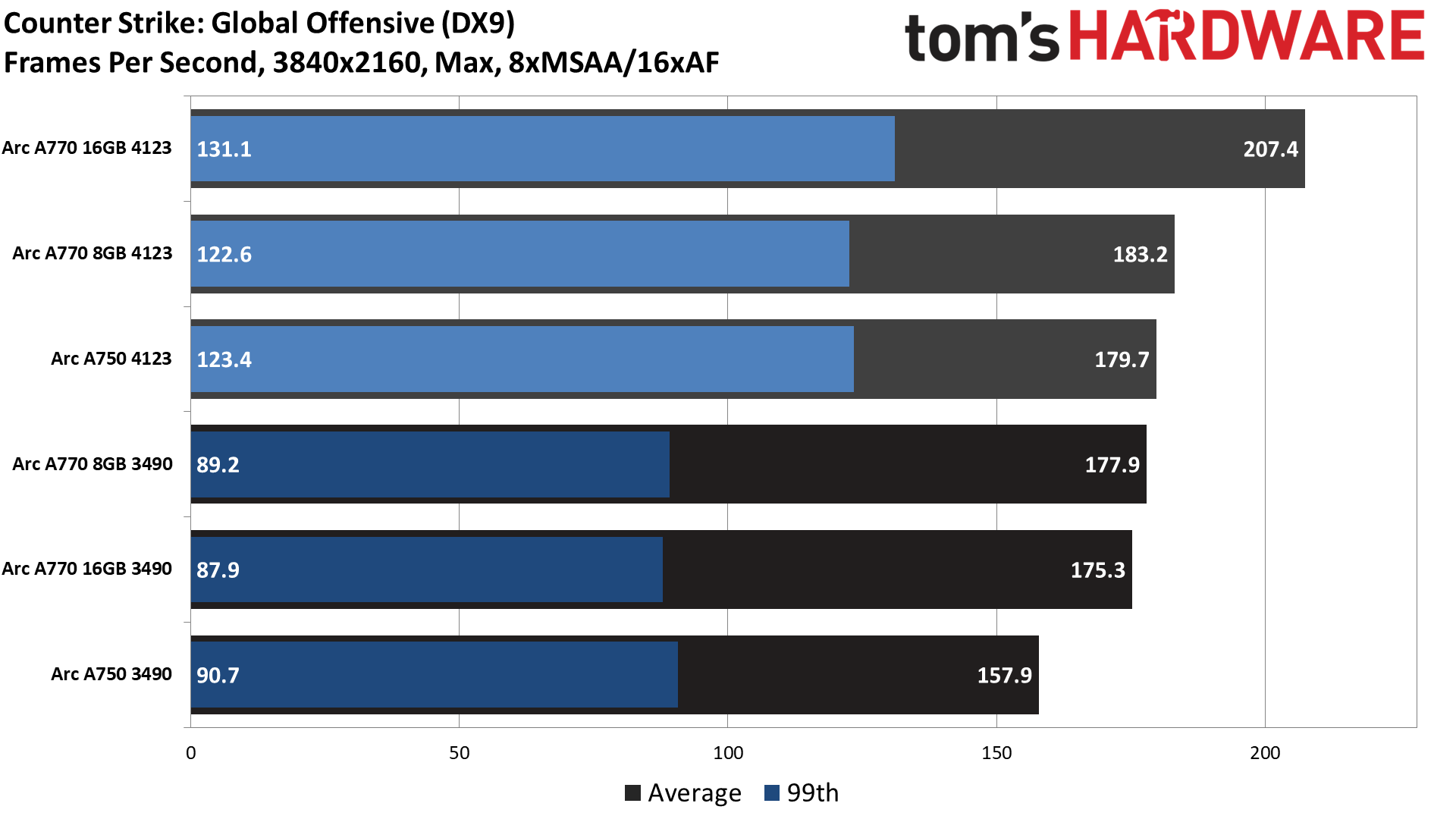

Microsoft’s DirectX 9 API became publicly available back in 2002, but it continues to see quite a bit of use even today. Some of that goes back to the venerable Valve Source engine used for Half-Life 2, which saw widespread adoption for various mods and spinoff games like Counter-Strike: Global Offensive, Left 4 Dead 2 and Team Fortress 2; StarCraft 2 and League of Legends likewise continue to use DX9.

Considering how old the API is, it’s easy to understand Intel’s decision to “focus on modern [DX12 and Vulkan] APIs” for the launch of the Arc graphics cards. Most recently released games use DirectX 12 or DirectX 11, or sometimes Vulkan. Why put a bunch of effort into trying to optimize the drivers for older games? For DX9 compatibility, Intel opted for support via DX12 emulation, which probably sounded like a good idea at some point.

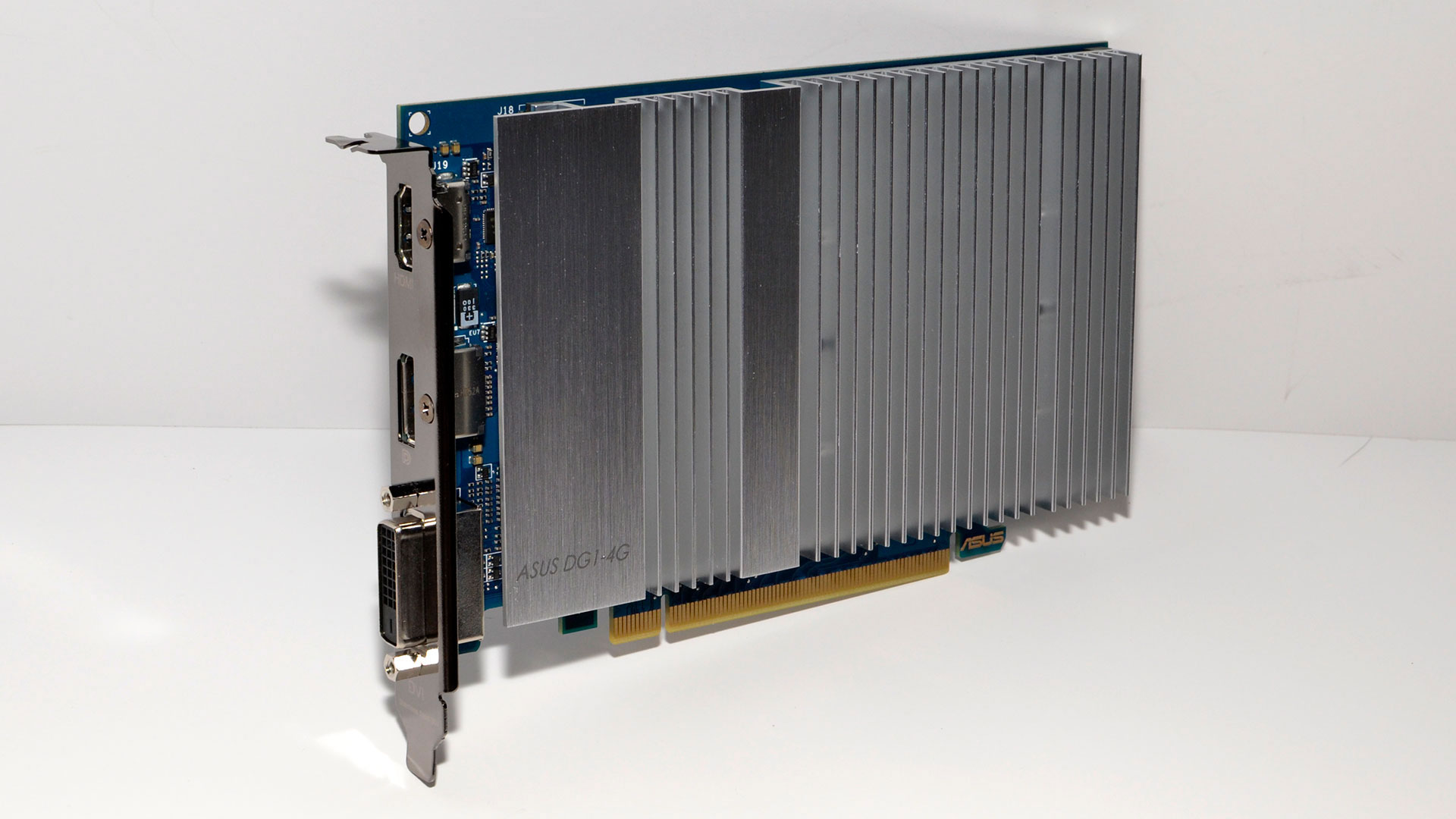

Arc isn't Intel's first discrete graphics rodeo. Intel announced its intention to create dedicated graphics cards back in November 2017, when it hired Raja Koduri shortly after his departure from AMD. This wasn’t going to be a quick and easy task, though the initial plan almost certainly called for something competitive before 2022. And there was a precursor to Arc, even if most people never saw it.

First shown off at the start of 2020 during CES, Intel’s DG1 test vehicle (Discrete Graphics 1) felt more like a way to drum up hype than something substantive. The hardware finally started shipping, in limited fashion, in mid-2021, with cards from Asus and Gunnir. The hardware basically consisted of the integrated graphics used in Intel’s 11th Gen Tiger Lake mobile processors, minus the CPU and with dedicated VRAM. But Intel opted to stick with LPDDR4x, the same memory used by the laptop processors.

How did it perform? In a word, poorly. In our DG1 benchmarks, it was roughly on par with Nvidia’s GT 1030 GDDR5 graphics card, an anemic solution that launched in 2017, cost $69, and wasn’t fit for much more than 720p gaming at low to medium settings. DG1 was also about as fast as AMD’s Vega 8 integrated graphics found in Ryzen 4000 U-series (15W) processors. But it was a start.

The problem is that a lot of older games remain immensely popular. Token support for DX9 via DX12 emulation might have sounded fine on paper, but in practice it was a glaring indication that Intel’s GPUs and drivers weren’t as good as AMD and Nvidia drivers. Even though most DX9 games aren't particularly demanding, especially when compared with recent DX11/12 releases, performance in DX9 is only as good as the emulation.

Simply put, at launch, the DX9 via DX12 emulation for Intel Arc wasn't very good. It was very prone to fluctuations in frametimes, leading to stuttering in games. People complained and Intel decided to rethink its DX9 strategy. The solution Intel came up with was to leverage more translation layers to turn DX9 API calls into either DX12 calls or Vulkan calls. The DX12 emulation still comes from Intel’s internal work on the drivers, or perhaps Microsoft’s D3D9On12 mapping layer, but now with a bit more tuning.

The bigger change appears to be the use of DXVK (DirectX to Vulkan), the open-source translation layer used by Valve's Proton for Steam, for “some cases.” Intel hasn’t detailed exactly which games use DXVK and which use DX12 emulation, but whatever the changes, frametime consistency and average performance have improved substantially. This was all rolled out with Arc’s December driver update.

This is great news if you want to buy an Arc GPU for a modern PC to run old games. Remember: PCIe Resizable BAR is basically required for Arc GPUs, which means you generally need a 10th gen or later Intel CPU, or Ryzen 5000 or later AMD CPU. Otherwise, you’ll lose about 20 percent of the potential performance, based on testing, at which point you should just buy an AMD or Nvidia GPU.

So Intel can't simply ignore older games, as weak performance in games that millions of people still play on a regular basis makes all Intel GPUs look bad. But how much has really changed?

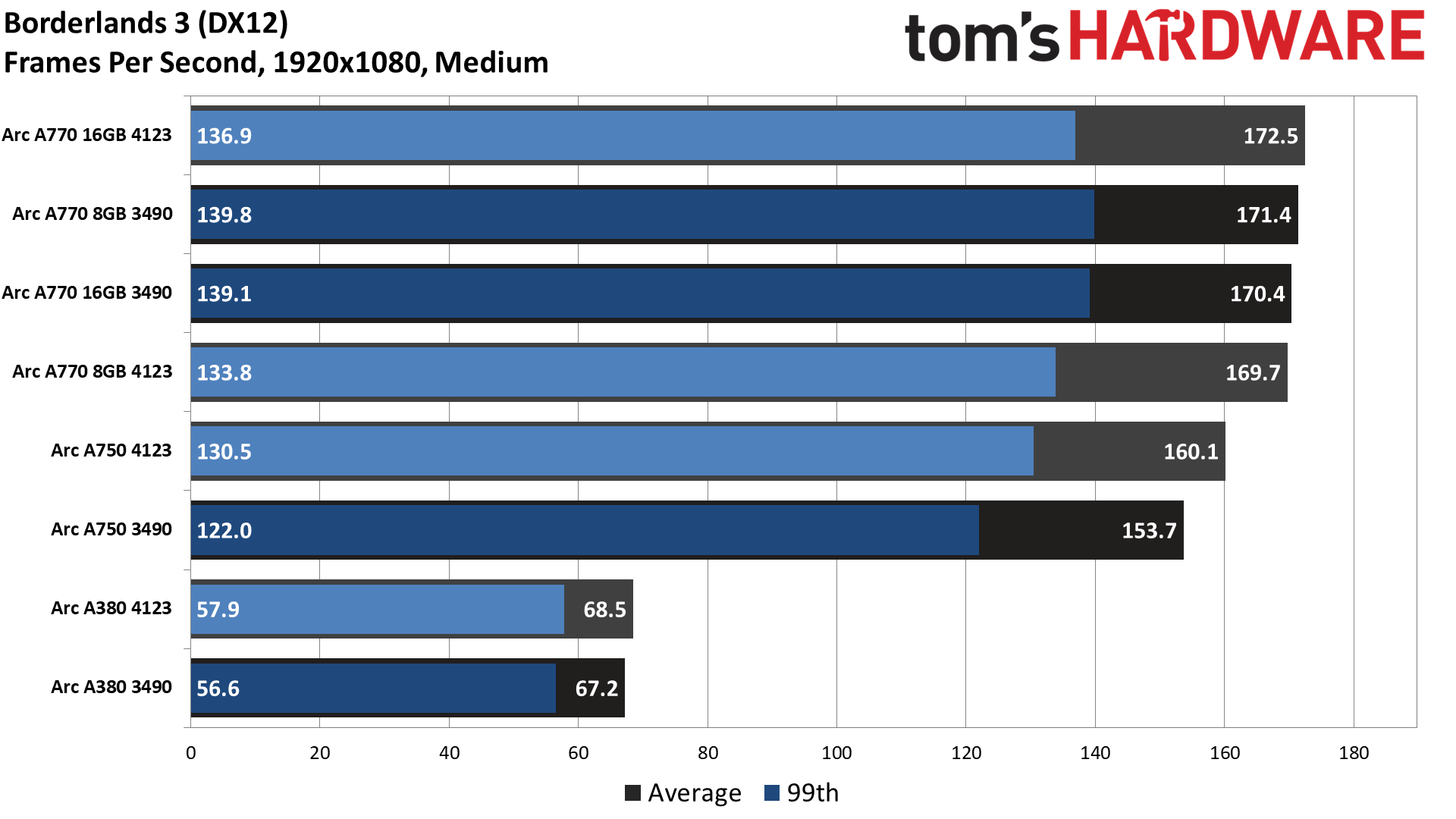

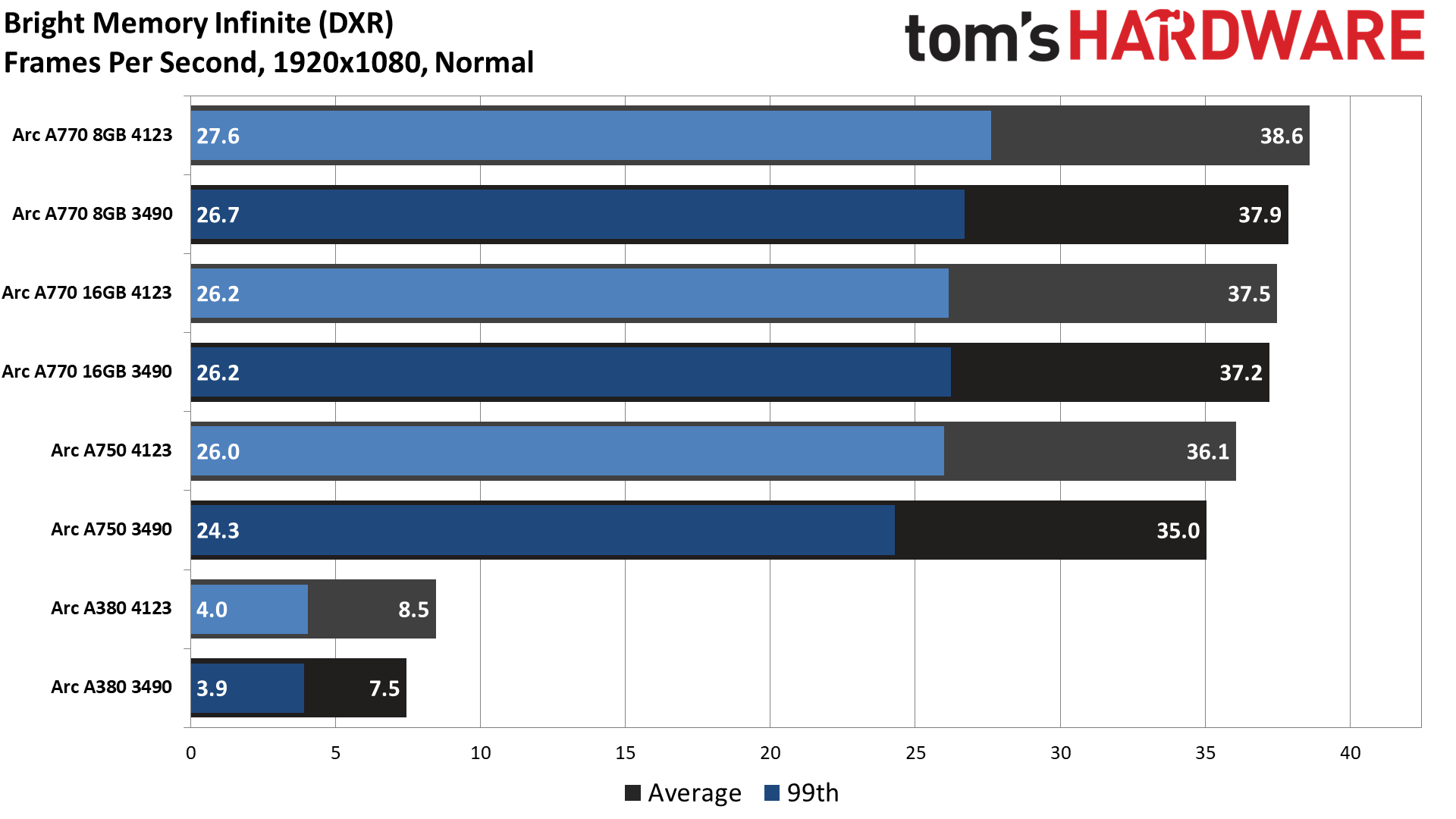

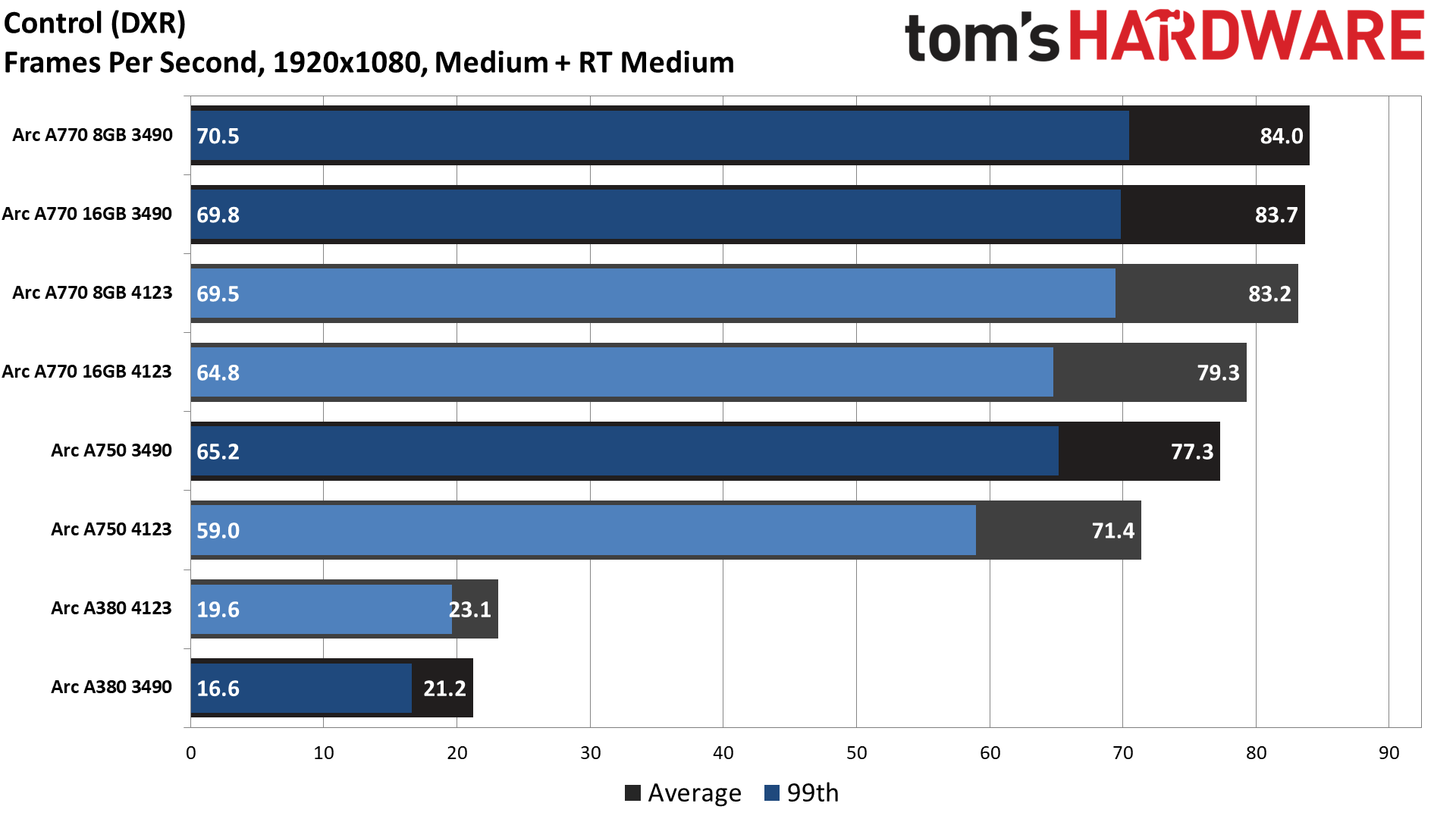

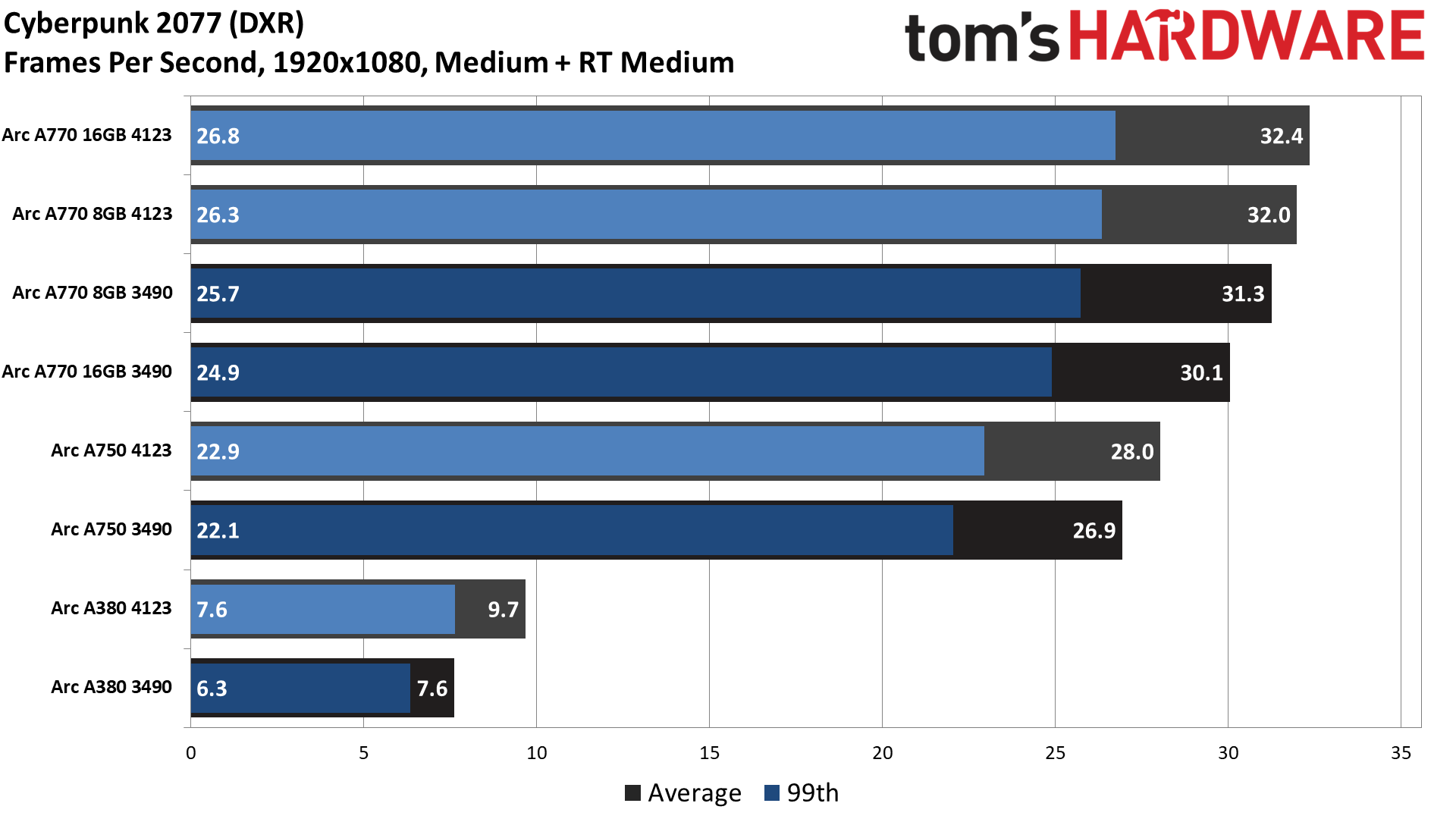

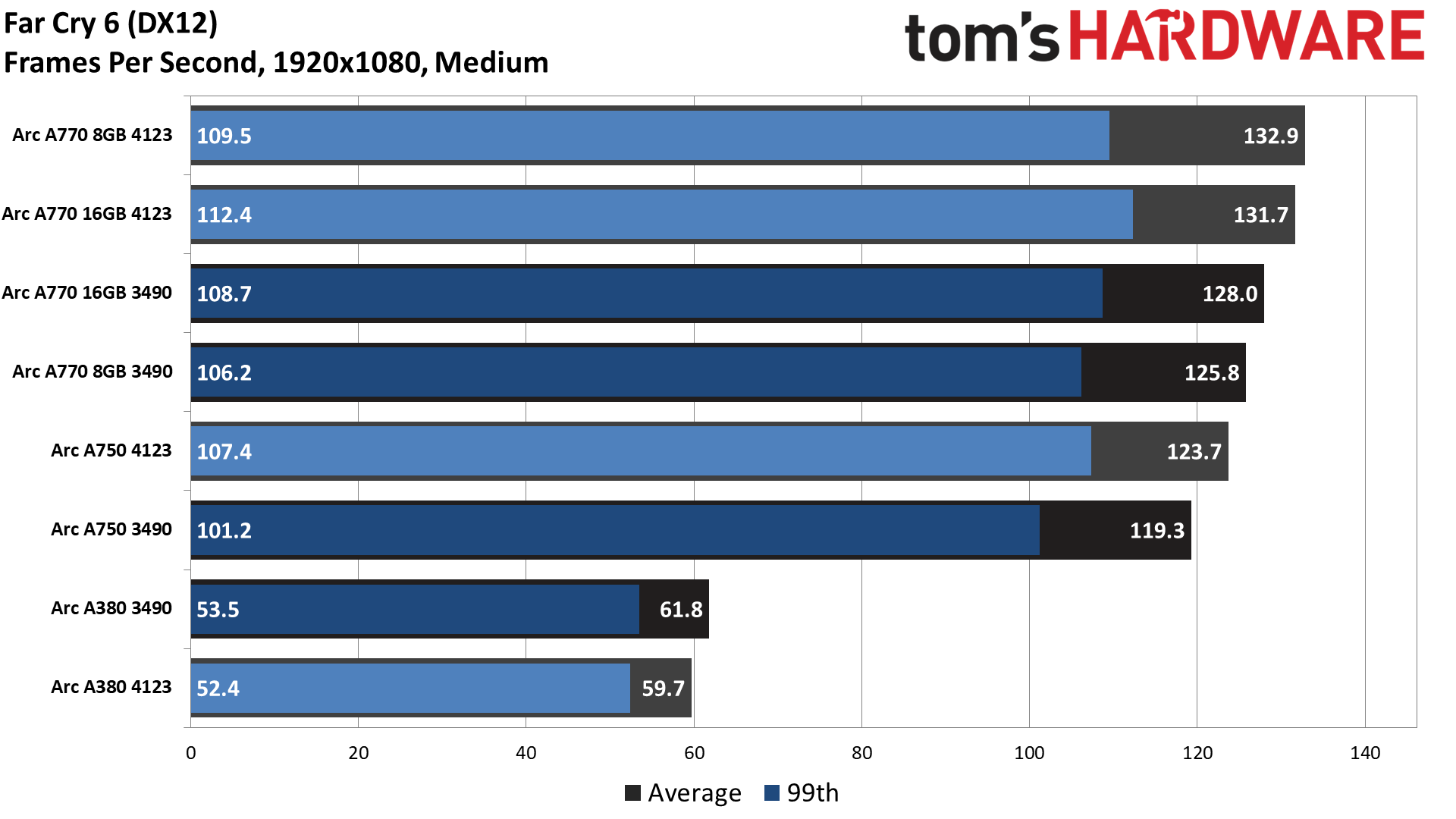

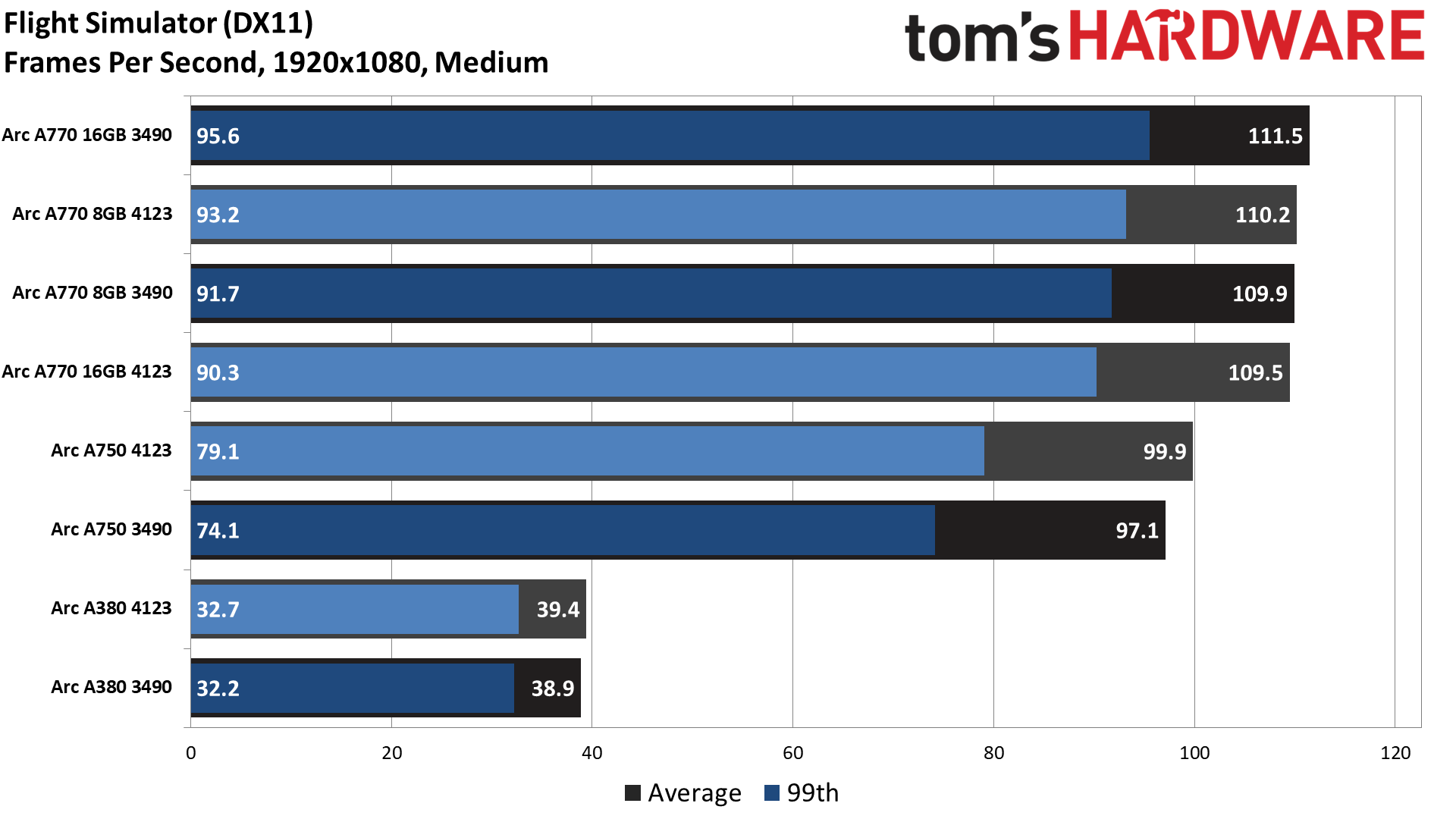

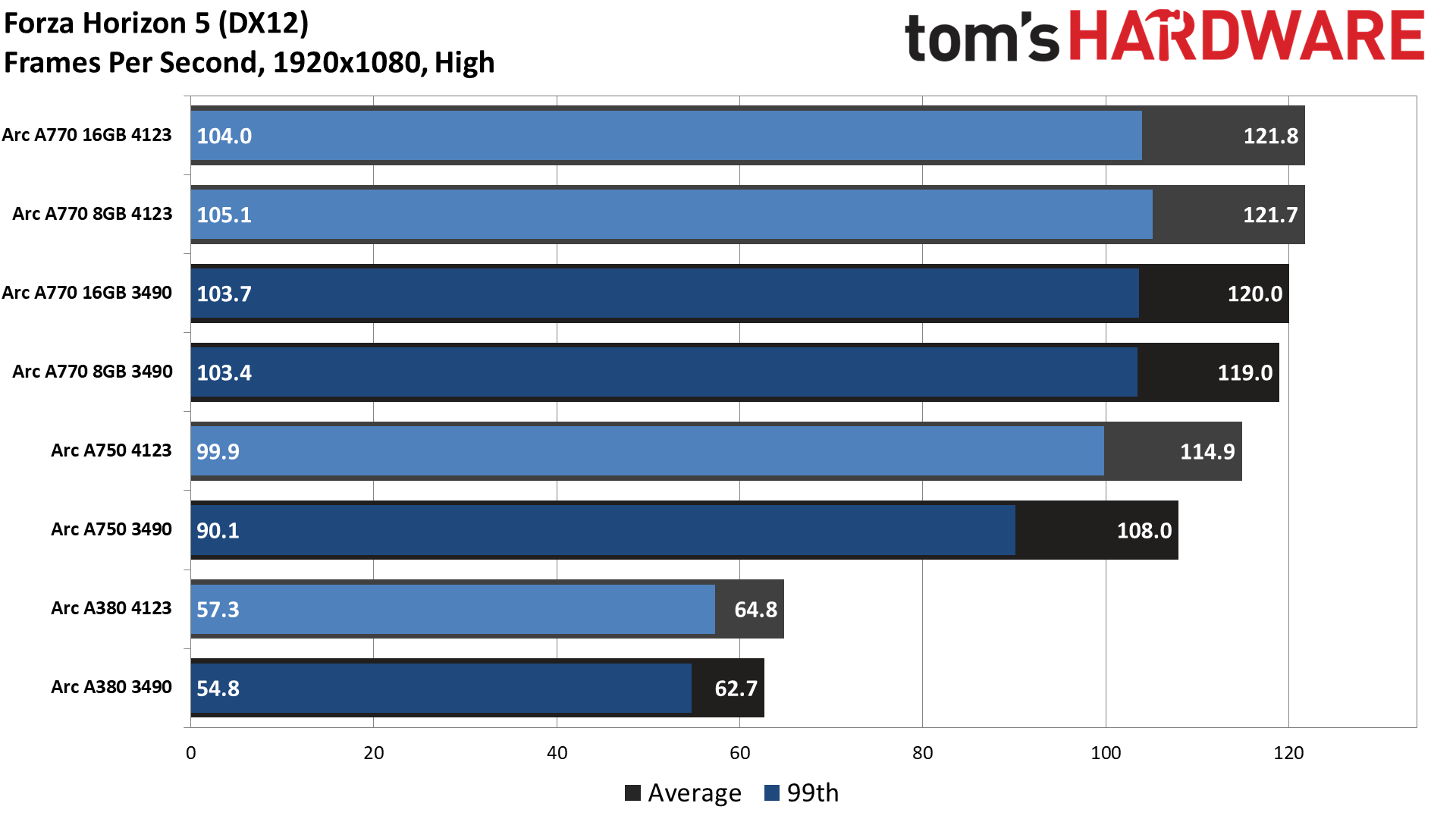

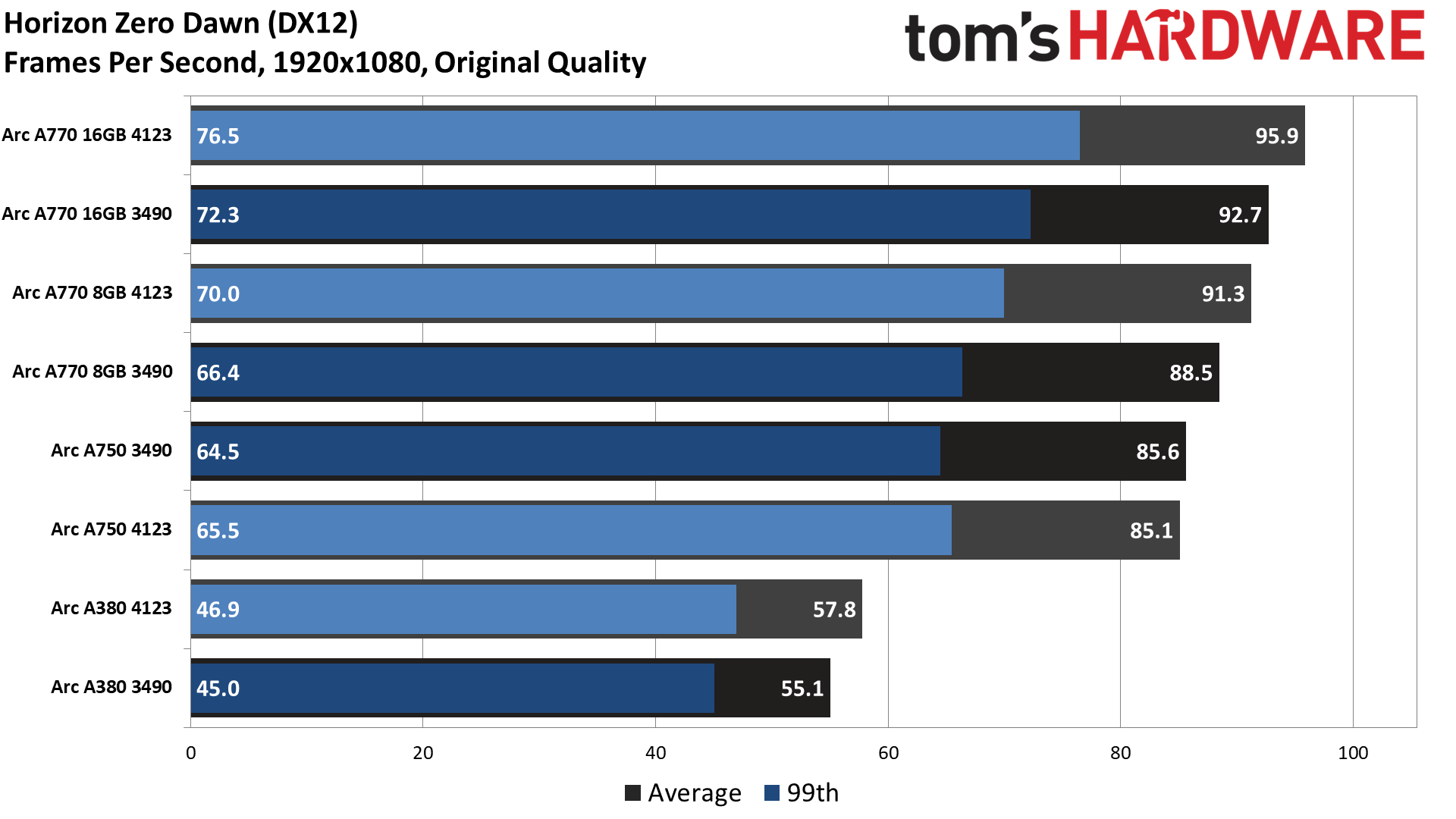

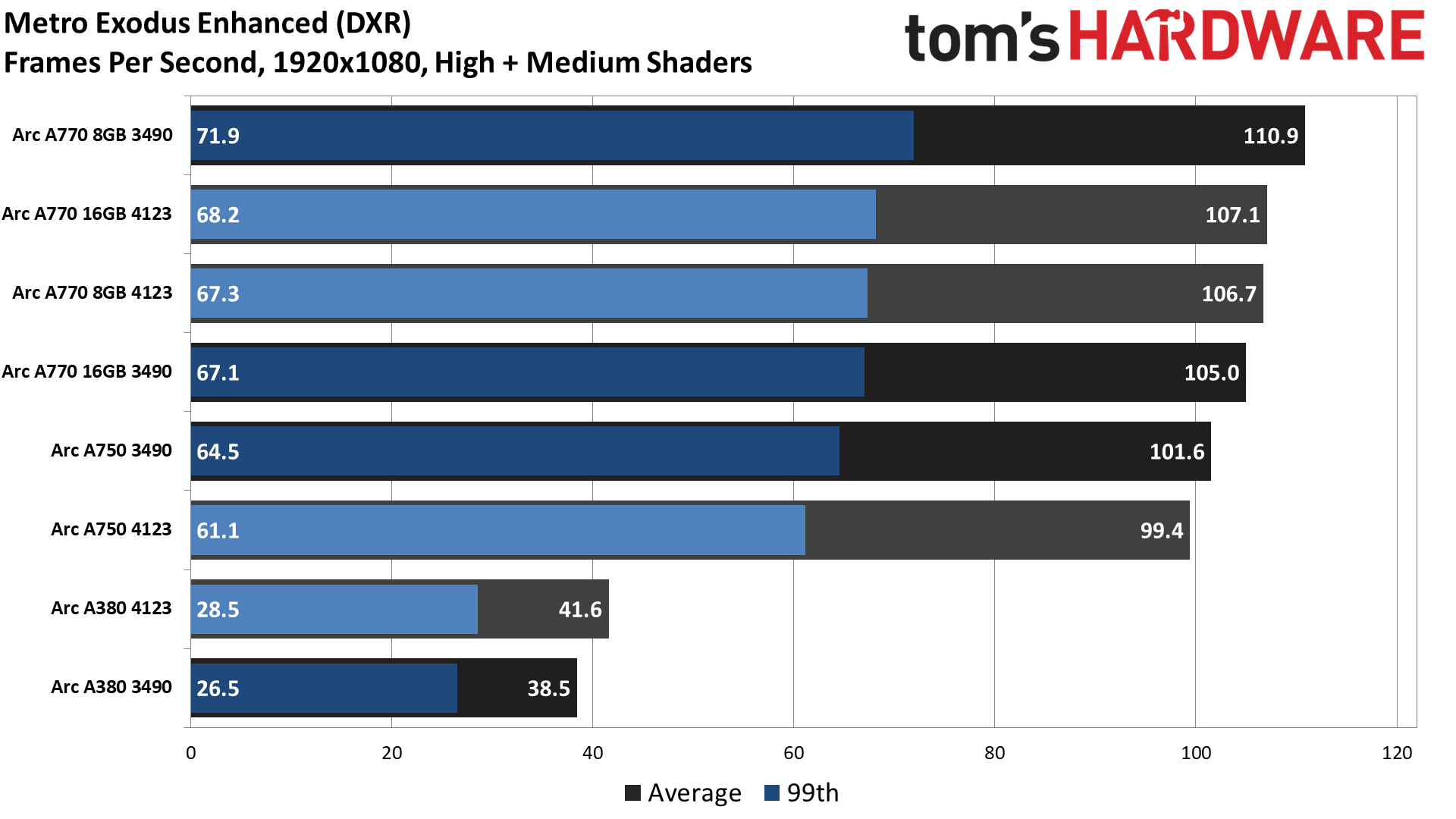

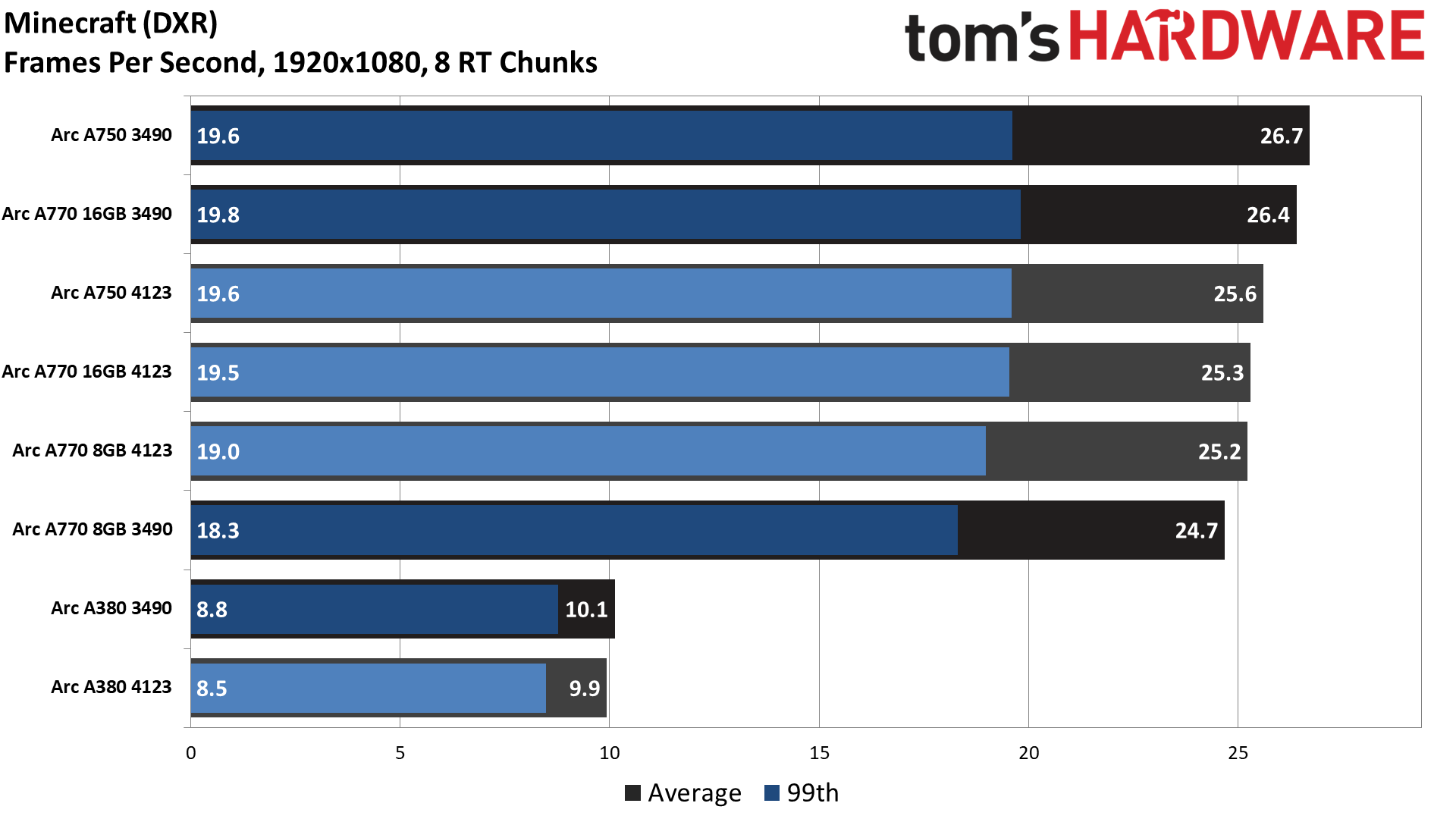

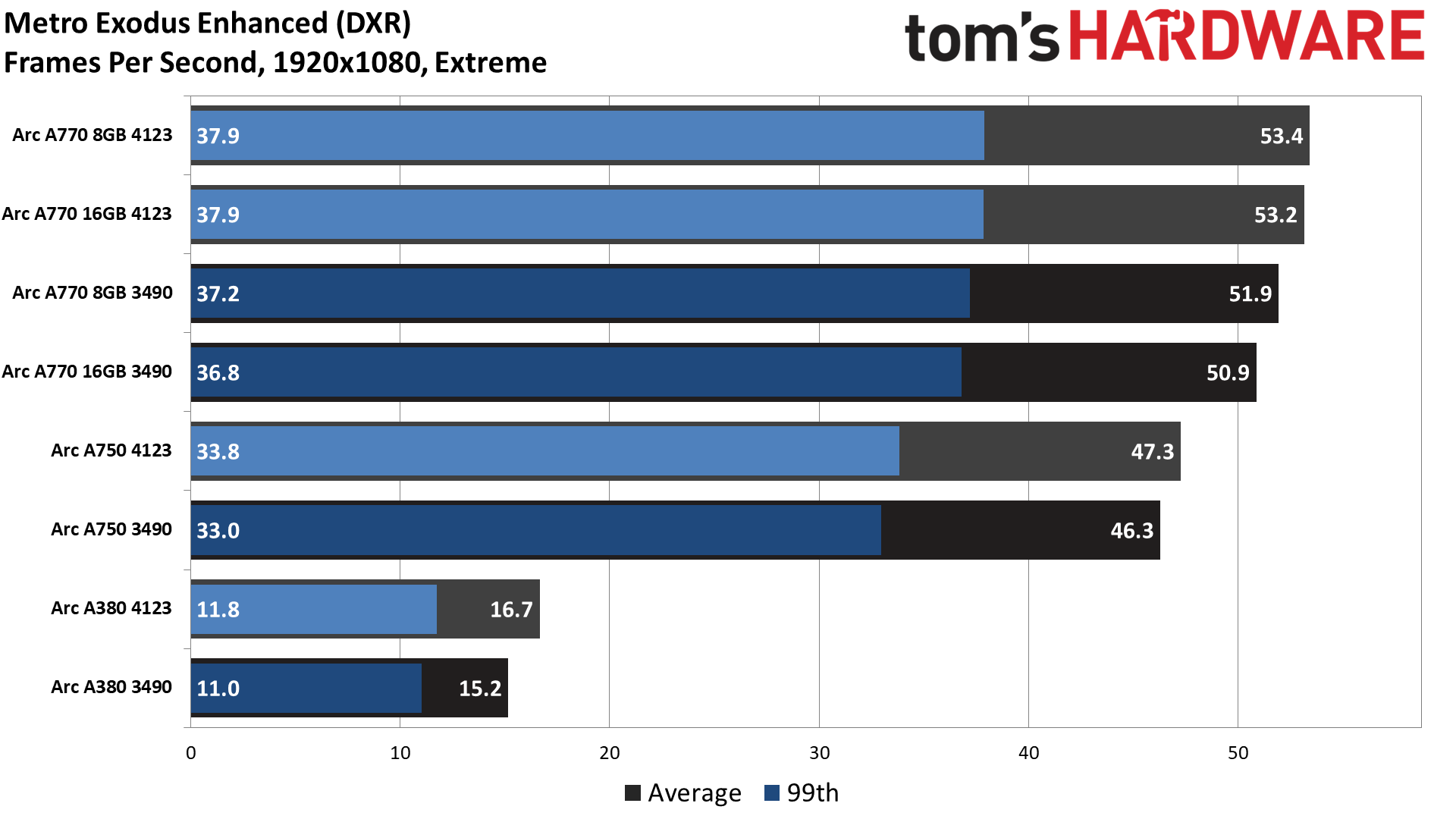

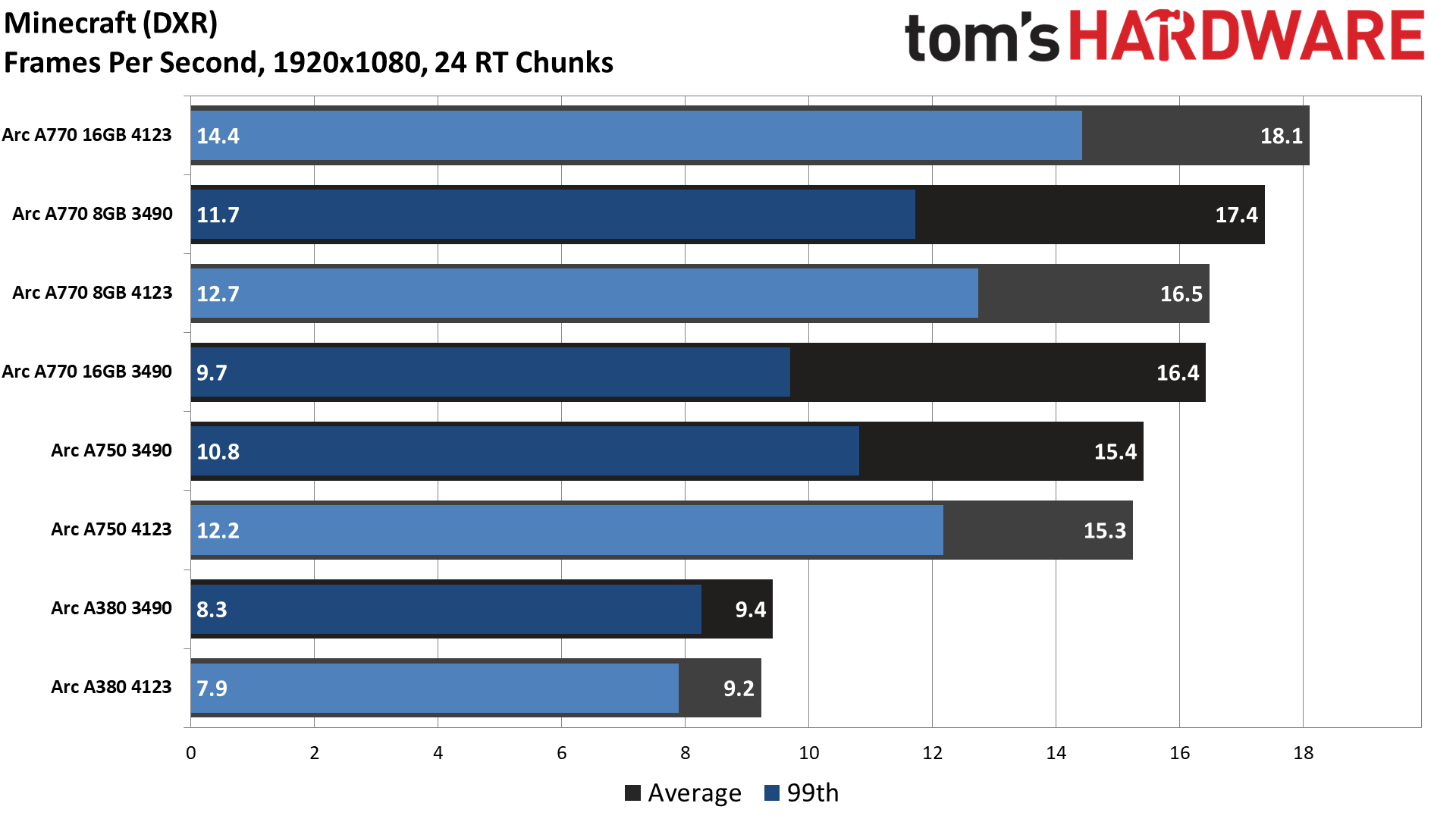

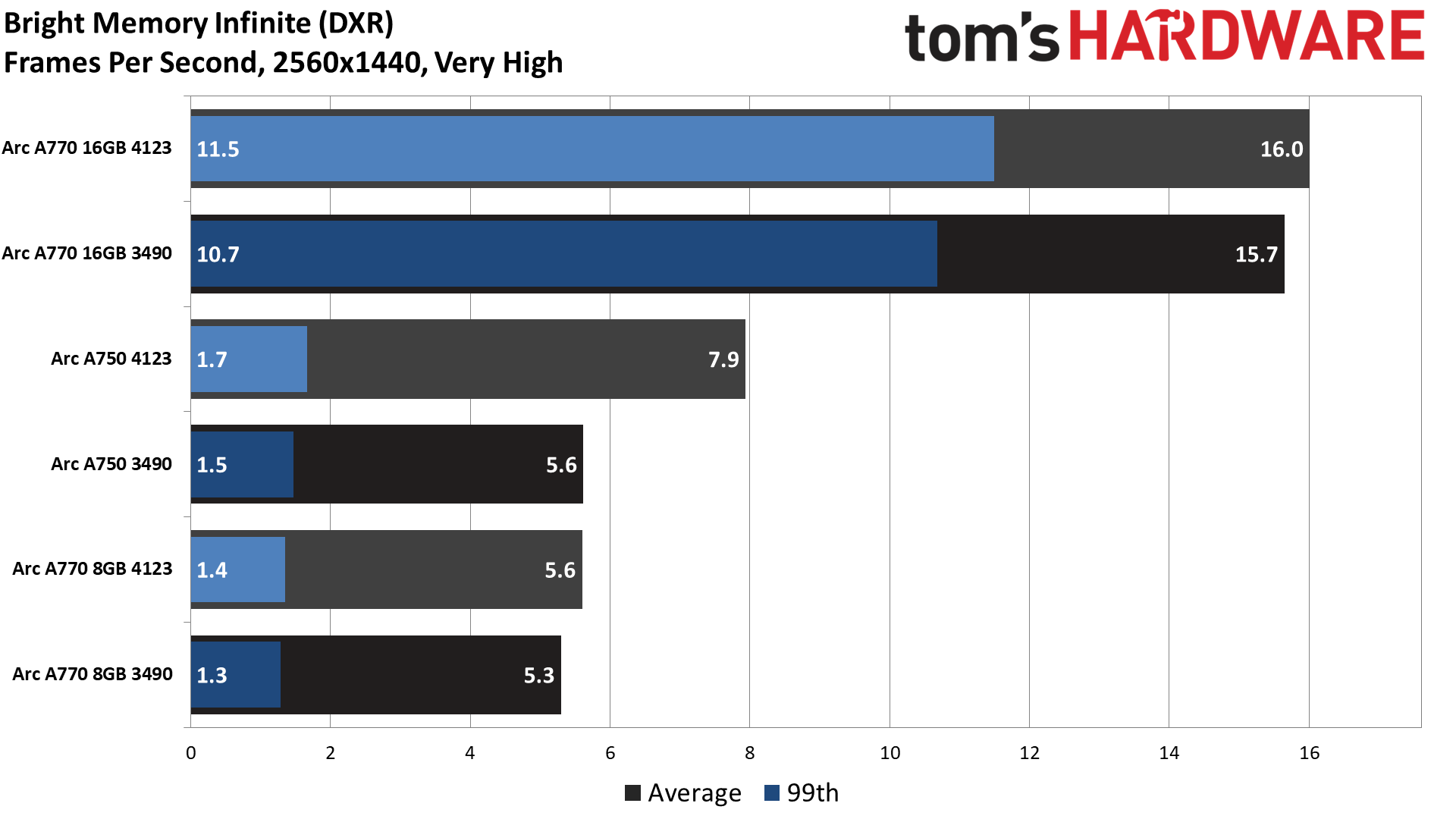

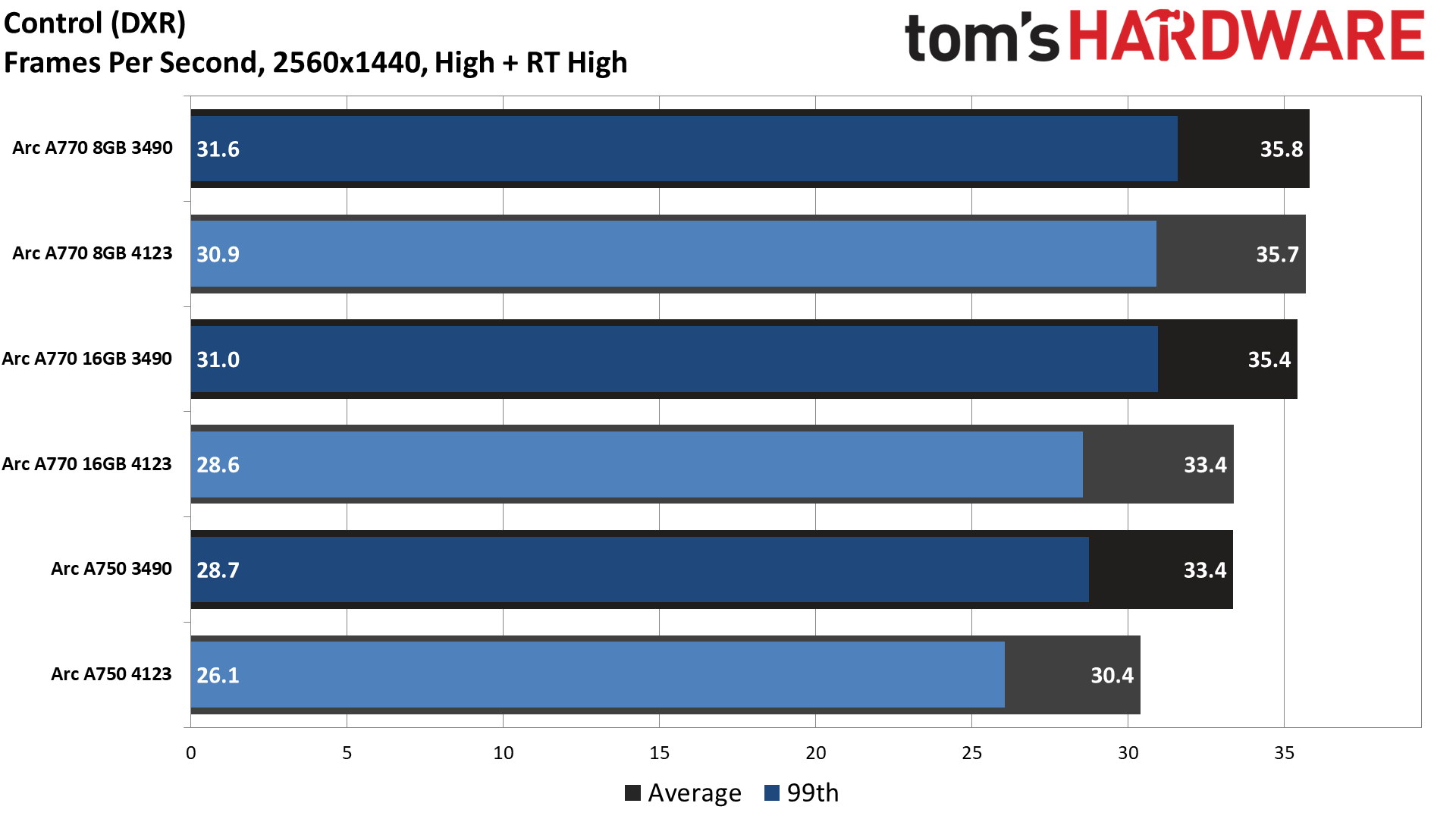

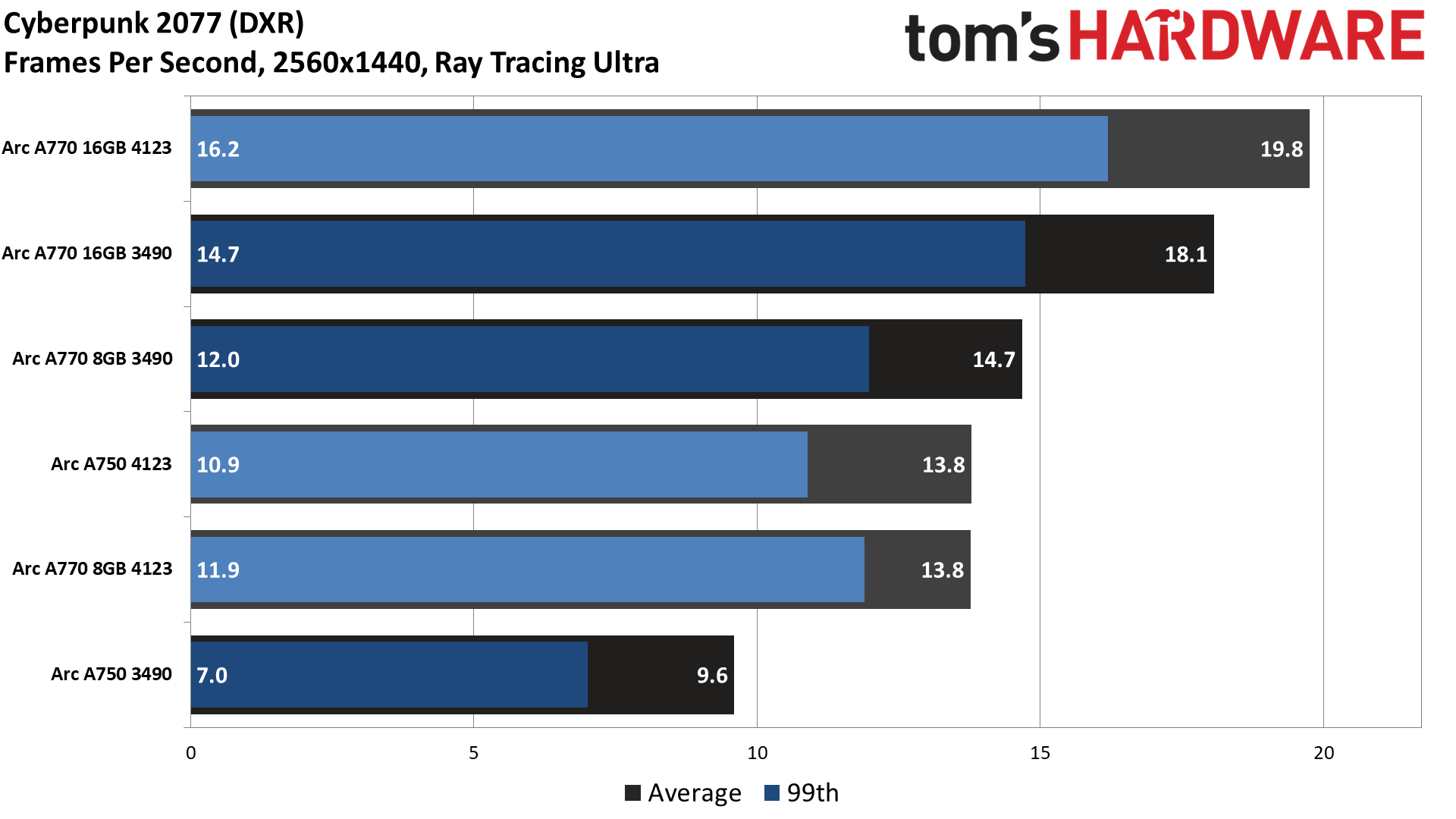

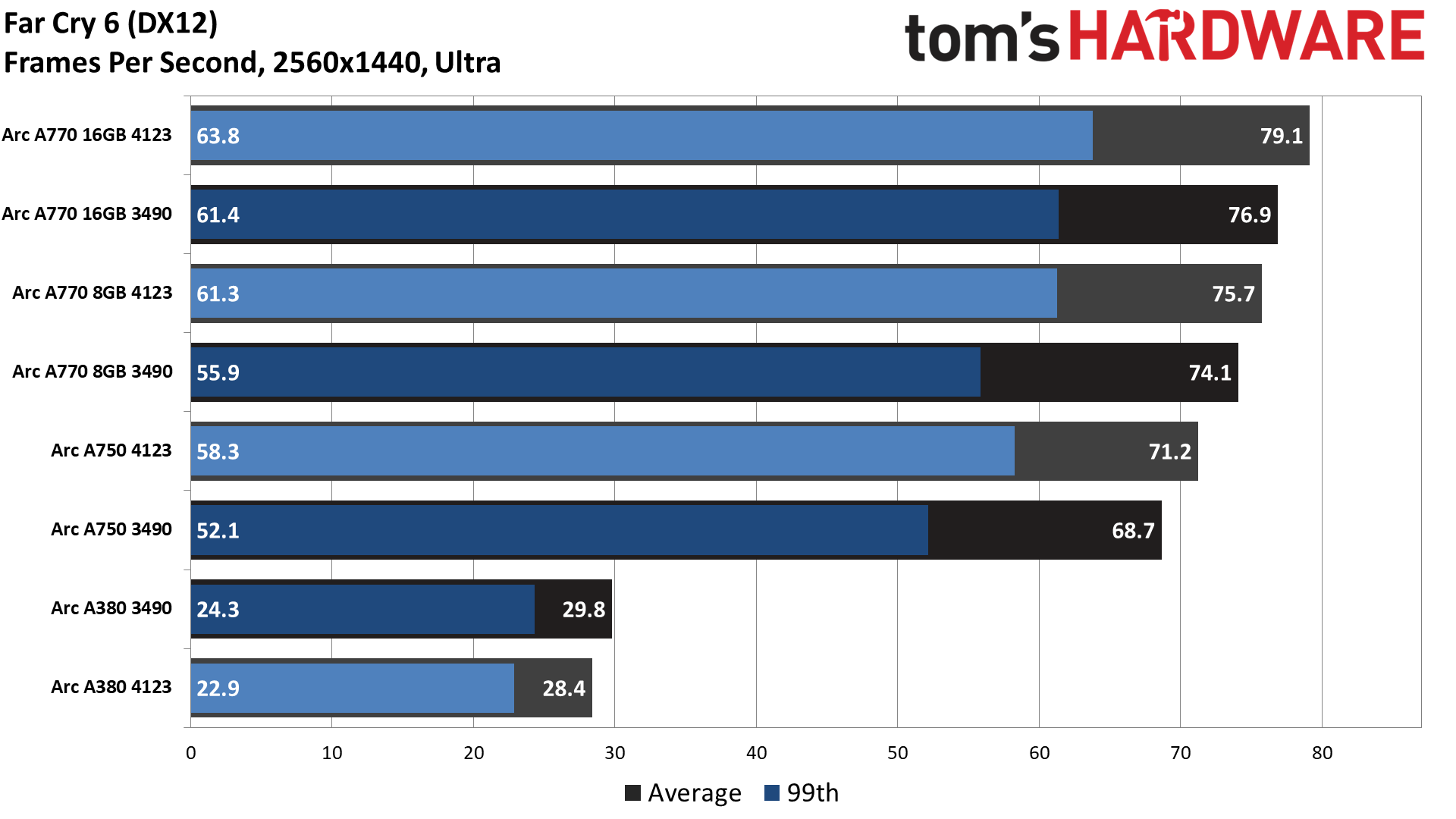

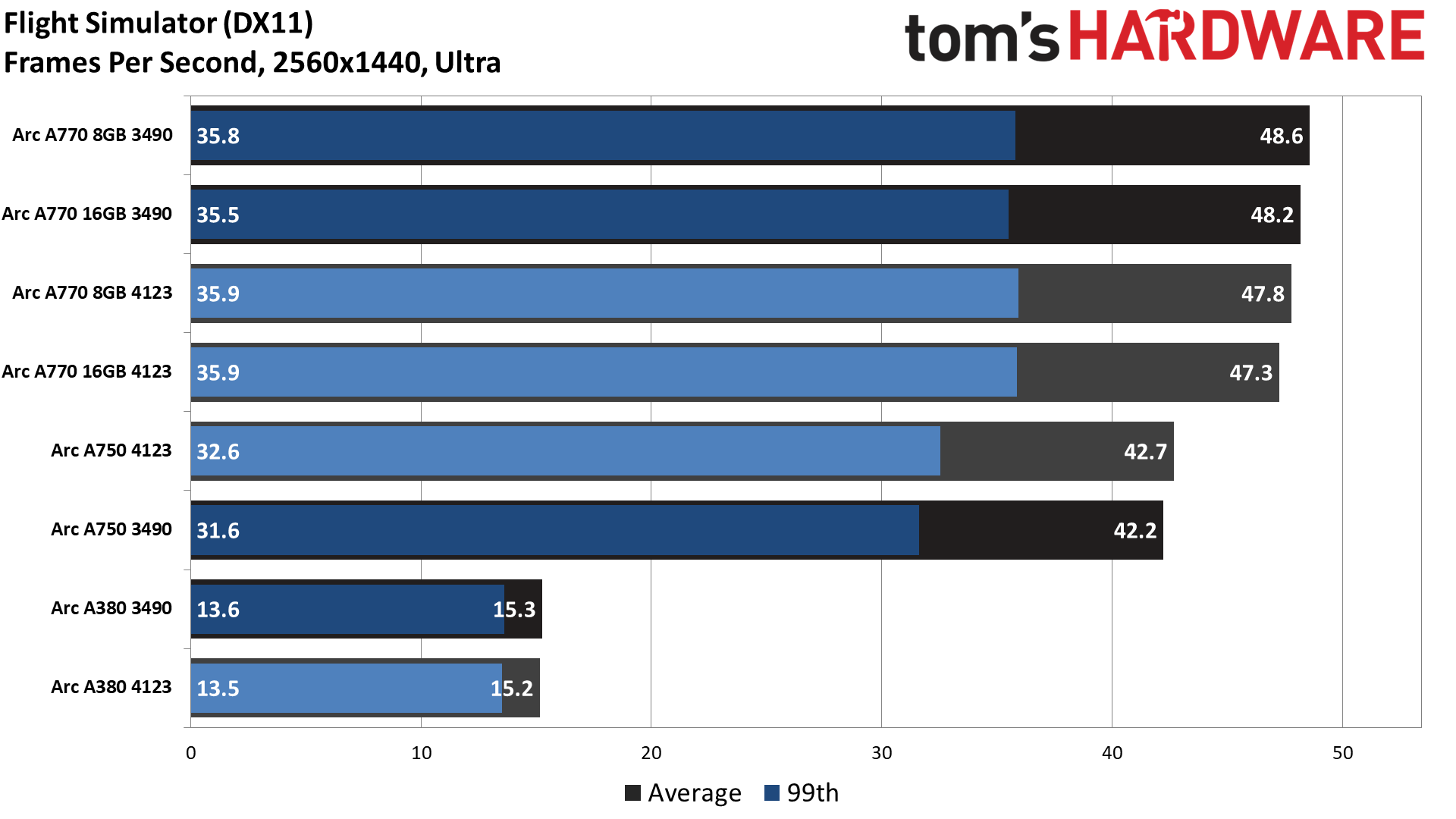

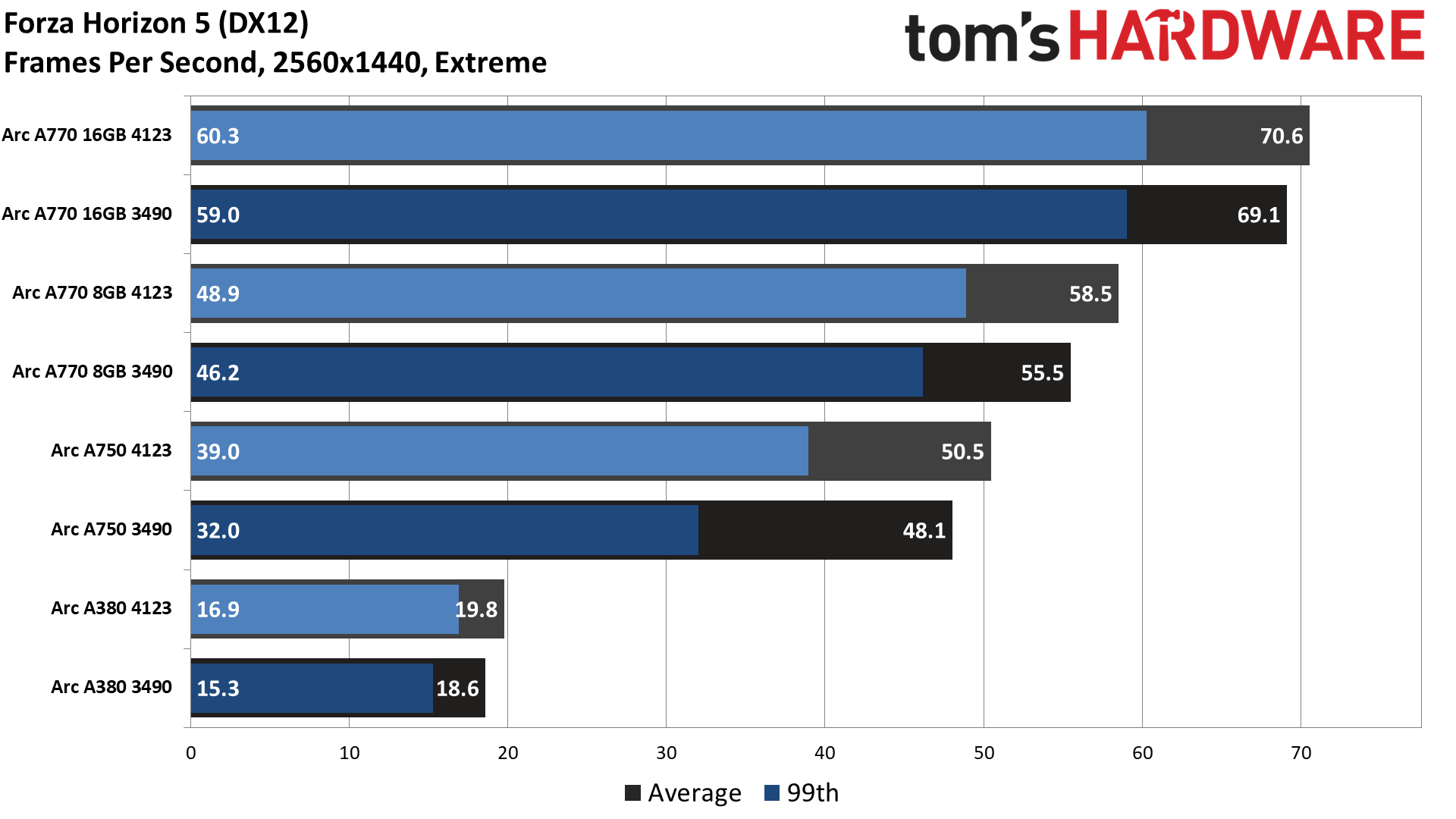

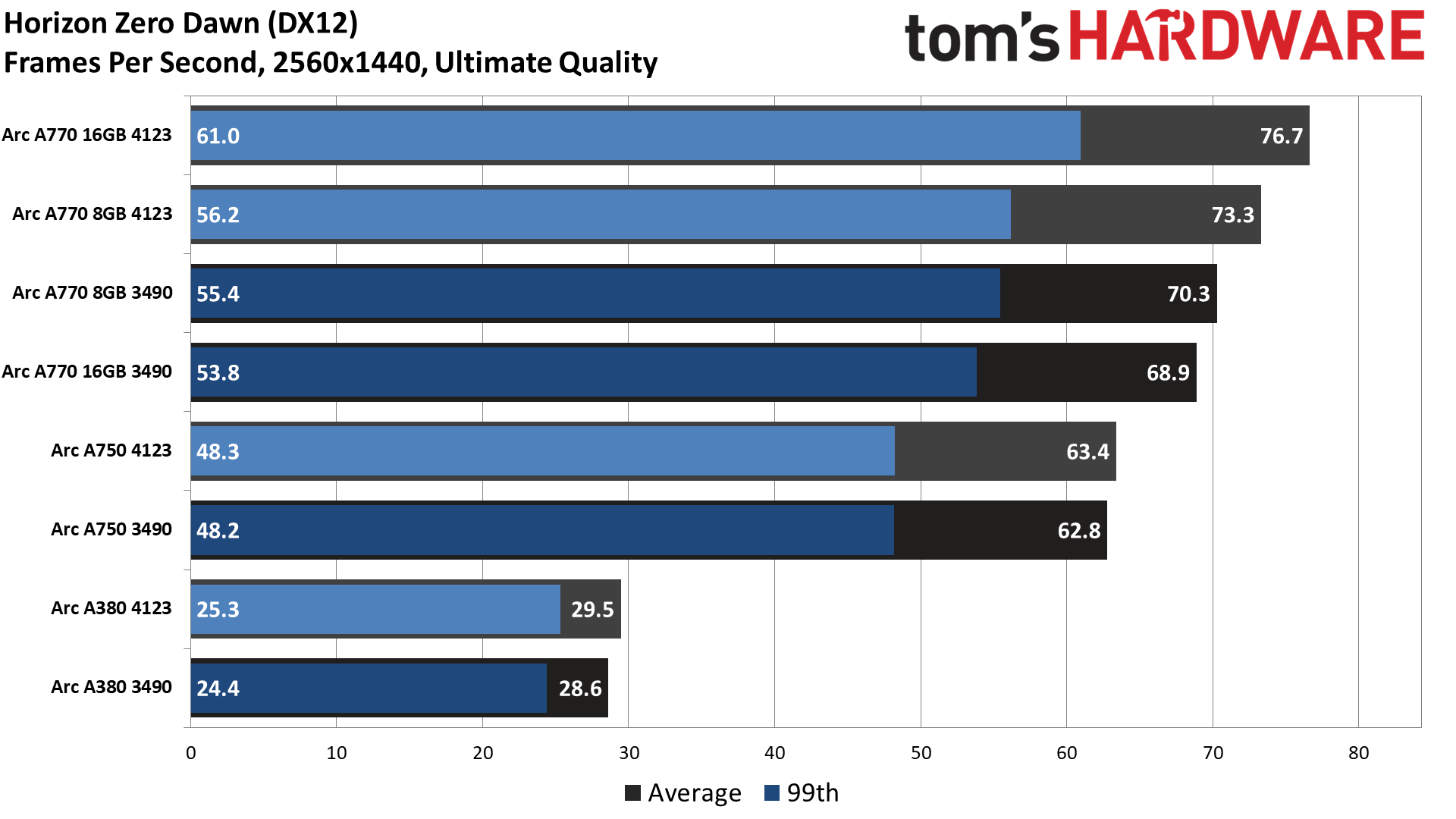

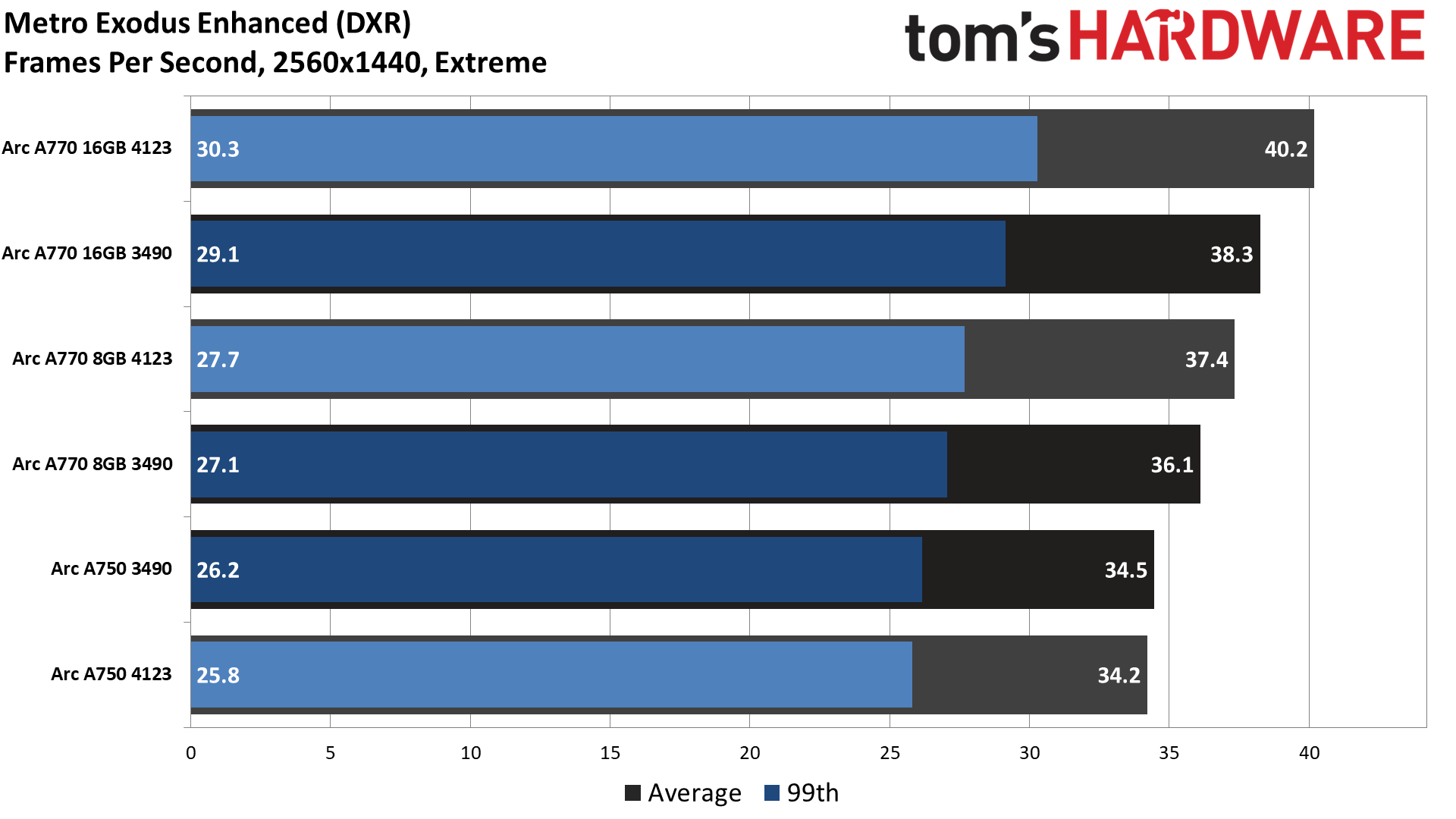

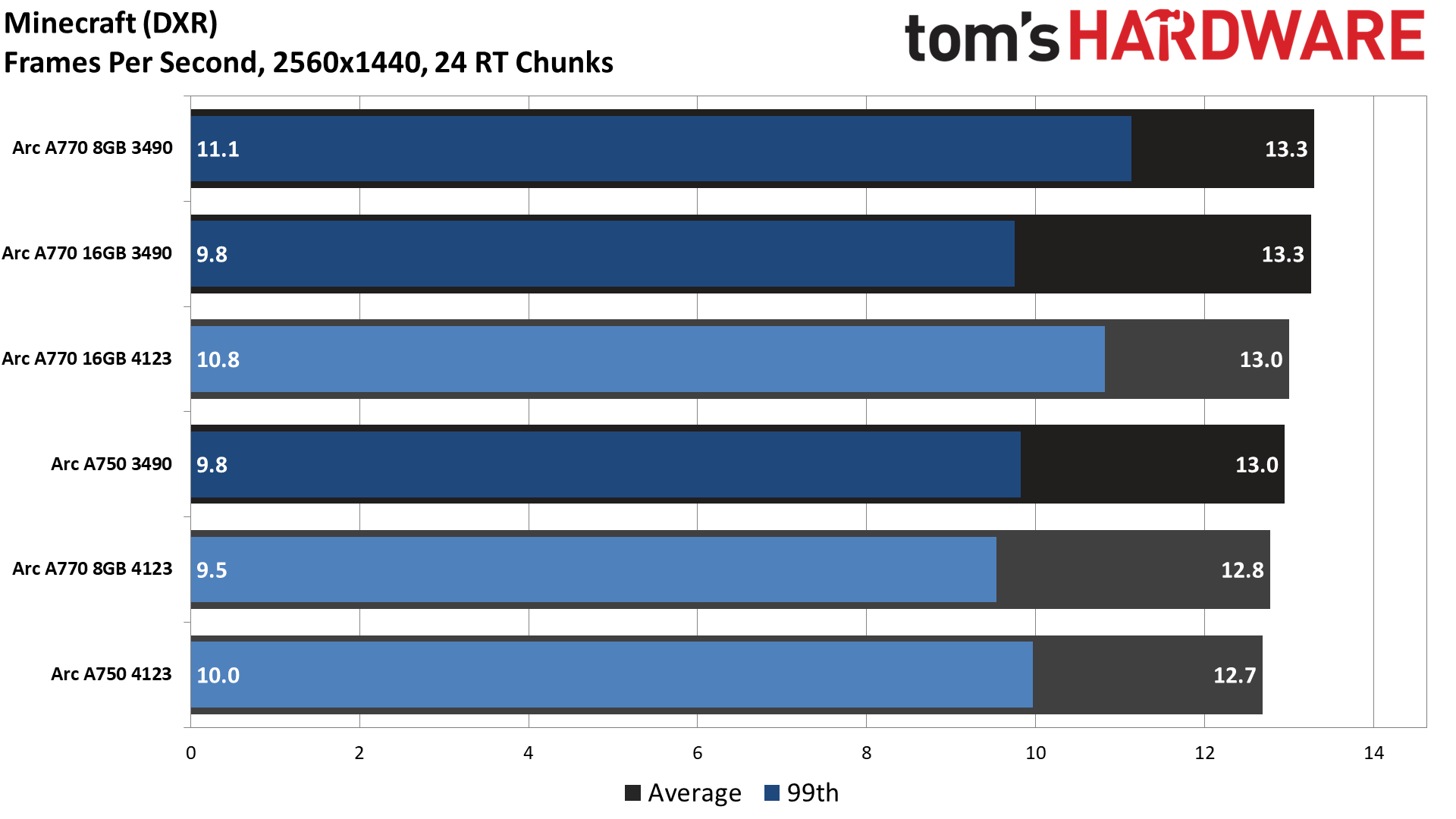

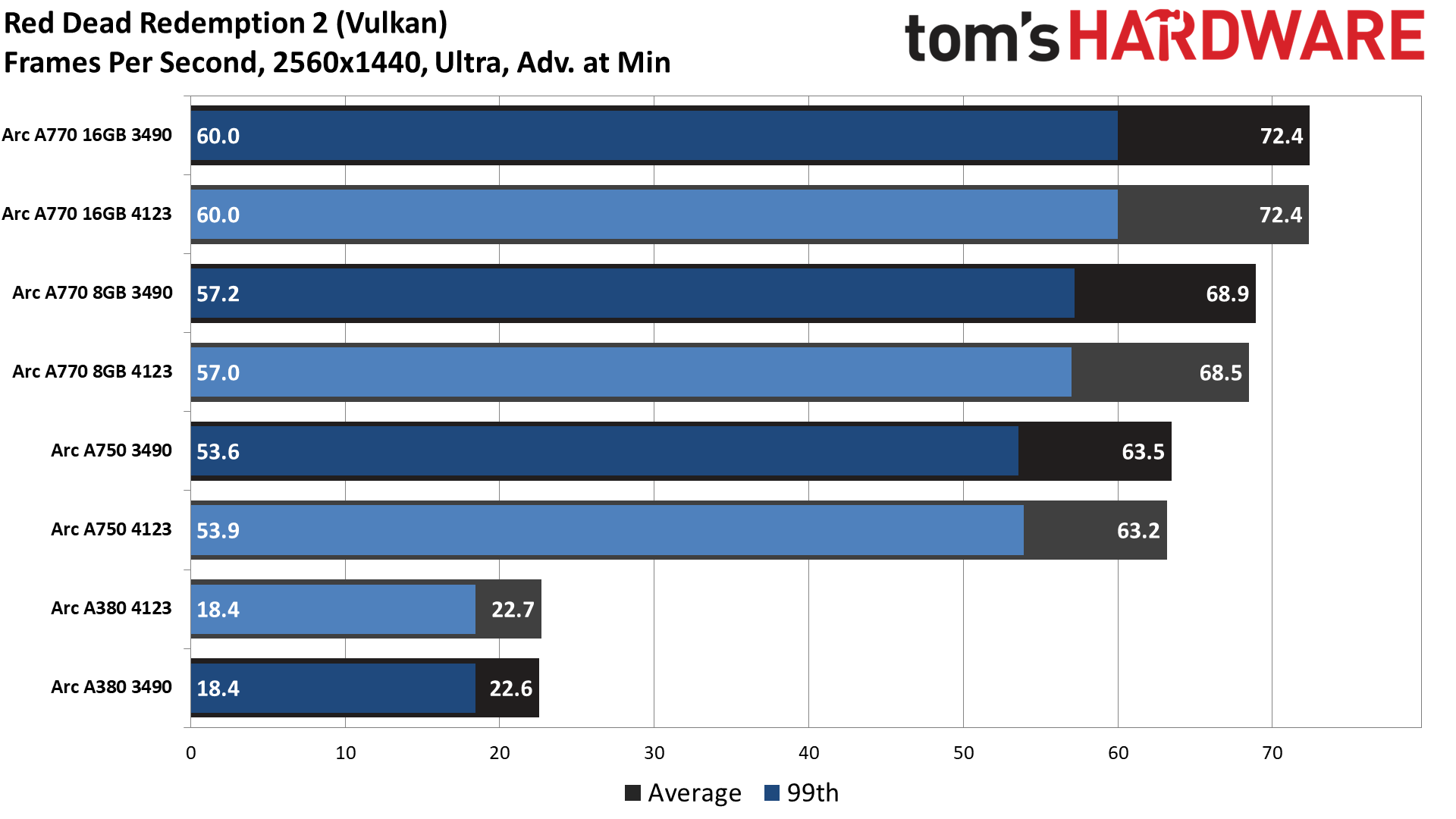

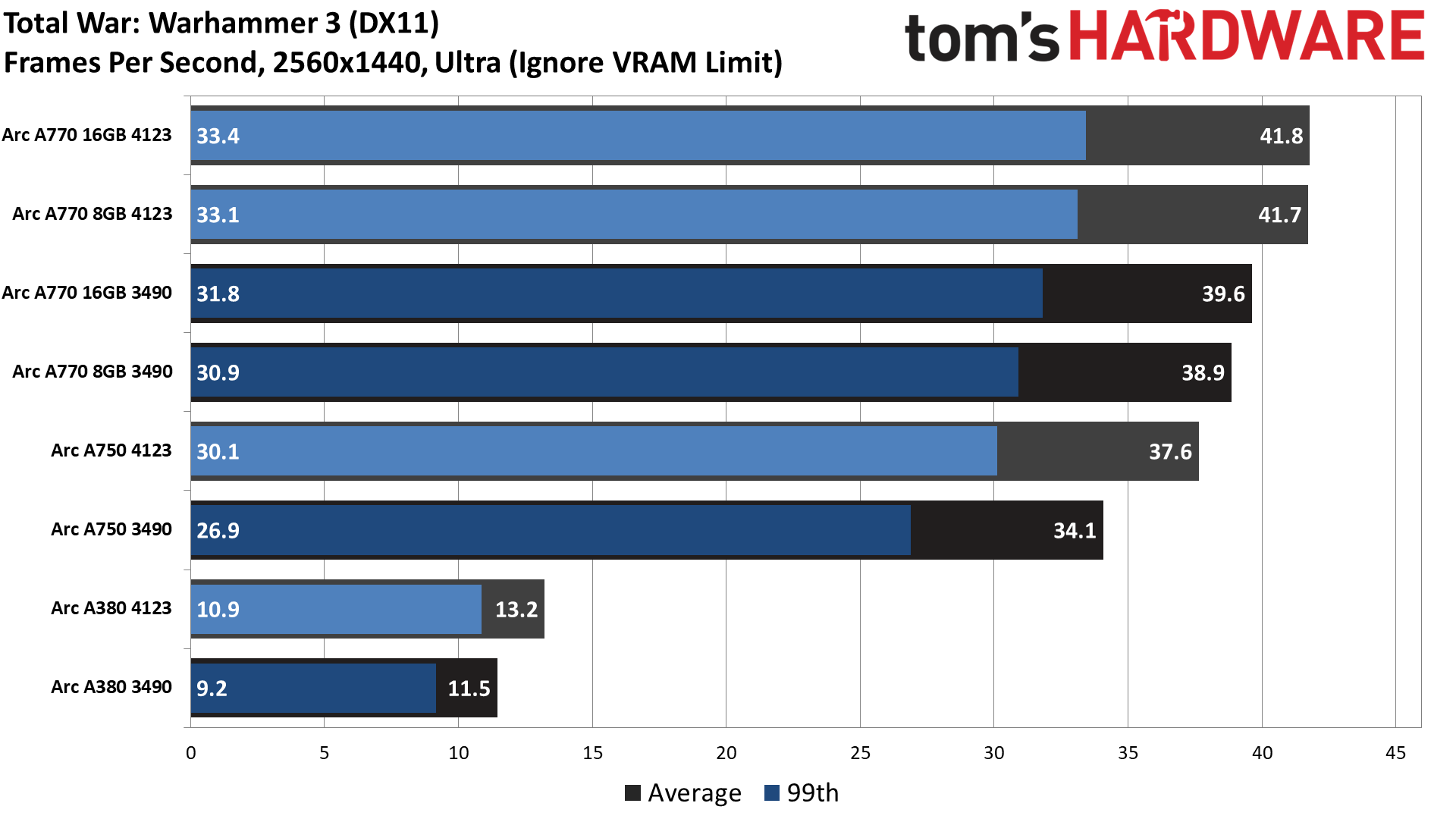

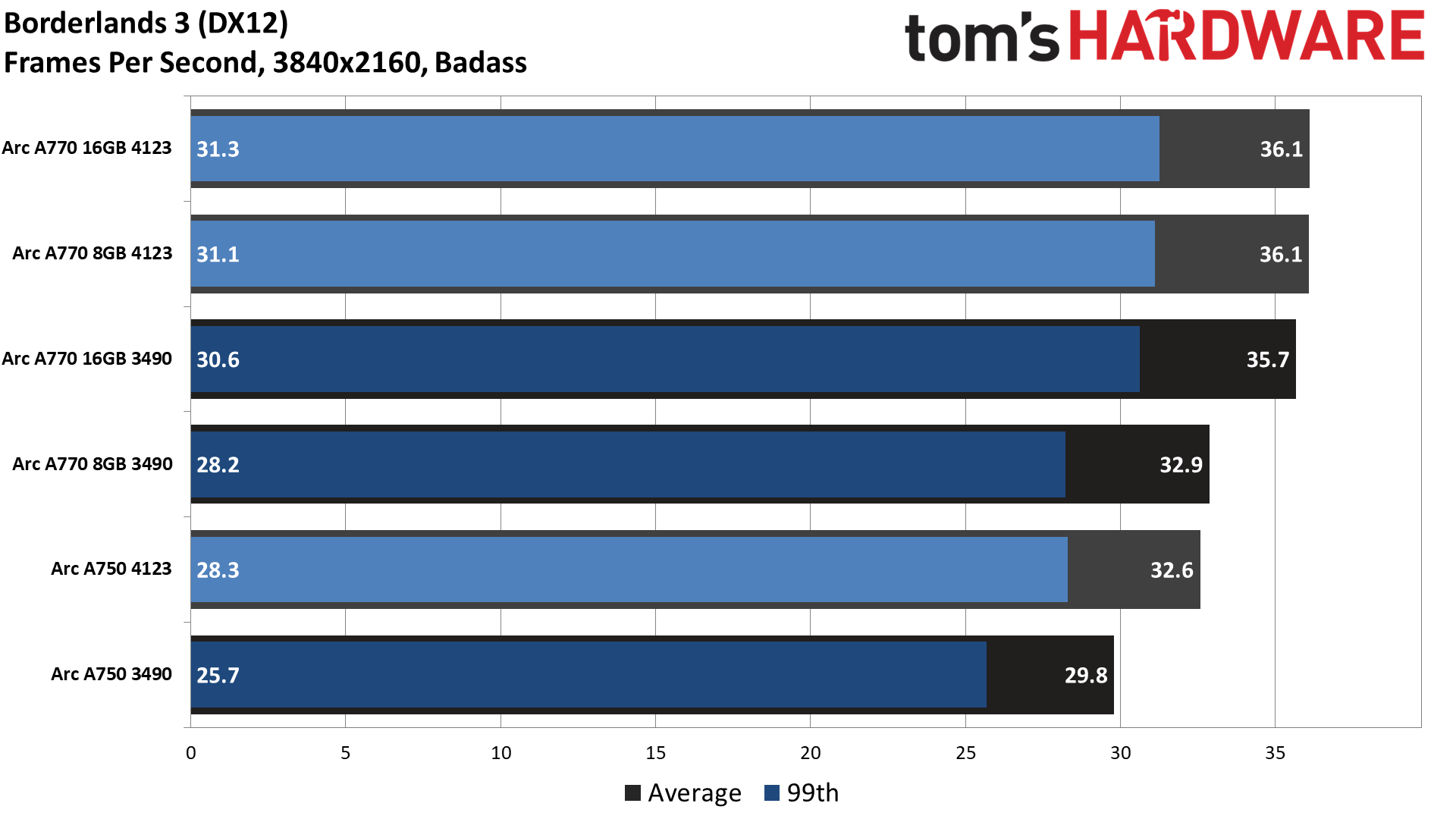

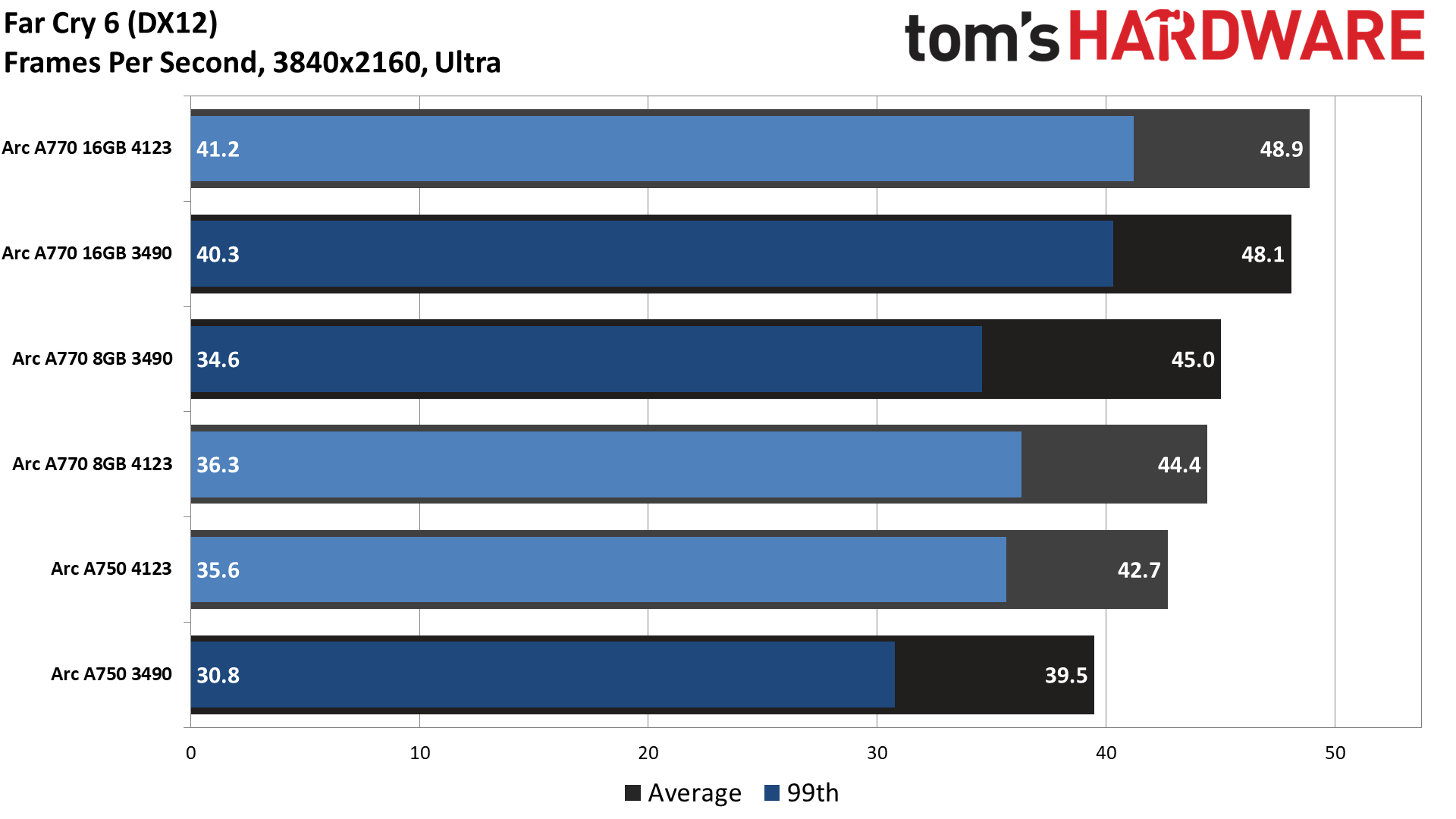

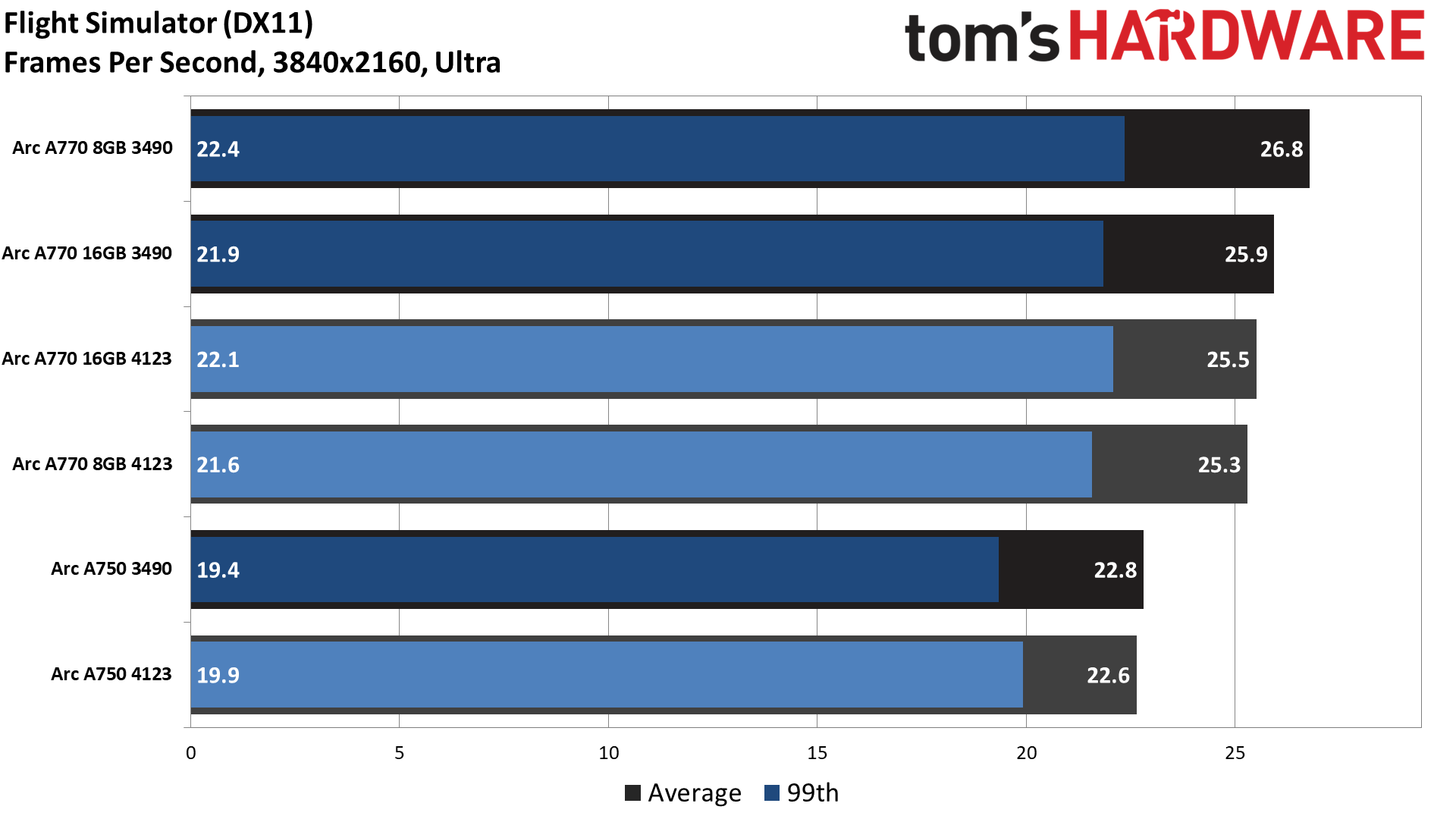

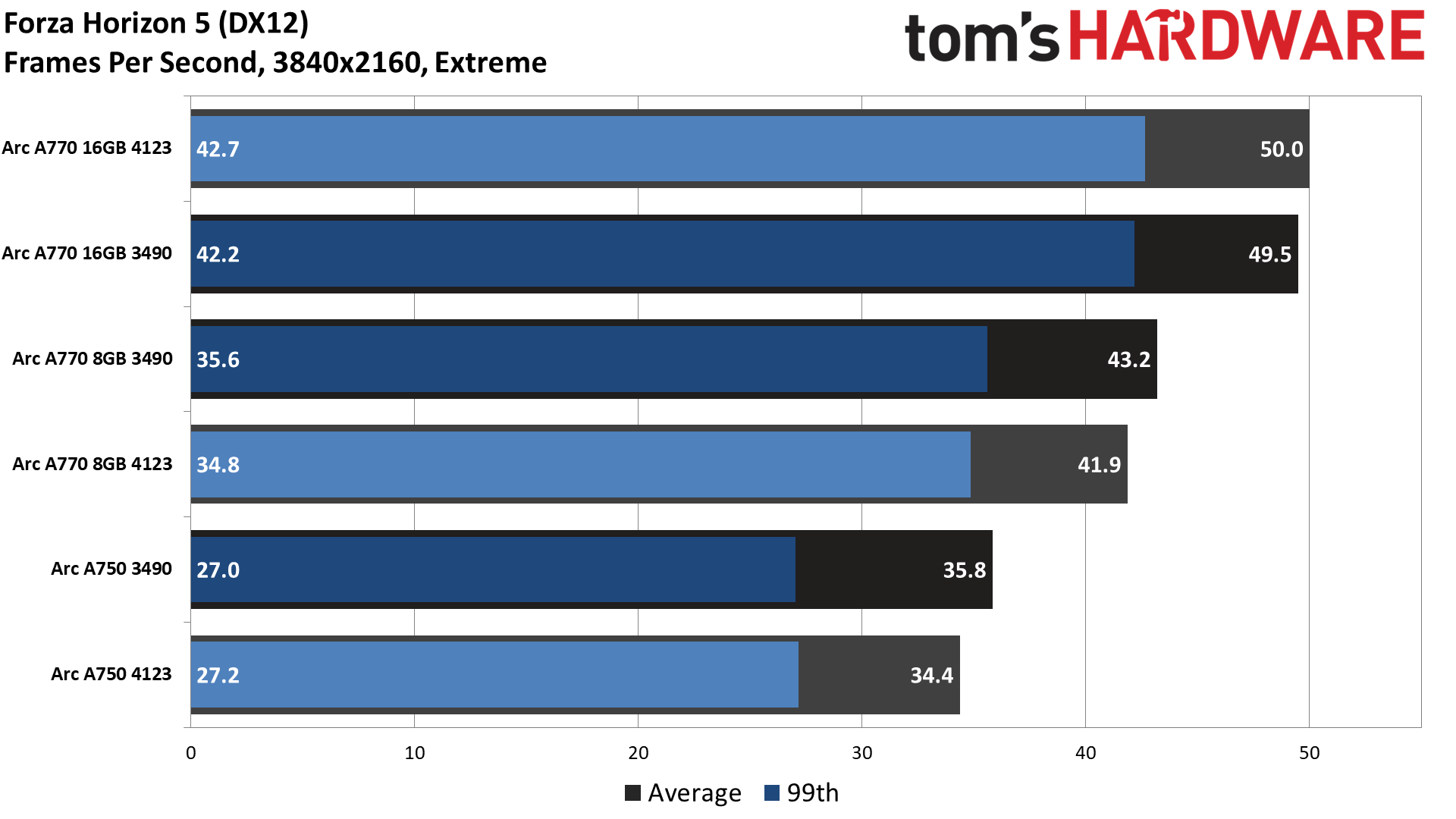

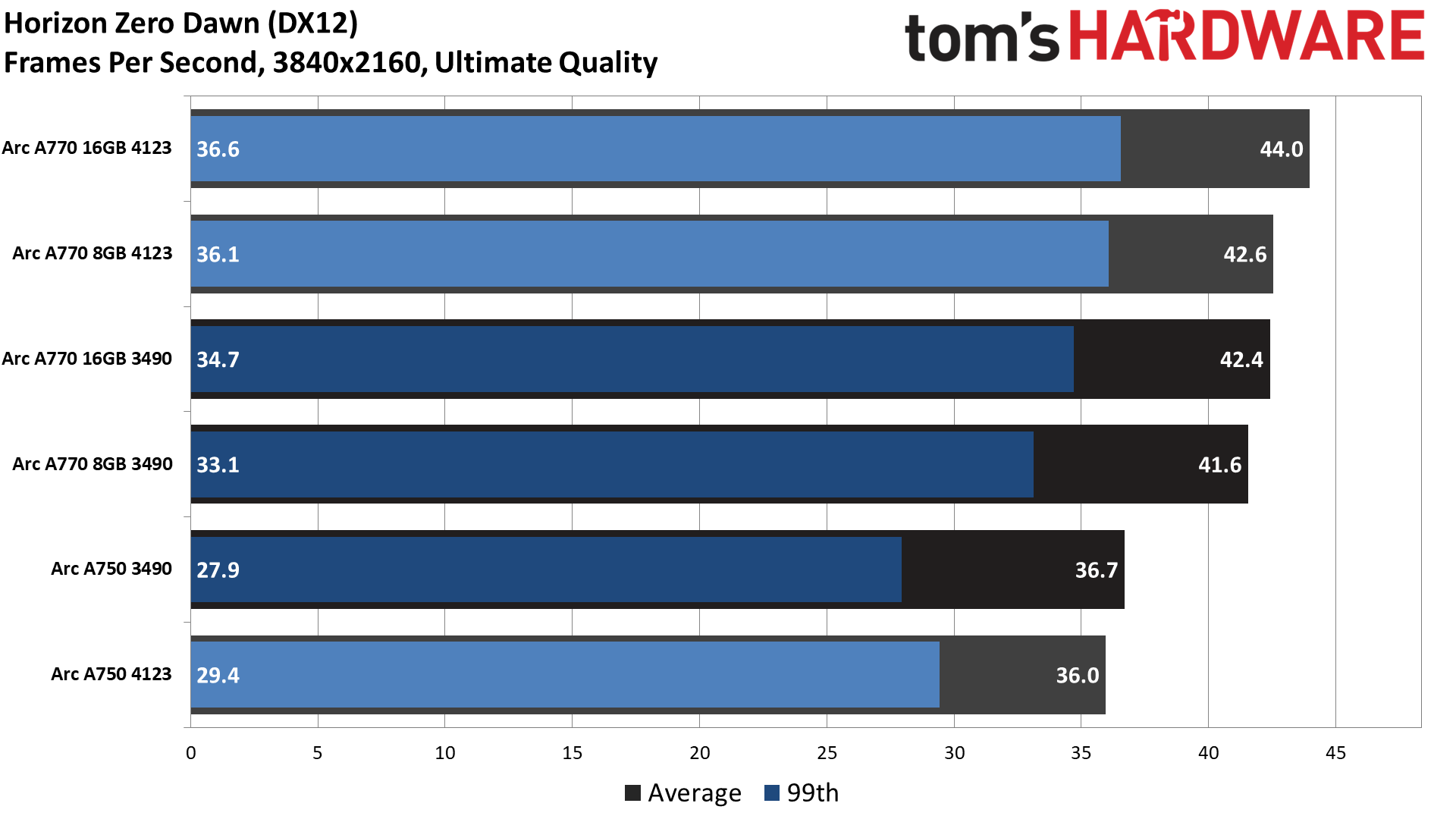

Arc Performance Update: Test Setup

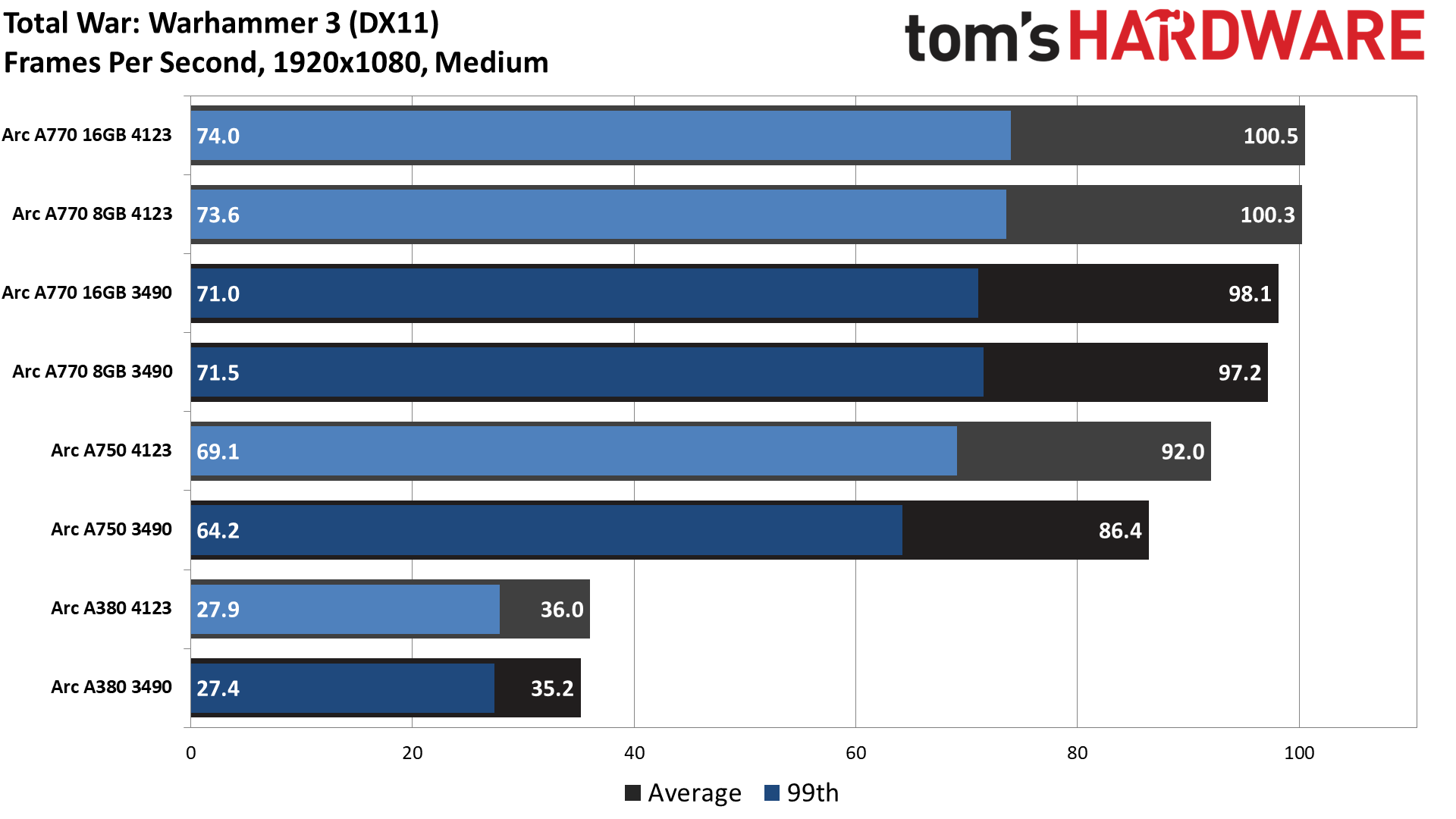

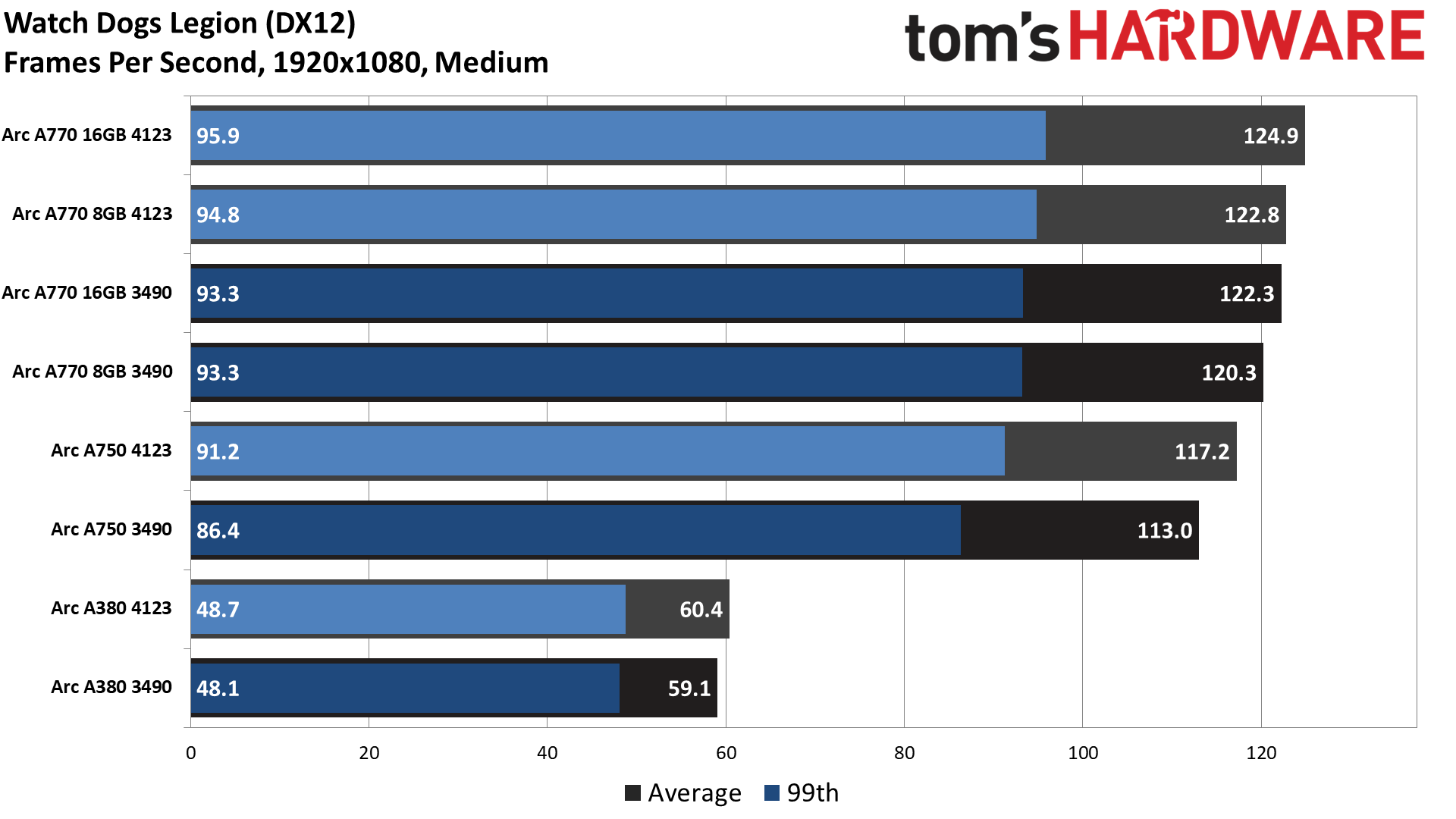

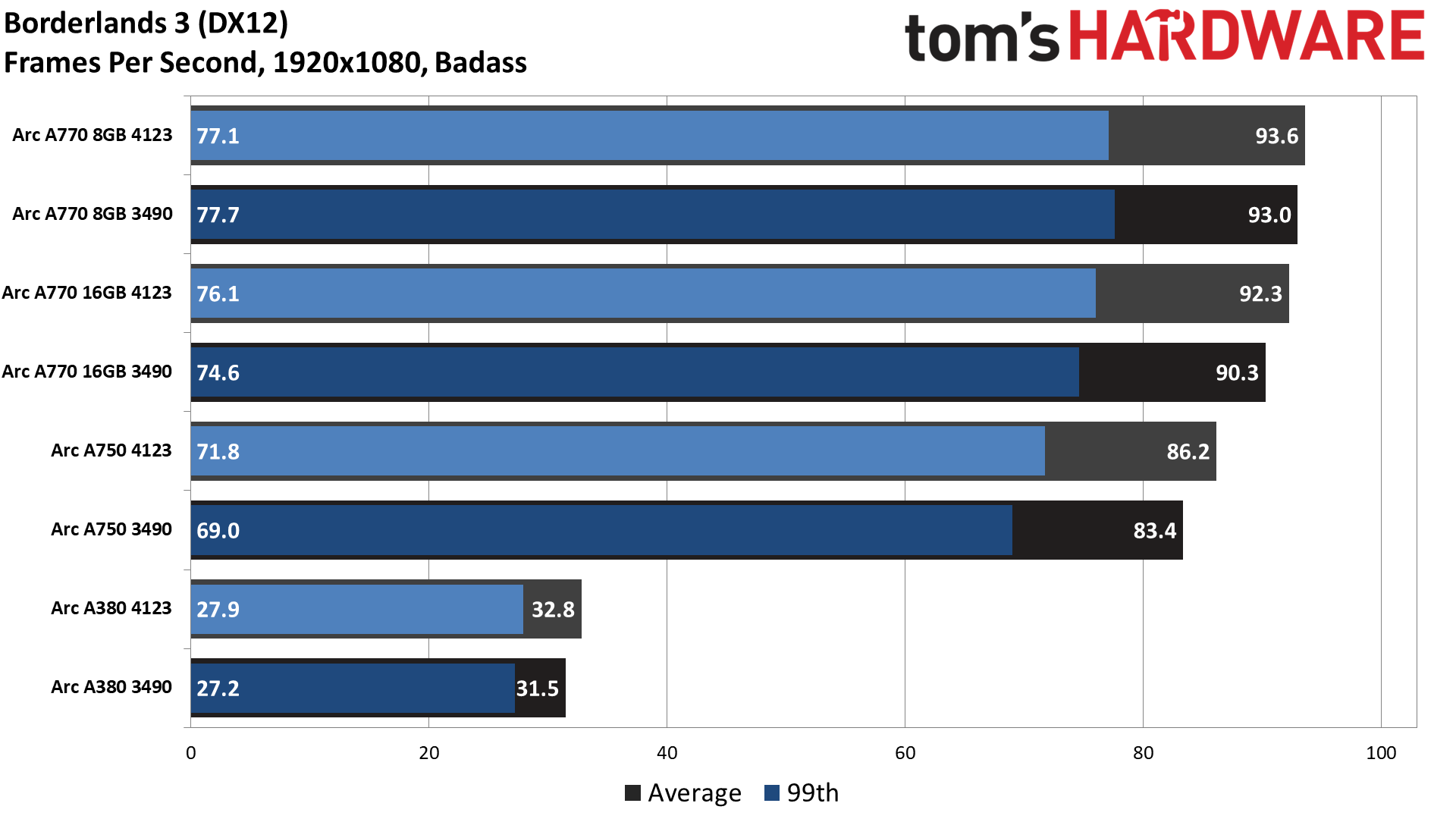

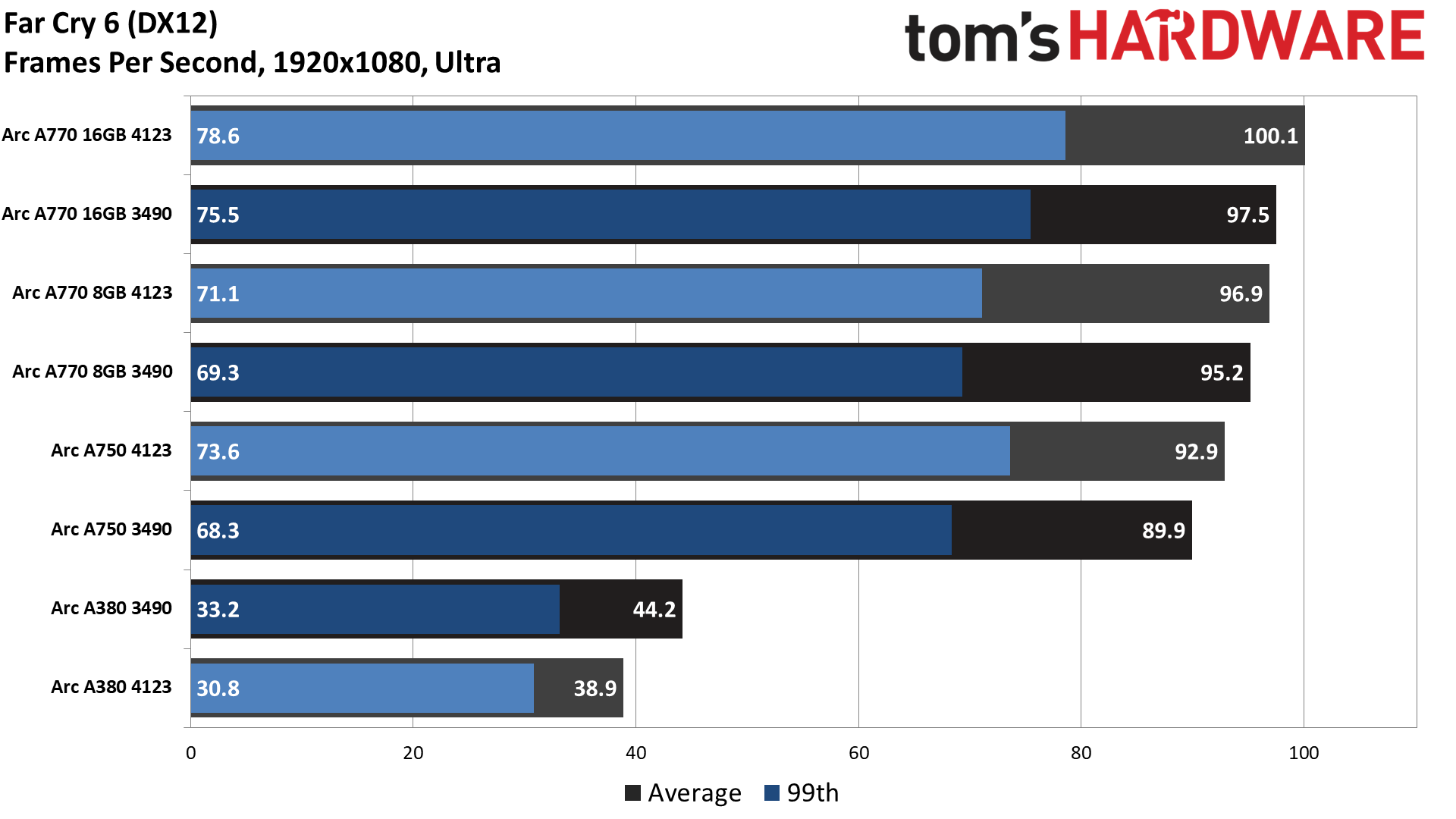

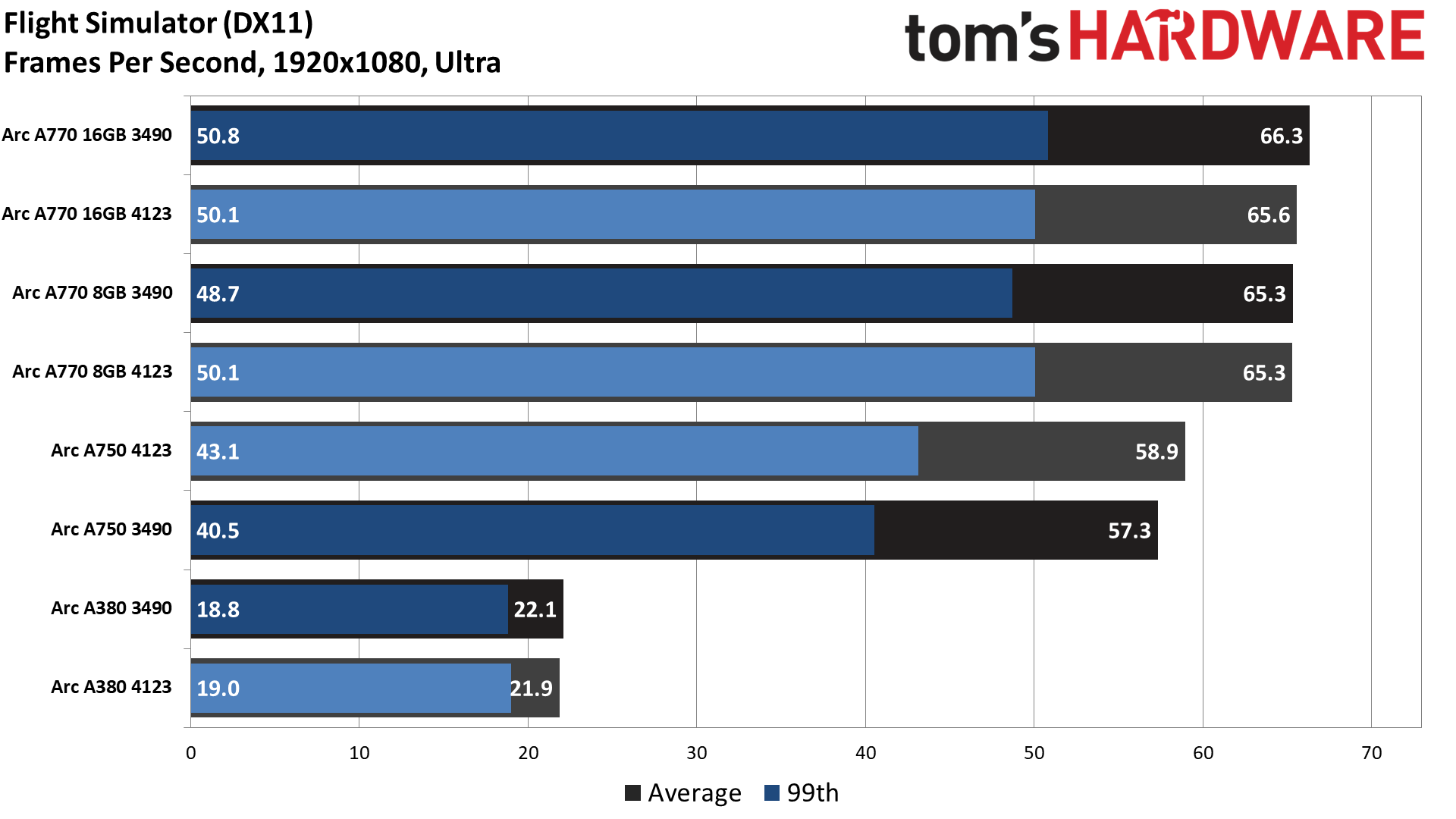

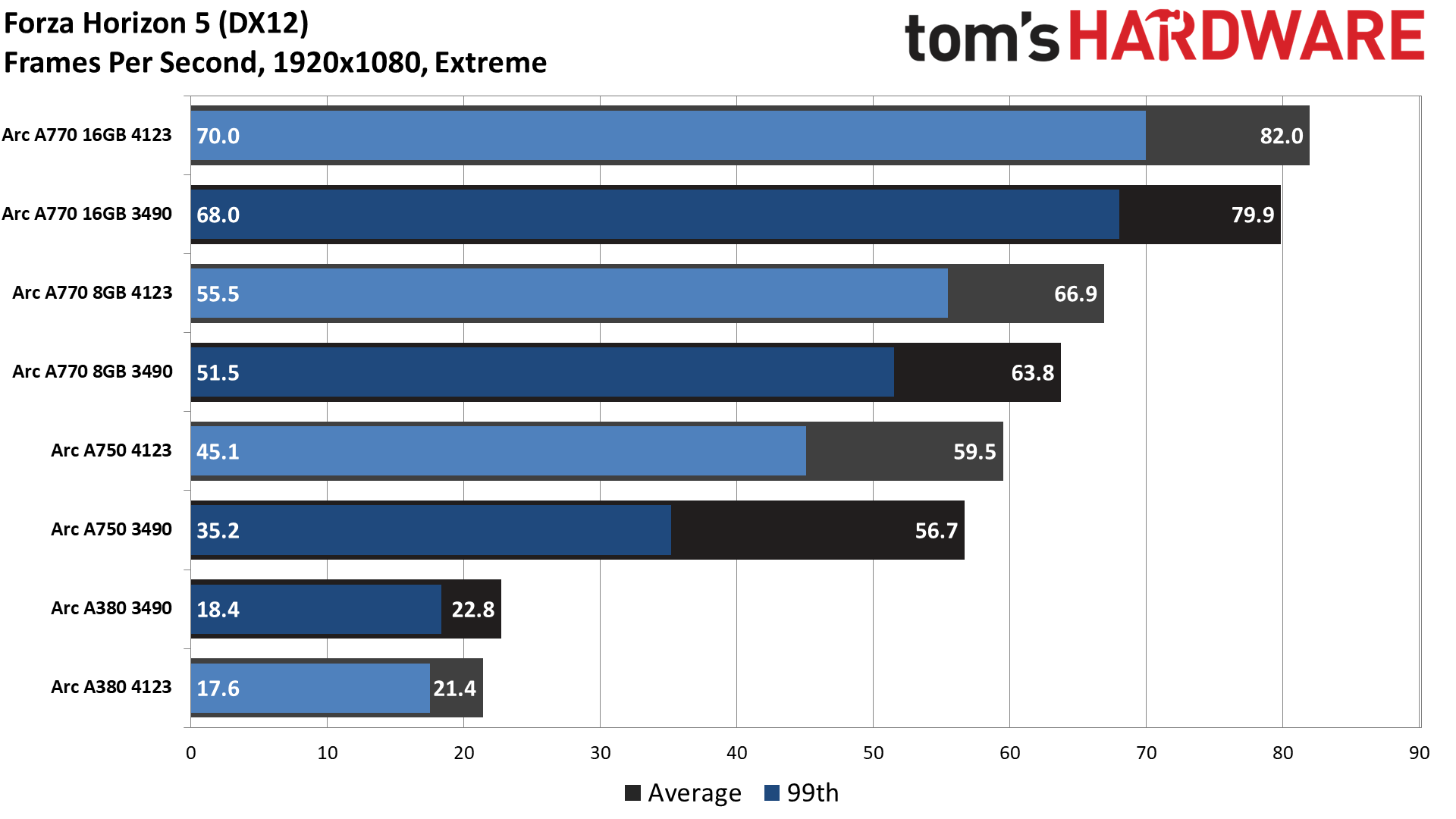

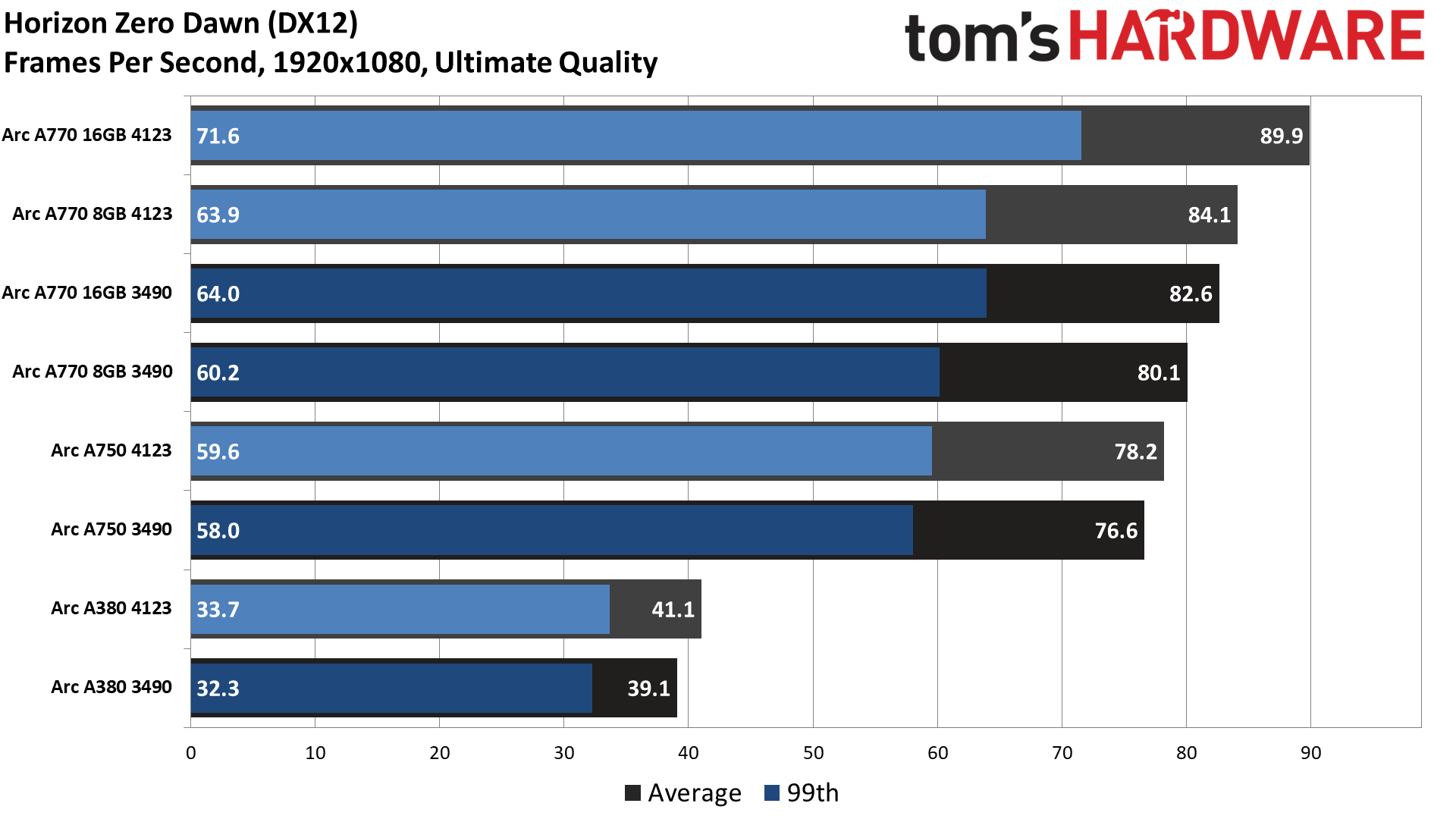

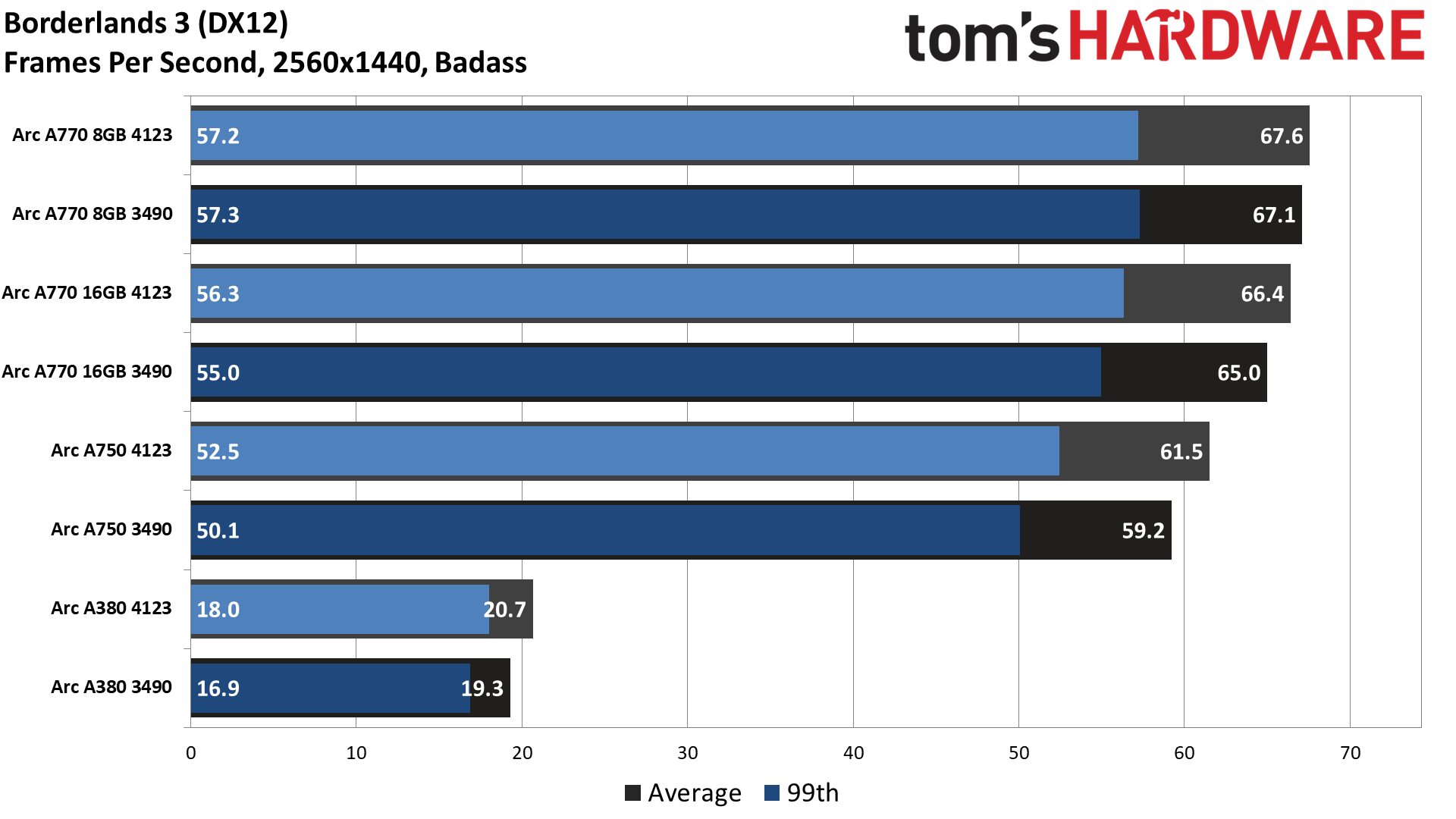

Given all the changes, we pulled out a collection of fifteen different games, including two DX9-based games, to check how the various cards perform. The fifteen games and their respective APIs consist of Borderlands 3 (DX12), Bright Memory Infinite Benchmark (DXR, or DirectX Raytracing), Control Ultimate Edition (DXR), Counter Strike: Global Offensive (DX9), Cyberpunk 2077 (DXR), Far Cry 6 (DX12), Flight Simulator (DX11), Forza Horizon 5 (DX12), Horizon Zero Dawn (DX12), Mass Effect 2 (DX9), Metro Exodus Enhanced (DXR), Minecraft (DXR), Red Dead Redemption 2 (Vulkan), Total War: Warhammer 3 (DX11), and Watch Dogs Legion (DX12).

All of the games were tested at "medium" and "ultra" settings, except for Mass Effect 2: it only has three graphics options, and it's old enough that we simply enabled all three for the "ultra" testing. The three A7-class cards were tested at 1080p, 1440p, and 4K, except in ray tracing games, where we dropped 4K testing (for what will become obvious reasons in a moment). For the A380, we dropped 4K testing completely and also dropped 1440p testing for ray tracing games.

Each game and setting combination was tested at least three times, dropping the high and low results. Where there was more variance (looking at you, CSGO), the tests were done five times and we took the median result. This is an important point, because sometimes the first run was very poor (again, CSGO, particularly on the 3490 drivers).
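The run-selection rule described above can be sketched in a few lines of Python. This is only an illustration, not the actual tooling used for the article: the function name `summarize_runs` and the sample numbers are hypothetical. Note that dropping the high and low result of three runs is the same as taking the median, so a single median call covers both the three-run and five-run cases.

```python
from statistics import median


def summarize_runs(fps_runs):
    """Collapse repeated benchmark runs into one reported figure.

    With three runs, the median equals "drop the high and low result".
    With five runs, the median discards the two highest and two lowest,
    which keeps a single poor first run from skewing the result.
    """
    if len(fps_runs) < 3:
        raise ValueError("need at least three runs per game and setting")
    return median(fps_runs)


# Hypothetical CSGO-style data: the first run stutters badly.
csgo_runs = [212.4, 341.0, 352.7, 348.9, 355.1]
print(summarize_runs(csgo_runs))  # 348.9
```

The median hides that poor first run entirely, which is the intent for a steady-state comparison, but it also means first-run stutter will not show up in the reported number.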

All these tests were done using the same Core i9-12900K PC that we used for the previously published Arc GPU launch reviews, so that we can look at how performance has changed. How would results from the old drivers, retested now, compare with what we measured at launch? That's where things get a bit messy.

There have been multiple major updates to games in our test suite over the past few months, and we've been working to shift all of our testing to a new system with a Core i9-13900K, plus adjusting our test suite and settings as needed. Our older test system is now effectively (slightly) out of date, and while we've retested some cards with the updated games, it was best to just hit the reset button.

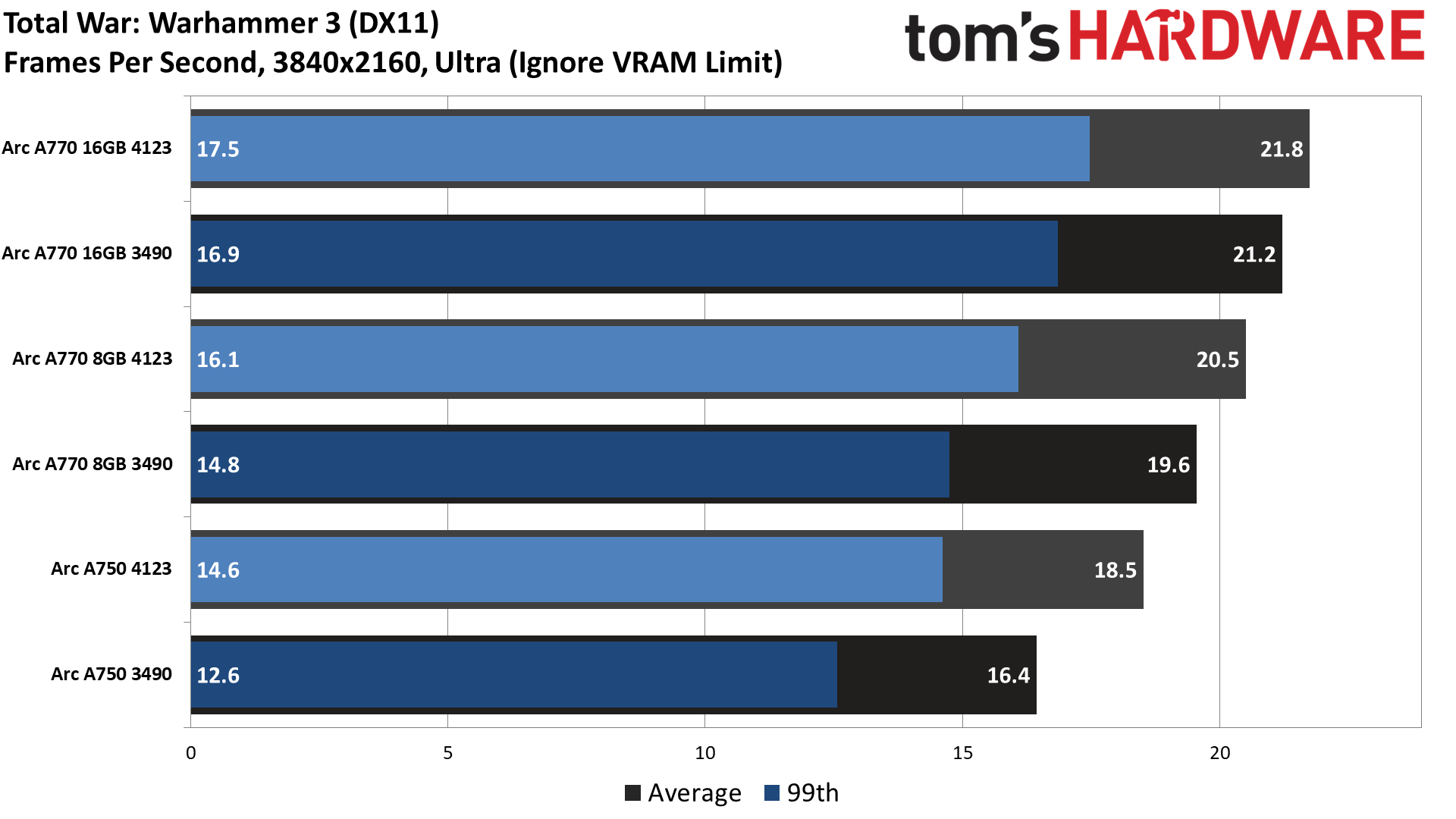

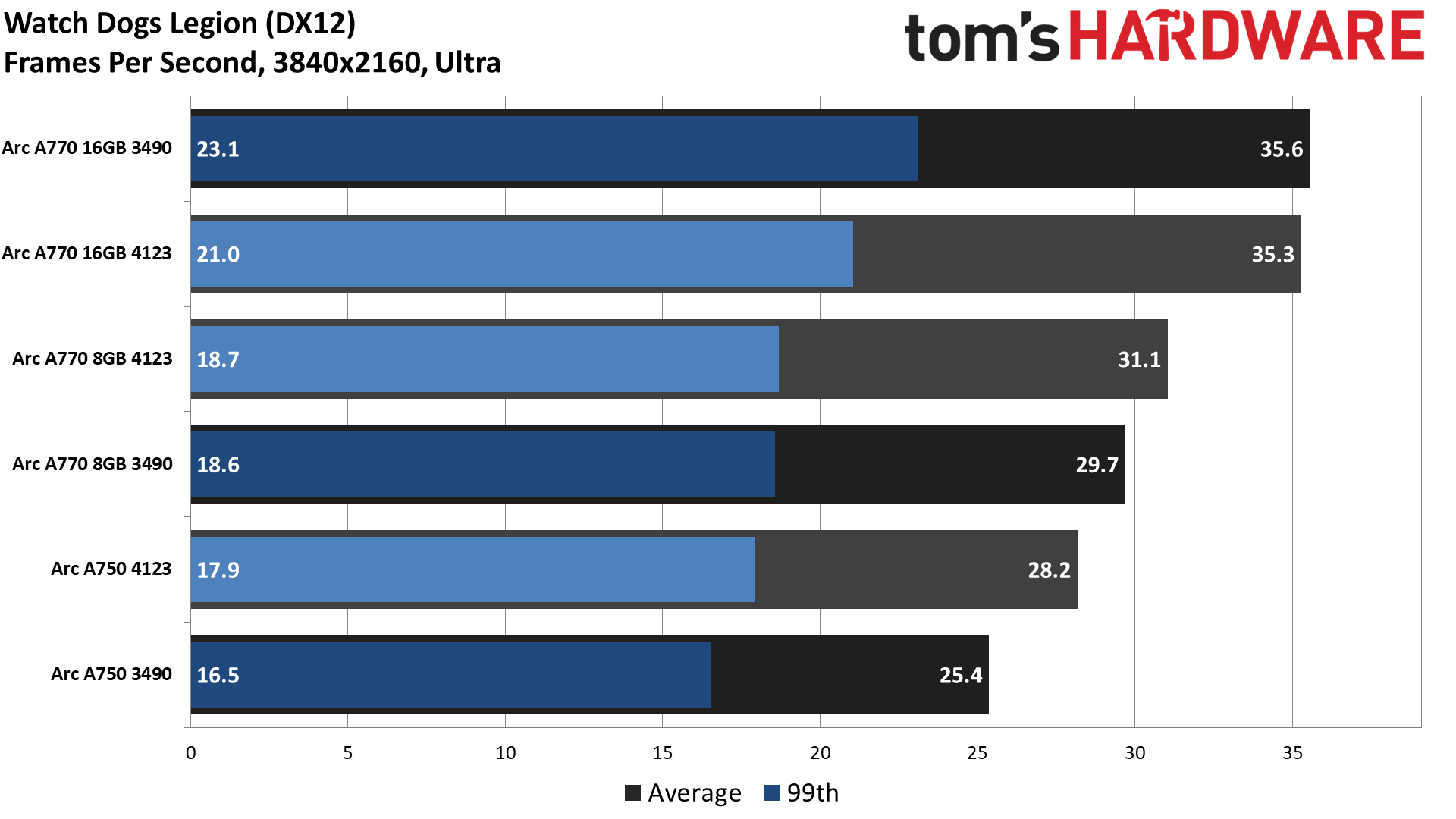

What we know for certain is that Cyberpunk 2077, Flight Simulator, Forza Horizon 5, and Total War: Warhammer 3 all had updates that changed performance and/or settings quite a bit — on some GPUs more than others. Cyberpunk now has DLSS 3 and FSR 2.1 support, Flight Simulator added DLSS 3 and DLAA, and Forza Horizon 5 added DLSS 2 and FSR 2.2 along with TAA. All three of those games also added support for Nvidia Reflex. As for Total War: Warhammer 3, a couple of months back there was a major update that improved performance by roughly 20% on virtually all GPUs.

And those are just the changes that were readily visible. Almost all of the other games in our test suite have also received various updates, and keeping track of what has changed and what remains consistent is difficult. Regardless, we'll have the original launch performance data in our charts, with an asterisk indicating it's from a different version of the game and may not reflect current performance. Just for good measure, we also applied all the latest Windows 11 updates and flashed our motherboard BIOS.

And somewhere along the way, we ran into a problem. Windows 11 defaults to Virtualization Based Security (VBS) being on, and we previously turned it off. Some time in the past few months, it got turned back on (likely when I was poking around at Stable Diffusion and tried using WSL2). You can still turn this off, but we didn't realize it was on until after testing was completed. So, if you saw this article earlier and we talked about how performance dropped about 7% on average since our launch testing last year, that's the explanation. We retested the A750 with VBS disabled and found that, in general, performance matched or exceeded our October 2022 results and was around 7% faster than our current VBS-enabled testing.


Intel Arc Graphics Performance: 3490 Launch vs. Current 4123 Drivers

We have two results for each of the test cards in the following chart: 3490 are the launch drivers for the A770 and A750 — the Arc A380 was available several months earlier with 3259 drivers, and we saw 3267, 3268, and 3276 drivers released before our review was finished. But we're sticking with the 3490 drivers for all of the Arc "launch" drivers to keep things consistent. 4123 are the "current" drivers (4125 drivers are "Game On" for Company of Heroes 3, The Settlers: New Allies, Atomic Heart, and Wild Hearts; more recently, 4146 are "Game On" for Destiny 2: Lightfall and Wo Long: Fallen Dynasty). Let's start with the 1080p medium test suite.

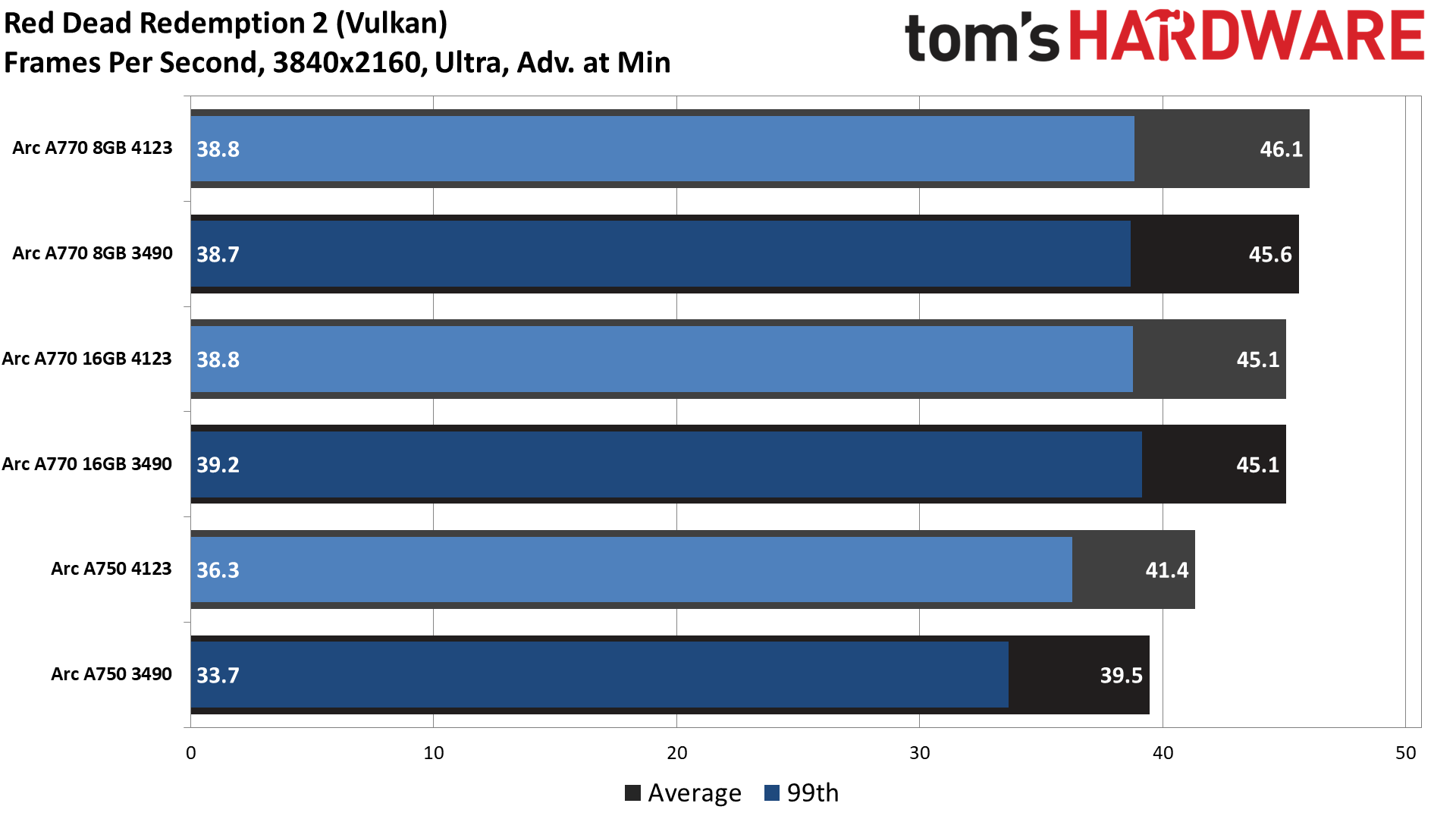

We should also discuss some of the anomalies we'll see before we get to the charts. First, Red Dead Redemption 2 using the Vulkan API consistently crashed our test PC with the A750 card — and it crashed a different test PC as well. It was mostly stable using the original 3490 launch drivers, enough so that we could complete a few test runs before the PC would restart, but every later driver we checked had stability problems. Intel was able to replicate the problem and it’s limited to the A750; a fix was made available in the latest 4146 drivers, and we've included those results here (even though they're still labeled "4123" for our charting purposes).

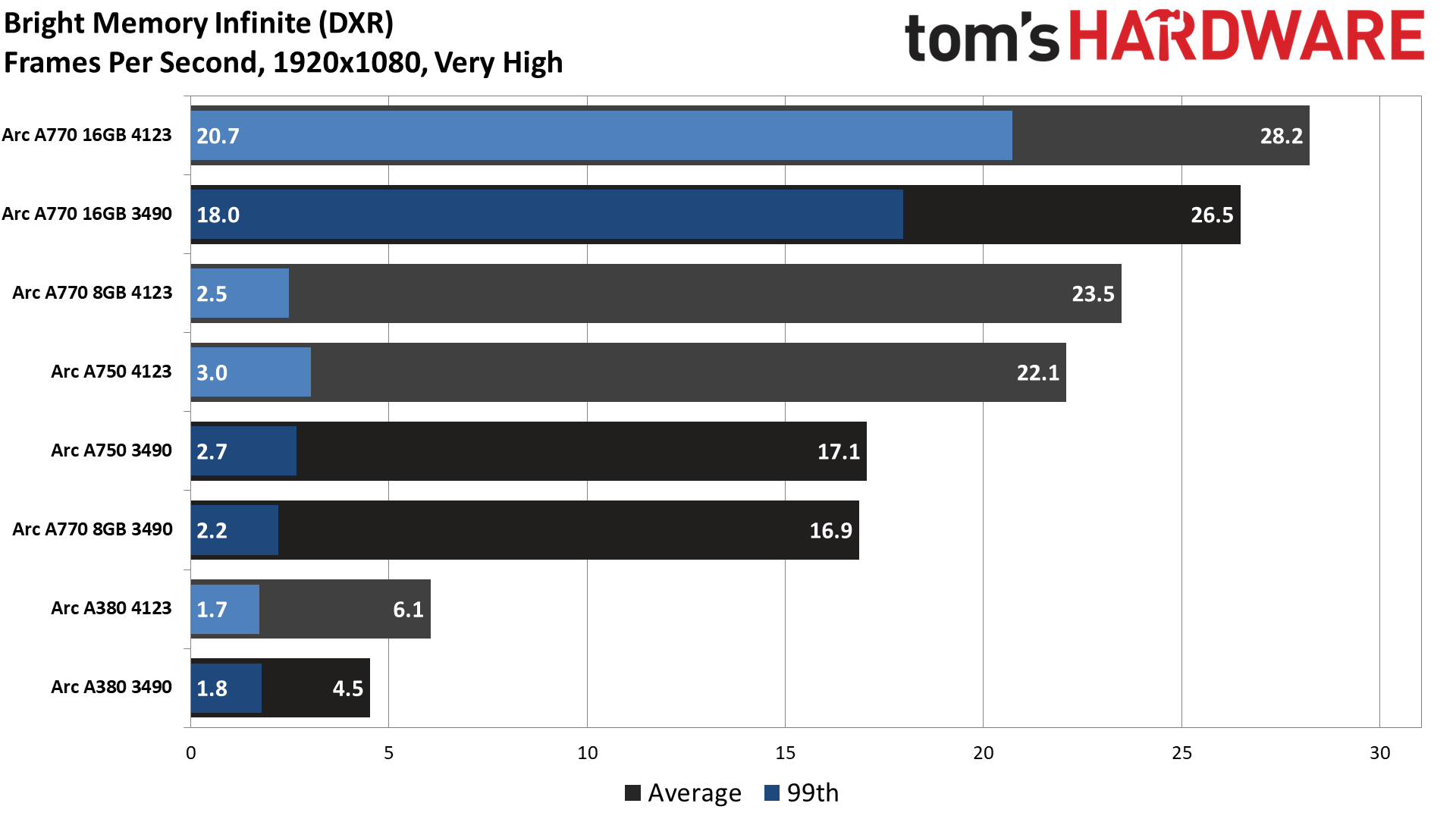

Next, Bright Memory Infinite Benchmark, a ray tracing title, has a rather severe VRAM leak problem, or at least that's how it appears. We could complete one benchmark run at 1080p with the Normal preset on the 8GB cards, but higher settings (and the A380) would go from being somewhat smooth to a stuttering mess partway through the benchmark, with minimum framerates of 1 fps. This is another bug that Intel has confirmed and is working to fix. It's not clear if this affects the game as well, or only the standalone benchmark (which is far more demanding and better looking than the actual game, if you were wondering).

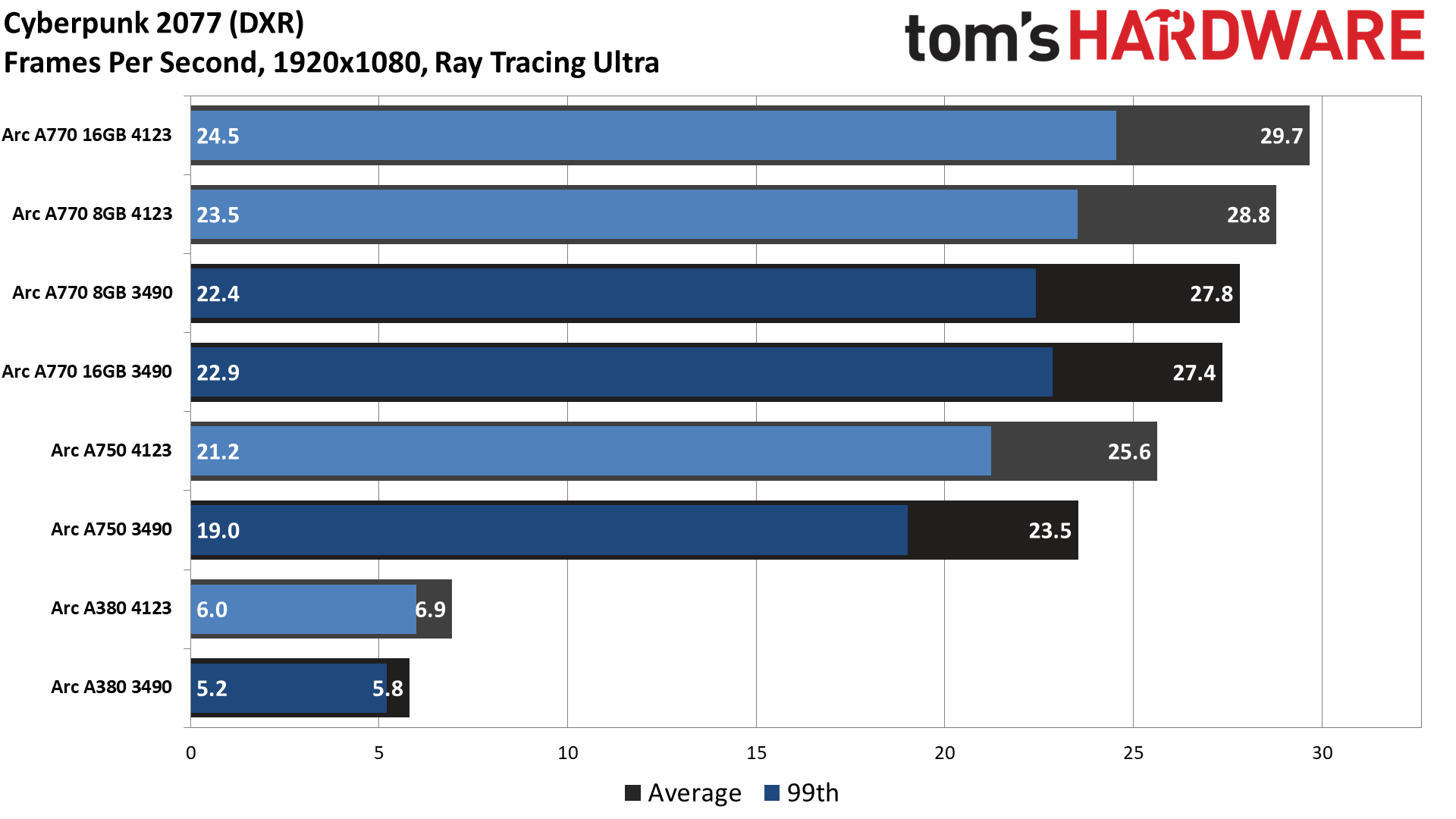

Finally, Minecraft performs very poorly on all Arc GPUs right now. When the cards first launched, you couldn’t even turn on ray tracing in the game, as apparently it was coded with a “whitelist” of cards that support DXR. An update to the game in December finally allowed the Intel GPUs to run with ray tracing enabled, but the results remain much lower than expected. As a point of reference, in other demanding ray tracing games like Cyberpunk 2077, the A770 is only 10 percent slower than an RTX 3060; in Minecraft, the RTX 3060 is currently nearly triple the performance. In fact, even the RX 6600 outperforms the fastest Arc GPU by 25 percent, where the A770 is 60 percent faster in Cyberpunk. Intel is also looking into the Minecraft performance, so hopefully that improves with a future driver.

Disclaimers aside, here are the results of our testing.

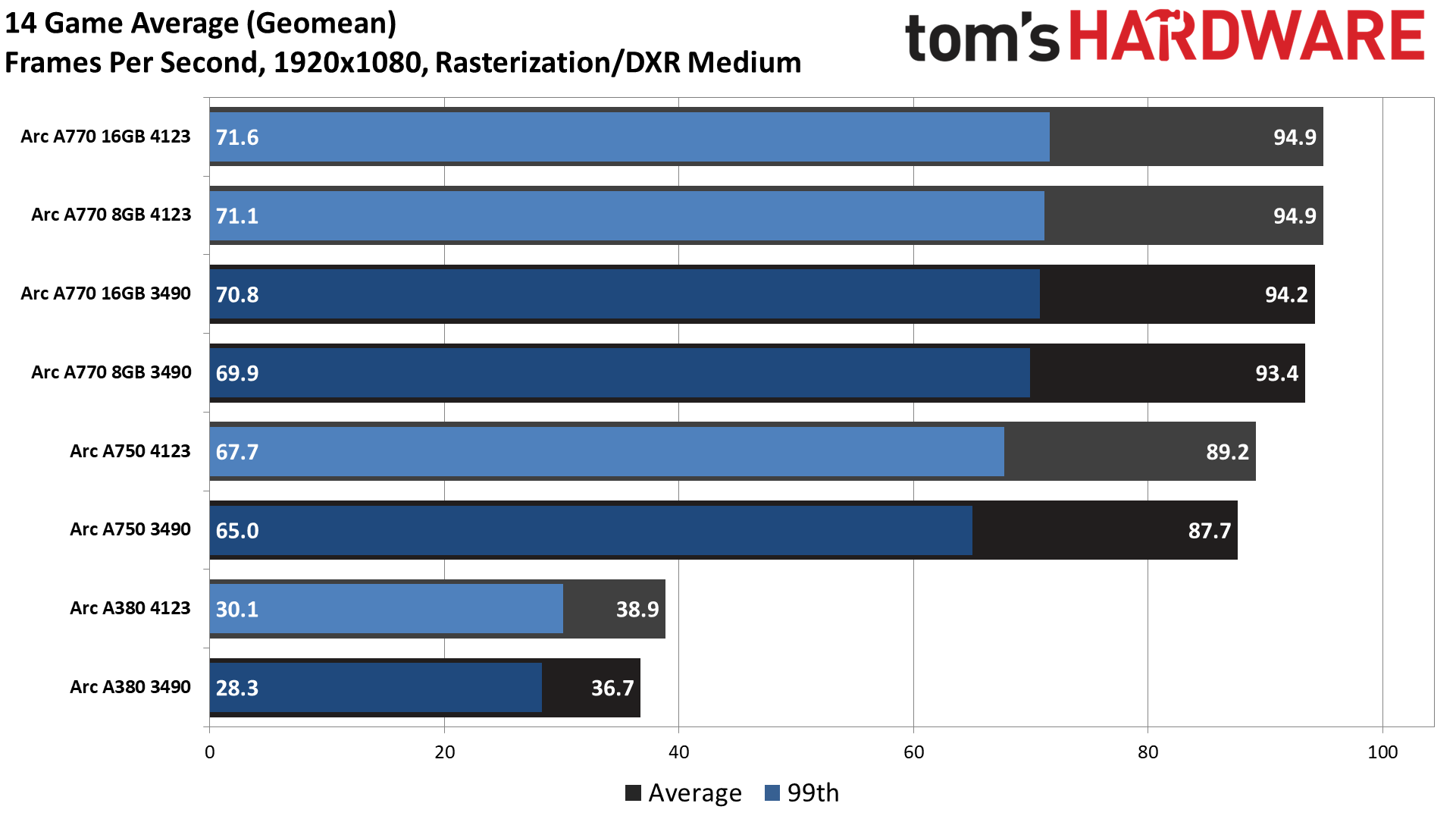

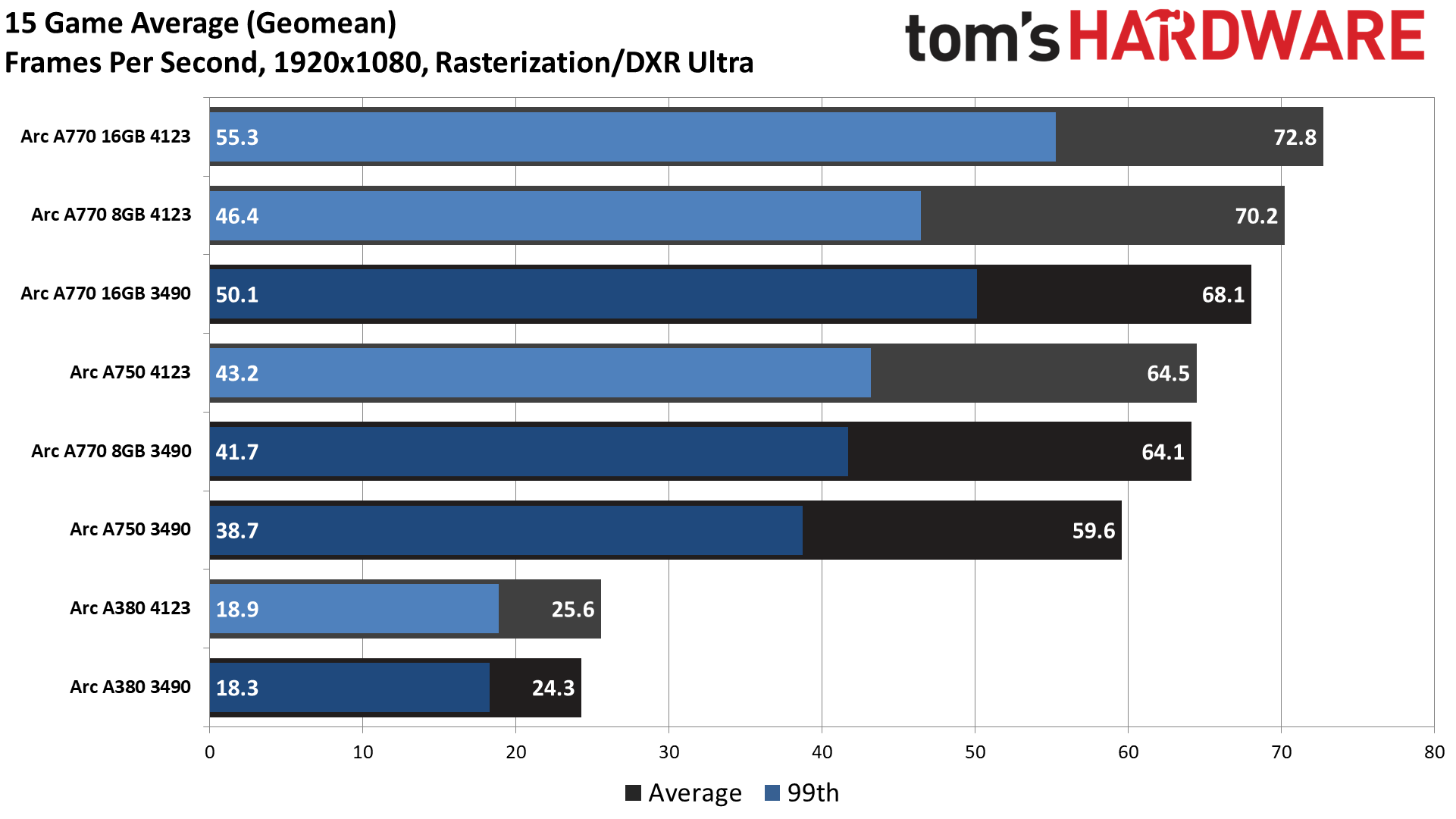

Overall performance changes between the 3490 and 4123 drivers at 1080p medium are… not a lot. That's pretty much expected. The three A7-class cards are 1–2 percent faster, while the A380 is 5% faster.

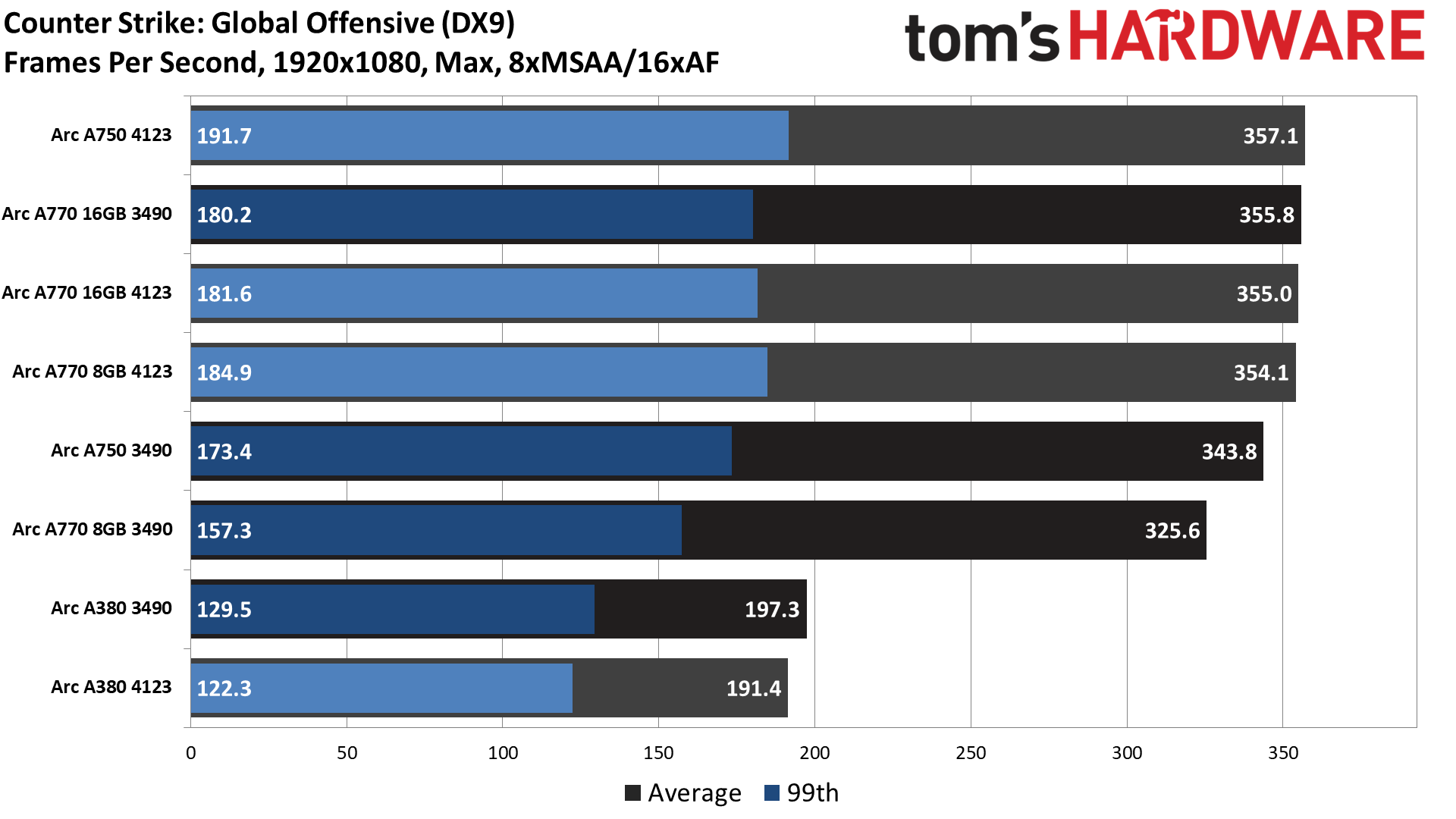

We don't have a "medium" result from Mass Effect 2, and CSGO appears to be mostly CPU limited to around 360 fps (with fluctuations as it's a non-deterministic test sequence — I played against harmless bots on the Mirage map, running the same route each time, basically a loop to the right through bomb site A as a counter terrorist). That means two of the biggest potential gains are out of the picture, except on the A380 where CSGO performance was 16% higher on the updated drivers.

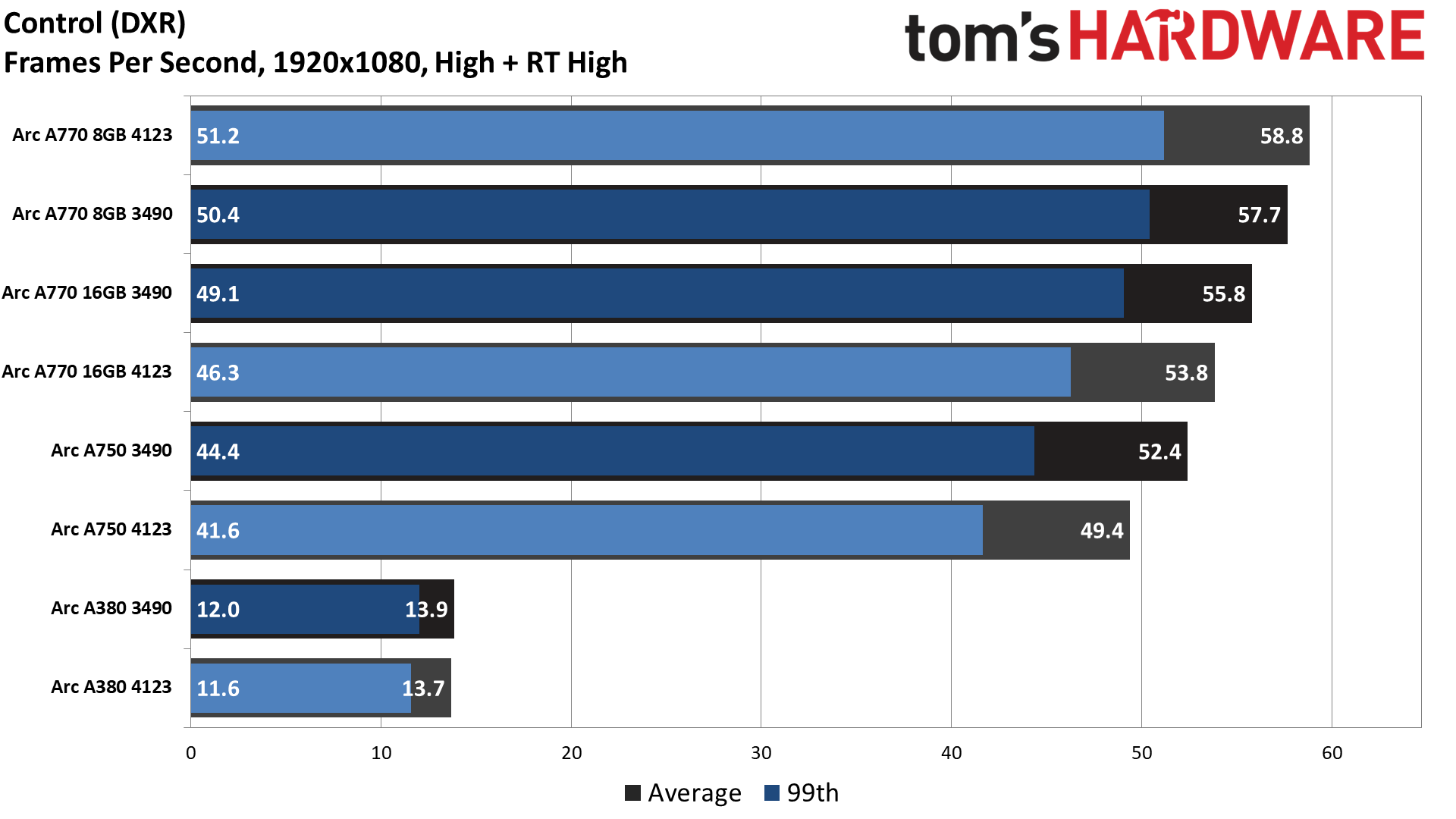

Flipping through the individual charts, the only major difference is the somewhat larger than expected drop in performance in Control on the two Intel Arc Limited Edition cards (the ASRock A770 8GB and A380 didn't show such behavior), with modest gains elsewhere. That's mostly the same pattern we'll see at the other test settings.

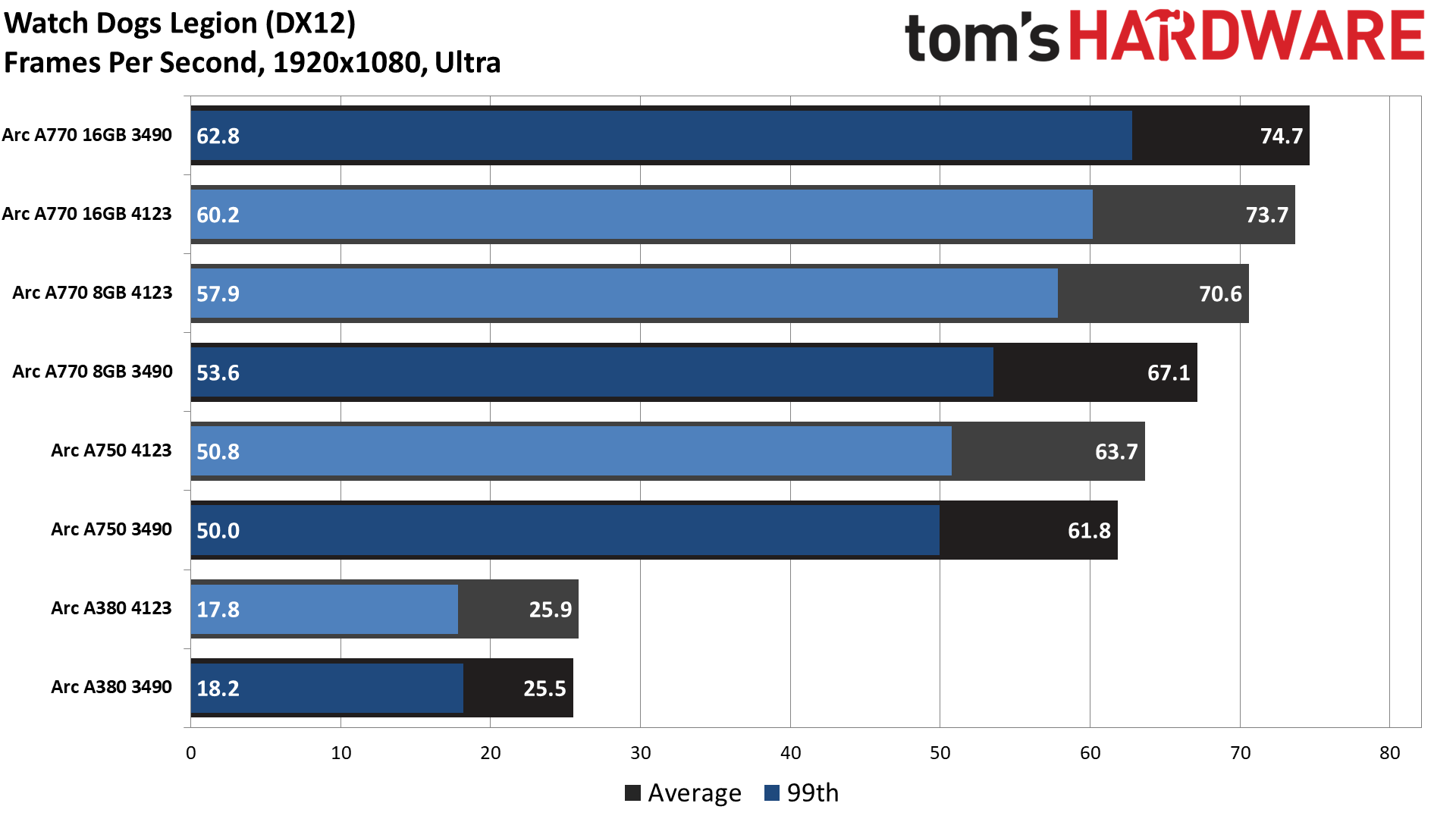

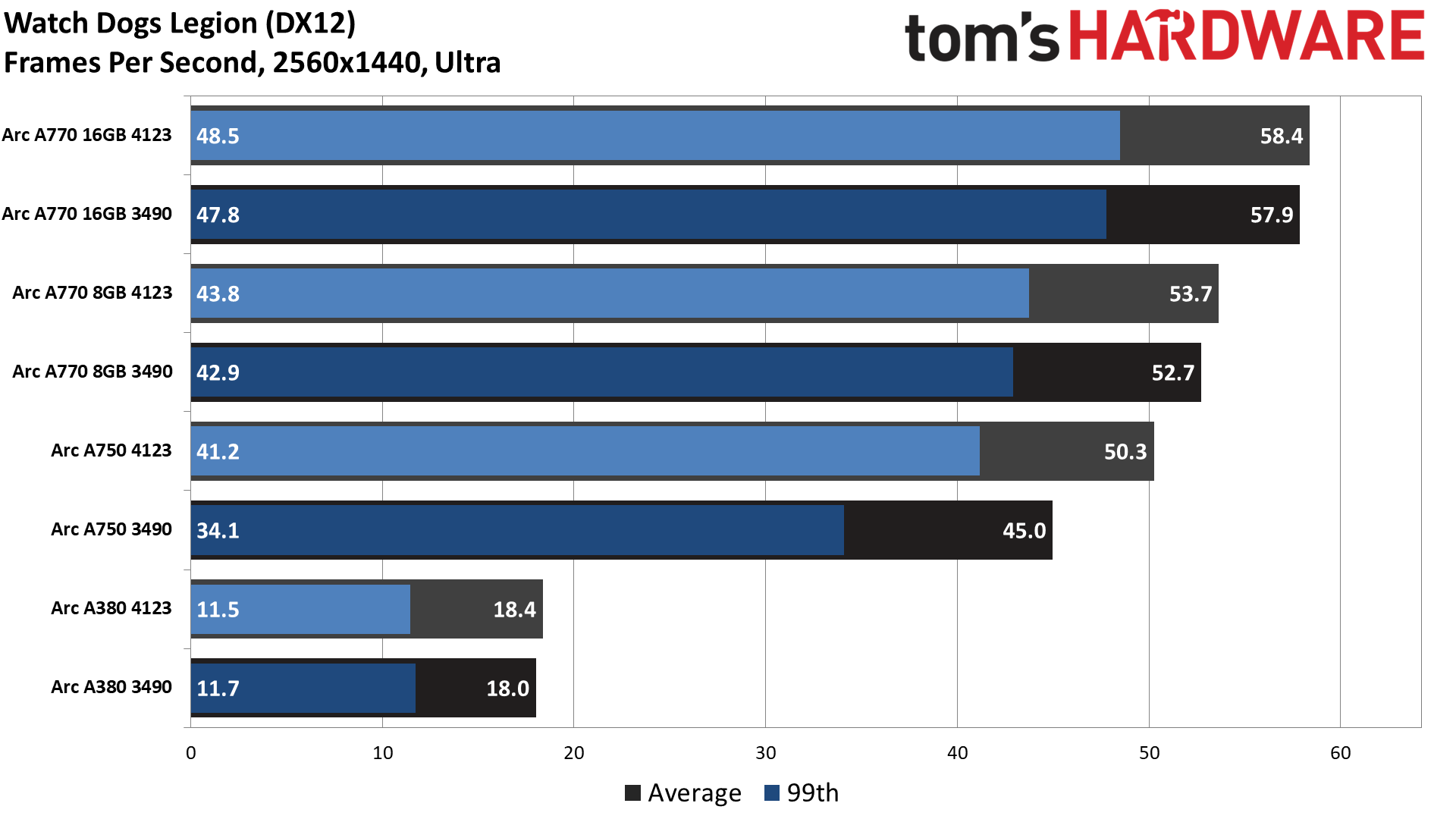

Moving the quality setting to "ultra" results in bigger gains overall for the latest drivers, but much of that comes from the two DirectX 9 games. Overall, we're looking at a 5–10 percent aggregate improvement in performance with the 4123 drivers compared to the 3490 launch drivers. However, if we drop the two DX9 games from the geometric mean calculation, then the net improvement is only 3–6 percent.
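To make the "with and without DX9" comparison concrete, here is a minimal Python sketch of a geometric-mean aggregate. The helper names (`geomean`, `aggregate_gain`) and every fps value are hypothetical placeholders, not measured results; the point is only to show how a couple of large DX9 gains can dominate an overall figure.

```python
import math


def geomean(values):
    """Geometric mean, computed in log space for numerical stability."""
    return math.exp(sum(math.log(v) for v in values) / len(values))


def aggregate_gain(old_fps, new_fps, exclude=()):
    """Percent change in geometric-mean fps, optionally skipping games."""
    games = [g for g in old_fps if g not in exclude]
    old = geomean([old_fps[g] for g in games])
    new = geomean([new_fps[g] for g in games])
    return (new / old - 1.0) * 100.0


# Hypothetical results (fps) for launch vs. current drivers.
launch = {"Mass Effect 2": 100.0, "CSGO": 200.0,
          "Borderlands 3": 60.0, "Far Cry 6": 80.0}
current = {"Mass Effect 2": 160.0, "CSGO": 210.0,
           "Borderlands 3": 61.0, "Far Cry 6": 82.0}

dx9_games = ("Mass Effect 2", "CSGO")
print(f"all games:   {aggregate_gain(launch, current):+.1f}%")
print(f"without DX9: {aggregate_gain(launch, current, exclude=dx9_games):+.1f}%")
```

A geometric mean is a common choice for this kind of summary because each game contributes by its relative change, so a 300 fps title does not outweigh a 60 fps one.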

There are positive and negative changes once again, with some of the biggest deltas coming in DXR games where they're often meaningless: the A380 going from 4.5 fps to 6.1 fps is a 34% increase in performance… at completely unplayable framerates.

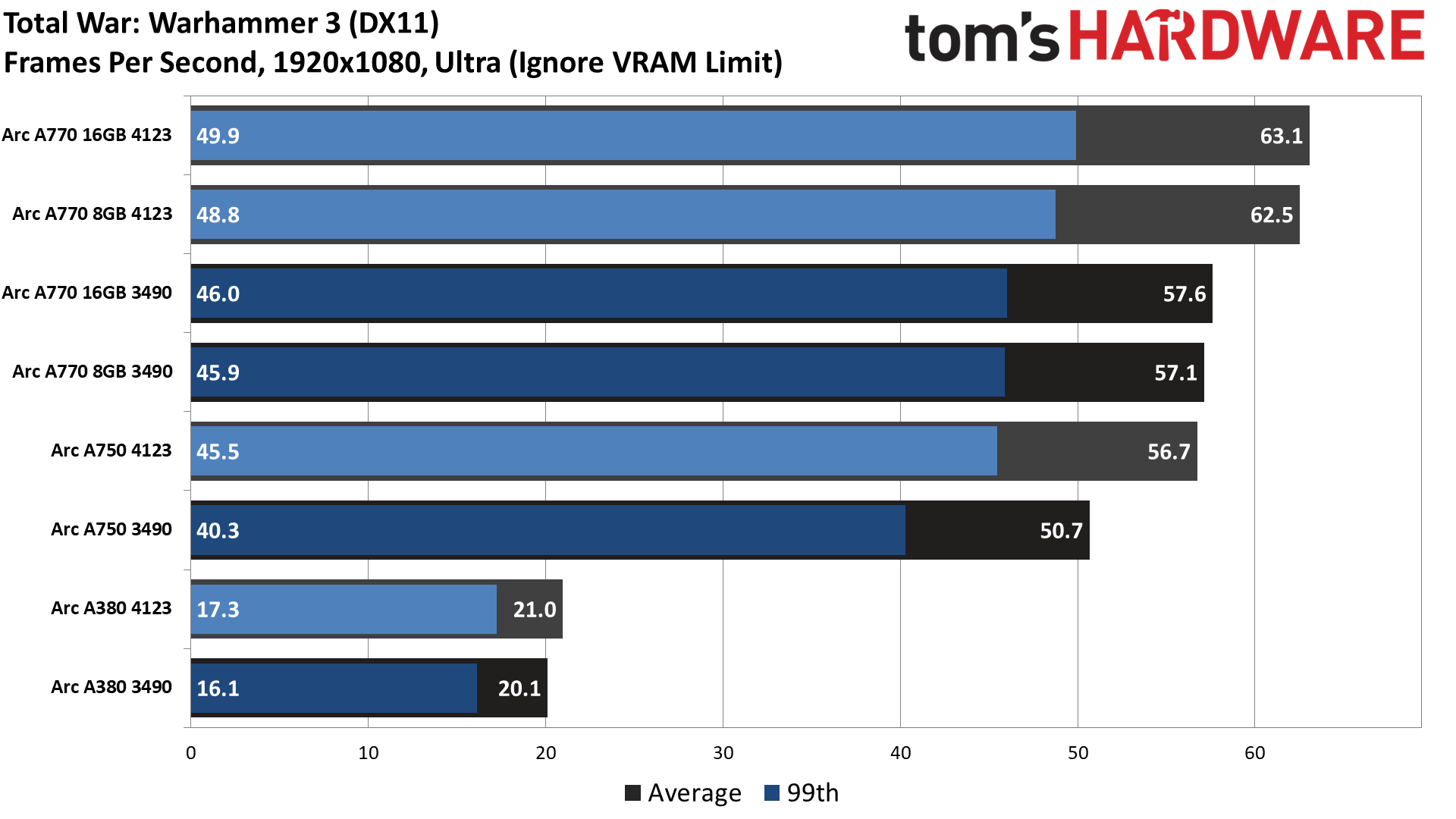

There are a few cases where we see up to a 10% improvement in games that aren't using DXR or DX9, like Total War: Warhammer 3, but overall the changes are mostly within the margin of error, or at least close to it. But look at the two DX9 games… or at least the one DX9 game.

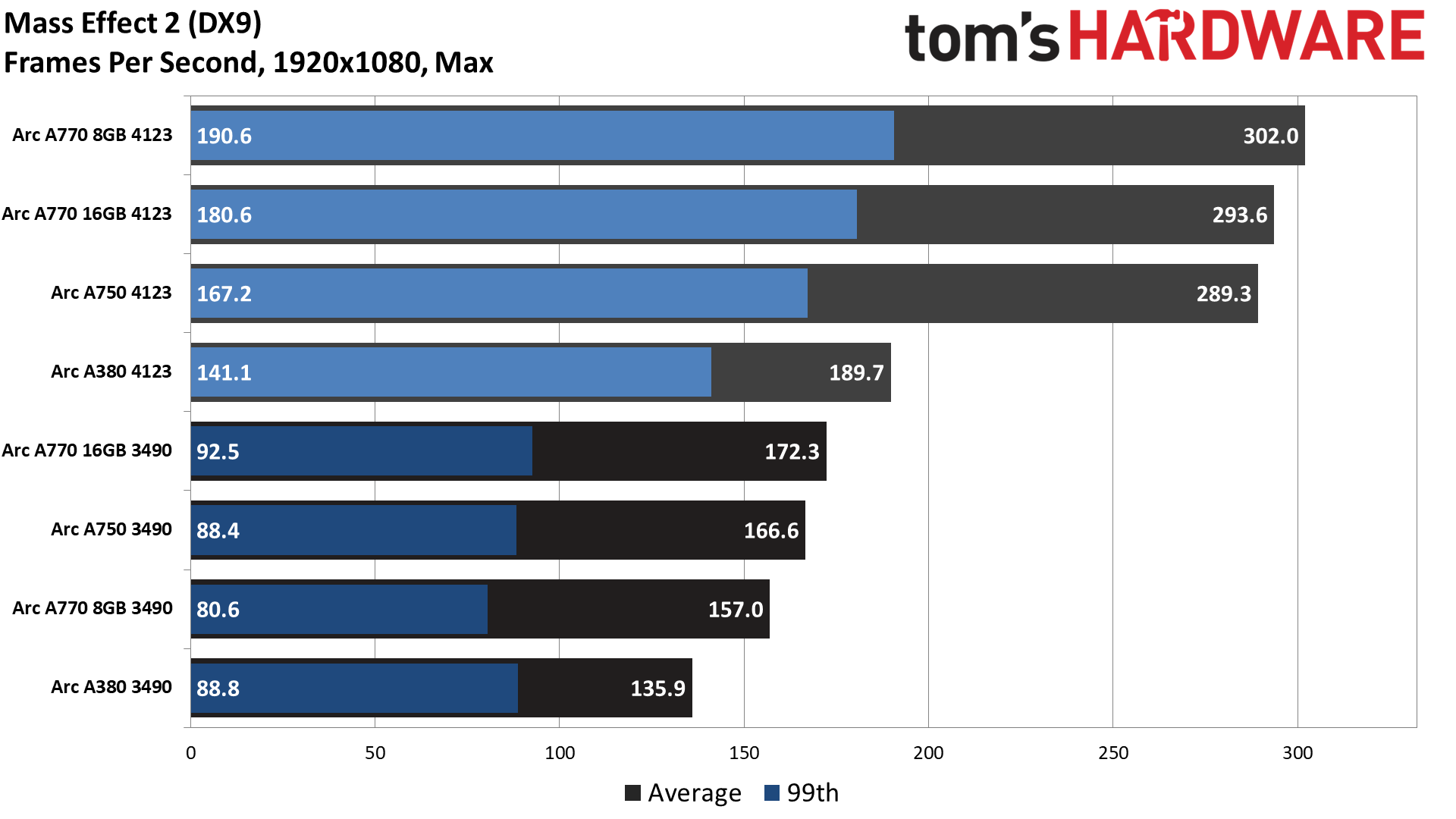

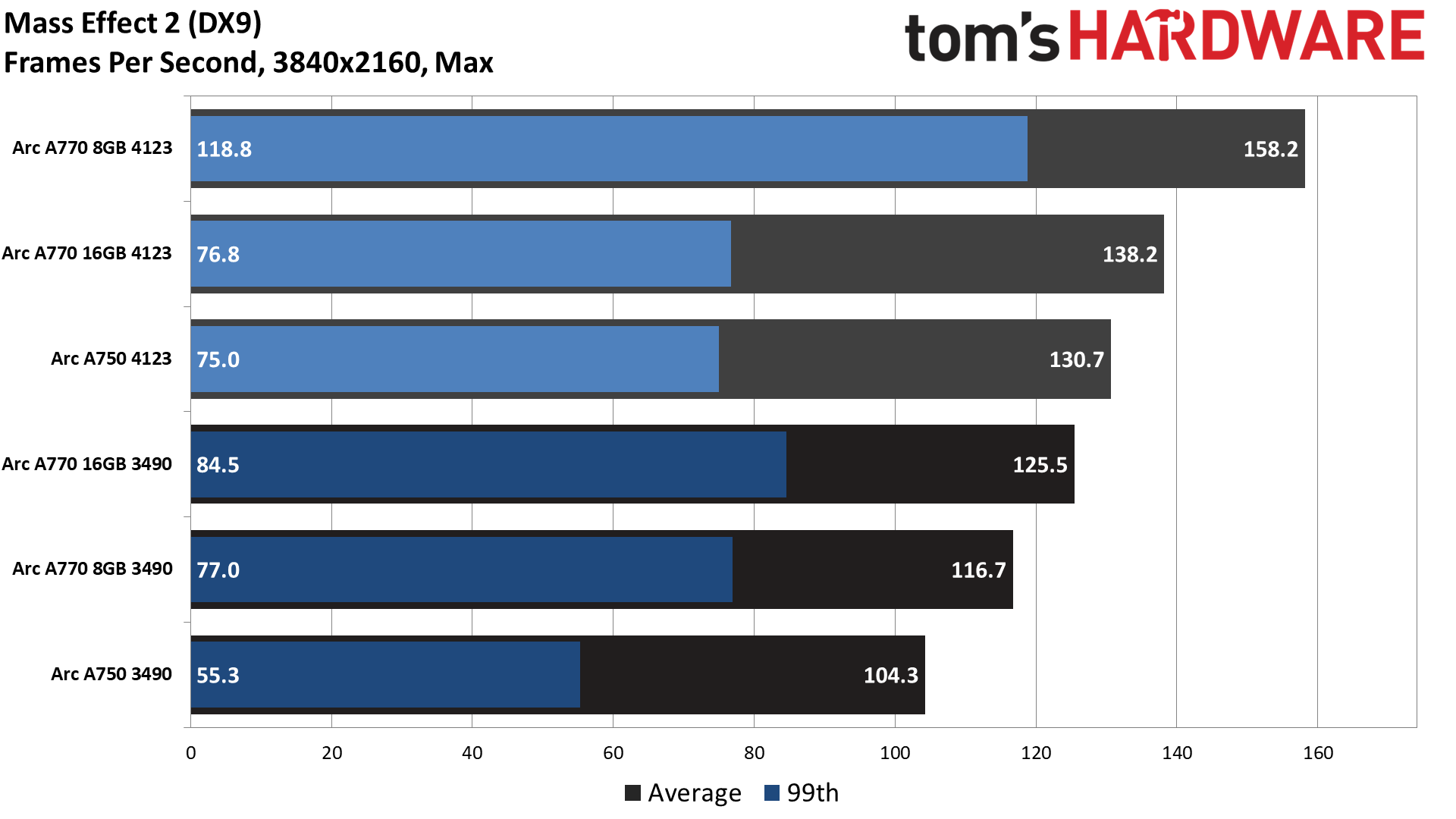

Mass Effect 2 performance improved by anywhere from 40% on the A380 to as much as 90% on the A770 8GB. That's for average fps, but the 99th percentile fps shows even bigger gains. The low fps results are 60% faster with the 4123 drivers on the A380 and up to 135% higher on the A770 8GB.
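For readers unfamiliar with the metric, the sketch below shows one common way to derive average fps and 99th percentile fps from a frametime log. The article does not spell out its exact method, so the nearest-rank percentile used here is an assumption, and the function name `fps_summary` and the sample data are hypothetical.

```python
def fps_summary(frametimes_ms):
    """Return (average fps, 99th percentile fps) from frametimes in ms.

    Assumption: the 99th percentile fps is taken as the fps equivalent
    of the 99th percentile frametime (nearest-rank method), i.e. 99% of
    frames rendered at least this fast.
    """
    n = len(frametimes_ms)
    if n == 0:
        raise ValueError("no frames recorded")
    avg_fps = 1000.0 * n / sum(frametimes_ms)
    ordered = sorted(frametimes_ms)
    rank = (99 * n + 99) // 100  # integer ceil(0.99 * n)
    p99_fps = 1000.0 / ordered[rank - 1]
    return avg_fps, p99_fps


# Hypothetical capture: mostly smooth 10 ms frames with a few 40 ms hitches.
frames = [10.0] * 95 + [40.0] * 5
avg, low = fps_summary(frames)
print(f"average: {avg:.1f} fps, 99th percentile: {low:.1f} fps")
```

In this made-up capture the average stays near 87 fps while the 99th percentile figure falls to 25 fps, which is why removing stutter can lift the low fps numbers far more than the averages.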

What about CSGO? The A770 16GB card shows basically no change, which makes sense given the large amount of VRAM. The A770 8GB improved by 9%, and the A750 by a few percent, but the A380 performance was slightly slower. Perhaps Intel focused its DX9 optimizations more on the bigger chips than on the ACM-G11 used in the A380? There's also the question of what map to use and how to test, so perhaps other CSGO maps would show different performance results, or maybe playing online rather than against bots.

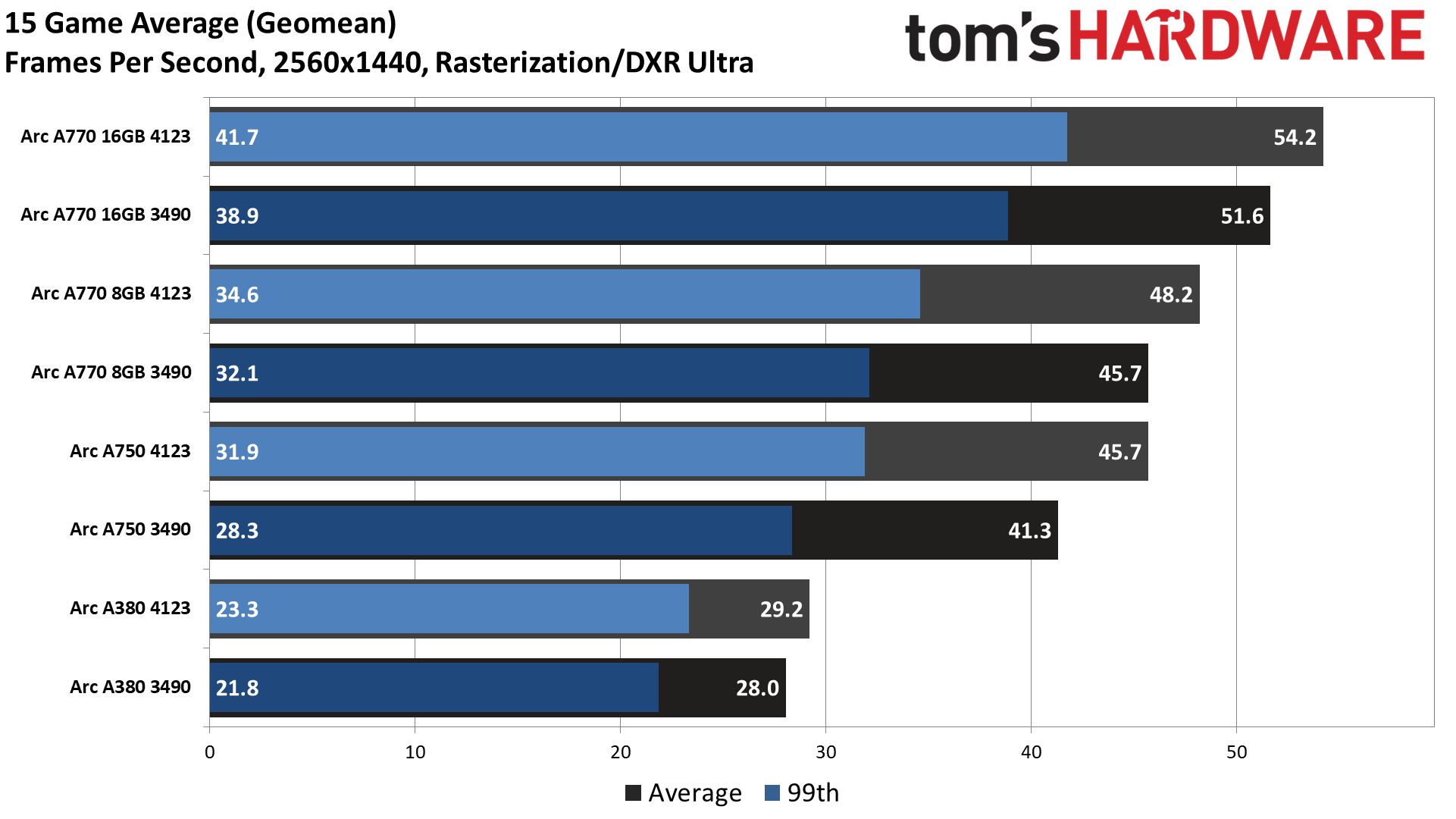

Moving to 1440p ultra testing, the A380 is now mostly not a factor to consider outside of playing older DX9 games. Overall, we again see a 5–10 percent improvement in performance for the three A7-class Arc cards, with the A750 showing the biggest overall increase. Of course, if we omit the DX9 games, it goes back to being almost margin of error, 2–3 percent, with the A750 still improving by 9% thanks to a big increase in the Cyberpunk result, from an unplayable 10 fps to a still-unplayable 14 fps.

The gains in CSGO are still pretty muted, 3% on the A770 16GB, 10% on the A770 8GB, and 17% on the A750 — and the A380 performance again dropped, this time by 6%. Mass Effect 2 on the other hand shows gains of 50% on the Intel Limited Edition cards, and 70% on the ASRock A770 8GB — but only 22% on the A380, which still manages to break 100 fps on the 13-year-old game.

There's not much more to add. Outside of the two DX9 games, most of the changes aren't something you'd really notice as a gamer. It's worth mentioning that improvements in minimum FPS on the DX9 games are often even larger than the average FPS gains — CSGO had a lot of stuttering on the first couple of test runs with the 3490 drivers, and that's mostly gone now, but our use of the median result of five runs partially obscures this fact.

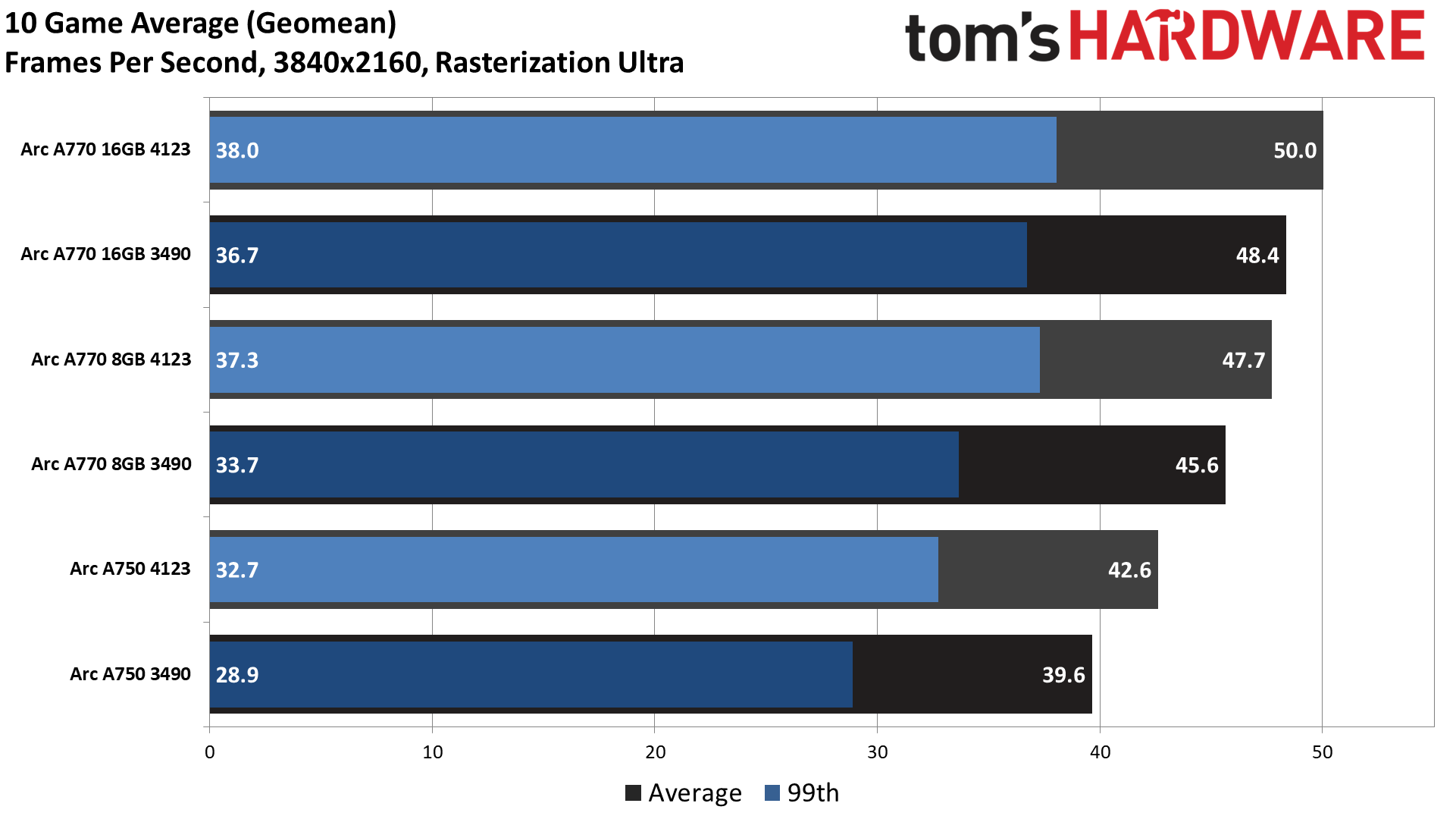

Last we have the 4K testing results, though we didn't do any DXR tests so the overall chart is down to 10 rasterization games. The three ACM-G10 based graphics cards show 3–7 percent aggregate gains in performance using the full test suite, but only a 1–5 percent increase if we omit the two DX9 games.

Mass Effect 2 now shows improvements of 10–35 percent, with the 16GB card benefiting the least, and the factory overclocked ASRock card benefiting the most. CSGO does show modest gains as well this time on the two Intel Limited Edition cards, while the ASRock card only shows a 3% improvement. Again, there was a lot of variability between runs in CSGO, and the minimum fps does show much better results with 35–50 percent higher 99th percentile framerates.

Playing Intel Arc, the Iteration Game

It's been an interesting six or so months since the Arc A380 first appeared. Given all the claimed performance gains, we were hoping to see more… consistency across our test suite. The latest drivers generally do outperform the launch drivers, but some issues remain, and that's on a still relatively limited test suite of 15 games.

None of our findings are particularly surprising, including the continued oddities. AMD and Nvidia have been playing the drivers game for decades, and even they have occasional problems. Intel has had graphics drivers for integrated graphics solutions for decades as well, but the performance on tap was so low that there often wasn’t any pressure to even try to optimize for newly released games. Creating dedicated graphics solutions changes the user expectations, and now Intel is playing catch up.

The most important things to note with the driver updates coming out of Intel are that there’s a regular cadence, and that older DirectX 9 games got some much-needed TLC to get them running more smoothly. It’s rare that more than two weeks pass without some new driver being announced, and Intel has also been doing better on getting game-ready drivers out for bigger launches, including "Game On" drivers for the Hogwarts Legacy and Atomic Heart launches over the past couple of weeks.

If Intel can keep that up, plus add the occasional larger overhaul that provides more universal improvements, in a couple of years we hopefully won't even need to have a serious discussion about Intel’s GPU drivers. And of course, we're only scratching the surface with our game performance testing.

The games, settings, resolutions, and even test sequences can absolutely affect performance and potential gains from newer drivers. Maybe a different CSGO map would have yielded bigger performance improvements from the newer drivers. Or maybe the 12900K CPU was holding us back a bit more than the 13900K Intel used in its testing. We're looking into our CSGO results and may do some further testing and update this article as appropriate.

Regardless, let’s hope that Intel’s GPU and driver teams get the needed time to continue working on future drivers and architectures. Right now, it doesn’t sound like many people are biting on Arc graphics cards. Intel recently dropped the official price of its A750 Limited Edition card to $249, down $40 or 14 percent from the launch price. Big companies don’t do that if parts are flying off the shelves. How long can Intel continue to bleed money on consumer GPUs? Perhaps more importantly, can Intel afford to not invest more money into consumer GPUs?

There’s a lot riding on Arc Battlemage, which is currently slated to arrive in 2024. Intel's Raja Koduri has also said that the company is more interested in competing with mainstream GPUs than worrying about halo cards, with $200–$300 being the sweet spot. We’ll have to wait and see if Intel can narrow the gap between Arc and its AMD and Nvidia competition come next year — which, incidentally, is when we expect we'll see the next generation Nvidia Blackwell and AMD RDNA 4 GPUs launch.


Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.

-

PlaneInTheSky The reason I stick with Nvidia is because Nvidia has native DX9.0 support. AMD and Intel do not.

It has nothing to do with performance for me.

There are so many older DX9.0 titles you can pick up for $1-$5. They always work great on Nvidia, on AMD it is hit or miss. It has nothing to do with performance, many are locked at 60fps anyway and the ones that aren't run at 100+ fps on potato hardware. It is about compatibility.

The Nvidia control panel allows you to set V-sync per game, per application, it works wonderfully on older DX9.0 titles also.

And it doesn't really matter to me that Intel gets better at DX9.0 performance. It is not about that, it is still not native support. Why would I pick a translation layer over actual native support Nvidia offers. There's no reason to pick Intel.

This whole "drivers are getting better, just wait" situation is the exact same reason I switched from AMD to Nvidia back in the day and never went back. I really loathed AMD driver issues and I now base my GPU decisions on driver quality first, and performance second. And Nvidia wins on this every single time. And I am not alone in this, AMD and Intel tend to offer more FPS per $, but Nvidia wins out on stability, compatibility, NVENC, CUDA, etc, and Nvidia dominates as a result. -

ohio_buckeye Except nvidia’s price gouging lately. Being realistic though amd or Intel would likely do the same if they could.

I’ll be interested to see Intel’s 2nd generation cards. -

digitalgriffin

PlaneInTheSky said: The reason I stick with Nvidia is because Nvidia has native DX9.0 support. AMD and Intel do not.

It has nothing to do with performance for me.

There are so many older DX9.0 titles you can pick up for $1-$5. They always work great on Nvidia, on AMD it is hit or miss. It has nothing to do with performance, many are locked at 60fps anyway and the ones that aren't run at 100+ fps on potato hardware. It is about compatibility.

The Nvidia control panel allows you to set V-sync per game, per application, it works wonderfully on older DX9.0 titles also.

And it doesn't really matter to me that Intel gets better at DX9.0 performance. It is not about that, it is still not native support. Why would I pick a translation layer over actual native support Nvidia offers. There's no reason to pick Intel.

This whole "drivers are getting better" situation is the exact same reason I switched from AMD to Nvidia back in the day and never went back. I really loathed AMD driver issues and I now base my GPU decisions on driver quality first, and performance second. And Nvidia wins on this every single time. And I am not alone in this, AMD and Intel tend to offer more FPS per $, but Nvidia wins out on stability, compatibility, NVENC, CUDA, etc, and Nvidia dominates as a result.

Non-native dx9 support? What have you been smoking? A large part of the whole article is talking about how dx9 emulation has been replaced by a real dx9 driver.

And AMD made some big gains in dx9 and openGL in the last two years. So AMD are a heck of a lot closer to Nvidia gen on gen with similar disparities compared to dx 12. -

kerberos_20 source that amd doesnt have native dx9 support?

PlaneInTheSky said: The reason I stick with Nvidia is because Nvidia has native DX9.0 support. AMD and Intel do not. -

PlaneInTheSky

kerberos_20 said: source that amd doesnt have native dx9 support?

Source: Microsoft

https://i.postimg.cc/PxHthn4c/sdfsfsfsfsfs.png -

KyaraM AMD already is doing it. The 7900XT is not that much better than the 4070Ti, and loses in RT, and costs more. And even without that, everyone complains about Nvidia prices, but AMD is right up there, too, selling for 1 grand and all.

ohio_buckeye said: Except nvidia’s price gouging lately. Being realistic though amd or Intel would likely do the same if they could.

I’ll be interested to see Intel’s 2nd generation cards. -

PlaneInTheSky

digitalgriffin said: And AMD made some big gains in dx9 and openGL in the last two years.

AMD already said they don't give a crap about all their DX9 driver issues and won't devote time to older games.

I am voting with my wallet, just like everyone else buying Nvidia. People sometimes ask why AMD (and now Intel) sell so poorly while offering similar or better FPS per $. You really don't need to read the tea leaves to discover why, it is because Nvidia has better compatibility, driver stability, support, NVENC, CUDA, etc.

Intel is off to a really poor start. AMD's driver issues have haunted them for years, and they will haunt Intel for years. Drivers matter at least as much as the hardware.

https://i.postimg.cc/L8v7hK2w/sdfsfsfsfsf.jpg -

kerberos_20 oh dude, that page is dated as hell...that is for legacy intel/amd hardware and pretty much for mobile devices, you could scroll down abit and see this

PlaneInTheSky said: Source: Microsoft

https://i.postimg.cc/PxHthn4c/sdfsfsfsfsfs.png

The last drivers for these devices are those available on WU and on the OEM/IHV’s websites; many date from Vista, and many of these final version drivers exhibit problems on Windows 8. In addition, nVidia has recently stated that they will not support their DX9 (Vista and older) mobile (notebook) parts for Windows 8. They continue to support their desktop DX9 parts -

kerberos_20 yes there were issues when amd switched from crimson to adrenalin...fixed already btw

PlaneInTheSky said: AMD already said they don't give a crap about all their DX9 driver issues and won't devote time to older games.

I am voting with my wallet, just like everyone else buying Nvidia. People sometimes ask why AMD (and now Intel) sell so poorly while offering similar or better FPS per $. You really don't need to read the tea leaves to discover why, it is because Nvidia has better compatibility, driver stability, support, NVENC, CUDA, etc.

Intel is off to a really poor start. AMD's driver issues have haunted them for years, and they will haunt Intel for years. Drivers matter at least as much as the hardware.

https://i.postimg.cc/L8v7hK2w/sdfsfsfsfsf.jpg -

PlaneInTheSky

kerberos_20 said: yes there were issues when amd switched from crimson to adrenalin...fixed already btw

Right, it's the same story every year. AMD has major driver issues every year, and every single year there are people coming out of the woodworks claiming that "everything is fixed now", and "it's improving".

GPUs are very costly. They need to work and need to work perfectly. It would require years, years, of perfect drivers for AMD to regain the trust of all the people who had stability issues, crashes and DX9.0 issues, for AMD to ever regain these users who are now used to Nvidia drivers, Nvidia that offers compatibility and stability.

And Intel better watch out if they think drivers are secondary to hardware, because AMD's reputation of having bad drivers haunts them in sales.