AMD 3D V-Cache enables RAM disk to hit 182 GB/s speeds — over 12X faster than the fastest PCIe 5.0 SSDs

Who would have thought that the best CPUs could rival the best SSDs? Apparently, it's possible to run a RAM disk on AMD's Ryzen 3D V-Cache processors and achieve sequential read and write speeds that blow even the fastest PCIe 5.0 SSDs out of the water.

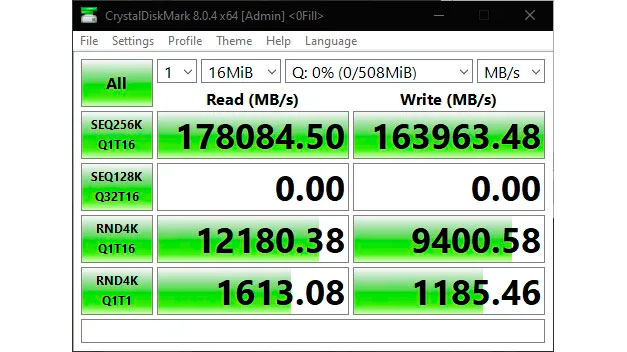

The enigma started with an intriguing screenshot shared by our cooling expert Albert Thomas, where a RAM disk delivered sequential read and write speeds around 178 GB/s and 163 GB/s, respectively, in CrystalDiskMark. What stood out was that the results were reportedly from a RAM disk running on AMD's Ryzen 7 7800X3D processor.

At first, there was some skepticism about the claim, because you would need to expose the L3 cache as a block storage device to run the CrystalDiskMark benchmark. Moreover, the reported volume (508MB) is larger than the 3D V-Cache on the Ryzen 7 7800X3D, which has 96MB of L3 cache. However, there appears to be a legitimate method to leverage the 3D V-Cache for a RAM disk.

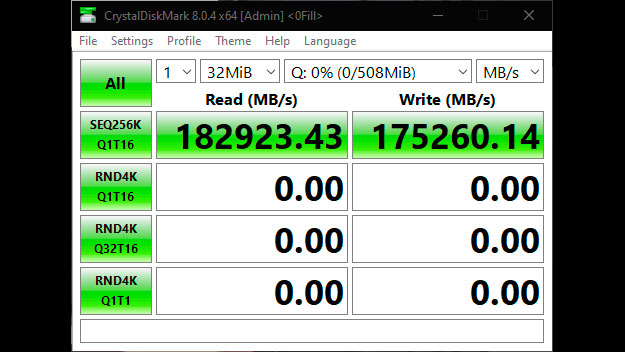

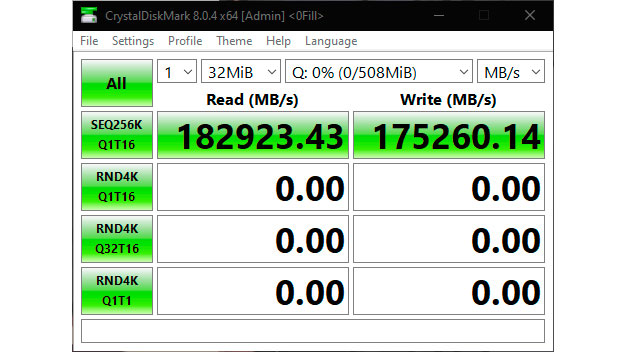

Nemez, a user on X (formerly Twitter), discovered the method. The enthusiast shared the steps to make it work in February, but they went largely unnoticed. Nemez's results were even more remarkable than Thomas's, with the RAM disk hitting sequential read and write speeds around 182 GB/s and 175 GB/s, respectively, on the last-generation Ryzen 7 5800X3D.

The method is based on OSFMount, free software that allows you to create RAM disks and mount image files in different formats. Creating a RAM disk with FAT32 formatting doesn't sound like anything out of this world. However, you need to use precise settings in CrystalDiskMark to make it work. According to Nemez, you must set the test to SEQ 256KB, the queue depth to 1, and the thread count to 16. You also have to set the data fill to zeros instead of random. Because other activity on the system can interfere with the result, the method may not work on the first try, so you might have to run the benchmark a few times.
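The benchmark settings boil down to a simple access pattern. The Python sketch below approximates it over an in-memory buffer; it is our own illustration, not CrystalDiskMark's or OSFMount's code. The 64 MiB region, the assumption that each thread reads its own contiguous stripe with one request in flight, and the names `ram_disk`, `read_stripe`, and `seq_read_pass` are all hypothetical, and Python's interpreter overhead means the printed time says nothing about cache bandwidth.

```python
import time
from concurrent.futures import ThreadPoolExecutor

BLOCK = 256 * 1024          # SEQ 256KB block size from Nemez's settings
THREADS = 16                # 16 threads, each issuing one request at a time (QD1)
SIZE = 64 * 1024 * 1024     # hypothetical 64 MiB test region (assumption)

# Zero-filled data, mirroring the "zeros" data-fill option.
ram_disk = bytearray(SIZE)
view = memoryview(ram_disk)

def read_stripe(thread_id: int) -> int:
    """Sequentially read this thread's contiguous stripe, one block at a time."""
    stripe = SIZE // THREADS
    start = thread_id * stripe
    total = 0
    for offset in range(start, start + stripe, BLOCK):
        chunk = bytes(view[offset:offset + BLOCK])  # copy one 256 KiB block
        total += len(chunk)
    return total

def seq_read_pass() -> tuple[int, float]:
    """Run every thread once; return total bytes read and elapsed seconds."""
    t0 = time.perf_counter()
    with ThreadPoolExecutor(max_workers=THREADS) as pool:
        total = sum(pool.map(read_stripe, range(THREADS)))
    return total, time.perf_counter() - t0

if __name__ == "__main__":
    nbytes, secs = seq_read_pass()
    print(f"read {nbytes / 2**20:.0f} MiB in {secs:.3f} s")
```

Splitting the region into per-thread stripes keeps every read sequential within a thread, which is the pattern a SEQ test with multiple threads implies.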

Seeing enthusiasts find new usage for AMD's 3D V-Cache is fascinating. While the performance figures look extraordinary, they're still far from fulfilling 3D V-Cache's potential. For instance, the first-generation 3D V-Cache has a peak throughput of 2 TB/s. AMD subsequently bumped the bandwidth up to 2.5 TB/s on the second-generation variant.
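For a sense of scale, the gap can be worked out from the figures in this article alone: 182 GB/s on the first-generation Ryzen 7 5800X3D against a 2 TB/s peak, and 178 GB/s on the second-generation Ryzen 7 7800X3D against 2.5 TB/s. The snippet treats 1 TB/s as 1,000 GB/s and compares a benchmark result with an aggregate peak figure, so the ratios are rough rather than a measure of efficiency.

```python
# Figures quoted in the article; 1 TB/s is treated as 1,000 GB/s.
RESULTS = {
    # chip: (measured sequential read in GB/s, 3D V-Cache peak in GB/s)
    "Ryzen 7 5800X3D (1st-gen V-Cache)": (182.0, 2_000.0),
    "Ryzen 7 7800X3D (2nd-gen V-Cache)": (178.0, 2_500.0),
}

def share_of_peak(measured: float, peak: float) -> float:
    """Return measured throughput as a fraction of the peak bandwidth."""
    return measured / peak

for chip, (measured, peak) in RESULTS.items():
    print(f"{chip}: {share_of_peak(measured, peak):.1%} of peak")
# Ryzen 7 5800X3D (1st-gen V-Cache): 9.1% of peak
# Ryzen 7 7800X3D (2nd-gen V-Cache): 7.1% of peak
```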

The experiment is cool but not practical in real-world usage because there's no consistent way to tap the 3D V-Cache. The method isn't infallible and sometimes requires trial and error. Besides, the 3D V-Cache on consumer Ryzen chips is far too small to be helpful. For instance, the flagship Ryzen 9 7950X3D only has 128MB of L3 cache. On the other hand, AMD's EPYC processors, such as Genoa-X, which has 1.3GB of L3 cache, could be an interesting use case.

Nonetheless, we think there's potential with a 3D V-Cache and a RAM disk. It's a clever way of making old-school and new technologies gel together. SSDs have made RAM disks obsolete, but maybe massive slabs of 3D V-Cache can revive them. Just think of the possibilities if AMD embraced the idea and put out a fail-safe implementation where consumers can turn the 3D V-Cache into a RAM disk with a flip of a switch.

Zhiye Liu is a news editor, memory reviewer, and SSD tester at Tom’s Hardware. Although he loves everything that’s hardware, he has a soft spot for CPUs, GPUs, and RAM.

-

hotaru.hino

What would make this even more worthless is if you do manage to fill up the cache with stuff from the RAM disk, you've now evicted everyone else's data and instructions. So performance drops for everyone else because they all have cache misses now.

It's amusing to see that number in a benchmark though.
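hotaru.hino's eviction argument can be illustrated with a toy cache model in Python. Real L3 caches are set-associative and typically use approximations of LRU or more elaborate replacement policies, so the fully associative least-recently-used (LRU) policy here, along with the hypothetical `LruCache` class and the line counts, are simplifying assumptions. The model only shows the general effect: streaming a cache's worth of RAM disk data through it pushes out another program's hot lines.

```python
from collections import OrderedDict

class LruCache:
    """Toy fully associative LRU cache holding `capacity` line addresses."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.lines: OrderedDict[int, None] = OrderedDict()
        self.misses = 0

    def access(self, addr: int) -> None:
        if addr in self.lines:
            self.lines.move_to_end(addr)      # hit: mark as most recently used
            return
        self.misses += 1
        self.lines[addr] = None
        if len(self.lines) > self.capacity:
            self.lines.popitem(last=False)    # evict the least recently used line

cache = LruCache(capacity=8)
app_lines = range(0, 4)            # another program's hot working set
ram_disk_lines = range(100, 108)   # RAM disk data as large as the whole cache

for a in app_lines:
    cache.access(a)                # the app warms the cache: 4 misses
for a in ram_disk_lines:
    cache.access(a)                # the RAM disk streams through: 8 misses
before = cache.misses
for a in app_lines:
    cache.access(a)                # the app runs again
print(cache.misses - before)       # 4: every one of the app's lines was evicted
```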

usertests

This is a good time to speculate: when will AMD go for a bigger L3 cache (or even add L4)?

3D V-Cache 1st/2nd gen are a single layer of 64 MiB SRAM, but AMD/TSMC said from the start that multiple layers are possible. There are diminishing returns for games and more layers would make it more expensive, but multi-layer 3D V-Cache chiplets could be made for the benefit of Epyc-X customers at least.

AMD might want to grow or shrink the SRAM layer capacity at some point. There's also a possibility of future microarchitectures sharing L3 between multiple chiplets, potentially allowing a single core to use more than the current 96 MiB limit.

Pete Mitchell

There is zero use for this on Ryzen outside of a one time "hey, that's cool". No matter how hard tech bloggers try to make it seem there is so they can pad out their articles.

GoldyChangus

Pete Mitchell said: There is zero use for this on Ryzen outside of a one time "hey, that's cool". No matter how hard tech bloggers try to make it seem there is so they can pad out their articles.

Is there any use for your comment other than to make yourself sound impressive by being so unimpressed by everything?

waltc3

A fun foray into RAM disks, but apples and oranges... ;) A RAM disk is way fast--but when you power down the machine, you lose your data. NVMe SSDs are not system RAM! They hold their data when powered off. Very big difference. The correct performance comparison for NVMe is HDD. But HDD wins hands down when it comes to capacity comparison.

This story reminded me of when I was using RAM disks way back with my Amigas--I had a startup sequence that took a few minutes at boot to copy a bunch of disk-based data into a RAM disk I automated at the same time. It was fun, for a while, until I ran out of RAM, among other major inconveniences, like the time it consumed at boot. Try to imagine having more than, say, a couple of terabytes of system RAM, so that when you booted the system it could make the RAM disk and then copy ~2 TB of program data to it. What about people with 10 TB of programs, games, and data? You quickly run into the practical limitations of RAM disks. System RAM is very fast and so cheap these days because it cannot store data when the power is off, as that is not its purpose.

AdelaideSimone

waltc3 said: A fun foray into RAM disks, but apples and oranges... ;) A RAM disk is way fast--but when you power down the machine, you lose your data. NVMe SSDs are not system RAM! They hold their data when powered off. Very big difference. The correct performance comparison for NVMe is HDD. But HDD wins hands down when it comes to capacity comparison.

I think you missed the point of the comparison. The point was that for most use cases, DRAM RAM disks are not beneficial anymore because top-tier SSD speeds nearly reach DRAM speeds.

On build servers, I've used RAM disks on occasion because there's usually a very good speedup on huge projects, like LLVM.

Pete Mitchell said: There is zero use for this on Ryzen outside of a one time "hey, that's cool". No matter how hard tech bloggers try to make it seem there is so they can pad out their articles.

Shouldn't you be on Reddit or something, what with your idiotic "AkTShuAlly"? They also mentioned the currently larger sizes like 1.3GB, and it being possibly useful in the future. Read: FUTURE.

Having the ability to easily map files and ensure they remain in cache can be very beneficial. It's not something most programs should be doing, especially without explicitly making the user aware. But aptly used, it can be an extreme boon.

das_stig

Maybe of little use now, but it could be one day, when the L3 is big enough that you wouldn't miss the chunk of it given over to a complete app or O/S.

hotaru.hino

AdelaideSimone said: Having the ability to easily map files and ensure they remain in cache can be very beneficial. It's not something most programs should be doing, especially without explicitly making the user aware. But aptly used, it can be an extreme boon.

It could be, but cache is transparent to software in most ISAs. MIPS is the only one I'm aware of that allows software to directly manipulate cache. Besides, the method that the person used to get the figure, at least as the article describes it, is how cache is supposed to work anyway. If you want something to remain in cache, just have the software poke at the memory location enough times.

bit_user

File Under: "Hold my beer"

This is a neat parlor trick, but utterly and completely useless.

From the article: "Seeing enthusiasts find new usage for AMD's 3D V-Cache is fascinating. While the performance figures look extraordinary, they're still far from fulfilling 3D V-Cache's potential. For instance, the first-generation 3D V-Cache has a peak throughput of 2 TB/s."

First, it's not a new use. It's a cheap trick. You can't actually use it for any practical purpose, because it stops working as soon as your PC starts doing literally anything else. This can only work when it's completely idle, and isn't even reliable then.

Second, the V-Cache is shared by all of the cores on the chiplet. A single core can't max out the 2 TB/s of bandwidth.

Source: https://chipsandcheese.com/2022/01/21/deep-diving-zen-3-v-cache/

From the article: "AMD's EPYC processors, such as Genoa-X, which has 1.3GB of L3 cache, could be an interesting use case."

Nope. Won't work for two reasons. The first is that AMD's L3 cache is segmented. Each CCD only gets exclusive access to its own slice. That means even the mighty EPYC will top out at 96 MB, for something like a RAM drive. Worse, if the thread that's running your benchmark gets migrated to another CCD, then your performance will drop because now it has to fault in the contents from the other CCD.
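bit_user's segmentation argument can be sketched with a small Python model using the Ryzen 9 7950X3D mentioned in the article: its 128MB of L3 is split between one CCD with 32MB of on-die cache plus a 64MB V-Cache layer, and a second CCD with 32MB and no V-Cache. The model assumes a single thread only benefits from the L3 on the CCD it runs on, and it ignores everything else competing for that cache; `Ccd`, `total_l3`, `single_thread_l3`, and `fits_in_one_ccd` are hypothetical names.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Ccd:
    base_l3_mib: int        # on-die L3 slice of this CCD
    vcache_layers: int = 0  # stacked 64 MiB SRAM layers (0 or 1 on shipping parts)

    @property
    def l3_mib(self) -> int:
        return self.base_l3_mib + 64 * self.vcache_layers

def total_l3(ccds: list[Ccd]) -> int:
    """Aggregate L3 across the package (the spec-sheet number)."""
    return sum(c.l3_mib for c in ccds)

def single_thread_l3(ccds: list[Ccd]) -> int:
    """L3 one thread can use if it stays on the largest CCD (assumption)."""
    return max(c.l3_mib for c in ccds)

def fits_in_one_ccd(volume_mib: int, ccds: list[Ccd]) -> bool:
    """Could a RAM disk of this size stay resident in a single CCD's L3?"""
    return volume_mib <= single_thread_l3(ccds)

# Ryzen 9 7950X3D: one V-Cache CCD (32 + 64 MiB) and one standard CCD (32 MiB).
r9_7950x3d = [Ccd(32, 1), Ccd(32)]
print(total_l3(r9_7950x3d), single_thread_l3(r9_7950x3d))  # 128 96
print(fits_in_one_ccd(508, r9_7950x3d))                     # False for a 508MB volume
```

The same reasoning is why the spec-sheet total overstates what a single-threaded RAM disk benchmark can use: adding CCDs raises `total_l3`, but `single_thread_l3` only grows if an individual CCD gets more cache.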

Finally - and this gets to the heart of how useless the trick actually is - your system must be completely idle. Some other background process spinning up can blow your cache contents, forcing the benchmark to re-fetch it from DRAM. That's why the benchmark is so temperamental and must be run multiple times to get a good result. So, good job taking a 96-core/192-thread CPU and turning it into a single-core, single-thread one!

From the article: "we think there's potential with a 3D V-Cache and a RAM disk. It's a clever way of making old-school and new technologies gel together. SSDs have made RAM disks obsolete, but maybe massive slabs of 3D V-Cache can revive them."

Nope. Nothing you do on your PC is that I/O-bound, especially not if it fits in such a small amount of space.

RAM disks started to go obsolete even before the SSD era, once operating systems began doing sophisticated caching and read-ahead optimizations. Those are still at play now, but you don't notice them as much because the gap between cached reads and reading from storage is much smaller.
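The caching and read-ahead bit_user mentions can be illustrated with a toy page cache in Python. On a miss, the hypothetical `PageCache` fetches the requested block plus a fixed number of following blocks, so a sequential scan makes far fewer trips to storage, and a second pass is served entirely from RAM. The fixed window and the absence of eviction are simplifying assumptions; real operating systems typically size the read-ahead window adaptively and reclaim pages under memory pressure.

```python
class PageCache:
    """Toy page cache with a fixed read-ahead window and no eviction."""

    def __init__(self, readahead: int) -> None:
        self.readahead = readahead   # extra blocks fetched after a miss
        self.cached: set[int] = set()
        self.device_reads = 0        # trips to the storage device

    def read(self, block: int) -> None:
        if block in self.cached:
            return                   # served from RAM
        self.device_reads += 1       # one larger request to the device
        self.cached.update(range(block, block + 1 + self.readahead))

def scan(cache: PageCache, n_blocks: int) -> int:
    """Read blocks 0..n_blocks-1 in order; return device reads this pass."""
    before = cache.device_reads
    for b in range(n_blocks):
        cache.read(b)
    return cache.device_reads - before

print(scan(PageCache(readahead=0), 1024))  # 1024: every block goes to the device
ra = PageCache(readahead=31)
print(scan(ra, 1024), scan(ra, 1024))      # 32 0: second pass comes from RAM
```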

From the article: "Just think of the possibilities if AMD embraced the idea and put out a fail-safe implementation where consumers can turn the 3D V-Cache into a RAM disk with a flip of a switch."

Absolutely terrible idea! You're saying you want to reserve a huge chunk of your L3 cache for storage? The ratio of memory reads/writes to storage reads/writes is many orders of magnitude higher. This would absolutely tank performance.