Intel Kills Itanium, Last Chip Will Ship in 2021

On Thursday, Intel notified its partners that it's going to discontinue the Itanium 9700-series Kittson processors, which were the last processors on the market to use Intel’s Itanium architecture. Intel plans to stop shipping the Itanium-based Kittson processors by mid-2021.

The impact on customers is expected to be minimal, as HP Enterprise (HPE) is the only Itanium customer left. HPE is expected to support its Itanium-based servers until late 2025 and to place its final Itanium processor orders by January 30, 2020. Intel will then ship the last Itanium CPU by July 29, 2021.

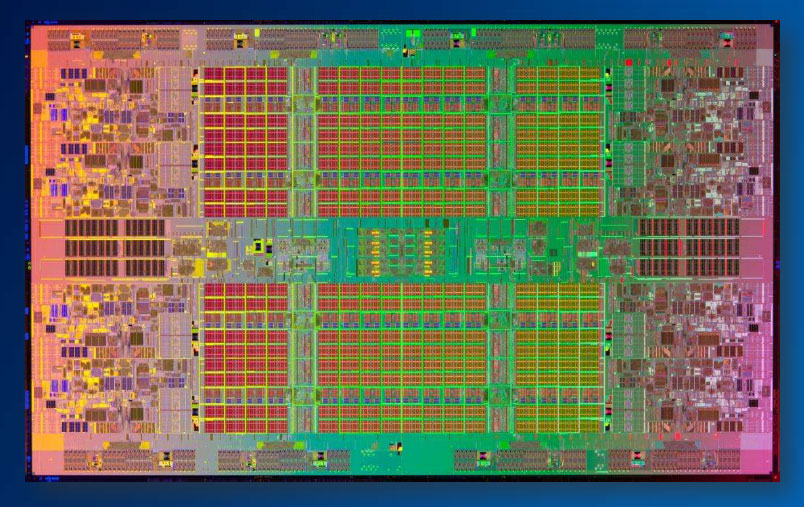

Intel introduced the Kittson processors in 2017, while also announcing that they would be the last processors the company would make using the IA-64 architecture. Kittson wasn’t a brand-new microarchitecture design, but rather a higher-clocked version of the 2012 9500-series Poulson microarchitecture. Poulson was supposed to be a performance class above Intel's Xeon processors, featuring a 12-instruction-per-cycle issue width, four-way Hyper-Threading and multiple reliability, availability and serviceability (RAS) capabilities.

Intel also hasn’t moved Itanium manufacturing to a new process node since 2010; the chips are still made on Intel’s 32nm planar process node.

Although the end-of-life for the Itanium chips has been planned since at least 2017, the fact that Hyper-Threading was found to be vulnerable to at least two attacks last year may also have played a role in Intel sunsetting its Hyper-Threading-heavy Itanium processors.

Itanium, the Future that Never Was

Itanium (built on a design approach called EPIC, for Explicitly Parallel Instruction Computing) was a 64-bit architecture initially developed by HP before Intel joined the effort. The tech was supposed to replace the 32-bit x86 architecture once operating systems and applications started to need 64-bit operations.

The first Itanium processors were supposed to ship in 1998, but despite the fact that Microsoft and other operating system vendors committed to supporting it, it turned out that Itanium’s very long instruction word (VLIW) architecture was too difficult to implement while maintaining the competitive prices and performance of other architectures, including the 32-bit x86 architecture.


The first Itanium chip was delayed to 2001 and failed to impress most potential customers, who stuck to their x86, Power and SPARC chips.

In 2003, AMD launched the 64-bit AMD64 instruction set architecture (ISA) extension to the x86 ISA, which was quickly adopted by the industry due to the easy migration path from the 32-bit x86 ISA. Under pressure from Microsoft, Intel also adopted the AMD64 ISA, and the rest is history: AMD64 became the de facto 64-bit ISA in the industry. Intel kept improving the Itanium architecture over the years, but at nothing like the rate of improvement we saw for x86 chips in the past two decades.

HP itself may have single-handedly kept Itanium alive in the time since the AMD64 ISA took over. Documents unsealed in a lawsuit between HP and Oracle revealed that HP agreed in 2008 to pay Intel $440 million (£336 million) to support the development of Itanium processors between 2009 and 2014.

HP and Intel later signed another contract promising Intel another $690 million (£528 million) for the development of Itanium processors until 2017, which is when the last Itanium processor was built.

Lucian Armasu is a Contributing Writer for Tom's Hardware US. He covers software news and the issues surrounding privacy and security.

-

jimmysmitty The biggest loss is that we never went to a pure 64bit uArch and are still tied down to the aging x86 design. While it's nice to have for migration, it doesn't help us move to a pure 64bit world. Many programs are still written in 32bit, which don't take advantage of the biggest thing 64bit gave us: more than 4GB of total system memory. -

joeblowsmynose (quoting jimmysmitty): The biggest loss is that we never went to a pure 64bit uArch and are still tied down to the aging x86 design. While it's nice to have for migration, it doesn't help us move to a pure 64bit world. Many programs are still written in 32bit, which don't take advantage of the biggest thing 64bit gave us: more than 4GB of total system memory.

If the main feature of a 64bit world is the ability to use more than 4gb of RAM (which I agree, it is), and many programs still don't require more than 4gb, and, if your program does need more than that, then programming it in 64 bit would be your choice (if HD caching can't be used due to performance restrictions), I am not sure where the loss really is ...

Would there be an advantage if we removed the 32bit instruction sets from everything? If so, where might we find that? -

richardvday That's not true.

The operating system vendors are almost unanimously killing off 32 bit versions of their OS anyway.

Just because an application is 32 bit does not limit the operating system to only 4gb.

Now, that 32-bit application is limited in how much memory it can access.

Most applications do not need that much memory anyway, and the applications that do are written in 64-bit so they can access the memory they need.

I'm not saying we should stick to 32 bit by any means. The move to 64-bit needs to continue, and by default most companies are writing their applications in 64-bit now anyway. -
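The 4GB figure in this exchange follows directly from pointer width. A minimal sketch, assuming a flat, byte-addressable address space in which every pointer value names one byte (the helper `addressable_bytes` is hypothetical, and real operating systems typically reserve part of this range, so a 32-bit process usually sees less):

```python
# Assumption: flat, byte-addressable memory; one pointer value per byte.
GIB = 2 ** 30  # bytes in one gibibyte


def addressable_bytes(pointer_bits: int) -> int:
    """Number of distinct byte addresses a pointer of this width can name."""
    return 2 ** pointer_bits


limit_32 = addressable_bytes(32)
limit_64 = addressable_bytes(64)

print(limit_32 // GIB)  # 4
print(limit_64 // GIB)  # 17179869184
```

This is a per-process limit on what a 32-bit application can address, which is why it says nothing about how much memory a 64-bit operating system itself can manage.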

jimmysmitty (quoting joeblowsmynose, who quoted jimmysmitty): The biggest loss is that we never went to a pure 64bit uArch and are still tied down to the aging x86 design. While it's nice to have for migration, it doesn't help us move to a pure 64bit world. Many programs are still written in 32bit, which don't take advantage of the biggest thing 64bit gave us: more than 4GB of total system memory.

If the main feature of a 64bit world is the ability to use more than 4gb of RAM (which I agree, it is), and many programs still don't require more than 4gb, and, if your program does need more than that, then programming it in 64 bit would be your choice (if HD caching can't be used due to performance restrictions), I am not sure where the loss really is ...

Would there be an advantage if we removed the 32bit instruction sets from everything? If so, where might we find that?

The main advantage is a clean uArch that doesn't rely on an older one to function. x86-64 relies on x86 code to run and even if all programs and OSes move to 64bit we will still be on x86-64. Still tied to an older standard.

richardvday said: That's not true.

The operating system vendors are almost unanimously killing off 32 bit versions of their OS anyway.

Just because an application is 32 bit does not limit the operating system to only 4gb.

Now, that 32-bit application is limited in how much memory it can access.

Most applications do not need that much memory anyway, and the applications that do are written in 64-bit so they can access the memory they need.

I'm not saying we should stick to 32 bit by any means. The move to 64-bit needs to continue, and by default most companies are writing their applications in 64-bit now anyway.

You can still get 32bit Windows 10 ISOs, but I was mainly talking about software. Windows 7 64bit was smoother and faster than its 32bit counterpart, much different from XP or Vista, where 64bit was still a mess. But it always makes me wonder: if an OS were written purely for a 64bit uArch, with no x86 32bit code to pull us down, how much better would it be? -

CerianK A few thoughts:

1. Although there are some 64-bit programs that require access to more than 4GB of RAM, few of those need access to more than 4GB all at once. Most could be rewritten to use the blazing-fast paging of a modern SSD, with no significant penalty if done properly. Back when IA64 came out, slow paging was a big impetus for expanding RAM addressing. Contrary to popular belief, almost all 64-bit dependent operations can be broken down into 32-bit operations with minimal performance degradation. Even 128-bit operations run extremely well on today's 64-bit CPUs (thanks to advances in both compilers and CPU micro-ops).

2. Although 64-bit OSes are getting better, there is still room for improvement. My colleagues are still amazed at how fast some of our old-and-tired 32-bit multi-threaded applications are when run under Windows 7's XP Mode. Microsoft only gave it 1 core to work with, and yet it is instantly responsive with most anything I click or throw at it (within reason). I wouldn't be surprised if one of the reasons that its free license on 7 Pro wasn't passed on to Win 8+ was that it was 'too good'. On the other hand, perhaps I'm the only one that has to wait 5 or 10 seconds for the Win 10 Start Menu to open on occasion, so having it open in 1/10th of a second in XP Mode sets an unreasonable expectation. -
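The claim in point 1, that 64-bit operations can be decomposed into 32-bit ones, can be illustrated with addition. This is only a sketch of the general carry-propagation idea, not anything taken from the comment itself; the function name `add64_via_32` and the constant `MASK32` are hypothetical, and Python integers stand in for 32-bit registers by masking every intermediate result:

```python
MASK32 = 0xFFFFFFFF  # keeps only the low 32 bits of a value


def add64_via_32(a: int, b: int) -> int:
    """Add two unsigned 64-bit values using only 32-bit-wide pieces.

    Assumption: a and b are in the range 0 .. 2**64 - 1. The result wraps
    modulo 2**64, as a 64-bit hardware register would.
    """
    # Split each operand into a low and a high 32-bit half.
    a_lo, a_hi = a & MASK32, (a >> 32) & MASK32
    b_lo, b_hi = b & MASK32, (b >> 32) & MASK32

    # Add the low halves; bit 32 of the sum is the carry into the high half.
    lo_sum = a_lo + b_lo
    carry = lo_sum >> 32
    lo = lo_sum & MASK32

    # Add the high halves plus the carry, discarding overflow past 64 bits.
    hi = (a_hi + b_hi + carry) & MASK32

    return (hi << 32) | lo


print(hex(add64_via_32(0xFFFFFFFF, 1)))  # 0x100000000
```

A 32-bit CPU does the same thing with an add followed by an add-with-carry, which is why addition decomposes cheaply; multiplication and division need more partial steps.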

lsorense (quoting jimmysmitty): The biggest loss is that we never went to a pure 64bit uArch and are still tied down to the aging x86 design. While it's nice to have for migration, it doesn't help us move to a pure 64bit world. Many programs are still written in 32bit, which don't take advantage of the biggest thing 64bit gave us: more than 4GB of total system memory.

We already had pure 64 bit architectures, like the Alpha. Of course Intel, as well as incompetent management at Digital, Compaq and HP, killed that off. It was certainly a better design than the Itanium. At least we got hyperthreading and point-to-point interconnects (like HyperTransport and QPI) out of the Alpha work, and Intel and AMD gained some good leftover engineers.

The end of the Itanium is a relief. About time. Good riddance. All it did was harm better architectures and divert resources from better things. -

sergeyn (quoting CerianK): A few thoughts:

1. ... Most could be rewritten to use the blazing-fast paging of a modern SSD, with no significant penalty if done properly. ....

I have an impression that you have no idea what you are talking about. -

Xajel (quoting jimmysmitty): The biggest loss is that we never went to a pure 64bit uArch and are still tied down to the aging x86 design. While it's nice to have for migration, it doesn't help us move to a pure 64bit world. Many programs are still written in 32bit, which don't take advantage of the biggest thing 64bit gave us: more than 4GB of total system memory.

Backward compatibility has its cost. Even today, you can't install Windows 98, for example, on a modern system; hell, some systems can't even start Windows XP or 2000. This is mainly due to the move from BIOS to UEFI, but even the CPUs are different now. Apple is leading the move in this regard: they stopped accepting 32bit iOS apps a while ago, Google followed them, and Apple now doesn't accept 32bit macOS apps either. They're forcing developers to move away from 32bit.

Both Intel and AMD slowly deprecate older instructions that are no longer required, then eventually remove them altogether. This happened before with 16bit and 32bit, starting from the OS (Windows) and then moving to the CPUs.

Any company can make a big move like this, pure 64bit CPU. But moving the whole world to it is very hard and costly, nearly impossible.

Itanium was good, but its performance wasn't enough to justify the move (cost, time and loss of backward compatibility). Its dependence on VLIW also made things harder compared to x86. x86 isn't just well known to developers, it's also easier to develop for and optimise, especially for the kind of software we're using.

Itanium ran x86/32bit code basically through emulation, which suffered a huge performance drop, to the point that it was slower than most much less expensive x86 systems. While Itanium was first meant for the enterprise market, that issue meant it would never find its way to the mainstream.

AMD64 paved the way not just for the mainstream market; it also prompted much of the enterprise/server market to move away from SPARC/POWER/Itanium to lower-cost, easier-to-develop-for x86 systems. Intel later adopted x86-64 with its own implementation (they called it Intel64), and the x86 market then exploded, even in specialised segments. -

lsorense (quoting sergeyn, who quoted CerianK): A few thoughts:

1. ... Most could be rewritten to use the blazing-fast paging of a modern SSD, with no significant penalty if done properly. ....

I have an impression that you have no idea what you are talking about.

I would certainly hate the idea of going back to every application doing its own overlays for code and data paging, as we used to do back in the DOS days. That was part of why people started using the 32 bit DOS extenders, so they could go to flat memory maps, use more memory and avoid that insanity.

And of course paging to an SSD constantly would be a great way to kill any SSD's lifespan. -

CerianK A few more thoughts, as I am an assembly language programmer, so my take on things might be a little different.

(Quoting lsorense, who quoted sergeyn, who quoted CerianK): A few thoughts:

1. ... Most could be rewritten to use the blazing-fast paging of a modern SSD, with no significant penalty if done properly. ....

I have an impression that you have no idea what you are talking about.

I would certainly hate the idea of going back to every application doing its own overlays for code and data paging, as we used to do back in the DOS days. That was part of why people started using the 32 bit DOS extenders, so they could go to flat memory maps, use more memory and avoid that insanity.

And of course paging to an SSD constantly would be a great way to kill any SSD's lifespan.

I was not suggesting to go back to 32-bit, but hinting at why IA-64 got it wrong (i.e. pressure to move forward due to slow paging, without consideration for existing code libraries). Extended/Expanded memory solutions were just a stop-gap measure that avoided the real issue with memory addressing. Aside from the memory addressing issue, extending 32-bit code to emulate 64-bits or above is tedious (and for more advanced projects requires degrees in both mathematics and computer science).

Paging to an SSD is certainly off-limits for write-intensive operations, therefore not a viable solution for certain classes of programs.

I am pleased with the current architectural state in terms of bringing programming back to the masses, but worry that there is not enough push in basic science education to address the potential future issue with a wide-spread need for quantum algorithm development (e.g. trying to learn a second spoken language after 5-8 years old is difficult, though not impossible, but usually is never as robust as having learned it much earlier).