Nvidia Presents Adaptive Temporal Anti-Aliasing Technology

Nvidia has developed a new anti-aliasing technique it dubbed Adaptive Temporal Anti-Aliasing (ATAA). ATAA builds upon the existing Temporal Anti-Aliasing (TAA) method, but kicks things up a notch by adding adaptive ray tracing rendering techniques to the mix.

Temporal Anti-Aliasing has become one of the more popular anti-aliasing options for gamers as it produces great results in most situations without imposing a heavy performance burden on their systems. But as with every anti-aliasing method, Temporal Anti-Aliasing is not free of drawbacks, and the principal issue is that it tends to add a considerable amount of blur to the scene. The blurriness is most noticeable when there is a lot of object motion on screen. However, Nvidia has found a solution to this problem - or so it claims.

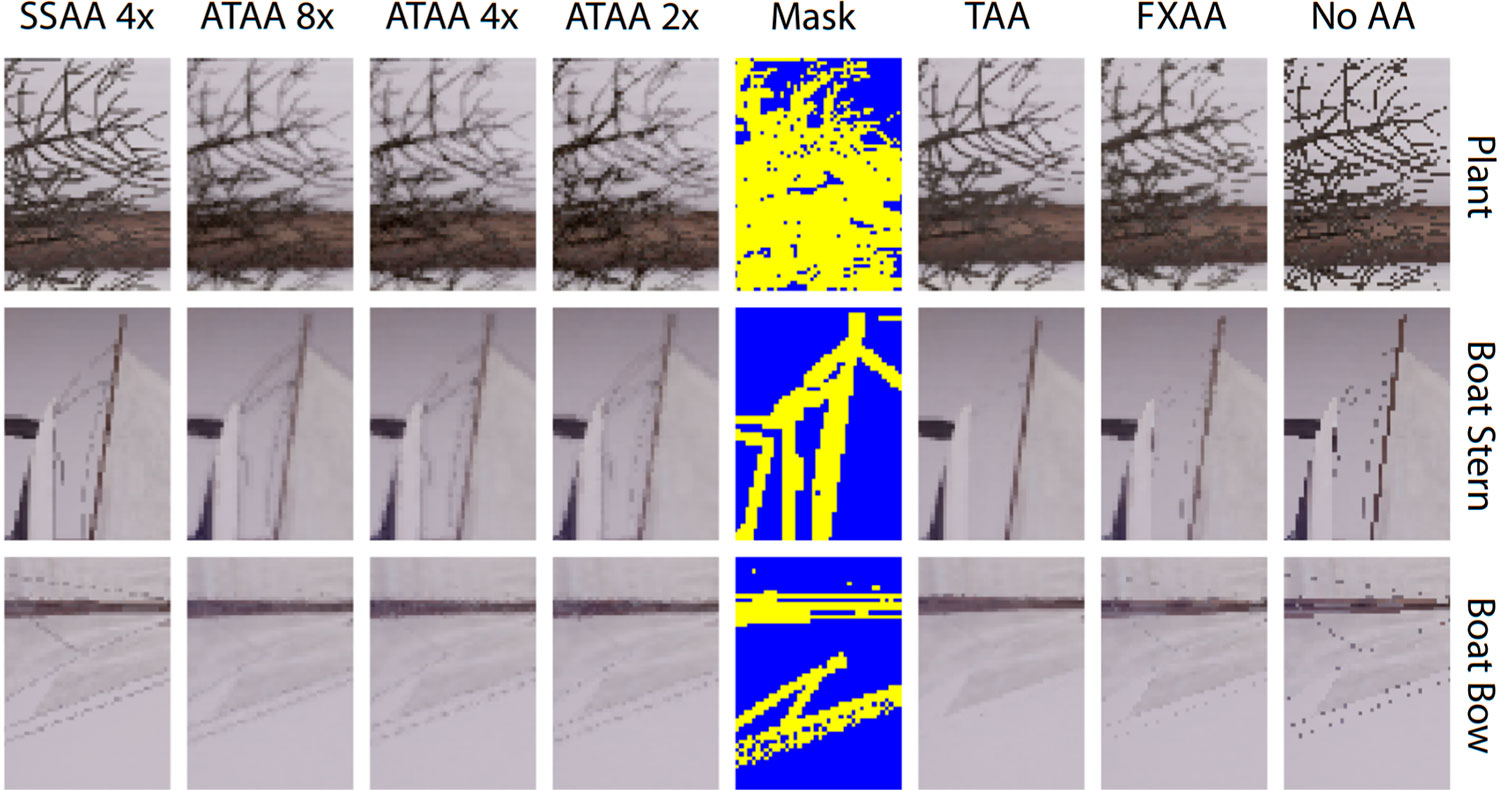

According to Nvidia's recently published document on Adaptive Temporal Anti-Aliasing, the new algorithm promises to remove the blurring and ghosting artifacts associated with the Temporal Anti-Aliasing technique and, at the same time, deliver a level of image quality close to 8x Super-Sampling Anti-Aliasing (SSAA). What's even more impressive is that it does so while staying within the 33ms frame budget that most games target (roughly 30 frames per second). The secret sauce in Nvidia's recipe is real-time adaptive sampling, made possible by combining ray tracing with rasterization. Microsoft's DirectX Raytracing (DXR) API and Nvidia RTX act as the cornerstones of Adaptive Temporal Anti-Aliasing.
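To make the idea of adaptive sampling concrete, here is a minimal Python sketch. It is not Nvidia's implementation. It assumes that a cheap one-sample-per-pixel image stands in for the rasterized TAA result, that a simple neighbor-contrast test stands in for whatever ATAA uses to pick problem pixels, and that a plain function `scene(x, y)` stands in for tracing a ray. All names (`edge_mask`, `supersample`, `adaptive_resolve`, `diagonal_edge`) and the threshold value are hypothetical.

```python
import random


def one_sample_render(scene, width, height):
    """Cheap pass: evaluate the scene once at each pixel centre."""
    return [[scene(x + 0.5, y + 0.5) for x in range(width)] for y in range(height)]


def edge_mask(image, threshold=0.25):
    """Flag pixels that differ sharply from their right or lower neighbour.

    This contrast test is an assumption made for illustration only.
    """
    h, w = len(image), len(image[0])
    mask = [[False] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            for dy, dx in ((0, 1), (1, 0)):
                ny, nx = y + dy, x + dx
                if ny < h and nx < w and abs(image[y][x] - image[ny][nx]) > threshold:
                    mask[y][x] = True
                    mask[ny][nx] = True
    return mask


def supersample(scene, x, y, samples, rng):
    """Average `samples` jittered evaluations of the scene inside pixel (x, y)."""
    total = 0.0
    for _ in range(samples):
        total += scene(x + rng.random(), y + rng.random())
    return total / samples


def adaptive_resolve(base, scene, samples=8, threshold=0.25, seed=0):
    """Re-sample only the flagged pixels; every other pixel keeps its cheap value."""
    rng = random.Random(seed)
    mask = edge_mask(base, threshold)
    out = [row[:] for row in base]
    refined = 0
    for y, row in enumerate(mask):
        for x, flagged in enumerate(row):
            if flagged:
                out[y][x] = supersample(scene, x, y, samples, rng)
                refined += 1
    return out, mask, refined


def diagonal_edge(sx, sy):
    """Hypothetical test scene: a hard diagonal edge between white and black."""
    return 1.0 if sx > sy else 0.0


if __name__ == "__main__":
    base = one_sample_render(diagonal_edge, 8, 8)
    out, mask, refined = adaptive_resolve(base, diagonal_edge, samples=8)
    print(f"re-sampled {refined} of {8 * 8} pixels")
```

On this 8 x 8 toy scene only the 15 pixels along the diagonal edge are re-sampled; the other 49 keep their cheap value, which is the point of spending the expensive samples adaptively rather than on the whole frame.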

Nvidia cooked up a quick demonstration in Unreal Engine 4 to show off the prowess of its Adaptive Temporal Anti-Aliasing technique. The scene is that of a modern house. Not much is known about the test system's specifications other than that it was powered by a $2,999 Nvidia Titan V graphics card. The system was running Windows 10 with the latest April 2018 Update (1803) and Nvidia's GeForce 398.11 WHQL graphics driver. Nvidia reported that ATAA ran in 18.4ms at 8x supersampling, 9.3ms at 4x supersampling, and 4.6ms at 2x supersampling for the sample image at a 1920 x 1080 resolution. The demonstration included the creation of the TAA result, the segmentation mask, and the adaptive ray tracing.
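For a sense of scale, the short Python sketch below sets the reported timings against the 33ms frame budget. It assumes the figures describe ATAA's share of the frame rather than the whole frame time, which the article does not spell out; the helper names `budget_share` and `cost_per_sample` are made up for this illustration.

```python
FRAME_BUDGET_MS = 33.0  # frame budget cited in the article (roughly 30 fps)

# ATAA timings reported for the 1920 x 1080 sample image on a Titan V,
# keyed by supersampling rate.
ATAA_COST_MS = {2: 4.6, 4: 9.3, 8: 18.4}


def budget_share(cost_ms, budget_ms=FRAME_BUDGET_MS):
    """Fraction of the frame budget consumed by a pass costing `cost_ms`."""
    return cost_ms / budget_ms


def cost_per_sample(costs):
    """Milliseconds per unit of supersampling rate, to check how cost scales."""
    return {rate: ms / rate for rate, ms in costs.items()}


if __name__ == "__main__":
    for rate, ms in sorted(ATAA_COST_MS.items()):
        left = FRAME_BUDGET_MS - ms
        print(f"{rate}x: {ms:4.1f} ms, {budget_share(ms):.0%} of budget, {left:.1f} ms left")
    print(cost_per_sample(ATAA_COST_MS))
```

Under that assumption, even the 8x setting uses a little over half of the budget, and the cost works out to roughly 2.3ms per unit of supersampling rate at every setting, so it scales close to linearly with the sample count.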

Unfortunately, it'll take some time before we see Adaptive Temporal Anti-Aliasing in games as it relies on Microsoft's DXR API, which is yet to be supported by mainstream gaming graphics cards. Nvidia has scheduled a talk about its new anti-aliasing technique at SIGGRAPH 2018, which will take place in Vancouver, British Columbia from August 12 to 16.

Zhiye Liu is a news editor, memory reviewer, and SSD tester at Tom’s Hardware. Although he loves everything that’s hardware, he has a soft spot for CPUs, GPUs, and RAM.

jimmysmitty https://blogs.msdn.microsoft.com/directx/2018/03/19/announcing-microsoft-directx-raytracing/

"What Hardware Will DXR Run On?

Developers can use currently in-market hardware to get started on DirectX Raytracing. There is also a fallback layer which will allow developers to start experimenting with DirectX Raytracing that does not require any specific hardware support. For hardware roadmap support for DirectX Raytracing, please contact hardware vendors directly for further details."

Per Microsoft it should be viable on current in-market hardware such as Pascal or Vega GPUs.

Verrin I don't know, all these new forms of AA still seem to suffer from the blurring effect. MSAA and SSAA seem to be dead techniques in modern titles, despite their superior quality. I understand that they're too expensive for most configurations, but I would still like the option, on the off-chance I have some kind of headroom for it (now or perhaps with future hardware).

Ultimately these days, I always opt to turn off any kind of AA altogether and just go for a resolution bump to help smooth out the jaggies. And if I were forced to play at lower resolutions, I would still pick the horror of no-AA over the vaseline of TAA and FXAA. That's how much I dislike the effect. ATAA doesn't seem much better judging from this demonstration.

Gillerer MSAA is unviable not because of the rendering cost - even if it is high - but because modern game titles (post DX9) employ deferred rendering techniques that cause MSAA to not work for most jaggies in the image.

Check Deferred shading#Disadvantages (on Wikipedia).

bigpinkdragon286 I can't help but be reminded of Matrox's 16-year-old Fragment Anti-Aliasing technology. Looks like somebody finally updated it to work within textures this time, but looking at the new mask images is like looking at an updated version of the old mask images.

Dosflores jimmysmitty said: "Per Microsoft it should be viable on current in-market hardware such as Pascal or Vega GPUs."

Where does Microsoft say that it should be viable on Pascal or Vega? Microsoft says you can use currently in-market hardware, which could mean the Titan V alone.

shpankey So AA techniques add input lag?? I guess I didn't know that. More reason to never ever use it ever again no matter what.

jimmysmitty Dosflores said: "Where does Microsoft say that it should be viable on Pascal or Vega? Microsoft says you can use currently in-market hardware, which could mean the Titan V alone."

Titan V is not a consumer grade gaming card. DXR is mainly a gaming technology. Therefore it is easy to assume that "currently in-market hardware" means current gaming hardware, Pascal and Vega.

Also, Volta has only one feature beyond Pascal in DX12_1: Tier 3 Conservative Rasterization, where Pascal supports Tier 2. That should have NOTHING to do with Ray Tracing, as Ray Tracing and Rasterization are two different methods of processing models.

https://www.pcper.com/news/Graphics-Cards/NVIDIA-RTX-Technology-Accelerates-Ray-Tracing-Microsoft-DirectX-Raytracing-API

"DXR will still run on other GPUS from NVIDIA that aren’t utilizing the Volta architecture. Microsoft says that any board that can support DX12 Compute will be able to run the new API. But NVIDIA did point out that in its mind, even with a high-end SKU like the GTX 1080 Ti, the ray tracing performance will limit the ability to integrate ray tracing features and enhancements in real-time game engines in the immediate timeframe. It’s not to say it is impossible, or that some engine devs might spend the time to build something unique, but it is interesting to hear NVIDIA infer that only future products will benefit from ray tracing in games."

Microsoft states any cards that support DX12 Compute will run DXR. nVidia only states that the Titan V will be the best performing GPU that is currently out (until of course the 11 series is announced) but that anything else will still be capable of running it.

Is that a good enough quote?

Dosflores jimmysmitty said: "Is that a good enough quote?"

Not really.

Microsoft talks about "specific hardware support" and about "hardware roadmap support", which makes it easy to assume that current gaming hardware isn't viable for it. There's a great difference between "able to run the new API" and being viable. Intel integrated GPUs are also able to run the DX12 API, but I wouldn't call them viable for gaming.

jimmysmitty I was just correcting an error in the article. The article stated that there was no support for DXR in current hardware. That is false. Microsoft claims that DXR will be supported by any GPU that supports DX12 Compute (e.g. Pascal, Vega, GCN 5, Maxwell, etc.).

As for Ray Tracing, it has been supported for quite a while, since before Vega and Pascal. The issue is that it's a very resource-intensive method, which is why no games actually utilize it fully. Some do use it, but as a mix, with Ray Tracing being utilized for some things and rasterization for others.

And yes an Intel IGP might support it. They actually support most of DX12_1. However very few people will ever game on an Intel or any IGP.

So again DXR is currently supported by Pascal and Vega.