How Nvidia's NVLink Boosts GPU Performance

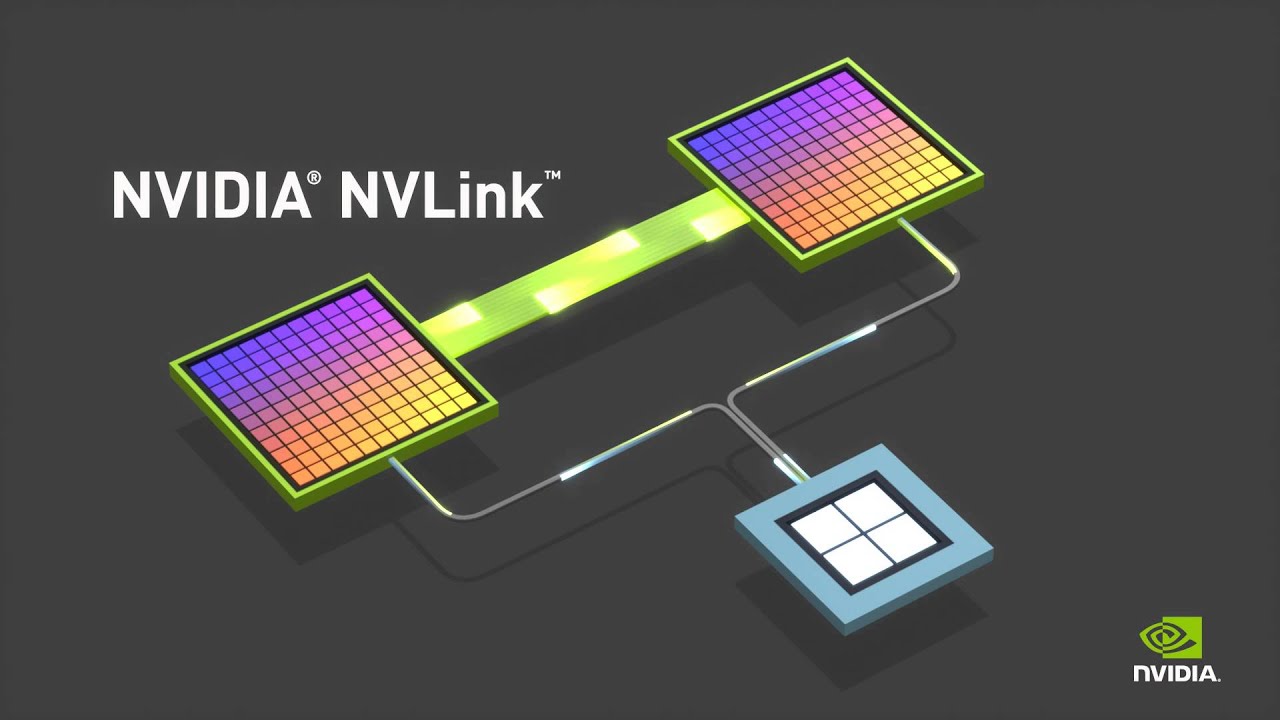

NVLink is a new feature for Nvidia GPUs that aims to drastically improve performance by increasing the total bandwidth between the GPU and other parts of the system.

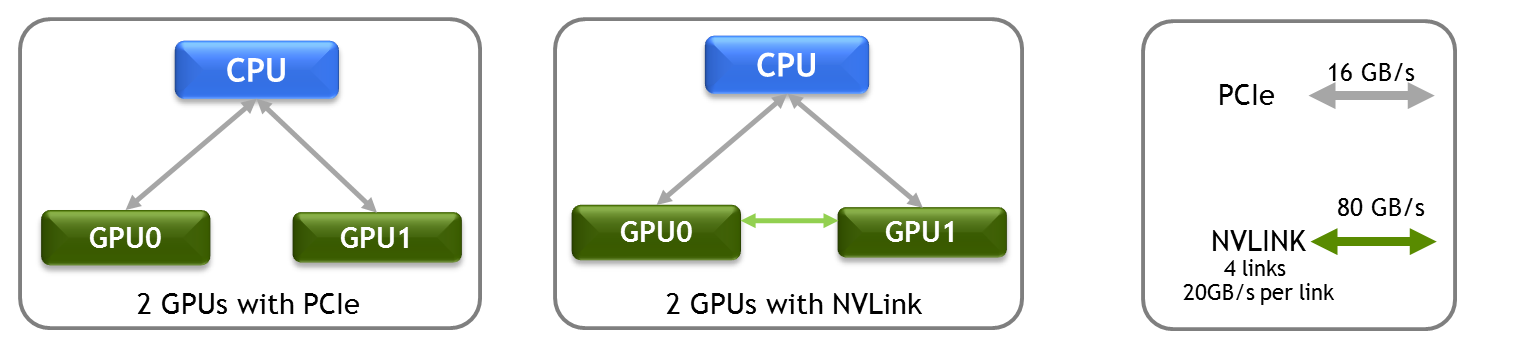

In modern PCs, GPUs and numerous other devices connect to the CPU or the motherboard's chipset over PCI-E lanes. For some GPUs, the available PCI-E lanes provide enough bandwidth that no bottleneck occurs, but for high-end GPUs and multi-GPU setups, the number of PCI-E lanes and the total bandwidth available cannot meet the needs of the GPU(s), which can cause a bottleneck.

In an attempt to improve this situation, some motherboard manufacturers opt to use PLX chips, which can help make better use of the bandwidth from the PCI-E lanes coming from the CPU, but the overall bandwidth does not actually increase. Nvidia's solution to this problem is called NVLink.

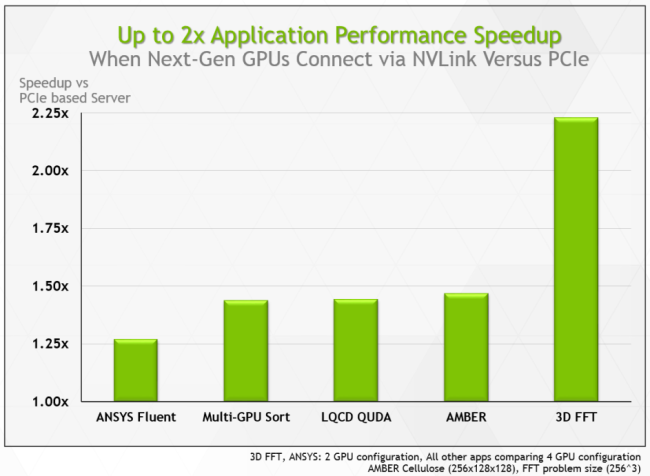

According to Nvidia, NVLink is the world's first high-speed interconnect technology for GPUs, and it allows data to be transferred between the GPU and CPU five to 12 times faster than PCI-E. Nvidia also claimed that application performance using NVLink can be up to twice as fast, relative to PCI-E.

Programs that use the Fast Fourier Transform (FFT) algorithm, which is heavily used in seismic processing, signal processing, image processing and solving partial differential equations, see the greatest performance increase. These types of applications commonly run on servers and are typically bottlenecked by the PCI-E bus.

Other applications used in various fields of research see performance increases, too. According to Nvidia, AMBER, an application used to study the behavior of matter by simulating molecular structures, gains up to 50 percent in performance using NVLink.

When two GPUs are utilized inside of the same system, they can be joined by four NVLink links, which can provide 20 GB/s transfer per link, totaling 80 GB/s transfer between the two cards. Because the cards no longer need to communicate using some of the scarce PCI-E bandwidth, this frees up additional bandwidth for the CPU to send data to the GPUs.
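As a rough illustration of the arithmetic above, the following Python sketch adds up the quoted NVLink figures and compares them with a PCI-E 3.0 x16 slot. The link count and the 20 GB/s per-link rate come from the article; the PCI-E 3.0 numbers (8 GT/s per lane, 128b/130b encoding) are an assumption brought in for comparison, and the function names are hypothetical. The article does not say whether the NVLink figure is per direction, so treat the ratio as a ballpark only.

```python
# Figures quoted in the article: four NVLink links at 20 GB/s each.
NVLINK_LINKS = 4
NVLINK_GB_PER_S_PER_LINK = 20.0

# Assumption (not from the article): PCI-E 3.0 signals at 8 GT/s per lane
# and uses 128b/130b encoding, i.e. roughly 0.985 GB/s per lane per direction.
PCIE3_GT_PER_S = 8.0
PCIE3_ENCODING_EFFICIENCY = 128 / 130


def nvlink_total_gb_per_s(links=NVLINK_LINKS, per_link=NVLINK_GB_PER_S_PER_LINK):
    """Aggregate GPU-to-GPU bandwidth across all NVLink links."""
    return links * per_link


def pcie3_gb_per_s(lanes):
    """Approximate one-direction PCI-E 3.0 bandwidth for a given lane count."""
    return lanes * PCIE3_GT_PER_S * PCIE3_ENCODING_EFFICIENCY / 8  # bits -> bytes


def transfer_seconds(size_gb, bandwidth_gb_per_s):
    """Idealized time to move size_gb of data, ignoring latency and overhead."""
    return size_gb / bandwidth_gb_per_s


if __name__ == "__main__":
    nvlink = nvlink_total_gb_per_s()
    pcie_x16 = pcie3_gb_per_s(16)
    print(f"NVLink, 4 links: {nvlink:.1f} GB/s")
    print(f"PCI-E 3.0 x16:   {pcie_x16:.2f} GB/s")
    print(f"Ratio:           {nvlink / pcie_x16:.1f}x")
    print(f"16 GB over NVLink: {transfer_seconds(16, nvlink):.2f} s")
    print(f"16 GB over PCI-E:  {transfer_seconds(16, pcie_x16):.2f} s")
```

Under these assumptions the four links work out to roughly five times the bandwidth of a single PCI-E 3.0 x16 slot, which lines up with the low end of the range Nvidia quotes.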


Nvidia claimed that IBM is currently integrating NVLink into future POWER CPUs, and the U.S. Department of Energy announced that it will use NVLink in its next flagship supercomputer.

Follow Michael Justin Allen Sexton @LordLao74. Follow us @tomshardware, on Facebook and on Google+.


digitalvampire This is really impressive and will be great for HPC application developers ... as long as it's not locked down for CUDA only. There have been enough devs (especially in academia) already moving away from CUDA to OpenCL due to vendor lock-in. I'd hate to see a great hardware innovation largely ignored because of this, especially with NVidia's great GPUs.

gamebrigada This doesn't make any sense. PCIE has been giving us plenty of bandwidth, it's always stayed ahead of actual needed performance. It's also a tried and true fact that 16 lanes of the thing are unnecessary for today's graphics cards. The performance difference between using 16 and 4 lanes is only marginal. The difference between x8 and x16 is pretty much zero. In fact some tested higher in x8 but within margin of error. This is why hooking up your graphics card over Thunderbolt is a viable idea.

oczdude8 gamebrigada said: "This doesn't make any sense. PCIE has been giving us plenty of bandwidth, it's always stayed ahead of actual needed performance. It's also a tried and true fact that 16 lanes of the thing are unnecessary for today's graphics cards. The performance difference between using 16 and 4 lanes is only marginal. The difference between x8 and x16 is pretty much zero. In fact some tested higher in x8 but within margin of error. This is why hooking up your graphics card over Thunderbolt is a viable idea."

You are assuming the only use GPUs have is for gaming. That's not true. The article CLEARLY states some of the applications that do benefit from this technology. GPUs are very good at computations, much much faster than CPUs.

jasonelmore Haswell and Broadwell only have 16 PCI-E 3.0 lanes. That's good for two 980s in SLI, with each card running in x8 instead of x16.

When you add the third card, you're out of bandwidth. Or if you use any sort of NVMe PCI-E SSD like M.2 (which uses 4 PCI-E 3.0 lanes).

That's why the big rigs use Haswell-E. Those 6 and 8 core Intel CPUs have 40 PCI-E 3.0 lanes.

That's what separates the big boys from the little boys. The number of PCI-E lanes.

mmaatt747 gamebrigada said: "This doesn't make any sense. PCIE has been giving us plenty of bandwidth, it's always stayed ahead of actual needed performance. It's also a tried and true fact that 16 lanes of the thing are unnecessary for today's graphics cards. The performance difference between using 16 and 4 lanes is only marginal. The difference between x8 and x16 is pretty much zero. In fact some tested higher in x8 but within margin of error. This is why hooking up your graphics card over Thunderbolt is a viable idea."

Forget about GTX gaming cards. Workstation cards (Quadros) can benefit from this technology.


Shankovich This is more or less something for professionals. I have to say though that nVidia's use in Fluent is pretty bad. It only recently got implemented, but just for radiation models. Most of us use Fluent for fluid dynamics on top of heat transfer so....yea. Would like to see actual GPGPU use in Fluent soon. (To my credit, I did my thesis on wing design using a K5000).

Also, what is NV Link physically? Is it a bridge wire, chip? I don't want a g-sync style premium on gaming boards if this comes to that level....

mmaatt747 Shankovich said: "This is more or less something for professionals. I have to say though that nVidia's use in Fluent is pretty bad. It only recently got implemented, but just for radiation models. Most of us use Fluent for fluid dynamics on top of heat transfer so....yea. Would like to see actual GPGPU use in Fluent soon. (To my credit, I did my thesis on wing design using a K5000).

Also, what is NV Link physically? Is it a bridge wire, chip? I don't want a g-sync style premium on gaming boards if this comes to that level...."

Isn't the premium paid for g-sync at the monitor level? But anyway you bring up a good point. I guarantee you there will be a premium for this. Seems like it will be at the motherboard level.

paulbatzing According to the Anandtech article about the announcement, it will be a mezzanine connector, so you will have to lay the GPU down on the motherboard, at least to use it for GPU-CPU communication. If they go that way, they might as well go for GPU sockets and remove the external card stuff... As has been said before: this will not come to the consumer market; at best, a new standard will emerge from this at some point in the future.

A_J_S_B gamebrigada said: "This doesn't make any sense. PCIE has been giving us plenty of bandwidth, it's always stayed ahead of actual needed performance. It's also a tried and true fact that 16 lanes of the thing are unnecessary for today's graphics cards. The performance difference between using 16 and 4 lanes is only marginal. The difference between x8 and x16 is pretty much zero. In fact some tested higher in x8 but within margin of error. This is why hooking up your graphics card over Thunderbolt is a viable idea."

oczdude8 said: "You are assuming the only use GPUs have is for gaming. That's not true. The article CLEARLY states some of the applications that do benefit from this technology. GPUs are very good at computations, much much faster than CPUs."

You are correct but so is GameBrigada...for the majority of PC users, it's useless tech...and proprietary, which makes it even worse.


mapesdhs Blimey A_J_S_B, what happened to your quoting? :D

Re the article, blows my mind how many people here read this stuff yet seem able to view this sort of tech only from a gamer perspective. Kinda bizarre, gaming isn't remotely the cutting edge of GPU tech, never has been. It can feel like it to those who buy costly GTXs, but in reality the real cutting edge is in HTPC, defense imaging, etc. SGI's Group Station was doing GPU stuff 20 years ago which is still not present in the consumer market (unless that is anyone here knows of a PC that can load and display a 67GByte 2D image in less than 2 seconds).

Ian.