Intel's Workstation Refresh Chips Brace For Threadripper 7000

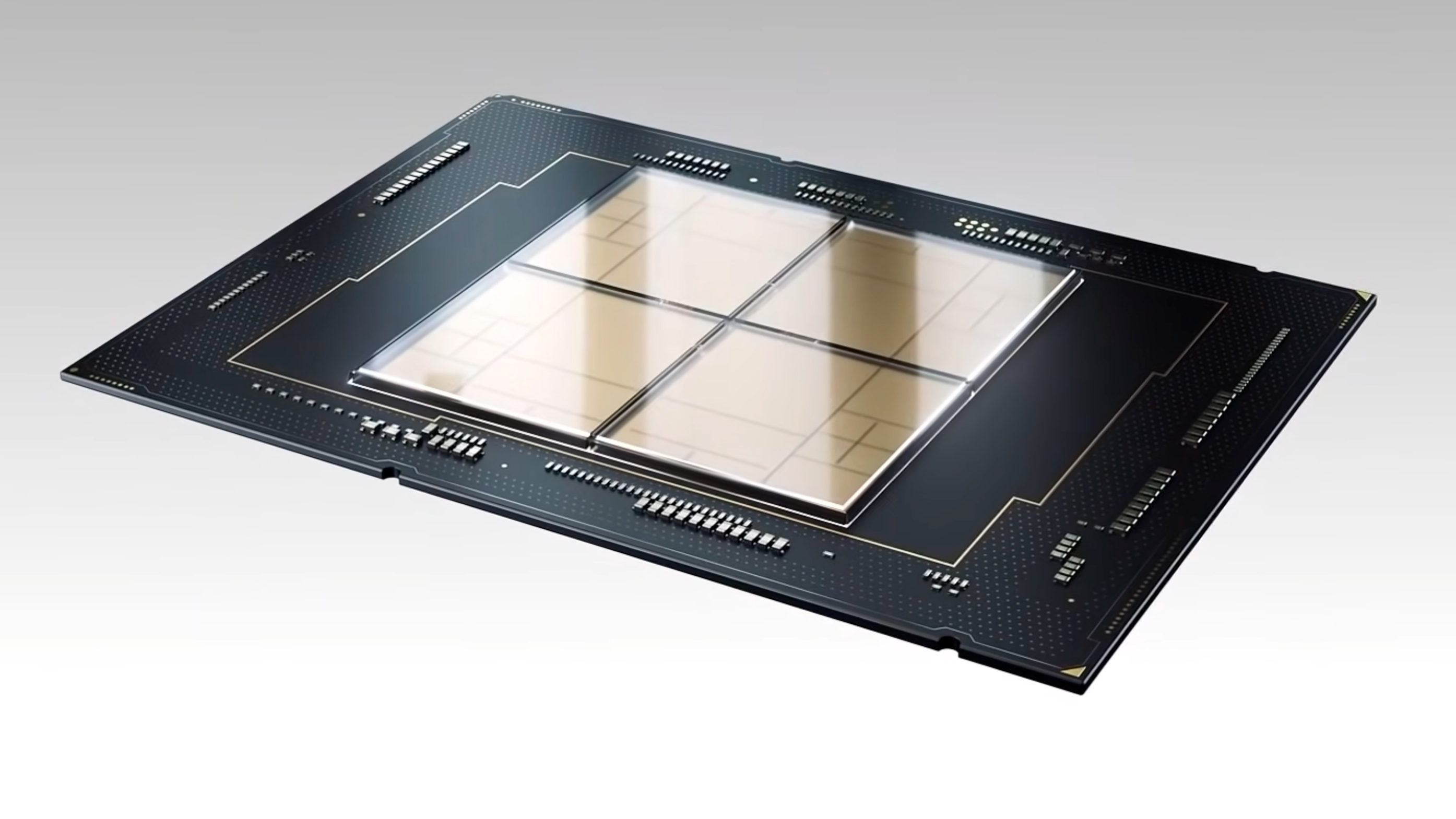

The lineup for Intel's upcoming Xeon W-2500 series has leaked, and ostensibly, it's largely a refresh of the current-generation W-2400 series based on Sapphire Rapids, albeit with a few extra cores enabled. This is apparently the company's response to AMD's upcoming Threadripper 7000 workstation CPUs, which are certainly going to be powerhouses.

The leak comes courtesy of Yuuki Ans on X (formerly Twitter), and it details the full lineup for the W-2500 series. It seems that every SKU on the list has two more cores, more cache, and higher clock speeds than its W-2400 counterpart. These CPUs seem to still only have four memory channels and 64 PCIe 5.0 lanes, however.

This is allegedly all Intel is planning for Xeon W in the near future, as the leaker said they had no knowledge of a hypothetical W-3500 refresh, which they theorized either doesn't exist or is delayed. The leaker also claimed Emerald Rapids (which is to Sapphire Rapids what Raptor Lake was to Alder Lake) may not come to Xeon W at all. Both claims stand in contrast to an earlier leak that projected a W-2500 and W-3500 series based on Emerald Rapids.

Performance-wise, this doesn't seem to be a huge overhaul for Intel. The fastest member of the W-3400 series is the w9-3495X, and it offers just 56 cores compared to the Threadripper 7980X's allotment of 64 cores. The Threadripper Pro 7995WX comes in at a whopping 96 cores, though it commands a much higher price than both the 7980X and w9-3495X. The leaked w7-2595X with its 26 cores can, at best, compete with the 24-core 7960X.

However, Intel's strategy here does seem sound because the W-2500 series ducks beneath Threadripper 7000 instead of trying to compete directly with it. Threadripper 7000 starts at $1,499 with the 7960X, while the W-2400's cheapest CPU is $359. The W-2500 could even come with a price cut to bring the w7-2595X in line with the 7960X. Plus, Intel has AMD beat on PCIe 5.0 lanes, as the non-Pro Threadrippers offer only 48 lanes, while even the lowest-end W-2400 (and now W-2500) CPUs come with 64.

Intel's return to HEDT happened just under a year ago, and it seems Xeon W is once again in dire straits. Ideally, Intel would have some CPU it could throw out to counter Threadripper 7000, but it doesn't seem that CPU exists yet. At the very least, Intel can focus on the low-end segment of HEDT CPUs, which AMD doesn't really compete in at the moment.


Matthew Connatser is a freelancing writer for Tom's Hardware US. He writes articles about CPUs, GPUs, SSDs, and computers in general.


thestryker

I was hoping some of the higher cache seen in the 5th gen server parts would propagate down, but it seems that isn't the case. These CPUs' logical viability will definitely come down to where Intel is willing to price them. I know I'd consider the lowest unlocked one if it was going to cost $700-750.

bit_user

> thestryker said: I know I'd consider the lowest unlocked one if it was going to cost $700-750.

Yeah, the 14-core w5-2555X is somewhat of a natural choice, given that it's the first one which can reach 4.8 GHz.

To justify that, you should use AVX-512 or need the PCIe lanes. Otherwise, an i9-13900K or i9-14900K is probably of comparable performance.

thestryker

> bit_user said: Yeah, the 14-core w5-2555X is somewhat of a natural choice, given that it's the first one which can reach 4.8 GHz.
>
> To justify that, you should use AVX-512 or need the PCIe lanes. Otherwise, an i9-13900K or i9-14900K is probably of comparable performance.

I mostly just want the PCIe lanes (with proper bifurcation). I tend to sit on my PCs for as long as they work for me, so I'm willing to spend more, just not as much as current pricing demands. That being said, I've already kicked the can down the road on upgrading multiple times, so as long as my current system works I'm not averse to waiting.

Will also be waiting to see what the overclocks look like, as I wouldn't touch it if I couldn't get at least 2 cores to 5.5 GHz.

P.Amini

I have a question (in fact, it's been on my mind for many years!). Why don't high-end mainstream CPUs (for example, i9s) support quad-channel memory instead of dual-channel?

Enthusiasts spend a lot of money on the CPU and motherboard, so they wouldn't mind spending a bit more on four memory modules instead of two to gain performance.

thestryker

> P.Amini said: I have a question (in fact, it's been on my mind for many years!). Why don't high-end mainstream CPUs (for example, i9s) support quad-channel memory instead of dual-channel?

For the most part, desktop CPUs aren't memory bandwidth limited (now that we have DDR5), though moving to quad channel across the board would be better for consumers (IGP memory bandwidth, and potentially being able to optimize latency without losing bandwidth). This does make boards more expensive to produce and adds memory controller area to the CPU, which is likely the reason why it has never happened (keep in mind even Apple's base M series is 128-bit).
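To put the dual-channel versus quad-channel question in numbers, here is a minimal sketch of the usual peak-bandwidth arithmetic. It assumes a conventional 64-bit data width per channel (so dual channel is the 128-bit bus mentioned above) and uses DDR5-5600 purely as an illustrative speed. The function name and its parameters are made up for this example, and real-world throughput will be lower than these theoretical peaks.

```python
def peak_bandwidth_gbs(channels: int, transfer_rate_mts: int,
                       channel_width_bits: int = 64) -> float:
    """Theoretical peak memory bandwidth in GB/s (decimal gigabytes).

    Assumption: each channel is 64 bits wide, so two channels form a
    128-bit bus and four channels form a 256-bit bus.
    """
    bytes_per_transfer = channels * channel_width_bits / 8
    # MT/s * bytes per transfer = MB/s; divide by 1000 for GB/s.
    return bytes_per_transfer * transfer_rate_mts / 1000


# DDR5-5600 is an illustrative speed, not a claim about any specific SKU.
dual = peak_bandwidth_gbs(channels=2, transfer_rate_mts=5600)
quad = peak_bandwidth_gbs(channels=4, transfer_rate_mts=5600)

print(f"Dual channel: {dual:.1f} GB/s")
print(f"Quad channel: {quad:.1f} GB/s")
```

Doubling the channel count doubles the theoretical peak, but that only helps workloads that are actually limited by bandwidth.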

bit_user

> thestryker said: For the most part desktop CPUs aren't memory bandwidth limited (now that we have DDR5)

Hmmm... the best way to test this would be to look at memory speed scaling benchmarks. Memory latency doesn't change much, with respect to speed. The number of nanoseconds is generally about the same, making it a pretty good test for bandwidth bottlenecks.

After a few minutes of searching, here's the best example of DDR5 scaling I found:

Source: https://www.pugetsystems.com/labs/articles/impact-of-ddr5-speed-on-content-creation-performance-2023-update/

That shows a 13.5% advantage provided by using 45.5% faster memory, on an i9-13900K. It's a pretty clear indication memory can be a bottleneck. I don't know if that benchmark is fully multi-threaded, but if it's indeed running 32 threads, then half of those threads will be at a competitive disadvantage, since the E-core clusters have only a single bus stop for each 4 E-cores. Therefore, perhaps a better interconnect topology would show even better scaling on the same workload.
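The scaling figures above can be sanity-checked with a few lines of arithmetic. This sketch assumes the "45.5% faster memory" refers to the step from 4400 to 6400, which are the lowest and highest speeds discussed in this thread. The 13.5% figure is taken directly from the comment, not recomputed from the benchmark data, and the helper name is hypothetical.

```python
def percent_gain(baseline: float, improved: float) -> float:
    """Relative improvement of `improved` over `baseline`, in percent."""
    return (improved - baseline) / baseline * 100


# Assumption: the endpoints of the comparison are 4400 and 6400.
memory_gain = percent_gain(4400, 6400)

# Quoted from the comment above; not derived here.
performance_gain = 13.5

# Fraction of the extra memory speed that shows up as performance.
scaling_efficiency = performance_gain / memory_gain

print(f"Memory speed gain:  {memory_gain:.1f}%")
print(f"Performance gain:   {performance_gain:.1f}%")
print(f"Scaling efficiency: {scaling_efficiency:.2f}")
```

An efficiency well below 1.0 suggests memory is one bottleneck among several rather than the dominant limit, which is consistent with the hedged reading in the comment.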

> thestryker said: moving to quad channel across the board would be better for consumers (IGP memory bandwidth, and potentially being able to optimize latency without losing bandwidth). This does cost more to make boards wise and adds memory controller space to the CPU which is likely the reason why it has never happened

@P.Amini, Intel used to have a HEDT tier of non-Xeon processors, but that's now gone. As of today, stepping up to a quad-channel configuration is possible with the Xeon W-2400 series, but it's too expensive for the sake of memory bandwidth alone.

thestryker

> bit_user said: Hmmm... the best way to test this would be to look at memory speed scaling benchmarks. Memory latency doesn't change much, with respect to speed. The number of nanoseconds is generally about the same, making it a pretty good test for bandwidth bottlenecks.
>
> That shows a 13.5% advantage provided by using 45.5% faster memory, on an i9-13900K. It's a pretty clear indication memory can be a bottleneck. I don't know if that benchmark is fully multi-threaded, but if it's indeed running 32 threads, then half of those threads will be at a competitive disadvantage, since the E-core clusters have only a single bus stop for each 4 E-cores. Therefore, perhaps a better interconnect topology would show even better scaling on the same workload.

It's a well multithreaded benchmark, and you can really take all of the memory up until 6000 together, as they're running JEDEC B timings. Subtimings are of course a question, but I have zero clue about those, as I'm not going to try to dive through memory specs. What I'd mostly like to know with that graph is why the jump from 5600 to 6000 is higher than the one from 4400 to 5600.

Most of the other tests are all over the place, which is unfortunately about what I've come to expect from memory on Intel Golden Cove platforms. The only time I've seen memory consistently at the top was the memory scaling video HUB did, and they got optimal Intel timings and subtimings from Buildzoid. It seems like some of the subtimings really matter, which wasn't so much the case prior to DDR5.

bit_user

> thestryker said: What I'd mostly like to know with that graph is about the jump from 5600 to 6000 being higher than 4400 to 5600.

Here's the CAS time, converted from cycles to nanoseconds:

| Speed (MHz) | CAS (cycles) | CAS (ns) |
|---|---|---|
| 4400 | 36 | 8.18 |
| 4800 | 40 | 8.33 |
| 5200 | 42 | 8.08 |
| 5600 | 46 | 8.21 |
| 6000 | 50 | 8.33 |
| 6400 | 32 | 5.00 |

That value for 6400 is the outlier, but it arose from enabling XMP. What if they messed up and accidentally enabled XMP for 6000, as well? It seems plausible, given 5600 is the highest specified speed of the Raptor Lake CPU they used. So, once they push above that, perhaps the BIOS went into some kind of auto-overclocking mode.
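The cycles-to-nanoseconds conversion behind the CAS table can be reproduced with a short sketch. The table's values correspond to dividing the cycle count by the listed speed treated as a clock in MHz. One caveat, flagged here as an editorial assumption: if the speed column is really a DDR transfer rate in MT/s, the memory clock is half that number and every latency doubles, although the relative comparison between rows stays the same. The `transfers_per_clock` parameter is a hypothetical knob added to show both readings.

```python
# (speed, CAS cycles) pairs copied from the table above.
TIMINGS = [(4400, 36), (4800, 40), (5200, 42),
           (5600, 46), (6000, 50), (6400, 32)]


def cas_ns(speed: int, cycles: int, transfers_per_clock: int = 1) -> float:
    """Convert CAS latency from clock cycles to nanoseconds.

    transfers_per_clock=1 treats `speed` as the clock in MHz, which is
    what reproduces the table. transfers_per_clock=2 treats `speed` as
    a double-data-rate transfer rate, halving the clock.
    """
    clock_mhz = speed / transfers_per_clock
    return cycles / clock_mhz * 1000


# Values as listed in the table.
table_values = [round(cas_ns(s, c), 2) for s, c in TIMINGS]

# Same timings if the speed column is read as MT/s instead.
ddr_values = [round(cas_ns(s, c, transfers_per_clock=2), 2) for s, c in TIMINGS]

for (speed, cycles), t, d in zip(TIMINGS, table_values, ddr_values):
    print(f"{speed}: CL{cycles} -> {t} ns (as MHz), {d} ns (as MT/s)")
```

Either way, the 6400 row stands out as the only one with a markedly lower latency, which is the point the comment is making.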