Next-gen GPUs likely arriving in late 2024 with GDDR7 memory: Samsung and SK hynix showed chips at GTC

GDDR7 16Gb chips in production, 24Gb coming next year.

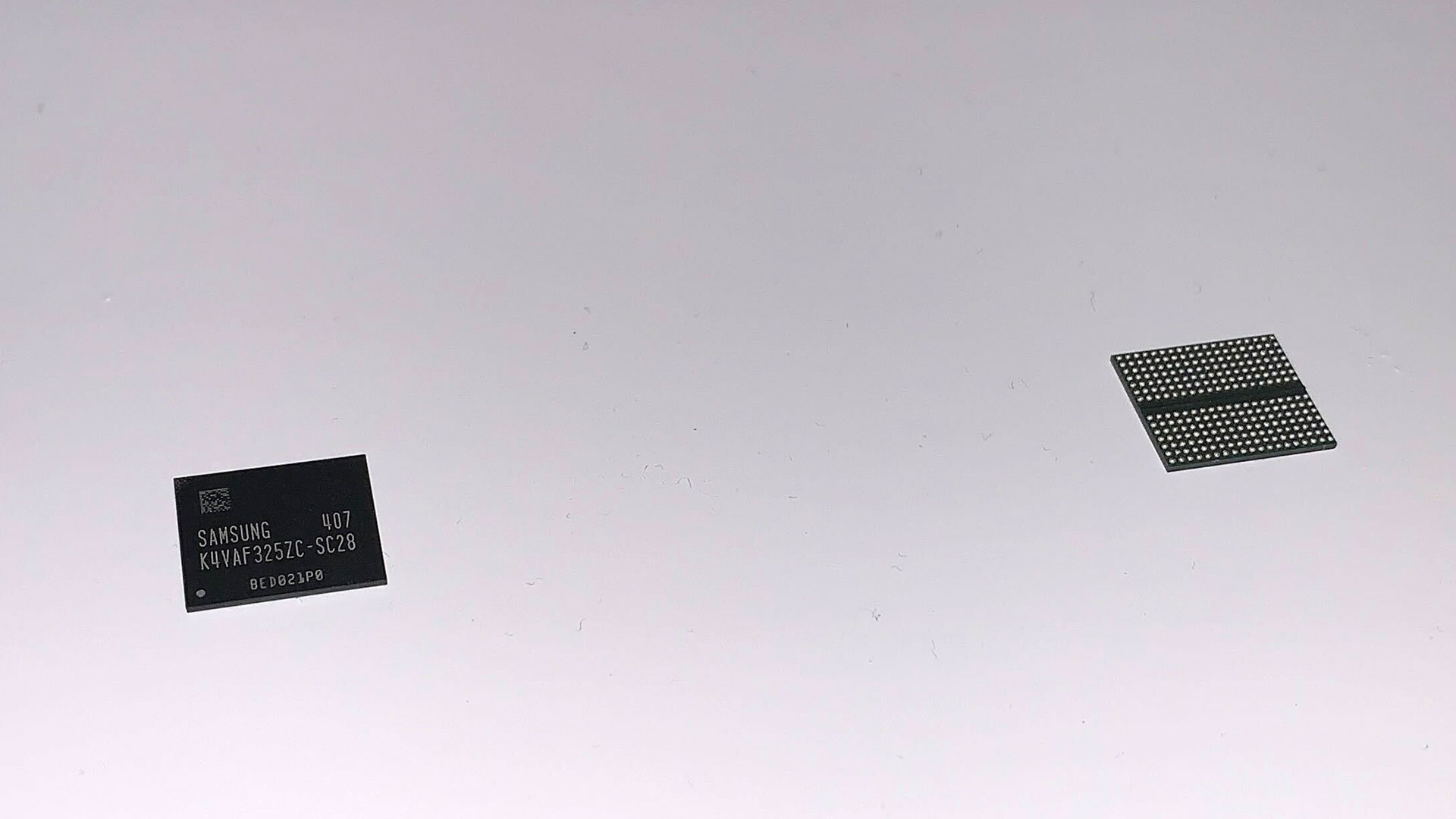

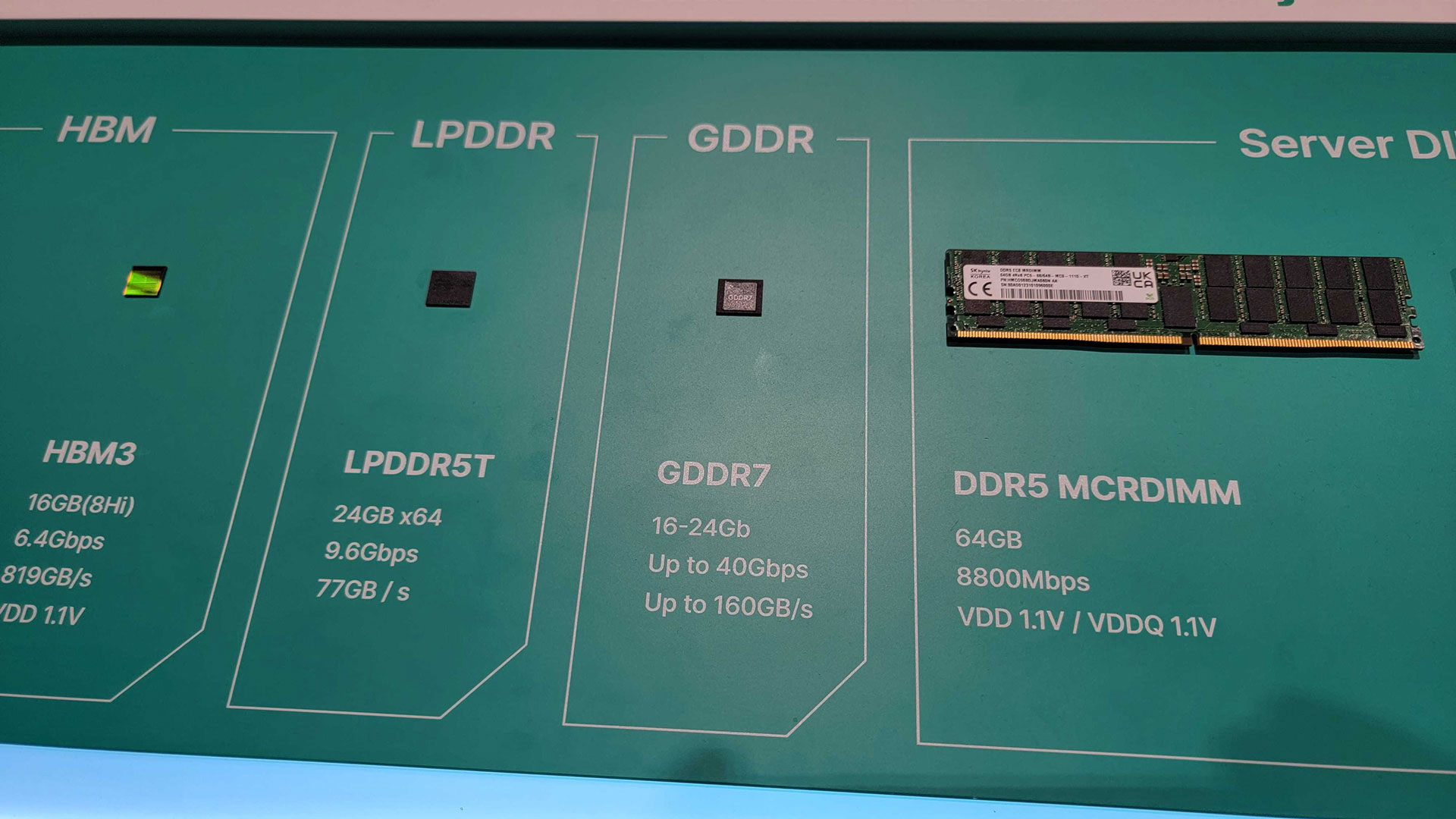

Samsung and SK hynix were showing off their upcoming GDDR7 memory at GTC 2024. We spoke with both companies, as well as Micron and others, about GDDR7 and when we can expect to see it in the best graphics cards. Based on some of those discussions, it could arrive sooner than expected, and that has some interesting implications for next-generation Nvidia Blackwell and AMD RDNA 4 GPUs.

Micron didn't have any chips on display, but representatives said its GDDR7 solutions should be available for use before the end of the year, and the finalized JEDEC GDDR7 standard should pave the way. The chips shown by SK hynix and Samsung, meanwhile, would have been produced before the standard was official, though that may not matter in the long run.

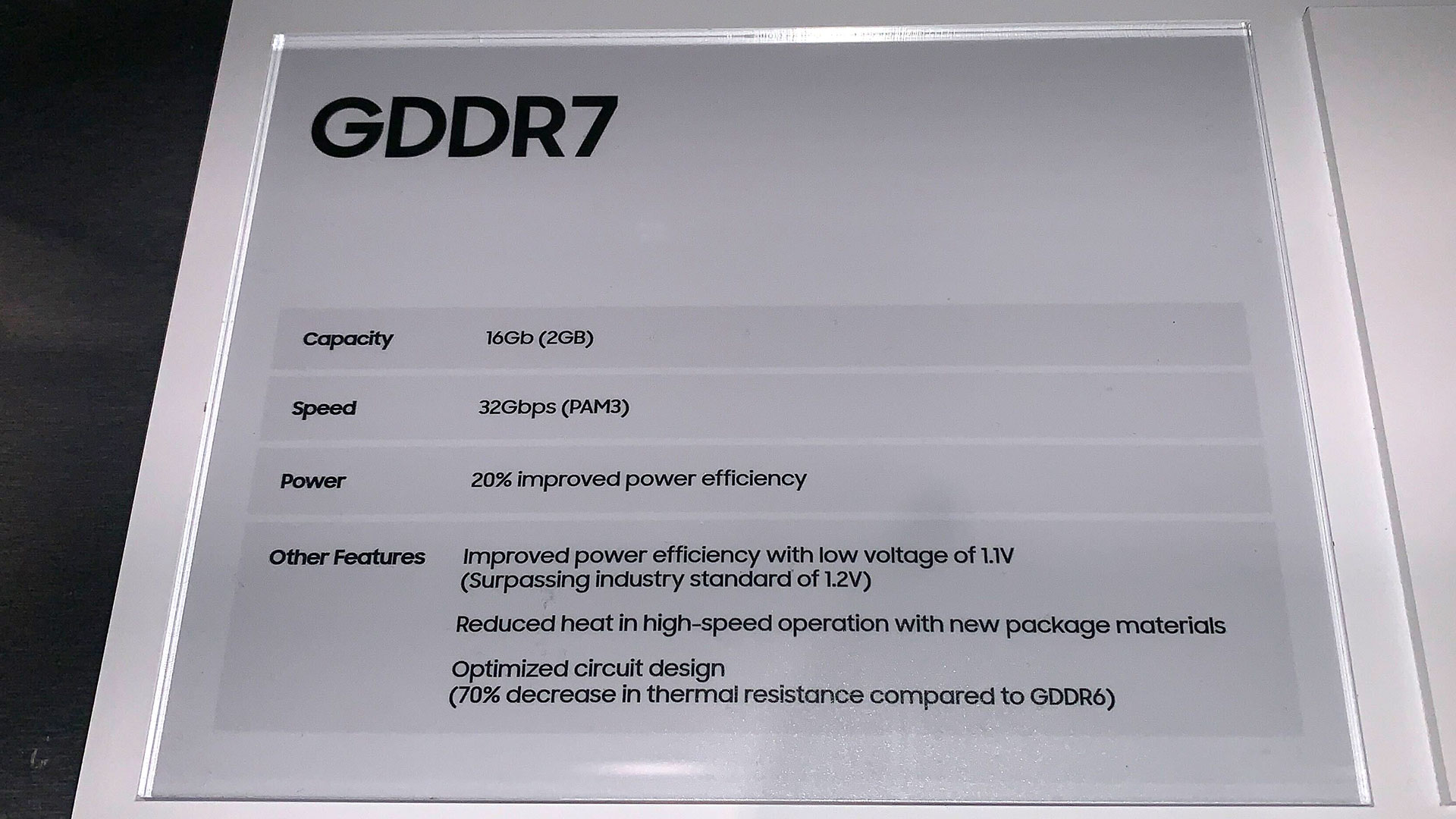

What's interesting about the information shown by SK hynix and Samsung is that both were showing off 16Gb (2GB) devices. We asked the companies about memory capacities and were told that 16Gb chips are in production and could show up in shipping products by the end of the year. 24Gb (3GB) chips, on the other hand, won't be part of the initial wave and will very likely show up in 2025.

That timing lines up with other rumblings at GTC 2024 that consumer Blackwell GPUs could begin shipping before the end of the year. Whether AMD's RDNA 4 will also ship this year was less clear, but of course this was an Nvidia conference and AMD wasn't in attendance.

Historically, seeing an "RTX 5090" Blackwell card in the October to December timeframe would make perfect sense. Nvidia has been pretty good about keeping a two-year cadence for new GPU architectures, going back a decade. The Maxwell GTX 900-series launched in September 2014, the Pascal GTX 10-series arrived in May 2016, and the Turing RTX 20-series came out in September 2018. Then we got Ampere and the RTX 30-series in September 2020 (just in time for a cryptocurrency surge to ruin everything for the next two years), and finally Ada Lovelace and the RTX 40-series launched in October–November 2022.

There have been questions about whether Blackwell consumer GPUs would arrive in 2024, and the relatively late launch of the RTX 40-series Super refresh at least hinted that the next generation might be pushed back. But a few people I spoke with at GTC basically said the Super cards were simply "late" and that Blackwell consumer parts likely wouldn't be affected. So... RTX 50-series coming in late 2024? That seems increasingly likely after what we saw at GTC, including the massive Blackwell B200 reveal.

Current Blackwell GPU rumors suggest the top consumer chip, GB202, will feature a 512-bit memory interface, while the step-down GB203 will only have a 256-bit interface. After seeing the B200 with its dual-die design linked via NV-HBI (Nvidia High Bandwidth Interface), we can't help but think that approach is at least potentially on the table for consumer chips as well. Imagine if GB202 is simply two GB203 chips, which would explain the doubling in memory bus width. Otherwise, sticking with a 384-bit interface, as on the RTX 4090, would seemingly have made more sense.

Beyond that, there are other implications. 16Gb GDDR7 chips are basically ready and will be shipping in volume later this year, just in time for that supposed 512-bit memory interface. A 512-bit bus has sixteen 32-bit channels, so sixteen 2GB chips would yield 32GB of VRAM, a perfectly sensible configuration for a future "RTX 5090" (or whatever Nvidia wants to call it). The step down to a 256-bit interface for an "RTX 5080" is a bit less impressive, though.

Using the same 16Gb GDDR7 chips would give the second-tier GPUs 16GB of VRAM (eight chips on a 256-bit bus). That's certainly "enough" for most current workloads outside of AI, but AMD has been shipping 16GB cards for four years now. The alternative would be to wait for 24Gb GDDR7 devices to become available, which would mean 24GB on a 256-bit interface. We'd prefer that over yet another 16GB card, but we'll have to see how things play out.
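The capacity arithmetic in the two paragraphs above boils down to one multiplication: each GDDR device sits on its own 32-bit channel, so total VRAM is (bus width ÷ 32) × chip density. Here's a minimal Python sketch under that assumption. The 512-bit and 256-bit widths are the rumored GB202/GB203 figures rather than confirmed specs, and `vram_gb` is just an illustrative helper name.

```python
CHANNEL_WIDTH_BITS = 32  # each GDDR device occupies one 32-bit channel

def vram_gb(bus_width_bits: int, chip_density_gbit: int, clamshell: bool = False) -> float:
    """Total VRAM in GB for a bus width and per-chip density (in gigabits)."""
    if bus_width_bits % CHANNEL_WIDTH_BITS:
        raise ValueError("bus width must be a multiple of 32 bits")
    chips = bus_width_bits // CHANNEL_WIDTH_BITS
    if clamshell:
        chips *= 2  # clamshell mode doubles the chip count (both sides of the PCB)
    return chips * chip_density_gbit / 8  # 8 bits per byte

# Rumored top two Blackwell bus widths, with 16Gb (2GB) and 24Gb (3GB) chips
for bus in (512, 256):
    for density in (16, 24):
        print(f"{bus}-bit bus, {density}Gb chips: {vram_gb(bus, density):g}GB")
```

Passing `clamshell=True` doubles the device count, which is how a card like the RTX 4060 Ti 16GB reaches 16GB on a 128-bit bus with 2GB chips.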

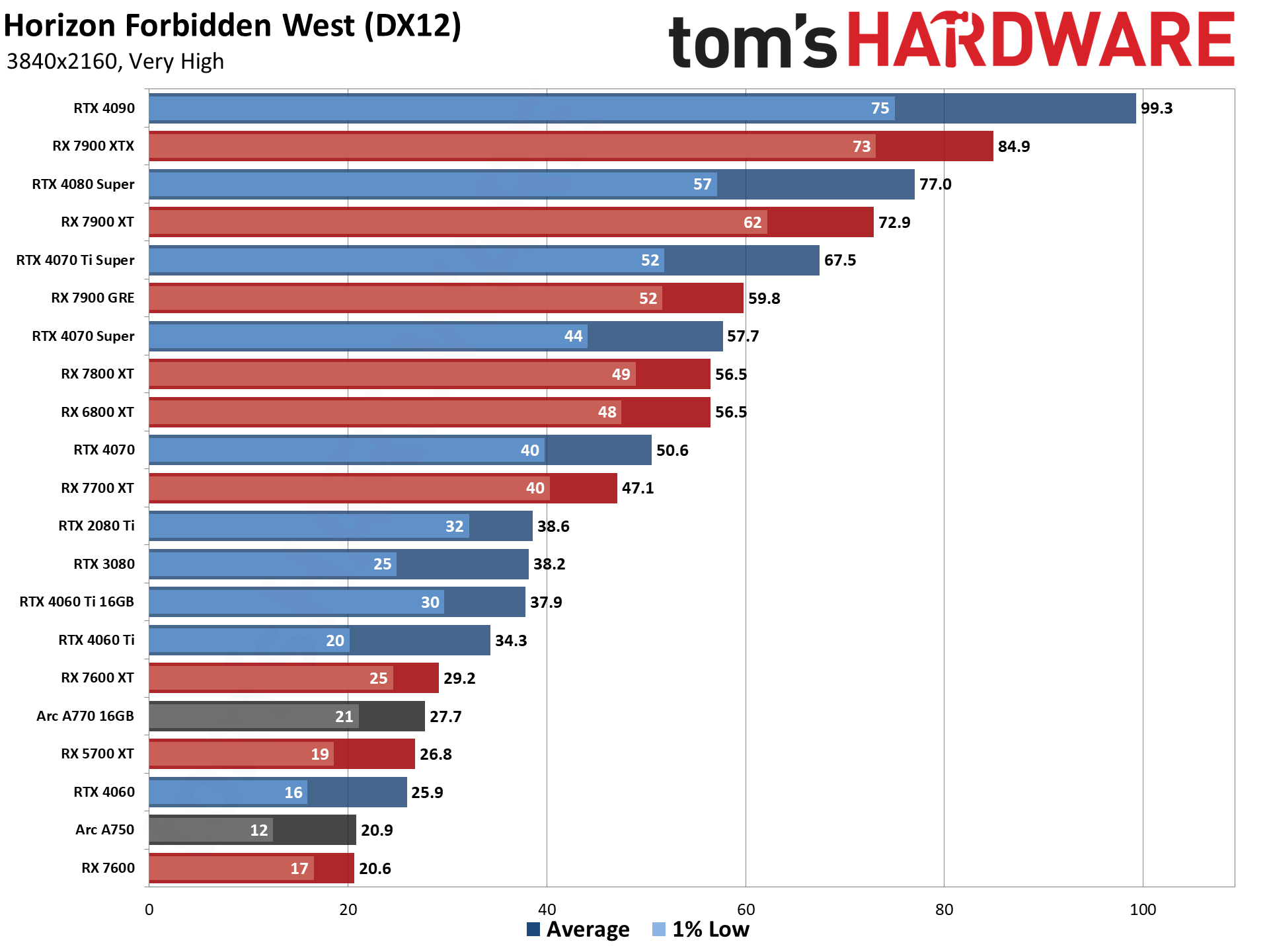

More importantly, it's the GPUs below the top two tiers (from Nvidia, AMD, and Intel) where those 24Gb chips become important. We're now seeing plenty of games where 12GB of VRAM is basically the minimum you'd want for maxed-out settings. Look at Horizon Forbidden West as an example, and pay attention to the RTX 3080 10GB and RTX 4070 12GB cards. Those two GPUs are basically tied at 1080p and 1440p, but performance drops substantially on the 3080 at 4K with maxed-out settings, where the game's memory needs exceed the card's 10GB of VRAM.

If we're already getting games that need 12GB, it would only make sense to begin shipping more mainstream-level GPUs with more than 12GB. AMD's RX 7800 XT and RX 7900 GRE both have 16GB for around $500–$550, while Nvidia's RTX 4070 and RTX 4070 Super only have 12GB because their 192-bit memory interface holds six 2GB chips. But if Nvidia waits for 24Gb GDDR7, that same 192-bit interface could easily provide 18GB of total VRAM, or 36GB in clamshell mode with chips on both sides of the PCB.

Even more critically, 24Gb GDDR7 would make a narrow 128-bit interface, which has been a serious cause for concern with the RTX 4060 Ti and RTX 4060, much less of a problem. Such cards could still provide 12GB of memory with one device per 32-bit channel, so there'd be no need for a clamshell variant like the RTX 4060 Ti 16GB. Naturally, the same math applies to AMD, where a future upgraded RX 7600-level GPU would get 12GB instead of 8GB.
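To see which mainstream layouts clear the roughly 12GB floor discussed above, the same per-channel arithmetic can be run over a few bus-width, chip-density, and clamshell combinations. This is a hedged sketch: `capacity_gb` and `MIN_VRAM_GB` are illustrative names, and the model assumes nothing beyond one device per 32-bit channel, doubled in clamshell mode. Density is given in gigabits and applies the same way whether the chips are GDDR6, GDDR6X, or GDDR7.

```python
MIN_VRAM_GB = 12  # the "basically the minimum" figure for maxed-out settings

def capacity_gb(bus_bits: int, density_gbit: int, clamshell: bool = False) -> int:
    """VRAM in GB; assumes one device per 32-bit channel, two in clamshell mode."""
    devices = (bus_bits // 32) * (2 if clamshell else 1)
    return devices * density_gbit // 8  # 8 bits per byte

# (bus width in bits, chip density in Gb, clamshell?)
configs = [
    (192, 16, False),  # today's RTX 4070-style layout: six 2GB chips
    (192, 24, False),  # same bus with 3GB GDDR7 chips
    (192, 24, True),   # clamshell: chips on both sides of the PCB
    (128, 16, False),  # RTX 4060 / 4060 Ti 8GB-style layout
    (128, 16, True),   # RTX 4060 Ti 16GB-style clamshell layout
    (128, 24, False),  # 128-bit bus with 3GB GDDR7 chips
]

for bus, density, clamshell in configs:
    gb = capacity_gb(bus, density, clamshell)
    mode = "clamshell" if clamshell else "single-sided"
    verdict = "meets" if gb >= MIN_VRAM_GB else "falls short of"
    print(f"{bus}-bit, {density}Gb chips, {mode}: {gb}GB ({verdict} {MIN_VRAM_GB}GB)")
```

Only the single-sided 128-bit layout with 2GB chips falls below the threshold; swapping in 3GB chips fixes that without resorting to clamshell boards.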

And it's not just about memory capacity, though that's certainly important. According to Samsung, GDDR7 will reach speeds of up to 32 Gbps, while SK hynix says it will have GDDR7 chips rated at up to 40 Gbps. Even if we stick with the lower number, that's 128 GB/s per device (32 Gbps per pin across a 32-bit interface), or 512 GB/s for a 128-bit interface and 768 GB/s for a 192-bit interface. Both would be a substantial increase in memory bandwidth, which would take care of the second concern we've had with the lower-tier GPUs of the current generation. 40 Gbps GDDR7 would bump 128-bit interfaces up to 640 GB/s and a 192-bit bus to 960 GB/s, though we suspect we won't see such configurations in consumer GPUs until late 2025 at the earliest.
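Peak memory bandwidth is simply the per-pin data rate times the bus width, divided by eight bits per byte. The sketch below reproduces the figures for Samsung's 32 Gbps and SK hynix's 40 Gbps parts. It computes a theoretical peak that ignores real-world efficiency, and `bandwidth_gbs` is a hypothetical helper name.

```python
DEVICE_WIDTH_BITS = 32  # each GDDR7 device has a 32-bit interface

def bandwidth_gbs(pin_rate_gbps: float, bus_width_bits: int) -> float:
    """Theoretical peak bandwidth in GB/s: per-pin rate x bus width / 8 bits per byte."""
    return pin_rate_gbps * bus_width_bits / 8

for rate in (32, 40):  # Samsung's and SK hynix's quoted top speeds, in Gbps per pin
    per_device = bandwidth_gbs(rate, DEVICE_WIDTH_BITS)
    bw_128 = bandwidth_gbs(rate, 128)
    bw_192 = bandwidth_gbs(rate, 192)
    print(f"{rate} Gbps: {per_device:g} GB/s per device, "
          f"{bw_128:g} GB/s on 128-bit, {bw_192:g} GB/s on 192-bit")
```

Because bandwidth scales linearly with both terms, a 192-bit bus always delivers 1.5 times the bandwidth of a 128-bit bus at the same data rate.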

This isn't new information as such, but it's all starting to come together now. Based on what we've heard and seen, we expect the next-gen Nvidia and AMD GPUs will fully embrace GDDR7 memory, with the first solutions now likely to arrive before the end of 2024. Those will be extreme-performance, high-priced models with wider interfaces that can still provide 16GB to 32GB of memory. The second wave could then follow the usual staggered release and come out in 2025, once higher-capacity, non-power-of-two GDDR7 chips are widely available. Hopefully this proves correct, as we don't want to see more 8GB graphics cards launching next year, at least not unless they're priced well south of the $300 mark (like $200 or so).

Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.

-

Giroro: I'm sure Nvidia will probably have some "5090" class card targeted toward AI with 24-32 GB VRAM and a $2k+ price at some point later this year, and they'll have much more expensive workstation equivalents with more VRAM and unlocked CUDA...

But the question is: Why would Nvidia ever release anything other than the highest-end AI/workstation graphics?

They know what they have, and they aim to make all the money that has ever existed in the history of mankind before the AI bubble pops. Nvidia would be losing billions in opportunity cost if they released new gaming cards this year, unless those cards were made on an older non-competing node and completely incapable of AI - which is not that likely.

Maybe they'll release a severely overpriced 4090 Ti or a 4090 GDDR7 to cash in on inflated aftermarket 4090 prices. Otherwise, Nvidia said they want to be an AI company not a graphics company and their investors seem to agree.

jp7189 (replying to Giroro): xx90 series doesn't compete with their AI class cards - they open the door to them. In my experience, people experiment with consumer cards before spending money on bigger systems. College kids can get a gaming card and 'play' with AI, and eventually bring that CUDA knowledge (and preference) to a fulltime job. This is THE primary reason why CUDA dominates the market.

I do agree with you about missed opp cost if the consumer cards are stealing capacity on leading edge wafers.

hannibal: NVIDIA did confirm that there will be less next gen gaming cards on the release aka there is very limited supply = profit!

So AI boom will affect the prices and the supply…

blppt: "NVIDIA did confirm that there will be less next gen gaming cards on the release aka there is very limited supply = profit!"

Guaranteed the scalpers out there will be back in full force.

JTWrenn (replying to Giroro): A lot of this will be down binning I think. They won't give up too much wafer space, if any to make these cards when that space/production capacity could go to something bigger. However, if all of these are just down binned then it is just using chips that don't meet spec for the big boy, only using one chip, adding less to the die when it comes to memory things like that.

Could even be entirely different production lines that couldn't handle their big boys. In short I highly doubt they will sell anything lower than the full on chip if it takes away any production possibilities from it since they are far exceeding their supply with demand.

Jaxstarke9977: Definitely skipping the 5000 series. Have a 3070 collecting dust and a 4080 with no decent games to play.

Notton: Ooph. 3GB density chips arriving in 2025?

It sounds like there will be no interesting GPUs for a year at minimum.

That is a long drought for interesting GPUs. Flop after flop