
Nvidia GeForce RTX 5060 Ti 16GB Test Setup

This is mostly going to be a rehash of what we've said in other recent reviews, as our testing hasn't changed. At the end of last year, just in time for the Arc B580 launch, we revamped our test suite and our test PC, wiping the slate clean and requiring new benchmarks for every graphics card we want to have in our GPU benchmarks hierarchy.

We have finally updated the GPU benchmarks to use the new test suite and PC (older results are on pages two and three), and we're nearly finished with testing all current and previous generation GPUs. It's been a busy five months, with nine new GPU launches (ten if you count the 5060 Ti 8GB as a separate item), plus retesting previous generation cards.

While Nvidia offers software features like DLSS that can boost performance and potentially even improve image quality (the DLSS transformer model in quality mode can look better than native rendering with traditional TAA), all our primary testing omits upscaling and frame generation. That's because the different algorithms (DLSS, FSR, and XeSS) don't always look or feel the same, so we feel it's best to start with the base level of performance you can expect.

Keep in mind that quality mode upscaling roughly equates to dropping resolution one 'notch' — so 4K with quality mode upscaling renders at 1440p. Performance mode upscaling drops the render resolution another notch (4K renders at 1080p before upscaling). The higher the upscaling factor, the more potential there is for noticeable upscaling artifacts.
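To make the 'notch' arithmetic concrete, here's a minimal Python sketch that computes the internal render resolution for a given output resolution. It assumes per-axis scale factors of 1.5x for quality mode and 2x for performance mode, which match the 4K-to-1440p and 4K-to-1080p examples above. The `UPSCALE_FACTORS` table and `render_resolution()` helper are hypothetical names for illustration, and individual upscalers may use slightly different ratios.

```python
# Hypothetical per-axis scale factors (assumed): output size / render size.
UPSCALE_FACTORS = {"native": 1.0, "quality": 1.5, "performance": 2.0}


def render_resolution(width: int, height: int, mode: str) -> tuple[int, int]:
    """Return the internal render resolution used before upscaling to width x height."""
    factor = UPSCALE_FACTORS[mode]
    return round(width / factor), round(height / factor)


if __name__ == "__main__":
    out_w, out_h = 3840, 2160  # 4K output resolution
    for mode in UPSCALE_FACTORS:
        w, h = render_resolution(out_w, out_h, mode)
        share = (w * h) / (out_w * out_h)  # fraction of output pixels actually rendered
        print(f"4K {mode}: renders at {w}x{h} ({share:.0%} of the output pixels)")
```

Under these assumptions, 4K quality mode renders 2560x1440 (about 44% of the output pixels) and performance mode renders 1920x1080 (25%), which is why higher upscaling factors leave more room for visible artifacts.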

Frame generation, including the new MFG (Multi Frame Generation) of the RTX 50-series, makes things even more complex. It can smooth out the presentation of frames to your display, but user input is only sampled for rendered frames, not generated ones, so the number of input samples drops relative to the displayed framerate, and framegen also introduces some additional input latency. The overall experience can vary quite a bit from game to game, as well as between different technologies like DLSS 3 framegen, DLSS 4 MFG, FSR 3.1 framegen, FSR 4 framegen, and even XeSS 2 framegen.
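A rough sketch of why framegen changes how a game feels: in an idealized model that ignores framegen's own overhead (an assumption; in practice framegen costs some rendering performance), the displayed framerate scales with the framegen multiplier, while input is still sampled only once per rendered frame. The `framegen_rates()` helper below is hypothetical.

```python
def framegen_rates(rendered_fps: float, multiplier: int) -> dict[str, float]:
    """Idealized frame generation model (assumes zero framegen overhead).

    multiplier = displayed frames per rendered frame:
    1 = no framegen, 2 = DLSS 3-style framegen, up to 4 for MFG.
    """
    displayed_fps = rendered_fps * multiplier
    return {
        "displayed_fps": displayed_fps,
        # Input is only sampled for rendered frames, not generated ones.
        "input_samples_per_sec": rendered_fps,
        "input_samples_per_displayed_frame": rendered_fps / displayed_fps,
    }


if __name__ == "__main__":
    for multiplier in (1, 2, 4):
        print(multiplier, framegen_rates(60, multiplier))
```

In this model, a game rendering at 60 fps with 4x MFG displays 240 fps but still samples input only 60 times per second, or once every four displayed frames.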

In short, trying to test and quantify performance for all of the various upscaling and frame generation algorithms adds a lot of complexity and uncertainty. The TL;DR is that all upscaling and framegen solutions will boost performance (and/or smoothness), potentially at the cost of some image fidelity. If GPU X runs faster than GPU Y at native 1080p rendering, it should also be faster at 4K with performance mode upscaling, since that mode renders at 1080p internally (though the cost of the upscaling pass itself can vary)... but depending on the supported algorithms, the game rendering may or may not look the same.

TOM'S HARDWARE AMD ZEN 5 PC

- CPU: AMD Ryzen 7 9800X3D
- Motherboard: ASRock X670E Taichi
- Memory: G.Skill TridentZ5 Neo 2x16GB DDR5-6000 CL28
- Storage: Crucial T700 4TB
- Cooler: Cooler Master ML280 Mirror
- Power supply: Corsair HX1500i

GRAPHICS CARDS

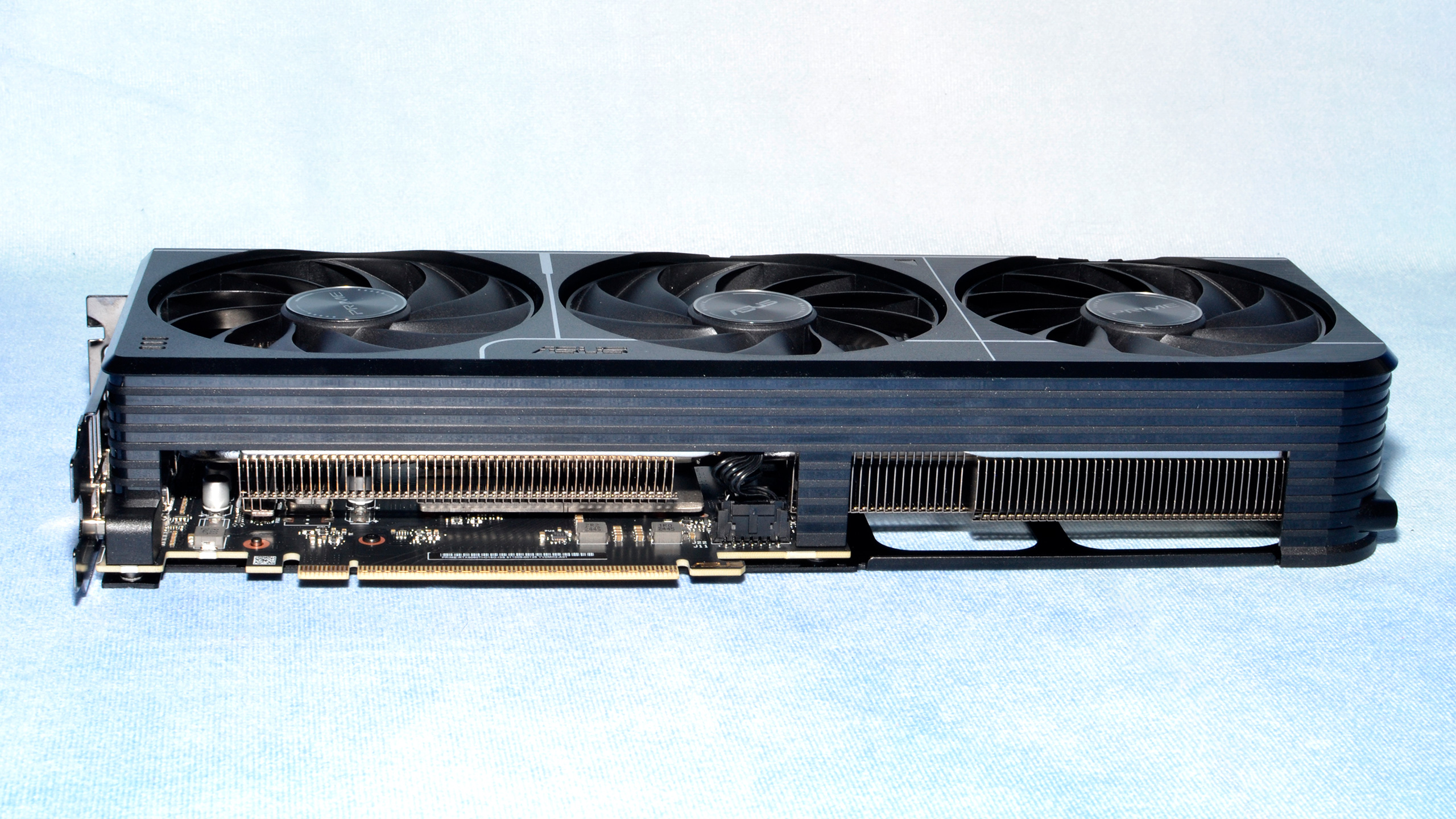

Asus RTX 5060 Ti 16GB Prime OC

PNY RTX 5060 Ti 16GB OC

Nvidia RTX 5070 Founders Edition

Nvidia RTX 4070 Super Founders Edition

Nvidia RTX 4070 Founders Edition

Gigabyte RTX 4060 Ti 16GB Gaming OC

Nvidia RTX 4060 Ti 8GB Founders Edition

Asus RTX 4060 Dual OC

AMD RX 9070 (PowerColor Reaper)

AMD RX 7800 XT (MBA reference card)

AMD RX 7700 XT (XFX QICK319)

Sapphire RX 7600 XT Pulse

AMD RX 7600 (MBA reference card)

Our GPU test PC has an AMD Ryzen 7 9800X3D processor, the fastest current CPU for gaming purposes. We also have 32GB of DDR5-6000 memory from G.Skill with AMD EXPO timing enabled (CL30) on an ASRock X670E Taichi motherboard. We're running Windows 11 24H2, with the latest drivers at the time of testing.

We used AMD's 25.3.2 drivers for the 7700/7800 GPUs, AMD's preview 24.30.31.03 drivers for the 9070, and older drivers for the 7600/7600 XT. The Nvidia GPUs were tested with several different drivers from the 572 family, with most using the latest 572.83 drivers. The RTX 5060 Ti 16GB cards were tested with preview 575.94 drivers. We haven't had time to retest everything on the latest releases, unfortunately, but we've retested a few games and apps where earlier results didn't seem to line up with later testing.

Our PC is hooked up to an MSI MPG 272URX QD-OLED display, which supports G-Sync and Adaptive-Sync, allowing us to properly experience the higher frame rates that RTX 50-series GPUs with MFG are supposed to be able to reach. Most games won't get anywhere close to the 240Hz limit of the monitor at 4K when rendering at native resolution, which is where framegen and MFG can be particularly helpful.
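To put the 240Hz limit in perspective, here's a back-of-the-envelope sketch under the same idealized assumption of zero framegen overhead. It computes the rendered framerate a GPU would need to fill a given refresh rate at each framegen multiplier, plus the time budget per rendered frame. `required_rendered_fps()` and `frame_time_ms()` are hypothetical helpers.

```python
REFRESH_HZ = 240  # maximum refresh rate of the MSI MPG 272URX


def required_rendered_fps(refresh_hz: float, multiplier: int) -> float:
    """Rendered fps needed to fill refresh_hz, assuming no framegen overhead."""
    return refresh_hz / multiplier


def frame_time_ms(fps: float) -> float:
    """Milliseconds available per frame at a given framerate."""
    return 1000.0 / fps


if __name__ == "__main__":
    for multiplier in (1, 2, 3, 4):
        fps = required_rendered_fps(REFRESH_HZ, multiplier)
        print(f"{multiplier}x: render {fps:.0f} fps "
              f"({frame_time_ms(fps):.1f} ms per rendered frame)")
```

Under this model, native rendering would need 240 fps (about 4.2 ms per frame) to saturate the display, while 4x MFG would need only 60 rendered fps (about 16.7 ms per frame).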

Our GPU test suite has been trimmed down to 18 games for now, as we had to cut a few that were showing oddities. We're in the process of retesting Control Ultimate using the updated Ultra settings, and we have a couple of other games we'll add as well once additional testing is complete. For now, we have four games with RT support enabled, and the remaining 14 games are run in pure rasterization mode.

We'll look at supplemental testing in the coming days to further investigate full RT along with DLSS 4 upscaling and MFG. While we've had a bit more time for this launch, it wasn't enough to test the 11 other GPUs on additional games.

All games are tested using 1080p 'medium' settings (the specifics vary by game and are noted in the chart headers), along with 1080p, 1440p, and 4K 'ultra' settings. This provides a good overview of performance in a variety of situations. Depending on the GPU, some of those settings don't make as much sense as others, but everything so far has managed to (mostly) run up to 4K ultra.
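The resulting test matrix is easy to enumerate. This sketch multiplies out the 18 games and four setting combinations described above; the game names are hypothetical placeholders, and the per-game details of each 'medium' or 'ultra' preset aren't modeled.

```python
from itertools import product

# The four resolution/preset combinations used for every game.
SETTINGS = [
    ("1920x1080", "medium"),
    ("1920x1080", "ultra"),
    ("2560x1440", "ultra"),
    ("3840x2160", "ultra"),
]


def build_test_matrix(games: list[str]) -> list[tuple[str, str, str]]:
    """Return one (game, resolution, preset) entry per benchmark configuration."""
    return [(game, res, preset) for game, (res, preset) in product(games, SETTINGS)]


if __name__ == "__main__":
    games = [f"game_{i:02d}" for i in range(1, 19)]  # placeholder names for 18 games
    matrix = build_test_matrix(games)
    print(len(matrix), "configurations per GPU")
```

With 18 games and four setting combinations, that works out to 72 configurations per GPU, before counting any repeat passes.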

Our OS has all the latest updates applied. We're also using Nvidia's PCAT v2 (Power Capture and Analysis Tool) hardware, which means we can grab real power use, GPU clocks, and more during our gaming benchmarks. We'll cover those results on page eight.
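Once per-sample power readings are captured, turning them into summary numbers is simple arithmetic. A minimal sketch, assuming the readings have already been parsed into a list of watts (PCAT's actual log format isn't covered here) and that efficiency is expressed as frames per second per watt. The function names and sample values are hypothetical and are not measured results.

```python
from statistics import mean


def average_power_w(samples_w: list[float]) -> float:
    """Average power over a benchmark run, in watts."""
    if not samples_w:
        raise ValueError("no power samples")
    return mean(samples_w)


def fps_per_watt(avg_fps: float, samples_w: list[float]) -> float:
    """Efficiency: average framerate divided by average power."""
    return avg_fps / average_power_w(samples_w)


if __name__ == "__main__":
    samples = [150.0, 160.0, 170.0]  # made-up illustrative readings, NOT test data
    print(f"{average_power_w(samples):.1f} W, {fps_per_watt(80.0, samples):.2f} FPS/W")
```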

Finally, because GPUs aren't purely for gaming these days, we run professional and AI application tests. We've previously tested Stable Diffusion using various custom scripts, but to level the playing field and hopefully make things a bit more manageable (AI is a fast-moving field!), we're turning to standardized benchmarks.

We use the following benchmarks:

- Procyon: the AI Vision test, plus the Stable Diffusion 1.5 and XL tests
- MLPerf Client 0.5 preview for AI text generation
- SPECworkstation 4.0 for Handbrake transcoding, AI inference, and professional applications
- 3DMark DXR Feature Test to check raw hardware RT performance
- Blender Benchmark 4.3.0 for professional 3D rendering


Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.