Raspberry Pi 5 patch boosts performance up to 18% via NUMA emulation — Geekbench tests reveal gains in both single and multi-threaded performance

More power!

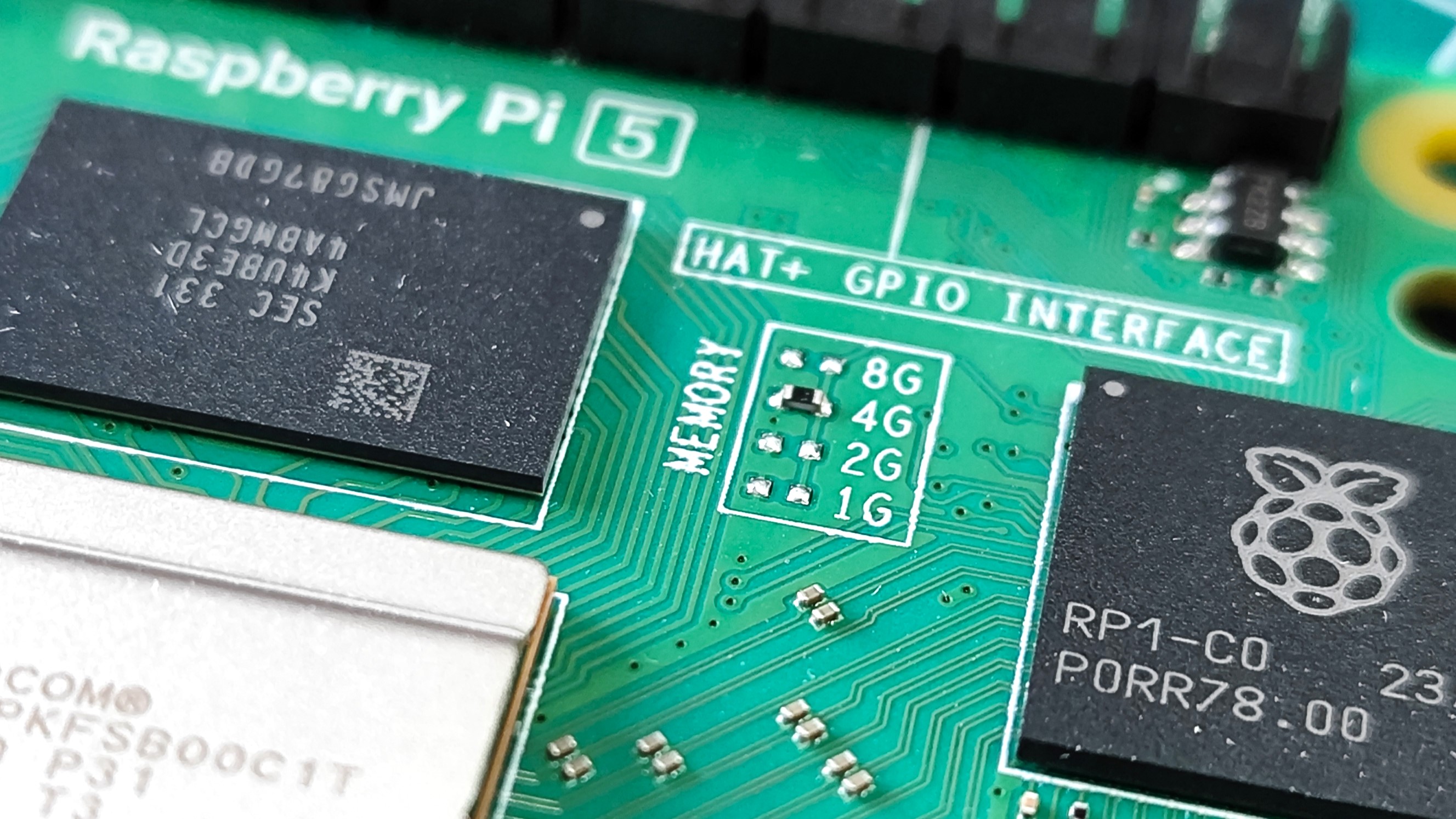

Igalia, the free software consultancy perhaps best known for its work on the Raspberry Pi's GPU, has revealed that it is investigating NUMA (Non-Uniform Memory Access) emulation for ARM64 devices. The investigations have so far yielded a potentially significant performance uplift for the Raspberry Pi 5, which Tvrtko Ursulin described in a message to a Linux kernel mailing list.

The patch details were posted to the mailing list, and it appears to be around 100 lines in length. However, those 100 lines potentially have a big impact on the Raspberry Pi 5 and many other ARM64 devices.

According to the post: "This series adds a very simple NUMA emulation implementation and enables selecting it on arm64 platforms."

The patch reportedly improves single-core performance by 6% and multi-core performance by approximately 18%. These figures were determined using Geekbench 6 test runs.

Ursulin explains in a little more depth: "[...] splitting the physical RAM into chunks and utilizing an allocation policy such as interleaving can enable the BCM2721 [sic; the Raspberry Pi 5's SoC is the BCM2712] memory controller to better utilize parallelism in physical memory chip organization."
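To make "splitting the physical RAM into chunks" and "interleaving" a little more concrete, here is a toy model in Python. It is only a sketch under stated assumptions: the function names, the 8 GiB total, the four-node split and the 4 KiB page size are all picked for illustration, and it does not reproduce the patch's code or the way the real memory controller maps addresses to DRAM banks.

```python
from collections import Counter

PAGE_SIZE = 4096  # bytes; 4 KiB pages are an assumption for this sketch


def split_into_nodes(total_bytes, num_nodes):
    """Split one contiguous physical range into equal chunks (hypothetical layout)."""
    chunk = total_bytes // num_nodes
    return [(i * chunk, (i + 1) * chunk) for i in range(num_nodes)]


def interleaved_allocations(nodes, num_pages):
    """Hand out pages round-robin across the nodes, as an interleave policy would."""
    next_free = [start for start, _ in nodes]  # next unused address in each node
    pages = []
    for n in range(num_pages):
        node_id = n % len(nodes)
        pages.append((node_id, next_free[node_id]))
        next_free[node_id] += PAGE_SIZE
    return pages


nodes = split_into_nodes(8 * 1024**3, 4)   # 8 GiB presented as 4 fake nodes
pages = interleaved_allocations(nodes, 8)  # allocate 8 consecutive pages
per_node = Counter(node_id for node_id, _ in pages)

for node_id, addr in pages:
    print(f"page on node {node_id} at physical address {addr:#x}")
```

In this model, consecutive allocations land in widely separated regions of physical memory rather than next to each other. Whether that spreads accesses across different parts of the DRAM chips on real hardware depends on the memory controller, which the model does not capture.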

What could this mean for the Raspberry Pi 5? Overall better performance from an already performant 2.4 GHz Arm CPU, which can be easily overclocked to 3 GHz or more.

The code is out for review, and with a little luck and hard work from the Linux Kernel developers, this patch could add even more performance to the Raspberry Pi 5 and many other ARM64 devices.

NUMA, mainly used in systems with multiple processors, is a computer memory design in which memory access time depends on where the memory sits relative to the processor. In simple terms, NUMA allows each CPU to have its own bank of locally attached memory while still having access to the memory directly connected to other processors in the system. This results in low latency for 'near' memory (locally attached) but somewhat higher latency for 'far' memory (memory directly attached to other processors in the system). NUMA emulation presents a machine with uniform memory, such as the Raspberry Pi 5, to the kernel as if it had several such nodes.


The Linux Kernel documentation page goes into NUMA with a little more depth when it comes to the Linux software stack. "Linux divides the system’s hardware resources into multiple software abstractions called “nodes.” Linux maps the nodes onto the physical cells of the hardware platform, abstracting away some of the details for some architectures. As with physical cells, software nodes may contain 0 or more CPUs, memory and/or IO buses. And, again, memory accesses to memory on “closer” nodes–nodes that map to closer cells–will generally experience faster access times and higher effective bandwidth than accesses to more remote cells."

The patch claims, "Code is quite simple and new functionality can be enabled using the new NUMA_EMULATION Kconfig option and then at runtime using the existing (shared with other platforms) numa=fake=<N> kernel boot argument."
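For readers who want to check whether a running kernel was booted with fake NUMA nodes, the following Python sketch may help. It assumes a kernel built with the patch applied and the option enabled (Kconfig options appear in a kernel `.config` with a `CONFIG_` prefix, so this one would be `CONFIG_NUMA_EMULATION`). The helper names `parse_fake_numa` and `list_numa_nodes` are made up for this example. The sketch only reads the standard `/proc/cmdline` file and the `/sys/devices/system/node` directory, and it treats only the plain-integer form of `numa=fake=<N>` quoted above as valid.

```python
import re
from pathlib import Path


def parse_fake_numa(cmdline):
    """Return N from a numa=fake=<N> argument, or None if absent or not a plain integer."""
    match = re.search(r"(?:^|\s)numa=fake=(\d+)(?=\s|$)", cmdline)
    return int(match.group(1)) if match else None


def list_numa_nodes(sysfs_root="/sys/devices/system/node"):
    """Return sorted node IDs found under sysfs, or an empty list if the directory is missing."""
    root = Path(sysfs_root)
    if not root.is_dir():
        return []
    ids = []
    for entry in root.iterdir():
        m = re.fullmatch(r"node(\d+)", entry.name)  # skip files such as "online"
        if m:
            ids.append(int(m.group(1)))
    return sorted(ids)


if __name__ == "__main__":
    proc_cmdline = Path("/proc/cmdline")
    cmdline = proc_cmdline.read_text() if proc_cmdline.exists() else ""
    print("numa=fake requested:", parse_fake_numa(cmdline))
    print("nodes visible in sysfs:", list_numa_nodes())
```

On a kernel without NUMA support, the sysfs directory is typically absent and the second line prints an empty list.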

We'll investigate this and see if we can reproduce Igalia's results.

Les Pounder is an associate editor at Tom's Hardware. He is a creative technologist and for seven years has created projects to educate and inspire minds both young and old. He has worked with the Raspberry Pi Foundation to write and deliver their teacher training program "Picademy".


abufrejoval At first glance I want to decry this as such evident bogus that on second thought I keep thinking "clickbait!"

Third thought: "perhaps I should feed this to some AI to demonstrate their inability to reason?"

In case you're not firmly grounded in NUMA, it's mostly about fixing a problem that a uniform memory SoC like the RP5 shouldn't have: On multi-CPU (or multi CCD) systems the memory bottleneck tends to be relieved by giving each cluster of cores its own DRAM bus, while implementing a mechanism via which DRAM physically attached to another CPU can still be used in a logically transparent manner, having that CPU act as a proxy.

Those proxy services come at a cost, because now two CPUs are kept busy for memory access, but it can be judged better than simply having a task run out of memory altogether or having to share data via even less efficient means like fabrics, networks or even files.

So NUMA libraries will let applications exploit locality, keeping cores, code and data as much on locally attached memory and caches as possible to avoid the proxy overhead.

And on small systems with only a single memory bus, that situation should never occur, all RAM is local to the CPU core cluster that this code is running on.

But then in a way non-locality has crept into our SoCs because avoiding the terrible bottleneck of a single memory bus has created such immense pressure, that the vast majority of the surface area of even the smallest chips is now covered with caches.

And keeping your caches uncontested by any other core or thread is critical in avoiding cache line flushes and reloading data from lower level caches or the downright terrible DRAM, which btw. can in fact also be split into banks and open page sets, which again have been created to lessen the terrible overhead of going to fully unprepared raw RAM (some of it might even be sleeping!).

That's why high-performance computing applications need such careful tuning, while lots of them still only scratch single digit percentages out of the max theoretical computing capabilities of the tens of thousands of CPUs they are running on.

And evidently that work hasn't been done with the standard variant of Geekbench.

And that mostly exposes the dilemma that the classic abstraction or work separation between operating systems, its libraries and applications are facing: they simply don't know enough about each other to deliver an optimal RAM allocation strategy.

Typically you want both: locality for your working thread, keeping things as closely together as possible, yet also aligned to data type and cache line boundaries (which wastes space but runs faster), but also as spread out across distinct portions of the cache, so distinct threads won't trample across each other's cache lines.

And there an additional problem is that cache tags aren't fully mapped, so cache lines that aren't actually pointing to the very same logical address can still evict each other: HPC type profiling and tuning may be required, but is also hardware dependent, e.g. between different implementations or even generations of x86.

It still doesn't quite explain why single threaded parts of the benchmark should gain so significantly, but that may just be because Geekbench too needs to somehow straddle completely opposing demands: reasonable runtime and results reasonable enough to allow comparison.

And the only way to dig deeper is to read and observe the running code via profiling, which is why any benchmark without source code can't really be any good.

bit_user

abufrejoval said: "And keeping your caches uncontested by any other core or thread is critical in avoiding cache line flushes and reloading data from lower level caches or the downright terrible DRAM, which btw. can in fact also be split into banks and open page sets, which again have been created to lessen the terrible overhead of going to fully unprepared raw RAM (some of it might even be sleeping!)."

I haven't seen a good explanation of why it should matter for caches, but I suspect it's really just about pipelining memory accesses across different banks of DRAM. It would be interesting to see this benchmark repeated across different memory capacities of the Pi hardware, if there are any two that use the same density DRAM chips.

abufrejoval said: "It still doesn't quite explain why single threaded parts of the benchmark should gain so significantly,"

Probably because it (more often than not) moves the GPU into a separate DRAM bank from the CPU cores running the thread.

mitch074

bit_user said: "Probably because it (more often than not) moves the GPU into a separate DRAM bank from the CPU cores running the thread."

Or the OS itself - as this patch is at the kernel level, this allows the scheduler to make sure the process is running wholly from a single memory area, separate from the kernel and system services. Stuff like execution prediction and prefetchers may just work better as a side effect of "fake" NUMA (which is more akin to a software implemented NUMA since it is, actually, non uniform at a hardware level, simply not considered as such).