Wireless Networking: Nine 802.11n Routers Rounded Up

How We Tested

We’ll state right up front that we didn’t use dd-wrt (www.dd-wrt.com) or any other “hacks” on these routers. There are always decisions to make in coming up with a standard testing methodology, and we ultimately decided that our roundup would proceed from the assumption that most users would want warrantied product used according to manufacturer recommendations. This meant going with the most current firmware offered by the vendor at the time of our testing. In some cases, yes, we could have obtained faster results with dd-wrt, and perhaps that will be an interesting study for a different day. For now, we’re going vendor-approved only.

For similar reasons, we required that vendors provide us with a “matching” client adapter. The last thing we wanted was for testing to get derailed by accusations of “well, our router has issues with that XYZ adapter” or some such thing. Fine. Theoretically, if the vendor provides the router and client adapter, this should provide the highest assurance of compatibility and optimization. So that’s how we designed our testing. Too bad it doesn’t always pan out that way in real life.

We tested across three locations from a ground floor corner dining room in a two-story, 2,600-square-foot home in a neighborhood smack in the middle of Oregon’s “Silicon Forest” area. Thus our test neighborhood was populated with Intel, Radisys, Tektronix, IDT, and plenty of other tech industry employees, seemingly all of whom run at least one home wireless network. Throughout our week-long testing period, there was never a time in which we detected fewer than ten competing WLANs with at least 60% signal strength, and those are just the ones we could see.

We used two notebooks for testing: an HP Compaq nc8000 as the server and a Dell Latitude E6400 as the client. Location 1 placed the client 10 feet away from the server—at the opposite end of the dining room table. Location 2 was straight across the ground floor, about 70 feet from the server with one wall separating the PCs. Location 3 moved the client upstairs, with multiple barriers and about a 50-foot separation.

We had several concerns to address in testing. We wanted firm numbers on sustained throughput for both TCP and UDP, so we started with a 1GB folder and measured transfer time both to and from the server notebook, which was Ethernet-connected to the router. We actually did this twice, first with the folder stocked with scores of various system and media files, just to reflect the extra overhead of a normal folder transfer, and then with the folder containing a single 1.00GB ZIP file. A 1GB folder is pretty big in wireless testing scenarios, but we felt the jumbo size was important to help average out anomalies from environmental fluctuations, such as a neighbor using a microwave oven. We converted the results into MB/s readings.
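For readers who want to reproduce the conversion from a timed transfer to an MB/s figure, here is a minimal sketch. It is not the routine we used. The function name `throughput_mb_per_s` is hypothetical, and the choice between binary megabytes (1,048,576 bytes) and decimal megabytes (1,000,000 bytes) is an assumption you should settle before comparing numbers.

```python
def throughput_mb_per_s(size_bytes: int, seconds: float, binary: bool = True) -> float:
    """Convert a timed transfer into an average MB/s figure.

    size_bytes: total payload moved (for example, the size of the test folder)
    seconds:    wall-clock duration of the copy
    binary:     assumption: True counts 1 MB as 1024**2 bytes,
                False counts 1 MB as 10**6 bytes
    """
    if seconds <= 0:
        raise ValueError("transfer time must be positive")
    bytes_per_mb = 1024 ** 2 if binary else 10 ** 6
    return size_bytes / bytes_per_mb / seconds


if __name__ == "__main__":
    # Illustrative numbers only, not measured results:
    # a 1 GiB payload copied in 128 seconds.
    rate = throughput_mb_per_s(1024 ** 3, 128.0)
    print(f"{rate:.2f} MB/s")
```

Because the result is an average over the whole copy, a short burst of interference during a long transfer shifts the figure far less than it would in a brief test, which is the reason for the large payload.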

Next, we swung in with Ixia’s IxChariot to examine both throughput and response time. Note the interesting difference between IxChariot’s shorter, more synthetic transfer rates and those obtained with our 1GB folder transfers.

Then we brought in Ruckus Wireless’s Zap command line benchmarking tool, seen on Tom’s Hardware in a couple of prior wireless articles, and used it to derive average throughput speeds for both TCP and UDP data. However, because some readers justifiably question our use of a Ruckus-made tool in testing Ruckus gear, we circled back with the Advanced Networking Test in PassMark’s PerformanceTest suite, a great benchmark collection that deserves more attention than it gets.


All routers and adapters were patched with the latest factory firmware and driver versions before testing. Similarly, all routers were configured for maximum channel width.

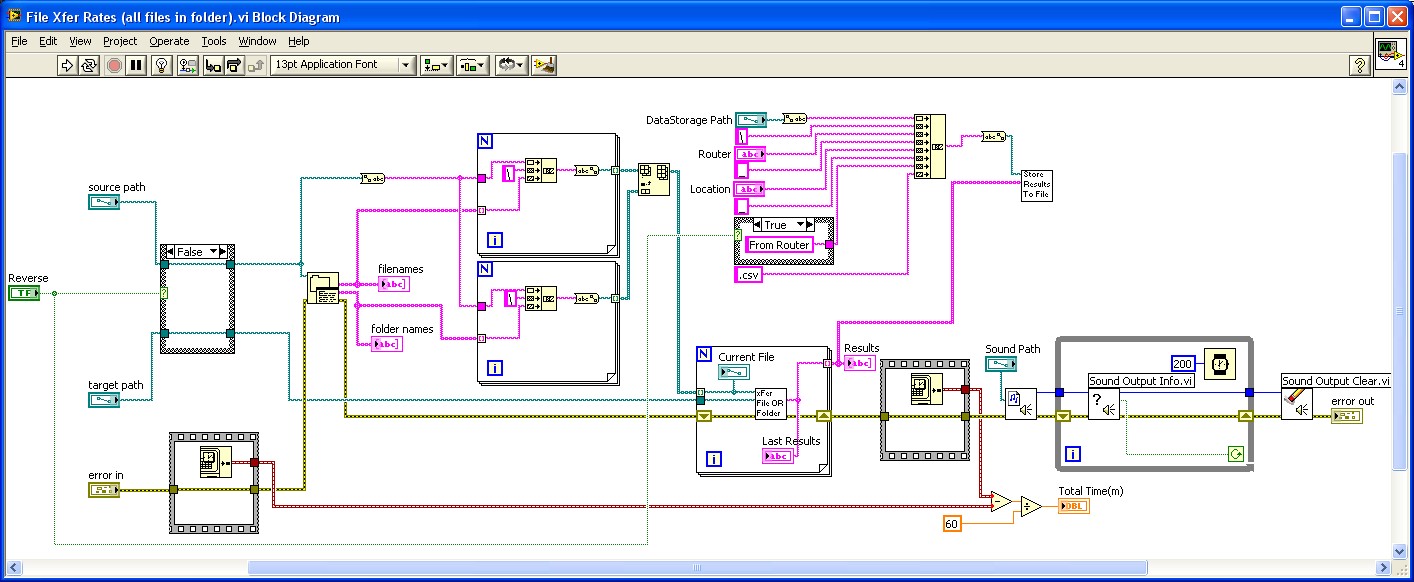

For curious programmers, we created a custom routine to automate the running of some of these benchmarks, since each location run-through took about four hours. Here’s a look at the diagram used to create our test set.

deividast I want one of those Linksys :) I use now WRT54G and it's doing its job, but it's a bit slow sometimes when transferring files from notebook to PC :)

vant I'm surprised the 610N won. Without testing, the general consensus is that Linksys sucks except for their WRT54s.

kevinq The testing is flawed in that there could be great variability in adapter performance, as admitted by the author. A true "router" comparison would use a common non-partial built-in Intel wifi link miniPCIe card to isolate router performance. Otherwise, too many variables are introduced. Besides, most ppl buy routers for routers, not in matching pairs since most ppl already own wifi laptops or adapters. Smallnetbuilders tested the Netgear WNDR3700 as one of the best performing routers on the market. Obviously this review unit is hampered by the Netgear adapter.

vant (replying to kevinq's comment above) Good point.

cag404 I just replaced my Linksys WRT600N with the Netgear WNDR3700. I have not used the WRT610 that is reviewed here, but I can say that the difference in routers is noticeable. The reason I replaced the router was that the WRT600N was dropping my port settings used to provide remote access to my home server, and I got tired of it. Wanted to try a different router so I went with the Netgear based on a favorable Maximum PC review. Glad I did. It has a snappier feel and I get a stronger signal throughout my two-floor house. The Netgear has not dropped my port settings for my home server yet. Also, I didn't like the fact that Linksys abandoned the WRT600N with no further firmware updates after about the first or second one.

pato Was the Linksys the V1 or V2 variant? Which firmware was installed on it? I have one (V1), but am very unhappy about the signal range! I have it replaced with a WNDR3700 and have now a twice as strong signal as before!

Would have been nice to see the WAN-LAN throughput/connections as well for wired connections, but I guess all people but me use wireless for everything nowadays...