AMD Radeon RX Vega 64 8GB Review

Board Layout & Components

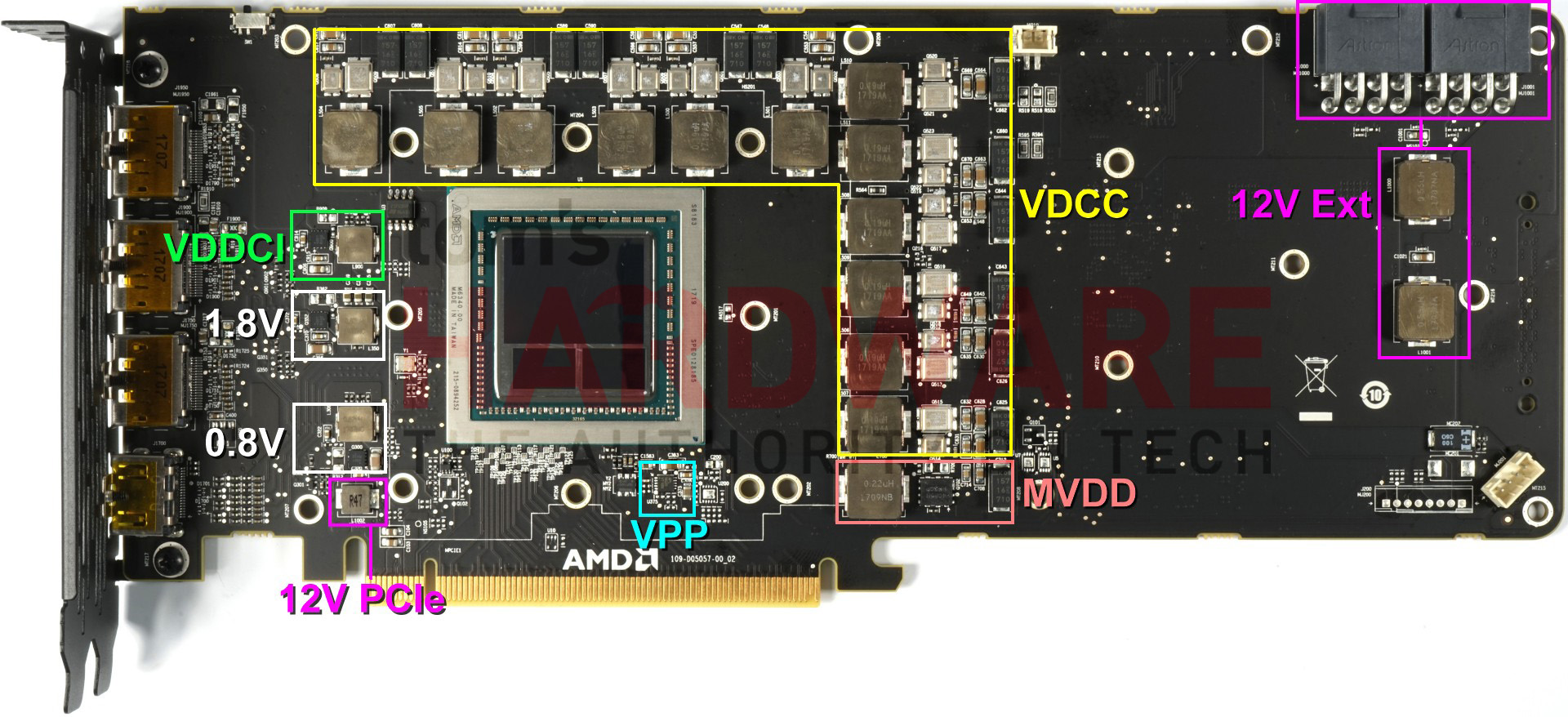

Board Layout

AMD’s RX Vega 64 and Vega Frontier Edition employ the exact same board and components. The only differences are the package soldered to the board, since RX Vega 64 gets half of the Frontier Edition’s memory, and adjusted firmware. The card is only this long to accommodate the cooler and its large radial fan; otherwise, it could easily be shortened to the size of a Radeon R9 Nano.

Each of the two eight-pin auxiliary power connectors has its own coil, which helps smooth out voltage peaks. Interestingly, though, there are no large capacitors to be seen.

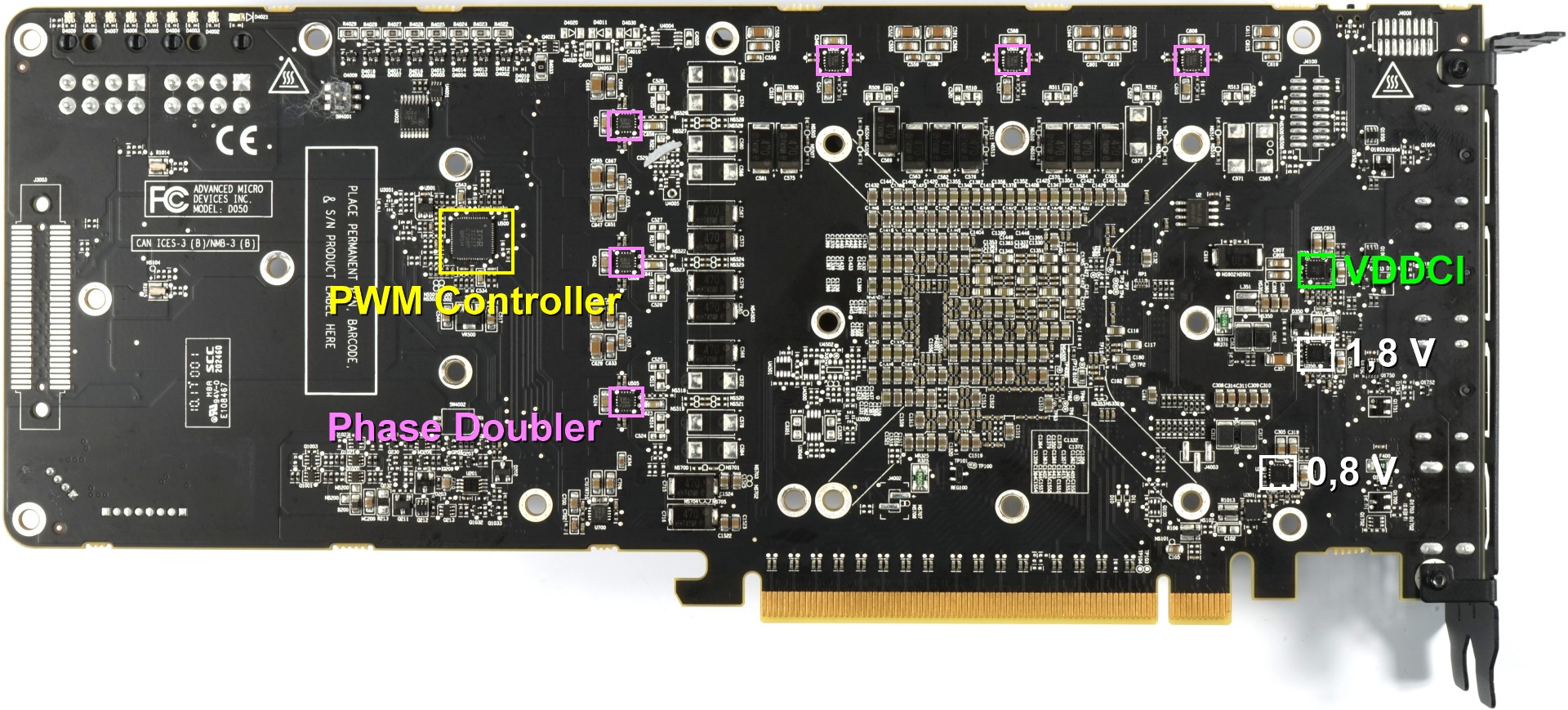

On the back of the board, we spy a densely packed area under the GPU/memory, a PWM controller, and several other surface-mounted components.

GPU Power Supply

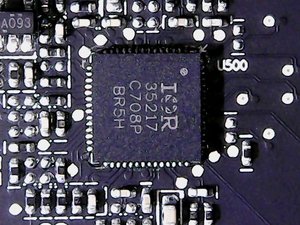

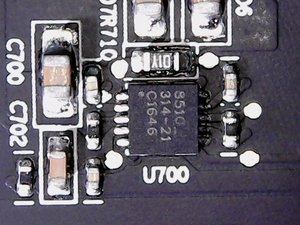

At the center of it all is International Rectifier's IR35217, a poorly documented dual-output, multi-phase controller that can provide six phases for the GPU plus two additional phases. A closer look, though, reveals 12 regulator circuits, not six. This is the result of phase doubling, which distributes the load from each phase between two regulator circuits.
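To make the doubling arithmetic concrete, here is a minimal Python sketch. It assumes the load divides evenly across all active circuits (real controllers balance current dynamically), and the 240 A total is a hypothetical figure chosen for round numbers, not a measurement from this card. The helper names are illustrative only.

```python
# Sketch of phase doubling: the controller drives six PWM phases, and each
# doubler splits one phase across two regulator circuits.
# The 240 A load below is a hypothetical figure, not a measured value.

CONTROLLER_PHASES = 6    # GPU phases provided by the controller
CIRCUITS_PER_PHASE = 2   # each doubler feeds two regulator circuits


def circuit_map(phases: int, per_phase: int) -> dict:
    """Map each controller phase to the indices of the circuits it feeds."""
    return {p: [p * per_phase + k for k in range(per_phase)] for p in range(phases)}


def current_per_circuit(total_amps: float, phases: int, per_phase: int) -> float:
    """Idealized even split of the load across every active circuit."""
    return total_amps / (phases * per_phase)


if __name__ == "__main__":
    print(circuit_map(CONTROLLER_PHASES, CIRCUITS_PER_PHASE))
    hypothetical_load = 240.0  # amps, assumed for illustration only
    # Without doubling, each of the six phases carries 40.0 A.
    print(current_per_circuit(hypothetical_load, CONTROLLER_PHASES, 1))
    # With doubling, each of the 12 circuits carries 20.0 A.
    print(current_per_circuit(hypothetical_load, CONTROLLER_PHASES, CIRCUITS_PER_PHASE))
```

Doubling does not add controller phases; it halves the current each set of MOSFETs and each choke must handle, which spreads both the electrical and thermal load across more components.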

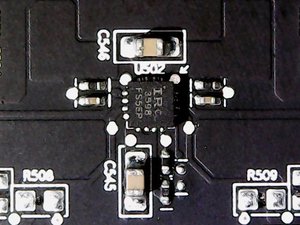

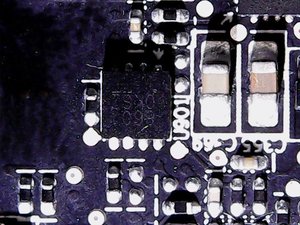

Six IR3598 interleaved MOSFET drivers on the back of the board, the components we pointed out earlier, are responsible for the doubling. A video we recorded with AMD's Frontier Edition card starts at idle and shows how the PWM controller switches the load back and forth between circuits. At idle, this keeps efficiency high by using only one phase, while also avoiding overloading any single circuit for prolonged periods of time.
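The IR35217's actual rotation scheme and timing aren't documented, so the sketch below simply assumes a round-robin: at light load, one circuit carries the current in each time slot, and the active circuit advances every slot. The function names and the time-slot abstraction are hypothetical; the point is only to show how rotating the load spreads conduction time evenly instead of parking it on one circuit.

```python
from collections import Counter

NUM_CIRCUITS = 12  # regulator circuits on the board


def active_circuits(slot: int, circuits: int = NUM_CIRCUITS, active: int = 1) -> list:
    """Circuits carrying current during one time slot (assumed round-robin).

    At light load only `active` circuits conduct; the starting index advances
    every slot, so the work rotates through all circuits.
    """
    start = (slot * active) % circuits
    return [(start + k) % circuits for k in range(active)]


def usage_over(slots: int, circuits: int = NUM_CIRCUITS, active: int = 1) -> Counter:
    """Count how many slots each circuit spent carrying the load."""
    counts = Counter()
    for s in range(slots):
        counts.update(active_circuits(s, circuits, active))
    return counts


if __name__ == "__main__":
    print(usage_over(120))  # each circuit is active in 10 of the 120 slots
```

A fixed single-phase scheme would instead put all 120 slots on one circuit; rotating keeps the efficiency benefit of running one phase while sharing the wear and heat.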

The actual voltage conversion in each of the 12 regulator circuits is handled by an IRF6811 on the high side and an IRF6894 on the low side; the latter also integrates the necessary Schottky diode. Both are International Rectifier HEXFETs that we've seen AMD use before.

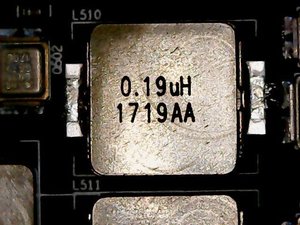

For the coils, AMD went with encapsulated ferrite-core chokes soldered to the front of the board. At 190nH, their inductance is a bit lower than the 220nH we often see.
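Why does a slightly lower inductance matter? In an ideal buck converter, peak-to-peak ripple current is inversely proportional to inductance. The sketch below applies the standard formula; the 12 V input, 1.2 V output, and 300 kHz switching frequency are assumptions for illustration, since the card's operating point isn't specified here.

```python
def buck_ripple_current(v_in: float, v_out: float, inductance_h: float, f_sw_hz: float) -> float:
    """Peak-to-peak inductor ripple current of an ideal buck converter.

    dI = (V_in - V_out) * D / (L * f_sw), with duty cycle D = V_out / V_in.
    """
    duty = v_out / v_in
    return (v_in - v_out) * duty / (inductance_h * f_sw_hz)


# Assumed operating point, for illustration only: 12 V input, 1.2 V core
# voltage, 300 kHz switching frequency per circuit.
V_IN, V_OUT, F_SW = 12.0, 1.2, 300e3

ripple_190 = buck_ripple_current(V_IN, V_OUT, 190e-9, F_SW)
ripple_220 = buck_ripple_current(V_IN, V_OUT, 220e-9, F_SW)
print(f"190 nH: {ripple_190:.1f} A p-p, 220 nH: {ripple_220:.1f} A p-p")
```

Because ripple scales with 1/L, the 190nH chokes see roughly 16% more ripple than 220nH parts would (220/190 ≈ 1.16) at any fixed voltages and frequency, independent of the assumed numbers.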

Memory Power Supply

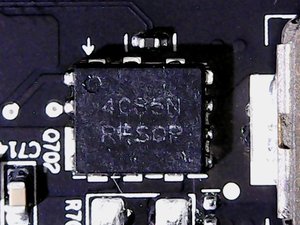

The memory's single phase is also supplied by the IR35217. One phase is plenty, since on-package HBM2 needs a lot less power. A CHL815 gate driver sits on the back of the board. For the voltage converters, AMD went with ON Semiconductor's NTMFD4C85N, a dual N-channel MOSFET that handles both the high and low sides.

Interestingly, AMD went with flat SMD capacitors instead of can caps. Their somewhat lower capacitance is compensated for by simply running two of them in parallel on the back of the board. The choice does make sense, since it spreads out the hot spots and makes the thermal solution's job a little easier. Waste heat is kept to a minimum, as is the cost associated with cooling.
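The capacitor arrangement follows two textbook rules: capacitances in parallel add, and identical parts share ripple current, so each dissipates only a fraction of the I²R heat. A minimal Python sketch, with hypothetical values, since the capacitors' ratings aren't given:

```python
def parallel_bank(capacitance_uf: list, esr_ohm: list) -> tuple:
    """Total capacitance and ESR of capacitors wired in parallel."""
    total_c = sum(capacitance_uf)                    # capacitances add
    total_esr = 1.0 / sum(1.0 / r for r in esr_ohm)  # ESRs combine like parallel resistors
    return total_c, total_esr


def loss_per_cap(ripple_a: float, esr_ohm: float, n_caps: int) -> float:
    """I^2*R heating in each of n identical caps sharing the ripple current equally."""
    return (ripple_a / n_caps) ** 2 * esr_ohm


if __name__ == "__main__":
    # Hypothetical parts: two 100 uF caps with 10 mOhm ESR each, 2 A ripple.
    print(parallel_bank([100, 100], [0.01, 0.01]))
    print(loss_per_cap(2.0, 0.01, 1), loss_per_cap(2.0, 0.01, 2))
```

With two identical caps, each carries half the ripple current and so dissipates a quarter of what a single cap would; the total loss is halved because the bank's effective ESR is halved.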

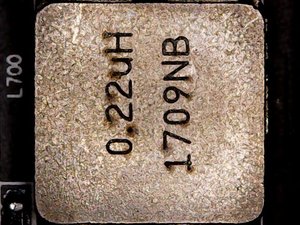

At 220nH, the coils are a bit larger this time around. The ones corresponding to the "partial voltage" converters, which operate at a much lower frequency, are even larger at 820nH. They don’t have to deal with the same amount of power, though.
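The larger coils on the lower-frequency converters follow from the buck-converter ripple relation solved for inductance: for a given ripple target, the required L is inversely proportional to switching frequency. The voltages, ripple target, and both frequencies in this sketch are assumptions, not figures from the card.

```python
def required_inductance(v_in: float, v_out: float, ripple_a: float, f_sw_hz: float) -> float:
    """Inductance an ideal buck converter needs for a given peak-to-peak ripple.

    L = (V_in - V_out) * D / (dI * f_sw), with duty cycle D = V_out / V_in.
    """
    duty = v_out / v_in
    return (v_in - v_out) * duty / (ripple_a * f_sw_hz)


# Assumed, illustrative operating point: 12 V in, 1.8 V out, 4 A ripple target.
for f_sw in (1e6, 250e3):  # hypothetical "fast" and "slow" switching frequencies
    l_nh = required_inductance(12.0, 1.8, 4.0, f_sw) * 1e9
    print(f"{f_sw / 1e3:.0f} kHz -> {l_nh:.0f} nH")
```

Cutting the frequency to a quarter quadruples the inductance needed for the same ripple, which is consistent with the slower converters using the larger 820nH coils.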

Other Voltage Converters

Generating VDDCI isn’t a very difficult task, but it's an important one, because this voltage regulates the transition between the GPU's internal signal levels and those of the memory. It’s essentially the I/O bus voltage between the GPU and the memory. To that end, two constant sources of 1.8V and 0.8V are supplied.

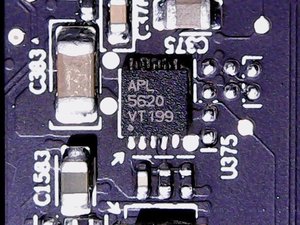

Underneath the GPU, there’s an Anpec APL5620 low-dropout (LDO) linear regulator, which provides the very low voltage for the phase-locked loop (PLL) area.

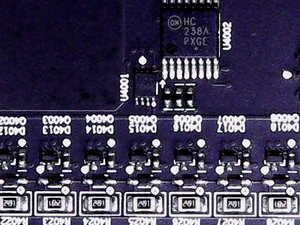

ON Semiconductor's MC74HC238A demultiplexer drives the LED bar that shows the power supply’s load. It’s a fun gimmick, but its brightness gets annoying in a dark room at night.
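A 74HC238 is a 3-to-8 line decoder/demultiplexer: with its enable inputs asserted (one active-high, two active-low), it drives exactly one of eight outputs high, selected by a 3-bit address. Lighting a bar of several LEDs from one such part therefore implies some form of time-multiplexing. How AMD's firmware actually drives the bar isn't covered here, so the following Python model is hypothetical: the eight-LED bar, the load-to-length mapping, and the scanning scheme are all assumptions.

```python
def decoder_3to8(address: int, en_high: int = 1, en_low_a: int = 0, en_low_b: int = 0) -> list:
    """Model of a 74HC238-style 3-to-8 decoder with active-high outputs.

    When enabled, exactly one output, selected by the 3-bit address, is high;
    otherwise all outputs are low.
    """
    if not (en_high == 1 and en_low_a == 0 and en_low_b == 0):
        return [0] * 8
    return [1 if i == (address & 0b111) else 0 for i in range(8)]


def leds_for_load(load: float, leds: int = 8) -> int:
    """Hypothetical mapping from a 0..1 load fraction to a bar length."""
    load = min(max(load, 0.0), 1.0)
    return int(load * leds + 0.5)  # round half up


def scan_frame(load: float) -> list:
    """One refresh of an assumed multiplexed bar: step through the addresses
    of the LEDs that should be lit, one decoder output per step."""
    return [decoder_3to8(addr) for addr in range(leds_for_load(load))]


def perceived_bar(load: float) -> list:
    """LEDs that appear lit once a full frame has been scanned quickly."""
    lit = [0] * 8
    for outputs in scan_frame(load):
        lit = [a | b for a, b in zip(lit, outputs)]
    return lit


if __name__ == "__main__":
    print(perceived_bar(0.5))
```

Scanned fast enough, persistence of vision makes the stepped single outputs look like a continuous bar.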