AMD Radeon Vega RX 64 8GB Review


Vega Architecture & HBM2

Although we've detailed Vega's architecture before, it's worthwhile to provide it here again as a refresher. Vega represents a new GPU generation for AMD, with a reported 200+ changes and improvements separating it from the GCN implementation that came before.

HBM2: A Scalable Memory Architecture

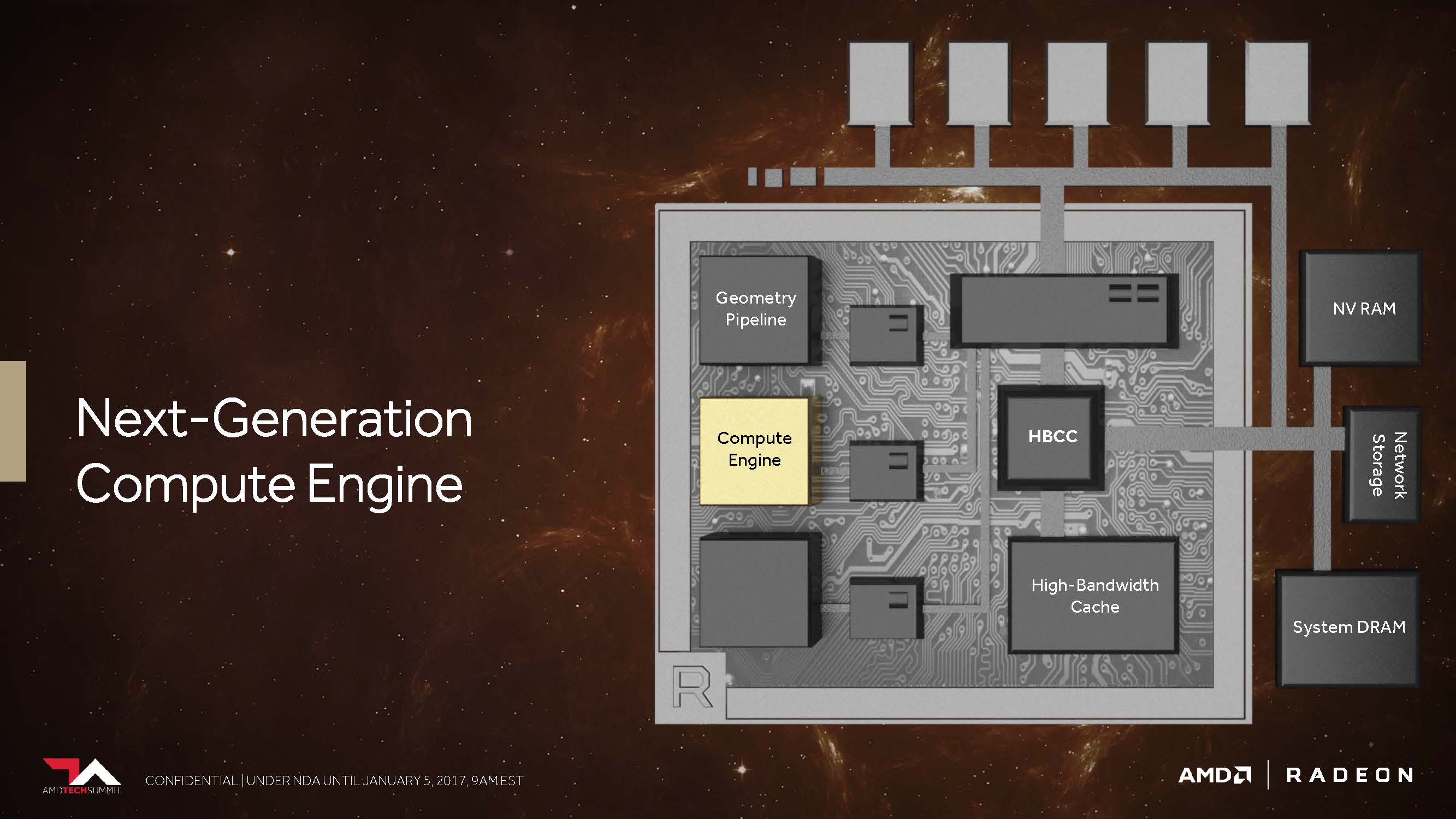

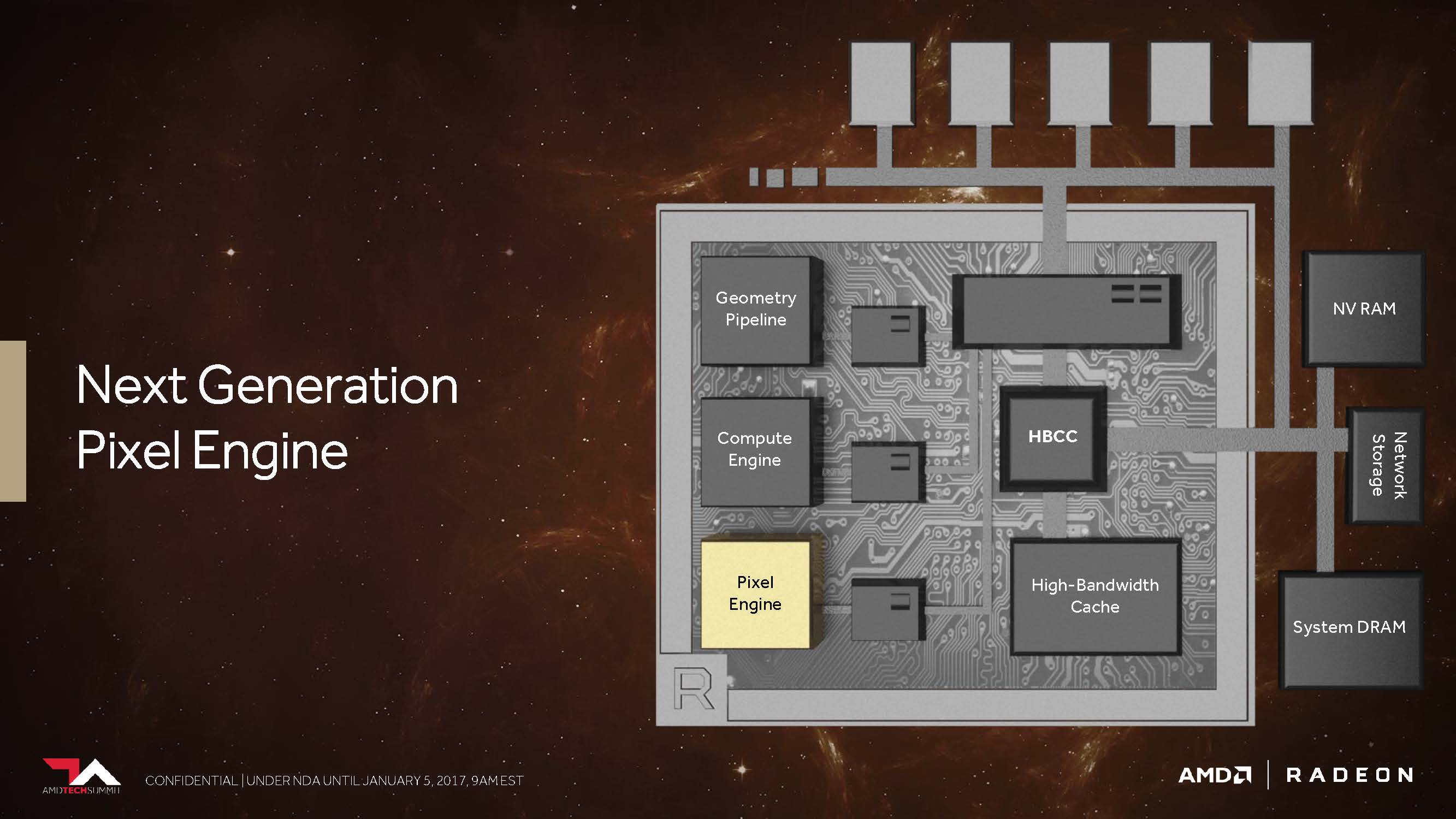

Both AMD and Nvidia are working on ways to reduce host processor overhead, maximize throughput to feed the GPU, and circumvent existing bottlenecks—particularly those that surface in the face of large datasets. Getting more capacity closer to the GPU in a fairly cost-effective manner seemed to be the Radeon Pro SSG’s purpose. And Vega appears to take this mission a step further with a more flexible memory hierarchy.

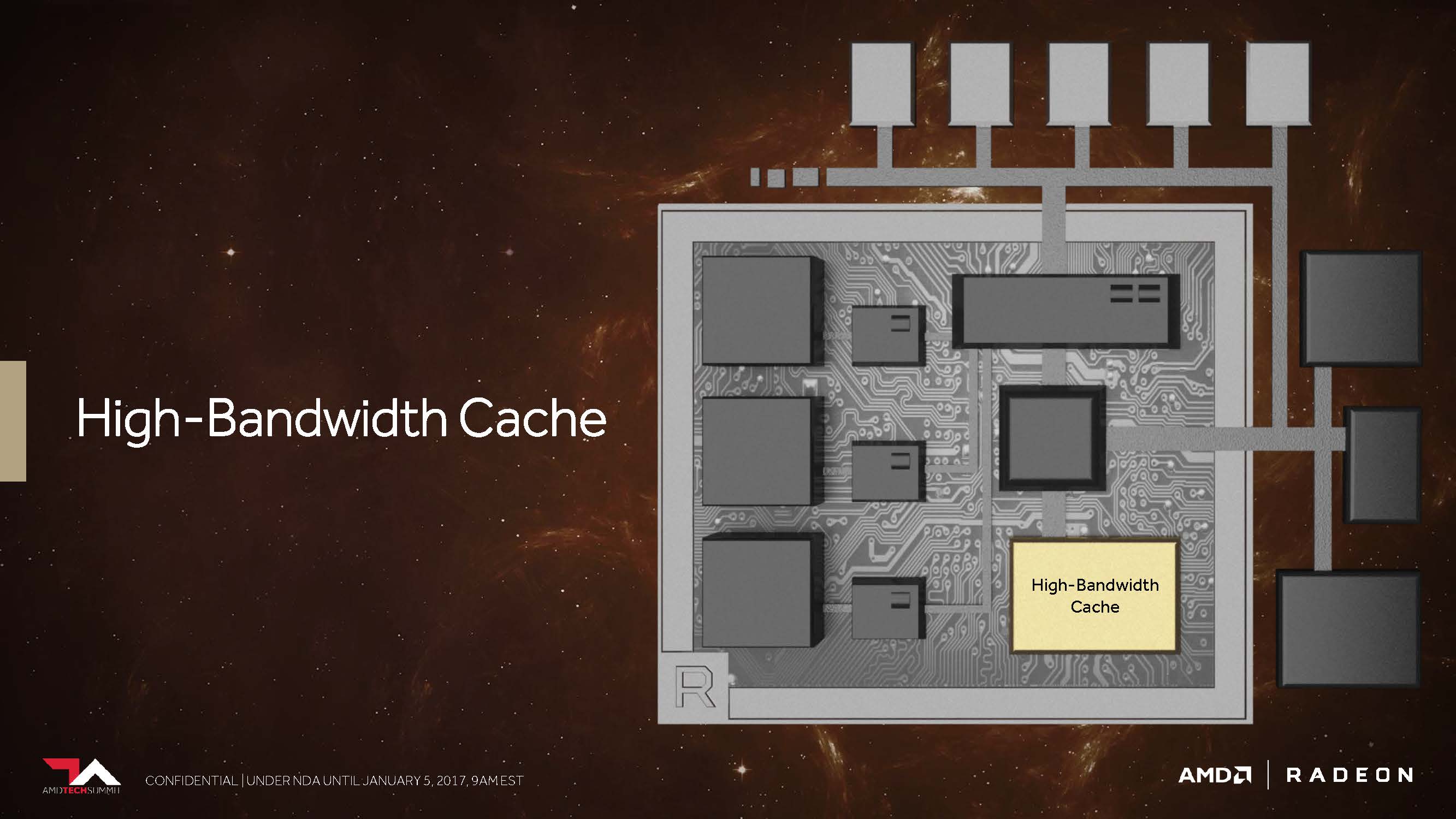

Vega makes use of HBM2, of course, which AMD officially introduced more than six months ago. At the time, we also discovered that the company calls this pool of on-package memory—previously the frame buffer—a high-bandwidth cache. HBM2 equals high-bandwidth cache in AMD parlance. Got it?

According to Joe Macri, corporate fellow and product CTO, the vision for HBM was to have it be the highest-performance memory closest to the GPU. However, he also wanted system memory and storage available to the graphics processor, as well. In the context of this broader memory hierarchy, sure, it’s logical to envision HBM2 as a high-bandwidth cache relative to slower technologies. But for the sake of disambiguation, we’re going to continue calling HBM2 what it is.

After all, HBM2 represents a significant step forward already. An up-to-8x capacity increase per vertical stack, compared to first-gen HBM, addresses questions enthusiasts raised about Radeon R9 Fury X’s longevity. Further, a doubling of bandwidth per pin significantly increases potential throughput.
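To put the per-pin doubling in numbers, here is a minimal sketch of the peak-bandwidth arithmetic for a single stack. The 1024-bit stack interface and the nominal 1 Gb/s (first-gen HBM) and 2 Gb/s (HBM2) pin rates are assumptions taken from the memory standards rather than from this page, and shipping cards may run their memory below the nominal peak.

```python
def stack_bandwidth_gbs(pin_rate_gbps: float, bus_width_bits: int = 1024) -> float:
    """Peak bandwidth of one HBM stack in GB/s.

    pins * (Gb/s per pin) gives Gb/s for the stack; dividing by 8 converts to GB/s.
    The 1024-bit default is an assumed per-stack interface width.
    """
    return pin_rate_gbps * bus_width_bits / 8


hbm1 = stack_bandwidth_gbs(1.0)  # assumed nominal first-gen HBM pin rate
hbm2 = stack_bandwidth_gbs(2.0)  # assumed nominal HBM2 peak pin rate

print(f"HBM1 stack: {hbm1:.0f} GB/s")
print(f"HBM2 stack: {hbm2:.0f} GB/s")
print(f"Ratio: {hbm2 / hbm1:.1f}x")
```

Because each stack carries twice the bandwidth, a card can reach similar aggregate throughput with half as many stacks.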

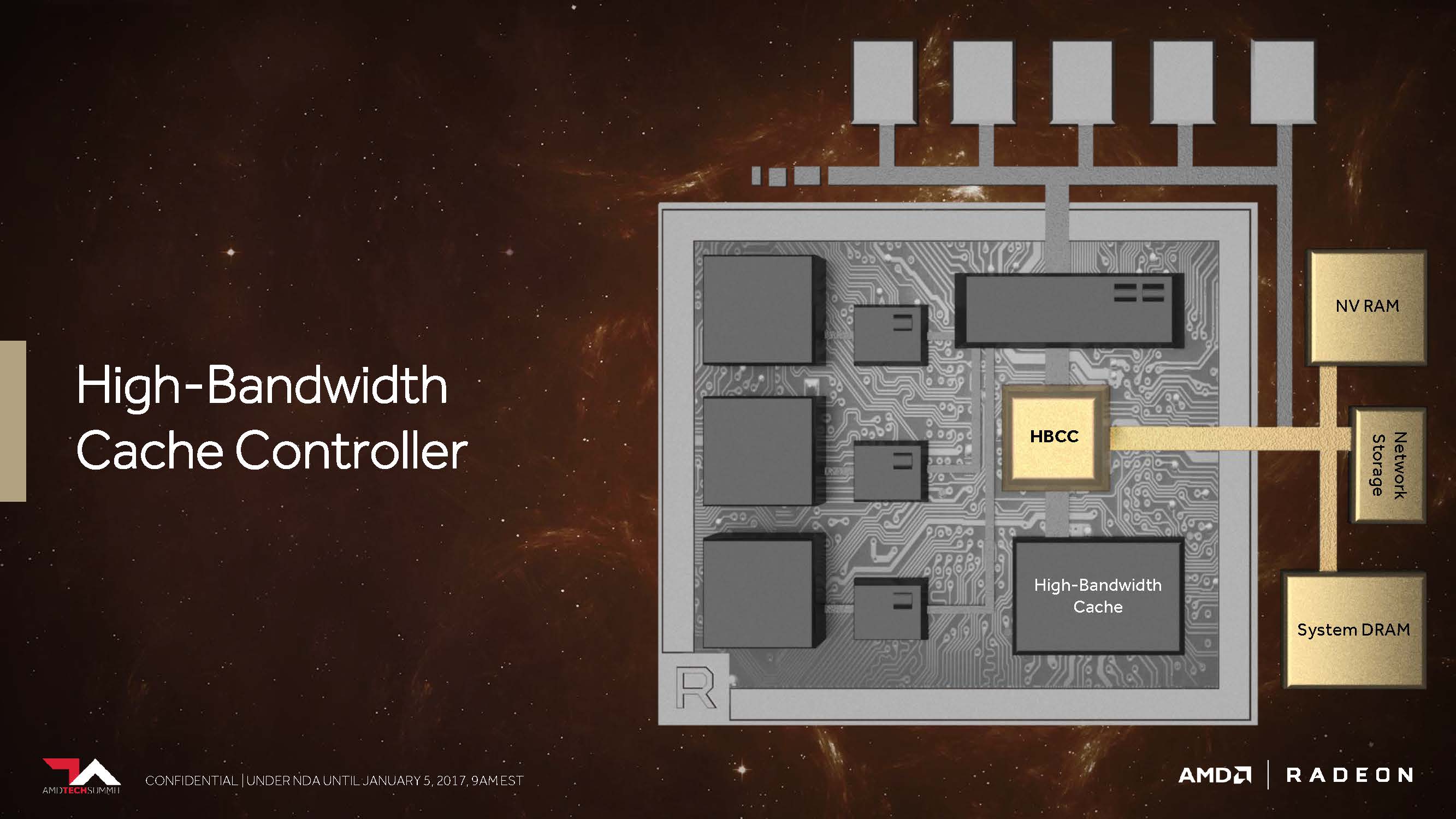

That’s the change we expect to have the largest impact on gamers as far as Vega's memory subsystem goes. However, AMD also gives the high-bandwidth cache controller (no longer just the memory controller) access to a massive 512TB virtual address space for large datasets.
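As a quick sanity check on the scale involved, the sketch below converts the 512TB figure into address bits and compares it with Vega 64's 8GB of on-package HBM2. It assumes binary units (1TB = 2^40 bytes), which the text does not spell out.

```python
TIB = 2**40  # assuming binary terabytes
GIB = 2**30  # assuming binary gigabytes

virtual_space = 512 * TIB
local_hbm2 = 8 * GIB

# A power-of-two address space of 2**n bytes needs n address bits.
address_bits = virtual_space.bit_length() - 1

print(address_bits)                 # 49
print(virtual_space // local_hbm2)  # 65536
```

In other words, the addressable space is 65,536 times larger than the local memory pool, which is why the controller has to treat HBM2 as a cache rather than the whole store.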

When asked about how the Vega architecture's broader memory hierarchy might be utilized, AMD suggested that Vega can move memory pages in fine-grained fashion using multiple, programmable techniques. It can receive a request to bring in data and then retrieve it through a DMA transfer while the GPU switches to another thread and continues work without stalling. The controller can go get data on demand but also bring it back in predictively. Information in the HBM can be replicated in system memory like an inclusive cache, or the HBCC can maintain just one copy to save space. All of this is managed in hardware, so it should be quick and low-overhead.
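The behavior AMD describes can be illustrated with a toy software model: a small local pool that pulls pages in on demand and either keeps a duplicate in system memory (inclusive) or holds the only copy (exclusive). Everything here is our own assumption for illustration, including the class name, the least-recently-used eviction policy, and the page counts. AMD did not disclose the HBCC's actual policies, and the predictive fetching it mentions is not modeled.

```python
from collections import OrderedDict


class ToyPageCache:
    """Toy model of local memory used as a page cache in front of system memory."""

    def __init__(self, local_pages: int, inclusive: bool):
        self.local_pages = local_pages
        self.inclusive = inclusive
        self.local = OrderedDict()  # page id -> None, oldest use first
        self.system = set()         # pages currently held in system memory
        self.migrations = 0         # pages brought into local memory on a miss

    def populate(self, pages):
        """Start with the whole dataset resident in system memory."""
        self.system = set(pages)

    def access(self, page: int) -> bool:
        """Touch a page. Returns True on a local hit, False if it was migrated in."""
        if page in self.local:
            self.local.move_to_end(page)  # mark as most recently used
            return True
        if len(self.local) >= self.local_pages:
            victim, _ = self.local.popitem(last=False)  # evict least recently used
            self.system.add(victim)  # write back (already present if inclusive)
        self.local[page] = None
        self.migrations += 1
        if not self.inclusive:
            self.system.discard(page)  # exclusive mode keeps a single copy
        return False

    def pages_stored(self) -> int:
        """Total page copies held across both pools."""
        return len(self.local) + len(self.system)


def run(inclusive: bool):
    cache = ToyPageCache(local_pages=3, inclusive=inclusive)
    cache.populate(range(5))
    trace = [0, 1, 2, 0, 3, 0, 4, 1]
    hits = sum(cache.access(p) for p in trace)
    return hits, cache.migrations, cache.pages_stored()


print("inclusive:", run(True))   # (2, 6, 8)
print("exclusive:", run(False))  # (2, 6, 5)
```

Both modes see the same hits and migrations on this trace. The difference is footprint: the inclusive variant stores eight page copies for a five-page dataset, while the exclusive variant stores five.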


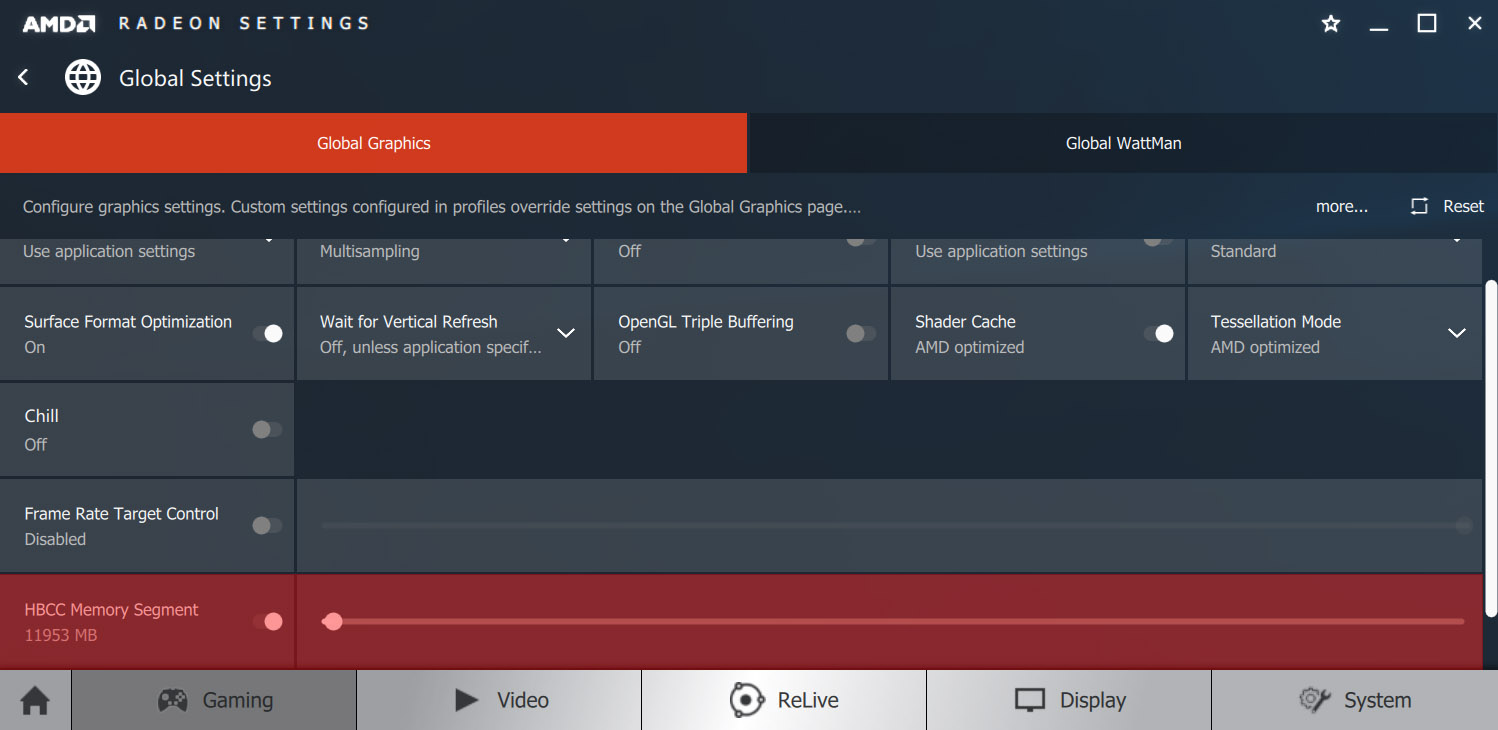

As it pertains to Radeon RX Vega 64, AMD exposes an option in its driver called HBCC Memory Segment to allocate system memory to Vega's cache controller. The corresponding slider determines how much memory gets set aside. Per AMD, once the HBCC is operating, it'll monitor the utilization of bits in local GPU memory and, if needed, move unused information to the slower system memory space, effectively increasing the capacity available to the GPU. Given Vega 64's 8GB of HBM2, this option is fairly forward-looking; there aren't many games that need more. However, AMD has shown off content creation workloads that truly need access to additional memory. Of course, the one recommendation is to have plenty of system RAM available before using the HBCC. If you have 16GB installed, you don't want to lose 4GB or 8GB to the HBCC.

AMD suggested that Unigine Heaven might reflect some influence from enabling the HBCC memory segment, so we ran the benchmark at 4K using 8x anti-aliasing and Ultra quality. With the HBCC disabled, we measured 25.7 FPS. Allocating an extra 4GB of DDR4 at 3200 MT/s nudged that result up to 26.9 FPS.
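For context, the two Heaven results work out as follows. This is plain arithmetic on the numbers above; the frame-time conversion simply assumes an average of 1000 ms divided by FPS.

```python
fps_hbcc_off = 25.7
fps_hbcc_on = 26.9

gain_pct = (fps_hbcc_on - fps_hbcc_off) / fps_hbcc_off * 100
frame_ms_off = 1000 / fps_hbcc_off  # average time per frame, in milliseconds
frame_ms_on = 1000 / fps_hbcc_on

print(f"Gain: {gain_pct:.1f}%")  # Gain: 4.7%
print(f"Frame time: {frame_ms_off:.1f} ms -> {frame_ms_on:.1f} ms")
```

That is a gain of a little under 5%, from a single run of one benchmark.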

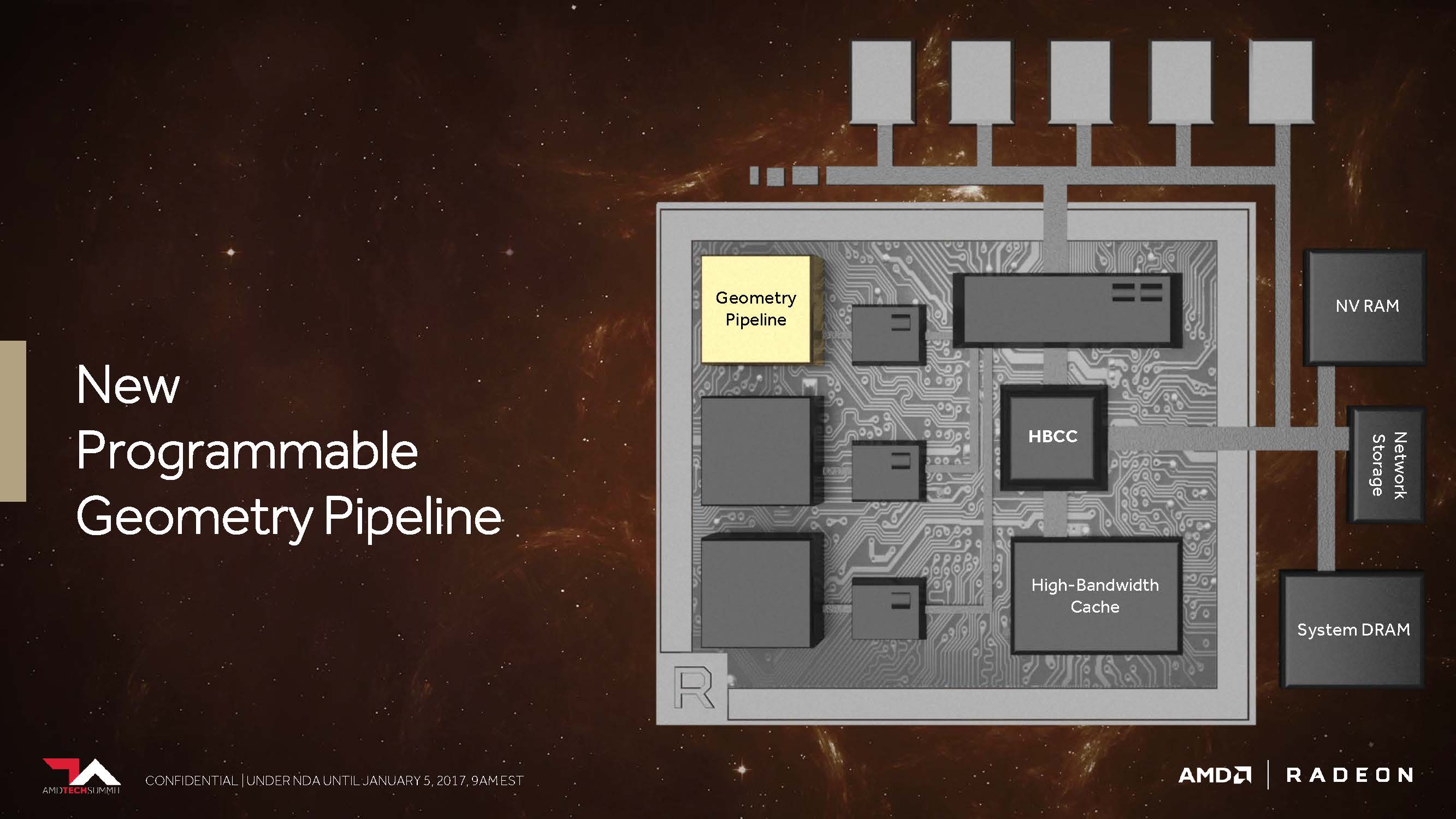

New Programmable Geometry Pipeline

The Hawaii GPU (Radeon R9 290X) incorporated some notable improvements over Tahiti (Radeon HD 7970), one of which was a more robust front end with four geometry engines instead of two. The more recent Fiji GPU (Radeon R9 Fury X) maintained that same four-way Shader Engine configuration. However, because it also rolled in goodness from AMD’s third-gen GCN architecture, there were some gains in tessellation throughput, as well. More recently, the Ellesmere GPU (Radeon RX 480/580) implemented a handful of techniques for getting more from a four-engine arrangement, including a filtering algorithm/primitive discard accelerator.

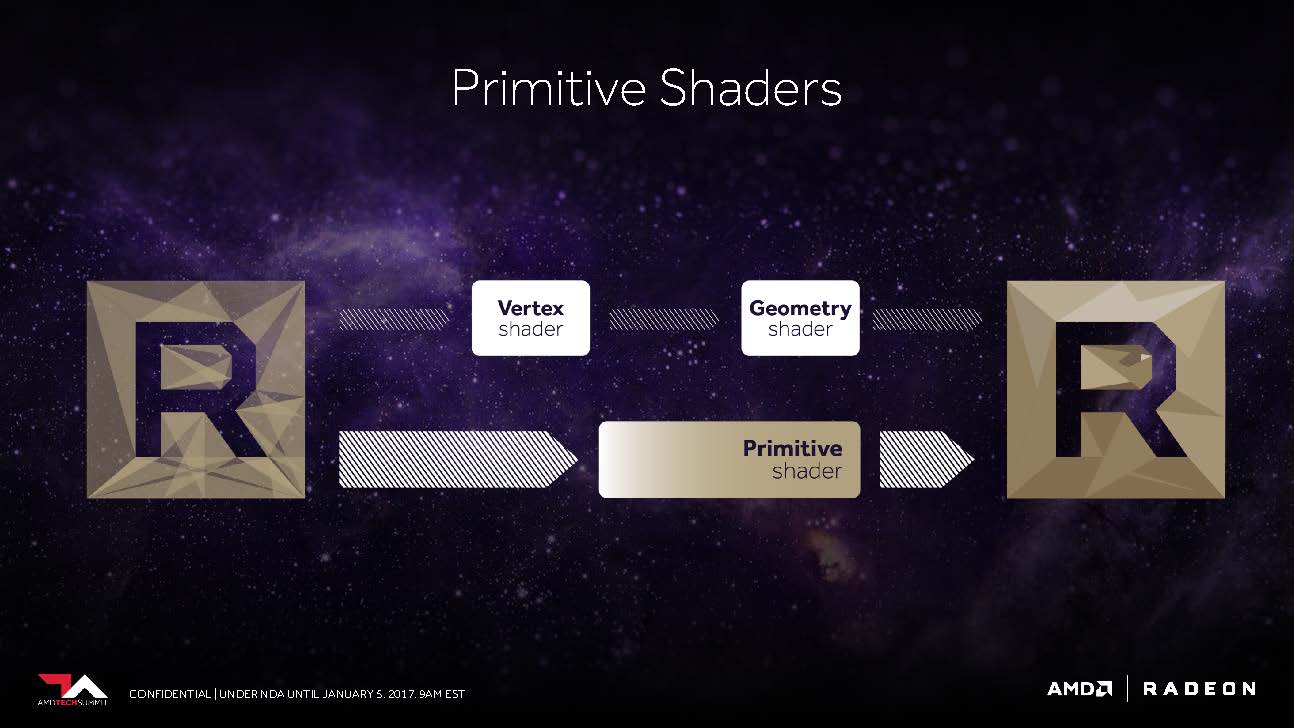

AMD promised us last year that Vega’s peak geometry throughput would be 11 polygons per clock, up from the preceding generations' four, yielding up to a 2.75x boost. That specification came from adding a new primitive shader stage to the geometry pipeline. Instead of using the fixed-function hardware, this primitive shader uses the shader array for its work.

More recently, AMD published a Vega architecture whitepaper that revised the geometry pipeline's peak rate to more than 17 primitives per clock.
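The ratios behind those claims are easy to reproduce. In the sketch below, the four-per-clock baseline and the 11 and 17 figures come from the text; the 1500 MHz clock passed to the throughput helper is a hypothetical round number for illustration, not a specification of any shipping card.

```python
LEGACY_PRIMS_PER_CLOCK = 4  # preceding generations, per the text


def geometry_speedup(prims_per_clock: float) -> float:
    """Peak geometry rate relative to the four-per-clock baseline."""
    return prims_per_clock / LEGACY_PRIMS_PER_CLOCK


def peak_prims_per_second(prims_per_clock: float, clock_mhz: float) -> float:
    """Theoretical peak primitives per second at a given core clock."""
    return prims_per_clock * clock_mhz * 1e6


print(geometry_speedup(11))  # 2.75
print(geometry_speedup(17))  # 4.25

# Hypothetical 1500 MHz clock, for illustration only.
print(peak_prims_per_second(17, 1500) / 1e9, "billion primitives/s")
```

Since the whitepaper says "more than 17," 4.25x should be read as a lower bound on the claimed peak, and both figures depend on software actually using the primitive shader path.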

AMD describes the primitive shader as having access similar to a compute shader's for processing geometry: it’s lightweight and programmable, with the ability to discard primitives at a high rate. Its functionality includes a lot of what the DirectX vertex, hull, domain, and geometry shader stages can do, but it is more flexible about the context it carries and the order in which work is completed.

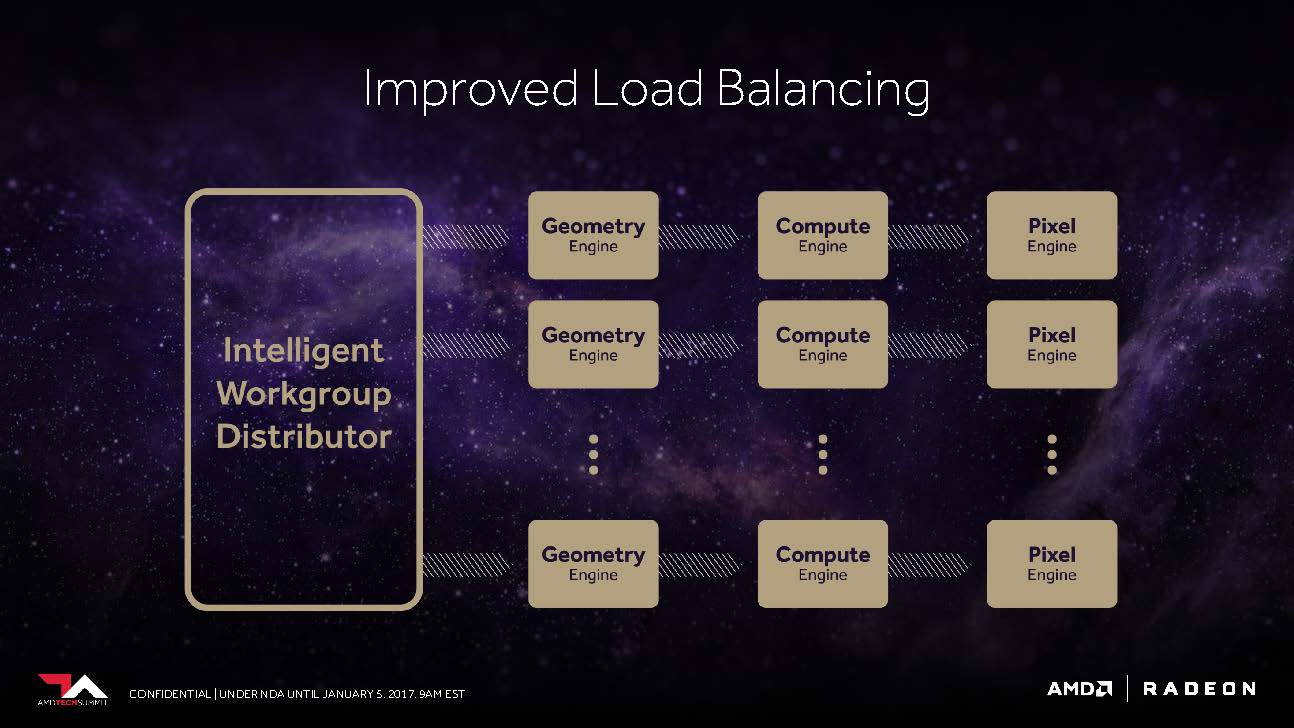

The front-end also benefits from an improved workgroup distributor, responsible for load balancing across programmable hardware. AMD says this comes from its collaboration with efficiency-sensitive console developers, and that effort will now benefit PC gamers, as well.

Vega’s Next-Generation Compute Unit (NCU)

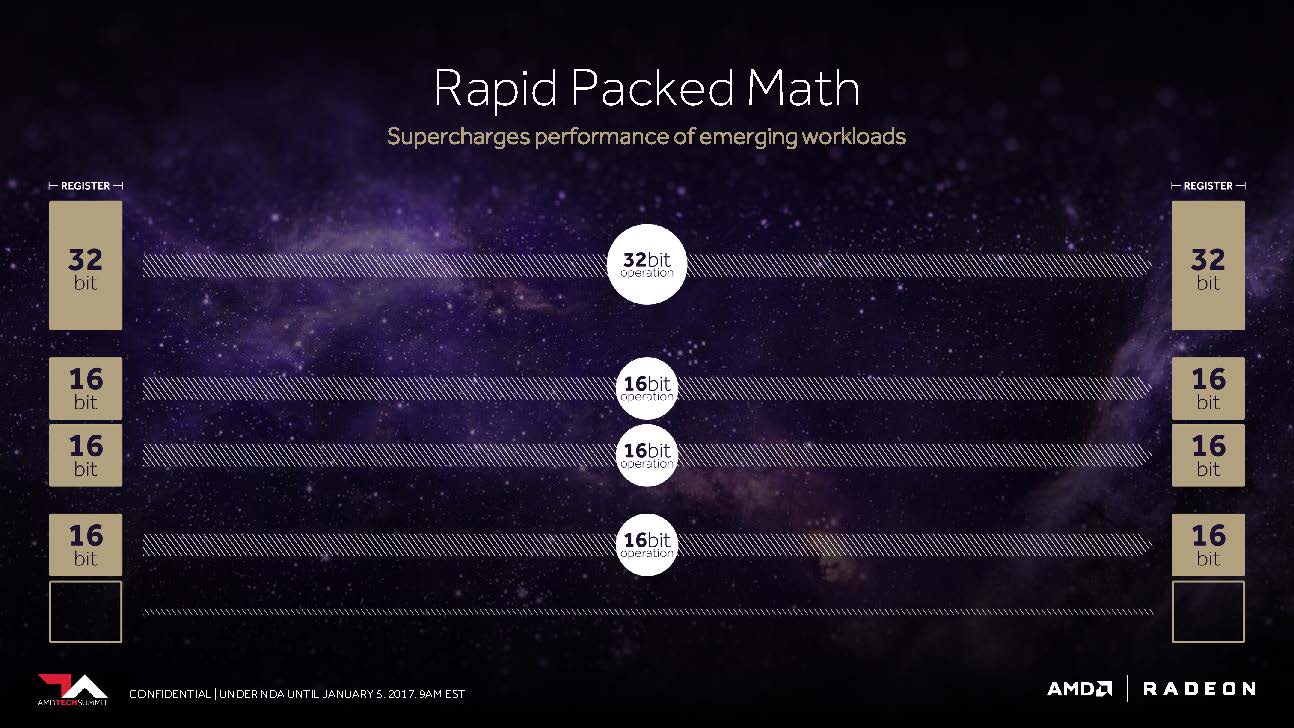

Using its many Pascal-based GPUs, Nvidia is surgical about segmentation. The largest and most expensive GP100 processor offers a peak FP32 rate of 10.6 TFLOPS (if you use the peak GPU Boost frequency). A 1:2 ratio of FP64 cores yields a double-precision rate of 5.3 TFLOPS, and support for half-precision compute/storage enables up to 21.2 TFLOPS. The more consumer-oriented GP102 and GP104 processors naturally offer full-performance FP32 but deliberately handicap FP64 and FP16 rates so you can’t get away with using cheaper cards for scientific or training datasets.
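Those three GP100 numbers follow from one formula. The sketch below assumes 3584 FP32 cores and a 1480 MHz peak GPU Boost clock, figures from Nvidia's published Tesla P100 specifications rather than from this page, and counts a fused multiply-add as two operations.

```python
def peak_tflops(cores: int, clock_mhz: float, ops_per_core_per_clock: int = 2) -> float:
    """Theoretical peak throughput in TFLOPS (one FMA counted as two operations)."""
    return cores * ops_per_core_per_clock * clock_mhz * 1e6 / 1e12


# Assumed inputs: 3584 FP32 cores at a 1480 MHz boost clock.
fp32 = peak_tflops(3584, 1480)
fp64 = fp32 / 2  # 1:2 double-precision ratio
fp16 = fp32 * 2  # 2:1 half-precision ratio

print(f"FP32 {fp32:.1f}  FP64 {fp64:.1f}  FP16 {fp16:.1f} TFLOPS")
# FP32 10.6  FP64 5.3  FP16 21.2 TFLOPS
```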

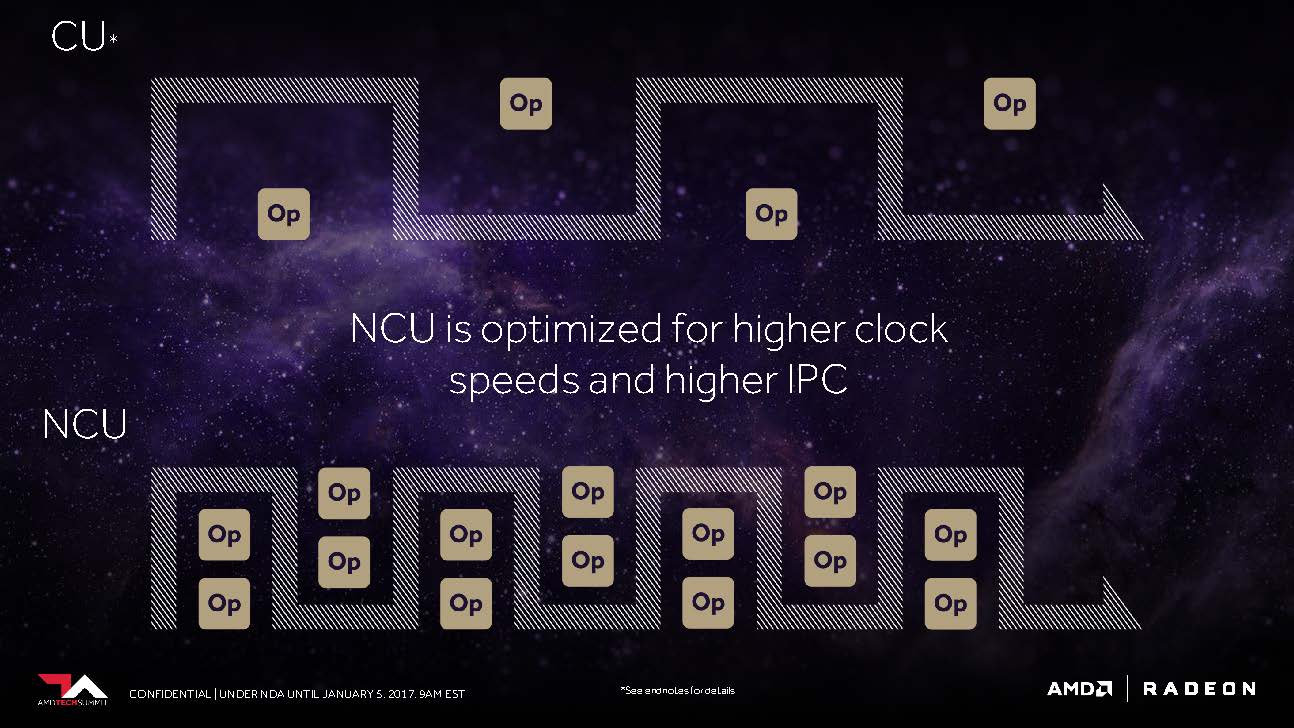

Conversely, AMD looks like it’s trying to give more to everyone. The Compute Unit building block, with 64 IEEE 754-2008-compliant shaders, persists, only now it’s being called an NCU, or Next-Generation Compute Unit, reflecting support for new data types. Of course, with 64 shaders and a peak of two floating-point operations/cycle, you end up with a maximum of 128 32-bit ops per clock. Using packed FP16 math, that number turns into 256 16-bit ops per clock. AMD even claimed it can do up to 512 eight-bit ops per clock. Of course, leveraging this functionality requires developer support, so it’s not going to manifest as a clear benefit at launch.
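The per-NCU figures are simple multiples of one another, as this sketch shows. The chip-level helper goes one step beyond the text: the 64-NCU count and 1546 MHz boost clock used in the example are assumptions drawn from AMD's published Radeon RX Vega 64 specifications, and the results are theoretical peaks rather than measured throughput.

```python
SHADERS_PER_NCU = 64
OPS_PER_SHADER_PER_CLOCK = 2  # one fused multiply-add counts as two operations


def ops_per_clock(pack_factor: int) -> int:
    """Operations per clock for one NCU; pack_factor is values packed per 32-bit lane."""
    return SHADERS_PER_NCU * OPS_PER_SHADER_PER_CLOCK * pack_factor


def chip_tflops(ncus: int, clock_mhz: float, pack_factor: int = 1) -> float:
    """Theoretical peak for a whole GPU, in trillions of operations per second."""
    return ncus * ops_per_clock(pack_factor) * clock_mhz * 1e6 / 1e12


print(ops_per_clock(1))  # 128 (32-bit)
print(ops_per_clock(2))  # 256 (packed 16-bit)
print(ops_per_clock(4))  # 512 (8-bit)

# Assumed inputs: 64 NCUs at a 1546 MHz boost clock.
print(f"{chip_tflops(64, 1546):.1f} TFLOPS FP32")
print(f"{chip_tflops(64, 1546, pack_factor=2):.1f} TFLOPS packed FP16")
```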

Double-precision is a different animal—AMD doesn’t seem to have a problem admitting it sets FP64 rates based on target market, and we confirmed that Vega 10’s FP64 rate is 1:16 of its single-precision specification. This is another gaming-specific architecture; it’s not going to live in the HPC space at all.

The impetus for Vega 10's flexibility may have very well come from the console world. After all, we know Sony’s PlayStation 4 Pro can use half-precision to achieve up to 8.4 TFLOPS—twice its performance using 32-bit operations. Or perhaps it started with AMD’s aspirations in the machine learning space, resulting in products like the upcoming Radeon Instinct MI25 that aim to chip away at Nvidia’s market share. Either way, consoles, datacenters, and PC gamers alike stand to benefit.

AMD claimed the NCUs are optimized for higher clock rates, which isn’t particularly surprising, but it also implemented larger instruction buffers to keep the compute units busy.

Next-Generation Pixel Engine: Waiting For A Miracle

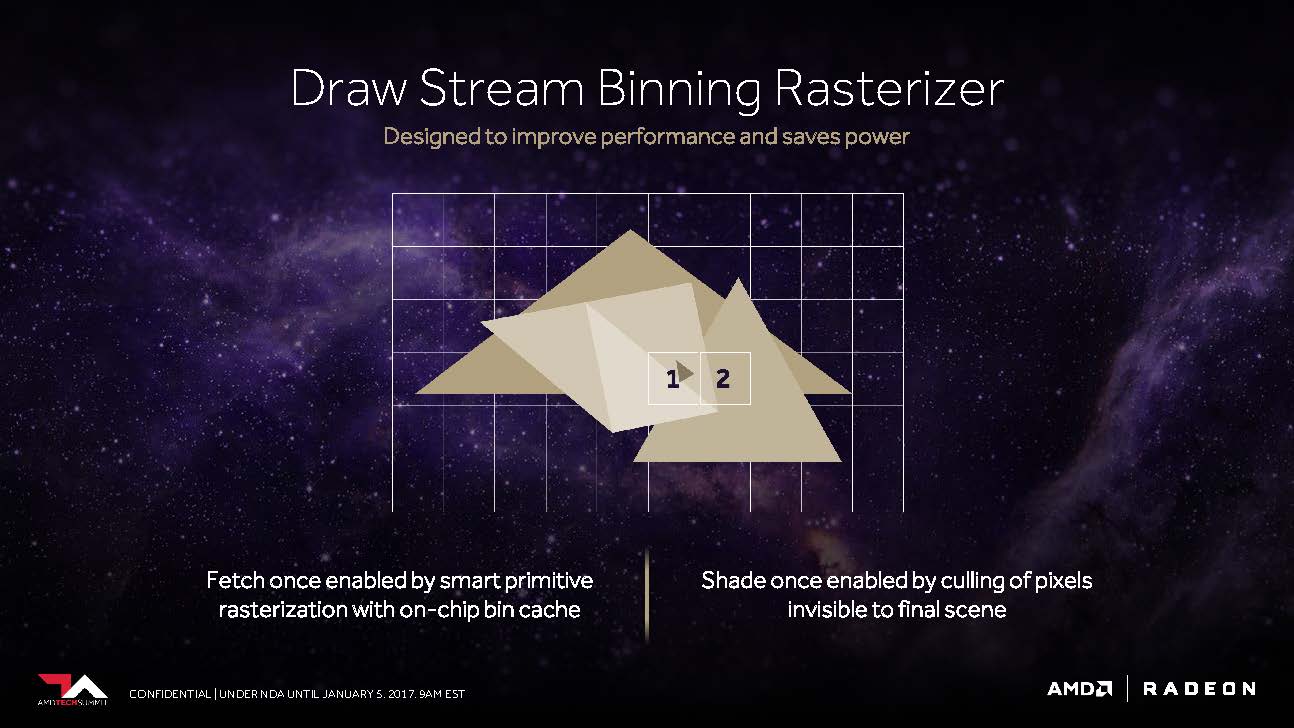

Let’s next take a look at AMD’s so-called Draw Stream Binning Rasterizer, which is supposed to be a supplement to the traditional ROP, and as such, should help improve performance while simultaneously lowering power consumption.

At a high level, an on-chip bin cache allows the rasterizer to fetch data only once for overlapping primitives, and then shade pixels only once by culling pixels not visible in the final scene.
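A heavily simplified software sketch can show why binning saves shading work. It is our own illustration, not AMD's implementation: primitives are opaque axis-aligned rectangles rather than triangles, the tile size and scene are made up, and the unbinned comparison assumes the worst case, where overlapping primitives arrive back to front so nothing is rejected early.

```python
from typing import NamedTuple


class Rect(NamedTuple):
    x0: int
    y0: int
    x1: int       # exclusive
    y1: int       # exclusive
    depth: float  # smaller is closer to the viewer


def bin_primitives(prims, width, height, tile):
    """Map each screen tile to the primitives whose bounds overlap it."""
    bins = {}
    for ty in range(0, height, tile):
        for tx in range(0, width, tile):
            bins[(tx, ty)] = [
                p for p in prims
                if p.x0 < tx + tile and p.x1 > tx and p.y0 < ty + tile and p.y1 > ty
            ]
    return bins


def shade_binned(prims, width, height, tile):
    """Resolve visibility within each tile, then shade each covered pixel once.

    Returns {(x, y): depth of the visible surface}; its length is the shading count.
    """
    framebuffer = {}
    for (tx, ty), batch in bin_primitives(prims, width, height, tile).items():
        for y in range(ty, min(ty + tile, height)):
            for x in range(tx, min(tx + tile, width)):
                covering = [p for p in batch if p.x0 <= x < p.x1 and p.y0 <= y < p.y1]
                if covering:
                    framebuffer[(x, y)] = min(p.depth for p in covering)
    return framebuffer


def shade_immediate(prims):
    """Worst case without binning: every covered pixel of every primitive is shaded."""
    return sum((p.x1 - p.x0) * (p.y1 - p.y0) for p in prims)


scene = [
    Rect(0, 0, 8, 8, 0.9),  # full-screen background
    Rect(2, 2, 6, 6, 0.5),  # mid-depth quad
    Rect(4, 4, 8, 8, 0.1),  # nearest quad
]

visible = shade_binned(scene, width=8, height=8, tile=4)
print(shade_immediate(scene))  # 96 pixel shades in the worst case
print(len(visible))            # 64 pixel shades after binning
```

In this toy scene a third of the worst-case shading work disappears. Real savings depend on how much overdraw a game produces and on how the hardware batches primitives, neither of which this sketch captures.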

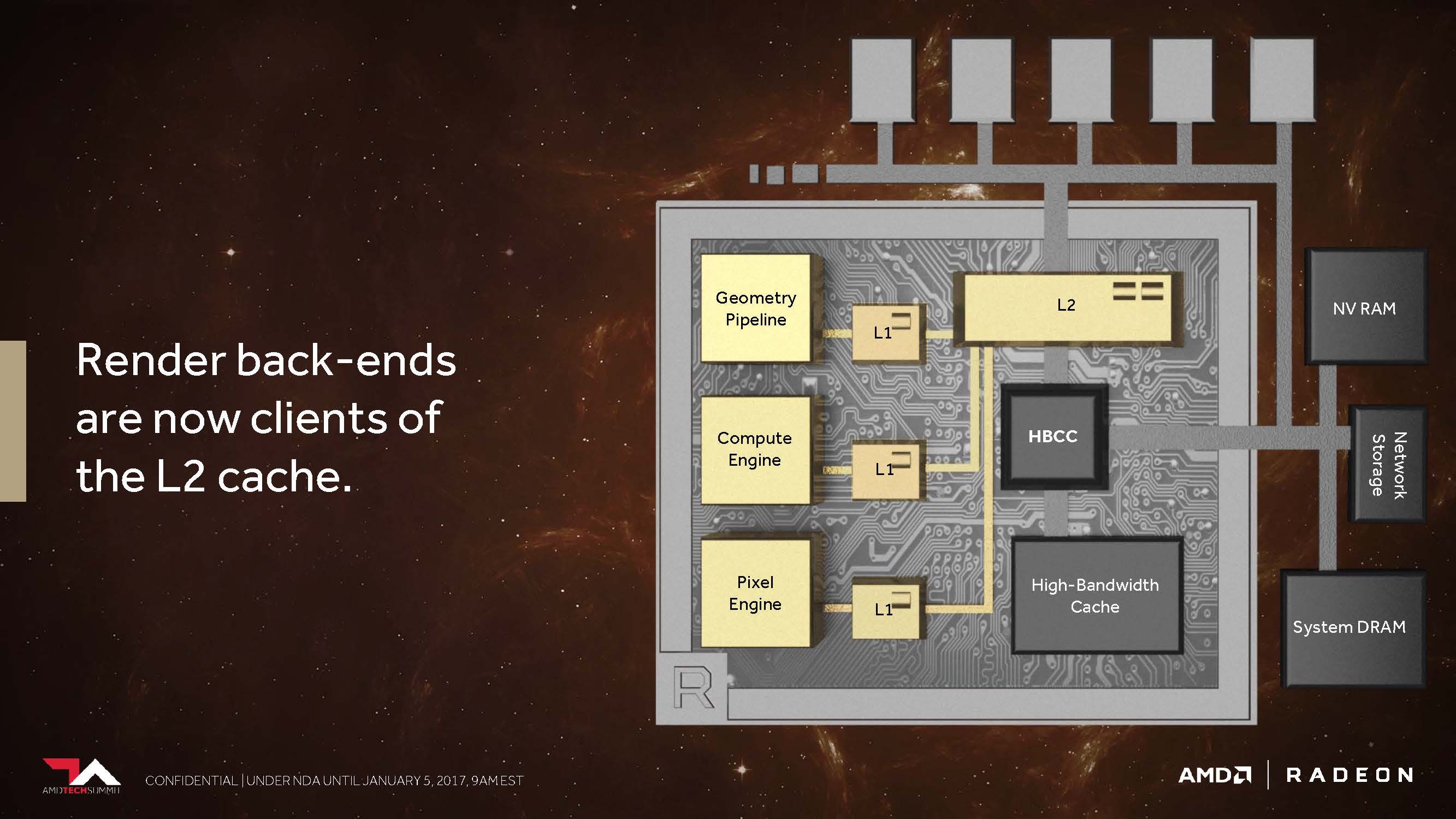

AMD fundamentally changes its cache hierarchy by making the render back-ends clients of the L2.

In architectures before Vega, AMD had non-coherent pixel and texture memory access, meaning there was no shared point for each pipeline stage to synchronize. In the example of texture baking, where a scene is rendered to a texture for reuse later and then accessed again through the shader array, data has to be pulled all the way back through off-die memory. Now, the architecture has coherent access, which AMD said particularly boosts performance in applications that use deferred shading.

Spoiler alert: AMD's launch driver doesn't unleash the massive performance improvements we were hoping for after reviewing the Frontier Edition board. We'd remind you, though, that Fiji and Hawaii, just like good wine (especially good red wine), took some time to achieve their full potential.


10tacle We waited a year for this? Disappointing. Reminds me of the Fury X release which was supposed to be the 980Ti killer at the same price point ($649USD if memory serves me correctly). Then you factor in the overclocking ability of the GTX 1080 (Guru3D only averaged a 5% performance improvement overclocking their Vega RX 64 sample to 1700MHz base/boost clock and a 1060MHz memory clock). This almost seems like an afterthought. Hopefully driver updates will improve performance over time. Thankfully AMD can hold their head high with Ryzen.

Sakkura For today's market I guess the Vega 64 is acceptable, sort of, since the performance and price compare decently with the GTX 1080. It's just a shame about the extreme power consumption and the fact that AMD still has no answer to the 1080 Ti.

But I would be much more interested in a Vega 56 review. That card looks like a way better option, especially with the lower power consumption.

envy14tpe Disappointing? what. I'm impressed. Sits near a 1080. Keep that in mind when thinking that FreeSync sells for around $200 less than Gsync. So pair that with this GPU and you have awesome 1440p gaming.

SaltyVincent This was an excellent review. The Conclusion section really nailed down everything this card has to offer, and where it sits in the market.

10tacle Quoting envy14tpe: "Disappointing? what. I'm impressed. Sits near a 1080."

The GTX 1080 has been out for 15 months now, that's why. If AMD had this GPU at $50 less then it would be an uncontested better value (something AMD has a historic record on both in GPUs and CPUs). At the same price point however to a comparable year and three month old GPU, there's nothing to brag about - especially when looking at power use comparisons. But I will agree that if you include the cost of a G-Sync monitor vs. a FreeSync monitor, at face value the RX 64 is the better value than the GTX 1080.

redgarl It's not a bad GPU, however I would not buy one. I am having an EVGA 1080 FTW that I am living to hate (2 RMAs in 10 months), however even if I wanted to switch to Vega, might not be a good idea. It will not change anything.

However two Vega 56 in CF might be extremely interesting. I did that with two 290x 2 years ago and it might be still the best combo out there.

blppt IIRC, both AMD and Nvidia are moving away from CF/SLI support, so you'd have to count on game devs supporting DX12 mgpu (not holding my breath on that one for the near future).

cknobman I game at 4k now (just bought 1080ti last week) and it appears for the time being the 1080ti is the way to go.

I do see promise in the potential of this new AMD architecture moving forward.

As DX12 becomes the norm and more devs take advantage of async then we will see more performance improvements with the new AMD architecture.

If AMD can get power consumption under control then I may move back in a year or two.

It's a shame too because I just built a Ryzen 7 rig and felt a little sad combining it with an Nvidia gfx card.

AgentLozen I'm glad that AMD has a video card for enthusiasts who run 144hz monitors @ 1440p. The RX 580 and Fury X weren't well suited for that. I'm also happy to see that Vega64 can go toe to toe with the GTX 1080. Vega64 and a Freesync monitor are a great value proposition.

That's where the positives end. I'm upset with the lack of progress since Fury X like everyone else. There was a point where Fury X was evenly matched with nVidia's best cards during the Maxwell generation. Nvidia then released their Pascal generation and a whole year went by before a proper response from AMD came around. If Vega64 launched in 2016, this would be totally different story.

Fury X championed High Bandwidth Memory. It showed that equipping a video card with HBM could raise performance, cut power consumption, and cut physical card size. How did HBM2 manifest? Higher memory density? Is that all?

Vega64's performance improvement isn't fantastic, it gulps down gratuitous amounts of power, and it's huge compared to Fury X. It benefits from a new generation of High Bandwidth memory (HBM2) and a 14nm die shrink. How much more performance does it receive? 23% in 1440p. Those are Intel numbers!

Today's article is a celebration of how good Fury X really was. It still holds up well today with only 4GB of video memory. It even beat the GTX 1070 in several benchmarks. Why didn't AMD take the Fury X, shrink it to 14nm, apply architecture improvements from Polaris 10, and release it in 2016? That thing would be way better than Vega64.

edit: Reworded some things slightly. Added a silly quip. 23% comes from averaging the differences between Fury X and Vega64.

zippyzion Well, that was interesting. Despite its flaws I think a Vega/Ryzen build is in my future. I haven't been inclined to give NVidia any of my money for a few years now, since a malfunction with an FX 5900 destroyed my gaming rig... long story. I've been buying ATI/AMD cards since then and haven't felt let down by any of them.

Let us not forget how AMD approaches graphics cards and drivers. This is base performance and barring any driver hiccups it will only get better. On top of that this testing covers the air cooled version. We should see better performance on the water cooled version that would land it between the 1080 and the Ti.

Also, I'd really like to see what low end and midrange Vega GPUs can do. I'm interested to see what the differences are with the 56, as well as the upcoming Raven Ridge APU. If they can deliver RX 560 (or even just 550) performance on an APU, AMD will have a big time winner there.