AMD Ryzen 5 2400G Review: Zen, Meet Vega


Power & Thermals, Briefly

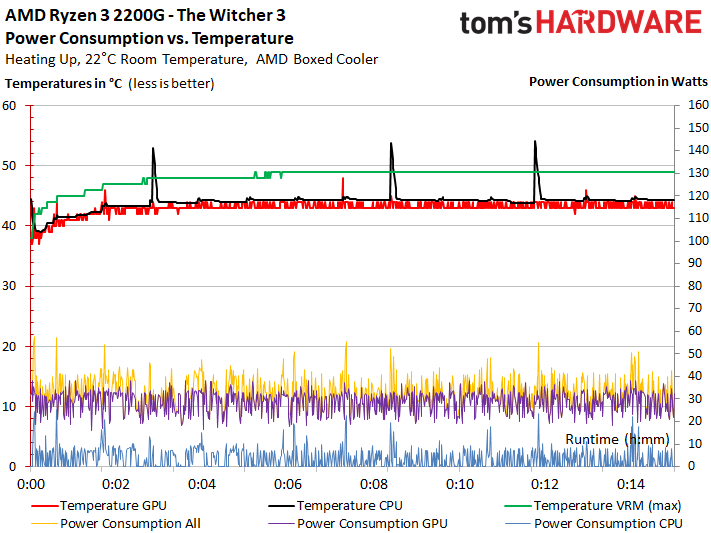

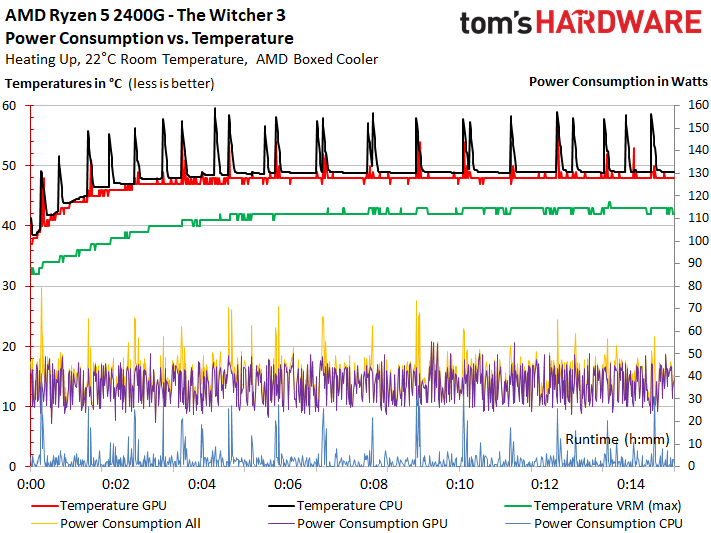

We love that AMD uses indium solder between the die and heat spreader on its Ryzen CPUs. However, the company broke from tradition and applied non-metallic thermal interface material to its 2000-series processors. AMD claims this is necessary, given their low cost. Both Raven Ridge-based processors are rated for 65W, and Ryzen CPUs typically only hit ~4 GHz anyway, so we don't foresee significant problems with heat dissipation from the die to the IHS.

AMD bundles its Wraith Stealth cooler with these processors. The aluminum-core sink is designed for 65W chips, so you'll want a beefier aftermarket solution for aggressive overclocking. The down-blowing design usually pushes additional airflow over the voltage regulation circuitry, which is a nice bonus. However, it lacks the bright LEDs found on AMD's higher-end coolers. The company does sell its 125W Wraith Max for $45.

We can generate multiple power consumption and thermal profiles for a processor with such a beefy graphics engine. Some applications tax the CPU cores or GPU, while others spread load between the units. There are a number of ways to represent the data and interpret its impact. As a result, we're splitting that part of our review into a separate story. We also have a couple of slides under The Witcher 3 to give you an idea of how these processors behave in a real-world game.

Overclocking

Overclocking with AMD's Ryzen Master utility is simple. The execution cores responded readily to our efforts, and the Ryzen 5 2400G floated up to 4 GHz with a 1.4V vCore setting. We also adjusted the VDDCR SoC voltage, which is a single rail that feeds the uncore and graphics domains, to 1.25V. That allowed us to dial in an easy 1555 MHz graphics clock rate and push the memory up to DDR4-3200 with 14-14-14-34 timings.
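To put the DDR4-3200, CL14 memory setting into absolute terms, the arithmetic can be sketched as below. This is a back-of-the-envelope illustration rather than anything measured in the review. It assumes a dual-channel configuration with a 64-bit (8-byte) bus per channel, and the function names are hypothetical.

```python
def cas_latency_ns(transfer_rate_mts: float, cas_cycles: int) -> float:
    """First-word CAS latency in nanoseconds.

    DDR memory transfers data twice per clock, so the memory clock
    in MHz is half the transfer rate in MT/s.
    """
    clock_mhz = transfer_rate_mts / 2
    return cas_cycles * 1000 / clock_mhz


def peak_bandwidth_gbs(transfer_rate_mts: float,
                       channels: int = 2,
                       bus_bytes: int = 8) -> float:
    """Theoretical peak bandwidth in GB/s (decimal gigabytes).

    Assumption: dual-channel, 64-bit bus per channel by default.
    """
    return transfer_rate_mts * bus_bytes * channels / 1000


if __name__ == "__main__":
    # DDR4-3200 with a CAS latency of 14 cycles, as in the overclocked setup.
    print(f"CAS latency: {cas_latency_ns(3200, 14):.2f} ns")       # 8.75 ns
    print(f"Peak bandwidth: {peak_bandwidth_gbs(3200):.1f} GB/s")  # 51.2 GB/s
```

The bandwidth figure is a theoretical ceiling shared by the CPU cores and the integrated graphics, which is part of why memory speed matters so much to an APU.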

A Noctua NH-U12S SE-AM4 cooler helped us circumvent thermal challenges (we measured 75°C under the AIDA CPU/GPU stress test).

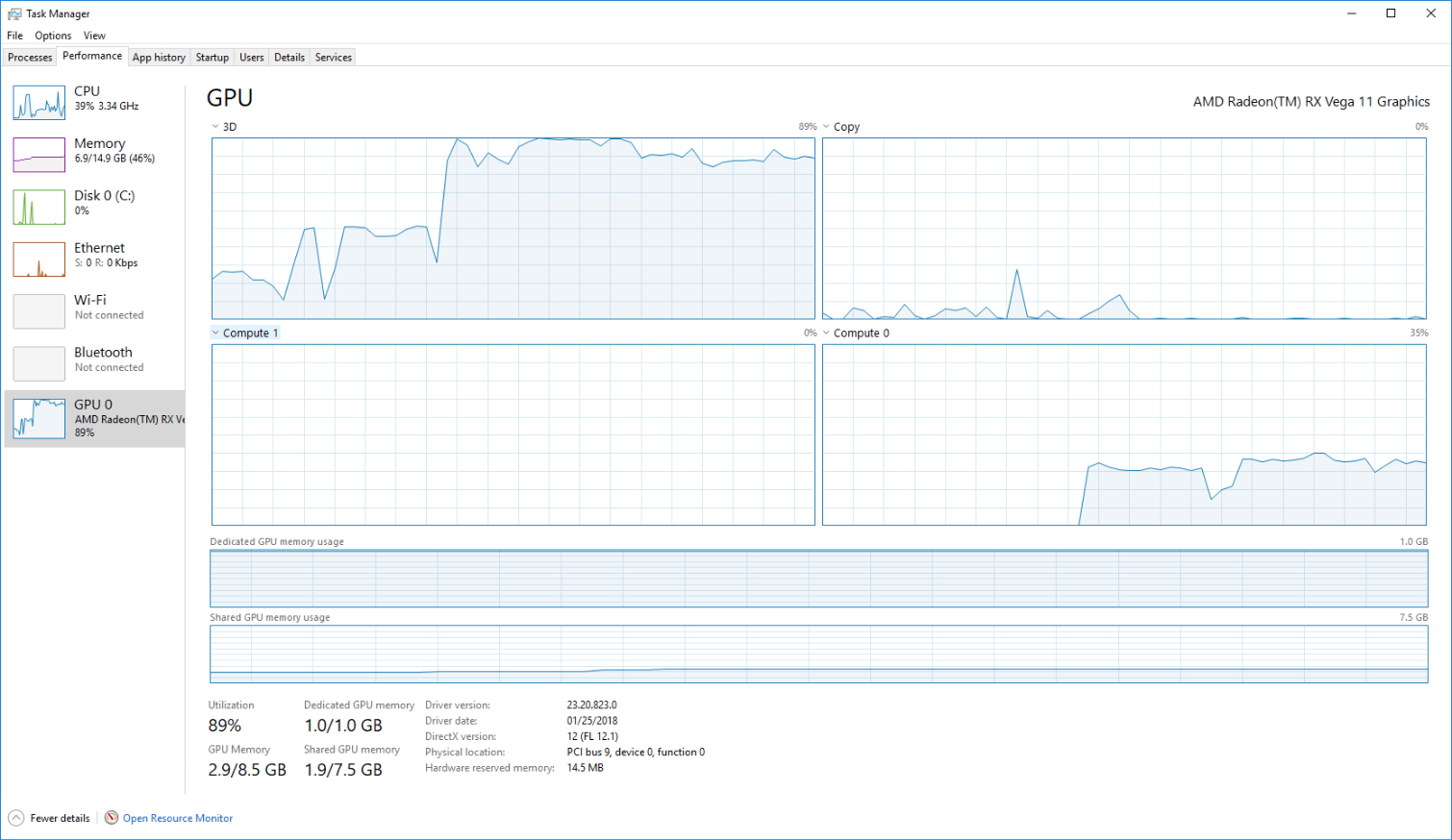

We tested gaming at 1280x720 and 1920x1080, but didn't have time to run comparison tests with the BIOS-enabled UMA frame buffer setting. Increasing this setting allocates more system memory to the on-die graphics, although it also chews into memory available for other tasks. As you can see in the screenshot above, the graphics subsystem consumes system memory at stock settings, so allocating even more is a bit of a trade-off. Shared GPU memory is RAM that the system dynamically provisions between the CPU and GPU based on workload. By default, the operating system limits this shared pool to half of the system memory's total capacity.

AMD says the benefit of a larger UMA frame buffer is evident in the ability to specify higher levels of detail. Just don't expect faster frame rates at 1080p. This should be an interesting setting to experiment with. Right out of the gate, AMD says that a user with 16GB of DDR4 would benefit from assigning 4GB to the graphics engine.
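The trade-off can be made concrete with a little arithmetic. The sketch below is an illustration only: it assumes the UMA frame buffer is carved out of system RAM first and that the shared pool is then capped at half of whatever memory remains visible to the operating system. The function name and dictionary keys are hypothetical, and actual platform behavior may differ.

```python
def split_memory(total_gb: float, uma_gb: float,
                 shared_fraction: float = 0.5) -> dict:
    """Estimate how system RAM divides once a UMA frame buffer is reserved.

    Assumption: the shared-pool cap is applied to the memory that is
    left over after the dedicated carve-out.
    """
    if not 0 <= uma_gb < total_gb:
        raise ValueError("UMA frame buffer must be smaller than total memory")
    os_visible_gb = total_gb - uma_gb             # RAM left for the OS and apps
    shared_cap_gb = os_visible_gb * shared_fraction
    return {
        "dedicated_gb": uma_gb,                   # reserved for graphics
        "os_visible_gb": os_visible_gb,
        "shared_cap_gb": shared_cap_gb,           # upper bound, provisioned on demand
        "gpu_max_gb": uma_gb + shared_cap_gb,     # most the GPU could ever address
    }


if __name__ == "__main__":
    # AMD's suggested configuration: 16GB of DDR4 with 4GB assigned to graphics.
    for key, value in split_memory(16, 4).items():
        print(f"{key}: {value:g} GB")
```

Under these assumptions, the 16GB/4GB configuration leaves 12GB for the operating system and applications, which is the cost side of the trade-off described above.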


Test Methodology & Systems

AMD's Raven Ridge performs best with Windows 10 version 1709, so we fully updated our test systems before benchmarking. This Windows release adds Multi-Plane Overlay, which provides a more efficient way of rendering video and compositing 2D surfaces. It also saves power by alpha-blending accelerated surfaces and culling the ones you cannot see. That major change means you cannot compare these test results to previous reviews.

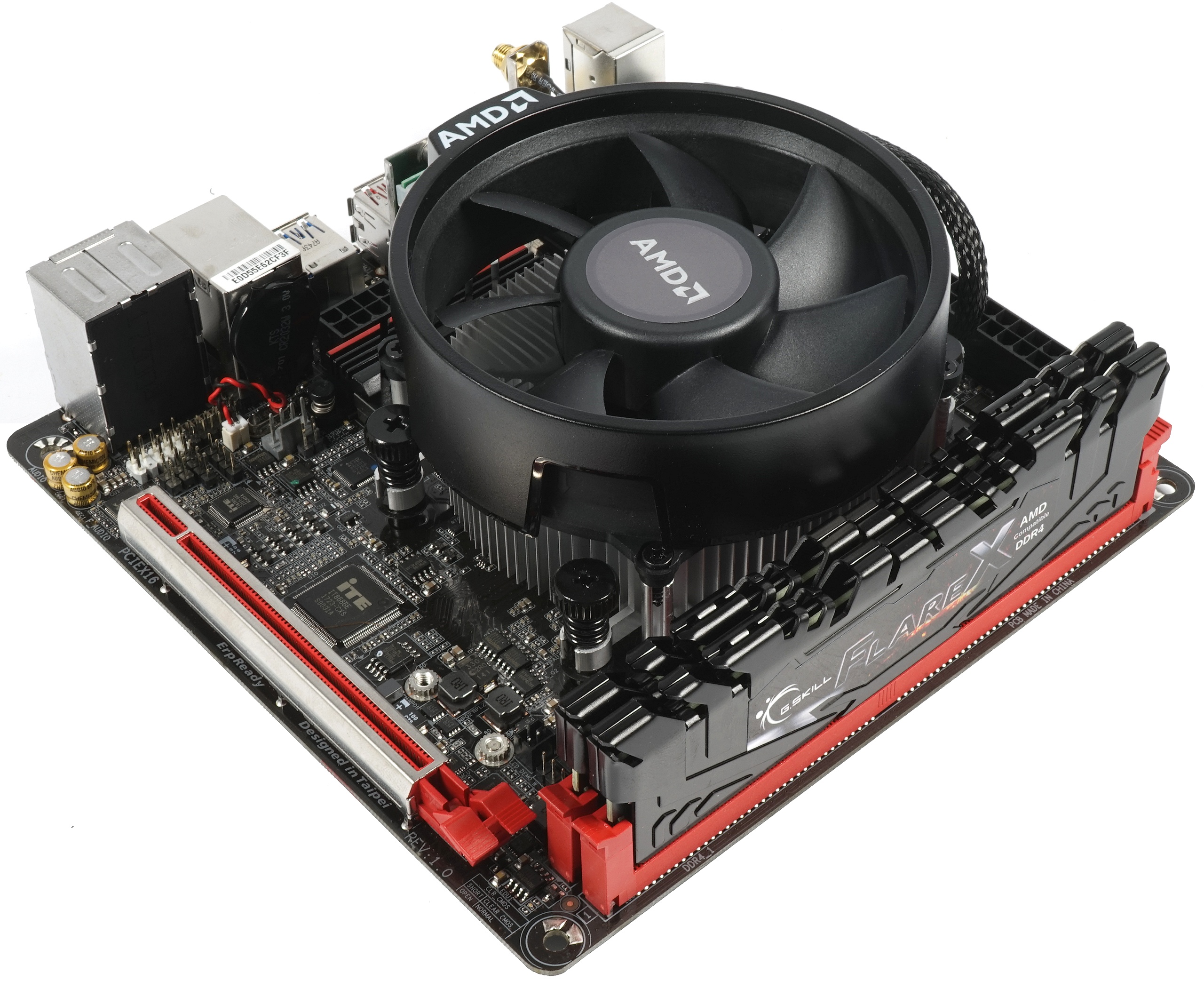

AMD sent along the mini-ITX Gigabyte AB350N Gaming WiFi motherboard and a 2x 8GB G.Skill FlareX DDR4-3200 memory kit. We used the bundled Wraith Stealth cooler for testing applications and games at stock settings, then switched over to the aforementioned Noctua cooler for overclocking.

Test Systems

| Test System & Configuration | |
|---|---|
| Hardware (AMD) | Gigabyte AB350N Gaming WiFi; AMD A10-9700; AMD Ryzen 5 2400G; Ryzen 3 2200G; Flare X 16GB DDR4-3200 @ 2400, 2699, & 3200 |
| Hardware (Intel LGA 1151, Z370) | Core i3-8100; Core i5-8400; Z370 Gaming Pro Carbon AC; G.Skill RipJaws V DDR4-3200 (2x 8GB) @ 2400 & 2666 |
| Hardware (Intel LGA 1151, Z270) | Intel Core i3-7100; MSI Z270 Gaming M7; G.Skill RipJaws V DDR4-3200 (2x 8GB) @ 2400 |
| Hardware (all systems) | EVGA GTX 1080; Samsung PM863 (960GB); SilverStone ST1500-TI; Windows 10 Pro 64-bit Creators Update v.1709 (10.0.16299.214); Hydro H115i |


Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.


InvalidError: Looking at Zeppelin and Raven dies side by side, proportionally, Raven seems to be spending a whole lot more die area on glue logic than Zeppelin did. Since the IGP takes the place of the second CCX, I seriously doubt its presence has anything to do with the removal of 8x PCIe lanes. Since PCIe x8 vs x16 still makes very little difference on modern GPUs where you're CPU-bound long before PCIe bandwidth becomes a significant concern, AMD likely figured that nearly nobody is going to pair a sufficiently powerful GPU with a 2200G/2400G for PCIe x8 to matter.

Olle P: 1. Why did you use 32GB RAM for the Coffee Lake CPUs instead of the very same RAM used for the other CPUs?

2. In the memory access tests I fail to see the relevance of comparing to higher-tier Ryzen/Threadripper. Would rather see comparison to the four-core Ryzens.

3. Why not also test overclocking with the Stealth cooler? (Works okay for Ryzen 3!)

4. Your comments about Coffee Lake on the last page:

"Their locked multipliers ... hurt their value proposition...

... a half-hearted attempt to court power users with an unlocked K-series Core i3, ... it requires a Z-series chipset..."

As of right now all Coffee Lake CPUs require a Z-series chipset, so that's not an added cost for overclocking. I'd say a locked multiplier combined with the demand for a costly motherboard is even worse. (This is supposed to change soon though.)

AgentLozen: Tom's must think highly of this APU to give it the Editor's Choice award. It seems to be your best bet for an extremely limited budget.

I totally understand if you only have a few hundred dollars to build your PC with and you desperately want to get in on some master race action. That's the situation where the 2400G shines brightest. But the benchmarks show that games typically don't run well on this chip. They DO work under the right circumstances, but GTAV isn't as fun to play at low settings.

Buying a pre-built PC from a boutique with a GeForce 1050Ti in it will make your experience noticeably better if you can swing the price.

akamateau: What most writers and critics of integrated graphics processors such as AMD's APU or Intel's iGP all seem to forget is that not EVERYONE in the world has a disposable or discretionary income equal to that of the United States, Europe, Japan etc. Not everyone can afford bleeding-edge gaming PCs or laptops. Food, housing and clothing must come first for 80% of the population of the world.

An APU can grant anyone who can afford at least a decent basic APU the enjoyment of playing most computer games. The visual quality of these games may not be up to the arrogantly high standards of most western gamers, but then again these same folks who are happy to have an APU also can not barely afford a 750p crt monitor much less a 4k flat screen.

This simple idea is huge not only for the laptop and pc market but especially game developers who can only expect to see an expansion of their Total Addressable Market. And that is good for everybody as broader markets help reduce the cost of development.

This in fact was the whole point behind AMD's release of Mantle and Microsoft and The Khronos Group's release of DX12 and Vulkan respectively.

Today's AMD APU has all of the power of a GPU add-in board from not more than several years back.

Blas: "Meanwhile, every AMD CPU is overclockable on every Socket AM4-equipped motherboard" (in the last page)

That is not correct, afaik, not for A320 chipsets. It is for B350 and X370, though.

salgado18: "with a GeForce 1050Ti in it will make your experience noticeably better if you can swing the price."

"a card is still needed"

You do realize that these CPUs have an integrated graphics chip as strong as a GT 1030, right? And that you are comparing a ~$90 GPU to a ~$220 GPU?

If you can swing the price, grab a GTX 1080ti already, and let us mITX/poor/HTPC builders enjoy Witcher 3 in 1080p for a fraction of the price ;)

InvalidError:

20700012 said: "but then again these same folks who are happy to have an APU also can not barely afford a 750p crt monitor much less a 4k flat screen."

When 1080p displays are available for as little as $80, there isn't much point in talking about 720p displays. I'm not even sure I can still buy one of those even if I wanted to unless I shopped used. (But then I could also shop for used 1080p displays and likely find one for less than $50.)

The price of 4k TVs is coming down nicely, I periodically see some 40+" models with HDR listed for as little as $300, cheaper than most monitors beyond 1080p.

20700022 said: "Graphics still too weak, a card is still needed."

Depends for who, not everyone is hell-bent on playing everything at 4k Ultra 120fps 0.1% lows. Once the early firmware/driver bugs get sorted out, it'll be good enough for people who aren't interested in shelling out ~$200 for a 1050/1050Ti alone or $300+ for anything beyond that. If your CPU+GPU budget is only $200, that only buys you a $100 CPU and GT1030 which is worse than Vega 11 stock.

If my current PC had a catastrophic failure and I had to rebuild in a pinch, I'd probably go with the 2400G instead of paying a grossly inflated price for a 1050 or better.

Istarion: People come here expecting to find an overclockable 4 core with a 1080-like performance for 160$. And a good cooler. I'd love to be so optimistic :D

Summarizing: we are saving around 50-100$ for the same low-end performance. That's 25% to 40% cheaper. What are we complaining about?!? I'd be partying right now if that happened in high-end too!!! 300$ for a 1080...

All those comments saying "too weak", or "isn't fun to play at low settings", seriously, travel around the globe or just open your mind, there's poor people in 90% of the world, do you think they'll buy a frakking 1080 and a 8700k?!?

And there's even non-poor people that doesn't care about good graphics! Go figure!

Otherwise, why there are pixel graphics games all over the place? Or unoptimized/breaking early access games??

I have a high-end pc and still lower fps to minimum for competitive play, so I won't see any difference between a 1080Ti vs a 1070 (250 vs 170fps, who's gonna see that, my cat?!? No 'cause my monitor is not fast enough!).

rush21hit: As a cyber cafe owner, I would love to replace my old A5400s with the lower R3.

Except that the DDR4 sticks went crazy expensive over here. FML