AMD Ryzen Threadripper 1950X Game Mode, Benchmarked

Finding The Right Modes

If you crave lots of cores and tons of PCIe connectivity, like most content creators, multitaskers, and software developers, then Threadripper is for you. It might also be a good fit if you're a gamer who simultaneously runs heavily threaded productivity applications in the background.

The Zeppelin die really is a feat of modern engineering. However, its architecture is dissimilar from anything that came before, creating issues in some software written prior to Ryzen's introduction. AMD worked with game developers to iron out the performance wrinkles we identified at launch, and we've seen big speed-ups in a number of titles as a result.

But expanding beyond Ryzen 7, 5, and 3 into a dual-die configuration adds a new set of challenges for Threadripper.

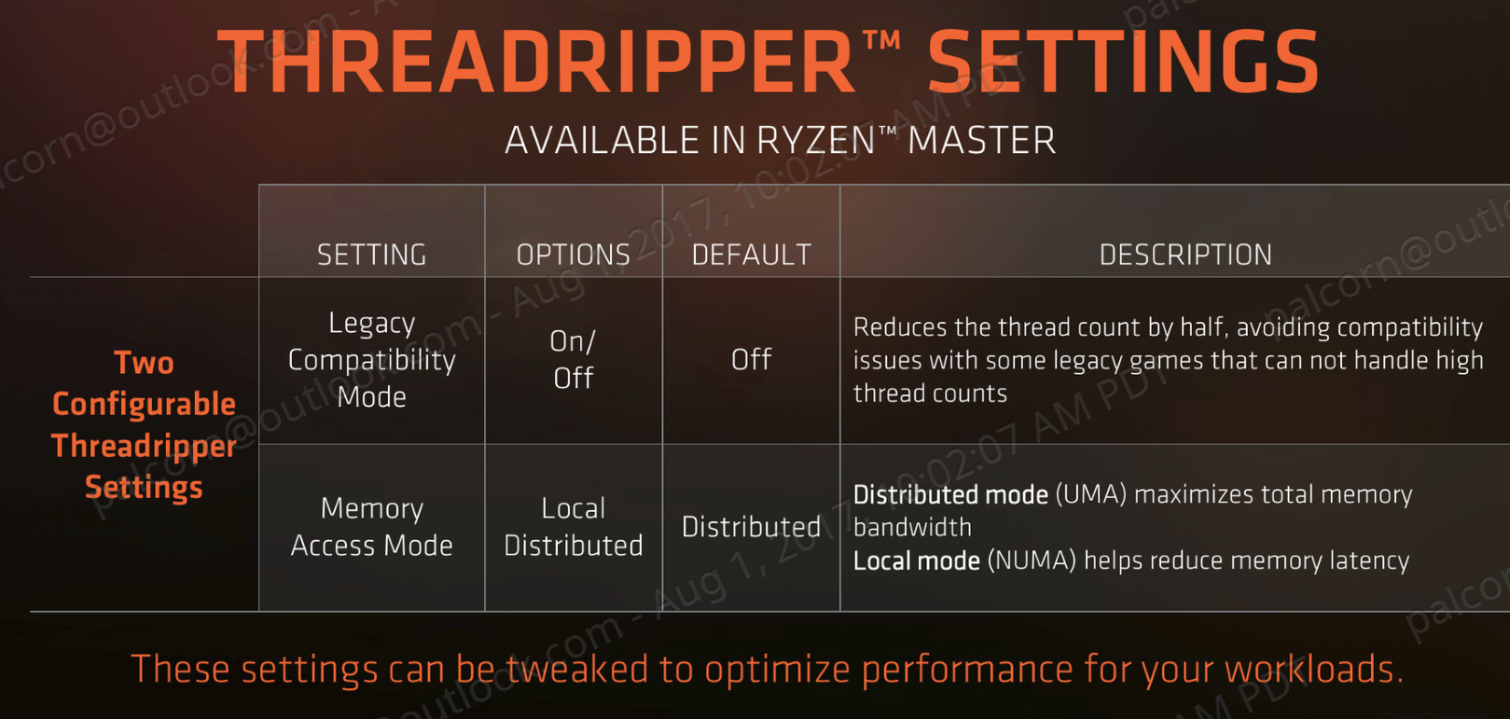

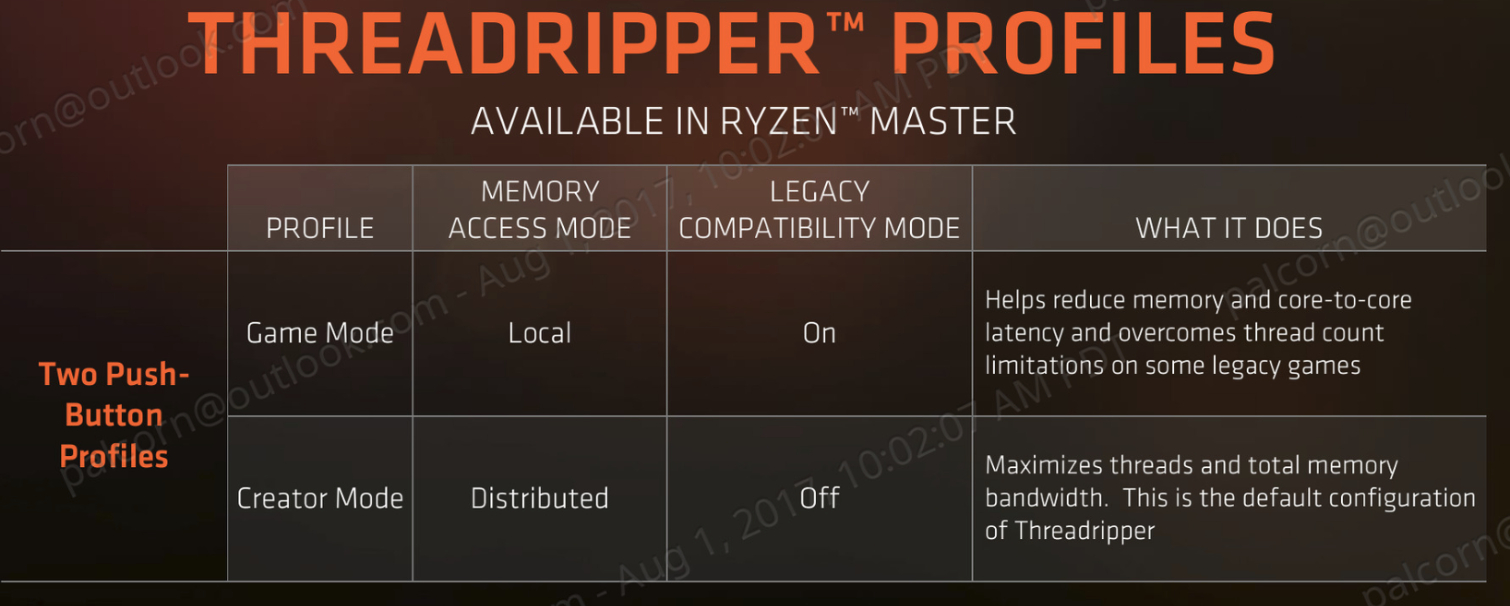

AMD's fix involves two toggles that affect how the processor operates, giving you modes optimized for whatever workload you're running. These switches create a total of four unique configurations to choose from. So, in a bid to condense the number of combinations, AMD created its Creator and Game modes.

Why Do We Need Game Mode?

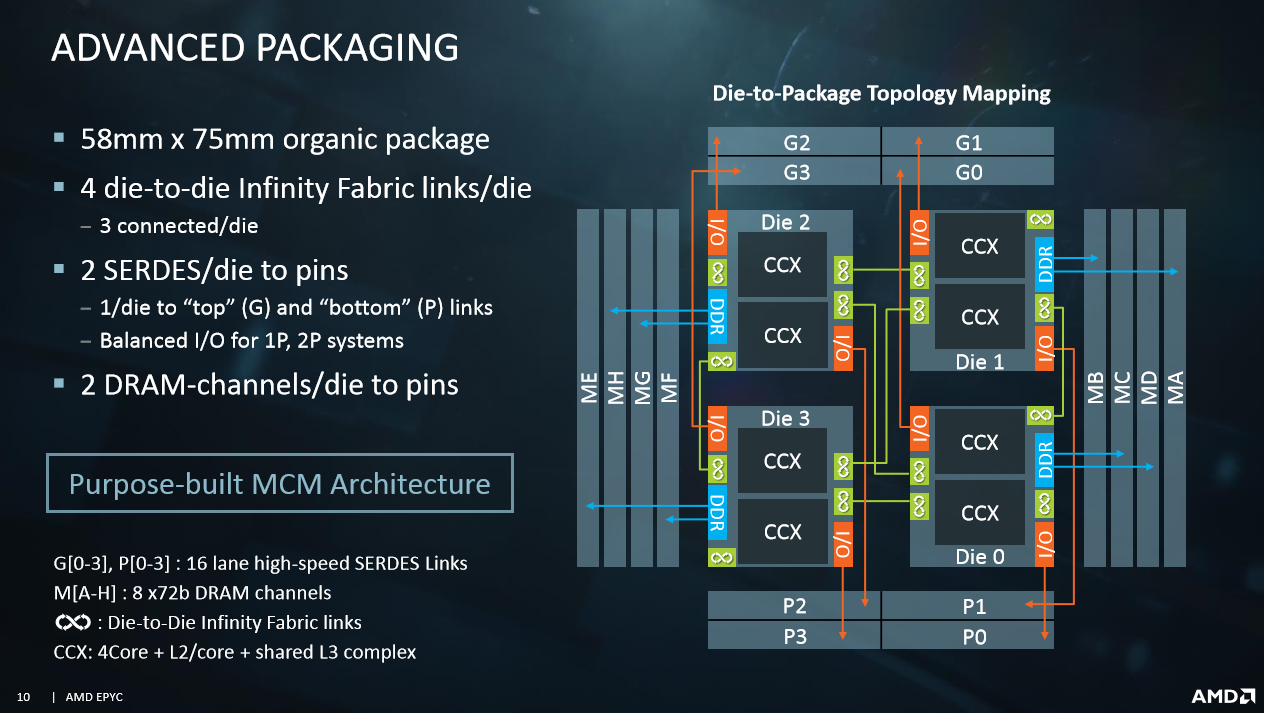

The Zeppelin die consists of two quad-core CPU complexes (CCXes) woven together with the Infinity Fabric interconnect. Even in the single-die Ryzen 7/5/3 processors, this creates a layer of latency that affects communication between the CCXes. AMD builds upon that design with its Threadripper processors, leveraging two active Zeppelin dies. As you might imagine, this introduces another layer of Infinity Fabric latency.
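This minimal Python sketch shows the latency tiers as code. It classifies which interconnect two communicating cores have to cross on a 16-core, two-die part. It assumes a hypothetical core numbering: cores 0-3 on die 0/CCX 0, cores 4-7 on die 0/CCX 1, and so on. Real operating systems may enumerate cores differently, and the helper names are illustrative only.

```python
# Hypothetical topology model: 2 active Zeppelin dies, each with 2 CCXes of 4 cores.
# The core numbering below is an assumption made for illustration.
CORES_PER_CCX = 4
CCX_PER_DIE = 2


def locate(core: int) -> tuple[int, int]:
    """Return (die, ccx) for a physical core index under the assumed numbering."""
    ccx_global = core // CORES_PER_CCX
    return ccx_global // CCX_PER_DIE, ccx_global % CCX_PER_DIE


def path(a: int, b: int) -> str:
    """Classify the interconnect hop that two communicating cores must cross."""
    die_a, ccx_a = locate(a)
    die_b, ccx_b = locate(b)
    if die_a != die_b:
        return "cross-die Infinity Fabric"  # slowest tier
    if ccx_a != ccx_b:
        return "cross-CCX Infinity Fabric (same die)"
    return "same CCX (shared L3)"


for pair in [(0, 3), (0, 4), (0, 8)]:
    print(pair, "->", path(*pair))
```

Each step away from the same CCX adds another Infinity Fabric hop, and crossing dies is the added layer that Threadripper introduces.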

Each die has its own memory and PCIe controllers. So, if a thread running on one core needs to access data resident in the cache or memory attached to the other die, it has to traverse the fabric between those dies and incur significant latency. Naturally, the latency penalty between dies is higher than it is between CCXes in the single-die configurations. To combat the potential for performance regressions stemming from its "go-wide" approach, AMD devised an interesting solution: a new memory access switch that you can toggle via the motherboard BIOS or AMD's Ryzen Master software. The Local setting selects NUMA (Non-Uniform Memory Access), while Distributed selects UMA (Uniform Memory Access).

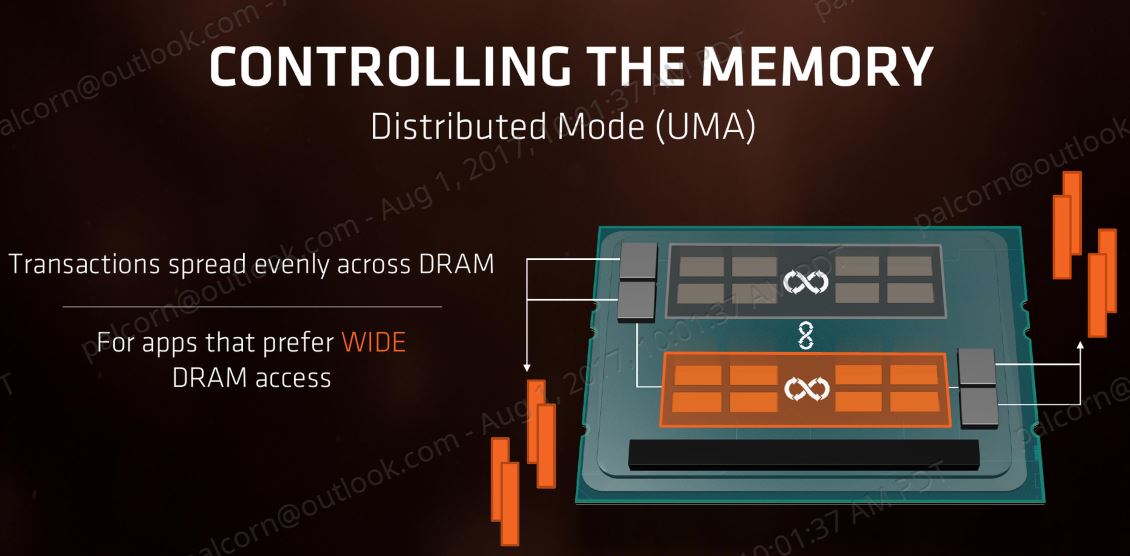

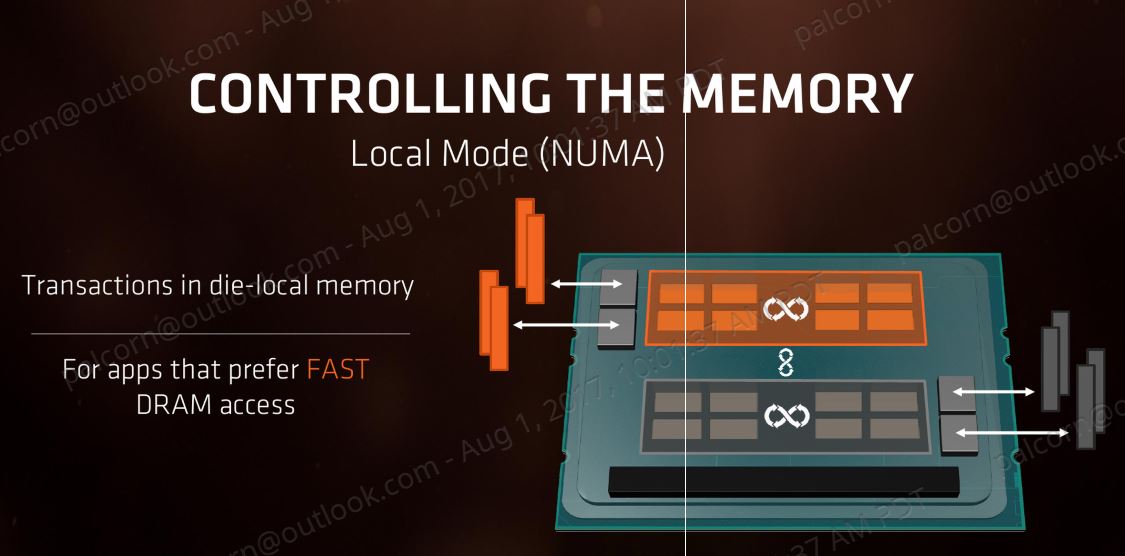

UMA (Distributed) is pretty simple: it interleaves memory across both dies' controllers and presents all of the attached memory to the operating system as a single pool. NUMA (Local) mode establishes one NUMA node per die and attempts to keep each process's data confined to the memory controller directly attached to the die it executes on. The goal is to minimize requests to remote memory attached to the other die.
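A back-of-the-envelope model shows why Local mode can help. The sketch below weights local and remote latency by the share of accesses served by the near memory controller. The latency figures and the 90% locality assumption are placeholders chosen for illustration, not measurements from our testing.

```python
def average_latency(local_ns: float, remote_ns: float, local_fraction: float) -> float:
    """Expected memory latency when `local_fraction` of accesses hit the near controller."""
    if not 0.0 <= local_fraction <= 1.0:
        raise ValueError("local_fraction must be between 0 and 1")
    return local_fraction * local_ns + (1.0 - local_fraction) * remote_ns


# Placeholder latencies (assumptions), NOT measurements from this article.
LOCAL_NS, REMOTE_NS = 80.0, 130.0

# Distributed (UMA): interleaving sends roughly half of a core's accesses to the other die.
uma = average_latency(LOCAL_NS, REMOTE_NS, 0.5)
# Local (NUMA): assume the OS keeps 90% of a thread's accesses on its own die.
numa = average_latency(LOCAL_NS, REMOTE_NS, 0.9)
print(f"UMA ~{uma:.0f} ns, NUMA ~{numa:.0f} ns")
```

With these assumed inputs, Local mode comes out ahead only because most accesses stay on-die. A workload that scatters its data across both dies would erase that advantage.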


NUMA works best if programs are designed specifically to utilize it. Even though most desktop PC software wasn't written with NUMA in mind, performance gains are still possible in non-NUMA applications.

Breaking Games

Some games simply won't launch when presented with Threadripper's 32 threads. That's right, AMD's flagship broke a few titles. The same thing will likely happen to Intel when its highest-end Skylake-X chips surface shortly.

Out of necessity, AMD created a Legacy Compatibility mode that executes a "bcdedit /set numproc XX" command in Windows. Here, the command cuts the thread count in half. Fortunately, because of the operating system's default processor assignments, the threads it removes are all of the cores/threads on the second die. That has the side benefit of eliminating thread-to-thread communication between disparate dies, which avoids the constant latency-inducing synchronization between threads during gaming workloads. It also prevents thread migration between dies, lessening the chance of cache misses.
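This sketch illustrates why halving the processor count happens to leave only the first die online. It rests on two assumptions taken from the description of default assignments above: Windows numbers all of die 0's logical processors before die 1's, and a numproc limit keeps the lowest-numbered ones. The helper names are hypothetical.

```python
# Assumed enumeration: logical processors 0-15 live on die 0, 16-31 on die 1.
TOTAL_LOGICAL = 32    # 16 cores x 2 SMT threads on a 1950X
LOGICAL_PER_DIE = 16  # 8 cores x 2 SMT threads per die


def die_of(lp: int) -> int:
    """Die that owns a logical processor under the assumed numbering."""
    return lp // LOGICAL_PER_DIE


def active_after_numproc(numproc: int) -> list[int]:
    """Logical processors left in use when the OS only uses the first `numproc`."""
    return list(range(min(numproc, TOTAL_LOGICAL)))


active = active_after_numproc(TOTAL_LOGICAL // 2)
dies_in_use = {die_of(lp) for lp in active}
print(len(active), "logical processors on die(s)", sorted(dies_in_use))
```

If the enumeration order were different, for example alternating between dies, the same halving would not isolate a single die.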

But What To Test?

The two new toggles give us a menu of options to mess with. AMD's Creator preset exposes 16C/32T and leaves the operating system in Distributed memory access mode. Those settings together should yield excellent performance in most productivity applications. Game mode halves the thread count via Legacy Compatibility mode and reduces memory and die-to-die latency with Local memory access.

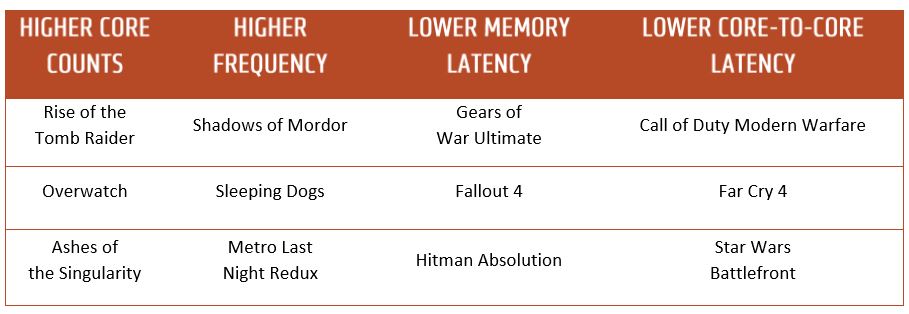

As early as our first Ryzen review, we found that disabling SMT had a positive impact on some games. Adding the memory access toggle to the mix expands our list of viable configurations further. Of course, some combinations make little sense for gaming, though they might be interesting to test with productivity applications. We narrowed our list down based on Infinity Fabric measurements and prior experience with AMD processors.

| Configuration | Memory Access: Local (NUMA) / Distributed (UMA) | Legacy Compatibility Mode | SMT |
|---|---|---|---|
| Creator Mode | Distributed | Off | On |
| Game Mode | Local | On | On |
| Custom - Local/SMT Off | Local | Off | Off |
| Custom - Local/SMT On | Local | Off | On |
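As a quick sanity check on the table, the hypothetical helper below derives the cores and threads that each configuration exposes to the operating system on a 16-core 1950X. It models Legacy Compatibility mode simply as halving the logical processor count, which simplifies the bcdedit-based behavior described earlier.

```python
from dataclasses import dataclass

PHYSICAL_CORES = 16  # Threadripper 1950X: two dies of 8 cores


@dataclass(frozen=True)
class Config:
    name: str
    memory: str   # "Local" (NUMA) or "Distributed" (UMA)
    legacy: bool  # Legacy Compatibility mode, modeled as halving logical processors
    smt: bool

    def exposed(self) -> tuple[int, int]:
        """Return (cores, threads) visible to the OS."""
        threads = PHYSICAL_CORES * (2 if self.smt else 1)
        if self.legacy:
            threads //= 2
        cores = threads // 2 if self.smt else threads
        return cores, threads


CONFIGS = [
    Config("Creator Mode", "Distributed", legacy=False, smt=True),
    Config("Game Mode", "Local", legacy=True, smt=True),
    Config("Custom - Local/SMT Off", "Local", legacy=False, smt=False),
    Config("Custom - Local/SMT On", "Local", legacy=False, smt=True),
]

for c in CONFIGS:
    cores, threads = c.exposed()
    print(f"{c.name}: {cores}C/{threads}T, {c.memory} memory")
```

Game mode is the only configuration here that drops physical cores (8C/16T). Local/SMT Off keeps all 16 cores but gives up the SMT threads.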

Disabling SMT in Game mode, which already halves the core/thread count, would further cut into our available execution resources. So, we left SMT on in Game mode and instead tested with SMT disabled and both dies active. We also had the option to leave all cores/threads active while isolating memory access to the local memory controller; we found that combination particularly useful in threaded games during our initial review. Of course, we also tested the default Creator and Game modes.

Unfortunately, you can't just throw a switch and fire up your favorite game. Each change requires a reboot, chewing up precious time as you save open projects, halt conversations, and try to remember which web browser tabs to relaunch. Again, Game mode also halves thread count, which isn't good for running heavily threaded applications at full speed in the background while you play. Luckily, the breadth of available options should allow us to find a better configuration that leaves more processing resources at the ready.

AMD designed its Threadripper processors primarily for use in enthusiast-oriented PCs, which tend to ship with high-resolution monitors. Higher resolutions are typically GPU-bound, reducing the variance between CPU modes. To better highlight performance trends and maximize our limited testing time, though, we chose to benchmark at 1920x1080, allowing us to spot the subtle differences between AMD's settings.
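A deliberately simplified bottleneck model illustrates the trade-off. If each frame needs some CPU time and some GPU time, the slower of the two sets the frame rate. The per-frame costs and the "Mode A"/"Mode B" names below are hypothetical. Real engines overlap CPU and GPU work in more complex ways.

```python
def fps(cpu_ms: float, gpu_ms: float) -> float:
    """Simplified model: the slower of CPU and GPU work sets the frame time."""
    return 1000.0 / max(cpu_ms, gpu_ms)


# Hypothetical per-frame costs, for illustration only.
cpu_ms = {"Mode A": 8.0, "Mode B": 9.0}
gpu_ms = {"1920x1080": 7.0, "3840x2160": 16.0}

for res, g in gpu_ms.items():
    results = {mode: round(fps(c, g), 1) for mode, c in cpu_ms.items()}
    print(res, results)
```

At the lower resolution, the two CPU modes produce different frame rates. At the higher resolution, the GPU cost dominates and both modes land on the same result.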

Overall, Game mode is designed to improve memory latency and help avoid excessive die-to-die Infinity Fabric traffic. Let's start by examining how our settings affect the Infinity Fabric and memory subsystem.


Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.


beshonk: "extra cores could enable more performance in the future as software evolves to utilize them better"

I can't believe we're still saying this in 2017. Developers suck at their job.

sztepa82: "AMD aims Threadripper at content creators, heavy multitaskers, and gamers who stream simultaneously. It also says the processors are ideal for gaming at high resolutions. Ryzen Threadripper 1950X isn't intended for playing around at low resolutions, particularly in older, lightly-threaded titles. ____Still, we tested at 1920x1080____ ...."

Thank you for being out there for us, Tom's, no other website has ever done that. The only other thing we can hope for is that you'll also do a 2S Epyc 7601 review playing Project Cars in 320x240.

shrapnel_indie: "Each change requires a reboot, chewing up precious time as you save open projects, halt conversations, and try to remember which web browser tabs to relaunch."

Not if you're running the right browser with the right options active. Firefox can remember the last tabs you had open and reopen them upon startup... of course this is within the last Firefox window closed, and you have to properly exit. (no killing the thread(s).)

-Fran-: Since this CPU (and Intel's X and XE line) are aimed for big spenders, when are you guys going to test multi GPU in these CPUs?

Also, you mentioned streaming as part of the big CPU charisma, but there was no actual test with it. Why not just run OBS with the same software encoding settings for each platform and run a game? It's not that hard to do, is it?

Cheers!

Dyseman: Quote- 'When I go to pause the video (ad) your site takes me to another tab. Bye, bye.'

It's easy enough to disable the JW Player with uBlock. Those videos are not considered ads by adblockers, but you can tell it to block anything that uses JW Player, then whitelist any other site that needs to use it for non-ads.

rhysiam: Thanks for this investigation Toms, really thorough and interesting article.

It's interesting and a little disappointing that an OC to 3.9GHz seems to pretty consistently achieve a small but measurable bump in gaming. The 1950X can use XFR to get to 4.2GHz on lightly threaded workloads. Obviously in well-threaded games the CPU isn't going to be able to sustain 4.2GHz, but it's a bit disappointing it can't manage 3.9-4GHz across the 4-6 cores used in gaming workloads. In fact, judging from the results it seems to be sitting around 3.7-3.8GHz or so in most games. That seems low to me. There should be plenty of thermal and power headroom available to get 4-6 cores up to nice high clocks, which should be enough cores for pretty much every game in the suite (except perhaps AOTS). If that was happening we'd see the OC making no difference, or even perhaps causing a slight performance regression in games (like it does in synthetic single-threaded tests). But clearly that's not the case.

It seems to me that AMD's power management implementation is resulting in some pretty conservative clock speeds in the 4-6 core workload range. That has implications outside of gaming as well, because 4-6 thread workloads are quite common even in the productivity and content creation space. It's hardly a deal breaker (we're only looking at a couple of hundred MHz), but I'm curious whether others think AMD is giving up a little more performance than they should be here? Or am I missing something?

jdwii (replying to rhysiam):

Ryzen hits a point of diminishing returns pretty darn fast. For example, a CPU might only need 1.15V to be stable at 3.6GHz, but 3.9GHz needs something like 1.3V, which is way too much.

papality (replying to beshonk):

Intel's billions had a lot to say in this.