Battlefield 1 Performance In DirectX 12: 29 Cards Tested

High-End PC, 2560x1440

Low Quality Preset

Our 2560x1440 results compare nine different cards from AMD and nine others from Nvidia. Even then, we had to cut some of the cards we benchmarked. There are just a ton of viable options for the range of quality settings at this resolution that might make sense with a Core i7-6700K-based platform.

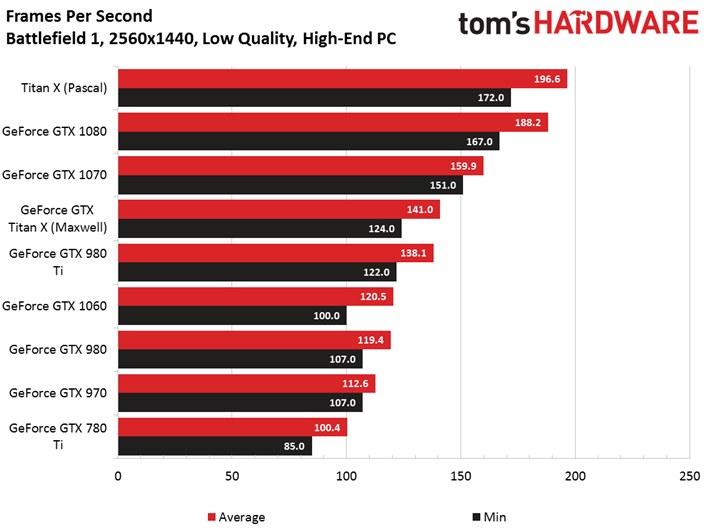

With quality dropped all the way to Low, a Titan X continues to peg Battlefield 1’s 200 FPS ceiling, with GeForce GTX 1080 not far behind.

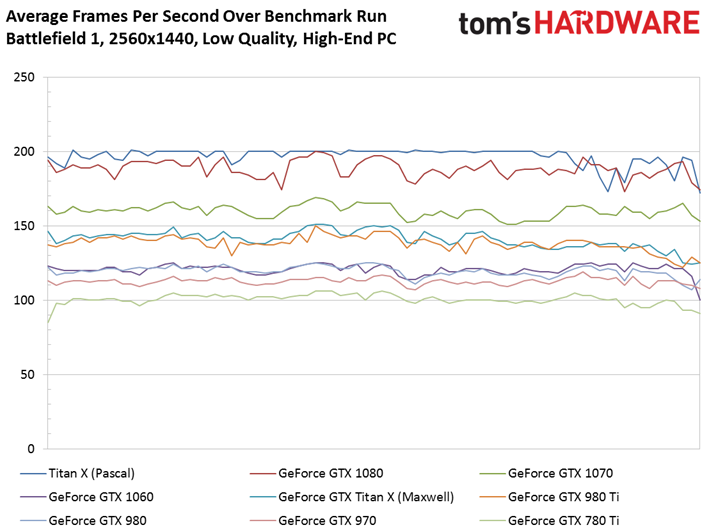

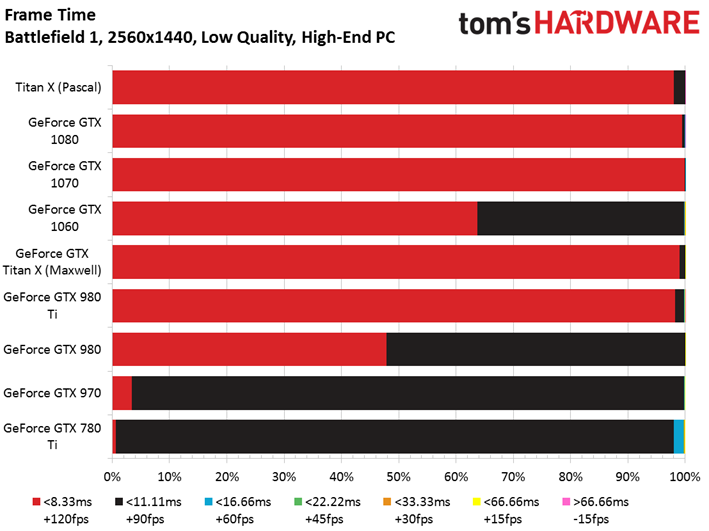

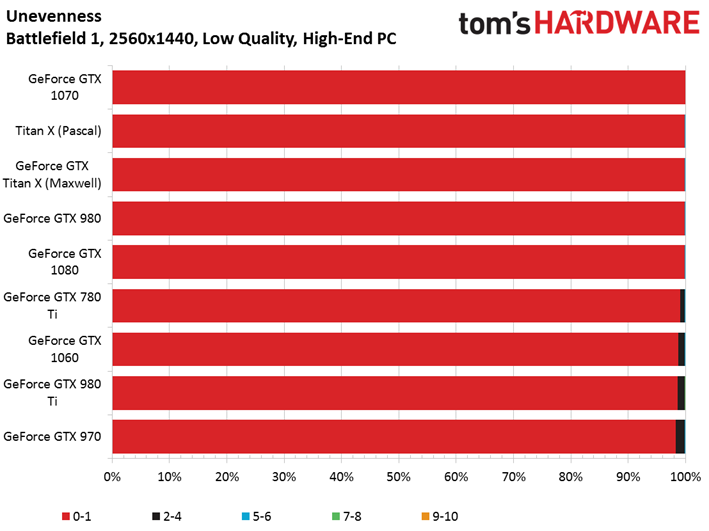

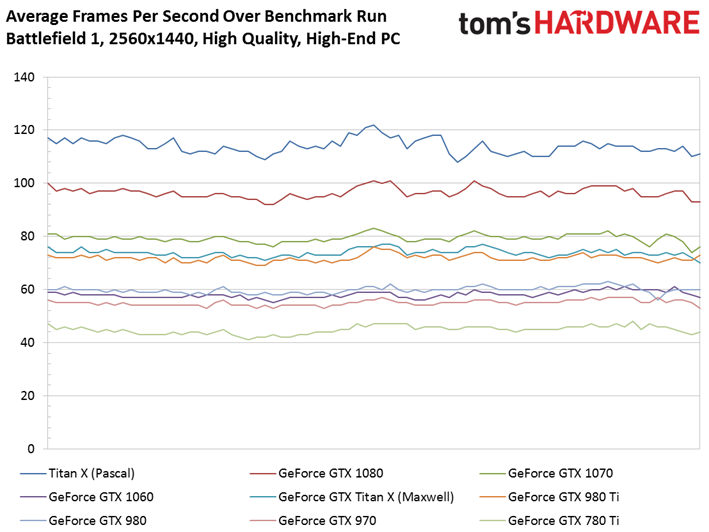

Notice that the GeForce cards’ minimum frame rates are, at most, 20 FPS or so behind the averages. Flip over to the frame rate over time chart, and you’ll see relatively consistent trend lines. We’ll compare those results to AMD’s Radeons in the next set of charts because they differ from each other quite a bit.

In any case, even a GeForce GTX 780 Ti averages over 100 FPS at QHD. Playability isn’t an issue on a fast card using this game’s Low preset.

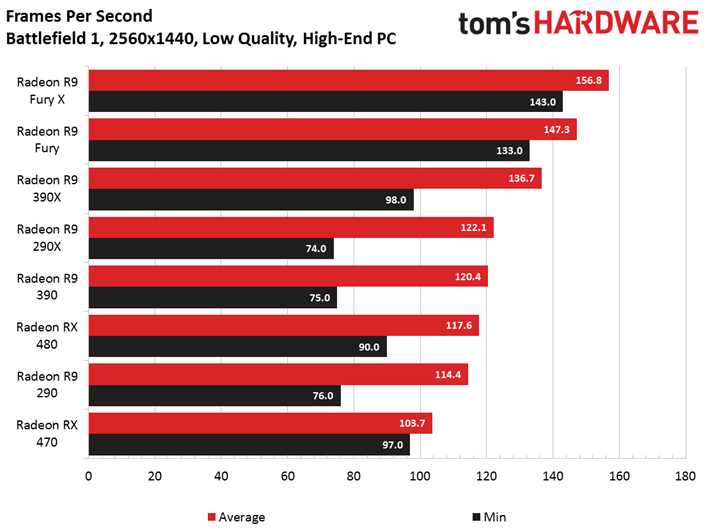

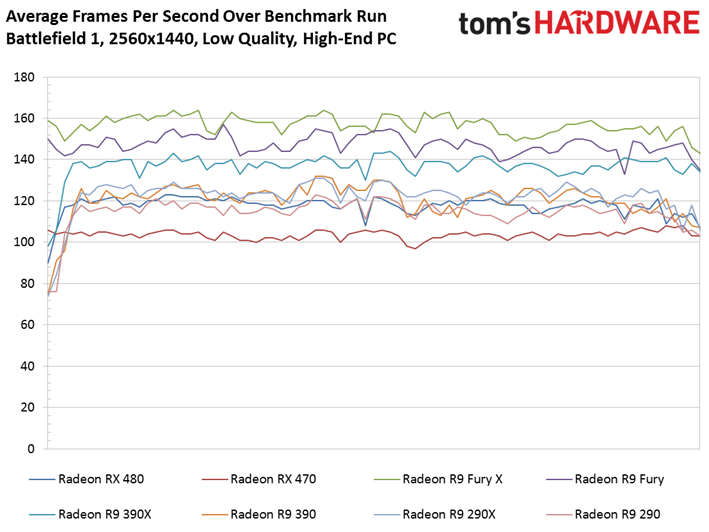

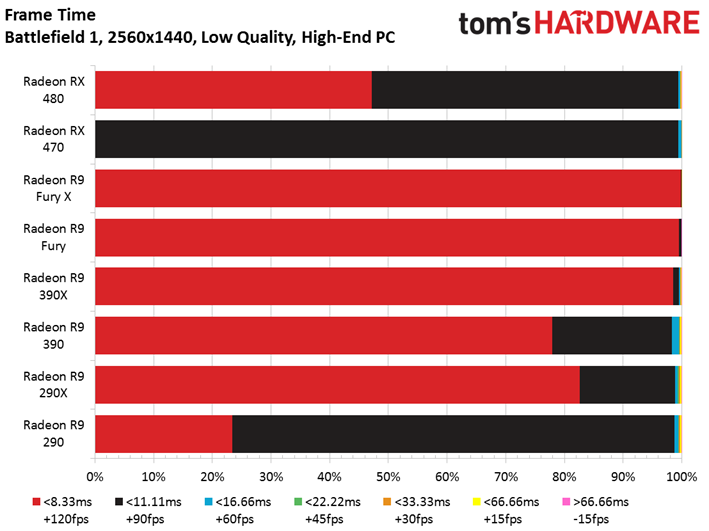

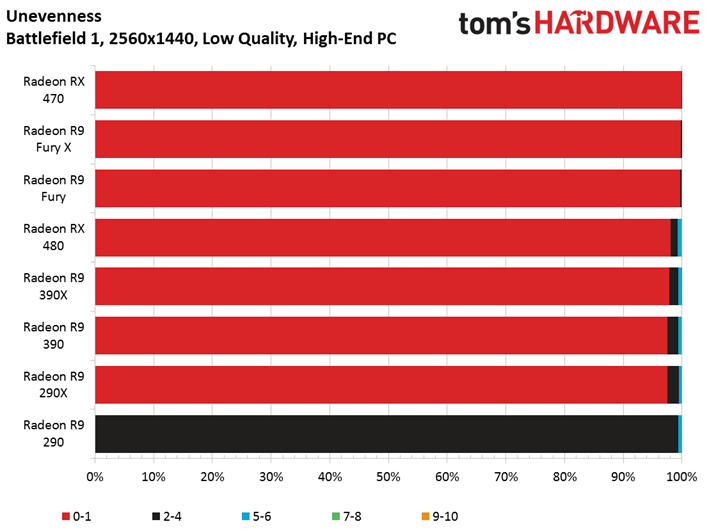

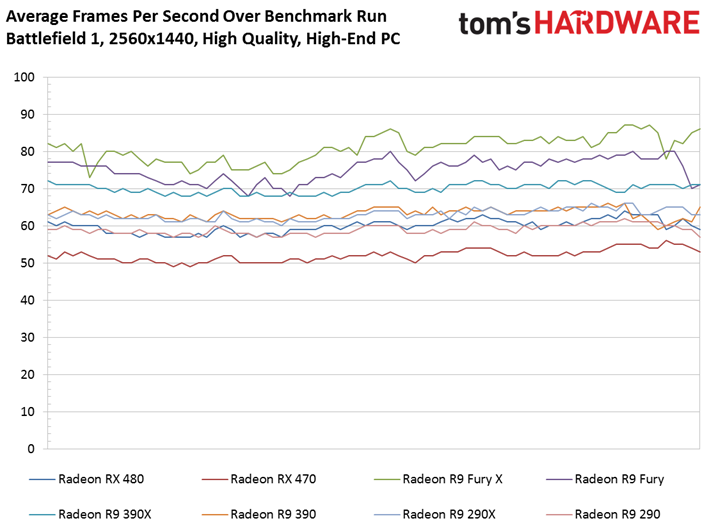

AMD’s last few generations also kick back average frame rates in excess of 100 FPS. But notice their minimums are all over the place. The average frame rate over time chart shows the HBM-equipped Fury X and Fury launching right into our benchmark sequence without missing a beat. But the boards with GDDR5 take a few seconds to get up to speed. The only exception is Radeon RX 470, which starts higher than several faster cards, but ultimately remains the chart’s slowest contender.

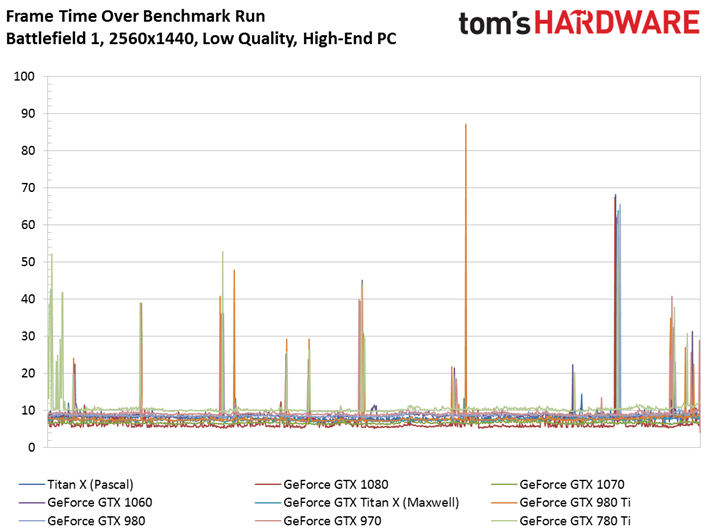

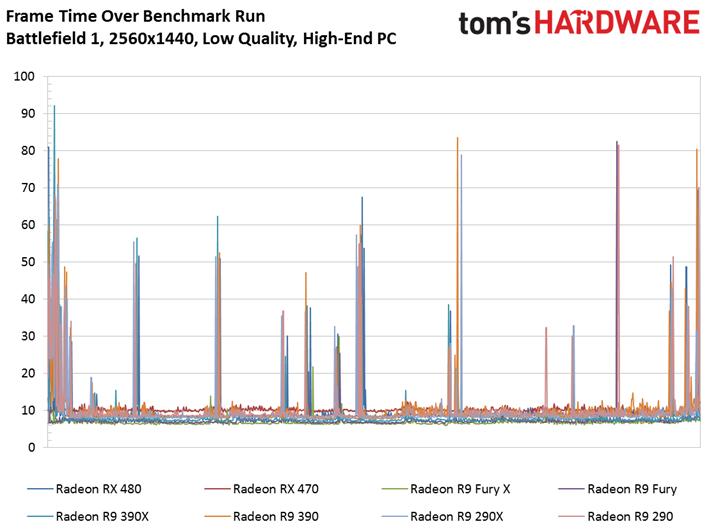

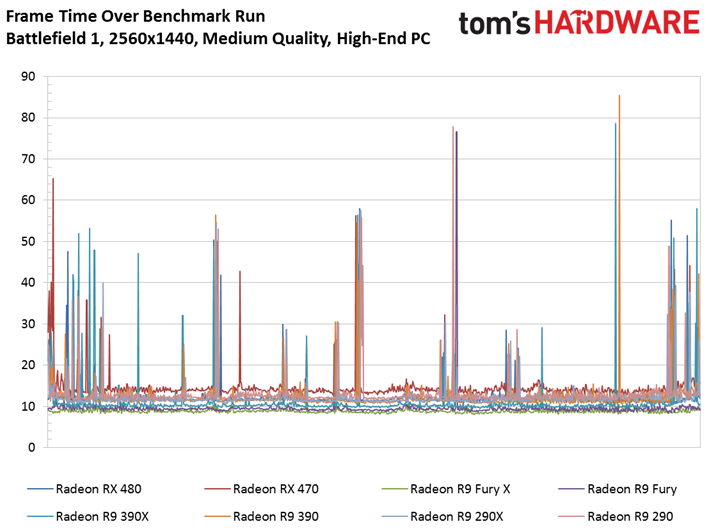

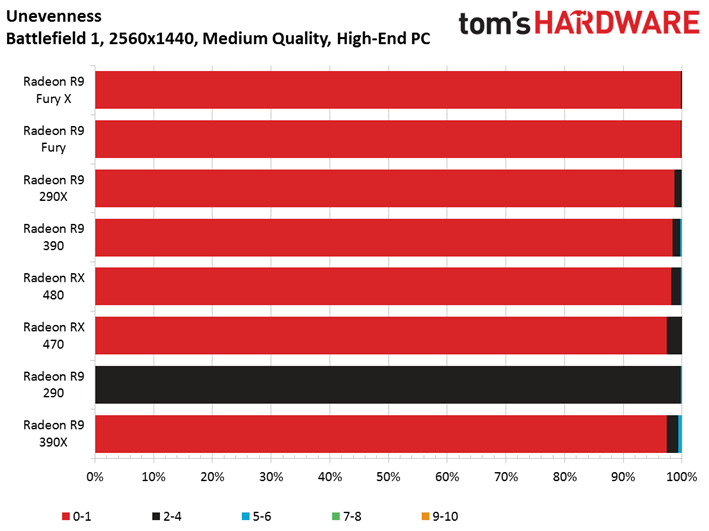

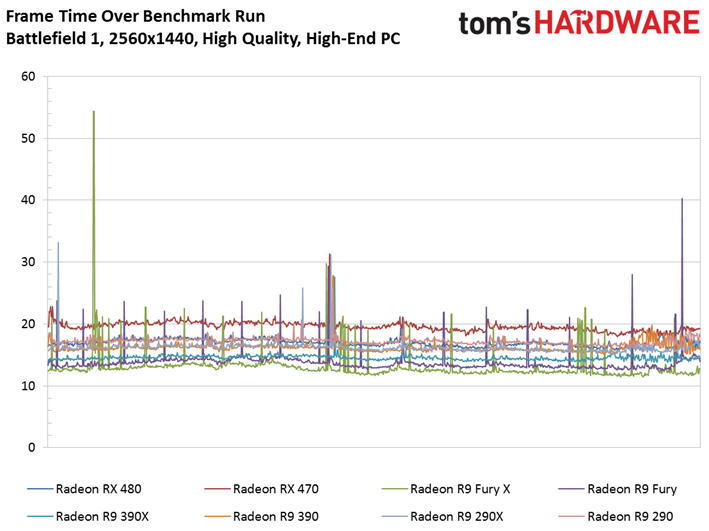

Certain events trigger frame time spikes across the Radeon line-up. We’re thinking these correspond to explosions during our run that “shake” the camera. The GeForce cards experience a similar phenomenon, though the effects don’t appear as pronounced or as frequent.
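One rough way to pick spikes like these out of a frame time log is to flag any frame that takes much longer than the run's median. The sketch below assumes a plain list of frame times in milliseconds; the function name, the 2x threshold, and the sample values are all illustrative assumptions rather than our measured data or the method behind our charts.

```python
from statistics import median

def find_frame_time_spikes(frame_times_ms, factor=2.0):
    """Return (index, frame_time) pairs that exceed factor x the run's median.

    The 2x default is an arbitrary, illustrative threshold.
    """
    baseline = median(frame_times_ms)
    return [(i, t) for i, t in enumerate(frame_times_ms) if t > factor * baseline]

# Made-up frame times in milliseconds, for illustration only.
sample = [8.0, 8.2, 7.9, 8.1, 21.5, 8.0, 8.3, 7.8, 19.0, 8.1]
spikes = find_frame_time_spikes(sample)
print(spikes)
```

A median baseline is used instead of a mean so that the spikes themselves don't drag the threshold upward.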

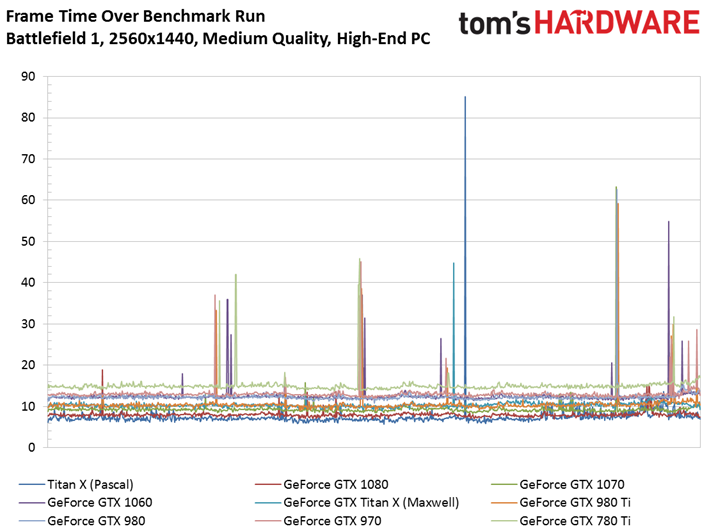

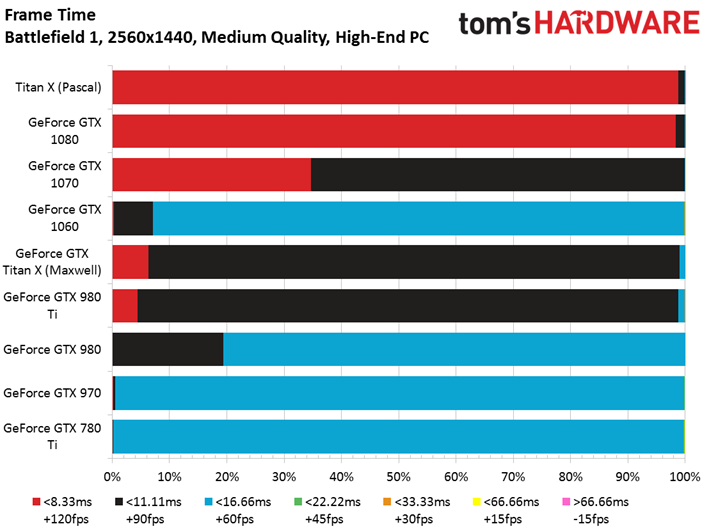

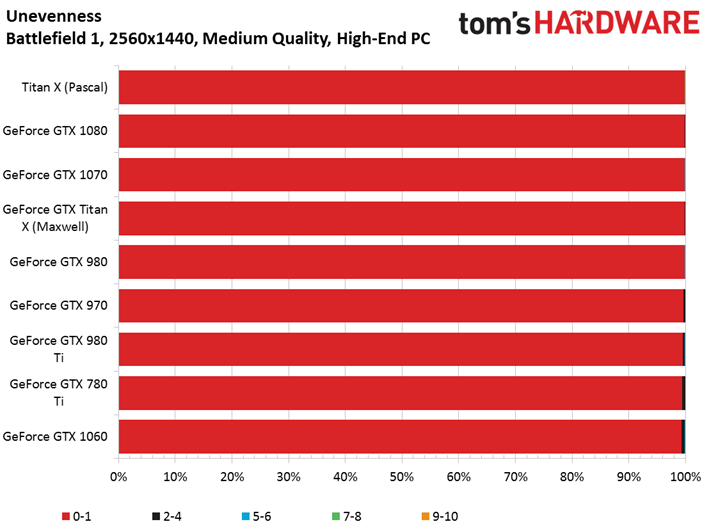

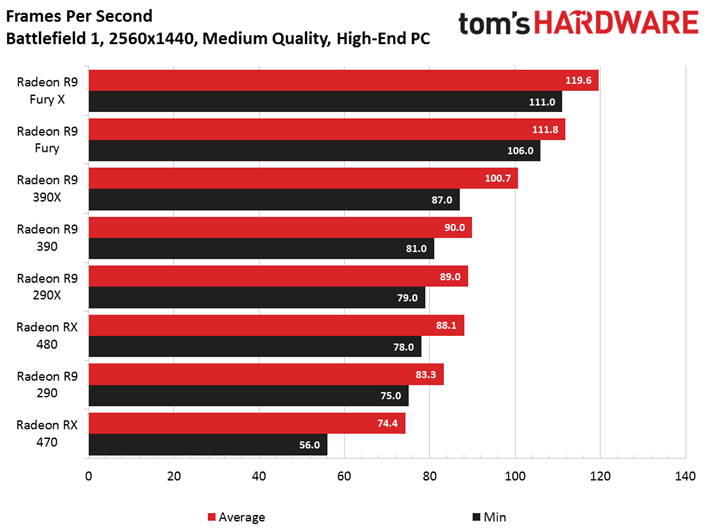

Medium Quality Preset

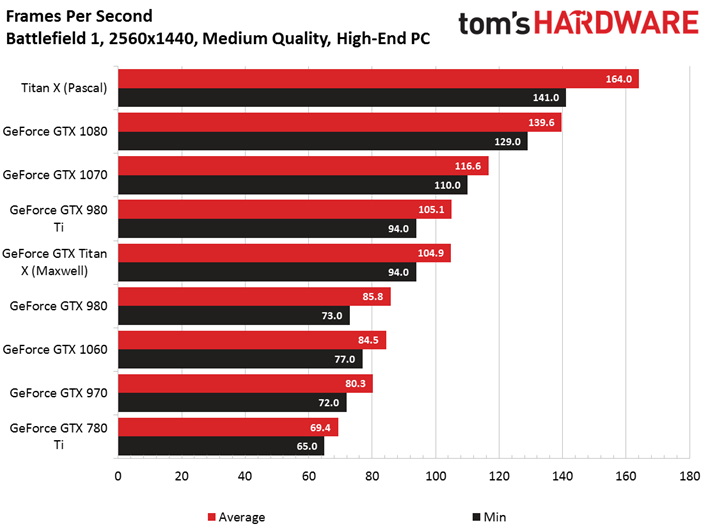

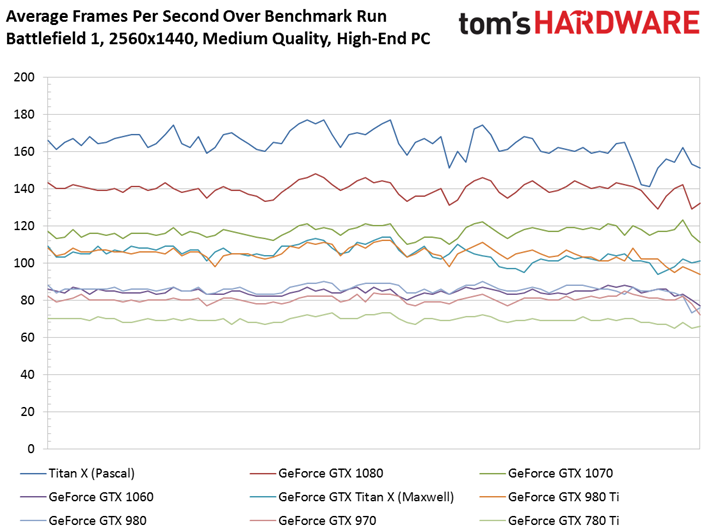

A step up to the Medium preset has a big impact on how Battlefield 1 looks, and it affects performance just as significantly. Even so, our slowest card, the GeForce GTX 780 Ti, keeps its nose above 60 FPS through the whole run. We’re waiting to see if the 780 Ti’s 3GB of memory becomes a more prominent bottleneck at higher detail settings or resolutions. But for now, a $500 GeForce GTX 1080 is almost exactly twice as fast as the 780 Ti, which sold for $700 three years ago.
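To put that comparison in performance-per-dollar terms, here is a quick back-of-the-envelope sketch. It treats "almost exactly twice as fast" as a flat 2.0x, uses the two prices quoted above, and ignores inflation; the variable names are ours and purely illustrative.

```python
# Relative performance, normalized to the GeForce GTX 780 Ti (assumed 2.0x for the 1080).
perf_780_ti = 1.0
perf_1080 = 2.0

# Prices quoted in the text, in US dollars (not inflation-adjusted).
price_780_ti = 700.0
price_1080 = 500.0

# Performance per dollar for each card, then the ratio between them.
value_780_ti = perf_780_ti / price_780_ti
value_1080 = perf_1080 / price_1080
value_ratio = value_1080 / value_780_ti

print(f"GTX 1080 delivers about {value_ratio:.1f}x the performance per dollar")
```

Under those assumptions, the GTX 1080 works out to roughly 2.8 times the performance per dollar of the 780 Ti at its original price.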

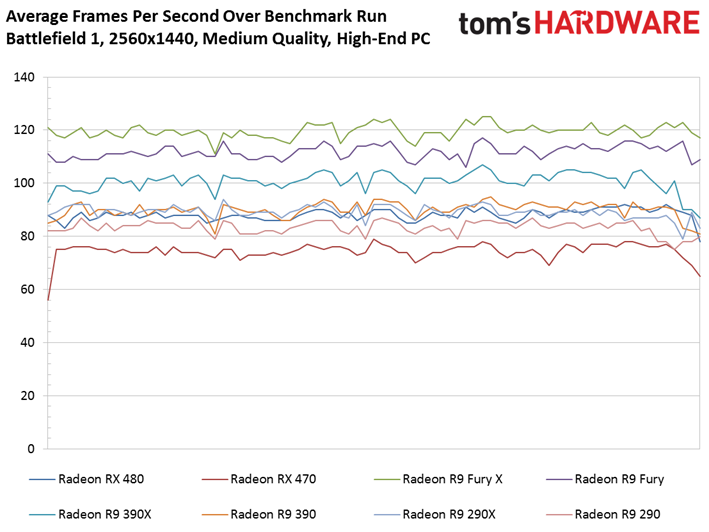

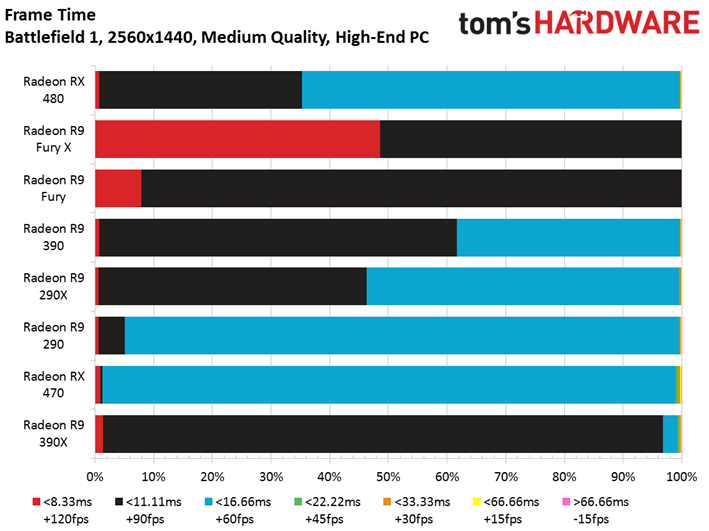

More demanding detail settings smooth out the performance inconsistency most Radeon cards experienced during the first few seconds of our benchmark sequence.

Both Fiji-based boards enjoy a significant advantage over the rest of AMD’s portfolio. The Radeon R9 Fury X does battle with Nvidia’s GeForce GTX 1070, while the vanilla Fury is a bit faster than GeForce GTX 980 Ti.

Back in 2015, GeForce GTX 980 Ti was generally quicker than Radeon R9 Fury X. So it’s a pretty big deal that two years later, AMD beats that same card with its Radeon R9 Fury.

Meanwhile, the Radeon RX 480 8GB is quicker than GeForce GTX 980 and 1060 6GB.

High Quality Preset

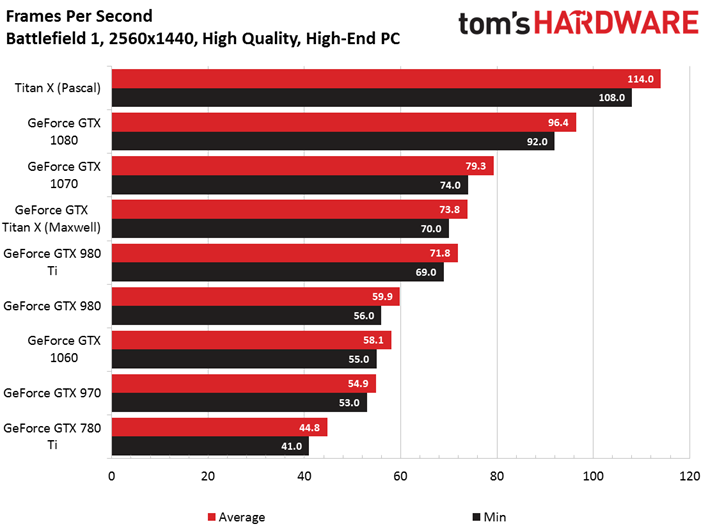

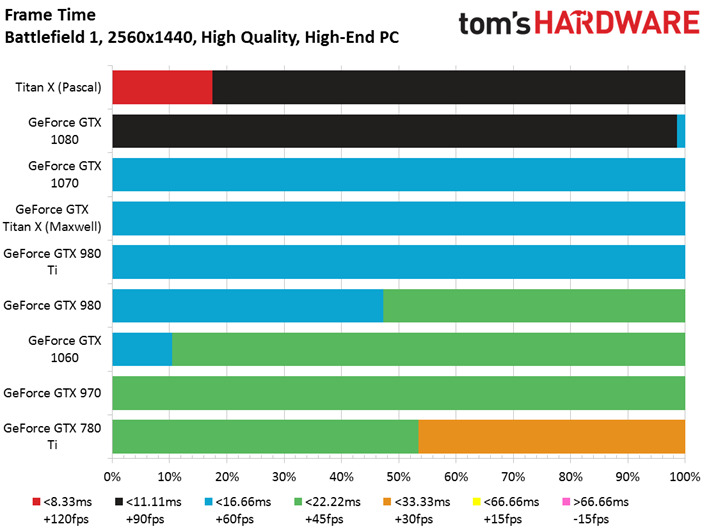

Gamers sinking big bucks into high-end hardware want to see games the way their developers intended, with graphics quality options as high as possible. By simply dialing up to the High preset, rather than Medium, a mid-range card like GeForce GTX 1060 6GB sheds ~31% of its average frame rate, dropping from 84.5 to 58.1 FPS. With that said, even a GeForce GTX 780 Ti maintains >40 FPS through our run.
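The ~31% figure follows directly from the two averages quoted above. A minimal sketch of the arithmetic (the helper name is ours):

```python
def percent_drop(before_fps, after_fps):
    """Percentage of the original frame rate lost when moving from before to after."""
    return (before_fps - after_fps) / before_fps * 100.0

# GeForce GTX 1060 6GB averages from the text: Medium vs. High preset.
drop = percent_drop(84.5, 58.1)
print(f"{drop:.1f}% of the average frame rate is lost")
```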

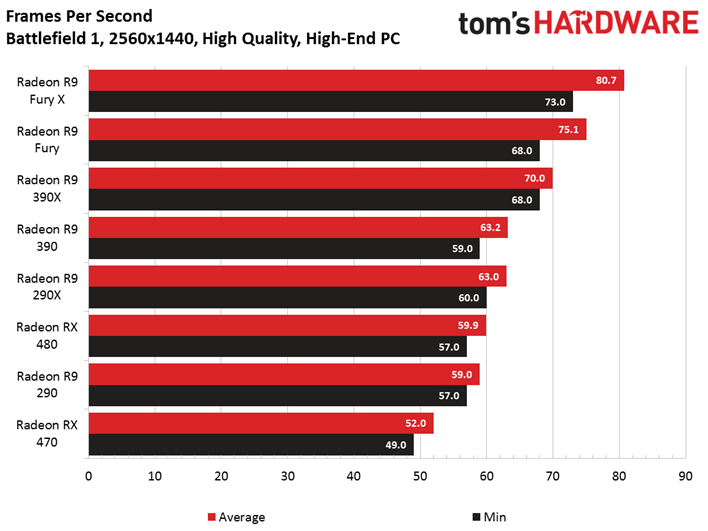

AMD’s fastest cards can’t compete with Nvidia’s, but the Radeon R9 Fury X does fare well against the GeForce GTX 1070. Unfortunately, Fiji-based boards are no longer readily available.

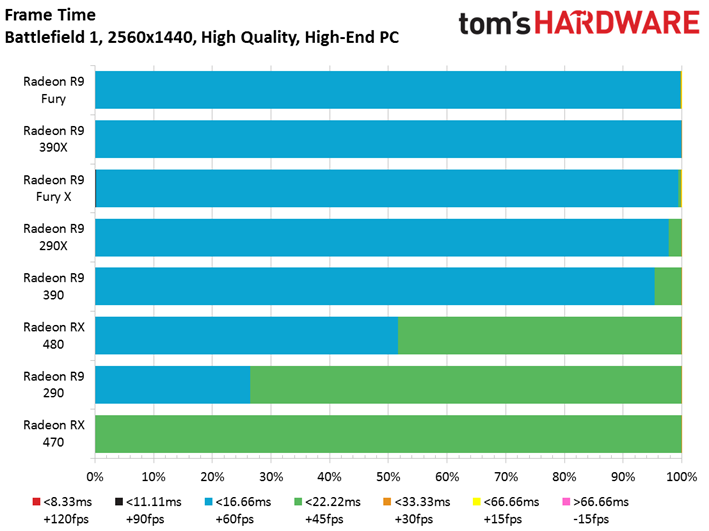

The Ellesmere-based Radeon RX 480 and 470 are, though. Both serve up playable performance at 2560x1440 using Battlefield 1’s High preset. Radeon RX 480 posts frame rates similar to a GeForce GTX 980’s, narrowly beating the 1060 6GB.

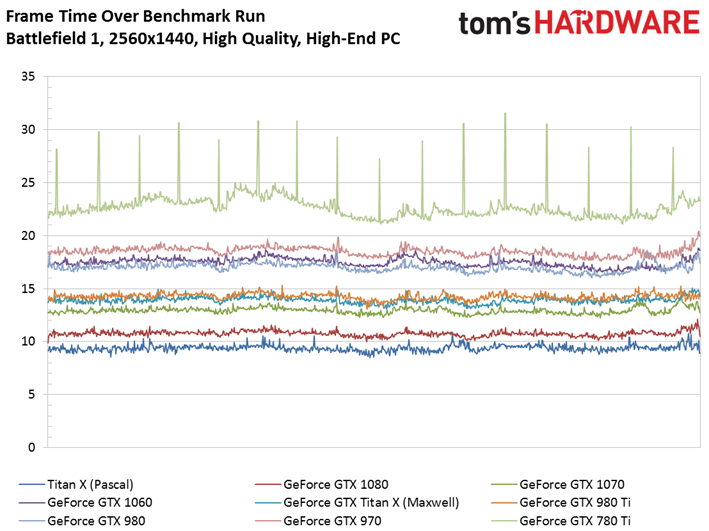

Our frame time over time charts show both HBM-equipped Fiji boards enduring small frame time spikes at regular intervals, similar to what we just saw from Nvidia’s GeForce GTX 780 Ti. These artifacts are interesting because they persist as we shift to Ultra quality, but are deemphasized as other influences cause much more significant frame time variance. Those aren’t the only 4GB cards we’re testing, so it’s not clear what imposes the evenly-spaced spikes.

Ultra Quality Preset

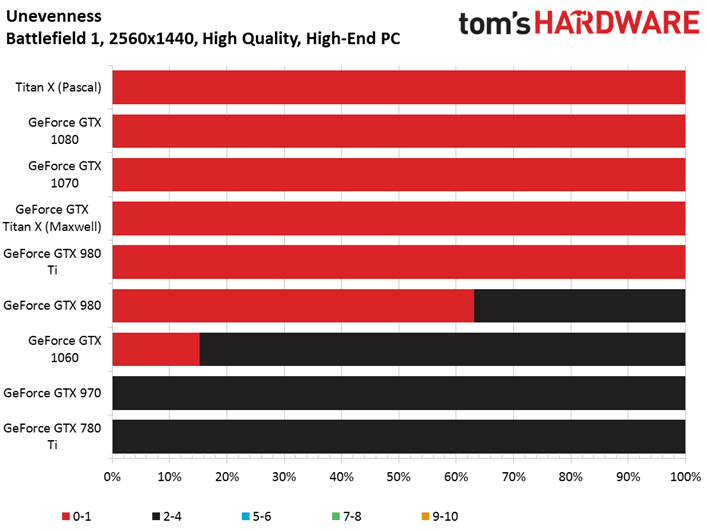

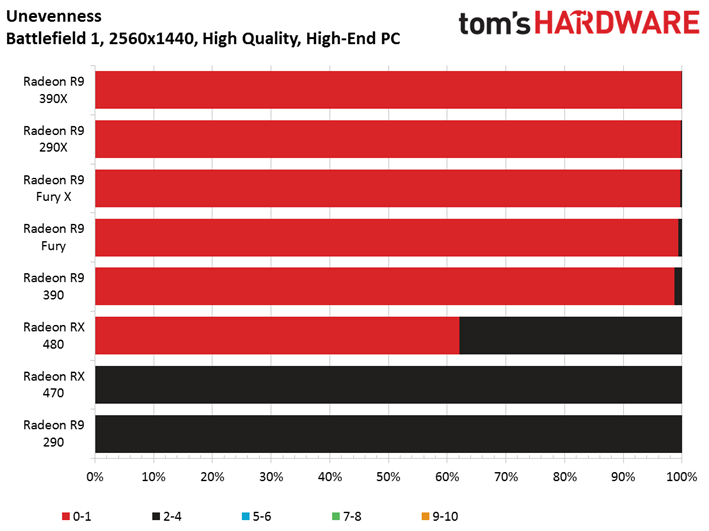

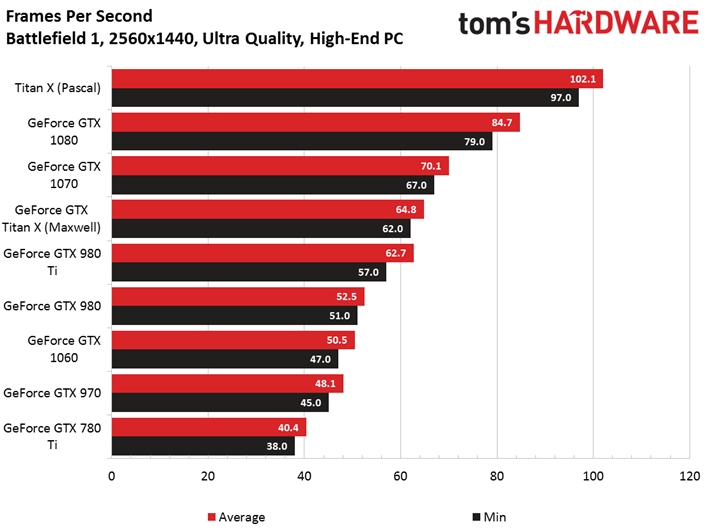

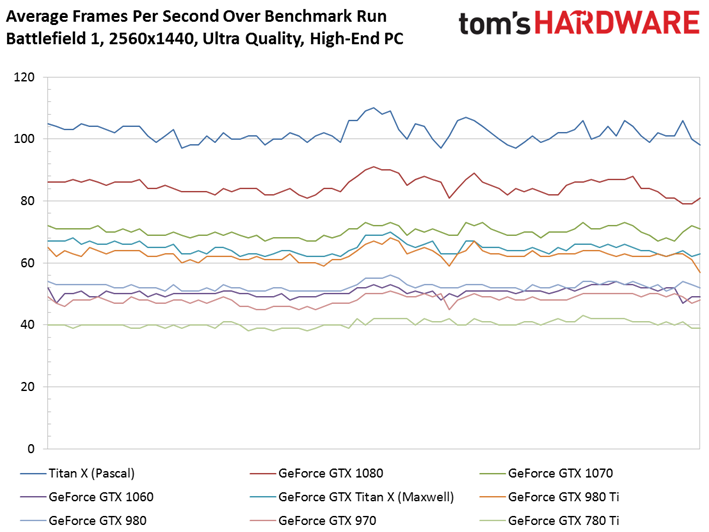

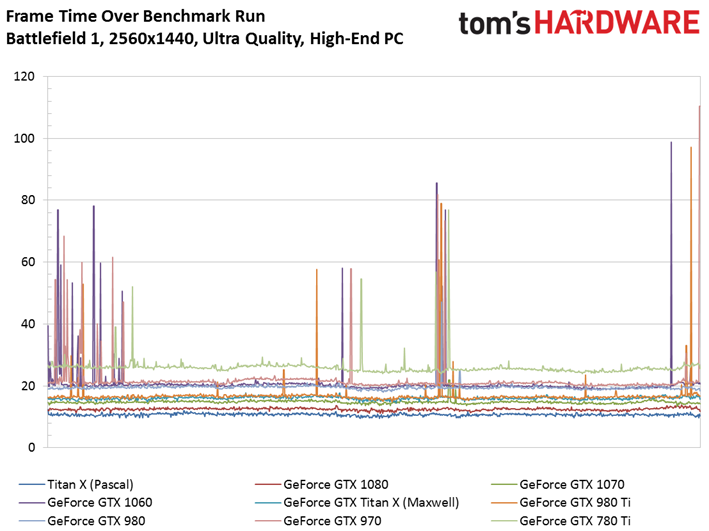

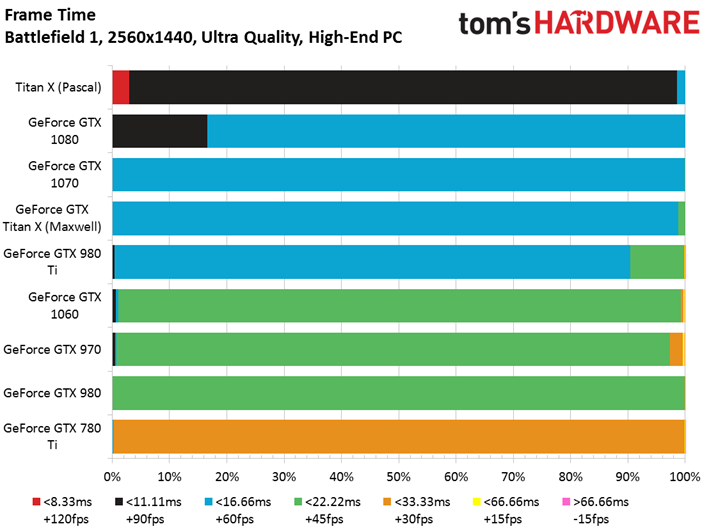

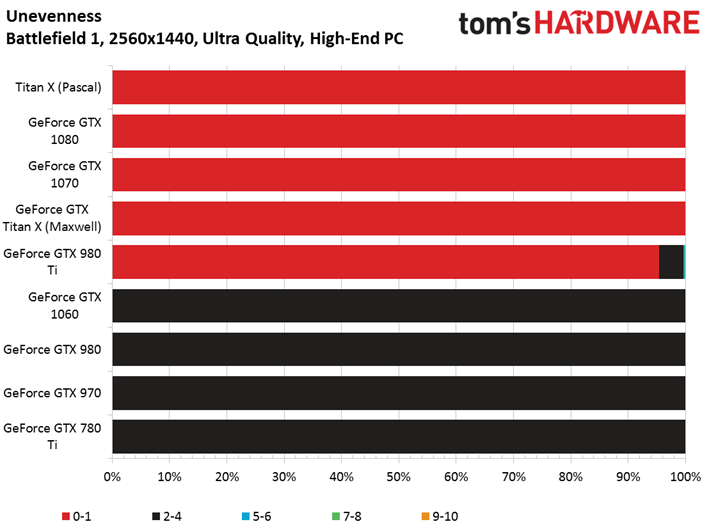

Our unevenness index makes the case that all of these GeForce cards serve up consistent-enough performance to be considered playable. The GeForce GTX 1060 6GB, 980 Ti, 970, and 780 Ti incur some fairly significant frame time spikes at similar points in our benchmark run. Incidentally, those are the cards at the bottom of the aforementioned index, which reflects smoothness.

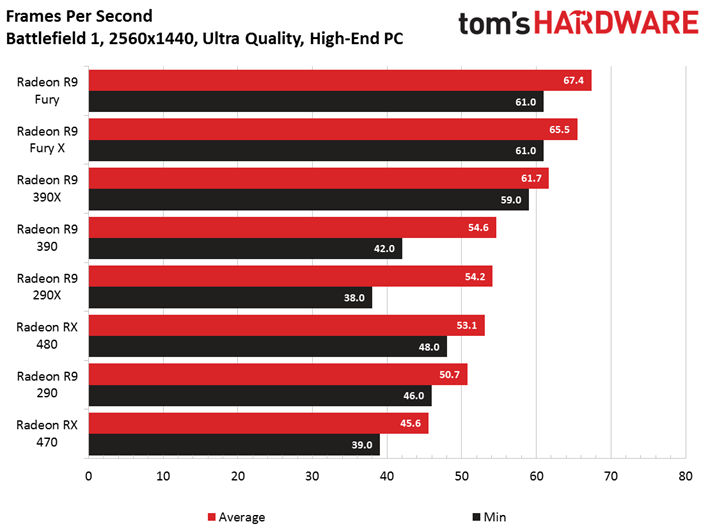

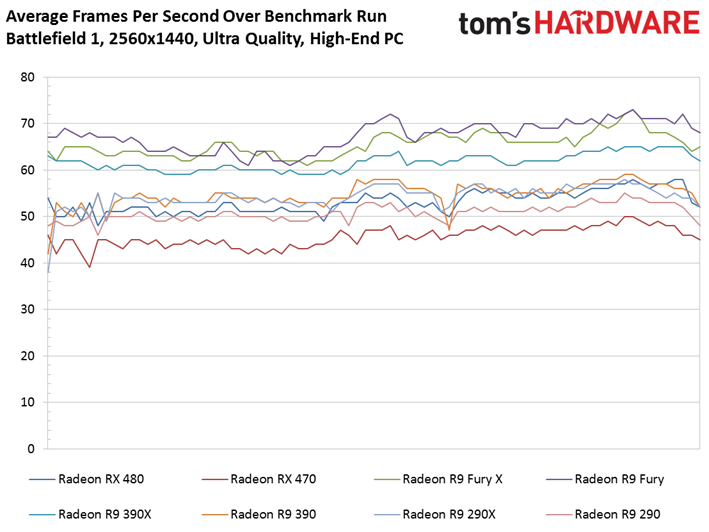

The Fiji-based cards continue beating GeForce GTX 980 Ti, but the R9 Fury X now shows up behind GeForce GTX 1070.

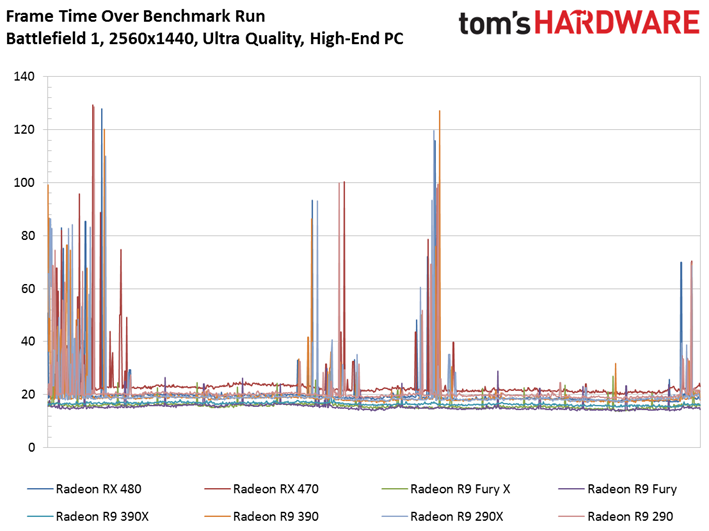

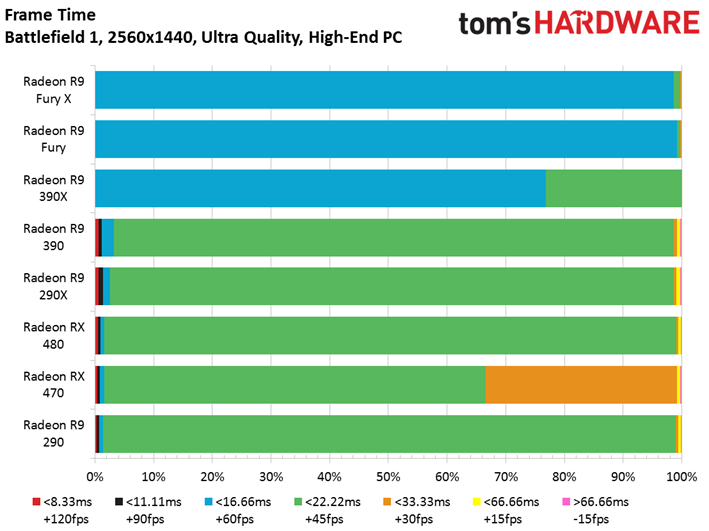

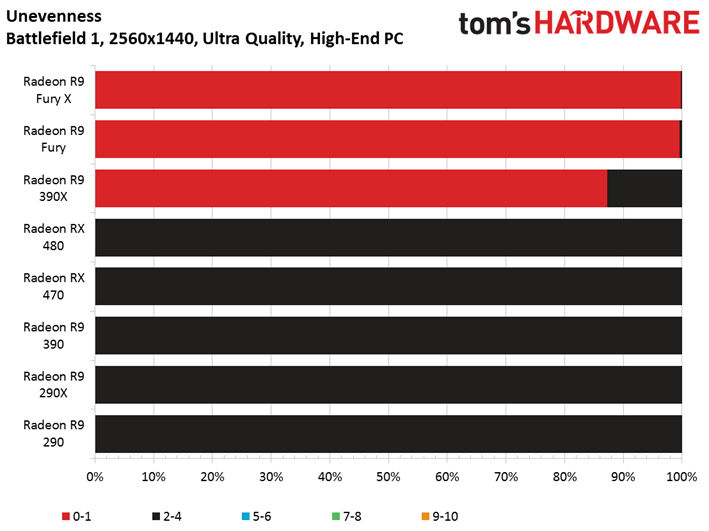

Big frame time variance at the start of our run takes a toll on minimum frame rates and affects the smoothness metric, where five Radeon cards demonstrate noticeable stutter. Even so, most cards are playable.

envy14tpe Wow. The amount of work in putting this together. Thanks, from all the BF1 gamers out there. You knocked my socks off, and are pushing me to upgrade my GPU.

computerguy72 Nice article. Would have been interesting to see the 1080ti and the Ryzen 1800x mixed in there somewhere. I have a 7700k and a 980ti it would be good info to get some direction on where to take my hardware next. I'm sure other people might find that interesting too.

Achaios Good job, just remember that these "GPU showdowns" don't tell the whole story b/c cards are running at Stock, and there are GPU's that can get huge overclocks thus performing significantly better.

Case in point: GTX 780TI

The 780TI featured here runs at stock which was 875 MHz Base Clock and 928 MHz Boost Clock, whereas the 3rd party GPU's produced ran at 1150 MHz and boosted up to 1250-1300 MHz. We are talking about 30-35% more performance here for this card which you ain't seeing here at all.

xizel Great write up, just a shame you didn't use any i5 CPUs, I would have really liked to see how an i5 6600k competes with its 4 cores against the HT i7s

Verrin Wow, impressive results from AMD here. You can really see that Radeon FineWine™ tech in action.

And then you run in DX11 mode and it runs faster than DX12 across the board. Thanks for effort you put in this but rather pointless since DX12 has been nothing but pile of crap.

NewbieGeek @XIZEL My i5 6600k @4.6ghz and rx 480 get 80-90 fps max settings on all 32 v 32 multiplayer maps with very few spikes either up or down.

Jupiter-18 Fascinating stuff! Love that you are still including the older models in your benchmarks, makes for great info for a budget gamer like myself! In fact, this may help me determine what goes in my budget build I'm working on right now, which I was going to have dual 290x (preferably 8gb if I can find them), but now might have something else.