Battlefield 1 Performance In DirectX 12: 29 Cards Tested

Mainstream PC, 1920x1080

We begin our adventure with a fairly mainstream system consisting of an AMD FX-8320 processor, MSI’s 990FXA-GD80 motherboard, 16GB of G.Skill DDR3 memory operating at 1866 MT/s, and a 500GB Crucial MX200 SSD.

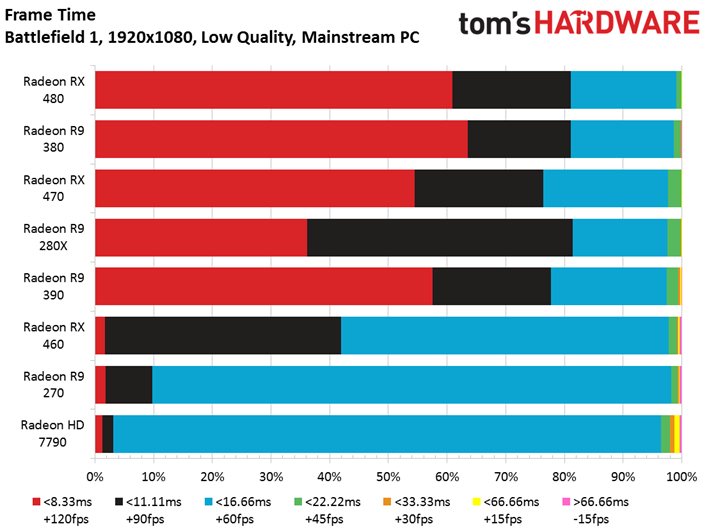

Low Quality Preset

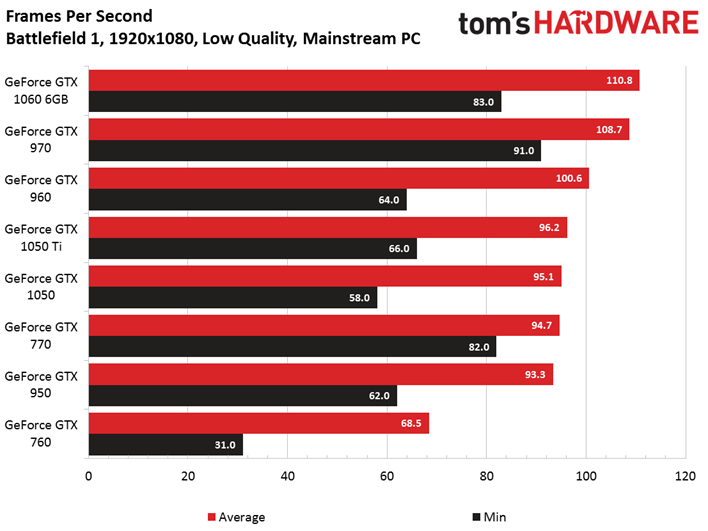

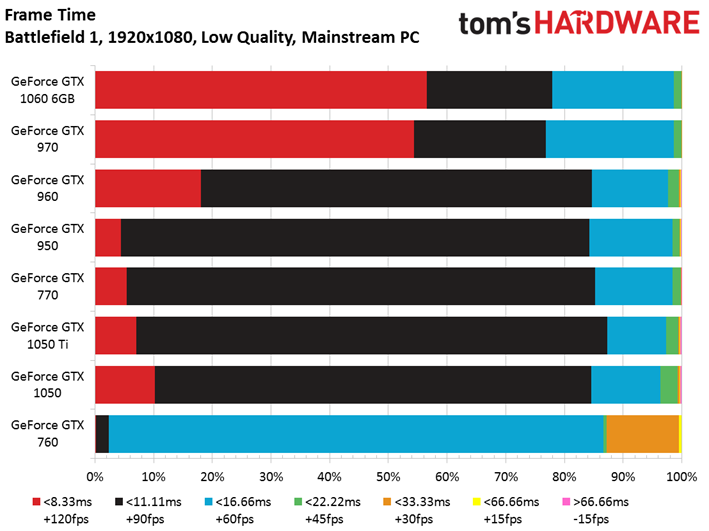

Across three generations of Nvidia architectures, dropping to Battlefield 1’s Low quality preset is great for ensuring playable frame rates if you can tolerate the loss of fidelity.

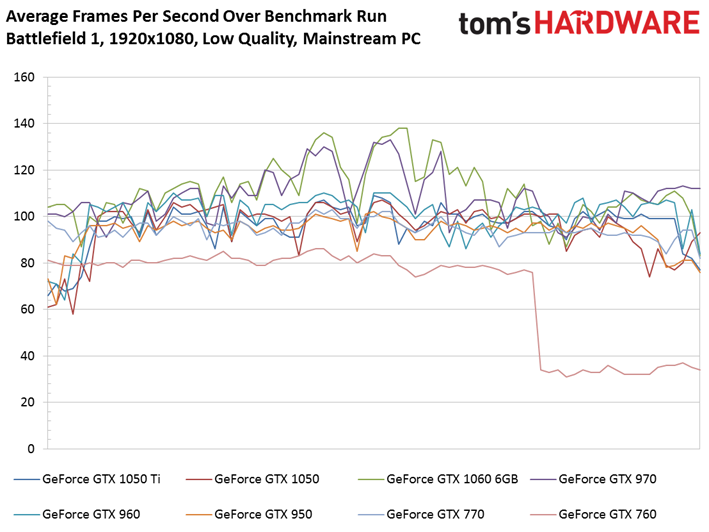

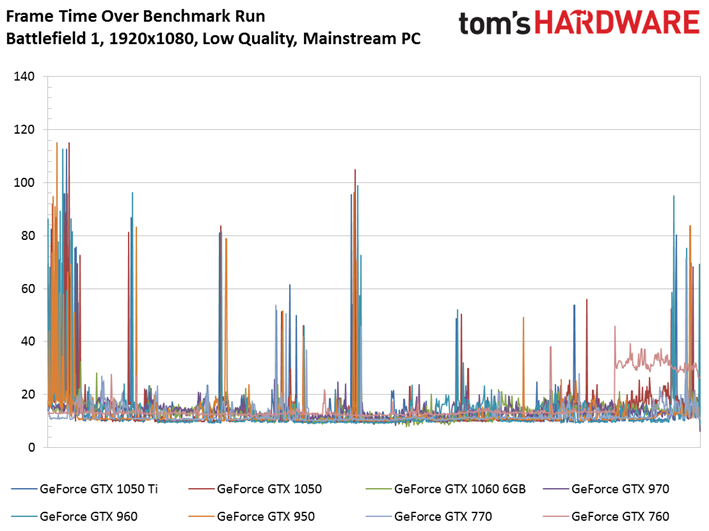

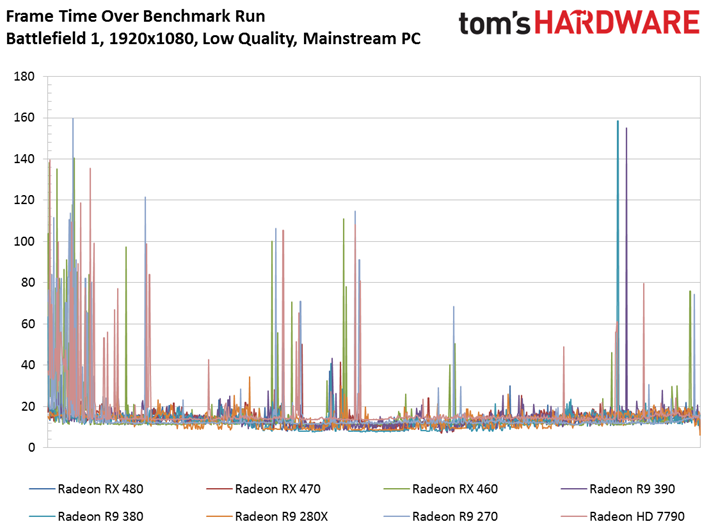

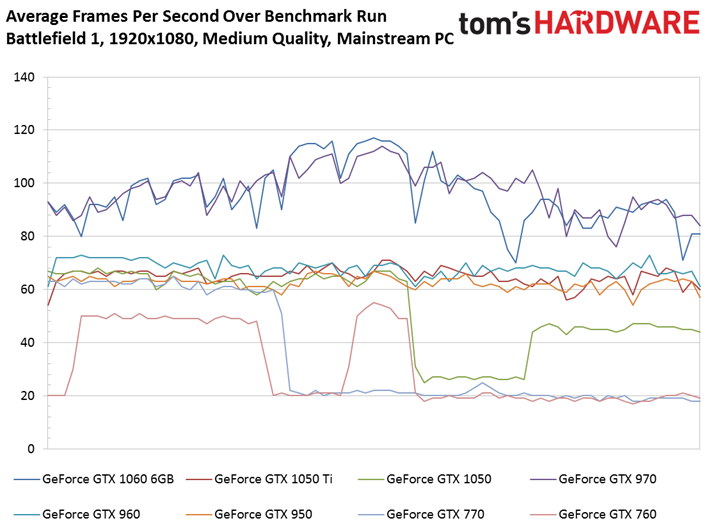

One card seems to suffer more than the rest: Nvidia’s GeForce GTX 760. It’s not the only board with 2GB of GDDR5 memory, but it clearly hits a wall toward the end of our benchmark run. This is a pattern we’ll see more of as the graphics details get turned up, allowing us to draw our first conclusion of this little exercise: 2GB of memory isn’t enough for 1920x1080, even under Battlefield’s most relaxed preset.

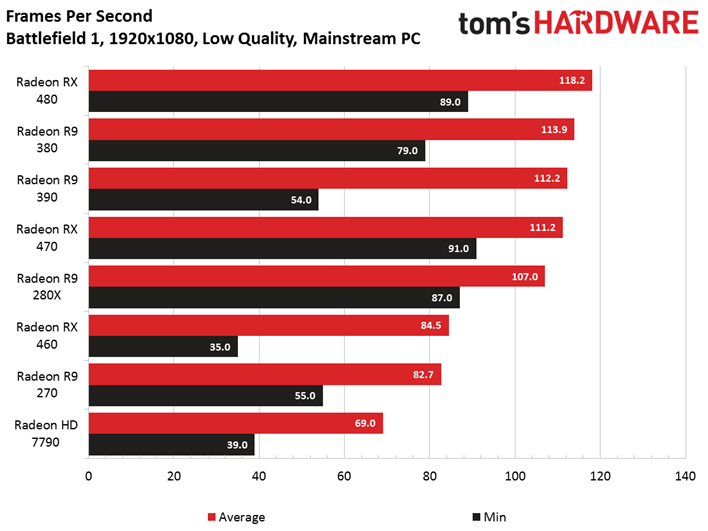

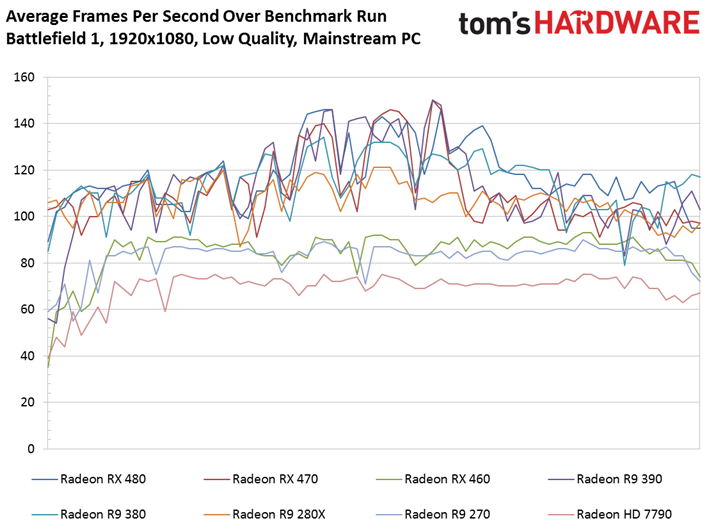

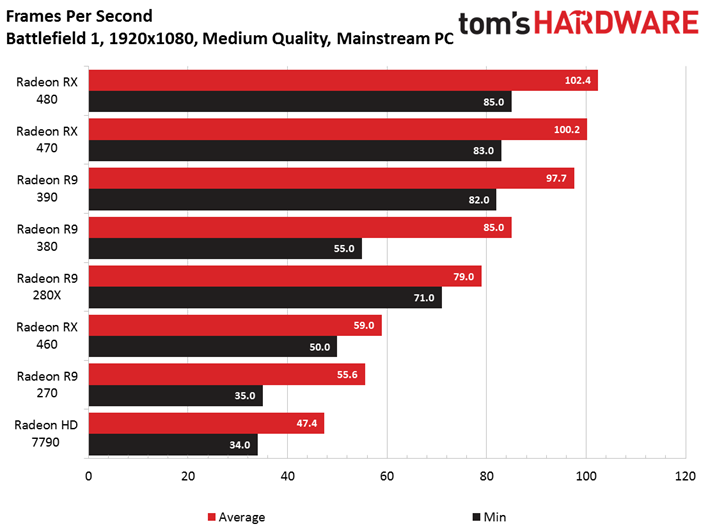

Right out of the gate, we see AMD’s Radeon RX 480 post higher average and minimum frame rate figures than Nvidia’s GeForce GTX 1060 6GB. In fact, even the Radeon RX 470 is faster in both metrics.

At the other end of the spectrum, our frame rate over time charts show 2GB Radeon cards running into performance trouble, characterized by low minimums at the beginning of our run. This typically happens when we skip part of the cutscene, causing the level to start while assets are still being loaded. Notice, though, that it’s most prevalent on our mainstream test PC; the issue is less pronounced on the Core i7-based box.
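Average and minimum frame rates, along with frame rate over time, are all derived from per-frame timing data. Our exact capture tooling and formulas aren't described on this page, so treat the following Python as a rough illustration only. It assumes a list of frame times in milliseconds, and the helper names (`average_fps`, `fps_per_second`, `minimum_fps`) are hypothetical. It defines the minimum as the slowest full one-second interval, which is one common convention; other tools instead use the single longest frame time.

```python
import math

def average_fps(frame_times_ms):
    """Average frame rate: frames rendered divided by total elapsed seconds."""
    total_seconds = sum(frame_times_ms) / 1000.0
    return len(frame_times_ms) / total_seconds

def fps_per_second(frame_times_ms):
    """Frames completed in each full second of the run (a frame rate over time series).

    A frame finishing exactly on a second boundary counts toward the second it
    closes; a trailing partial second is dropped.
    """
    full_seconds = int(sum(frame_times_ms) // 1000)
    counts = [0] * full_seconds
    elapsed_ms = 0.0
    for ft in frame_times_ms:
        elapsed_ms += ft
        # (0, 1000] ms -> second 0, (1000, 2000] ms -> second 1, and so on
        bucket = max(math.ceil(elapsed_ms / 1000.0) - 1, 0)
        if bucket < full_seconds:
            counts[bucket] += 1
    return counts

def minimum_fps(frame_times_ms):
    """Lowest per-second frame rate observed (hypothetical convention)."""
    series = fps_per_second(frame_times_ms)
    if not series:
        raise ValueError("need at least one full second of frame times")
    return min(series)

# Hypothetical run: one smooth second, then a stall like a card running short on memory
run = [10.0] * 100 + [50.0] * 20
print(average_fps(run))      # 60.0
print(fps_per_second(run))   # [100, 20]
print(minimum_fps(run))      # 20
```

The example shows why a respectable average can hide a severe dip: this made-up run averages 60 FPS even though one of its two seconds runs at just 20 FPS.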

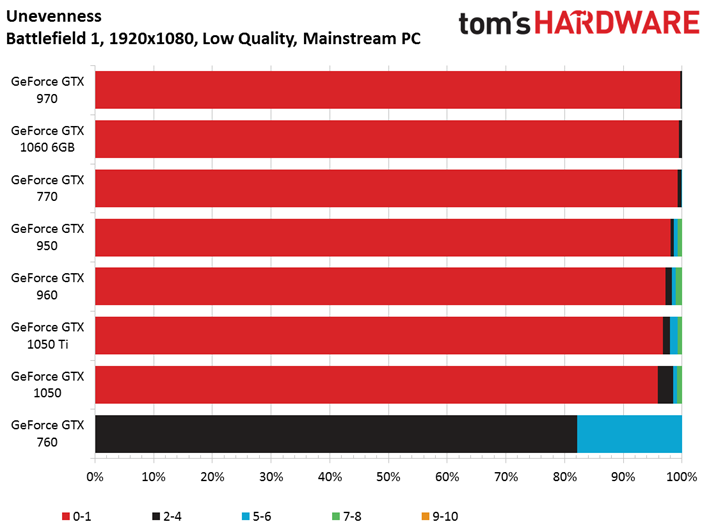

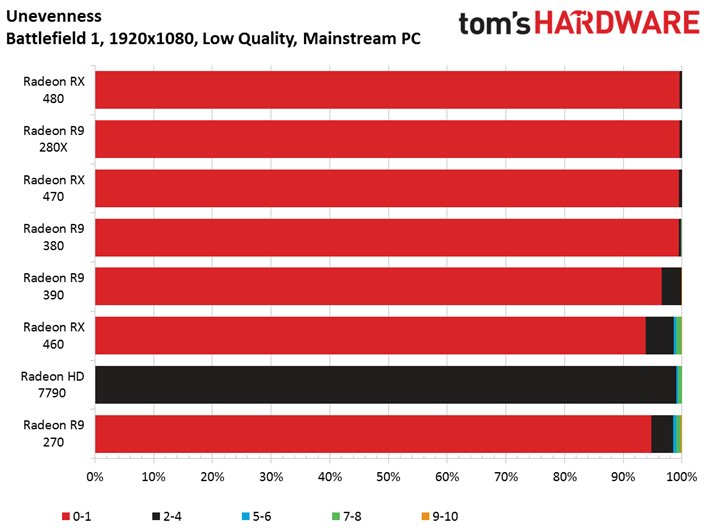

Nevertheless, our unevenness metric tells us all of these cards facilitate a fairly smooth experience.

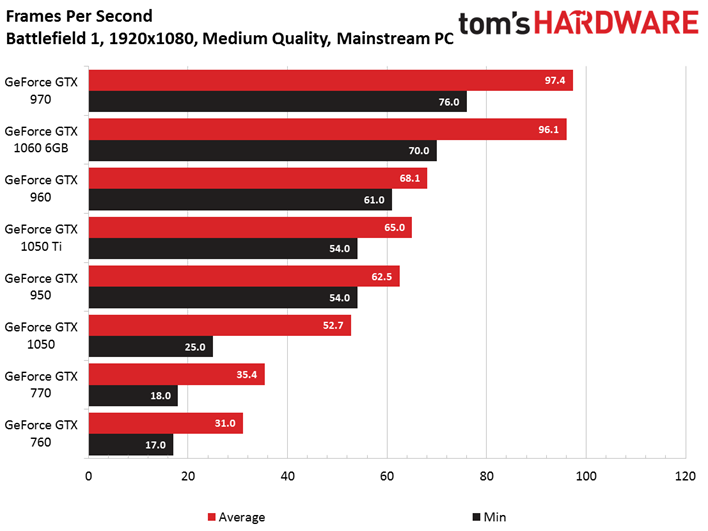

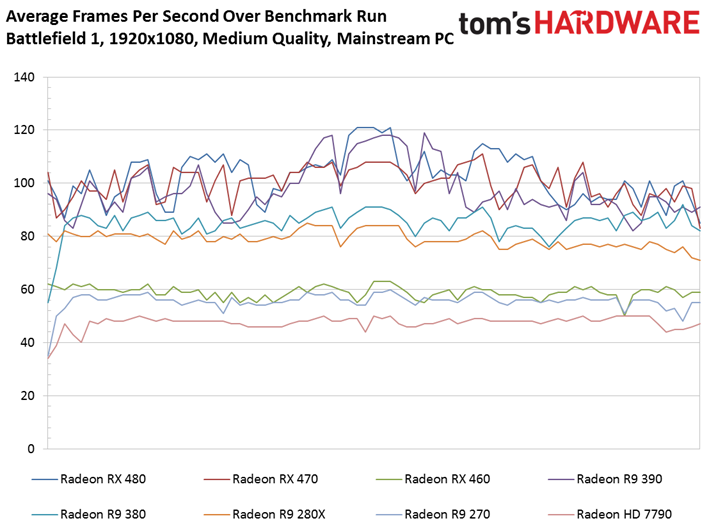

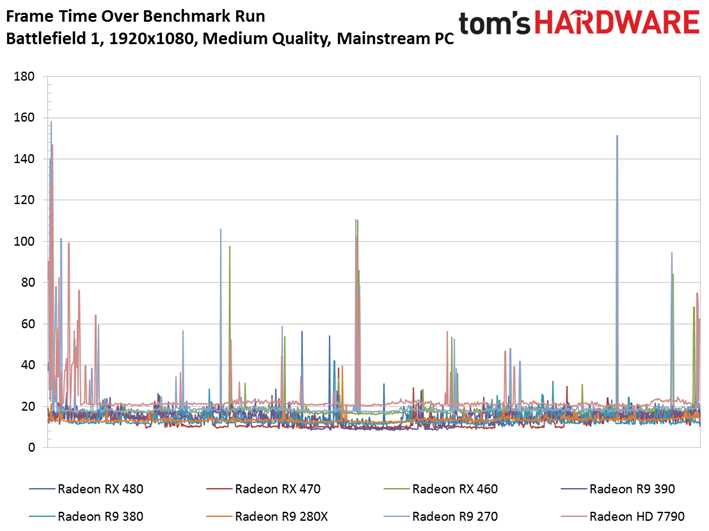

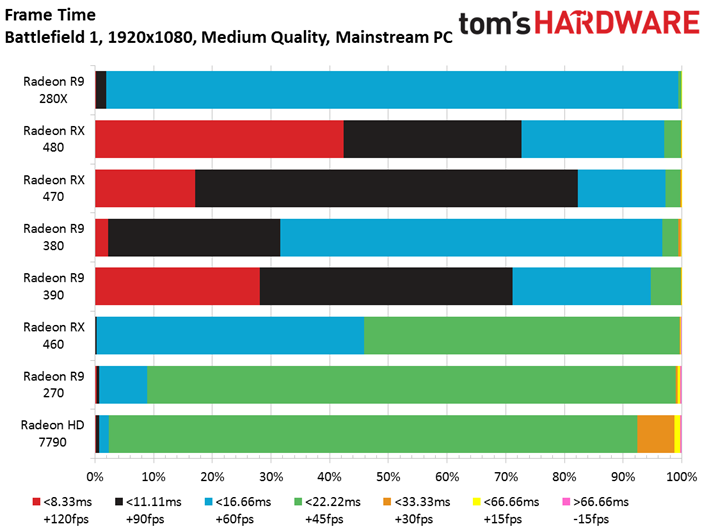

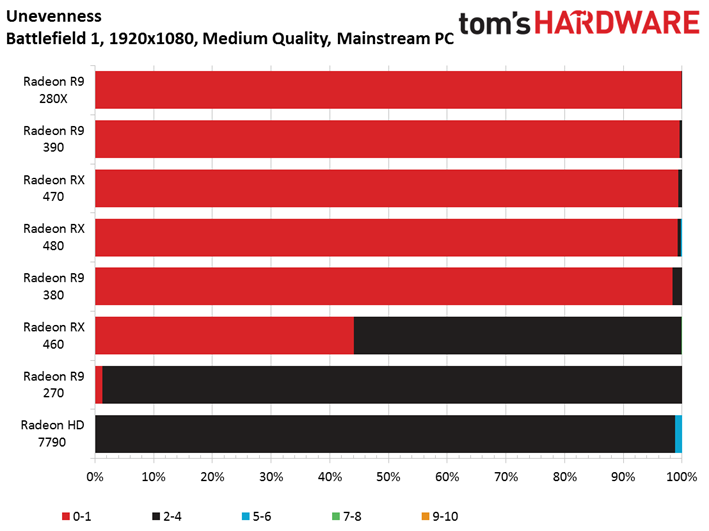

Medium Quality Preset

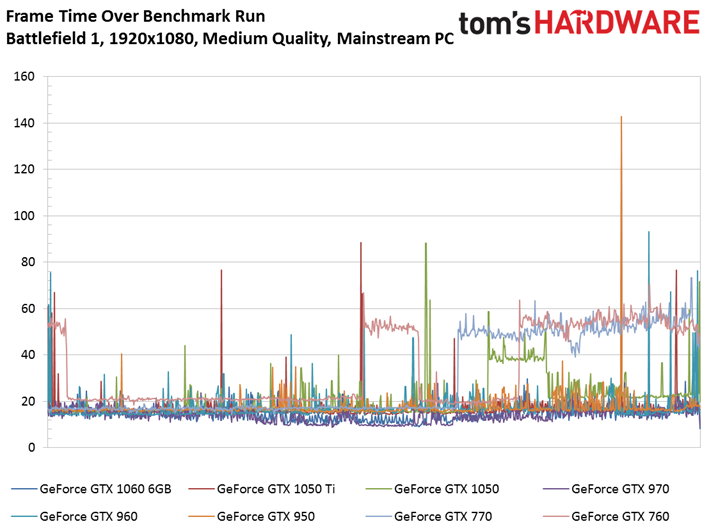

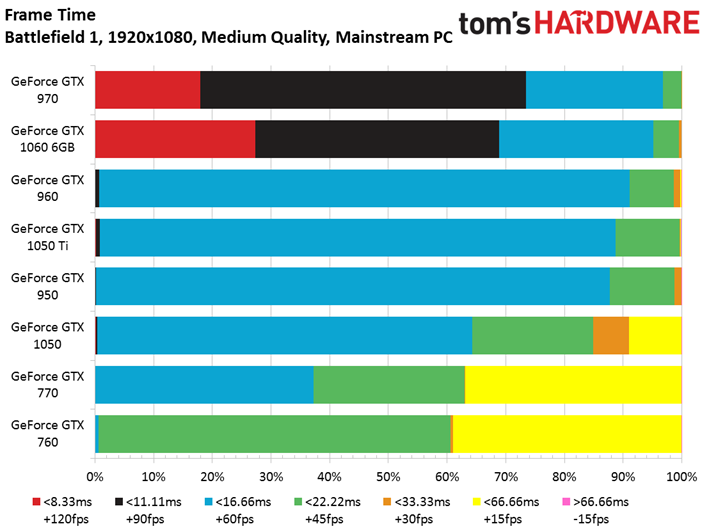

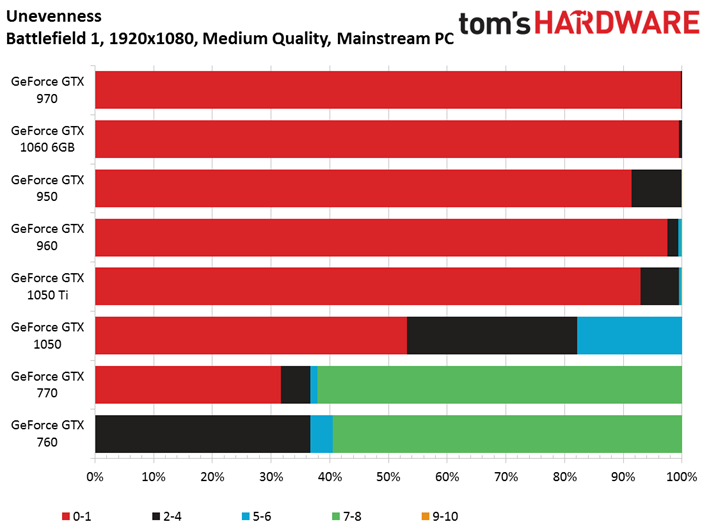

Although the GeForce GTX 970 and 1060 6GB handle a shift to medium quality gracefully enough, the rest of the field gets hammered into a fairly narrow performance range. Further, we now have three 2GB cards suffering severe performance loss at certain points during the benchmark sequence. For all intents and purposes, the GeForce GTX 760, 770, and 1050 are already unplayable (despite the 1050’s reasonable average frame rate).


AMD’s line-up doesn’t escape unscathed, either. The Radeon RX 460, R9 270, and HD 7790 are all quite a bit slower than the rest of the field. However, they’re much more consistent, so our unevenness measurement shows them to be playable.

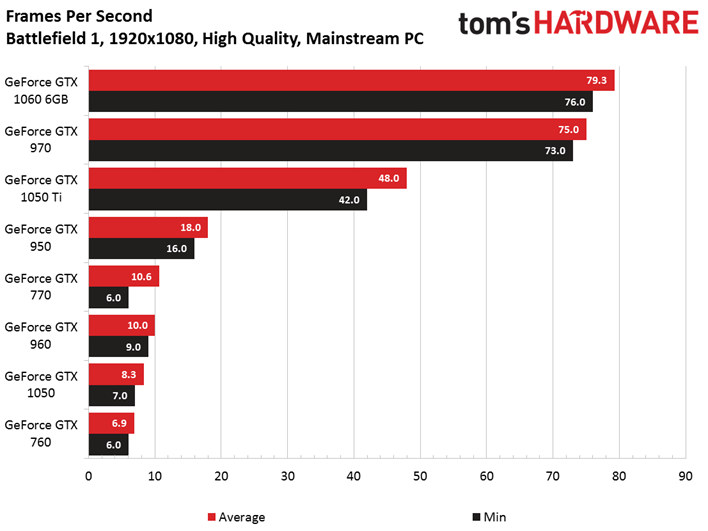

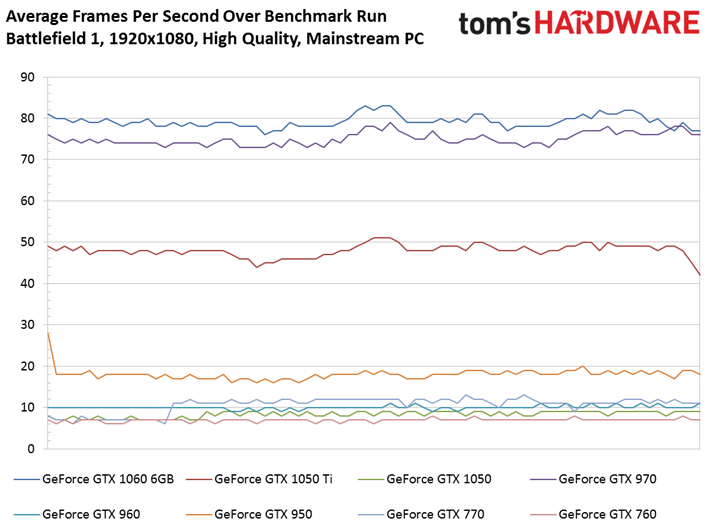

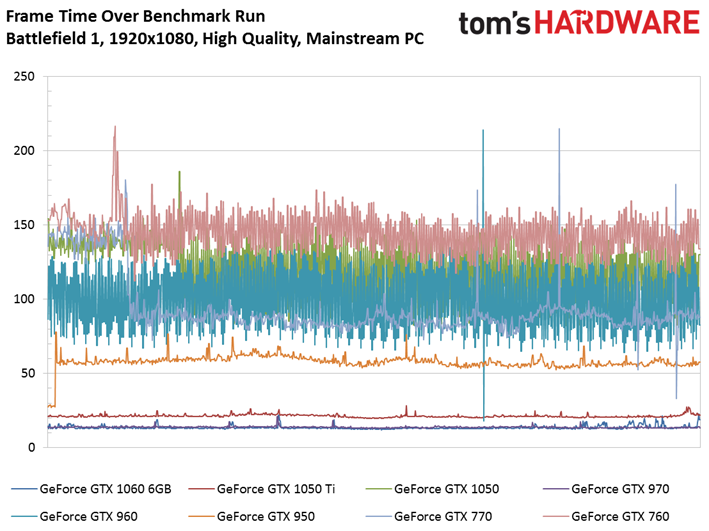

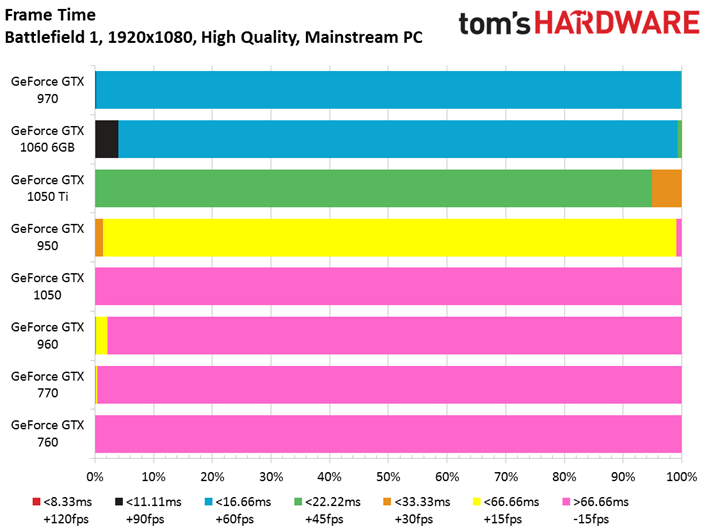

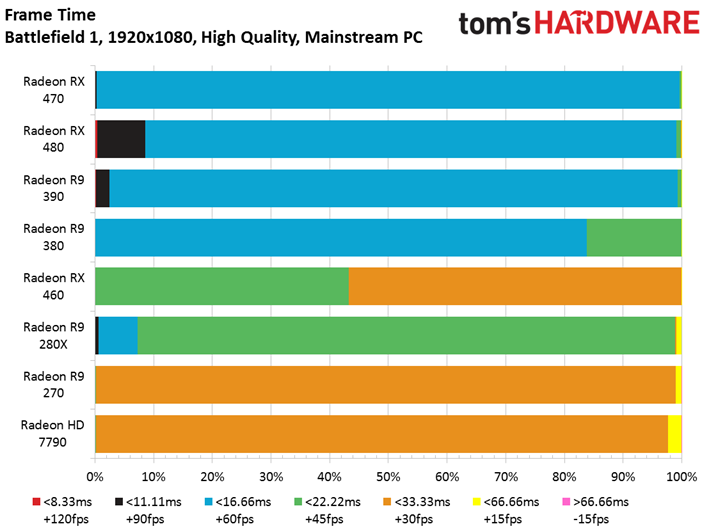

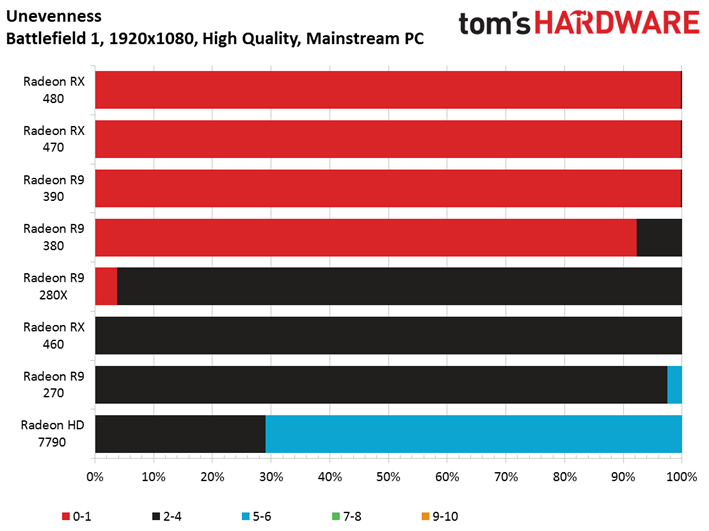

High Quality Preset

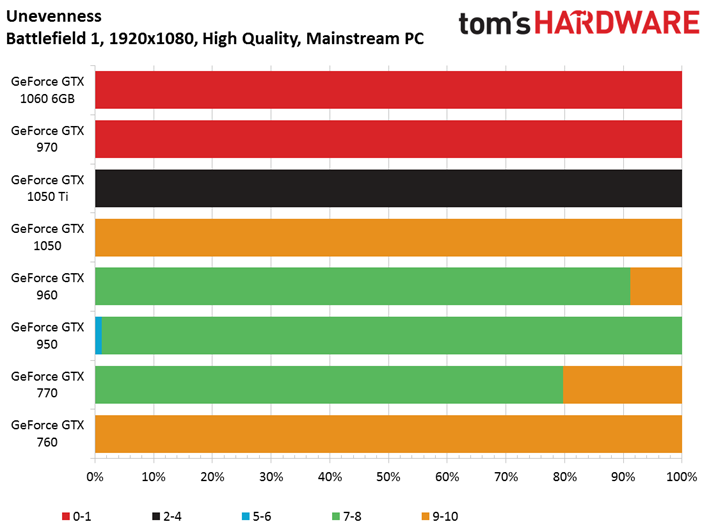

Pushing graphics quality up another level further separates the 2GB GeForce cards from the 4GB and 6GB models. Everything from the four-year-old GeForce GTX 760 to the modern 1050 is utterly unplayable at 1920x1080. The higher-end 1050 Ti 4GB might be considered marginal, while the 1060 6GB and 970 cruise along at admirable frame rates.

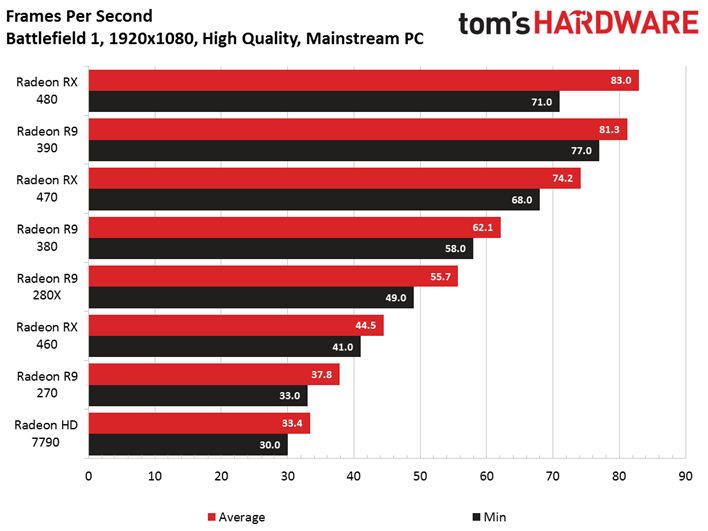

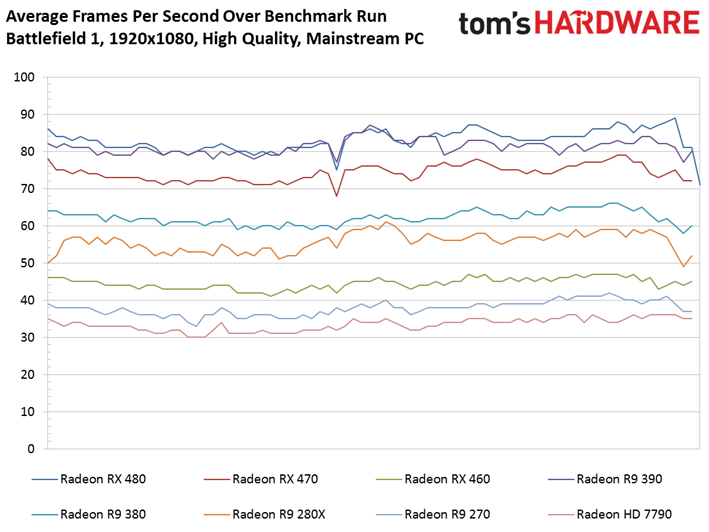

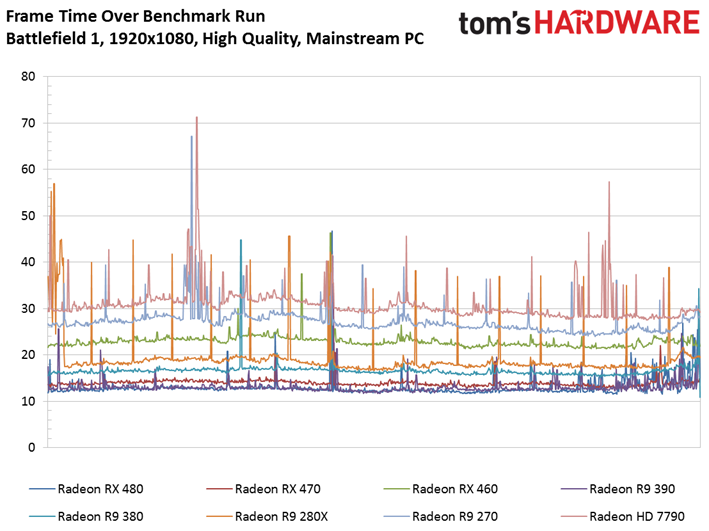

In comparison, the Radeon line-up scales down in a much more linear way, with none of the cards (even the 2GB ones) falling off an obvious performance cliff. A fairly new Radeon RX 460 is smooth enough, as is the 2013-era Radeon R9 280X.

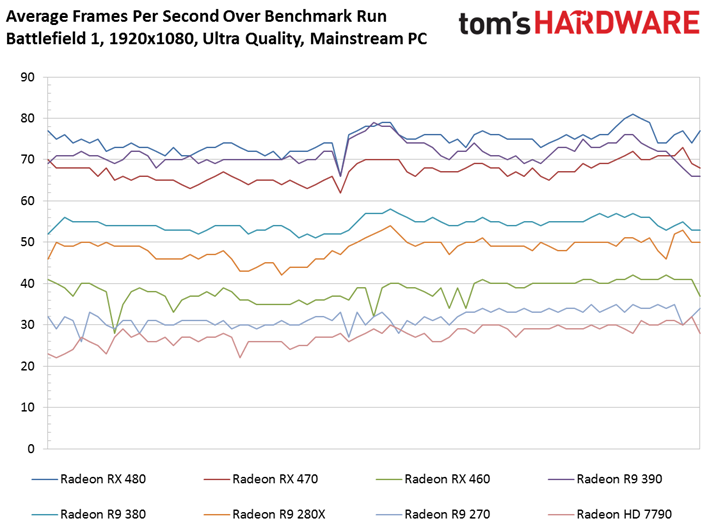

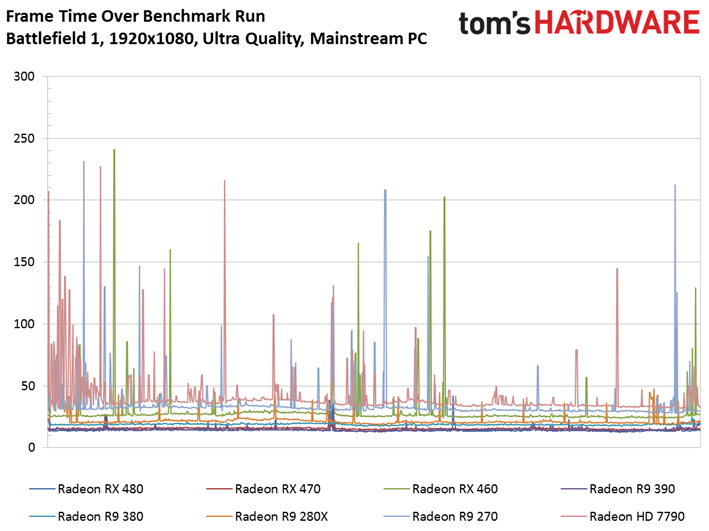

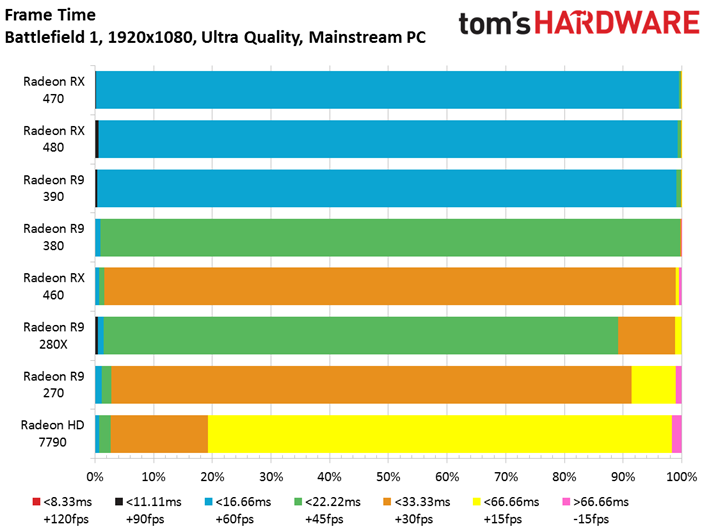

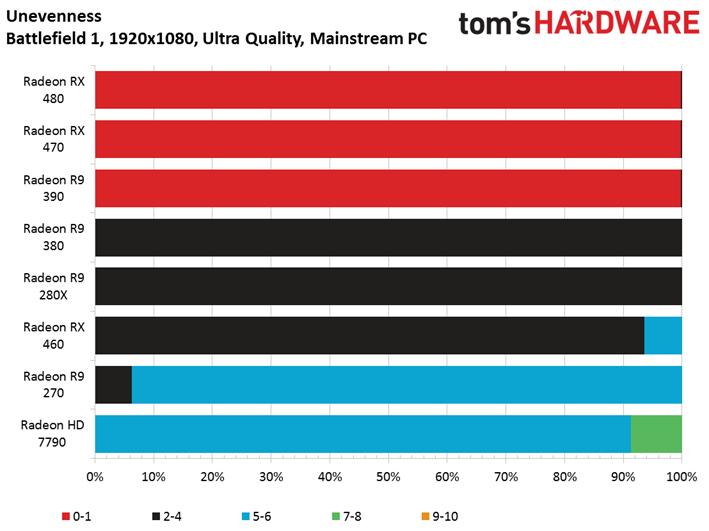

Ultra Quality Preset

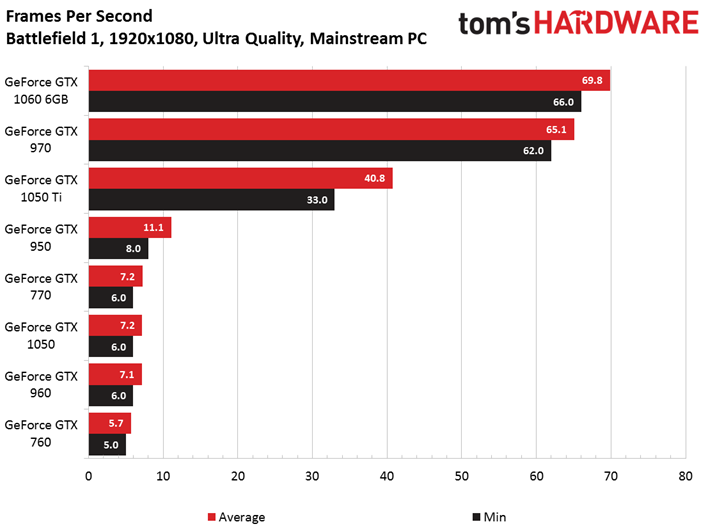

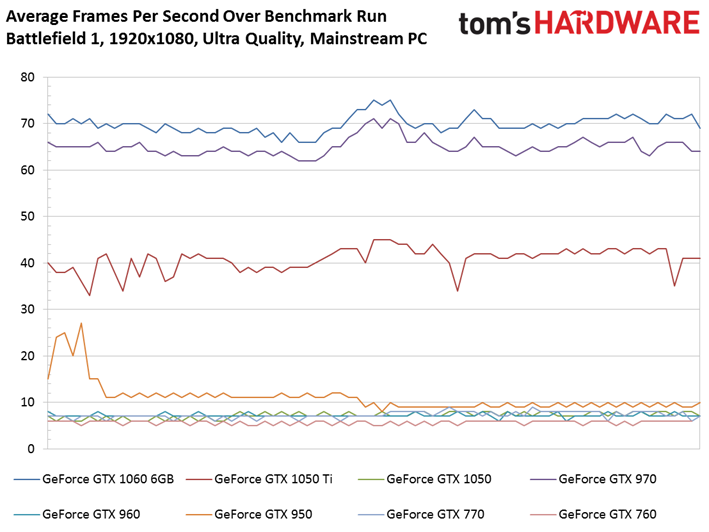

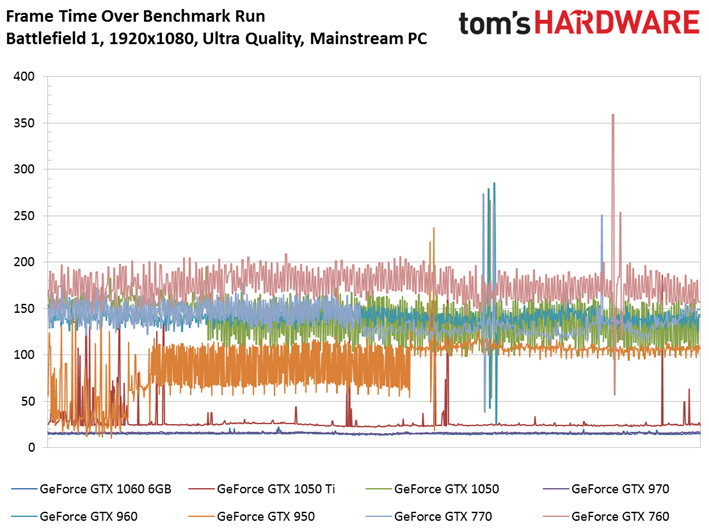

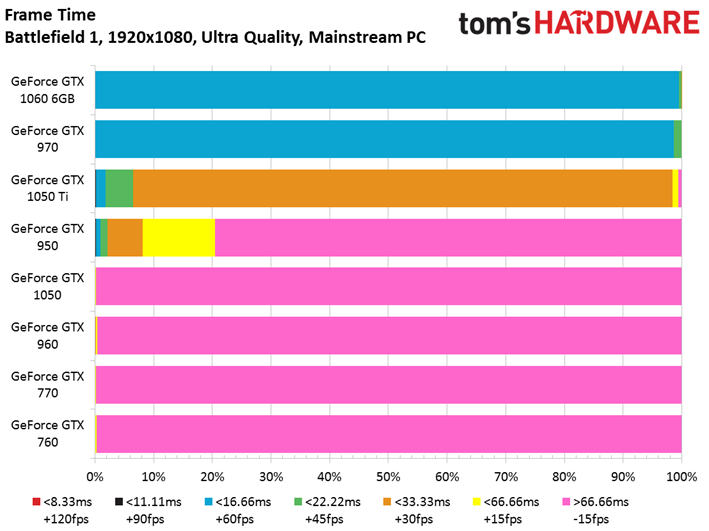

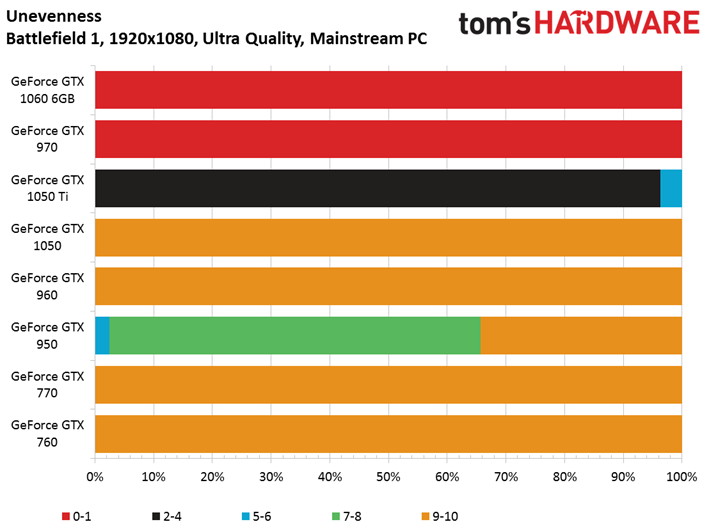

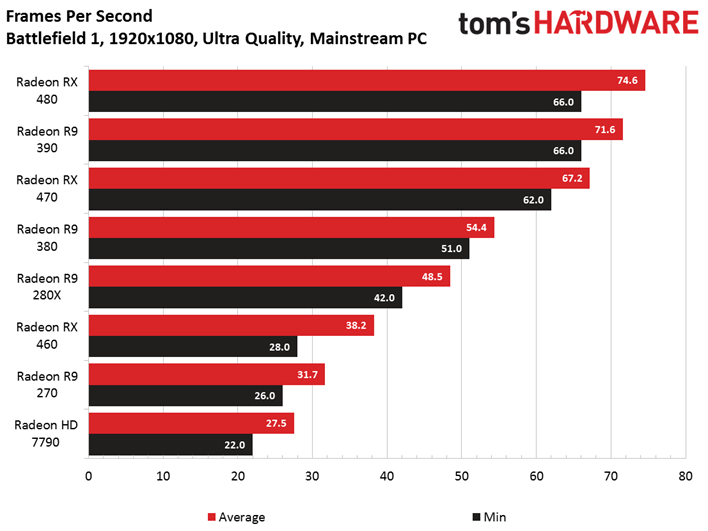

Although we wanted performance data using the Low, Medium, and High quality presets to establish trends and patterns, Ultra is the gold standard we shoot for when we recommend graphics cards to our readers. And at 1920x1080, only two of the GeForce cards we’re testing are really ready for Battlefield 1’s Ultra preset. Obviously, there are higher-end boards capable of going even faster. But if you own an FX-8320 or some other mainstream gaming platform, this is the scope of GPUs that make sense. Those single-digit frame rates. Those frame time results. That unevenness chart. Wow.

The Radeon RX 480 and R9 390 both outperform Nvidia’s GeForce GTX 1060 6GB, and the Radeon RX 470 slides past the GTX 970. Even AMD’s older Radeon R9 380 and 280X remain relatively playable.


envy14tpe Wow. The amount of work in putting this together. Thanks from all the BF1 gamers out there. You knocked my socks off, and you're pushing me to upgrade my GPU.

computerguy72 Nice article. It would have been interesting to see the 1080 Ti and the Ryzen 1800X mixed in there somewhere. I have a 7700K and a 980 Ti, so it would be good info to get some direction on where to take my hardware next. I'm sure other people might find that interesting too.

Achaios Good job, just remember that these "GPU showdowns" don't tell the whole story b/c cards are running at stock, and there are GPUs that can get huge overclocks, thus performing significantly better.

Case in point: GTX 780TI

The 780TI featured here runs at stock, which was 875 MHz base clock and 928 MHz boost clock, whereas the third-party cards ran at 1150 MHz and boosted up to 1250-1300 MHz. We are talking about 30-35% more performance for this card, which you ain't seeing here at all.

xizel Great write-up, just a shame you didn't use any i5 CPUs. I would have really liked to see how an i5-6600K competes with its four cores against the HT i7s.

Verrin Wow, impressive results from AMD here. You can really see that Radeon FineWine™ tech in action.

And then you run in DX11 mode and it runs faster than DX12 across the board. Thanks for the effort you put into this, but it's rather pointless since DX12 has been nothing but a pile of crap.


NewbieGeek @XIZEL My i5-6600K @ 4.6GHz and RX 480 get 80-90 fps at max settings on all 32 v 32 multiplayer maps, with very few spikes either up or down.

Jupiter-18 Fascinating stuff! Love that you are still including the older models in your benchmarks; it makes for great info for a budget gamer like myself! In fact, this may help me determine what goes in the budget build I'm working on right now, which I was going to give dual 290Xs (preferably 8GB, if I can find them), but now might have something else.