Battlefield 1 Performance In DirectX 12: 29 Cards Tested

High-End PC, 3840x2160

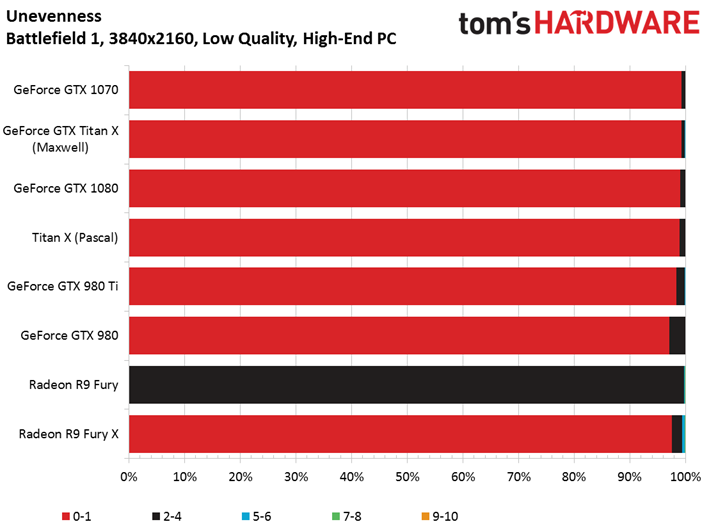

Low Quality Preset

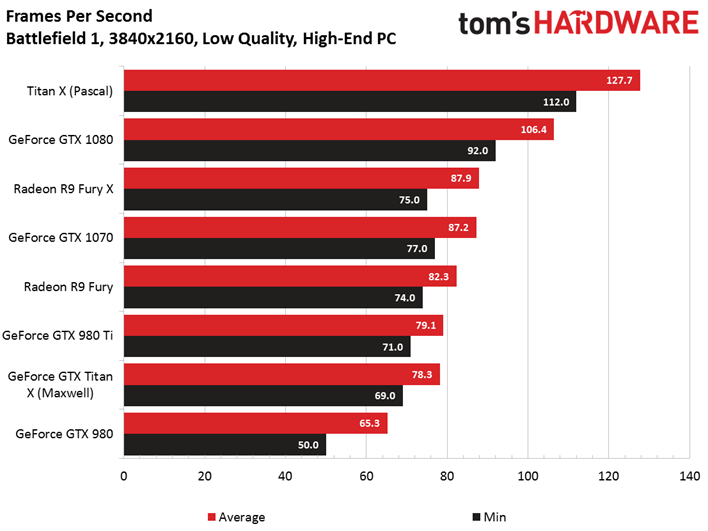

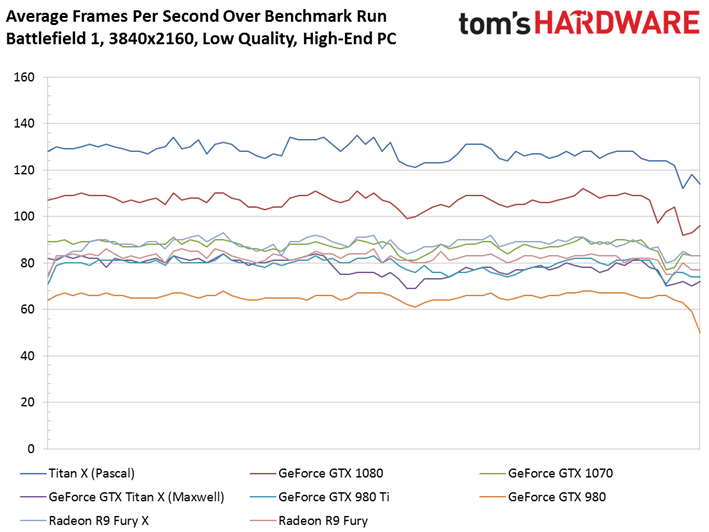

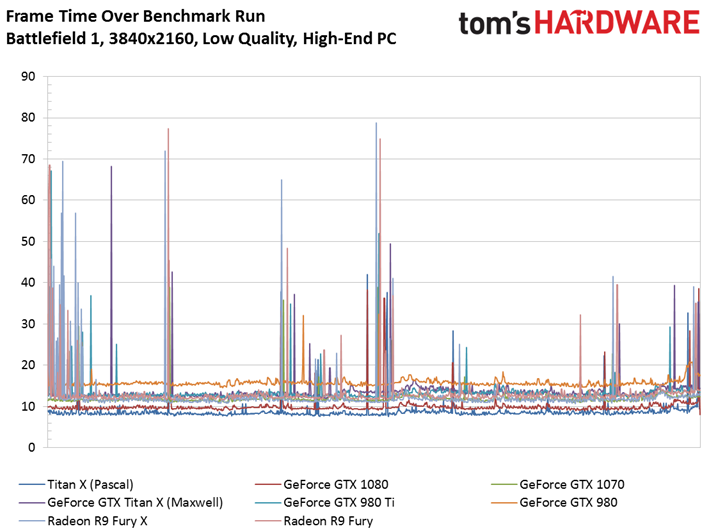

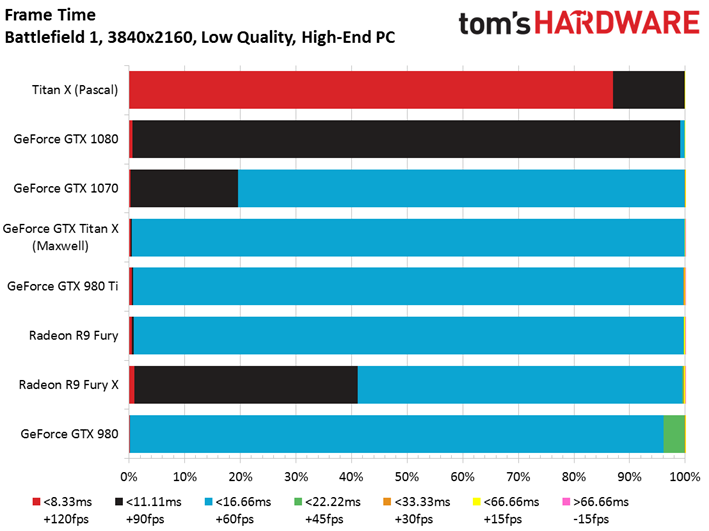

Fewer cards are capable of enjoyable performance at 3840x2160 than at lower resolutions, so we combine the GeForce and Radeon products into one set of graphs.

Battlefield 1’s Low quality preset gives up a lot of the game’s great visuals, so we can’t imagine anyone would willingly pair high-end graphics with an expensive monitor, only to sacrifice detail for performance. It’s more likely that you’d drop to 2560x1440 and keep the Medium or High preset. Nevertheless, our purpose here is to establish a baseline at which all eight contenders serve up playable performance, and that’s exactly what we see before intensifying the workload.

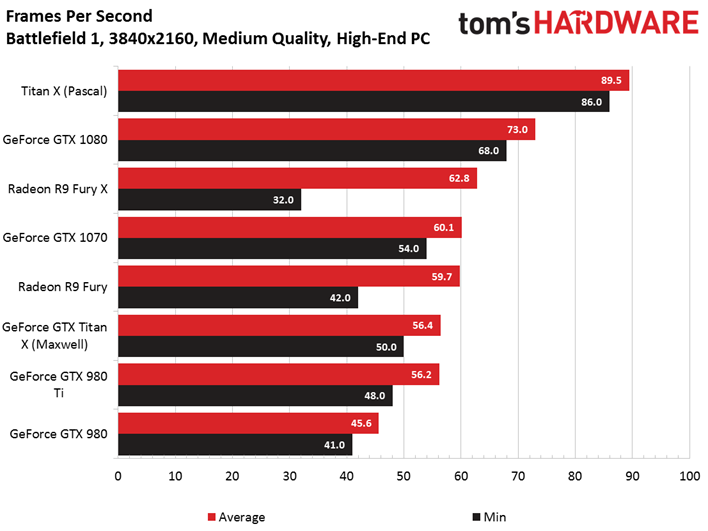

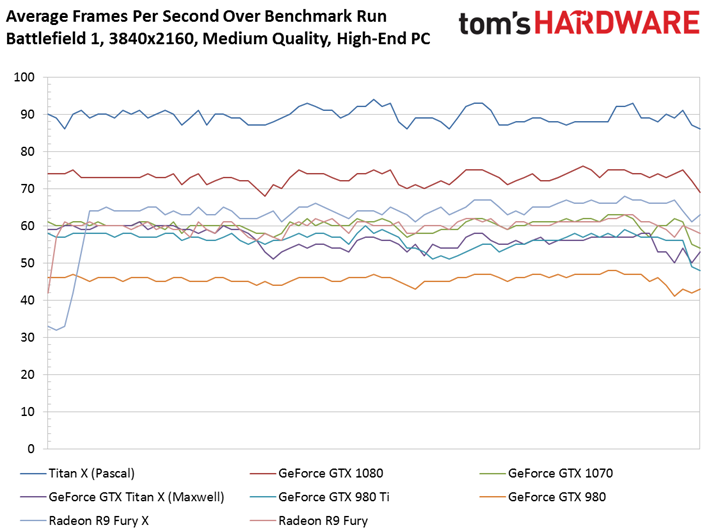

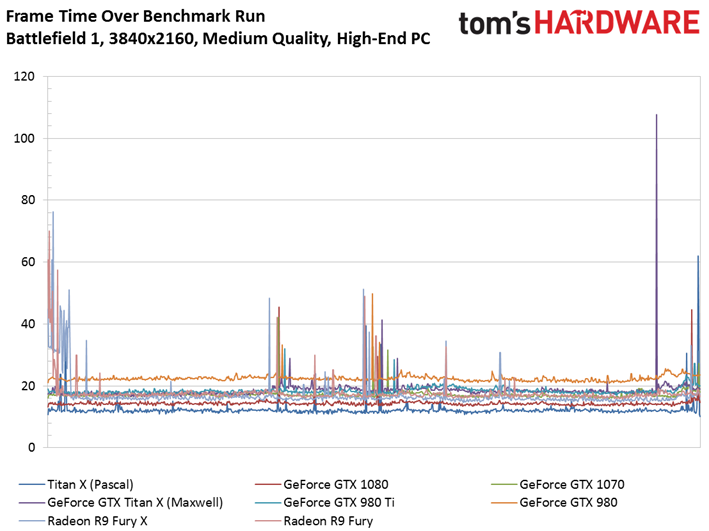

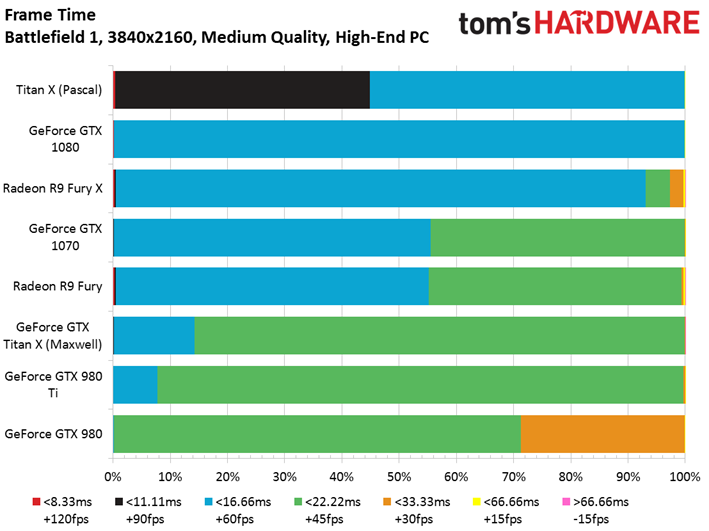

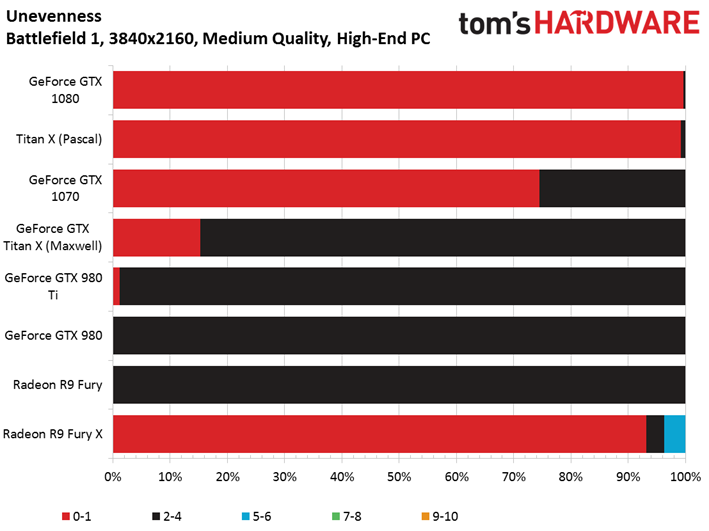

Medium Quality Preset

The Radeon R9 Fury X and Fury both stumble as the benchmark begins, which explains their low minimum frame rates. After recovering, though, the Fury X beats the GeForce GTX 1070, while AMD’s vanilla Fury slots in above the GeForce GTX Titan X (Maxwell) and 980 Ti.

Though all of the results might technically be considered usable, we wouldn’t be thrilled about playing at Medium details on the GTX 980 at frame rates in the mid-40s. QHD at the Ultra preset would look and feel much better.

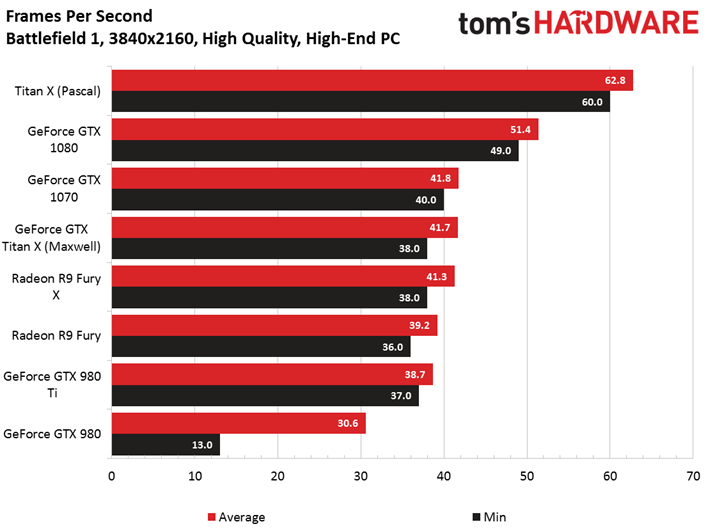

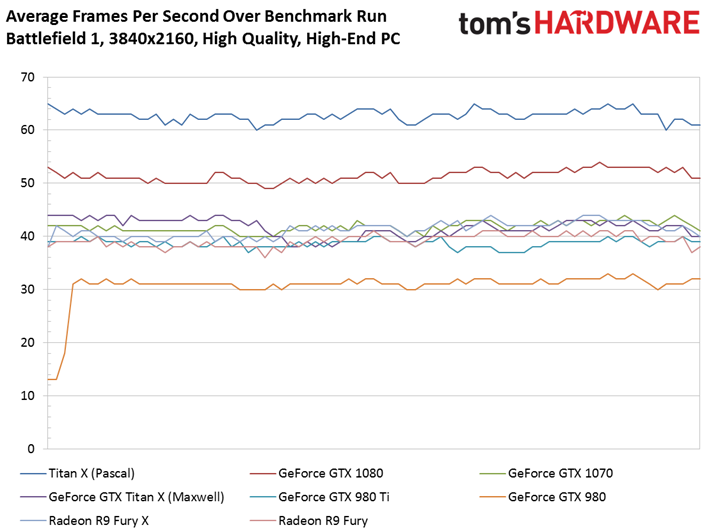

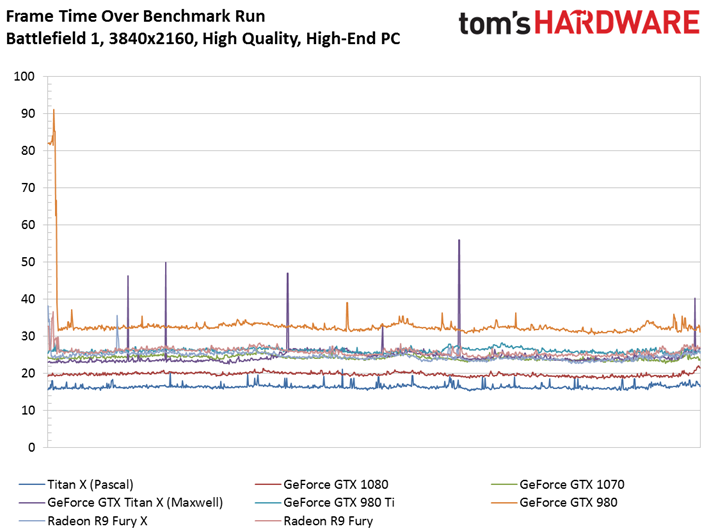

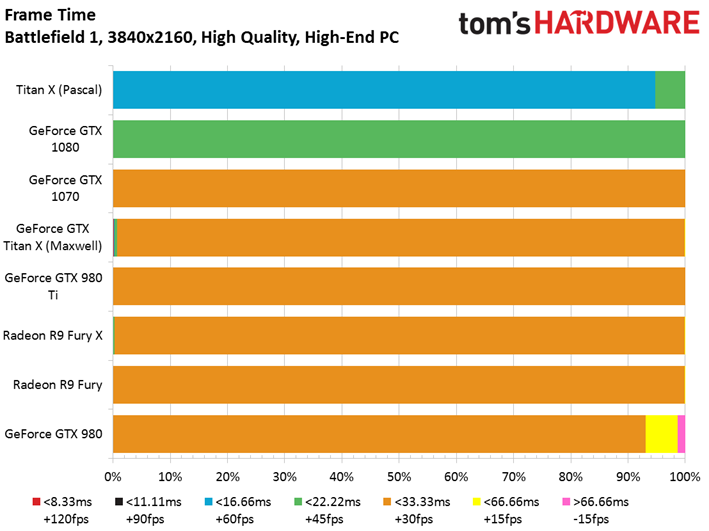

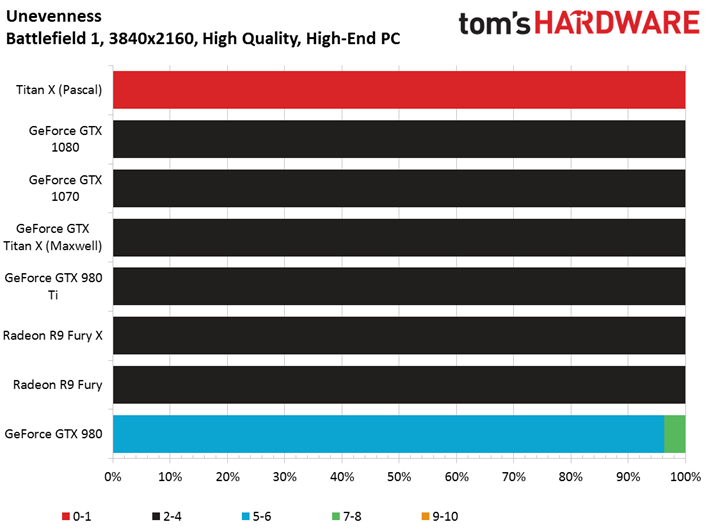

High Quality Preset

Severe slowdowns at the beginning of our benchmark aren’t exclusive to Radeon cards. Here, the GeForce GTX 980 sputters to life, resulting in a 13 FPS minimum frame rate. Regardless, by the time we select Battlefield 1’s High preset, most of these cards hover just above or below 40 FPS. Only the GeForce GTX 1080 and Titan X (and the 1080 Ti, we assume) run smoothly enough to keep most gamers happy.

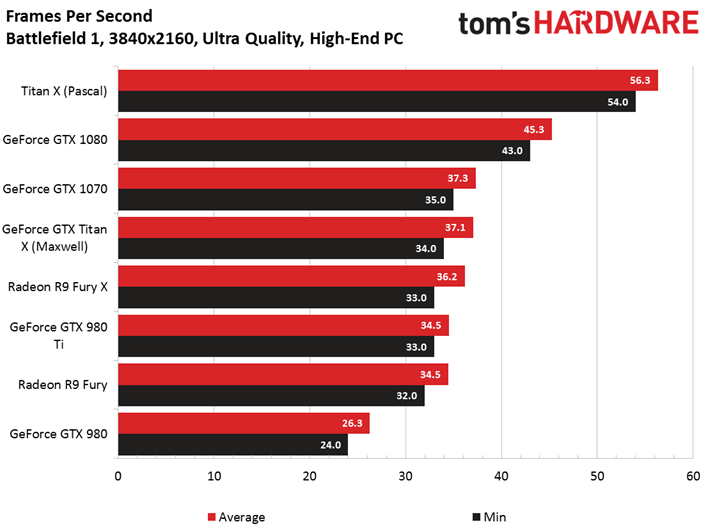

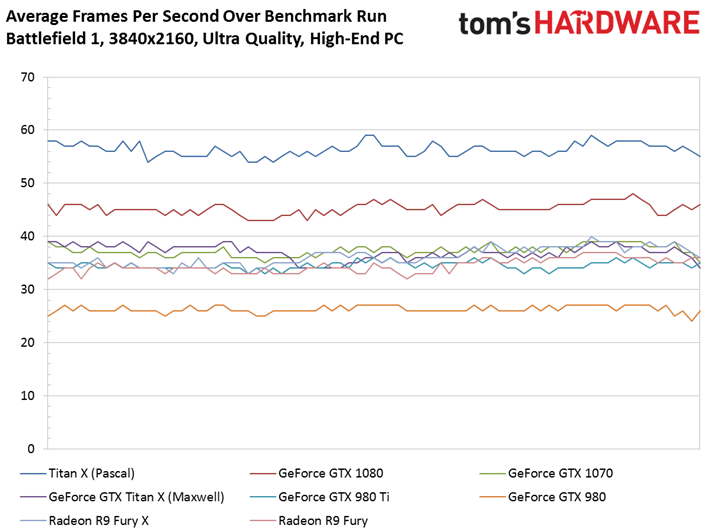

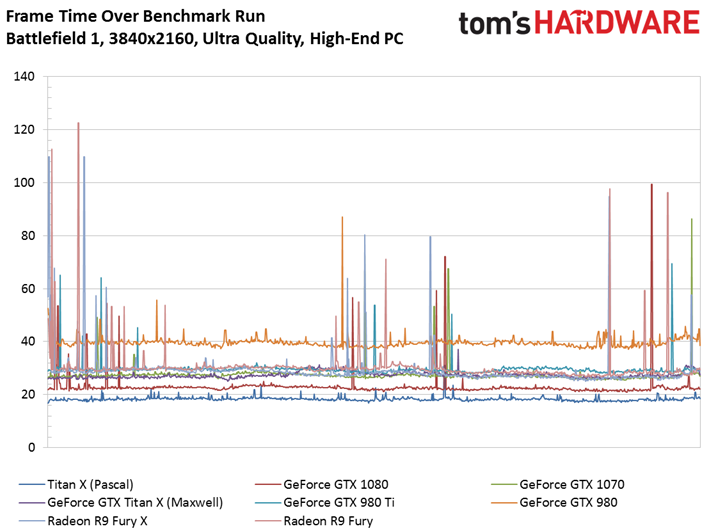

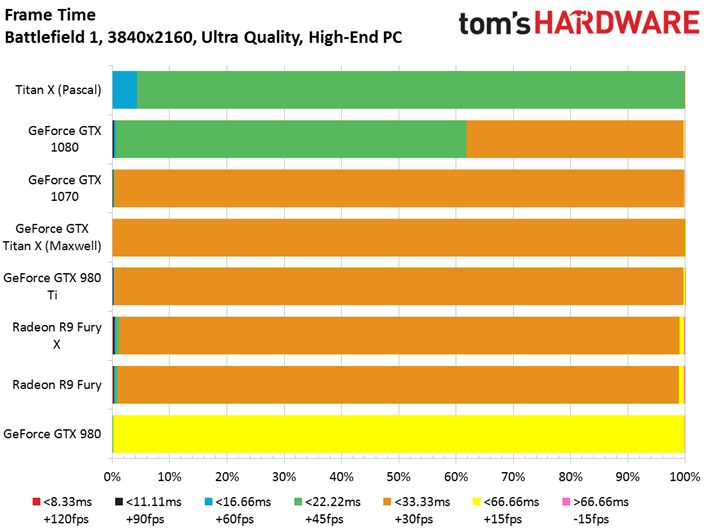

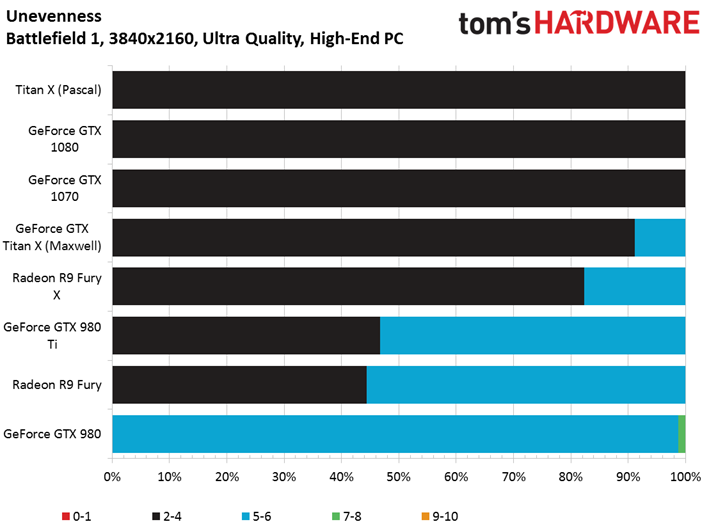

Ultra Quality Preset

The most demanding settings we test, 3840x2160 at the Ultra preset, are too severe for most single-GPU solutions. A Titan X (or, presumably, a GeForce GTX 1080 Ti) keeps you above 50 FPS through our benchmark run. The GeForce GTX 1080 maintains at least 40 FPS. Everything else falls into the 30s and below.


envy14tpe Wow. The amount of work that went into putting this together! Thanks from all the BF1 gamers out there. You knocked my socks off, and you're pushing me to upgrade my GPU.

computerguy72 Nice article. It would have been interesting to see the 1080 Ti and the Ryzen 1800X mixed in there somewhere. I have a 7700K and a 980 Ti, so it would be good to get some direction on where to take my hardware next. I'm sure other people would find that interesting too.

Achaios Good job. Just remember that these "GPU showdowns" don't tell the whole story, because the cards are running at stock clocks, and some GPUs can reach huge overclocks and thus perform significantly better.

Case in point: GTX 780 Ti

The 780 Ti featured here runs at stock, which was an 875 MHz base clock and a 928 MHz boost clock, whereas the third-party cards ran at 1150 MHz and boosted up to 1250-1300 MHz. We are talking about 30-35% more performance for this card, which you aren't seeing here at all.

xizel Great write-up. It's just a shame you didn't use any i5 CPUs. I would have really liked to see how an i5-6600K with its four cores competes against the Hyper-Threaded i7s.

Verrin Wow, impressive results from AMD here. You can really see that Radeon FineWine™ tech in action.

And then you run in DX11 mode and it runs faster than DX12 across the board. Thanks for the effort you put into this, but it's rather pointless since DX12 has been nothing but a pile of crap.


NewbieGeek @xizel My i5-6600K @ 4.6 GHz and RX 480 get 80-90 FPS at max settings on all 32 vs. 32 multiplayer maps, with very few spikes either up or down.

Jupiter-18 Fascinating stuff! Love that you are still including the older models in your benchmarks; it makes for great info for a budget gamer like myself! In fact, this may help me determine what goes into the budget build I'm working on right now. I was going to use dual 290Xs (preferably 8GB versions if I can find them), but now I might go with something else.