2011 Flash Memory Summit Recap: Tom's Hardware Represents

Our Take On SSD Benchmarking

Synthetic vs. Application vs. Trace-Based

Performance has a profound impact on purchasing decisions, whether you're buying a new car, a vacuum, or a CPU. That's precisely the reason so much effort goes into picking the benchmarks that best represent real-world use.

Testing SSDs is no different. Just as we see in the processor and graphics markets, SSD #1 might beat SSD #2 in one benchmark, and then fall behind it in a second metric. The secret is in the tests themselves. But whereas it's easy to reflect the performance of other PC-oriented components using a wide swath of real-world applications that truly tax the hardware, it's much more difficult to reflect SSD performance in the same way.

| Benchmarks | Synthetic | Application | Trace-based |
|---|---|---|---|
| Example | Iometer | BAPCo SYSmark | Intel IPEAK |
| I/O Workload | Static | Dynamic | Dynamic |
| I/O Settings | Set By User | No Controls | Created By User |
| Performance Evaluation | Specific Scenario | Specific Workload | Long-Term |

Benchmarks ultimately fall into three groups: synthetic, application-based, and trace-based. But everyone at the conference seemed to have a different take on which tests are best. Some vendors, such as Micron and HP, prefer synthetic benchmarks. Others, like Virident Systems, advocate application-level testing. Meanwhile, Intel maintains that a trace-based benchmark created using software like IPEAK is the best measure of storage performance.

When it comes to the right way to test SSDs, there is no easy answer. Each approach has unique strengths. For consumers, however, we have to say that trace-based benchmarking is most representative, so long as the trace properly mirrors what the consumer is actually doing on his or her machine. Why is this?

Well, synthetic benchmarks test one specific scenario. For example, running Iometer requires defined settings for queue depth, transfer sizes, and seek distance. But how often is your workload exactly 80% 4 KB random reads and 20% 4 KB random writes at a queue depth of four? Even if you change those values, it can be difficult to see how they relate to the real world when you're trying to isolate one specific performance attribute. Synthetic testing does work well for gauging the behavior of many enterprise applications, where a server might host a single piece of software accessing data in a consistent way.
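To make the fixed-profile idea concrete, here is a minimal Python sketch of the kind of access pattern described above: 80% reads, 20% writes, all 4 KB, at random offsets. It is not how Iometer is implemented. The `synthetic_workload` function, the `IoRequest` type, the 1 GiB test span, and the seed are all assumptions made for illustration. Queue depth is left out because it describes how many requests are kept in flight while the pattern is issued, not the pattern itself.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class IoRequest:
    op: str      # "read" or "write"
    offset: int  # byte offset, aligned to the block size
    size: int    # transfer size in bytes


def synthetic_workload(n_ops, read_fraction=0.8, block_size=4096,
                       span_bytes=1 << 30, seed=0):
    """Generate a fixed-profile random I/O pattern (hypothetical helper).

    Every request has the same size, and the read/write mix never changes,
    which is what makes the workload "static".
    """
    rng = random.Random(seed)  # seeded so the pattern is repeatable
    n_blocks = span_bytes // block_size
    requests = []
    for _ in range(n_ops):
        op = "read" if rng.random() < read_fraction else "write"
        offset = rng.randrange(n_blocks) * block_size
        requests.append(IoRequest(op, offset, block_size))
    return requests


workload = synthetic_workload(10_000)
reads = sum(r.op == "read" for r in workload)
sizes = sorted({r.size for r in workload})
print(f"reads: {reads / len(workload):.1%}, transfer sizes seen: {sizes}")
```

The only transfer size that ever appears is 4096 bytes, and the read share stays near 80% no matter how long the run lasts. That is useful for isolating one attribute of a drive, and it is also why the result is hard to map onto a desktop workload.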

Application benchmarks are a little different. There, you're measuring the performance of a specific application (or a set of applications), but without regard to queue depth, transfer sizes, or seek distance. Some tests, such as SYSmark, spit out a relative score based on an arbitrary unit of measure. Other application-based metrics are incredibly useful because they measure performance in a more tangible way. For example, the MySQL TPCC benchmark provides scores in transactions per second. That's an intuitive measurement for an IT manager tasked with increasing the number of queries a server can handle. Unfortunately, in many cases, application-based benchmarks are either ambiguous or limited to a specific scenario.


Trace-based benchmarks are a mix of the two aforementioned approaches, measuring performance as the drive is actually used. This involves recording the I/O commands at the operating system level and playing them back. The result is a benchmark where queue depth, transfer size, and seek distance change over time. That's a great way to shed some light on a more long-term view of performance, which is why we based our Storage Bench v1.0 on a trace.
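As a rough illustration of why a trace behaves differently from a fixed profile, the Python sketch below walks through a handful of recorded requests and reports the queue depth, transfer size, and seek distance seen at each one. The `TraceRecord` layout and the `profile` helper are hypothetical, and the four records are made up for this example. None of it reflects the IPEAK file format or the contents of Storage Bench v1.0.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TraceRecord:
    issued: float     # seconds since the start of the trace
    completed: float  # seconds since the start of the trace
    op: str           # "read" or "write"
    offset: int       # byte offset of the request
    size: int         # transfer size in bytes


def profile(trace):
    """Return (queue_depth, size, seek_distance) per request, in issue order."""
    ordered = sorted(trace, key=lambda r: r.issued)
    rows = []
    prev_end = None
    for i, rec in enumerate(ordered):
        # Queue depth: this request plus any earlier ones still in flight.
        depth = 1 + sum(1 for earlier in ordered[:i]
                        if earlier.completed > rec.issued)
        # Seek distance: gap between this offset and the end of the last request.
        seek = 0 if prev_end is None else abs(rec.offset - prev_end)
        rows.append((depth, rec.size, seek))
        prev_end = rec.offset + rec.size
    return rows


# Made-up records for illustration only, not taken from any real trace.
trace = [
    TraceRecord(0.000, 0.002, "read", 0, 4096),
    TraceRecord(0.001, 0.004, "read", 4096, 4096),
    TraceRecord(0.003, 0.005, "write", 1_048_576, 65_536),
    TraceRecord(0.010, 0.011, "read", 8192, 131_072),
]

for depth, size, seek in profile(trace):
    print(f"queue depth {depth}, transfer {size} B, seek distance {seek} B")
```

Even in four requests, all three values move around. A replay tool would issue the same requests against each drive under test and compare how long they take, which is the part this sketch leaves out.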

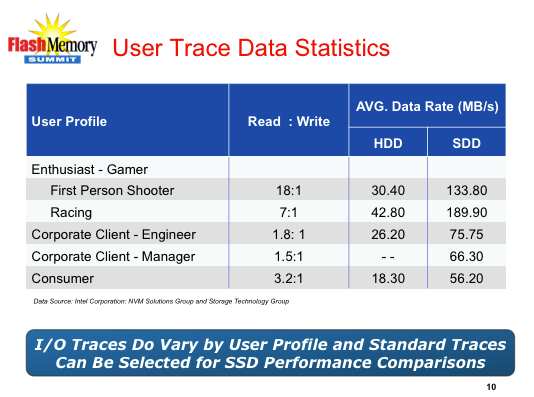

The complication is that no two users' workloads are the same. So, a trace is most valuable to the folks who run similar applications.

However, traces still offer an intuitive way to express the overall performance difference between two storage solutions. Synthetic benchmarks are usually dialed up to unrealistic settings, demonstrating the most fringe differences between two drives as a means to draw some sort of conclusion at the end of the story. An application-based benchmark might do a better job of illustrating real-world differences, but then only in a specific piece of software. Using traces, it's possible to see how responsive one drive is versus another.


compton Is there a way for a user to record their own week of disk access, and in so doing make their own Storage Bench (with the assistance of some other software)?

Still very surprised hybrid hard disks like the Momentus XT aren't getting more R&D. If they included even half the capacity of a mainstream HD and a quarter the capacity of a mainstream SSD, they'd have an amazing product. Just think: a 500GB platter paired with 32GB SSD for, what, $150? I'd buy that in a jiffy.

chovav caywen - I don't think I agree. This approach means you will lose both SSD and hard drive if one fails. Second, a small SSD like that will only be useful for caching, which we all saw until now isn't worth much.

Lastly, wanting to upgrade will mean having to upgrade both, instead of one. I think this is one of the reasons why the XT was never so popular...

O.T - nice read. It did feel a bit as though it was stopped in the middle..

mkrijt (quoting chovav): It did feel a bit as though it was stopped in the middle..

That was exactly what I was thinking. I was looking for the "next page" button but couldn't find it. I was like wtf? went back to the first page to look at the index finding it really ended there :(

cknobman (quoting mkrijt): That was exactly what I was thinking. I was looking for the "next page" button but couldn't find it. I was like wtf? went back to the first page to look at the index finding it really ended there

LOL me too!!!

For me personally I am willing to drop up to $120 on a SSD but that is the breaking point for me and I am not willing to settle for anything less than 120GB due to the performance drop in smaller SSD's and also how much space I need for my OS and other apps (and no I am not counting data like movies or pics).

The main thing holding me off on purchasing a SSD right now is the lack of confidence in reliability. The bugs in Intel, Sandforce, and whatever controller Crucial uses in the m4 make me worried. Just looking at articles here and user reviews on NewEgg was enough for me to get gun shy and wait. There are few things I hate more than having to set up my system and even though I can ghost my boot drive there will always be some loss in a drive failure and that is just something I want to avoid if I can. Unfortunately it just seems like owning a SSD right now leaves too high a risk of drive failure. Plus it does not help reading how manufacturers refuse to comment or give any real hard data on reliability.

nforce4max What about page file related writes attributed to normal use? It is very easy to see more than 10 GB a day worth of writes depending on the apps used.

razvanz nforce4max, I agree with you. I haven't seen any SSD tests using BitTorrent. How does a SSD fare against a HDD in BitTorrent usage? I think BitTorrent will easily overwhelm the read/write cycles of a SSD.

JamesAbel On the surveys on "willingness to pay for SSDs" - has this ever been done on someone that has TRIED an SSD? Reading about a benchmark is different than experiencing it. On my SSDs, I see huge benefits in things like app load time vs. an HDD. Also, SSDs are much faster vs. HDDs when multiple apps are running and causing lots of head seeks (drive thrash). Give someone an SSD for a month and then give them back an HDD - I think many won't be so willing to switch back (and be willing to pay from there on out).

Mark_Alberta I have been testing various SATA SSD's for recording CCTV footage for several months now. The results have been disappointing when compared to mechanical hard drives. First off, unless I set the maximum file size to 85% or less of the drive's capacity, instead of 95% with say, a WD Caviar Black, the drive would overwrite the data 3-4 times and then suddenly become undetectable on the system until I reboot it. Not a good thing when considering the purpose of the unit. This has occurred on three different manufacturers' drives, so it's obviously not a one-off issue. Another interesting problem that came up was on the 40GB OS drive, the detected volume dropped from 37GB to roughly 24GB. I reformatted the drive, re-installed the OS and everything returned to normal. I am going to continue to try and perfect a system using SSD's, but it is a lot more of a headache than the simplicity of plugging in a 3.5" mechanical drive and moving on.

drwho1 I would be willing to pay from $150 to $200 top for a 500GB SSD.

When prices and size ratio meet these price standards then I would take a serious look into SSD's.

Have in mind that current 2TB hard drives are under $100 (true they are green drives). But when a SSD costs 10 to 20 times as much per GB as a hard drive, that is just not right to me.