The Myths Of Graphics Card Performance: Debunked, Part 1

Did you know that Windows 8 can gobble as much as 25% of your graphics memory? That your graphics card slows down as it gets warmer? That you react quicker to PC sounds than images? That overclocking your card may not really work? Prepare to be surprised!

To Enable Or Disable V-Sync: That Is The Question

Speed is the first dimension that comes to mind in a graphics card evaluation. How much faster is the latest and greatest than whatever came before? The Internet is littered with benchmarking data from thousands of sources trying to answer that question.

So, let's start by exploring speed and the variables to consider if you really want to know how fast a given graphics card is.

Myth: Frame rate is the indicator of graphics performance

Let's start with something that the Tom's Hardware audience probably knows already, but remains a misconception elsewhere. Common wisdom suggests that for a game to be playable, it should run at 30 frames per second or more. Some folks believe lower frame rates are still alright, and others insist that 30 FPS is far too low.

In the debate, however, it's rarely emphasized that FPS is just a rate, and that there is a host of complexity behind it. Most notably, while a movie's frame rate is constant, a rendered game's frame rate varies over time and is consequently expressed as an average. That variation is a byproduct of the horsepower required to process any given scene: as the on-screen content changes, so does the frame rate.

The simple point is that there is more to the quality of a gaming experience than the instantaneous (or average) rate at which frames are rendered. The consistency of their delivery is an additional factor. Imagine traveling on a highway at a constant 65 MPH, compared to the same trip at an average of 65 MPH while constantly switching between accelerator and brake. You reach your destination in roughly the same amount of time, but the experience is quite different.
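To put the highway analogy in numbers, here is a minimal Python sketch comparing two made-up frame-time traces that share the same average frame rate. The function name `frame_stats` and the trace values are purely illustrative assumptions, not data from our testing; the point is only that the average hides the spread.

```python
from statistics import pstdev

def frame_stats(frame_times_ms):
    """Average FPS, worst frame time and frame-time spread for one trace."""
    total_ms = sum(frame_times_ms)
    avg_fps = 1000.0 * len(frame_times_ms) / total_ms
    return {
        "avg_fps": avg_fps,                  # what an FPS counter reports
        "worst_ms": max(frame_times_ms),     # the longest single frame
        "stdev_ms": pstdev(frame_times_ms),  # how uneven delivery is
    }

# Hypothetical traces: both deliver six frames in 120 ms (50 FPS average).
steady = [20.0] * 6          # a frame every 20 ms
uneven = [10.0, 30.0] * 3    # alternating fast and slow frames

for name, trace in (("steady", steady), ("uneven", uneven)):
    s = frame_stats(trace)
    print(f"{name}: {s['avg_fps']:.1f} FPS avg, worst {s['worst_ms']:.0f} ms, "
          f"stdev {s['stdev_ms']:.1f} ms")
```

Both traces report 50 FPS, but the uneven one delivers some frames three times later than others, which is the accelerator-and-brake trip.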

So, let's set the question "How much performance is enough?" aside for a moment. We'll get back to it after touching on a few other relevant topics.

Introducing V-sync

Myths: Frame rates over 30 FPS aren't necessary; the human eye can't tell a difference. Values above 60 FPS on a 60 Hz display aren't necessary; the monitor is already refreshing 60 times a second. V-sync should always be enabled. V-sync should always be disabled.


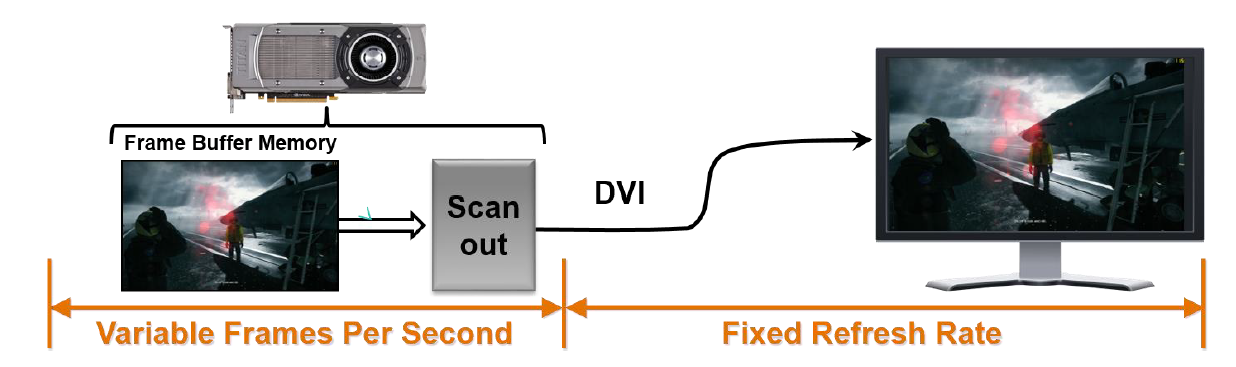

How are rendered frames actually displayed? Because of the way almost all LCD monitors work, the on-screen image is updated a fixed number of times per second. Typically, the magic number is 60, though there are also 120 and 144 Hz panels capable of more refreshes per second. This is the refresh rate, which is measured in hertz (Hz).
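The time between two refreshes is simply the reciprocal of the refresh rate. This quick sketch (the helper name is our own) converts the common panel rates into milliseconds, which is the budget a frame has if it is to make every refresh.

```python
def refresh_interval_ms(refresh_hz):
    """Time between two panel refreshes, in milliseconds."""
    return 1000.0 / refresh_hz

for hz in (60, 120, 144):
    print(f"{hz} Hz -> {refresh_interval_ms(hz):.2f} ms per refresh")
```

That works out to roughly 16.7 ms at 60 Hz, 8.3 ms at 120 Hz and 6.9 ms at 144 Hz.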

Now, the mismatch between the graphics card's variable frame rate and the display's fixed refresh rate can be problematic. When the card delivers frames faster than the display refreshes, pieces of multiple frames end up displayed in the same scan, resulting in an artifact called screen tearing. In the image above, the colored bars denote unique frames from the graphics card getting thrown up on-screen as soon as they're ready. This can be highly distracting, particularly in a fast-paced shooter.
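Where a tear lands depends on how far the panel has progressed through its scan when the card swaps in a new frame. The sketch below uses a deliberately simplified model that we are assuming for illustration: rows are scanned top to bottom at a constant rate across the whole refresh interval, and vertical blanking is ignored. The function `tear_rows` and the 100 FPS example are hypothetical.

```python
def tear_rows(swap_times_ms, refresh_hz=60, height=1080):
    """Screen row at which each unsynchronized buffer swap would tear.

    Simplified model: the panel scans rows top to bottom at a constant
    rate over the whole refresh interval (vertical blanking ignored).
    """
    interval = 1000.0 / refresh_hz
    rows = []
    for t in swap_times_ms:
        phase = (t % interval) / interval  # 0.0 = top of screen, near 1.0 = bottom
        rows.append(int(phase * height))
    return rows

# Hypothetical card finishing a frame every 10 ms (100 FPS) on a 60 Hz panel.
swaps = [10.0 * i for i in range(1, 6)]
print(tear_rows(swaps))
```

Because 100 FPS doesn't divide evenly into 60 Hz, the tear line wanders to a different row on almost every refresh, which is why the colored bands shift around instead of staying put.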

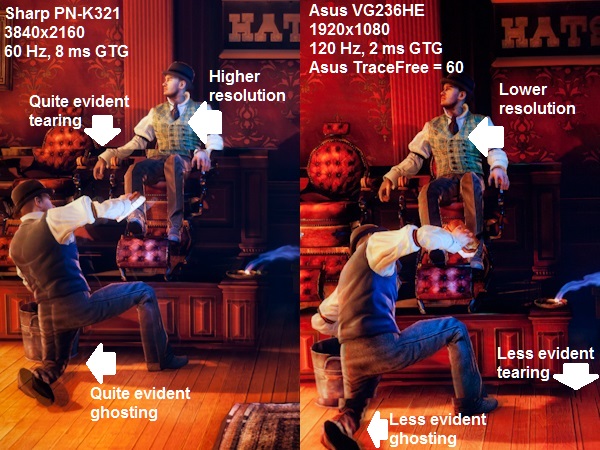

The image below shows another artifact commonly seen on-screen, but rarely documented. Because it's a display artifact, it doesn't show up in screenshots; instead, it represents the image your eyes actually see, and you need a fast camera to capture it. FCAT, which Chris Angelini used to create the traffic cone shot in Battlefield 4, does reflect tearing, but not the ghosting effect I'm illustrating.

Screen tearing is evident in both of my BioShock Infinite images. But it's more evident on the 60 Hz Sharp than on the 120 Hz Asus panel, because the VG236HE runs at a refresh rate that's twice as high. This artifact is the clearest indicator that a game is running with V-sync, or vertical synchronization, disabled.

The other issue in the BioShock image is ghosting, which you can see especially at the bottom of the left image. This is attributable to screen latency. In short, individual pixels don't change color quickly enough and leave this type of afterglow. The in-game effect is far more dramatic than my images suggest. A panel with an 8 ms gray-to-gray response time, which is what the Sharp screen on the left is specified for, looks blurry whenever fast movement happens on-screen.

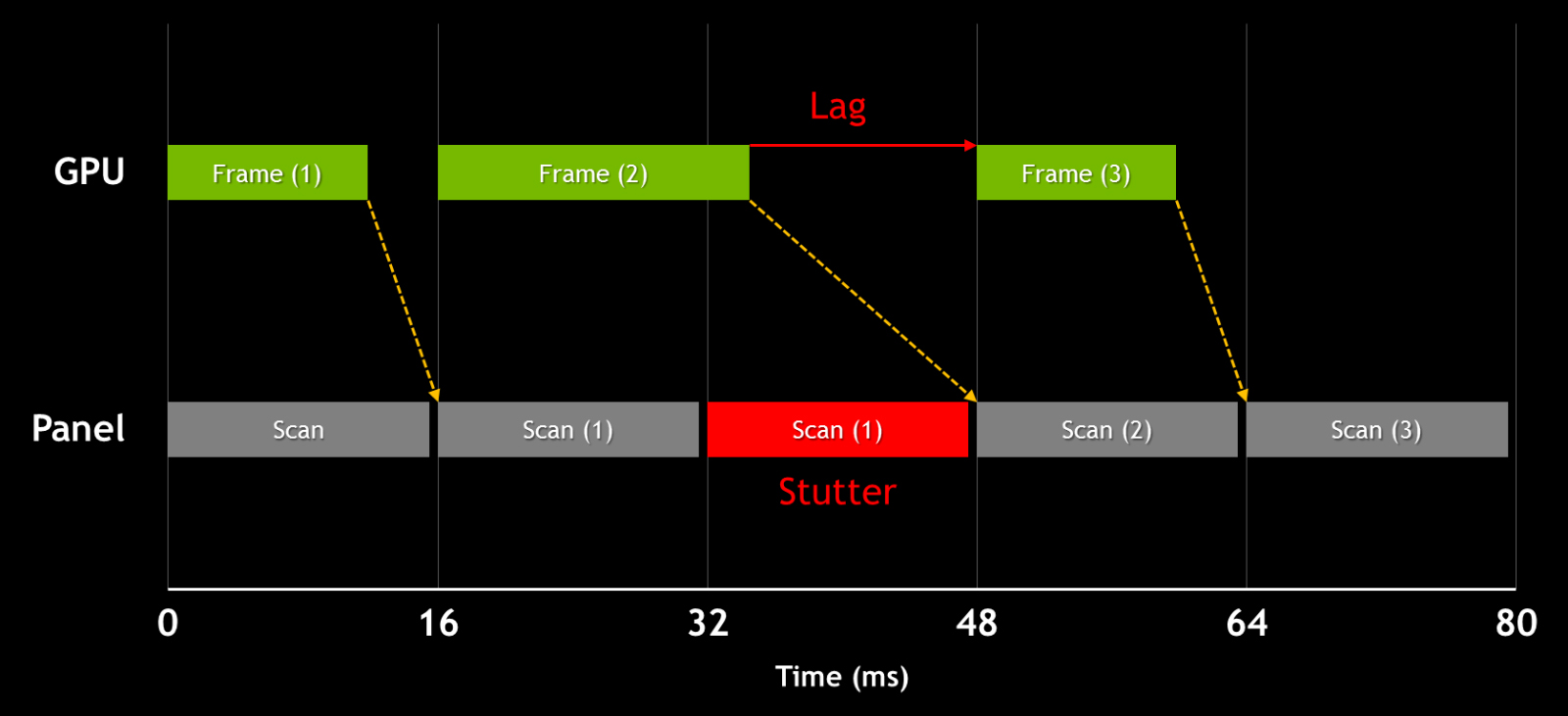

Back to tearing. The aforementioned V-sync is an old solution to the problem: it synchronizes the rate at which the video card presents frames to the screen's refresh rate. Because multiple frames no longer show up in a single panel refresh, tearing is no longer an issue. However, if you crank up the graphics quality of your favorite title and its frame rate drops below 60 FPS (or whatever your panel's refresh rate is set to), then your effective frame rate bounces between integer fractions of the refresh rate (60, 30, 20, 15 FPS and so on for a 60 Hz panel). Now, you face another artifact called stuttering.
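The stepping comes from each frame having to wait for a refresh boundary. Here is a minimal sketch of that effect under a simplifying assumption of ours: classic double-buffered V-sync, where the card can't start the next frame until the finished one is displayed, so every frame occupies a whole number of refreshes. The function name `vsync_fps` is hypothetical.

```python
import math

def vsync_fps(render_ms, refresh_hz=60):
    """Effective frame rate under double-buffered V-sync (simplified).

    Assumes the GPU waits for the finished frame to be displayed before
    starting the next one, so each frame holds the screen for a whole
    number of refresh intervals.
    """
    interval = 1000.0 / refresh_hz
    refreshes_per_frame = max(1, math.ceil(render_ms / interval))
    return refresh_hz / refreshes_per_frame

for ms in (12, 18, 25, 40):
    print(f"{ms} ms/frame: {1000 / ms:.0f} FPS unsynced, "
          f"{vsync_fps(ms):.0f} FPS with V-sync")
```

In this model, a scene that takes 18 ms per frame (about 56 FPS without V-sync) just misses the 16.7 ms window and drops straight to 30 FPS once V-sync is on.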

One of the Internet's oldest arguments is whether you should turn V-sync on or leave it off. Some folks insist it's one or the other, and some enthusiasts will change the setting based on the game they're playing.

So, V-sync On, Or V-sync Off?

Let's say you're in the majority and own a typical 60 Hz display:

- If you play first-person shooter games competitively, and/or have issues with perceived input lag, and/or if your system cannot sustain at least 60 FPS in a given title, and/or you're benchmarking your graphics card, then you should turn V-sync off.

- If none of the above applies to you and you experience significant screen tearing, then you should turn V-sync on.

- As a general rule, or if you don’t feel strongly either way, just keep V-sync off.

If you own a gaming-oriented 120/144 Hz display (if you have one, there's a good chance you bought it specifically for its higher refresh rate):

- You should consider turning V-sync on only when playing older games in which you sustain more than 120 FPS and experience screen tearing.
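The rules of thumb above can be condensed into a single decision function. This is only a sketch of our recommendations as written; the function and parameter names are hypothetical, and the 120 FPS threshold for high-refresh panels is taken literally from the bullet above.

```python
def recommend_vsync(refresh_hz, sustained_fps, *, competitive_fps=False,
                    input_lag_issues=False, benchmarking=False,
                    tearing_noticeable=False, older_game=False):
    """Return True if the rules of thumb above suggest turning V-sync on."""
    if refresh_hz >= 120:
        # Gaming-oriented 120/144 Hz panel: V-sync on only for older games
        # that sustain more than 120 FPS and visibly tear.
        return older_game and sustained_fps > 120 and tearing_noticeable
    # Typical 60 Hz panel: any of these conditions means V-sync off.
    if competitive_fps or input_lag_issues or benchmarking or sustained_fps < 60:
        return False
    # Otherwise turn it on only if tearing is actually a problem;
    # the default is to leave V-sync off.
    return tearing_noticeable

print(recommend_vsync(60, 75, tearing_noticeable=True))                        # True
print(recommend_vsync(60, 75, competitive_fps=True, tearing_noticeable=True))  # False
print(recommend_vsync(144, 200, older_game=True, tearing_noticeable=True))     # True
```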

Note that there are certain cases where the frame rate-halving impact of V-sync doesn't apply, such as applications supporting triple buffering, though those cases aren't common. Also, in some games (like The Elder Scrolls V: Skyrim), V-sync is enabled by default. Forcing it off by modifying certain files can cause issues with the game engine itself. In those cases, you're best off leaving V-sync on.
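Why does triple buffering sidestep the halving? With a spare back buffer, the card can keep rendering instead of idling until the next refresh. The sketch below models one flavor of triple buffering that we are assuming for illustration: the card renders continuously and, at each refresh, the display picks up the newest completed frame. Real implementations differ (some queue frames in order instead), and the function name is hypothetical.

```python
import math

def frames_shown_per_second(render_ms, refresh_hz=60, triple_buffered=True):
    """Count distinct frames displayed in one second (simplified model)."""
    interval = 1000.0 / refresh_hz
    ticks = refresh_hz  # number of refreshes in one second
    if not triple_buffered:
        # Double-buffered V-sync: the GPU waits for the swap, so each frame
        # holds the screen for a whole number of refreshes.
        per_frame = max(1, math.ceil(render_ms / interval))
        return ticks // per_frame
    # Triple buffering (newest-frame variant): the GPU never waits, so a
    # refresh shows something new whenever a frame finished since the last one.
    shown = 0
    last_completed = 0
    for i in range(1, ticks + 1):
        completed = math.floor(i * interval / render_ms)  # frames done by this tick
        if completed > last_completed:
            shown += 1
            last_completed = completed
    return shown

for mode in (False, True):
    print(f"triple_buffered={mode}: {frames_shown_per_second(18, triple_buffered=mode)} frames/s")
```

For an 18 ms frame, this model shows 30 unique frames per second with double buffering but 55 with triple buffering, still without tearing, at the cost of an extra buffer of video memory.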

G-Sync, FreeSync, and the Future

Our "G-Sync Technology Preview: Quite Literally A Game Changer" story previewed Nvidia's solution to all of this. AMD made a somewhat feeble attempt at responding by showing off its FreeSync technology at CES 2014, though that might only be viable on laptops for now. That said, we applaud AMD's open-source approach to the technology as the right way to go. Both technologies work around V-sync's compromises by allowing the display to operate at a variable refresh rate.
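With a variable refresh rate, the panel waits for the frame rather than the other way around. Here is a simplified sketch of that idea; it is our own model, not Nvidia's or AMD's actual logic. We assume the panel refreshes as soon as a frame is ready, but never sooner than its maximum refresh rate allows, and we ignore what happens below the panel's minimum refresh rate. The function name `vrr_display_times` is hypothetical.

```python
def vrr_display_times(render_ms_list, max_hz=144):
    """When each frame reaches the screen on a variable-refresh panel.

    Simplified model: the panel refreshes as soon as a frame is ready,
    but never sooner than 1/max_hz after its previous refresh. Behavior
    below the panel's minimum refresh rate is ignored.
    """
    min_interval = 1000.0 / max_hz
    ready = 0.0
    last_refresh = float("-inf")
    shown = []
    for render_ms in render_ms_list:
        ready += render_ms  # time at which this frame finishes rendering
        refresh = max(ready, last_refresh + min_interval)
        shown.append(refresh)
        last_refresh = refresh
    return shown

print(vrr_display_times([18.0, 22.0, 19.0]))
```

Frames finished at 18, 40 and 59 ms appear exactly then. On a fixed 60 Hz panel with V-sync, the same frames would each wait for the next 16.7 ms boundary (33.3, 50 and 66.7 ms), and without V-sync they would tear.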

It is hard to say where the industry is heading, but as I mentioned in my G-Sync coverage, we're not fans of proprietary standards (and I bet most OEMs agree). I'd like to see Nvidia consider opening up G-Sync to the rest of the community, though we know from experience that the company tends not to do this.

