The Myths Of Graphics Card Performance: Debunked, Part 1

Did you know that Windows 8 can gobble as much as 25% of your graphics memory? That your graphics card slows down as it gets warmer? That you react quicker to PC sounds than images? That overclocking your card may not really work? Prepare to be surprised!

The Myths Surrounding Graphics Card Memory

Video memory enables resolution and quality settings, does not improve speed

Graphics memory is often used by card vendors as a marketing tool. Because gamers have been conditioned to believe that more is better, it's common to see entry-level boards with far more RAM than they need. But enthusiasts know that, as with every subsystem in their PCs, balance is most important.

Broadly, graphics memory is dedicated to a discrete GPU and the workloads it operates on, separate from the system memory plugged into your motherboard. A couple of memory technologies are used on graphics cards today, the most popular being DDR3 and GDDR5 SDRAM.

Myth: Graphics cards with 2 GB of memory are faster than those with 1 GB

Not surprisingly, vendors arm inexpensive cards with too much memory (and eke out higher margins) because there are folks who believe more memory makes their card faster. Let's set the record straight on that. The memory capacity a graphics card ships with has no impact on that product's performance, so long as the settings you're using to game with don't consume all of it.

What does having more video memory actually help, then? In order to answer that, we need to know what graphics memory is used for. This is simplifying a bit, but it helps with:

- Loading textures

- Holding the frame buffer

- Holding the depth buffer ("Z Buffer")

- Holding other assets that are required to render a frame (shadow maps, etc.)

Of course, the size of the textures getting loaded into memory depends on the game you're playing and its quality preset. As an example, the Skyrim high-resolution texture pack includes 3 GB of textures. Most applications dynamically load and unload textures as they're needed, though, so not all textures need to reside in graphics memory. The textures required to render a particular scene do need to be in memory, however.

The frame buffer stores the image as it is rendered, before or during the time it is sent to the display. Its memory footprint therefore depends on the output resolution (an image at 1920x1080x32 bpp is ~8.3 MB; a 4K image at 3840x2160x32 bpp is ~33.2 MB) and the number of buffers (at least two; rarely three or more).
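The frame buffer figures above follow from simple arithmetic: width times height times bytes per pixel, times the number of buffers. Here is a minimal sketch of that calculation, assuming uncompressed buffers and decimal megabytes (1 MB = 1,000,000 bytes); the function names are illustrative and not taken from any graphics API.

```python
def framebuffer_bytes(width, height, bits_per_pixel=32, buffers=1):
    """Bytes needed for `buffers` uncompressed color buffers."""
    bytes_per_pixel = bits_per_pixel // 8
    return width * height * bytes_per_pixel * buffers


def to_mb(num_bytes):
    """Convert bytes to decimal megabytes, the unit used in the text."""
    return num_bytes / 1_000_000


if __name__ == "__main__":
    resolutions = {"1080p": (1920, 1080), "4K": (3840, 2160)}
    for name, (w, h) in resolutions.items():
        single = to_mb(framebuffer_bytes(w, h))
        double = to_mb(framebuffer_bytes(w, h, buffers=2))
        print(f"{name}: {single:.1f} MB per buffer, {double:.1f} MB double-buffered")
```

Even double-buffered at 4K, the frame buffer itself accounts for well under 100 MB, which is why textures and anti-aliasing tend to dominate the totals.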

As specific anti-aliasing modes (FSAA, MSAA, CSAA, CFAA, but not FXAA or MLAA) effectively increase the number of pixels that need to be rendered, they proportionally increase overall required graphics memory. Render-based anti-aliasing in particular has a massive impact on memory usage, and that grows as sample size (2x, 4x, 8x, etc) increases. Additional buffers also occupy graphics memory.
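To get a feel for how sample count scales memory, the sketch below models a multisampled render target as one color value and one depth value per sample. The 4-byte color and 4-byte depth sizes are assumptions chosen for illustration; real drivers may compress these buffers and also allocate resolve targets, so treat the output as a rough lower-level model rather than a measurement.

```python
def msaa_render_target_bytes(width, height, samples=1,
                             color_bytes=4, depth_bytes=4):
    """Rough size of a multisampled color + depth target.

    Assumes every pixel stores `samples` color values and `samples`
    depth values, with no compression (a simplifying assumption).
    """
    bytes_per_sample = color_bytes + depth_bytes
    return width * height * samples * bytes_per_sample


if __name__ == "__main__":
    for samples in (1, 2, 4, 8):
        mb = msaa_render_target_bytes(1920, 1080, samples) / 1_000_000
        print(f"{samples}x at 1920x1080: {mb:.1f} MB")
```

Under these assumptions the footprint grows linearly with the sample count, which matches the proportional increase described above. Post-process modes such as FXAA and MLAA work on the finished image and do not multiply the sample storage this way.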

So, a graphics card with more memory allows you to:

- Play at higher resolutions

- Play at higher texture quality settings

- Play with higher render-based antialiasing settings

Now, to address the myth.

Myth: You need 1, 2, 3, 4, or 6 GB of graphics memory to play at (insert your display's native resolution here).

The most important factor affecting the amount of graphics memory you need is the resolution you game at. Naturally, higher resolutions require more memory. The second most important factor is whether you're using one of the anti-aliasing technologies mentioned above. Assuming a constant quality preset in your favorite game, other factors are less influential.

Before we move on to the actual measurements, allow me one more word of caution. Certain high-end cards carry two GPUs (AMD's Radeon HD 6990 and 7990, along with Nvidia's GeForce GTX 590 and 690), and their specifications list the total amount of on-board memory. But as a result of their dual-GPU designs, data is essentially duplicated, halving the effective memory. A GeForce GTX 690 with 4 GB, for instance, behaves like two 2 GB cards in SLI. Likewise, when you add a second card to your gaming configuration in CrossFire or SLI, the array's graphics memory doesn't double. Each card still has access only to its own memory.
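The dual-GPU caveat reduces to a short calculation, sketched below. The function names are hypothetical, and the rule that a multi-card array is limited by its smallest card is an assumption layered on top of the text, which only states that each card can access its own memory.

```python
def per_gpu_memory_gb(advertised_gb, gpus_on_board=1):
    """Memory each GPU can actually address on a single board.

    On a dual-GPU card the advertised total is split between the
    GPUs, and each one keeps its own copy of the data.
    """
    return advertised_gb / gpus_on_board


def array_usable_memory_gb(per_gpu_gb_list):
    """Usable memory for a CrossFire/SLI array.

    Data is mirrored across GPUs, so capacity does not add up.
    Assumption: the array is limited by the GPU with the least memory.
    """
    return min(per_gpu_gb_list)


if __name__ == "__main__":
    gtx_690 = per_gpu_memory_gb(4, gpus_on_board=2)
    print(f"GeForce GTX 690 (4 GB advertised): {gtx_690:.0f} GB per GPU")

    two_cards = array_usable_memory_gb([2, 2])
    print(f"Two 2 GB cards in SLI: {two_cards} GB usable, not 4 GB")
```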

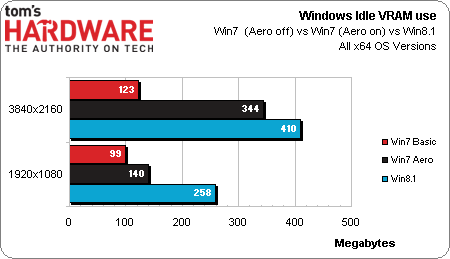

These tests were run on a Windows 7 x64 setup with Aero disabled. If you’re using Aero (or Windows 8/8.1, where desktop composition cannot be turned off), you should add ~300 MB to every measurement listed below.
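The desktop-composition adjustment is easy to apply by hand, but a small sketch makes the effect on a 1 GB card concrete. The ~300 MB constant is the approximate figure quoted above; the 800 MB measurement in the example is a hypothetical value chosen for illustration, not one of the chart results.

```python
COMPOSITING_OVERHEAD_MB = 300  # approximate figure quoted in the text


def estimated_usage_mb(measured_mb, desktop_compositing=True):
    """Adjust a measurement taken with Aero disabled.

    Adds the approximate overhead when Aero is on or when running
    Windows 8/8.1.
    """
    overhead = COMPOSITING_OVERHEAD_MB if desktop_compositing else 0
    return measured_mb + overhead


def fits_on_card(measured_mb, card_mb, desktop_compositing=True):
    """True if the adjusted usage stays within the card's memory."""
    return estimated_usage_mb(measured_mb, desktop_compositing) <= card_mb


if __name__ == "__main__":
    measured = 800   # hypothetical measurement in MB, for illustration only
    card = 1024      # a 1 GB card
    for compositing in (False, True):
        total = estimated_usage_mb(measured, compositing)
        verdict = "fits" if fits_on_card(measured, card, compositing) else "exceeds the card"
        print(f"compositing={compositing}: {total} MB, {verdict}")
```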

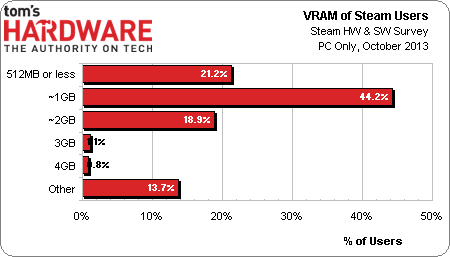

As you can see from the latest Steam hardware survey, about half of gamers own video cards with 1 GB of graphics memory, ~20% have about 2 GB, and fewer than 2% have 3 GB or more.

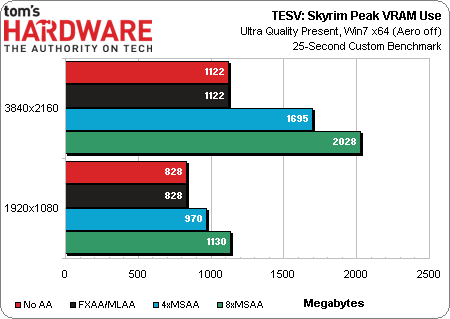

We tested Skyrim with the official high-resolution texture pack enabled. As you can see, 1 GB of graphics memory is barely enough to play the game at 1080p without AA or with MLAA/FXAA enabled. Two gigabytes will let you run at 1920x1080 with details cranked up, and at 2160p with reduced levels of AA. To enable the full Ultra preset and 8xMSAA, not even a 2 GB card is sufficient.

Bethesda’s Creation Engine is a unique creature in this set of benchmarks. It is not easily GPU-bound, and is instead often limited by platform performance. But these tests demonstrate something new: Skyrim can be bottlenecked by graphics memory at the highest-quality settings.

It's also worth noting that enabling FXAA uses essentially no additional graphics memory. There's a value trade-off to be made in cases where MSAA is not an option.

manwell999 The info on V-Sync causing frame rate halving is out of date by about a decade. With multithreading the game can work on the next frame while the previous frame is waiting for V-Sync. Just look at BF3 with V-Sync on you get a continous range of FPS under 60 not just integer multiples. DirectX doesn't support triple buffering.

hansrotec with over clocking are you going to cover water cooling? it would seem disingenuous to dismiss overclocking based on a generating of cards designed to run up to maybe a speed if there is headroom and not include watercooling which reduces noise and temperature . my 7970 (pre ghz editon) is a whole different card water cooled vs air cooled. 1150 mhz without having to mess with the voltage on water with temps in 50c without the fans or pumps ever kicking up, where as on air that would be in the upper 70s lower 80s and really loud. on top of that tweeking memory incorrectly can lower frame rate

hansrotec I thought my last comment might have seemed to negative, and i did not mean it in that light. I did enjoy the read, and look forward to more!

noobzilla771 Nice article! I would like to know more about overclocking, specifically core clock and memory clock ratio. Does it matter to keep a certain ratio between the two or can I overclock either as much as I want? Thanks!

chimera201 I can never win over input latency no matter what hardware i buy because of my shitty ISP

immanuel_aj I'd just like to mention that the dB(A) scale is attempting to correct for perceived human hearing. While it is true that 20 dB is 10 times louder than 10 dB, but because of the way our ears work, it would seem that it is only twice as loud. At least, that's the way the A-weighting is supposed to work. Apparently there are a few kinks...