The Myths Of Graphics Card Performance: Debunked, Part 1

Did you know that Windows 8 can gobble as much as 25% of your graphics memory? That your graphics card slows down as it gets warmer? That you react quicker to PC sounds than images? That overclocking your card may not really work? Prepare to be surprised!

Do I Need To Worry About Input Lag?

Myth: Graphics Cards Affect Input Lag

Let’s say you’re getting shot up in your favorite multiplayer shooter before you even have the chance to react. Is your opposition really that much better than you? Could they be cheating? Or is something else going on?

Aside from the occasional cheat, which does happen, the truth might be that those seemingly super-human reflexes are at least partly assisted by technology. And they might have very little to do with your graphics card.

It takes time for what happens in a game to show up on your screen. It takes time for you to react. And it takes time for your mouse and keyboard inputs to register. Somewhat improperly, the delay between you issuing a command and the resulting on-screen action is commonly called input lag. So, if you press the trigger in a first-person shooter and your weapon fires 0.1 seconds later, your input lag is effectively 100 milliseconds.

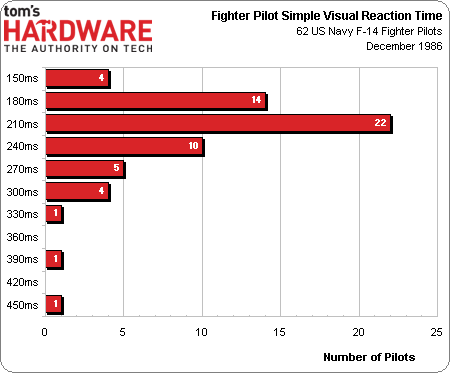

Human reaction times to visual inputs vary. According to a 1986 U.S. Navy study, F-14 fighter pilots reacted to a simple visual stimulus in 223 ms on average. It may seem counterintuitive, but human beings actually react faster to sound than to visual inputs: reactions to auditory stimuli tend to be in the ~150 ms range.

If you're curious, you can test for yourself how quickly you react to either by clicking the simple visual test and then the audio test.

Fortunately, no matter how poorly configured your PC may be, it probably won't hit 200 ms of input lag. So, your personal reaction time remains the biggest factor in how quickly your character responds in a game.
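To put those numbers side by side, here is a minimal Python sketch that simply adds human reaction time and system input lag. It assumes the two delays are additive, which is a simplification; the 223 ms figure is the Navy study average quoted above, while the input-lag values are hypothetical examples rather than measurements.

```python
# Simplified model: total response time = human reaction time + system input lag.
# The 223 ms reaction time comes from the Navy study cited in the text;
# the input-lag values in the loop are hypothetical examples.

PILOT_VISUAL_REACTION_MS = 223.0


def total_response_ms(reaction_ms: float, input_lag_ms: float) -> float:
    """Time from seeing an event to your action taking effect in the game."""
    return reaction_ms + input_lag_ms


def human_share(reaction_ms: float, input_lag_ms: float) -> float:
    """Fraction of the total response time that comes from the player."""
    return reaction_ms / total_response_ms(reaction_ms, input_lag_ms)


for lag in (20.0, 50.0, 100.0):  # hypothetical system input lag, in ms
    total = total_response_ms(PILOT_VISUAL_REACTION_MS, lag)
    share = human_share(PILOT_VISUAL_REACTION_MS, lag)
    print(f"lag {lag:5.1f} ms -> total {total:5.1f} ms, human share {share:.0%}")
```

Even with a hypothetical 100 ms of system lag, the player's own reaction still accounts for most of the total in this model.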

As differences in input lag grow, however, they increasingly affect gameplay. Imagine a professional gamer with reflexes comparable to the best fighter pilots at 150 ms. A 50 ms increase in input lag makes that player about 33% slower than the competition (three frames on a 60 Hz display). At the professional level, that's notable.
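The arithmetic behind this example is easy to check: 50 ms is one third of a 150 ms reaction time, and a 60 Hz display refreshes every 1000 / 60 ≈ 16.7 ms. A small Python sketch (the function names are illustrative, not from any library):

```python
# Worked numbers for the example: 150 ms reaction time, 50 ms of extra
# input lag, viewed on a 60 Hz display.


def relative_slowdown(reaction_ms: float, extra_lag_ms: float) -> float:
    """Extra lag expressed as a fraction of the player's reaction time."""
    return extra_lag_ms / reaction_ms


def lag_in_frames(extra_lag_ms: float, refresh_hz: float) -> float:
    """Number of display refresh intervals the extra lag spans."""
    frame_time_ms = 1000.0 / refresh_hz
    return extra_lag_ms / frame_time_ms


print(f"{relative_slowdown(150, 50):.0%} slower")
print(f"{lag_in_frames(50, 60):.1f} frames at 60 Hz")
```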


For mere mortals (including me; I scored 200 ms in the visual test linked above), and for anyone who would rather play Civilization V leisurely than Counter-Strike 1.6 competitively, it’s an entirely different story; you can likely ignore input lag altogether.

Here are some of the factors that can worsen input lag, all else being equal:

- Playing on an HDTV (even more so if its game mode is disabled) or playing on an LCD display that performs some form of video processing that cannot be bypassed. Check out DisplayLag's Input Lag database for a great list organized by model.

- Playing on LCD displays with IPS panels, which have higher response times (5-7 ms G2G typical) than TN+Film panels (1-2 ms G2G possible), which are in turn slower than CRT displays (the fastest available).

- Playing on displays with lower refresh rates; the newest gaming displays support 120 or 144 Hz natively.

- Playing at low frame rates (30 FPS is one frame every 33 ms; 144 FPS is one frame every 7 ms).

- Using a USB-based mouse with a low polling rate. The default 125 Hz is an ~8 ms cycle time, yielding ~4 ms of input lag on average. Meanwhile, gaming mice can poll at up to ~1000 Hz (a ~1 ms cycle) for ~0.5 ms of average input lag.

- Using a low-quality keyboard (keyboard input lag is 16 ms typically, but can be higher for poor ones).

- Enabling V-sync, especially so when using triple buffering as well (there is a myth that Direct3D does not implement triple buffering; the reality is that Direct3D does account for the option of multiple back buffers, but few games exploit this). Check out Microsoft's write-up, if you're technically inclined.

- Playing with high render-ahead queues. The default in Direct3D is three frames, or roughly 50 ms at 60 Hz. This figure can be increased to 20 for greater “smoothness” or dropped to one for better responsiveness, at the cost of greater frame time variance and, in some cases, somewhat lower FPS overall. There is no true zero setting; selecting zero simply resets the queue to the default of three. Check out Microsoft's write-up, if you're technically inclined.

- Playing on a high-latency Internet connection. While this goes beyond what would be defined as input lag, it effectively stacks with it.
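Several items in the list above boil down to the same arithmetic: an interval in milliseconds is 1000 divided by the rate in hertz. The Python sketch below assumes that a mouse event waits half a polling interval on average and that each queued render-ahead frame adds roughly one frame time; real pipelines are more complicated, so treat these as ballpark figures. The function names are hypothetical.

```python
# Ballpark latency arithmetic for frame rate, mouse polling and render-ahead queues.
# Assumptions: interval = 1000 / rate; a mouse event waits half a polling
# interval on average; each queued frame adds about one frame time.


def frame_time_ms(rate_hz: float) -> float:
    """Duration of one frame, refresh, or polling cycle at the given rate."""
    return 1000.0 / rate_hz


def avg_polling_lag_ms(polling_hz: float) -> float:
    """Average wait before a USB poll picks up an input: half the interval."""
    return frame_time_ms(polling_hz) / 2.0


def render_queue_lag_ms(queued_frames: int, fps: float) -> float:
    """Approximate delay added when the render-ahead queue is full."""
    return queued_frames * frame_time_ms(fps)


for fps in (30, 60, 144):
    print(f"{fps:>3} FPS -> {frame_time_ms(fps):.1f} ms per frame")

for hz in (125, 1000):
    print(f"{hz:>4} Hz mouse -> {frame_time_ms(hz):.0f} ms interval, "
          f"~{avg_polling_lag_ms(hz):.1f} ms average lag")

for frames in (1, 3):
    print(f"{frames} queued frame(s) at 60 FPS -> "
          f"{render_queue_lag_ms(frames, 60):.0f} ms")
```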

Factors that do not make a difference include:

- Using a PS/2 or USB keyboard (see a dedicated page in our article: Five Mechanical-Switch Keyboards: Only The Best For Your Hands)

- Using a wireless or wired network connection (just try pinging your router if you don’t believe us; you should see ping times of less than 1 ms).

- Enabling SLI or CrossFire. The longer render queues these technologies require are generally offset by higher frame throughput.
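One way to see why a deeper queue need not hurt is to multiply queue depth by frame time. The queue depths and frame rates below are hypothetical, chosen only to illustrate the trade-off, not measured SLI or CrossFire results.

```python
# Hypothetical comparison: a deeper render queue adds no extra latency
# if frames are delivered proportionally faster. All numbers are illustrative.


def queue_latency_ms(queued_frames: int, fps: float) -> float:
    """Approximate delay from a full render queue: depth times frame time."""
    return queued_frames * 1000.0 / fps


single_gpu = queue_latency_ms(queued_frames=3, fps=60)   # one card
dual_gpu = queue_latency_ms(queued_frames=4, fps=100)    # two cards, deeper queue

print(f"single GPU: {single_gpu:.0f} ms, dual GPU: {dual_gpu:.0f} ms")
```

In this made-up case the extra queued frame is more than paid for by the higher frame rate; with poor multi-GPU scaling, the balance could tip the other way.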

Bottom Line: Input lag only matters in "twitch" games, and really matters only at highly competitive levels.

There is a lot more to input lag than just display technology or a graphics card. Your hardware, hardware settings, display, display settings, and application settings all influence this measurement.


manwell999: The info on V-Sync causing frame rate halving is out of date by about a decade. With multithreading, the game can work on the next frame while the previous frame is waiting for V-Sync. Just look at BF3: with V-Sync on, you get a continuous range of FPS under 60, not just integer multiples. DirectX doesn't support triple buffering.

hansrotec: With overclocking, are you going to cover water cooling? It would seem disingenuous to dismiss overclocking based on a generation of cards designed to run up to maybe a speed if there is headroom and not include water cooling, which reduces noise and temperature. My 7970 (pre-GHz Edition) is a whole different card water-cooled vs. air-cooled: 1150 MHz without having to mess with the voltage on water, with temps around 50 °C without the fans or pumps ever kicking up, whereas on air that would be in the upper 70s to lower 80s and really loud. On top of that, tweaking memory incorrectly can lower frame rate.

hansrotec: I thought my last comment might have seemed too negative, and I did not mean it in that light. I did enjoy the read, and look forward to more!

noobzilla771: Nice article! I would like to know more about overclocking, specifically core clock and memory clock ratio. Does it matter to keep a certain ratio between the two or can I overclock either as much as I want? Thanks!

chimera201: I can never win over input latency no matter what hardware I buy because of my shitty ISP.

immanuel_aj: I'd just like to mention that the dB(A) scale attempts to correct for perceived human hearing. It is true that 20 dB is 10 times louder than 10 dB, but because of the way our ears work, it would seem only twice as loud. At least, that's the way the A-weighting is supposed to work. Apparently there are a few kinks...