Tom's Hardware Verdict

Based on the same TU116 processor as GeForce GTX 1660 Ti, Nvidia’s GeForce GTX 1660 loses two Streaming Multiprocessors and swaps out GDDR6 memory for slower GDDR5. As a result, it remains an excellent choice for gaming at 1920x1080 but isn’t recommended for 2560x1440. Just be sure to comparison shop before making your purchase. Hot deals on Radeon RX 580 cards may warrant a look, despite their lower performance.

Pros

- Excellent 1080p performance
- Attractive price at $220 entry point
- Reasonable 120W power consumption helps keep heat and noise low

Cons

- Not ideal for 1440p gaming
- Similar power profile to the faster GeForce GTX 1660 Ti

Nvidia GeForce GTX 1660 Review

It was only a matter of time before Nvidia took the pristine TU116 graphics processor in its GeForce GTX 1660 Ti and carved it up a bit to create a lower-cost derivative. The new GeForce GTX 1660 is, unsurprisingly, very similar to the higher-end model in that it lacks the Turing architecture’s signature RT and Tensor cores. Instead, it aims on-die resources at accelerating today’s rasterized games.

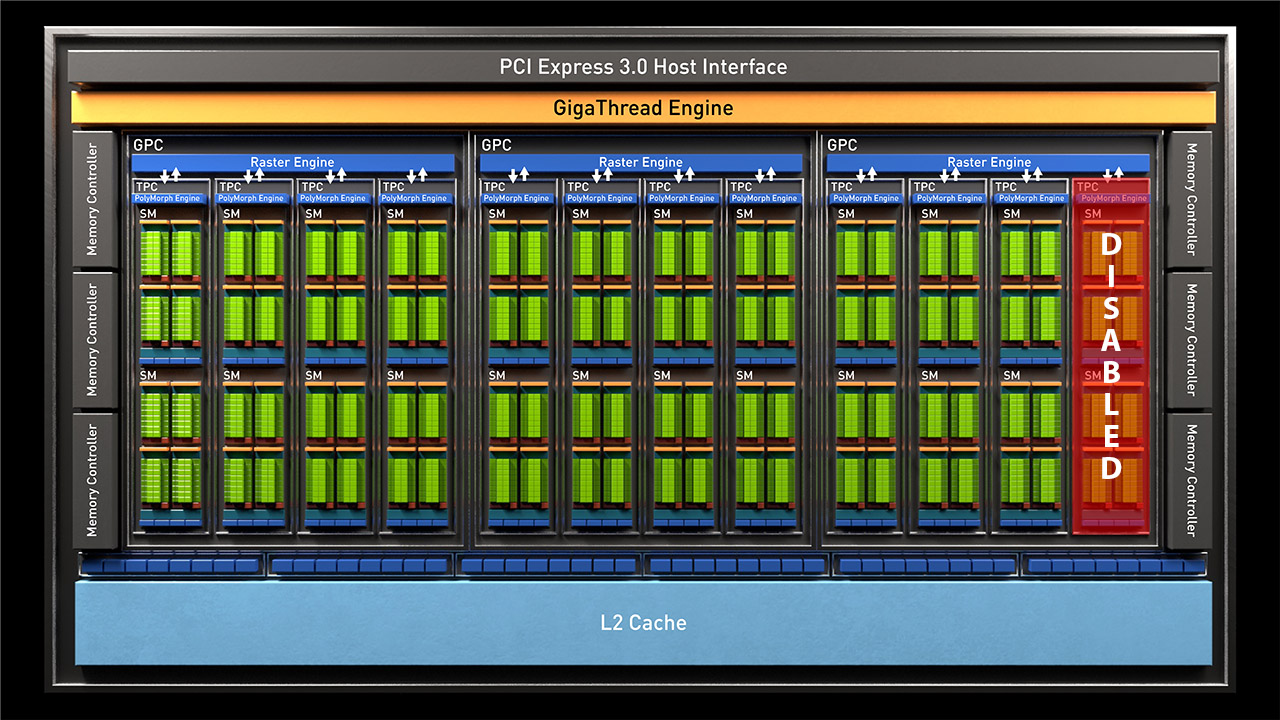

Nvidia doesn’t even cut much from TU116's resource pool in the creation of GeForce GTX 1660: a pair of Streaming Multiprocessors is excised, taking 128 CUDA cores and eight texture units with it. But the GPU is otherwise quite complete. This card’s biggest loss is its lack of GDDR6 memory. By swapping in 8 Gb/s GDDR5 instead, bandwidth drops from the 1660 Ti’s 288 GB/s to a mere 192 GB/s.

Naturally, the GeForce GTX 1660 is primarily aimed at FHD gaming, where 6GB of slower memory won’t hurt performance as much as it would at higher resolutions. Can the $220/£200 board maintain fast enough frame rates to stave off AMD’s Radeon RX 590 with more GDDR5 on a wider bus, though?

TU116 Recap: Turing Without the RT and Tensor Cores

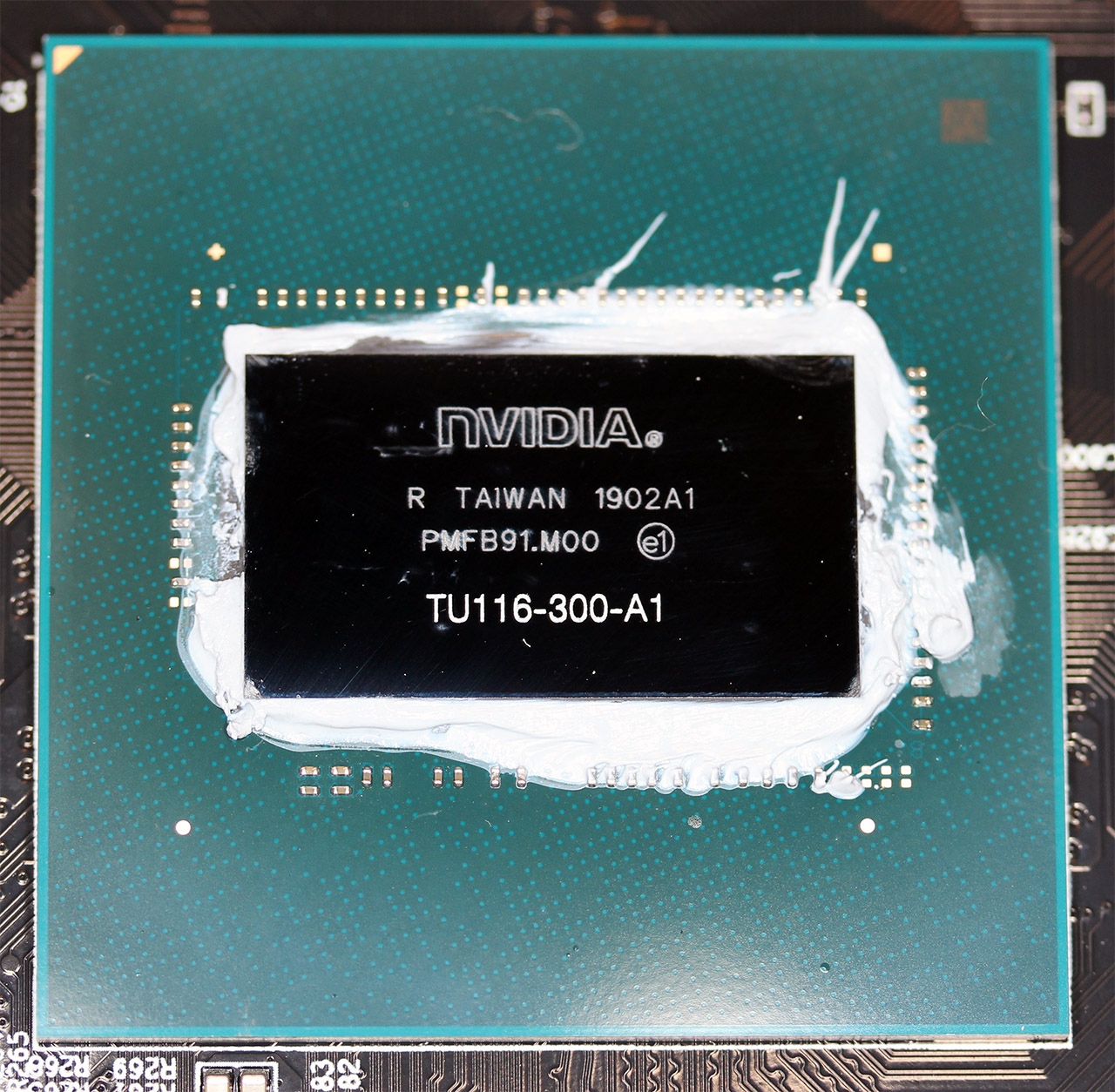

The GPU at the heart of GeForce GTX 1660 is specifically named TU116-300-A1. It’s a close relative of the GeForce GTX 1660 Ti’s TU116-400-A1, trimmed from 24 Streaming Multiprocessors to 22. We’re obviously still dealing with a processor devoid of Nvidia’s future-looking RT and Tensor cores. The chip measures 284mm² and is composed of 6.6 billion transistors manufactured using TSMC’s 12nm FinFET process.

Despite its smaller transistors, TU116 is 42 percent larger than the GP106 processor that preceded it. Some of that growth is attributable to the Turing architecture’s more sophisticated shaders. Like the higher-end GeForce RTX 20-series cards, GeForce GTX 1660 supports simultaneous execution of FP32 arithmetic instructions, which constitute most shader workloads, and INT32 operations (for addressing/fetching data, floating-point min/max, compare, etc.). When you hear about Turing cores achieving better performance than Pascal at a given clock rate, this capability largely explains why.
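
For readers who want to check the 42 percent figure, here is a minimal sketch using the die sizes and transistor counts from the spec table in this review. The function name `growth_percent` is our own, chosen for illustration.

```python
# Die area (mm^2) and transistor counts (billions) as listed in the spec table.
TU116 = {"area_mm2": 284, "transistors_bn": 6.6}
GP106 = {"area_mm2": 200, "transistors_bn": 4.4}

def growth_percent(new, old):
    """Percentage increase from old to new, rounded to the nearest whole percent."""
    return round((new / old - 1) * 100)

area_growth = growth_percent(TU116["area_mm2"], GP106["area_mm2"])
transistor_growth = growth_percent(TU116["transistors_bn"], GP106["transistors_bn"])

print(f"Die area growth: {area_growth} percent")           # 42 percent
print(f"Transistor growth: {transistor_growth} percent")   # 50 percent
```

By the same table, the transistor budget grows by about half while the die area grows by 42 percent.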

Turing’s Streaming Multiprocessors are composed of fewer CUDA cores than Pascal’s, but the design compensates in part by spreading more SMs across each GPU. The newer architecture assigns one scheduler to each set of 16 CUDA cores (2x Pascal), along with one dispatch unit per 16 CUDA cores (same as Pascal). Four of those 16-core groupings make up the SM, along with 96KB of cache that can be configured as 64KB L1/32KB shared memory or vice versa, and four texture units. Because Turing doubles up on schedulers, it only needs to issue an instruction to the CUDA cores every other clock cycle to keep them full. In between, it's free to issue a different instruction to any other unit, including the INT32 cores.

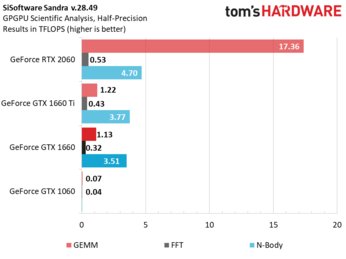

In TU116, Nvidia replaces Turing’s Tensor cores with 128 dedicated FP16 cores per SM, which allow GeForce GTX 1660 to process half-precision operations at 2x the rate of FP32. The other Turing-based GPUs boast double-rate FP16 as well through their Tensor cores, so TU116’s configuration serves to maintain that standard through hardware put in place specifically for this GPU. The following chart is an updated version of the one published in our GeForce GTX 1660 Ti review, which illustrates TU116’s massive improvement to half-precision throughput compared to GeForce GTX 1060 and its Pascal-based GP106 chip.

When we run Sandra's Scientific Analysis module, which tests general matrix multiplies, we see how much more FP16 throughput TU106's Tensor cores achieve compared to TU116. GeForce GTX 1060, which only supports FP16 symbolically, barely registers on the chart at all.

In addition to the Turing architecture’s shaders and unified cache, TU116 also supports a pair of algorithms called Content Adaptive Shading and Motion Adaptive Shading, together referred to as Variable Rate Shading. We covered this technology in Nvidia’s Turing Architecture Explored: Inside the GeForce RTX 2080. That story also introduced Turing's accelerated video encode and decode capabilities, which carry over to GeForce GTX 1660 as well.

Putting It All Together…

Nvidia packs 24 SMs into TU116, splitting them between three Graphics Processing Clusters. With 64 FP32 cores per SM, that’s 1,536 CUDA cores and 96 texture units across the entire GPU. In losing two SMs, GeForce GTX 1660 ends up with 1,408 active CUDA cores and 88 usable texture units.
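
The core and texture unit counts follow directly from the per-SM figures. A minimal sketch of that arithmetic, with constant and function names that are ours rather than anything from Nvidia's tooling:

```python
# Per-SM resources as described in this review.
FP32_CORES_PER_SM = 64
TEXTURE_UNITS_PER_SM = 4

def sm_resources(active_sms):
    """Return (CUDA cores, texture units) for a given number of active SMs."""
    return (active_sms * FP32_CORES_PER_SM, active_sms * TEXTURE_UNITS_PER_SM)

print(sm_resources(24))  # full TU116 (GTX 1660 Ti): (1536, 96)
print(sm_resources(22))  # GTX 1660 with two SMs disabled: (1408, 88)
```

The difference between the two results, 128 CUDA cores and eight texture units, is exactly what the two excised SMs take with them.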

Board partners will undoubtedly target a range of frequencies to differentiate their cards. However, the official base clock rate is 1,530 MHz with a GPU Boost specification of 1,785 MHz. Both of those numbers are slightly higher than GeForce GTX 1660 Ti’s clocks, though they cannot compensate entirely for the missing SMs.

Our Gigabyte GeForce GTX 1660 OC 6G sample maintained a steady 1,935 MHz through three runs of Metro: Last Light, operating about 90 MHz faster than the 1660 Ti we reviewed a few weeks ago. On paper, then, GeForce GTX 1660 offers up to 5 TFLOPS of FP32 performance and 10 TFLOPS of FP16 throughput.
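
Those on-paper numbers can be reproduced with a short sketch. It assumes the usual convention for peak figures, namely that each CUDA core retires one fused multiply-add (two floating-point operations) per clock. The function name `peak_tflops` is hypothetical.

```python
def peak_tflops(cuda_cores, clock_mhz, flops_per_core_per_clock=2):
    """Theoretical peak throughput in TFLOPS.

    Assumption: one FMA (2 FLOPs) per core per clock, the common
    convention behind vendor peak-compute figures.
    """
    return cuda_cores * clock_mhz * flops_per_core_per_clock / 1e6

fp32_rated = peak_tflops(1408, 1785)      # at the rated GPU Boost clock
fp16_rated = 2 * fp32_rated               # TU116 runs FP16 at 2x the FP32 rate
fp32_observed = peak_tflops(1408, 1935)   # at the clock our sample sustained

print(f"FP32 at 1,785 MHz: {fp32_rated:.2f} TFLOPS")     # 5.03
print(f"FP16 at 1,785 MHz: {fp16_rated:.2f} TFLOPS")     # 10.05
print(f"FP32 at 1,935 MHz: {fp32_observed:.2f} TFLOPS")  # 5.45
```

Because these are theoretical ceilings tied to clock rate, a card that sustains a higher boost clock than the rated specification has a correspondingly higher peak.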

Six 32-bit memory controllers give TU116 an aggregate 192-bit bus, which is populated by 8 Gb/s GDDR5 modules that push up to 192 GB/s. That’s comparable to GeForce GTX 1060 6GB, and a 33 percent reduction compared to GeForce GTX 1660 Ti. Combined with the loss of two SMs, dropping from GDDR6 to GDDR5 memory accounts for GeForce GTX 1660’s lower performance versus 1660 Ti.
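
The bandwidth math is simple enough to sketch: bus width in bits times the per-pin data rate, divided by eight bits per byte. The function name is ours, and the 1660 Ti's per-pin rate is derived from its 288 GB/s figure rather than taken from a spec sheet.

```python
def bandwidth_gb_s(bus_width_bits, data_rate_gbps):
    """Peak memory bandwidth in GB/s from bus width and per-pin data rate."""
    return bus_width_bits * data_rate_gbps / 8

bus_bits = 6 * 32                         # six 32-bit memory controllers
gtx_1660 = bandwidth_gb_s(bus_bits, 8)    # 8 Gb/s GDDR5
gtx_1660_ti = 288                         # GB/s, from this review

# Per-pin rate the 1660 Ti's GDDR6 must run at to reach 288 GB/s on the same bus.
implied_ti_rate = gtx_1660_ti * 8 / bus_bits
reduction_pct = (gtx_1660_ti - gtx_1660) / gtx_1660_ti * 100

print(f"GTX 1660: {gtx_1660:.0f} GB/s")                        # 192
print(f"Implied 1660 Ti data rate: {implied_ti_rate:.0f} Gb/s")  # 12
print(f"Reduction vs. 1660 Ti: {reduction_pct:.0f} percent")     # 33
```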

Each memory controller is associated with eight ROPs and a 256KB slice of L2 cache. In total, TU116 exposes 48 ROPs and 1.5MB of L2. GeForce GTX 1660’s ROP count compares favorably to RTX 2060, which also utilizes 48 render outputs. But TU116’s L2 cache slices are half as large as TU106’s.
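
As a rough check, the back-end totals can be tallied per memory controller. This sketch assumes RTX 2060's 3MB of L2 is spread evenly across the six controllers implied by its 192-bit bus; all names are illustrative.

```python
MEMORY_CONTROLLERS = 6   # six 32-bit controllers on a 192-bit bus
ROPS_PER_CONTROLLER = 8
TU116_L2_SLICE_KB = 256

total_rops = MEMORY_CONTROLLERS * ROPS_PER_CONTROLLER
tu116_l2_mb = MEMORY_CONTROLLERS * TU116_L2_SLICE_KB / 1024

# Assumption: RTX 2060 (TU106) divides its 3MB L2 evenly over six controllers.
tu106_l2_slice_kb = 3 * 1024 / MEMORY_CONTROLLERS

print(f"TU116 ROPs: {total_rops}")                       # 48
print(f"TU116 L2: {tu116_l2_mb} MB")                     # 1.5 MB
print(f"TU106 L2 slice: {tu106_l2_slice_kb:.0f} KB")     # 512 KB
print(f"Slice ratio: {TU116_L2_SLICE_KB / tu106_l2_slice_kb}")  # 0.5
```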

Given the similarities to GeForce GTX 1660 Ti, it’s no surprise that GeForce GTX 1660 is rated for the same 120W. Unfortunately, neither graphics card includes multi-GPU support. Nvidia continues pushing the narrative that SLI is meant to drive higher absolute performance, rather than give gamers a way to match single-GPU configurations.

| Specification | Gigabyte GeForce GTX 1660 OC 6G | GeForce GTX 1660 Ti | GeForce RTX 2060 FE | GeForce GTX 1060 FE | GeForce GTX 1070 FE |
|---|---|---|---|---|---|
| Architecture (GPU) | Turing (TU116) | Turing (TU116) | Turing (TU106) | Pascal (GP106) | Pascal (GP104) |
| CUDA Cores | 1408 | 1536 | 1920 | 1280 | 1920 |
| Peak FP32 Compute | 5 TFLOPS | 5.4 TFLOPS | 6.45 TFLOPS | 4.4 TFLOPS | 6.5 TFLOPS |
| Tensor Cores | N/A | N/A | 240 | N/A | N/A |
| RT Cores | N/A | N/A | 30 | N/A | N/A |
| Texture Units | 88 | 96 | 120 | 80 | 120 |
| Base Clock Rate | 1530 MHz | 1500 MHz | 1365 MHz | 1506 MHz | 1506 MHz |
| GPU Boost Rate | 1785 MHz | 1770 MHz | 1680 MHz | 1708 MHz | 1683 MHz |
| Memory Capacity | 6GB GDDR5 | 6GB GDDR6 | 6GB GDDR6 | 6GB GDDR5 | 8GB GDDR5 |
| Memory Bus | 192-bit | 192-bit | 192-bit | 192-bit | 256-bit |
| Memory Bandwidth | 192 GB/s | 288 GB/s | 336 GB/s | 192 GB/s | 256 GB/s |
| ROPs | 48 | 48 | 48 | 48 | 64 |
| L2 Cache | 1.5MB | 1.5MB | 3MB | 1.5MB | 2MB |
| TDP | 120W | 120W | 160W | 120W | 150W |
| Transistor Count | 6.6 billion | 6.6 billion | 10.8 billion | 4.4 billion | 7.2 billion |
| Die Size | 284 mm² | 284 mm² | 445 mm² | 200 mm² | 314 mm² |
| SLI Support | No | No | No | No | Yes (MIO) |
