
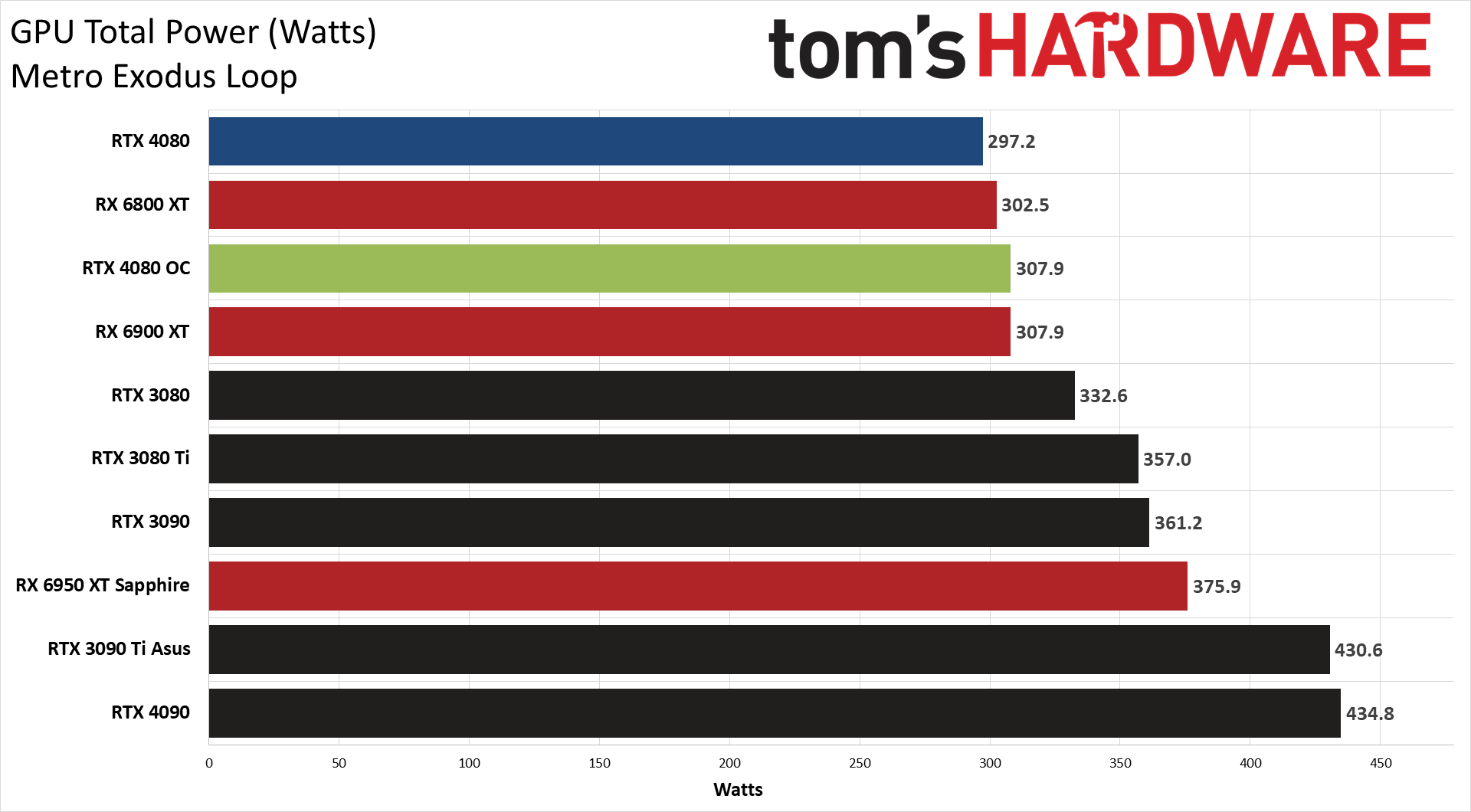

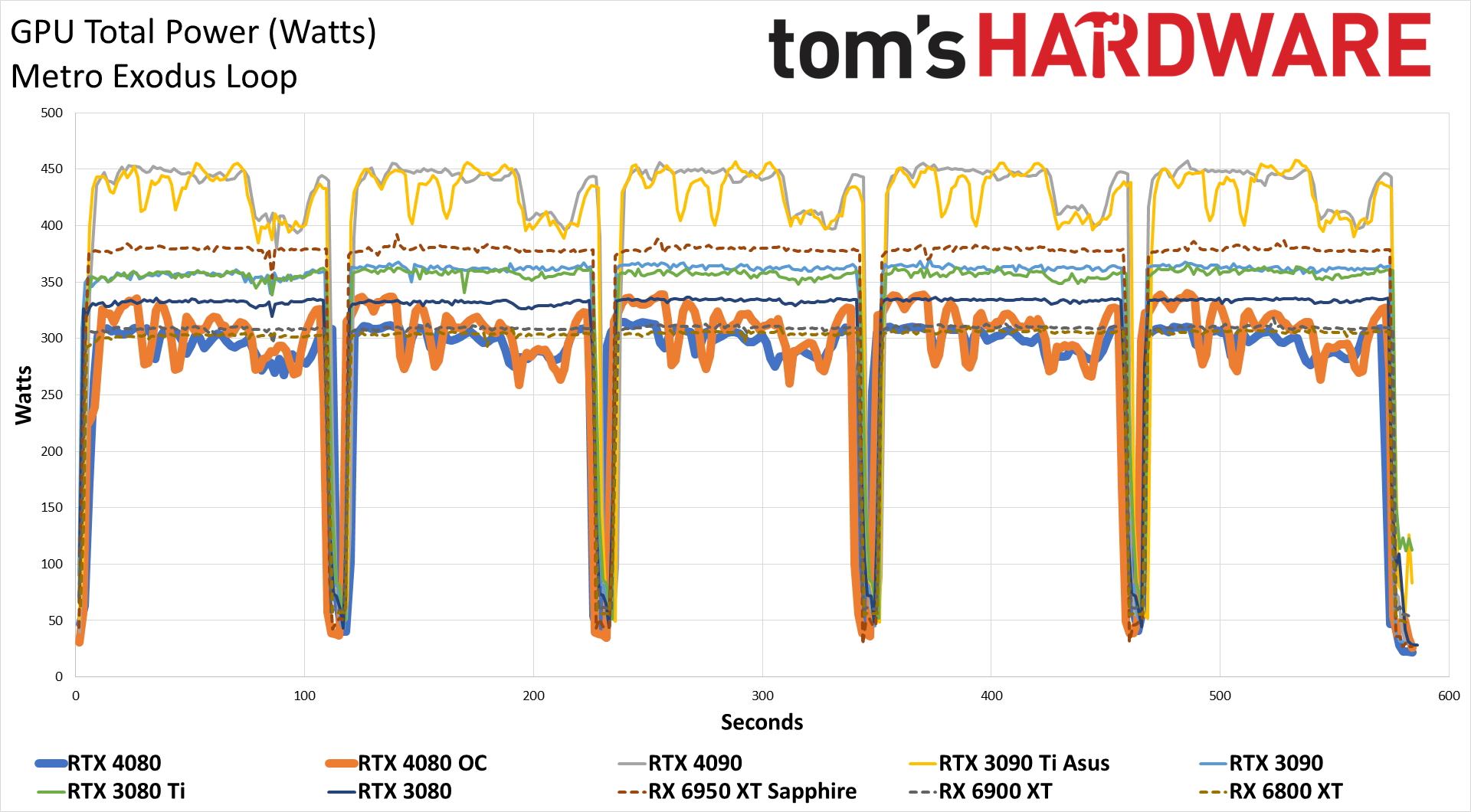

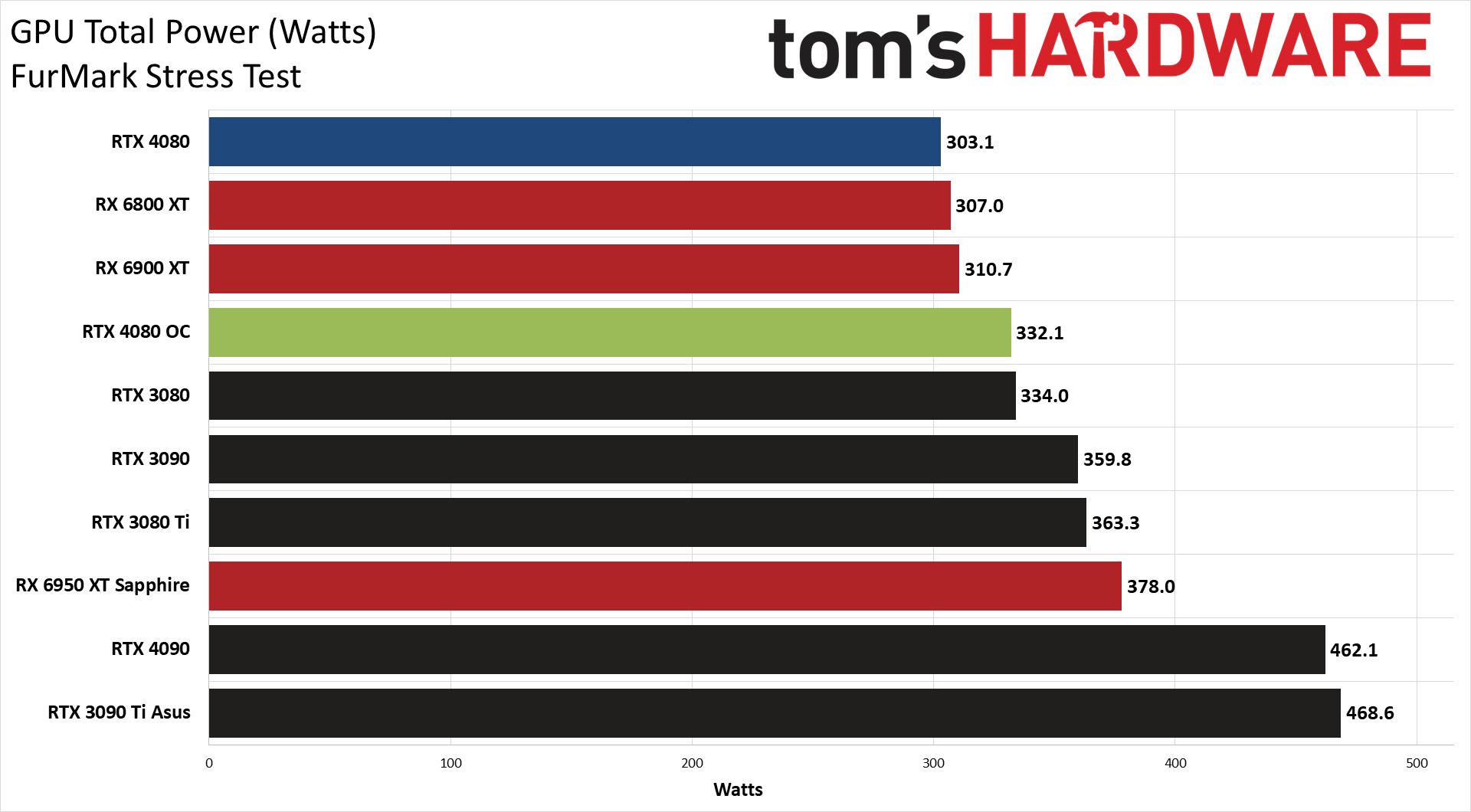

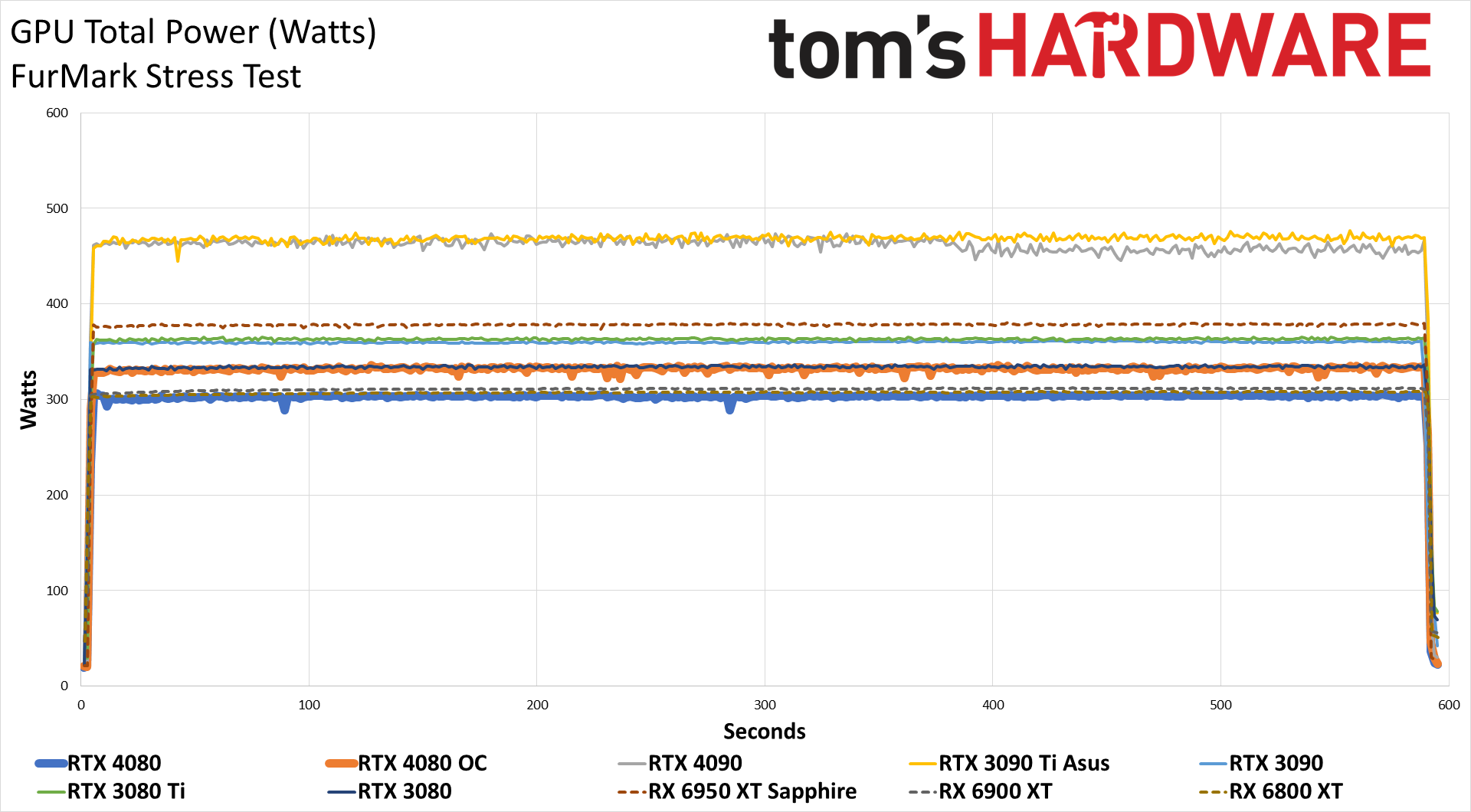

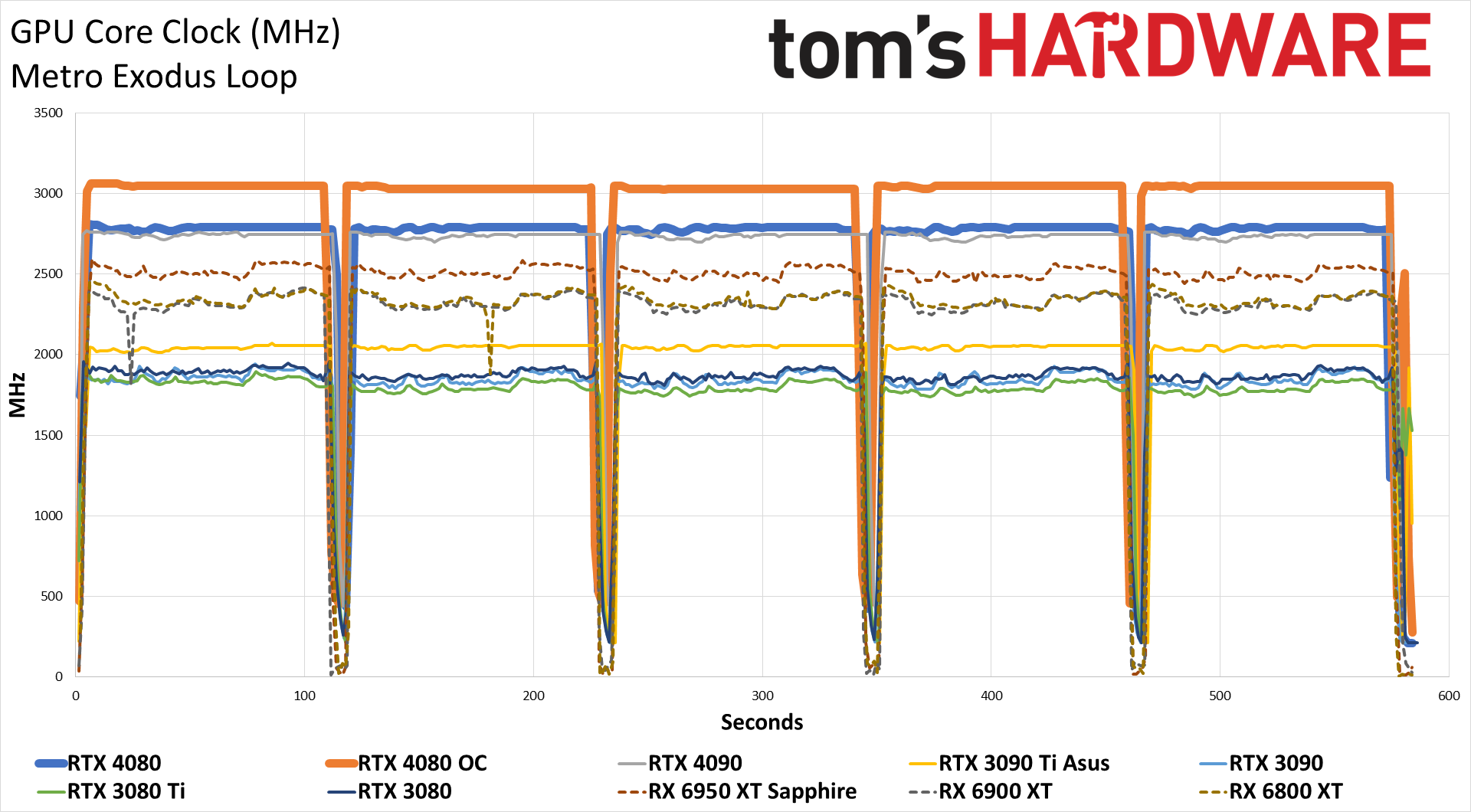

We measure real-world power consumption using Powenetics testing hardware and software. We capture in-line GPU power consumption by collecting data while looping Metro Exodus (the original, not the enhanced version) and while running the FurMark stress test. Our test PC remains the same Core i9-9900K system we've used previously, which keeps results consistent with our earlier reviews.

For the RTX 4080 Founders Edition, we ran Metro at 3840x2160 using the Extreme preset (no ray tracing or DLSS), and we ran FurMark at 2560x1440. The following charts are intended to represent something of a worst-case scenario for power consumption, temps, etc.

The RTX 4080 Founders Edition used quite a bit less power than the rated 320W TBP. We could only break that limit with overclocking and FurMark, where we hit 332W — still less than the previous generation RTX 3080 running at stock settings. That's a nice change of pace, and the result is a card that's far more efficient than the specifications might lead you to believe.

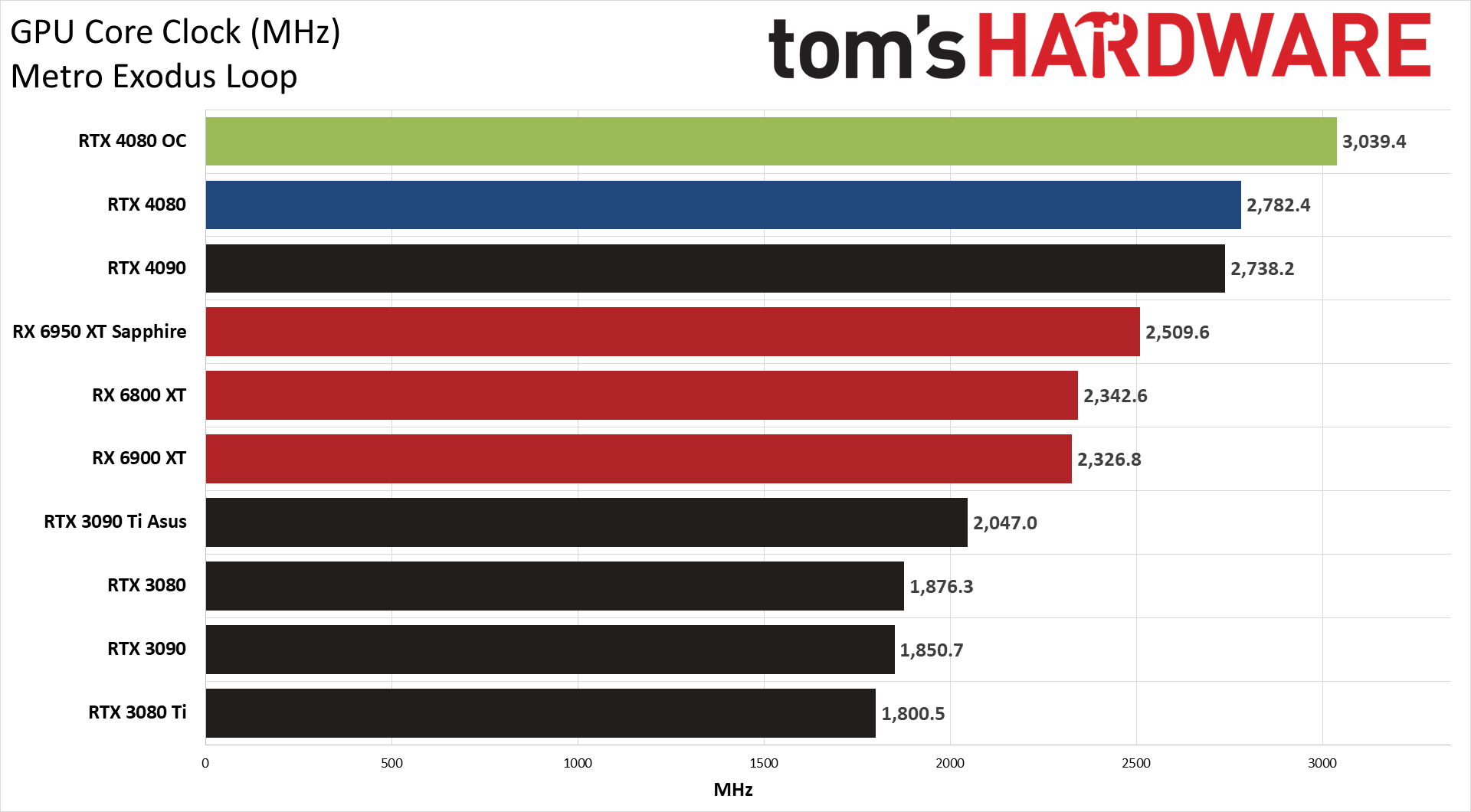

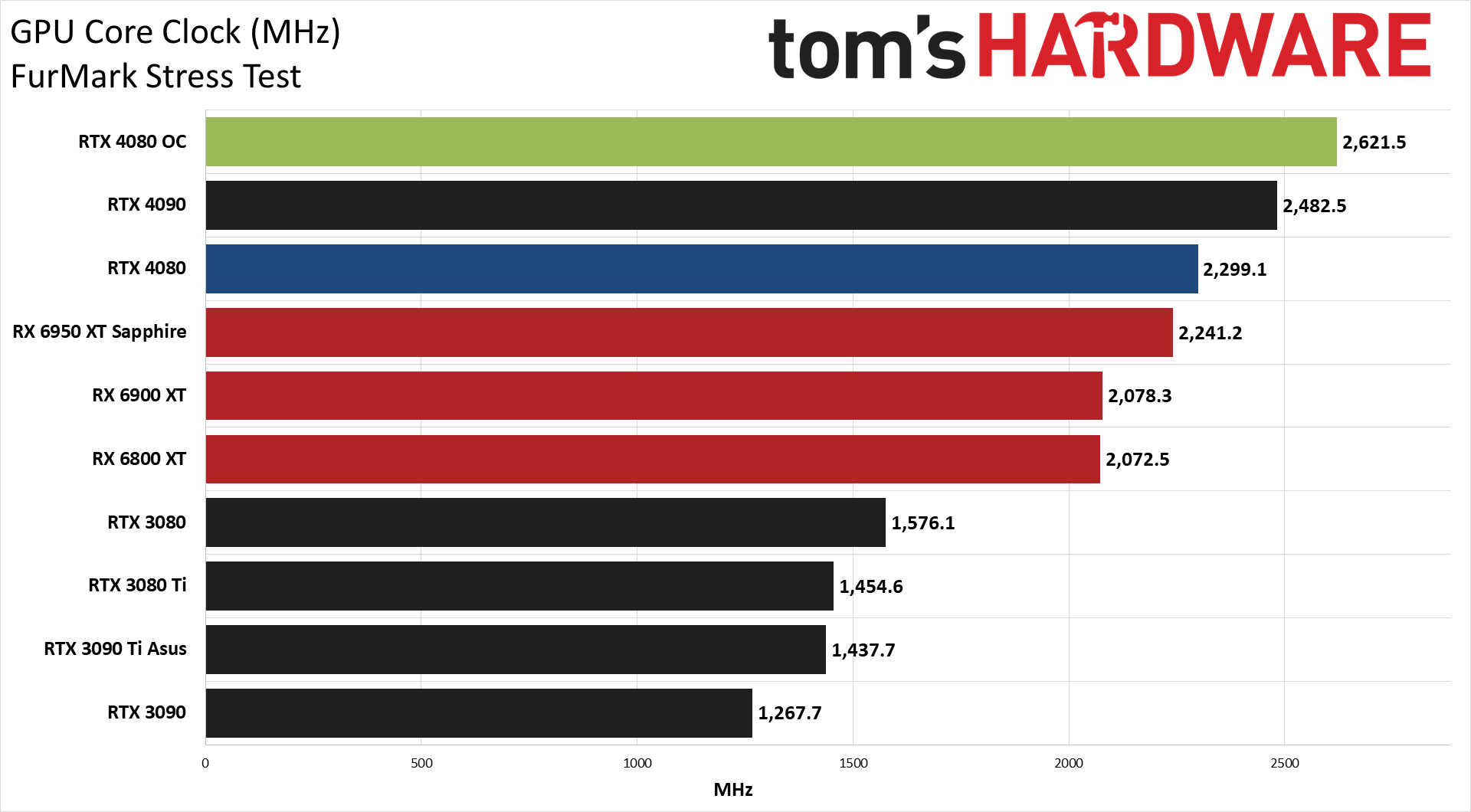

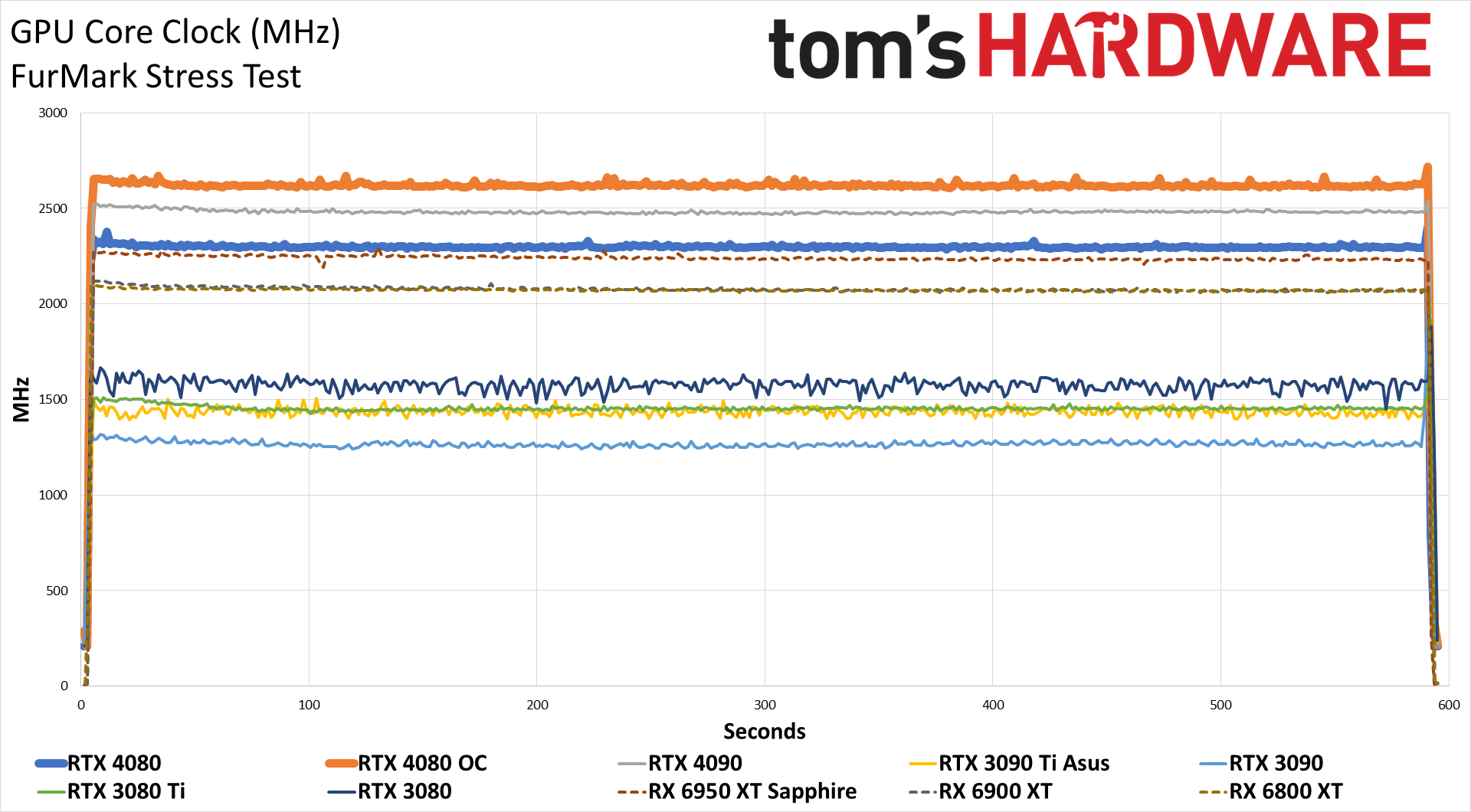

Clock speeds were also quite impressive, averaging 2.78 GHz in Metro, though the GPU did drop to 2.3 GHz with FurMark. Meanwhile, our manual overclock pushed the GPU beyond 3.0 GHz — the first time we've ever managed that using a card's stock cooler! FurMark clocks also increased to 2.62 GHz with our overclock.

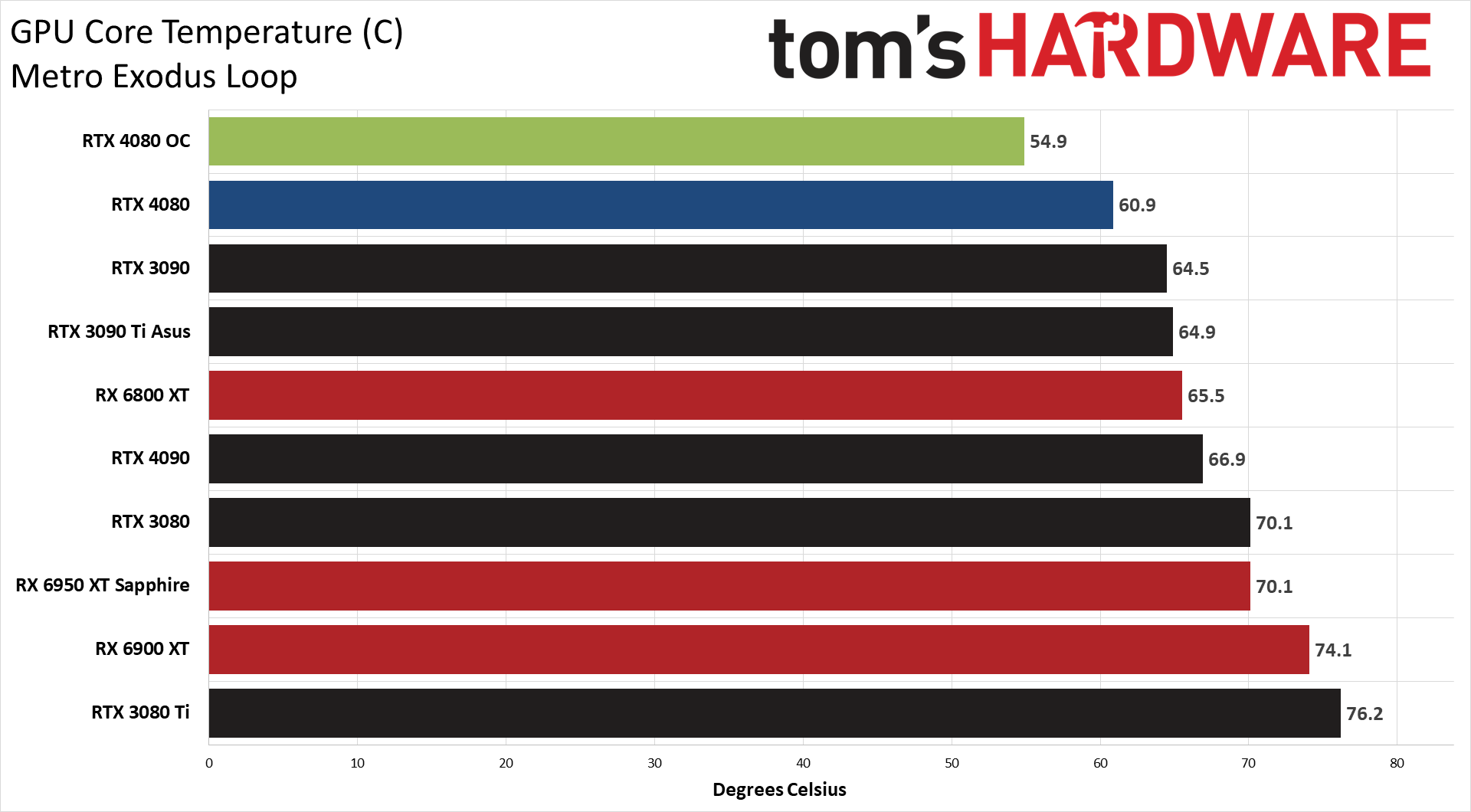

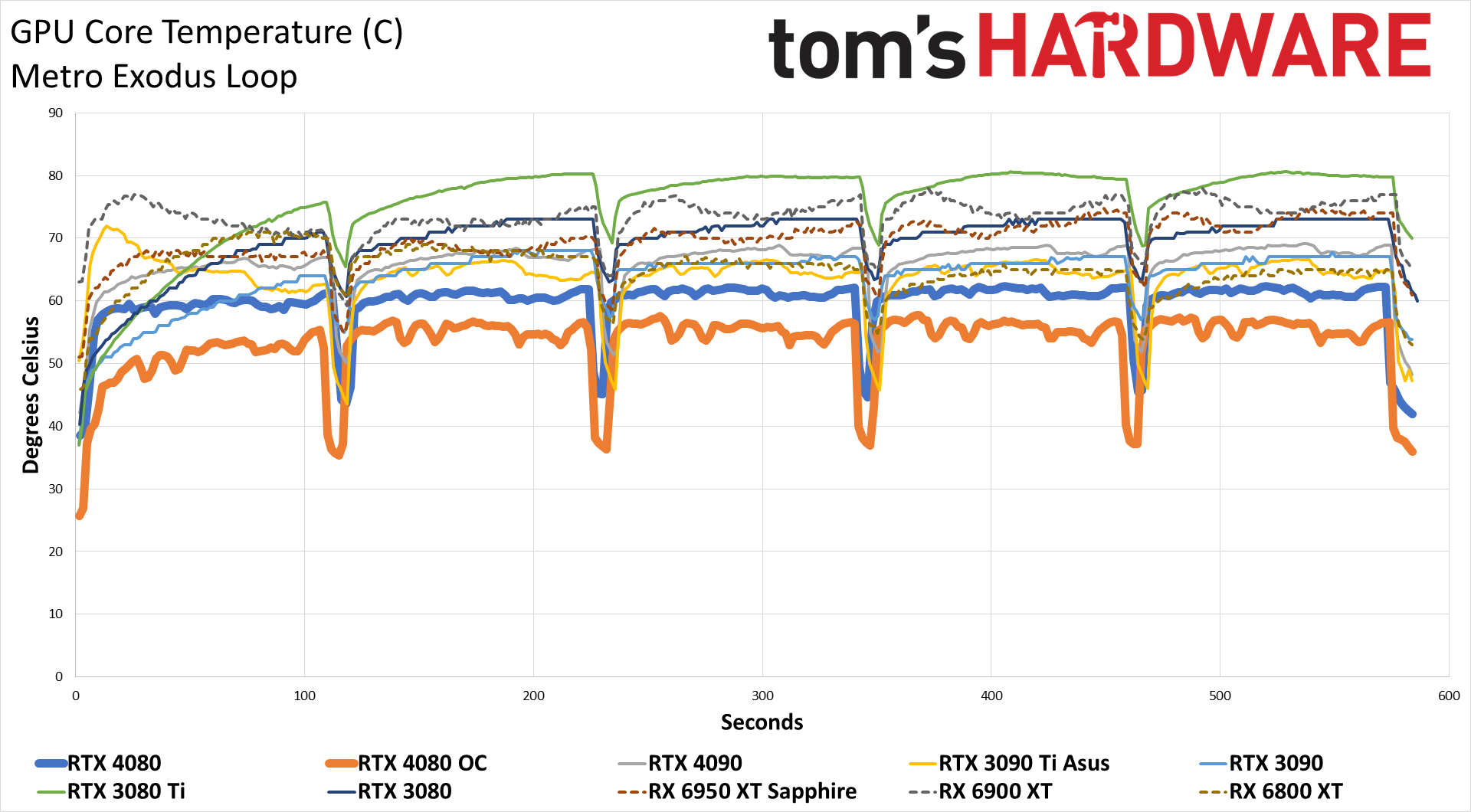

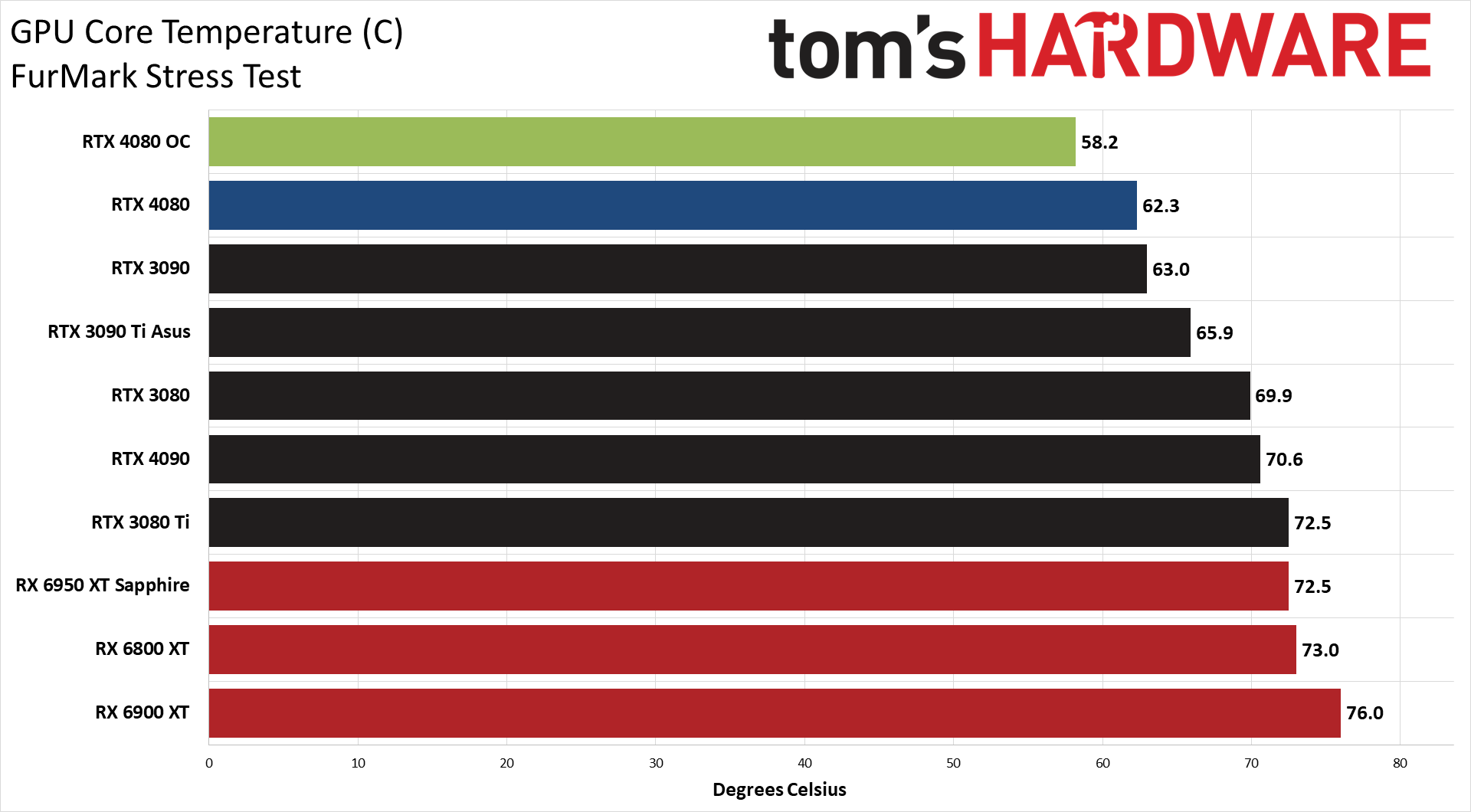

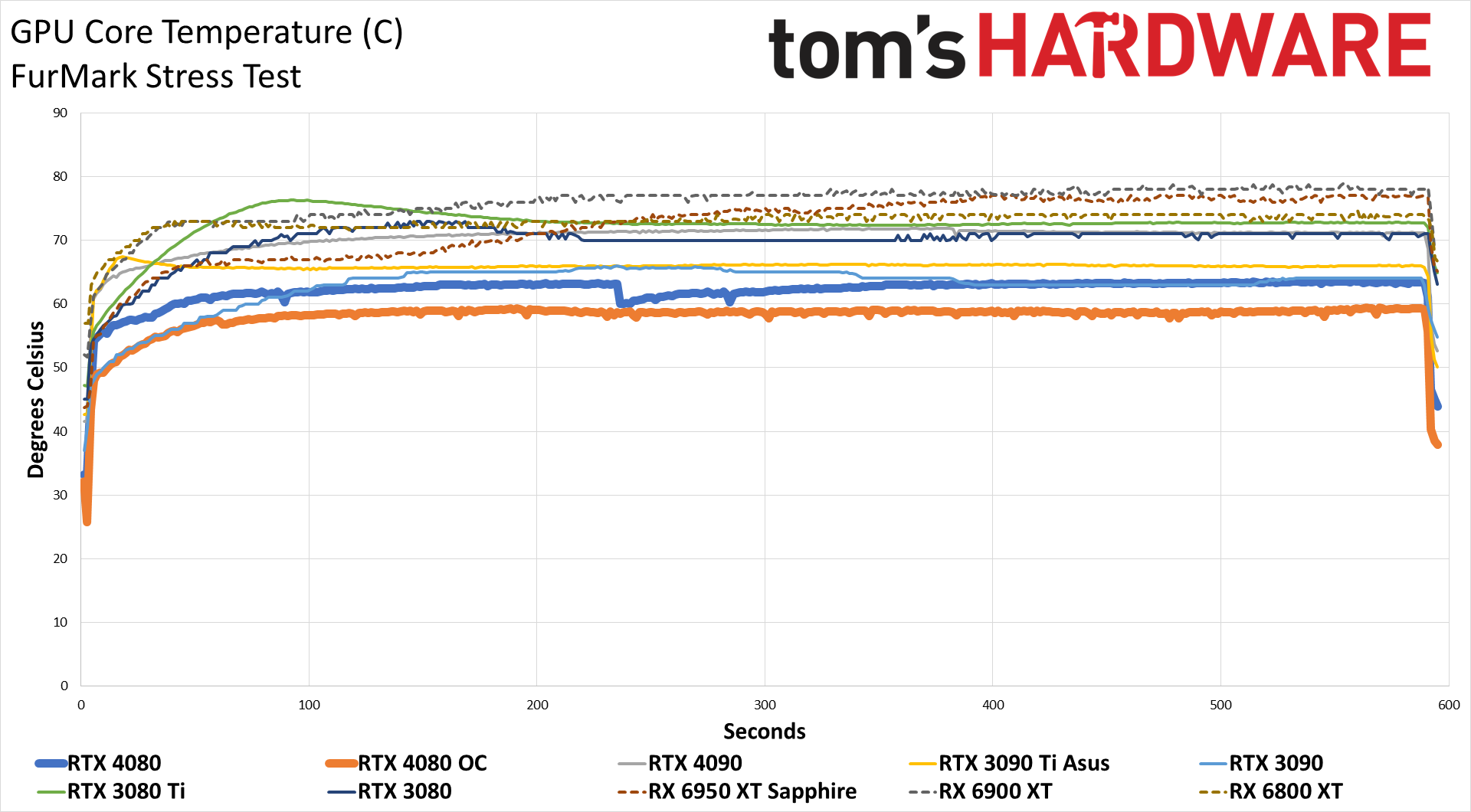

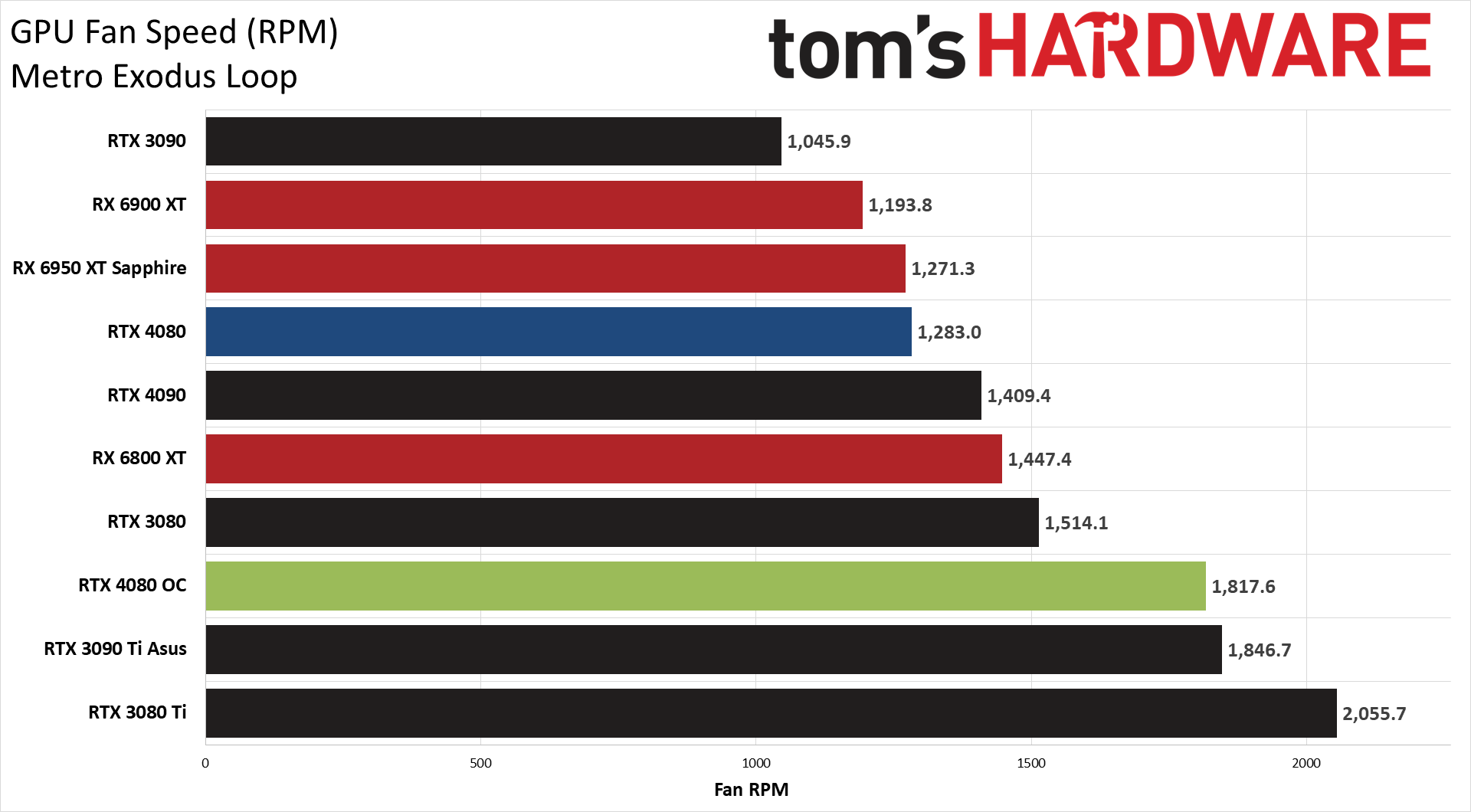

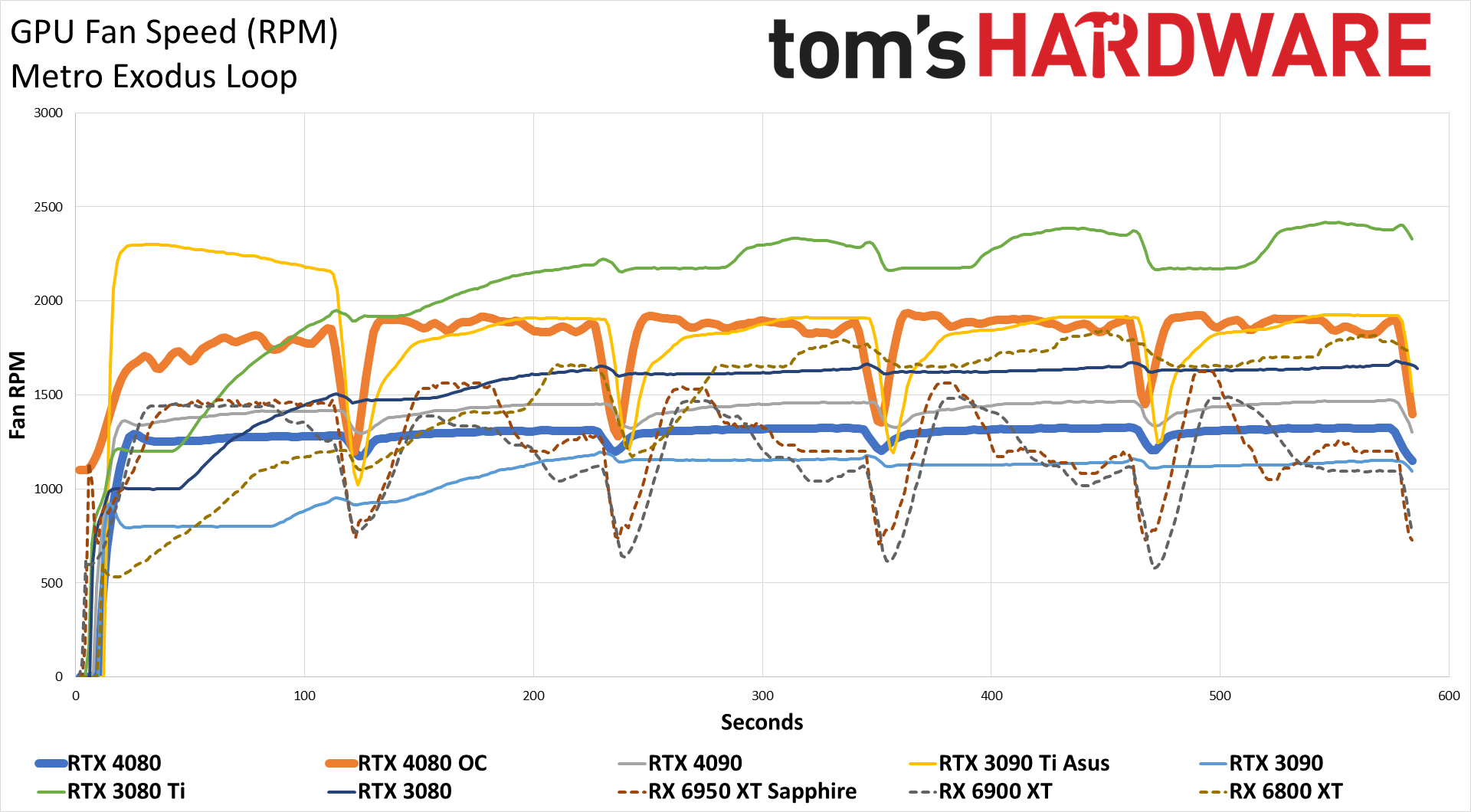

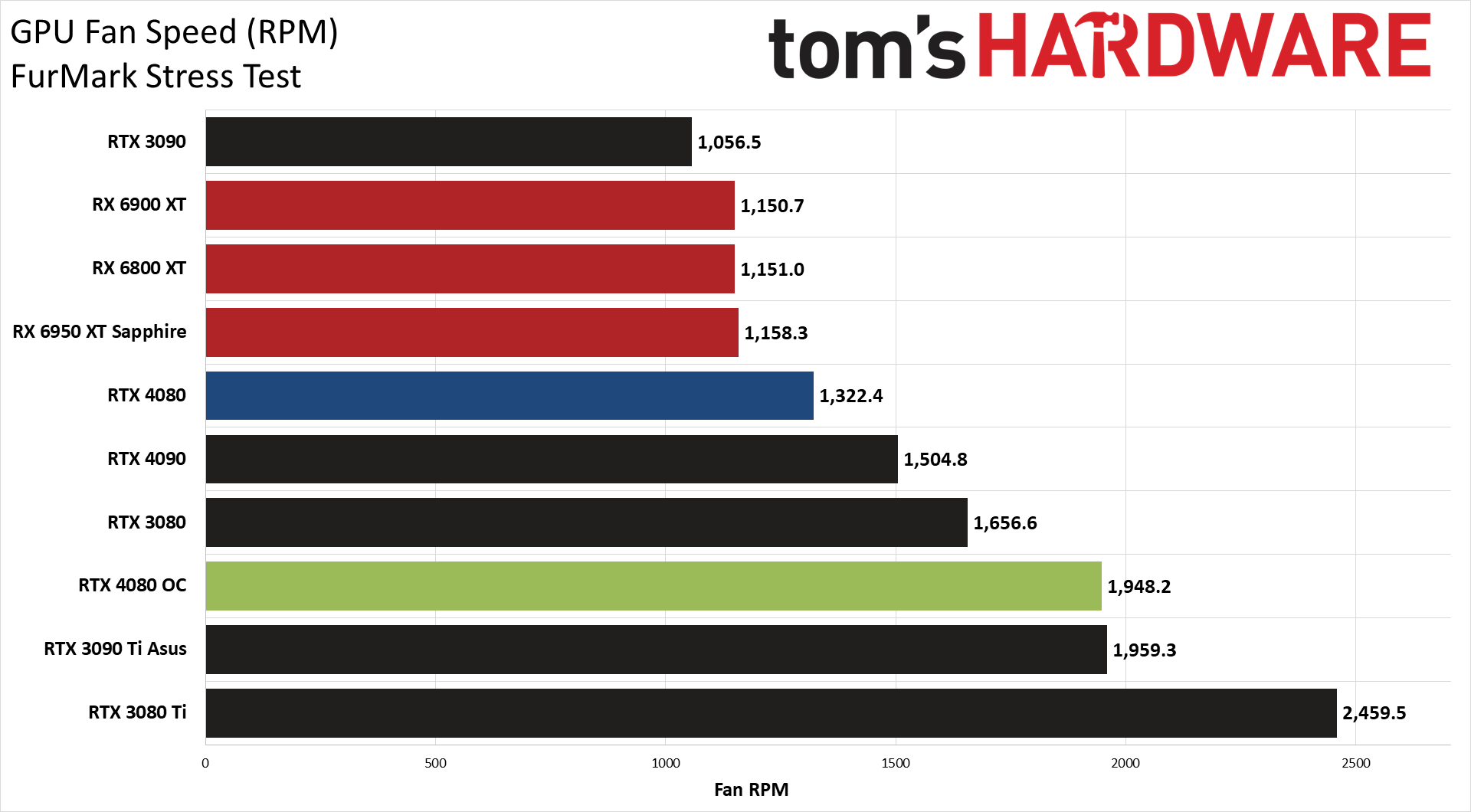

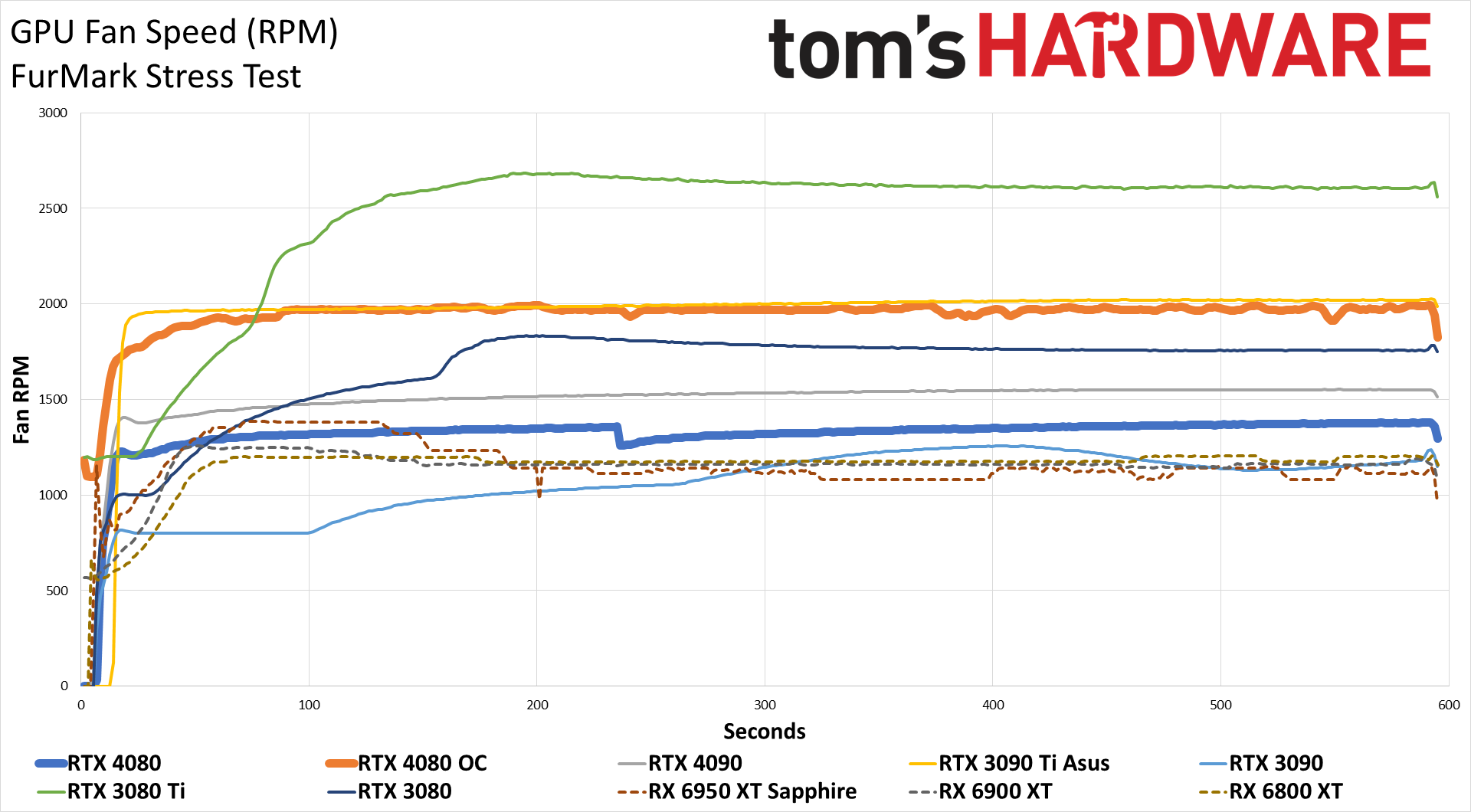

Temperatures, fan speeds, and noise levels all go hand-in-hand. Given the rather massive cooler, it's not too surprising that the RTX 4080 Founders Edition stayed in the low 60C range at stock. Our overclock includes higher fan speeds, resulting in even lower temperatures of 55C–58C. The fans only hit around 1300 RPM at stock, but our more aggressive fan curve pushed that closer to 2000 RPM when overclocked.

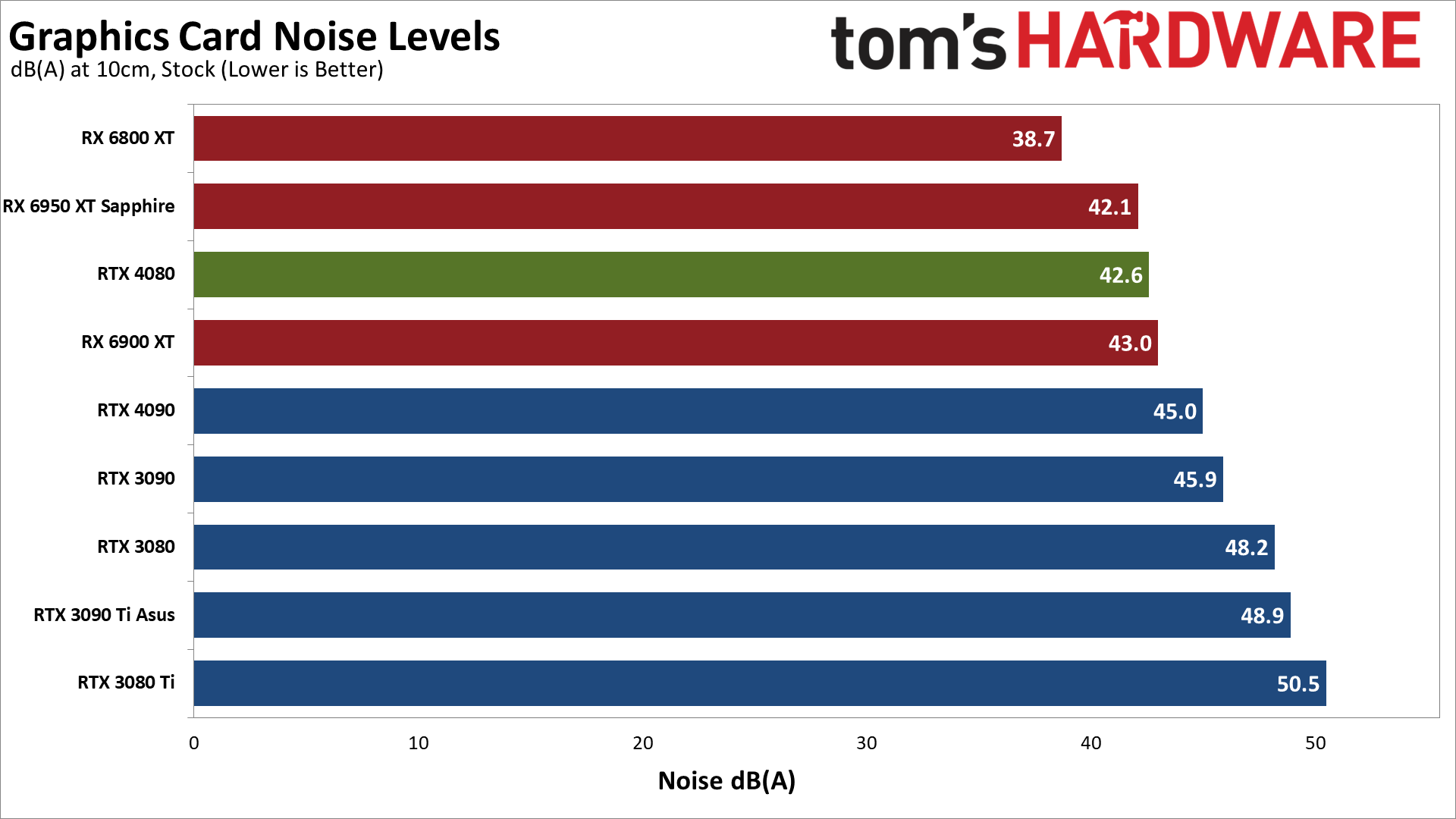

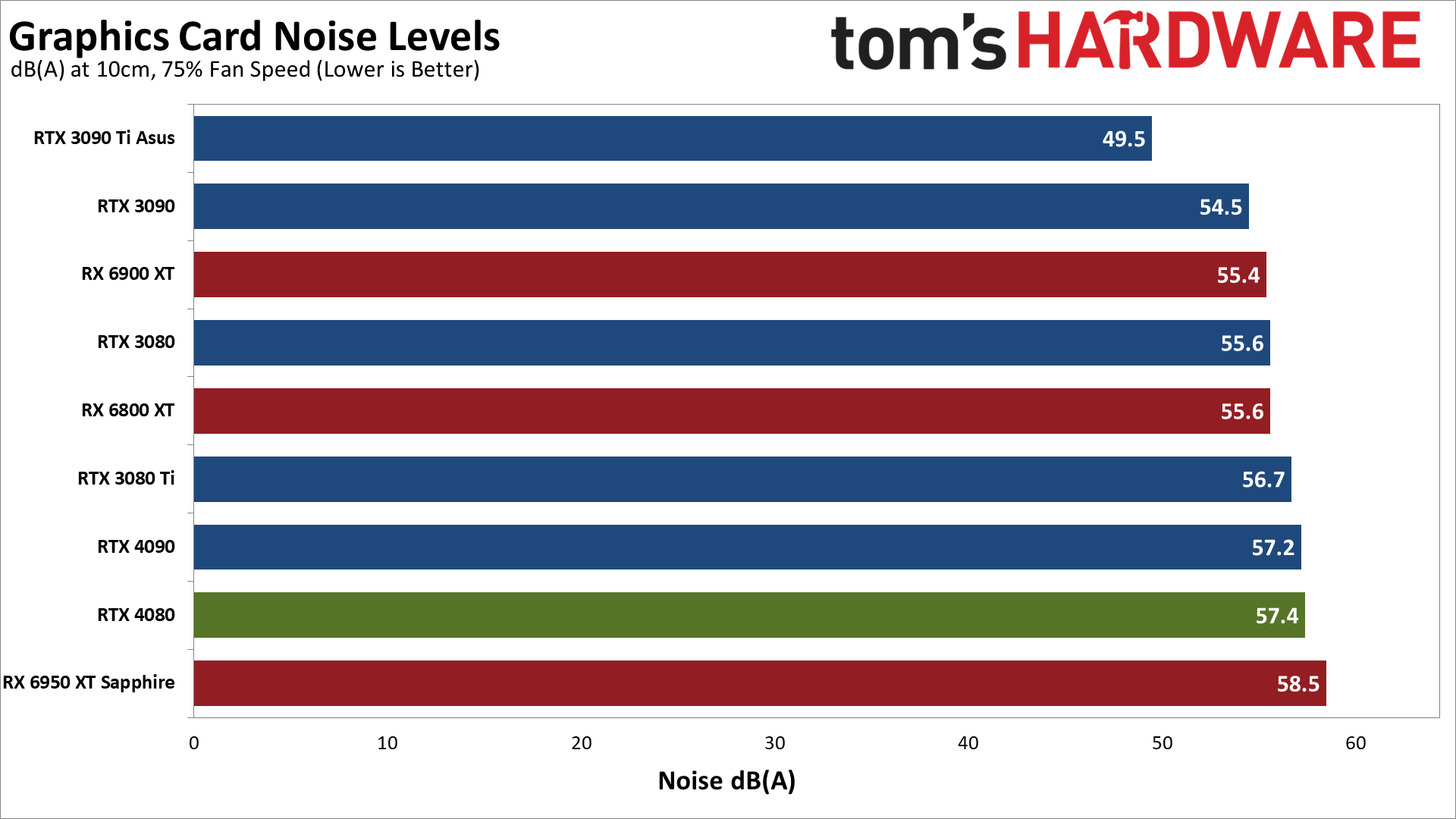

Finally, we have our noise levels, which we gather using an SPL (sound pressure level) meter placed 10cm from the card, with the mic aimed right at the GPU fan(s). This helps minimize the impact of other noise sources, like the fans on the CPU cooler. The noise floor of our test environment and equipment is around 32 dB(A).

After running a demanding game (Metro, at appropriate settings that tax the GPU), the RTX 4080 settled in at a fan speed of 38% and a noise level of 42.6 dB(A). While it's not shown in the chart, our overclocked settings resulted in a 70% fan speed and 55.5 dB(A). Finally, with a static fan speed of 75%, the 4080 Founders Edition generated 57.4 dB(A) of noise.
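Because decibels are logarithmic, a reading taken close to the noise floor includes a small contribution from the room itself. The sketch below is not part of our test procedure. It only illustrates, under the assumption that the card and the background are independent sources whose energies add, how much of each reading above could be attributed to the card alone. The function name `subtract_noise_floor` is made up for this example.

```python
import math

def subtract_noise_floor(measured_db: float, floor_db: float) -> float:
    """Estimate the source-only level by removing background energy.

    The subtraction happens on linear power ratios, not on the dB values.
    Assumes the source and the background are uncorrelated.
    """
    if measured_db <= floor_db:
        raise ValueError("reading must exceed the noise floor")
    measured_power = 10 ** (measured_db / 10)
    floor_power = 10 ** (floor_db / 10)
    return 10 * math.log10(measured_power - floor_power)

NOISE_FLOOR_DBA = 32.0  # noise floor quoted in the text

# Readings quoted in the text, in dB(A).
readings = {
    "stock (38% fan)": 42.6,
    "overclocked (70% fan)": 55.5,
    "static 75% fan": 57.4,
}

for label, level in readings.items():
    card_only = subtract_noise_floor(level, NOISE_FLOOR_DBA)
    print(f"{label}: {level} dB(A) measured, about {card_only:.1f} dB(A) card-only")
```

With readings 10 dB or more above the floor, the correction is well under half a decibel, which is why the close-mic placement makes the background largely irrelevant.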

RTX 4080 Additional Power, Clock, and Temperature Testing

| Game | Setting | Avg FPS | Avg Clock (MHz) | Avg Power (W) | Avg Temp (°C) | Avg Utilization |
|---|---|---|---|---|---|---|
| 13-Game Geomean | 1080p 'Ultra' | 132.2 | 2795.9 | 221.4 | 53.5 | 79.5% |
| 13-Game Geomean | 1440p 'Ultra' | 104.9 | 2786.9 | 252.8 | 56.3 | 87.2% |
| 13-Game Geomean | 4K 'Ultra' | 62.7 | 2764.1 | 289 | 59.9 | 97.0% |
| 6-Game DXR Geomean | 1080p 'Ultra' | 131.5 | 2789.5 | 265.6 | 58.4 | 88.6% |
| 6-Game DXR Geomean | 1440p 'Ultra' | 91.2 | 2775.9 | 289.8 | 59.8 | 93.1% |
| 6-Game DXR Geomean | 4K 'Ultra' | 47.1 | 2744 | 300.2 | 61.2 | 95.6% |
| Borderlands 3 | 1080p Badass | 99.4 | 2789.8 | 280.8 | 60.7 | 99.0% |
| Borderlands 3 | 1440p Badass | 59.6 | 2782.2 | 295.9 | 62.3 | 99.0% |
| Borderlands 3 | 4K Badass | 27.3 | 2766.1 | 300.5 | 62.6 | 99.0% |
| Bright Memory Infinite | 1080p Very High | 217.4 | 2790 | 275.2 | 60.3 | 92.8% |
| Bright Memory Infinite | 1440p Very High | 165.7 | 2781.4 | 304.4 | 62.5 | 98.6% |
| Bright Memory Infinite | 4K Very High | 89.1 | 2761.5 | 308.8 | 63.2 | 99.0% |
| Control | 1080p High | 152.1 | 2805 | 284.6 | 55.3 | 99.0% |
| Control | 1440p High | 93.7 | 2787.6 | 301.9 | 59.1 | 99.0% |
| Control | 4K High | 45.6 | 2778.7 | 306.8 | 59 | 99.0% |
| Cyberpunk 2077 | 1080p RT-Ultra | 98.5 | 2790 | 277.4 | 58.4 | 97.9% |
| Cyberpunk 2077 | 1440p RT-Ultra | 62.8 | 2777.2 | 296 | 55.5 | 98.3% |
| Cyberpunk 2077 | 4K RT-Ultra | 30.3 | 2691.2 | 291.9 | 58.1 | 99.0% |
| Far Cry 6 | 1080p Ultra | 152.6 | 2805.1 | 141 | 42 | 61.4% |
| Far Cry 6 | 1440p Ultra | 150.5 | 2805 | 193.4 | 46.5 | 78.5% |
| Far Cry 6 | 4K Ultra | 109 | 2803.9 | 258.4 | 50.6 | 98.8% |
| Flight Simulator | 1080p Ultra | 77.9 | 2805 | 188.6 | 50 | 56.0% |
| Flight Simulator | 1440p Ultra | 77.5 | 2805 | 191 | 50.8 | 56.3% |
| Flight Simulator | 4K Ultra | 76.1 | 2739.1 | 305.8 | 62.8 | 97.8% |
| Fortnite | 1080p Epic | 119.2 | 2790 | 256.4 | 60.5 | 90.8% |
| Fortnite | 1440p Epic | 77.7 | 2784.5 | 283.8 | 62.5 | 94.5% |
| Fortnite | 4K Epic | 38.2 | 2747.1 | 294.9 | 63.2 | 96.3% |
| Forza Horizon 5 | 1080p Extreme | 159.8 | 2805 | 152.9 | 45.3 | 79.7% |
| Forza Horizon 5 | 1440p Extreme | 151.2 | 2805 | 179.1 | 49.4 | 84.0% |
| Forza Horizon 5 | 4K Extreme | 126.5 | 2801.9 | 227.9 | 55.7 | 95.5% |
| Horizon Zero Dawn | 1080p Ultimate | 183.8 | 2804.8 | 174.9 | 48.4 | 65.9% |
| Horizon Zero Dawn | 1440p Ultimate | 176 | 2795.1 | 219.1 | 53.6 | 83.6% |
| Horizon Zero Dawn | 4K Ultimate | 114.4 | 2790.4 | 277.6 | 58.6 | 99.0% |
| Metro Exodus Enhanced | 1080p Extreme | 114.8 | 2771.9 | 290.7 | 61 | 94.5% |
| Metro Exodus Enhanced | 1440p Extreme | 91 | 2735.2 | 304.8 | 62.1 | 98.0% |
| Metro Exodus Enhanced | 4K Extreme | 52 | 2702 | 307.9 | 63.1 | 99.0% |
| Minecraft | 1080p RT 24-Blocks | 116.2 | 2790 | 216.7 | 55.1 | 62.7% |
| Minecraft | 1440p RT 24-Blocks | 83.2 | 2790 | 251.5 | 57.3 | 73.2% |
| Minecraft | 4K RT 24-Blocks | 44.4 | 2785.2 | 291.5 | 61.1 | 82.5% |
| Total War Warhammer 3 | 1080p Ultra | 160.9 | 2789.9 | 255.8 | 57.8 | 85.2% |
| Total War Warhammer 3 | 1440p Ultra | 139.6 | 2787.6 | 294.9 | 60.7 | 97.5% |
| Total War Warhammer 3 | 4K Ultra | 74.6 | 2780.4 | 302.5 | 61.8 | 98.9% |
| Watch Dogs Legion | 1080p Ultra | 129.8 | 2810 | 171 | 46.4 | 68.2% |
| Watch Dogs Legion | 1440p Ultra | 125 | 2795.1 | 229.9 | 53.4 | 86.1% |
| Watch Dogs Legion | 4K Ultra | 87.8 | 2789 | 294.4 | 60 | 99.0% |

Besides our Powenetics testing, we collect all of our frametime data using Nvidia's FrameView, which also logs clock speeds, temperatures, power, and GPU utilization (and plenty of other data as well). We've verified with our Powenetics equipment that Nvidia's GPUs report power draw that's within a few percent of the actual power use, so the above results give a wider perspective on how the card runs.
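For readers who want to work with the table directly, here is a minimal sketch of how a geometric mean and a simple FPS-per-watt figure can be derived from it. The helper names `geomean` and `fps_per_watt` are our own for this illustration. The FPS list is copied from the thirteen per-game 4K rows, and its geometric mean should land close to the 62.7 shown in the "13-Game Geomean" row.

```python
import math

def geomean(values):
    """Geometric mean, computed in log space so long lists don't overflow."""
    values = list(values)
    return math.exp(sum(math.log(v) for v in values) / len(values))

def fps_per_watt(fps, watts):
    """Simple efficiency figure: average frames per second per watt."""
    return fps / watts

# Avg FPS from the thirteen per-game 4K rows of the table, in table order.
fps_4k = [27.3, 89.1, 45.6, 30.3, 109, 76.1, 38.2,
          126.5, 114.4, 52, 44.4, 74.6, 87.8]

# (Avg FPS, Avg Power in watts) from the three "13-Game Geomean" rows.
summary = {
    "1080p": (132.2, 221.4),
    "1440p": (104.9, 252.8),
    "4K": (62.7, 289.0),
}

print(f"4K geomean FPS: {geomean(fps_4k):.1f}")
for resolution, (fps, watts) in summary.items():
    print(f"{resolution}: {fps_per_watt(fps, watts):.3f} FPS/W")
```

A geometric mean is used rather than an arithmetic one so that a single very high frame rate (Forza Horizon 5, for instance) doesn't dominate the overall figure.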

As we've mentioned already, Nvidia's RTX 4090 and 4080 tend to come in below their maximum rated TBP in a lot of gaming workloads. That's especially true of our 1080p results, where the average power use for the card was just 221W. With a faster CPU like a Core i9-13900K or Ryzen 9 7950X, we'd likely see higher power use from the 4080, though even an overclocked 13900K probably wouldn't push the card to its power limit at 1080p.

In terms of efficiency, you can see the overall power, performance, etc., for thirteen of the games in our test suite. Overclocking increased performance at 4K by 7%, while power use went up by 7.7%. Normally, overclocking can really drop efficiency, but at least with the 4080 Founders Edition the card seems to handle a modest OC fairly well.
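To put those two percentages together: the change in performance per watt is the ratio of the two gains, not their difference. A quick sketch of the arithmetic follows, using only the 7% and 7.7% figures quoted above. The function name `efficiency_change` is made up for this example.

```python
def efficiency_change(perf_gain_pct: float, power_gain_pct: float) -> float:
    """Relative change in performance per watt, as a percentage.

    Both inputs are percentage increases over the stock configuration.
    """
    ratio = (1 + perf_gain_pct / 100) / (1 + power_gain_pct / 100)
    return (ratio - 1) * 100

# 4K overclocking figures quoted in the text: +7% performance, +7.7% power.
change = efficiency_change(7.0, 7.7)
print(f"Performance per watt changes by {change:+.2f}% with the overclock")
```

The result is a drop of well under one percent, which is what "handles a modest OC fairly well" means in practice.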


Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.

- btmedic04: At $1200, this card should be DOA on the market. However people will still buy them all up because of mind share. Realistically, this should be an $800-$900 gpu.

- Wisecracker: "Nvidia GPUs also tend to be heavily favored by professional users"

  mmmmMehhhhh . . . .Vegas GPU compute on line one . . .

  AMD's new Radeon Pro driver makes Radeon Pro W6800 faster than Nvidia's RTX A5000.

  My CAD does OpenGL, too

  AMD Rearchitects OpenGL Driver for a 72% Performance Uplift

- saunupe1911: People flocked to the 4090 as it's a monster but it would be entirely stupid to grab this card while the high end 3000s series exist along with the 4090.

  A 3080 and up will run everything at 2K...and with high refresh rates with DLSS.

  Go big or go home and let this GPU sit! Force Nvidia's hand to lower prices.

  You can't have 2 halo products when there's no demand and the previous gen still exist.

- Math Geek: they'll cry foul, grumble about the price and even blame retailers for the high price. but only while sitting in line to buy one.......

  man how i wish folks could just get a grip on themselves and let these just sit on shelves for a couple months while Nvidia gets a much needed reality check. but alas they'll sell out in minutes just like always sigh

- chalabam: Unfortunately the new batch of games is so politized that it makes buying a GPU a bad investment.

  Even when they have the best graphics ever, the gameplay is not worth it.

- gburke: I am one who likes to have the best to push games to the limit. And I'm usually pretty good about staying on top of current hardware. I can definitely afford it. I "clamored" to get a 3080 at launch and was lucky enough to get one at market value beating out the dreadful scalpers. But makes no sense this time to upgrade over lest gen just for gaming. So I am sitting this one out. I would be curious to know how many others out there like me who doesn't see the real benefit to this new generation hardware for gaming. Honestly, 60fps at 4K on almost all my games is great for me. Not really interested in going above that.

- PlaneInTheSky: Seeing how much wattage these GPU use in a loop is interesting, but it still tells me nothing regarding real-life cost.

  Cloud gaming suddenly looks more attractive when I realize I won't need to pay to run a GPU at 300 watt.

  The running cost of GPU should now be part of reviews imo.

  Considering how much people in Europe, Japan, and South East Asia are now paying for electricity and how much these new GPU consume.

  Household appliances with similar power usage, usually have their running cost discussed in reviews.

- BaRoMeTrIc: Math Geek said: "they'll cry foul, grumble about the price and even blame retailers for the high price. but only while sitting in line to buy one....... man how i wish folks could just get a grip on themselves and let these just sit on shelves for a couple months while Nvidia gets a much needed reality check. but alas they'll sell out in minutes just like always sigh"

  High end RTX cards have become status symbols amongst gamers.

- sizzling: I’d like to see a performance per £/€/$ comparison between generations. Normally you would expect this to improve from one generation to the next but I am not seeing it. I bought my mid range 3080 at launch for £754. Seeing these are going to cost £1100-£1200 the performance per £/€/$ seems about flat on last generation. Yeah great, I can get 40-60% more performance for 50% more cost. Fairly disappointing for a new generation card. Look back at how the 3070 & 3080 smashed the performance per £/€/$ compared to a 2080Ti.