
Nvidia RTX 4080 Founders Edition Overclocking

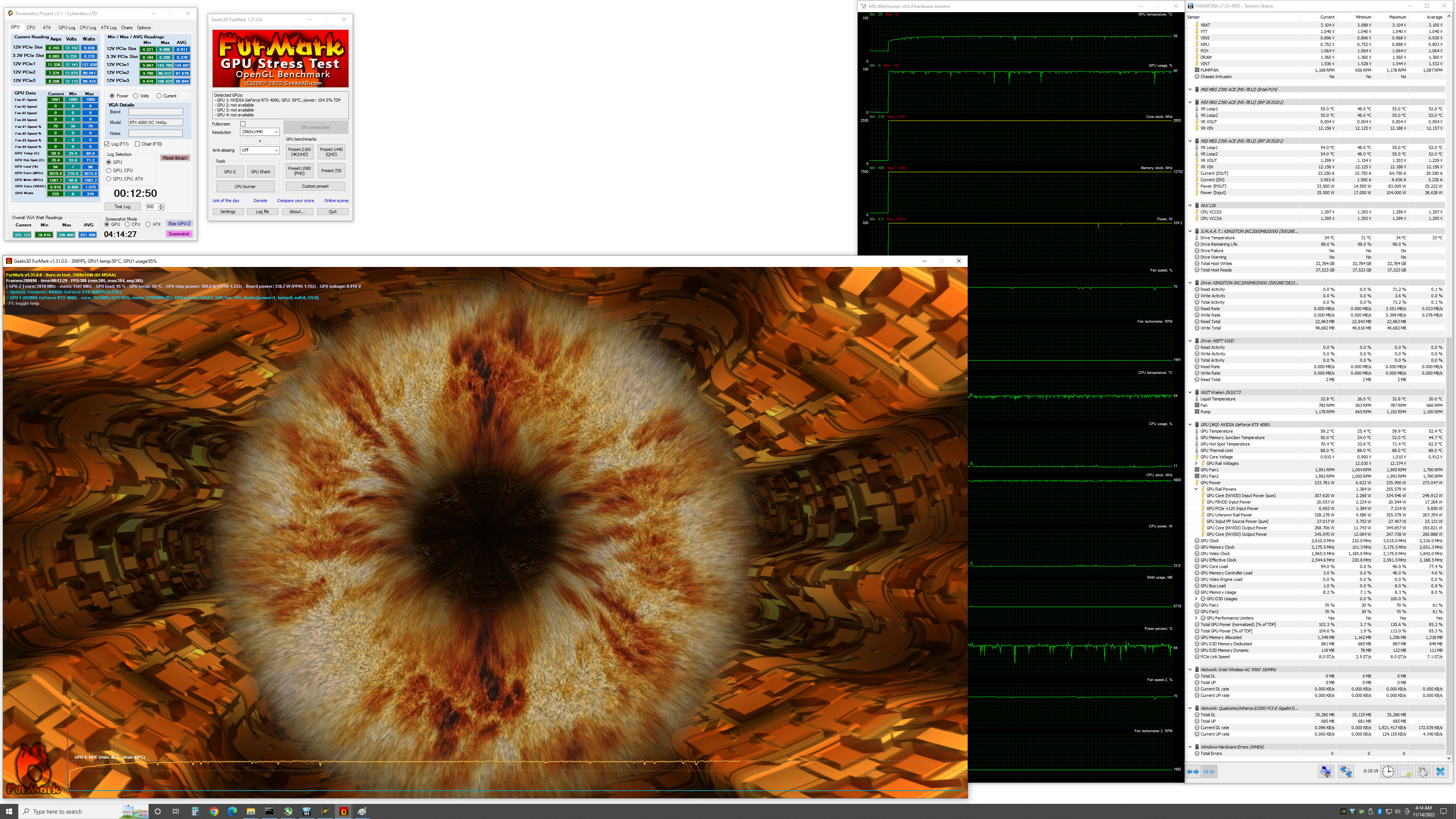

As always, overclocking involves trial and error, and results are not guaranteed. We attempt to dial in stable settings while running some stress tests, but what at first appears to work just fine may crash once we start running through our gaming suite.

We started by maxing out the power limit, which in this case was only 110%. Higher clocks were able to complete some tests, but we eventually settled on a +200 MHz GPU core clock offset and a +1500 MHz memory clock offset (25.4 Gbps effective). We also set a custom fan curve that ramps from 30% at 30°C up to 100% at 80°C, though we never approached 80°C in testing.
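The numbers in the paragraph above can be sanity-checked with a little arithmetic. The sketch below is a minimal Python illustration, not the logic of any particular tuning utility, and it rests on assumptions the paragraph doesn't state: a 320 W stock board power, a 22.4 Gbps stock GDDR6X data rate, a 256-bit memory bus, and a memory offset that adds 2 Mbps of effective data rate per 1 MHz (the ratio implied by pairing +1500 MHz with 25.4 Gbps). It also assumes the fan curve interpolates linearly between its two points.

```python
# Back-of-the-envelope checks for the overclock settings described above.
# Assumed values (not stated in the article): 320 W stock board power,
# 22.4 Gbps stock GDDR6X per-pin data rate, and a 256-bit memory bus.
STOCK_POWER_W = 320.0
STOCK_MEM_GBPS = 22.4
BUS_WIDTH_BITS = 256


def power_limit_watts(percent: float, stock_w: float = STOCK_POWER_W) -> float:
    """Board power allowed at a given power-limit percentage."""
    return stock_w * percent / 100.0


def effective_mem_gbps(offset_mhz: float, stock_gbps: float = STOCK_MEM_GBPS) -> float:
    """Per-pin data rate after a memory clock offset.
    Assumption: each 1 MHz of offset adds 2 Mbps, the ratio implied by
    +1500 MHz -> 25.4 Gbps when starting from 22.4 Gbps."""
    return stock_gbps + 2.0 * offset_mhz / 1000.0


def peak_bandwidth_gbs(gbps: float, bus_bits: int = BUS_WIDTH_BITS) -> float:
    """Peak memory bandwidth in GB/s: per-pin rate times bus width, divided by 8 bits per byte."""
    return gbps * bus_bits / 8.0


def fan_speed_percent(temp_c: float) -> float:
    """Fan curve from 30% at 30C to 100% at 80C.
    Assumption: linear interpolation between the two points, clamped outside them."""
    t_lo, pct_lo, t_hi, pct_hi = 30.0, 30.0, 80.0, 100.0
    if temp_c <= t_lo:
        return pct_lo
    if temp_c >= t_hi:
        return pct_hi
    return pct_lo + (temp_c - t_lo) * (pct_hi - pct_lo) / (t_hi - t_lo)


if __name__ == "__main__":
    mem = effective_mem_gbps(1500)
    print(f"110% power limit: {power_limit_watts(110):.0f} W")
    print(f"+1500 MHz memory: {mem:.1f} Gbps, {peak_bandwidth_gbs(mem):.1f} GB/s peak")
    print(f"Fan speed at 60C: {fan_speed_percent(60):.0f}%")
```

Under these assumptions, the 110% limit works out to roughly 352 W, and 25.4 Gbps on a 256-bit bus gives about 813 GB/s of peak memory bandwidth.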

As with the RTX 4090, there's no way to increase the GPU voltage short of doing a voltage mod (not something we wanted to do), and that seems to be a limiting factor. We did get above 3 GHz in some of our initial testing, and with our final overclock, we saw clocks hovering between the high 2.9 GHz and low 3.0 GHz range. We'll include our overclocking results for 1440p and 4K in our charts.

Nvidia RTX 4080 Test Setup

We updated our GPU test PC and gaming suite in early 2022, and we continue to use the same hardware for now. Nvidia recommends using an AMD Ryzen 9 7950X or Intel Core i9-13900K to further reduce CPU bottlenecks… but I don't have one of those yet. (We're working on getting one for an updated GPU testbed.) We do enable XMP for a modest performance boost, and if you're actually going to go out and buy an RTX 4080 or 4090, it's at least worth considering a full PC upgrade.

Our CPU sits in an MSI Pro Z690-A DDR4 WiFi motherboard with DDR4-3600 memory, a nod to sensibility rather than outright maximum performance. We also upgraded to Windows 11 and are now running the latest 22H2 version, with the latest patches installed and with VBS and HVCI disabled, to ensure we get the most out of Alder Lake. You can see the rest of the hardware in the boxout.

We used Nvidia-provided 526.72 pre-release drivers for the RTX 4080. Most of the other cards, including the RTX 4090, were tested with earlier drivers, though we did retest a few games with the same 526.72 drivers where we saw performance oddities (e.g., the 4080 outperforming the 4090, which shouldn't happen).

Our gaming tests consist of a "standard" suite of eight games without ray tracing enabled (even if the game supports it) and a separate "ray tracing" suite of six games that all use multiple RT effects. We've tested the RTX 4080 at all of our normal settings, though the focus here will be squarely on 4K and 1440p performance.

We did test at 1080p, which might be slightly more meaningful on the 4080 than on the 4090, but CPU limits definitely come into play. We also tested the RTX 4080 with DLSS 2 Quality mode enabled at 1440p and 4K in the games that support it. Two of those games (Forza Horizon 5 and Flight Simulator) currently show no benefit and can even lose performance, because they're already hitting CPU limits. DLSS 3's Frame Generation partly gets around that, since the GPU generates the extra frames without additional CPU work.
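The CPU-limit explanation can be illustrated with a deliberately simplified frame-time model: each frame costs some CPU time and some GPU time, and the slower of the two sets the frame rate. The Python sketch below uses hypothetical numbers and hypothetical parameter names (`render_speedup`, `upscale_ms`). It treats DLSS 2 upscaling as shortening only the GPU's render time plus a fixed upscaling cost, and treats DLSS 3 Frame Generation as roughly doubling presented frames while ignoring its own overhead and latency. It is not how Nvidia's drivers actually schedule work.

```python
# Toy frame-time model (hypothetical numbers, not measured data).
# Assumption: CPU and GPU work overlap, so the slower stage sets the pace.


def rendered_fps(cpu_ms: float, gpu_ms: float) -> float:
    """Frames per second when the slower of CPU and GPU limits throughput."""
    return 1000.0 / max(cpu_ms, gpu_ms)


def dlss2_fps(cpu_ms: float, gpu_ms: float, render_speedup: float, upscale_ms: float) -> float:
    """Upscaling shortens GPU render time and adds a fixed upscale cost.
    CPU time per frame is unchanged, so a CPU-limited game gains nothing."""
    return rendered_fps(cpu_ms, gpu_ms / render_speedup + upscale_ms)


def dlss3_fps(cpu_ms: float, gpu_ms: float, render_speedup: float, upscale_ms: float) -> float:
    """Frame generation inserts roughly one generated frame per rendered frame.
    Simplification: its GPU cost and latency impact are ignored here."""
    return 2.0 * dlss2_fps(cpu_ms, gpu_ms, render_speedup, upscale_ms)


if __name__ == "__main__":
    # CPU-limited case: 10 ms of CPU work per frame caps the game at 100 fps.
    cpu_limited = (10.0, 8.0)
    # GPU-limited case: 20 ms of GPU work per frame at native resolution.
    gpu_limited = (5.0, 20.0)
    for label, (cpu, gpu) in (("CPU-limited", cpu_limited), ("GPU-limited", gpu_limited)):
        native = rendered_fps(cpu, gpu)
        dlss2 = dlss2_fps(cpu, gpu, render_speedup=1.5, upscale_ms=1.0)
        dlss3 = dlss3_fps(cpu, gpu, render_speedup=1.5, upscale_ms=1.0)
        print(f"{label}: native {native:.1f} fps, DLSS 2 {dlss2:.1f} fps, DLSS 3 {dlss3:.1f} fps")
```

With these made-up inputs, the CPU-limited case stays at 100 fps with DLSS 2 because the CPU's 10 ms per frame is still the bottleneck, while frame generation doubles the presented rate. The model doesn't try to capture the slight performance losses mentioned above.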

As we did in the RTX 4090 Founders Edition review, we also tested DLSS 3 and total system latency in several games. The DLSS 3 tests are limited to RTX 40-series GPUs, the only cards that support Frame Generation, and we'll eventually need to retest once final builds become available.

Finally, besides the gaming tests, we have a collection of professional and content creation benchmarks that can leverage the GPU. We're using SPECviewperf 2020 v3, Blender 3.3.0, OTOY OctaneBenchmark, and V-Ray Benchmark.


Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.


btmedic04: At $1200, this card should be DOA on the market. However, people will still buy them all up because of mind share. Realistically, this should be an $800-$900 GPU.

Wisecracker: "Nvidia GPUs also tend to be heavily favored by professional users"

mmmmMehhhhh . . . . Vegas GPU compute on line one . . .

AMD's new Radeon Pro driver makes Radeon Pro W6800 faster than Nvidia's RTX A5000.

My CAD does OpenGL, too

AMD Rearchitects OpenGL Driver for a 72% Performance Uplift

saunupe1911: People flocked to the 4090 because it's a monster, but it would be entirely stupid to grab this card while the high-end 3000 series exists alongside the 4090.

A 3080 and up will run everything at 2K, and at high refresh rates with DLSS.

Go big or go home and let this GPU sit! Force Nvidia's hand to lower prices.

You can't have 2 halo products when there's no demand and the previous gen still exists.

Math Geek: They'll cry foul, grumble about the price, and even blame retailers for the high price, but only while sitting in line to buy one.......

Man, how I wish folks could just get a grip on themselves and let these sit on shelves for a couple of months while Nvidia gets a much-needed reality check. But alas, they'll sell out in minutes just like always. Sigh.

chalabam: Unfortunately, the new batch of games is so politicized that it makes buying a GPU a bad investment.

Even when they have the best graphics ever, the gameplay is not worth it.

gburke: I am one who likes to have the best to push games to the limit. And I'm usually pretty good about staying on top of current hardware. I can definitely afford it. I "clamored" to get a 3080 at launch and was lucky enough to get one at market value, beating out the dreadful scalpers. But it makes no sense this time to upgrade over last gen just for gaming, so I am sitting this one out. I would be curious to know how many others out there, like me, don't see the real benefit of this new generation of hardware for gaming. Honestly, 60fps at 4K in almost all my games is great for me. Not really interested in going above that.

PlaneInTheSky: Seeing how much wattage these GPUs use in a loop is interesting, but it still tells me nothing regarding real-life cost.

Cloud gaming suddenly looks more attractive when I realize I won't need to pay to run a GPU at 300 watts.

The running cost of GPUs should now be part of reviews, imo, considering how much people in Europe, Japan, and Southeast Asia are now paying for electricity and how much these new GPUs consume.

Household appliances with similar power usage usually have their running cost discussed in reviews.

BaRoMeTrIc (replying to Math Geek): High-end RTX cards have become status symbols amongst gamers.

sizzling: I'd like to see a performance per £/€/$ comparison between generations. Normally you would expect this to improve from one generation to the next, but I am not seeing it. I bought my mid-range 3080 at launch for £754. Seeing that these are going to cost £1100-£1200, the performance per £/€/$ seems about flat compared with last generation. Yeah, great, I can get 40-60% more performance for 50% more cost. Fairly disappointing for a new-generation card. Look back at how the 3070 and 3080 smashed the performance per £/€/$ of the 2080 Ti.