
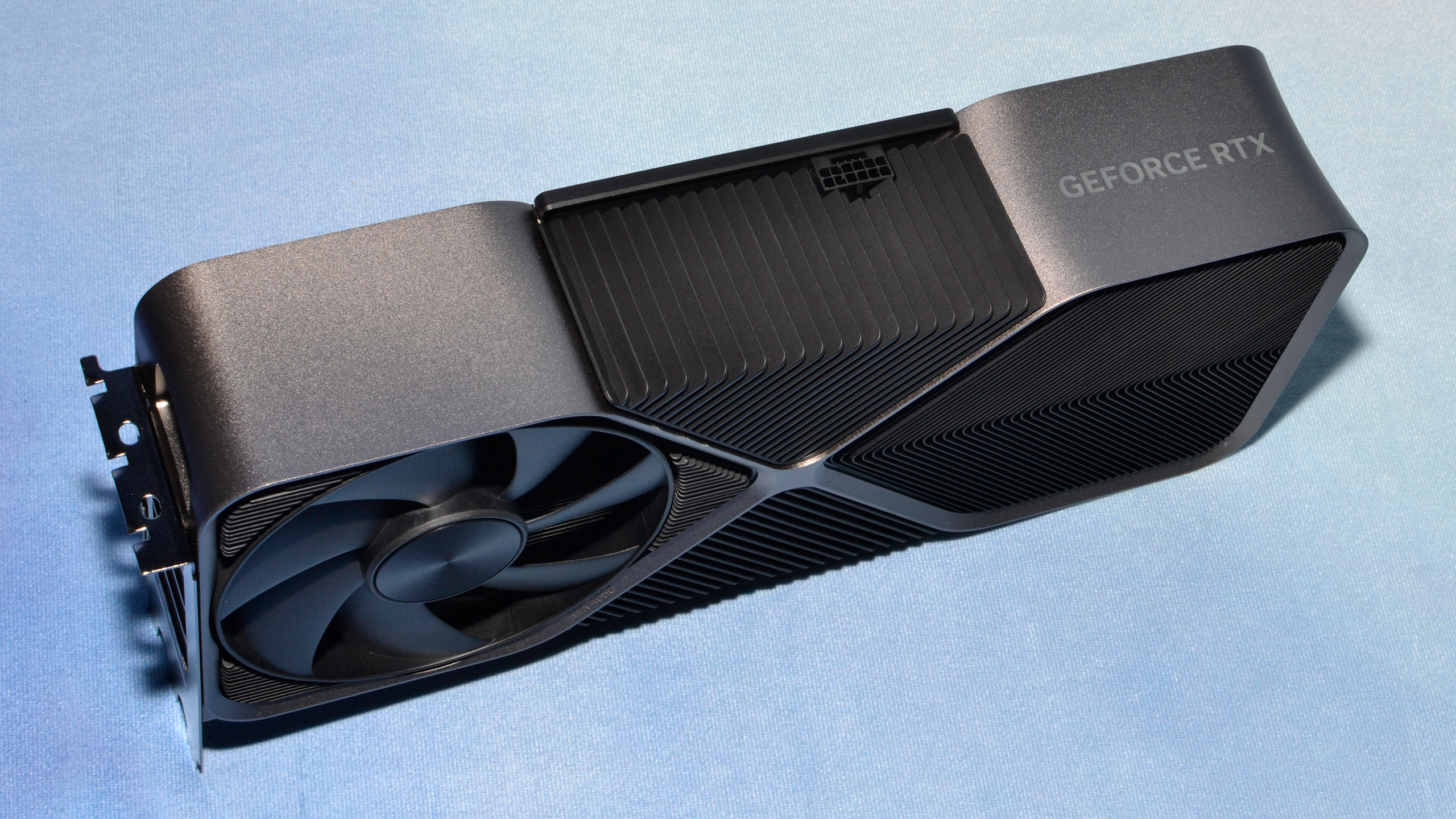

Nvidia RTX 4080 Founders Edition Design

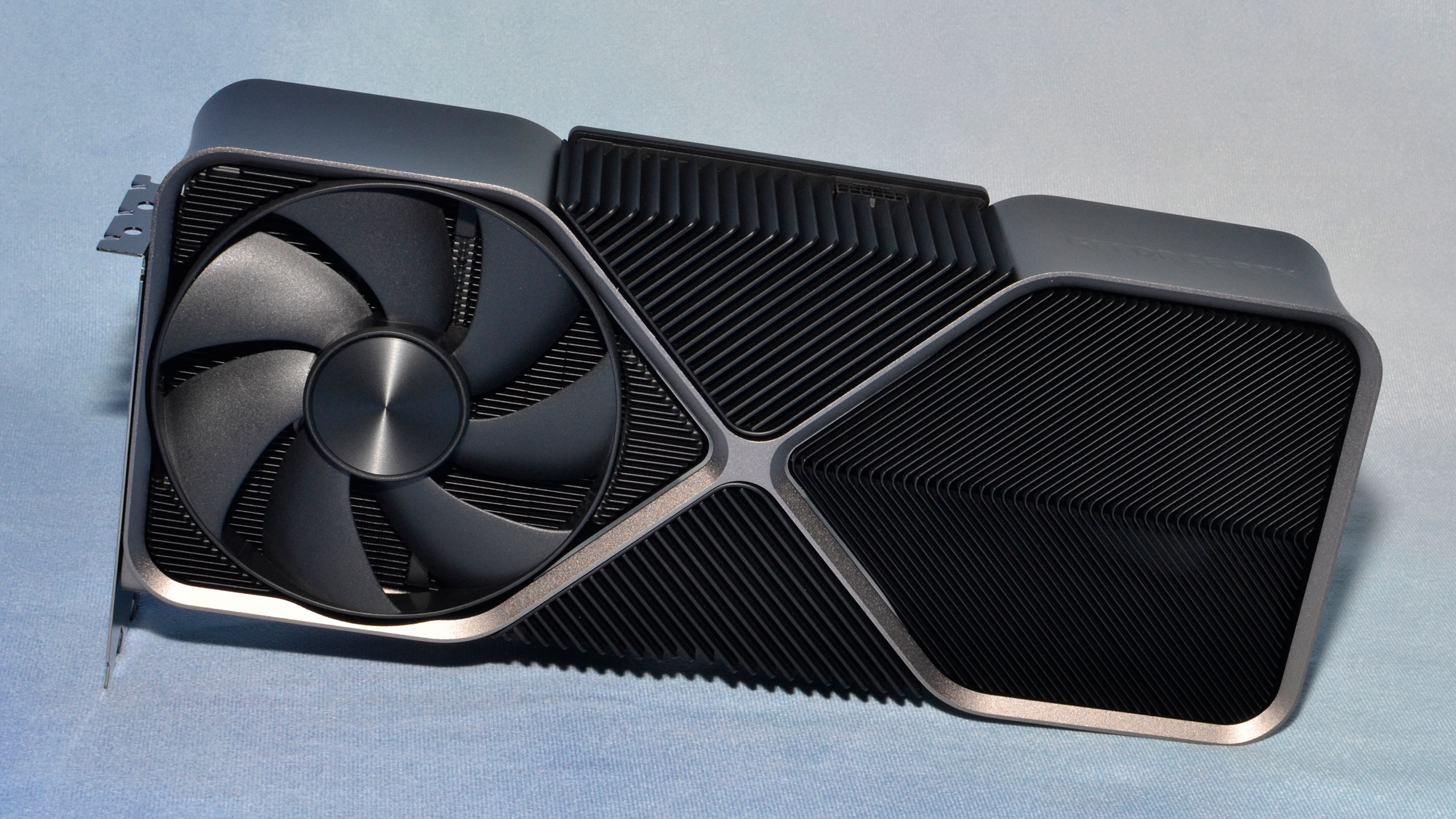

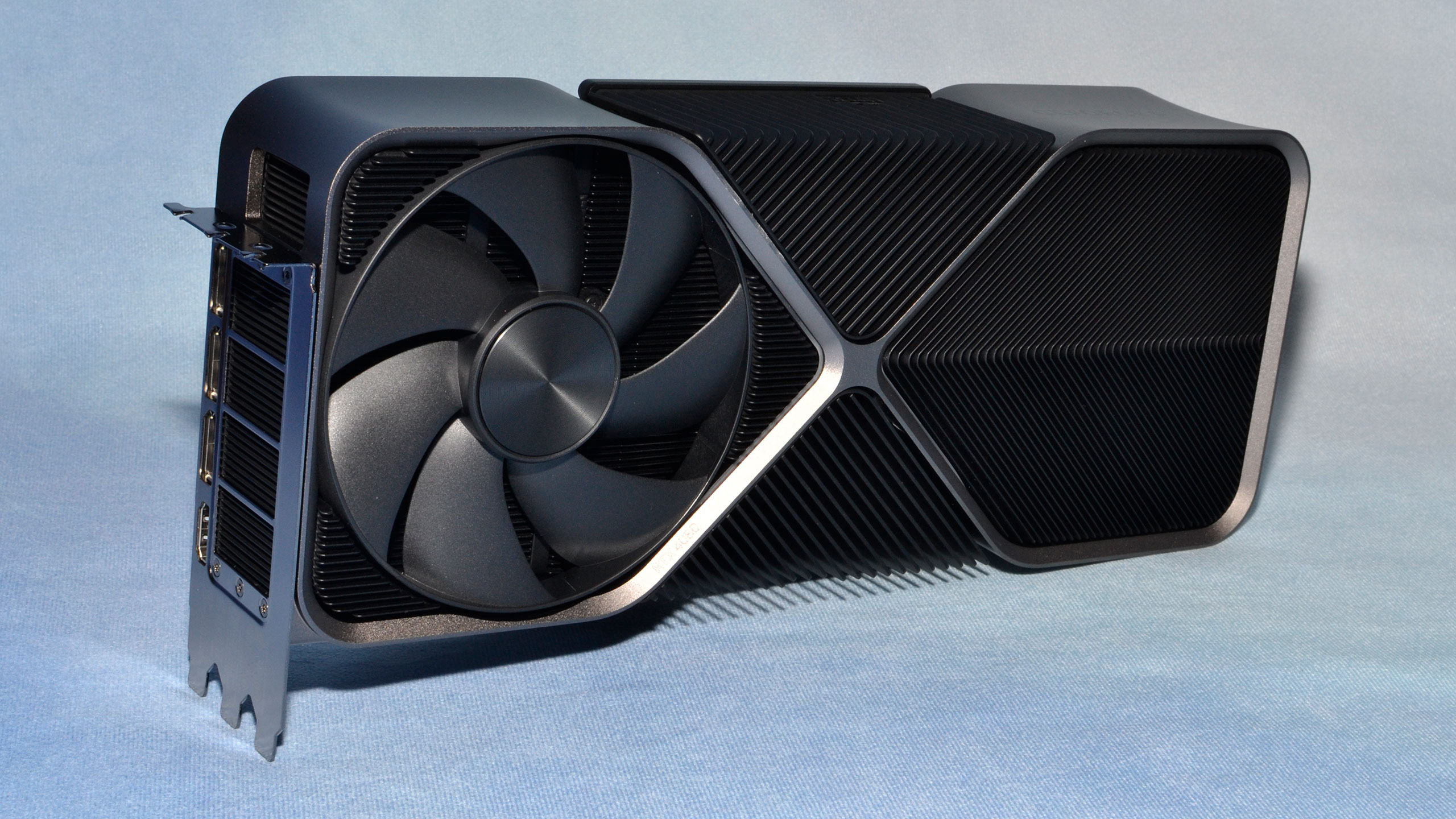

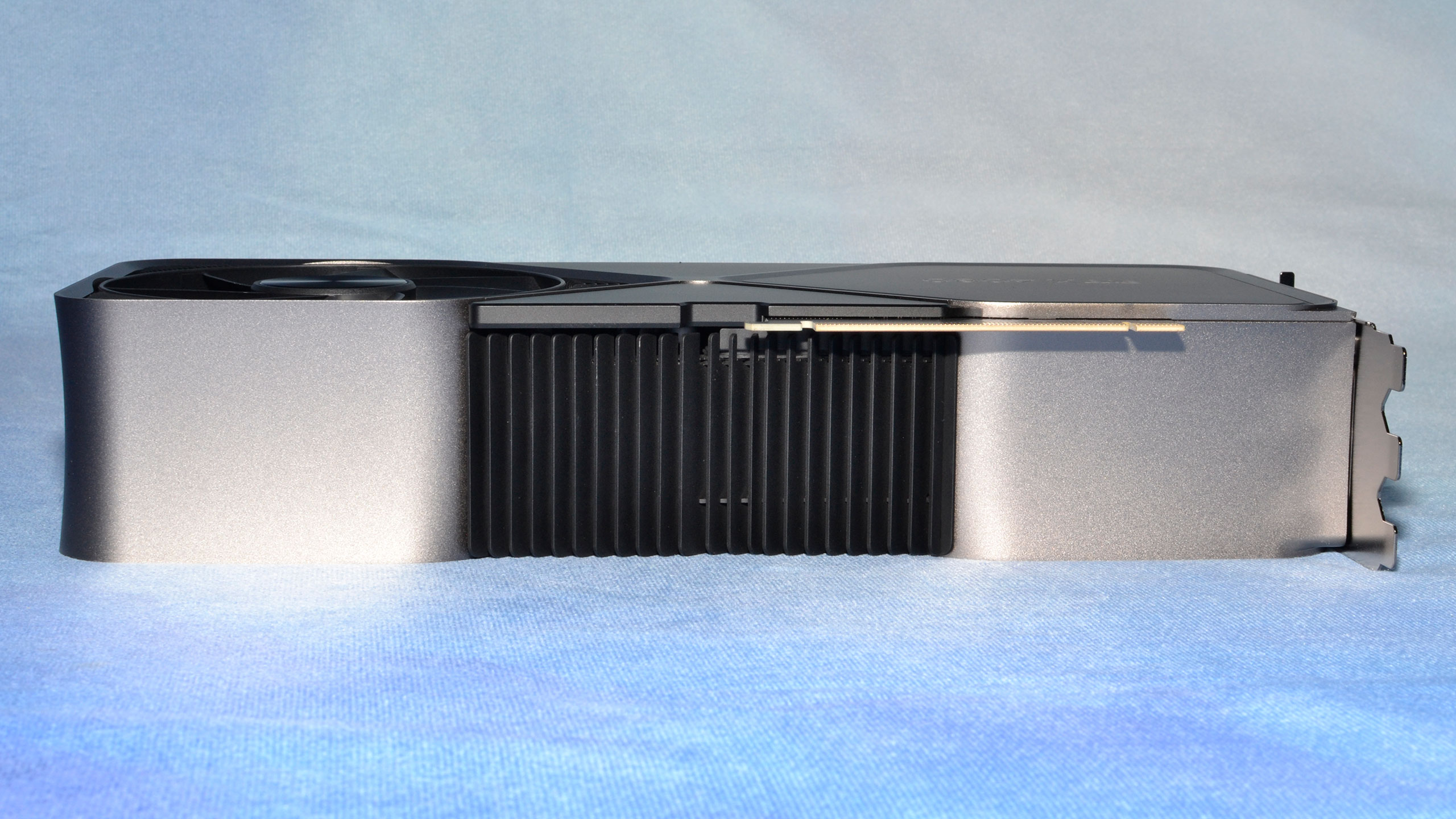

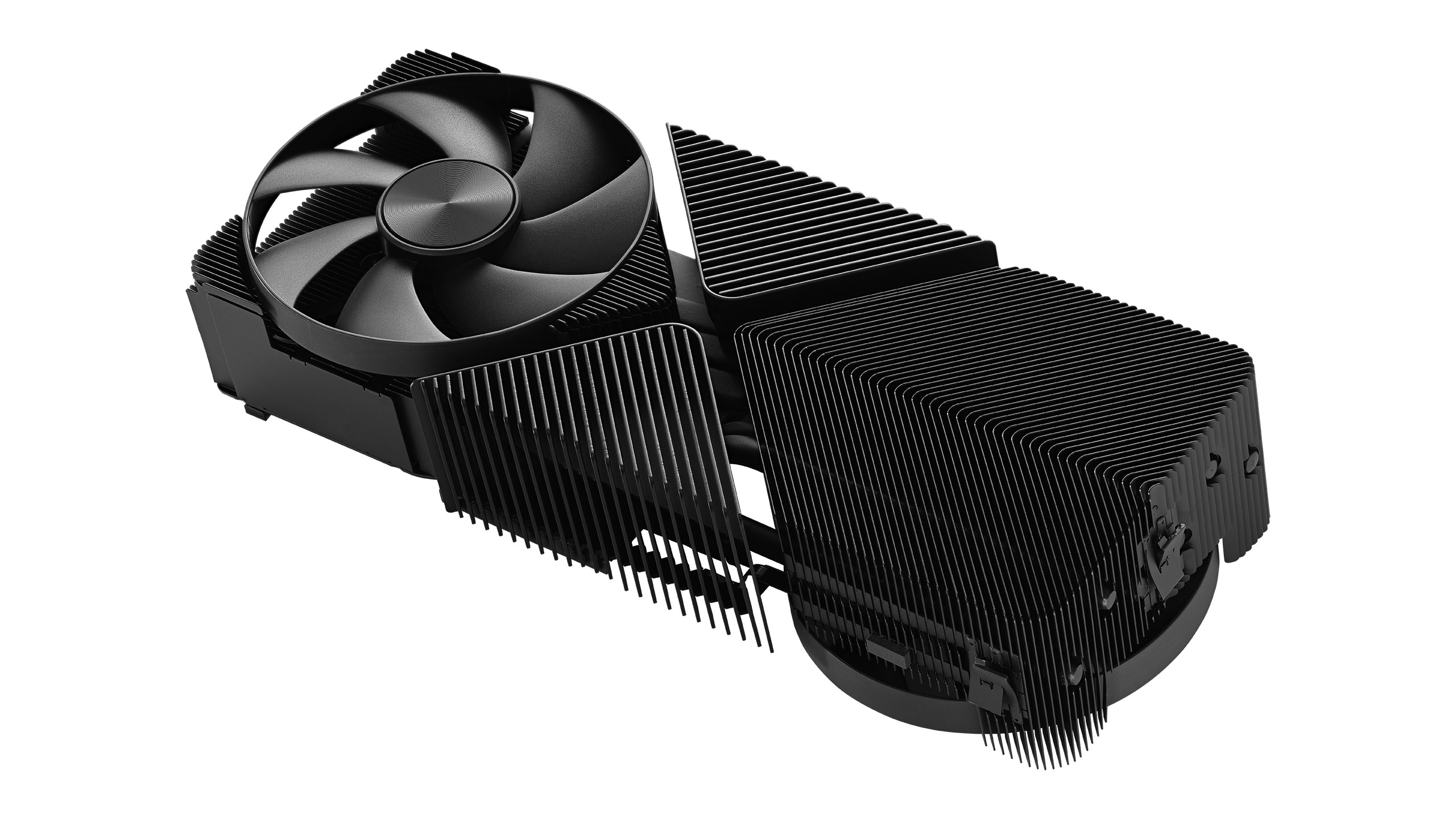

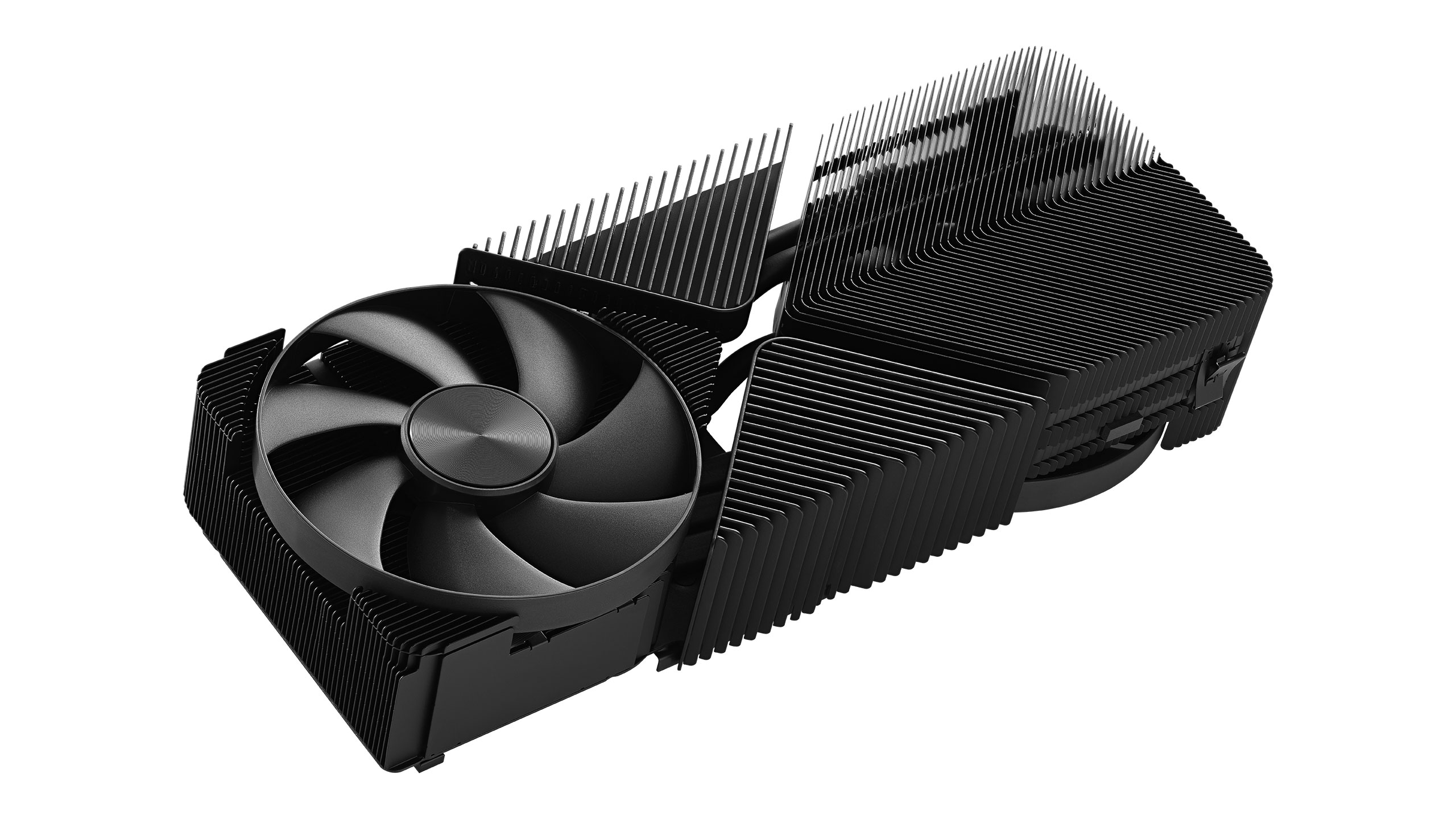

Nvidia's RTX 4080 Founders Edition looks identical to the RTX 4090 FE, and that goes for the new packaging as well. Both also look mostly the same as the previous-generation RTX 3090 FE. The new cards aren't quite as long, but they're a bit taller and thicker to make up for it. The RTX 4080 card measures 304 x 137 x 61mm and weighs 2132g, a bit lighter than the 4090's 2189g, thanks to having four fewer GDDR6X chips (eight versus twelve) and a smaller GPU.

The industrial design of the RTX 40-series hasn't changed much from the 30-series, though the end results have improved substantially. The two fans are slightly larger than on the equivalent 30-series cards. And where the 3080 and 3080 Ti were dual-slot cards that tended to run quite hot, the triple-slot design proves more than adequate for the RTX 4080.

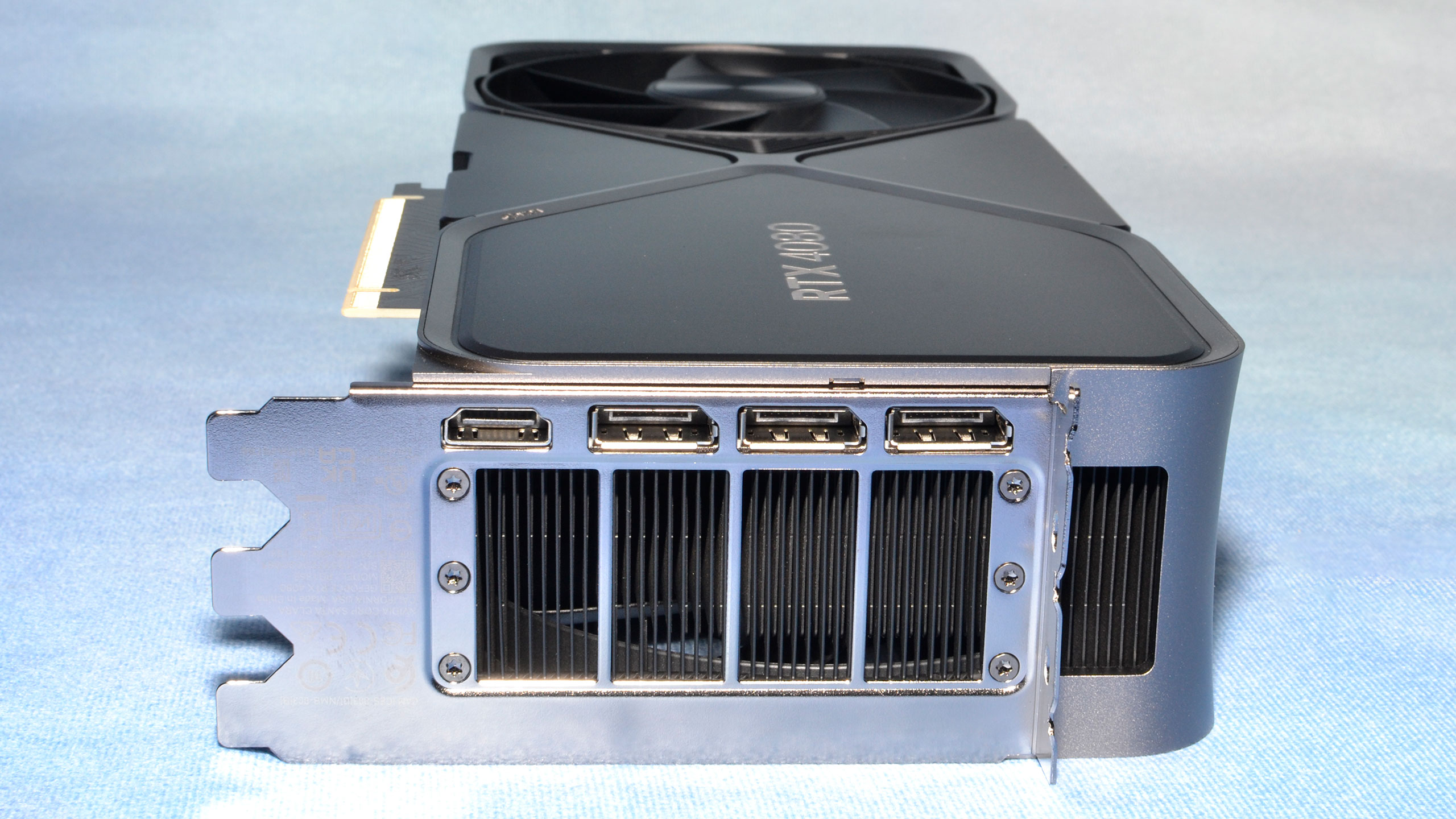

That also goes for the video outputs. You get three DisplayPort 1.4a ports and a single HDMI 2.1 port, all with Display Stream Compression (DSC) support. Those can handle up to 4K at 240 Hz or 8K at 60 Hz, which should be sufficient for the time being; we're still waiting for monitors that exceed those values to actually show up. AMD's 54 Gbps DisplayPort 2.1 is superior, but it may not matter much in practical terms unless you upgrade to a 4K display that supports a refresh rate above 240 Hz.
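Why DSC matters here comes down to simple bandwidth arithmetic. The sketch below is a rough estimate under stated assumptions, not a spec-accurate calculator: it counts active pixels only (real modes add blanking overhead), assumes 10-bit RGB (30 bits per pixel), uses the effective payload rates after line encoding (8b/10b for DP 1.4a HBR3, 128b/132b for DP 2.1 UHBR13.5), and treats roughly 3:1 as a practical DSC ceiling, which is an assumed rule of thumb rather than a figure from this review.

```python
# Rough DSC arithmetic for the display modes discussed above.
# Assumptions (not from the review): active pixels only (blanking ignored),
# 10-bit RGB = 30 bits per pixel, and ~3:1 as a practical DSC ceiling.

LINKS_GBPS = {
    # Effective payload rate after line encoding, 4 lanes each.
    "DisplayPort 1.4a (HBR3)": 4 * 8.1 * 8 / 10,          # 8b/10b -> 25.92 Gbps
    "DisplayPort 2.1 (UHBR13.5)": 4 * 13.5 * 128 / 132,  # 128b/132b -> ~52.36 Gbps
}

DSC_MAX_RATIO = 3.0  # assumed rule-of-thumb limit, not a spec value


def required_gbps(width: int, height: int, hz: int, bpp: int = 30) -> float:
    """Uncompressed video bandwidth in Gbps, ignoring blanking intervals."""
    return width * height * hz * bpp / 1e9


def dsc_ratio_needed(mode_gbps: float, link_gbps: float) -> float:
    """Compression ratio needed to fit the mode on the link (1.0 = none needed)."""
    return max(1.0, mode_gbps / link_gbps)


if __name__ == "__main__":
    modes = {
        "4K 240 Hz": required_gbps(3840, 2160, 240),
        "8K 60 Hz": required_gbps(7680, 4320, 60),
        "4K 480 Hz": required_gbps(3840, 2160, 480),
    }
    for mode, gbps in modes.items():
        for link, cap in LINKS_GBPS.items():
            ratio = dsc_ratio_needed(gbps, cap)
            verdict = "OK" if ratio <= DSC_MAX_RATIO else "too much"
            print(f"{mode:10} {gbps:6.2f} Gbps on {link}: {ratio:.2f}:1 ({verdict})")
```

Under these assumptions, 4K 240 Hz and 8K 60 Hz work out to the same ~59.7 Gbps, needing about 2.3:1 compression on DP 1.4a. A 4K 480 Hz mode would need roughly 4.6:1 on DP 1.4a but only about 2.3:1 on a 54 Gbps DP 2.1 link, which is where AMD's advantage would start to show.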

Things have changed a lot more under the hood, both for the GPU and the GDDR6X memory. We've tested five RTX 4090 cards now, and while all of them run the memory at the official 21 Gbps speed, two of the cards (Asus and Colorful) appear to have used faster 24 Gbps chips that Micron down-binned and labeled as 21 Gbps chips. The result was that memory temperatures, even under our most strenuous workloads, were only in the 60–65C range, almost 20C lower than on the cards with 'normal' 21 Gbps chips.

With the RTX 4080, all models should come with 24 Gbps GDDR6X memory since it's the only option that officially supports the 22.4 Gbps speed. That means the memory should stay chill, and it's exactly what we saw in testing: The RTX 4080 Founders Edition showed VRAM temperatures that peaked at 52C, even when we pushed the overclock as far as we could (we'll cover overclocking on the next page).
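The 22.4 Gbps data rate translates into peak memory bandwidth with one line of arithmetic: bus width in bits times per-pin rate, divided by eight. This sketch assumes the bus widths from Nvidia's published specifications (256-bit for the RTX 4080, 384-bit for the RTX 4090), which this page doesn't state, plus the standard 32-bit interface per GDDR6X chip.

```python
# Peak memory bandwidth = bus width (bits) x per-pin data rate (Gbps) / 8.
# Bus widths are assumed from Nvidia's published specs (not stated on this page).
# Each GDDR6X chip has a 32-bit interface, so chip count = bus_bits // 32.

CARDS = {
    "RTX 4080": {"bus_bits": 256, "gbps": 22.4},
    "RTX 4090": {"bus_bits": 384, "gbps": 21.0},
}


def bandwidth_gb_s(bus_bits: int, gbps: float) -> float:
    """Peak memory bandwidth in GB/s."""
    return bus_bits * gbps / 8


def chip_count(bus_bits: int, bits_per_chip: int = 32) -> int:
    """Number of memory chips needed to populate the bus."""
    return bus_bits // bits_per_chip


if __name__ == "__main__":
    for name, spec in CARDS.items():
        bw = bandwidth_gb_s(spec["bus_bits"], spec["gbps"])
        chips = chip_count(spec["bus_bits"])
        print(f"{name}: {bw:.1f} GB/s from {chips} GDDR6X chips at {spec['gbps']} Gbps")
```

That works out to about 717 GB/s for the RTX 4080 versus 1008 GB/s for the RTX 4090, with the 4080 running its 24 Gbps-rated chips below their rating.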

Unfortunately for Nvidia, our biggest concern right now has to be the 16-pin power connector. We haven't had any problems, but over 20 reported cases of melted 16-pin connectors suggest there's more going on than just a few incorrectly connected cards. Does the single 16-pin connector even help compared to dual 8-pin connectors? Nope. It's hard to imagine a scenario where any cost savings from a single 16-pin connector plus an adapter would meaningfully outweigh simply using dual 8-pin connectors.

It's worth pointing out that the RTX 4080 doesn't get the same 'quadropus' (quad 8-pin to 16-pin) adapter as the RTX 4090 cards we've tested. Instead, you get a triple 8-pin to single 16-pin adapter. Will that be less prone to failure? We don't know, but the lower 320W TBP (total board power), compared with 450W on the RTX 4090, ought to help.
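Why a lower TBP should help becomes clearer per pin. This is a rough sketch under deliberately simple assumptions: all board power flows through the 16-pin connector (ignoring the PCIe slot's share, a worst case), the supply sits at a nominal 12V, and current splits evenly across the connector's six +12V pins. Real contacts don't share load perfectly, which is partly why a poorly seated plug is a problem.

```python
# Rough per-pin current on the 16-pin (12VHPWR) connector.
# Assumptions: all board power goes through the connector (slot share ignored),
# 12 V nominal, and current divides evenly across the six +12 V pins.

VOLTS = 12.0
POWER_PINS = 6  # +12 V pins on the 16-pin connector


def amps_per_pin(board_watts: float, volts: float = VOLTS, pins: int = POWER_PINS) -> float:
    """Average current per +12 V pin, assuming perfectly even sharing."""
    return board_watts / volts / pins


if __name__ == "__main__":
    for card, tbp in {"RTX 4080": 320, "RTX 4090": 450}.items():
        print(f"{card} at {tbp} W: {amps_per_pin(tbp):.2f} A per pin")
```

Under those assumptions, the RTX 4080 averages about 4.4A per pin versus 6.25A for the RTX 4090, roughly 29% less current through each contact.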

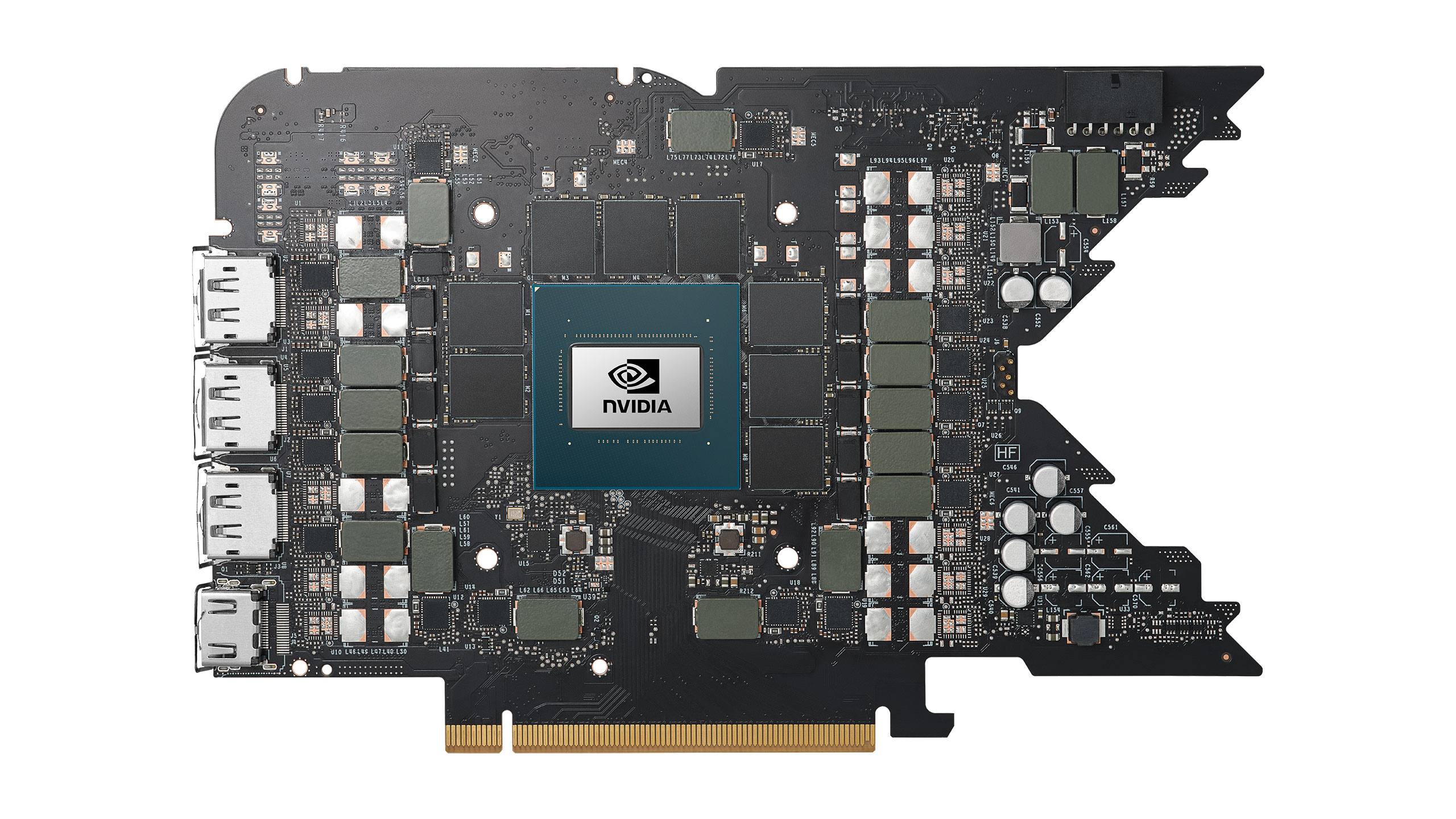

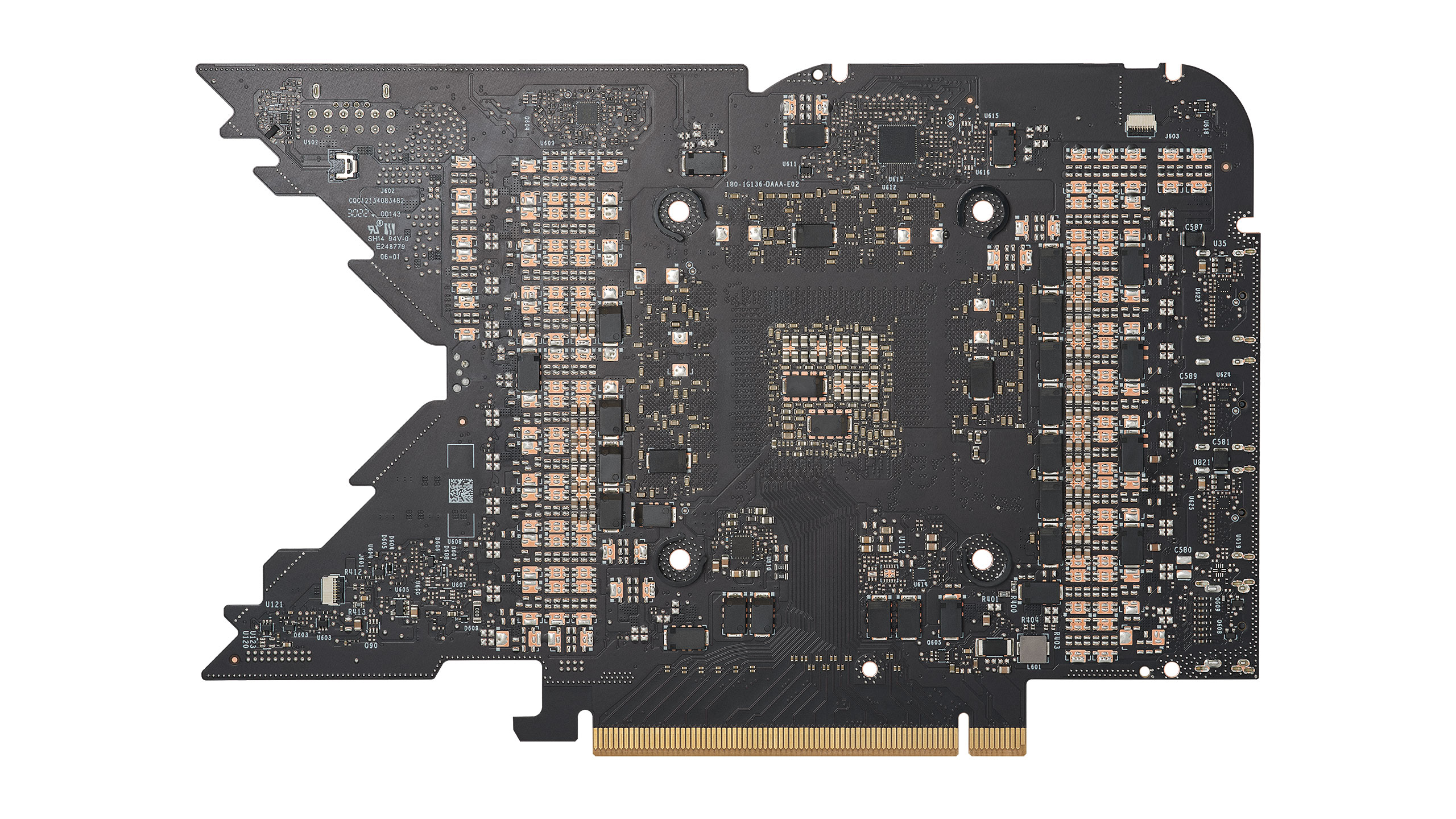

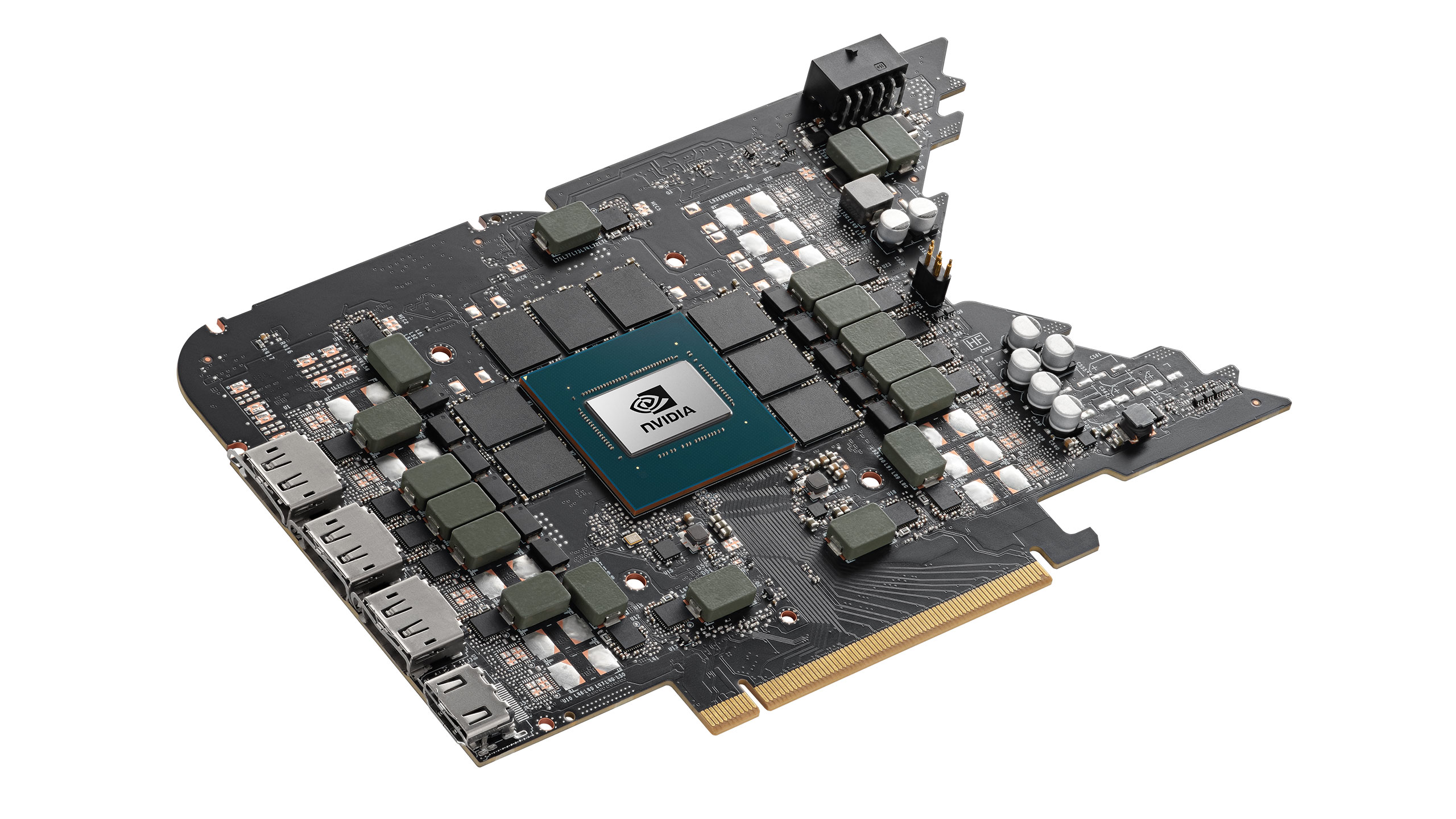

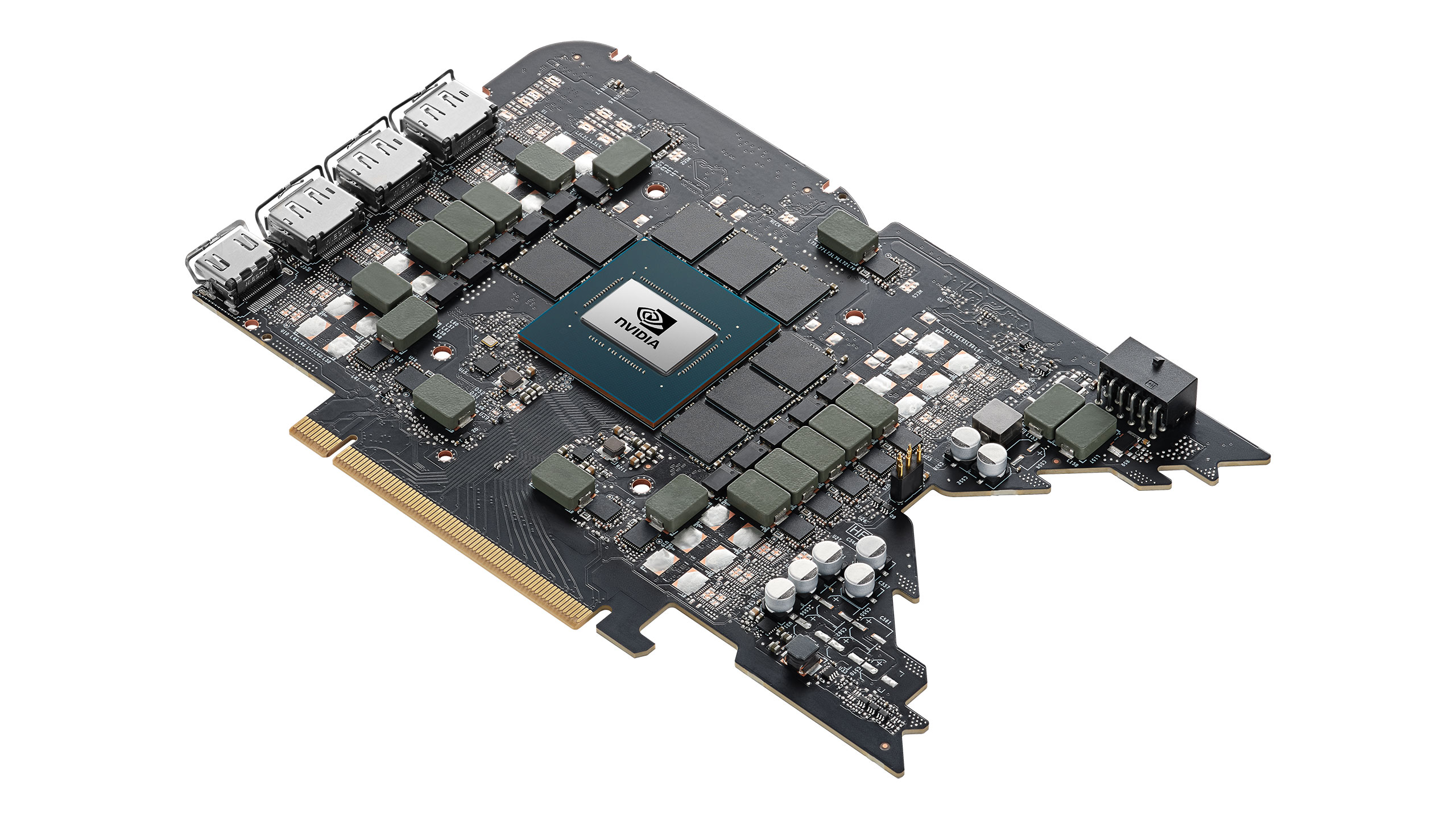

Nvidia RTX 4080 Founders Edition Internals

We don't normally disassemble reference cards like the RTX 4080 Founders Edition, since we like to keep them in their original state for future testing purposes. Nvidia provided the above photos of the PCB, GPU, and cooler, showing clear differences between the 4080 and 4090 PCBs. That's to be expected.

The two cards use different GPUs (AD103 for the 4080, AD102 for the 4090), with the 4080 using a narrower 256-bit memory interface and eight GDDR6X chips, versus 384-bit and twelve chips on the 4090. That results in a lot of changes to the power phases and other aspects, yet the two PCBs share the same dimensions so they can use the same cooler. It's clearly overkill for the 4080, but at least you shouldn't need to worry about the GPU or memory ever overheating.

The RTX 4080 Founders Edition board shows two input power phases, ten for the GPU, and six more that appear to be for the GDDR6X memory: a 2+10+6 power phase design. (I might have that wrong, but there are 18 large inductors in various places on the board.) By comparison, the RTX 4090 Founders Edition uses a 2+20+3 phase layout, while partner cards may have as many as 2+24+4 phases. You can also see eleven spots where the 4080 PCB has mounting points for additional inductors but leaves them unpopulated.
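The "input+GPU+memory" shorthand is easy to tally. This minimal sketch parses the layouts cited above and totals them, assuming one large inductor per phase (which is how the 18-inductor count maps to 2+10+6); the `PhaseLayout` and `parse_layout` names are purely illustrative.

```python
# Parse the "input+GPU+memory" phase notation used above and total it.
# Assumes one large inductor per phase; names here are illustrative only.

from dataclasses import dataclass


@dataclass(frozen=True)
class PhaseLayout:
    input_phases: int
    gpu_phases: int
    memory_phases: int

    @property
    def total(self) -> int:
        return self.input_phases + self.gpu_phases + self.memory_phases


def parse_layout(notation: str) -> PhaseLayout:
    """Turn a string like '2+10+6' into a PhaseLayout (input+GPU+memory)."""
    parts = [int(p) for p in notation.split("+")]
    if len(parts) != 3:
        raise ValueError(f"expected input+gpu+memory, got {notation!r}")
    return PhaseLayout(*parts)


if __name__ == "__main__":
    layouts = {
        "RTX 4080 FE": "2+10+6",
        "RTX 4090 FE": "2+20+3",
        "Partner card (max cited)": "2+24+4",
    }
    for board, notation in layouts.items():
        layout = parse_layout(notation)
        print(f"{board}: {notation} -> {layout.total} phases, {layout.gpu_phases} for the GPU")

    # The 4080 FE board also has eleven unpopulated inductor footprints.
    populated = parse_layout("2+10+6").total
    print(f"RTX 4080 FE inductor footprints: {populated} populated + 11 empty = {populated + 11}")
```

The tally gives 18 phases for the 4080 FE, 25 for the 4090 FE, and 30 for the largest partner layout mentioned, with the 4080 FE board exposing 29 inductor footprints in total.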

It's a safe bet that some partner cards will push much higher power limits, with more phases. The 16-pin connector using a triple 8-pin input should allow for up to 450W, plus 75W from the PCIe x16 slot, so there's ample headroom if an AIB (add-in board) partner chooses to expose it.
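The headroom claim can be checked directly. This sketch uses the standard PCIe ratings of 150W per 8-pin input (consistent with the 450W figure above) and 75W from the x16 slot, and compares the result against the RTX 4080's 320W TBP. The dual 8-pin row is an illustrative comparison, not an Nvidia configuration.

```python
# Power budget for a 16-pin connector fed by 8-pin PCIe inputs, plus the slot.
# Standard PCIe ratings: 150 W per 8-pin input, 75 W from the x16 slot.
# The dual 8-pin case is an illustrative comparison only.

WATTS_PER_8PIN = 150
SLOT_WATTS = 75
RTX_4080_TBP = 320


def board_power_budget(eight_pin_inputs: int) -> int:
    """Total in-spec power available from the 8-pin inputs plus the slot."""
    return eight_pin_inputs * WATTS_PER_8PIN + SLOT_WATTS


def headroom(eight_pin_inputs: int, tbp: int = RTX_4080_TBP) -> int:
    """Watts of in-spec budget left above the card's TBP."""
    return board_power_budget(eight_pin_inputs) - tbp


if __name__ == "__main__":
    for inputs in (3, 2):
        budget = board_power_budget(inputs)
        print(f"{inputs}x 8-pin + slot: {budget} W budget, "
              f"{headroom(inputs)} W above the 4080's {RTX_4080_TBP} W TBP")
```

With the bundled triple 8-pin adapter, that's a 525W budget and 205W of headroom over stock. Even dual 8-pin connectors would cover the 320W TBP with 55W to spare.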



Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.


btmedic04 At $1200, this card should be DOA on the market. However, people will still buy them all up because of mind share. Realistically, this should be an $800-$900 GPU.

Wisecracker (quoting): "Nvidia GPUs also tend to be heavily favored by professional users"

mmmmMehhhhh . . . .Vegas GPU compute on line one . . .

AMD's new Radeon Pro driver makes Radeon Pro W6800 faster than Nvidia's RTX A5000.

My CAD does OpenGL, too

AMD Rearchitects OpenGL Driver for a 72% Performance Uplift

saunupe1911 People flocked to the 4090 as it's a monster but it would be entirely stupid to grab this card while the high end 3000s series exist along with the 4090.

A 3080 and up will run everything at 2K...and with high refresh rates with DLSS.

Go big or go home and let this GPU sit! Force Nvidia's hand to lower prices.

You can't have 2 halo products when there's no demand and the previous gen still exist.

Math Geek they'll cry foul, grumble about the price and even blame retailers for the high price. but only while sitting in line to buy one.......

man how i wish folks could just get a grip on themselves and let these just sit on shelves for a couple months while Nvidia gets a much needed reality check. but alas they'll sell out in minutes just like always sigh

chalabam Unfortunately the new batch of games is so politicized that it makes buying a GPU a bad investment.

Even when they have the best graphics ever, the gameplay is not worth it.

gburke I am one who likes to have the best to push games to the limit. And I'm usually pretty good about staying on top of current hardware. I can definitely afford it. I "clamored" to get a 3080 at launch and was lucky enough to get one at market value, beating out the dreadful scalpers. But it makes no sense this time to upgrade over last gen just for gaming. So I am sitting this one out. I would be curious to know how many others out there are like me and don't see the real benefit of this new-generation hardware for gaming. Honestly, 60fps at 4K on almost all my games is great for me. Not really interested in going above that.

PlaneInTheSky Seeing how much wattage these GPUs use in a loop is interesting, but it still tells me nothing regarding real-life cost.

Cloud gaming suddenly looks more attractive when I realize I won't need to pay to run a GPU at 300 watts.

The running cost of GPUs should now be part of reviews imo.

Considering how much people in Europe, Japan, and South East Asia are now paying for electricity and how much these new GPUs consume.

Household appliances with similar power usage usually have their running cost discussed in reviews.

BaRoMeTrIc (replying to Math Geek) High end RTX cards have become status symbols amongst gamers.

sizzling I’d like to see a performance per £/€/$ comparison between generations. Normally you would expect this to improve from one generation to the next but I am not seeing it. I bought my mid range 3080 at launch for £754. Seeing these are going to cost £1100-£1200 the performance per £/€/$ seems about flat on last generation. Yeah great, I can get 40-60% more performance for 50% more cost. Fairly disappointing for a new generation card. Look back at how the 3070 & 3080 smashed the performance per £/€/$ compared to a 2080Ti.